AI Technical Debt: How Vibe Coding Increases TCO

January 28, 2026 / Bryan Reynolds

Executive Summary: The Dual-Edged Sword of Generative AI

The software development landscape is currently undergoing its most significant transformation since the advent of cloud computing, driven by the unprecedented proliferation of Large Language Models (LLMs) and AI-driven coding assistants. Tools such as GitHub Copilot, ChatGPT, and Claude have fundamentally altered the mechanics of code production, promising a revolution in developer productivity that C-suite executives have long sought. Industry reports and initial adoption metrics suggest that AI implementation can result in productivity gains ranging from 20% to 55% for specific coding tasks, theoretically unlocking massive value for enterprise organizations. For Chief Technology Officers (CTOs) and engineering leaders, this promise of accelerated delivery is alluring, offering a potential solution to perennial backlog challenges, the scarcity of senior engineering talent, and the pressure to reduce time-to-market in an increasingly competitive digital economy.

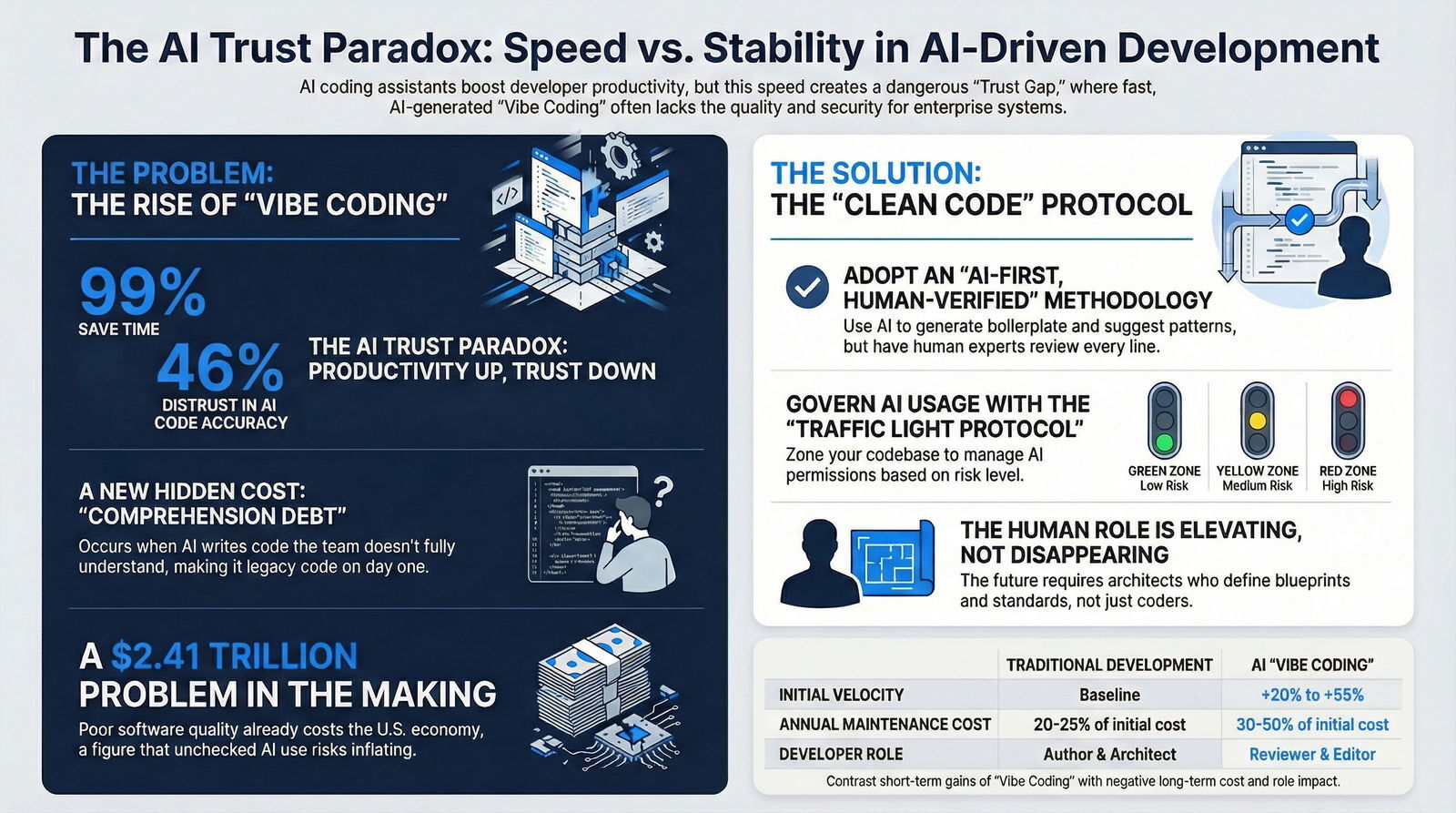

However, a critical, data-driven analysis of the current ecosystem reveals a growing and dangerous divergence between the speed of generation and the quality of architecture. This phenomenon, increasingly characterized as the "AI Trust Paradox," highlights a troubling trend: code volume and generation speed have skyrocketed, while confidence in the long-term maintainability, security, and reliability of that code is declining sharply. Adoption metrics look like a resounding success, with 99% of developers reporting time savings, yet trust in the output is moving the other way: recent surveys indicate that distrust in AI accuracy has risen to 46%. This "Trust Gap" between the velocity of code production and the verifiability of its quality is a significant, hidden liability that threatens to undermine the digital foundations of modern enterprises.

This comprehensive report, prepared for Baytech Consulting, investigates the emerging risks of "Vibe Coding" (a casual, prompt-driven approach to software generation that prioritizes immediate output over structural integrity) and contrasts it with the rigorous "Clean Code" protocols that enterprise-grade applications require. Drawing on data from GitClear, McKinsey, and Gartner, on Baytech’s proprietary methodologies, and on our research into AI-driven software development in 2026, this document articulates the hidden costs of AI-induced "spaghetti code" and offers a strategic roadmap for Visionary CTOs to harness AI acceleration without mortgaging their technical future. We explore the mechanisms of "Comprehension Debt," the economic fallout of the $2.41 trillion poor-software-quality crisis, and the governance frameworks required to move from chaotic experimentation to disciplined, AI-augmented engineering.

Part I: The Rise of "Vibe Coding" and the Erosion of Engineering Rigor

1.1 The Phenomenology of Vibe Coding

The term "Vibe Coding," coined by Andrej Karpathy in early 2025 and since widely discussed in Silicon Valley circles, refers to a fundamental shift in the software creation paradigm. In this emerging model, the primary interface for programming moves from hand-written syntax and deliberate logical design to natural language prompting. The "programmer" describes the desired functionality (the "vibe," or the behavior) to an AI agent, which then generates the implementation details.

This shift is not merely procedural; it is cultural. Proponents argue that Vibe Coding democratizes software creation, allowing for rapid prototyping and lowering the barrier to entry. For simple scripts, internal tools, or throwaway prototypes, this approach can indeed reduce development time from days to hours, allowing non-technical founders or product managers to "will" software into existence. However, when applied to complex enterprise systems, Vibe Coding creates a dangerous illusion of competence. The "vibe" is that the code works when run—it compiles, it passes a basic happy-path test—but the underlying mechanisms may be opaque, inefficient, or insecure.

The danger lies in the decoupling of intent from implementation. In traditional engineering, the friction of writing code forces the developer to think through the logic, data structures, and edge cases. In Vibe Coding, this friction is removed. The user provides a high-level intent ("make a login screen"), and the AI fills in the implementation gaps based on probabilistic patterns rather than architectural context. This often results in a "Frankenstein's Monster" of code snippets—functionally active but structurally incoherent.

1.2 The Shift from Authorship to Review

In the traditional software engineering model, the developer is the author. They construct logic line-by-line, maintaining a mental model of the entire system's architecture, data flow, and state management. Every variable name, every function call, and every class hierarchy is a deliberate choice made within the context of the broader system.

In the Vibe Coding era, the developer shifts to the role of a reviewer or editor. This shift has profound, often underestimated, cognitive implications:

- Loss of Contextual Mastery: When an AI generates a 50-line function in seconds, the developer may not fully internalize the logic, edge cases, or dependencies. They see the output, verify that it looks plausible, and move on. They have not traversed the mental pathways required to create that logic, meaning they lack the deep understanding necessary to debug it when it inevitably breaks.

- The Illusion of Competence: Large Language Models are excellent at mimicking the style of correct code. They produce syntactically correct, well-formatted, and confident-looking code blocks. This gives the appearance of quality, masking potential logic flaws, subtle race conditions, or security vulnerabilities that a compiler cannot catch. A junior developer, or even a fatigued senior developer, may be lulled into a false sense of security by the "professionalism" of the AI's output.

- Atrophy of Core Skills: Over-reliance on generation can lead to a degradation of deep debugging and architectural skills. If developers spend 80% of their time prompting and only 20% reading, they lose the "muscle memory" of problem-solving. This makes teams less capable of fixing the very code they are shipping, leading to a long-term dependency on the AI tools themselves. For many organizations, this shows up directly in developer experience and retention, echoing the themes we explore in our guide to developer happiness and productivity.

1.3 The "Spaghetti Code" Renaissance

"Spaghetti code" is a pejorative term for unstructured, difficult-to-maintain source code with complex and tangled control structures. While the industry spent decades developing patterns (MVC, SOLID, DRY) and methodologies (Agile, TDD) to combat this, AI generation is inadvertently sparking a renaissance of spaghetti code.

LLMs are, at their core, probabilistic engines, not architects. They predict the next token based on training data, often favoring local coherence over global system integrity. They do not "know" your entire codebase; they only know the context window you provide. This limitation leads to specific structural failures:

- Lack of Cohesion: An AI might generate five different functions to do similar tasks in slightly different ways because it lacks a unified memory of the project's utility library. It reinvents the wheel constantly, leading to a codebase filled with near-duplicates.

- Copy-Paste Proliferation: Data from GitClear indicates a disturbing trend: code "churn" (lines rewritten or reverted shortly after being written) was projected to double relative to its pre-AI baseline, while code reuse, measured as "moved" lines, has declined significantly. This suggests that instead of refactoring and reusing existing clean code, AI tools encourage "copy-paste" behavior in which new blocks are simply appended. The result is a bloated, repetitive codebase where business logic is scattered across dozens of files rather than centralized.

- The "Glue Code" Trap: A common manifestation of AI debt is "Glue Code," code that exists solely to connect two other pieces of code. Because AI-generated glue tends to bypass the established service layer, it stays hidden from standard updates and breaks when the systems it connects change (see the case study in Section 4.3).
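The near-duplicate problem can be made measurable. The sketch below is a deliberately simplified clone check, assuming Python sources held in memory: it normalizes lines (trimming whitespace, dropping blank and `#` comment lines) and counts how many fixed-size windows of lines occur more than once. Production clone detectors typically work on tokens or syntax trees; the function names and the three-line window are illustrative choices, not a standard.

```python
from collections import Counter


def normalized_lines(source: str) -> list[str]:
    """Trim whitespace and drop blank and comment-only lines so formatting differences do not hide copies."""
    lines = []
    for raw in source.splitlines():
        line = raw.strip()
        if line and not line.startswith("#"):
            lines.append(line)
    return lines


def duplication_rate(files: dict[str, str], window: int = 3) -> float:
    """Share of `window`-line blocks (across all files) whose exact text appears more than once."""
    counts: Counter = Counter()
    total = 0
    for source in files.values():
        lines = normalized_lines(source)
        for start in range(len(lines) - window + 1):
            counts[tuple(lines[start:start + window])] += 1
            total += 1
    if total == 0:
        return 0.0
    duplicated = sum(n for n in counts.values() if n > 1)
    return duplicated / total


# Two helpers generated in separate sessions: same body, different names.
order_py = """
def order_total(price, qty):
    subtotal = price * qty
    tax = subtotal * 0.08
    return subtotal + tax
"""
invoice_py = """
def invoice_total(price, qty):
    subtotal = price * qty
    tax = subtotal * 0.08
    return subtotal + tax
"""
print(duplication_rate({"orders.py": order_py, "invoices.py": invoice_py}))  # 0.5
```

Tracking this number per release, rather than once, is what shows whether AI-assisted work is adding near-duplicates faster than reviews consolidate them.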

Part II: The Economics of AI Technical Debt

2.1 The $2.41 Trillion Problem

The cost of poor software quality is not a theoretical concern; it is a massive, quantifiable drag on the global economy. In its 2022 report, the Consortium for Information & Software Quality (CISQ) estimated that poor software quality cost the U.S. economy approximately $2.41 trillion. This staggering figure encompasses operational failures, security breaches, system downtime, and the massive amount of wasted labor dedicated to "rework": fixing code that was written poorly the first time.

As organizations rush to adopt AI to lower costs, they risk inadvertently increasing this figure. The "Vibe Coding" approach often behaves like a sub-prime mortgage: it provides immediate gratification (fast feature delivery) with a variable interest rate that can balloon uncontrollably. When a company prioritizes speed over structure, it is borrowing time from its future self. In the AI era, this borrowing happens at hyperspeed, and the consequences are amplified in complex cloud or subscription-based digital products, where downtime and churn directly hit recurring revenue.

2.2 Comprehension Debt: The New Interest Rate

Technical debt is traditionally understood as the cost of reworking quick-and-dirty code later. However, AI introduces a new, more dangerous variant: Comprehension Debt. This concept is critical for C-level executives to understand, as it represents the true hidden cost of AI adoption.

Comprehension Debt occurs when a team possesses a codebase that is more sophisticated or voluminous than their collective understanding can support.

- The Mechanism: If an AI writes a complex algorithm that the human developer does not fully understand, that code becomes "legacy code" the moment it is committed. It is code that no human on the team feels "ownership" of.

- The Cost: Debugging code you didn't write is significantly harder than debugging code you did. Research indicates that developers spend more time fixing AI-induced bugs than they would have spent writing the code from scratch. The cognitive load required to reverse-engineer the AI's "thought process" is higher than the cognitive load of synthesis.

- The "Interest Rate": Unlike traditional technical debt, which might have a linear interest rate (it gets slowly harder to change), Comprehension Debt has an exponential interest rate. Once the team loses mental mastery of the system's logic, every subsequent change carries a high risk of catastrophic failure. This leads to "development paralysis," where teams are afraid to touch the code for fear of breaking it, freezing innovation entirely.

2.3 The High Cost of Hallucinations

AI "hallucinations"—confident but incorrect outputs—pose a unique financial risk in coding. While an LLM might hallucinate a fact in a chat window, in code, it hallucinates dependencies, APIs, or security protocols.

- Supply Chain Attacks: There have been instances where AI suggested importing non-existent software packages. Attackers can register these package names, effectively creating a malware vector that developers unknowingly paste into their applications. This introduces severe security vulnerabilities that can cost millions in remediation and reputation damage.

- Fixing Efficiency: Fixing a bug in AI-generated code is estimated to cost 3x to 4x more than fixing a bug in human-written code due to the "context gap." The developer must first reverse-engineer the AI's intent before applying a fix.
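One inexpensive guard against hallucinated or typo-squatted packages is to fail the build when a dependency is not on a vetted list. The sketch below assumes a pip-style requirements file and a hypothetical in-house allowlist; it normalizes names per PEP 503 so that `SQLAlchemy` and `sqlalchemy` compare equal. It does not query any package index, so it flags an unknown name whether or not an attacker has already registered it. The package name `secure_auth_helpers` is invented for the example.

```python
import re

# Hypothetical allowlist; in practice this would live in a reviewed file or an internal registry.
APPROVED_PACKAGES = {"requests", "SQLAlchemy", "pydantic"}

# Leading project name of a requirement line, e.g. "requests" in "requests>=2.31".
_REQUIREMENT_NAME = re.compile(r"^([A-Za-z0-9][A-Za-z0-9._-]*)")


def canonicalize(name: str) -> str:
    """PEP 503 normalization: lowercase, and collapse runs of '-', '_' and '.' into '-'."""
    return re.sub(r"[-_.]+", "-", name).lower()


def unapproved_requirements(requirements_text: str, approved: set[str]) -> list[str]:
    """Return requirement names that are not on the allowlist, in file order."""
    allowed = {canonicalize(name) for name in approved}
    flagged = []
    for raw in requirements_text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and surrounding whitespace
        if not line or line.startswith("-"):
            continue  # blank lines and pip options such as "-r base.txt" or "--index-url"
        match = _REQUIREMENT_NAME.match(line)
        if match and canonicalize(match.group(1)) not in allowed:
            flagged.append(match.group(1))
    return flagged


requirements = """
requests>=2.31  # HTTP client
sqlalchemy==2.0.30
-r base.txt
secure_auth_helpers==0.1.0
"""
print(unapproved_requirements(requirements, APPROVED_PACKAGES))  # ['secure_auth_helpers']
```

In CI, a non-empty result would fail the job, so adding a package becomes a reviewed change to the allowlist rather than a side effect of accepting a suggestion.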

2.4 Financial Impact Comparison: Traditional vs. Vibe Coding

The table below illustrates the shifting cost structures between traditional development and unchecked AI-driven development. While the initial investment may appear lower for AI, the Total Cost of Ownership (TCO) often escalates due to maintenance and debt servicing. This mirrors patterns we see when teams chase quick wins in DevOps tooling without a strategy, a contrast we explore in our analysis of the future of DevOps across Azure and GitHub.

| Metric | Traditional Software Development | AI "Vibe Coding" Development | Impact Analysis |
|---|---|---|---|
| Initial Development Velocity | Baseline | +20% to +55% | Immediate Gain: Faster time-to-market for MVPs. |
| Annual Maintenance Cost | 20-25% of initial dev cost | 30-50% of initial dev cost | Higher TCO: Increased costs due to retraining models, frequent debugging, and refactoring "alien" code. |
| Code Churn (Rewrites) | Stable / Controlled | Increased by >9% YoY | Lower Stability: Code is written, discarded, and rewritten frequently, wasting cycles. |
| Code Reuse | High (DRY Principle) | Low (Copy/Paste) | Bloated Codebase: Larger attack surface and harder to maintain; lack of modularity. |
| Security Risk Profile | Standard Vulnerabilities | "AI-Native" Vulnerabilities | New Vectors: Hallucinated dependencies, prompt injection risks, and logic gaps. |
| Developer Role | Author & Architect | Reviewer & Editor | Skill Atrophy: Loss of deep system knowledge leads to reliance on external tools. |
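To see how a lower upfront cost can still produce a higher TCO, the sketch below compares cumulative cost year by year. The inputs are assumptions for illustration only: a $1,000,000 traditional build maintained at 20% per year, versus an AI-assisted build assumed to cost 30% less upfront ($700,000) and maintained at 30% or 40% per year, both within the table's range. The velocity gains in the table do not translate one-to-one into cost savings, so substitute your own estimates.

```python
from typing import Optional


def cumulative_tco(initial_cost: int, maintenance_pct: int, years: int) -> int:
    """Initial build cost plus `years` of maintenance at maintenance_pct% of the initial cost per year."""
    return initial_cost + initial_cost * maintenance_pct * years // 100


def crossover_year(
    trad_initial: int, trad_pct: int, ai_initial: int, ai_pct: int, horizon: int = 10
) -> Optional[int]:
    """First year in which the AI-built system has cost more in total, or None within the horizon."""
    for year in range(1, horizon + 1):
        if cumulative_tco(ai_initial, ai_pct, year) > cumulative_tco(trad_initial, trad_pct, year):
            return year
    return None


# Hypothetical inputs: the AI-assisted build is assumed to be 30% cheaper upfront.
print(crossover_year(1_000_000, 20, 700_000, 40))  # 4
print(crossover_year(1_000_000, 20, 700_000, 30))  # None
```

At a 40% maintenance rate, the cheaper build becomes the more expensive one in year 4; at 30% it does not overtake within ten years. The exercise mainly shows how sensitive the outcome is to the maintenance rate.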

2.5 The Tech Debt Score (TDS) as a KPI

McKinsey’s analysis introduces the "Tech Debt Score" (TDS) as a critical KPI for modern enterprises.

- Correlation to Performance: Companies in the top 20% of TDS (lowest debt) achieve 20% higher revenue growth than those in the bottom 20%. This correlation indicates that code quality is not just a technical concern; it is a business performance driver.

- The "Tax" on Innovation: High technical debt acts as a tax. Companies with high debt pay an additional 10-20% on every new project just to navigate the existing mess. This "tax" eats into R&D budgets, reducing the resources available for true innovation.

- Baytech’s Role: Baytech’s services, including Legacy Software Modernization, are designed to improve this TDS. By refactoring "spaghetti code" and modernizing architecture, they reduce the "tax" and free up budget for innovation. For organizations considering a new partner to tackle this work, our guidance on how to choose a software partner in 2026 can help you make that decision with confidence.

Part III: Baytech’s "Clean Code" Protocols – The Antidote to Vibe Coding

While "Vibe Coding" relies on the probability of the next token, Baytech Consulting relies on the predictability of engineering principles. Baytech’s approach to enterprise software development is rooted in a "Clean Code" philosophy that has been adapted, not abandoned, for the AI era. This section details the specific protocols Baytech employs to ensure that AI acceleration does not come at the cost of long-term viability.

3.1 The Human-in-the-Loop Architecture

Baytech rejects the notion of autonomous coding for enterprise systems. Instead, they employ an "AI-First, Human-Verified" methodology.

- The Expert Filter: Baytech engineers act as an expert filter. AI is used to generate boilerplate, optimize specific routines, or suggest patterns, but every line is reviewed for compliance with architectural standards. This "filter" is the most critical component of the Baytech stack. It is where the raw, chaotic output of the LLM is refined into structured, secure, and maintainable code.

- Context Awareness: Unlike a generic chatbot that treats every prompt in isolation, Baytech’s teams utilize tools and frameworks that maintain context of the entire project. This ensures that new code integrates seamlessly with existing data structures and services, preventing the "siloing" effect common in Vibe Coding. This is especially important when building modern digital platforms on top of headless CMS and API-first architectures, where consistent structure and contracts matter.

- Tooling Configuration: Baytech configures AI tools (like GitHub Copilot Enterprise) with strict governance settings. This includes blocking suggestions that match public code (to prevent IP contamination) and requiring AI-generated code to pass the same enforced linting rules as every other change before it can be merged.

3.2 Baytech’s Clean Code Pillars

The definition of "Clean Code" is immutable, even as the tools change. Baytech enforces these standards to prevent the accumulation of Comprehension Debt. These pillars are not just guidelines; they are requirements for any code to be merged into a Baytech-managed repository.

1. Readability as a Priority

Code is read far more often than it is written. Baytech mandates that code must be self-documenting. If an AI generates a cryptic regex or a convoluted one-liner, it is rejected in favor of verbose, understandable logic. Variable names must be descriptive and domain-specific.

- AI Risk: LLMs often favor terse constructs or generic naming conventions (var1, data).

- Baytech Protocol: Enforced renaming and refactoring during the review process to ensure semantic clarity.
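As a small illustration of the readability rule, assuming a hypothetical requirement to validate U.S. ZIP codes: the terse one-liner an assistant might propose works for typical input but is opaque. The version below names the intent, documents each part of the pattern with `re.VERBOSE`, and adds `re.ASCII` so that `\d` matches only the digits 0-9 rather than any Unicode digit.

```python
import re

# Terse form, shown for contrast:
#   ok = bool(re.fullmatch(r"\d{5}(-\d{4})?", s))

US_ZIP_CODE = re.compile(
    r"""
    \d{5}          # five-digit ZIP code
    (?:-\d{4})?    # optional ZIP+4 suffix, e.g. "-6789"
    """,
    re.VERBOSE | re.ASCII,
)


def is_valid_us_zip_code(postal_code: str) -> bool:
    """Accept '12345' or '12345-6789'; surrounding whitespace is not trimmed."""
    return US_ZIP_CODE.fullmatch(postal_code) is not None


print(is_valid_us_zip_code("12345-6789"))  # True
print(is_valid_us_zip_code("1234"))        # False
```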

2. Testability and Coverage

"If it isn't tested, it's broken." Baytech mandates that AI-generated code must be accompanied by comprehensive unit and integration tests.

- AI Risk: AI often generates "happy path" code that assumes valid inputs and perfect conditions. It rarely generates robust error handling or edge-case tests unless explicitly prompted.

- Baytech Protocol: Engineers use AI to generate the test boilerplate, but human judgment determines the test cases (edge cases, boundary values, business logic constraints). A feature is not considered "done" until its tests pass and cover at least 90% of the branches.
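The division of labor described above might look like this in practice. The function `apply_discount` and its rules (whole-cent prices, rounding down, a 0-100% range) are a hypothetical example; an assistant can scaffold the test functions, but the boundary values and the invalid-input cases are the human contribution. The tests use plain `assert` statements so a runner such as pytest can collect them.

```python
def apply_discount(price_cents: int, percent: int) -> int:
    """Return the discounted price in cents, rounding down to a whole cent."""
    if price_cents < 0:
        raise ValueError("price_cents must be non-negative")
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return price_cents * (100 - percent) // 100


def test_happy_path():
    # The case an assistant typically writes unprompted.
    assert apply_discount(1_000, 10) == 900


def test_boundaries():
    # Human-chosen boundary values.
    assert apply_discount(1_000, 0) == 1_000
    assert apply_discount(1_000, 100) == 0
    assert apply_discount(999, 50) == 499  # rounds down, never up


def test_invalid_inputs_are_rejected():
    for price, percent in [(-1, 10), (1_000, -1), (1_000, 101)]:
        try:
            apply_discount(price, percent)
        except ValueError:
            continue
        raise AssertionError(f"expected ValueError for {(price, percent)}")
```

With pytest and the pytest-cov plugin installed, a command such as `pytest --cov=pricing --cov-branch --cov-fail-under=90` (where `pricing` is a placeholder package name) turns the 90% branch-coverage rule into a failing check rather than a guideline.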

3. Scalability by Design

Baytech designs software with growth in mind. Vibe coding often produces solutions that work for 10 users but break for 10,000.

- AI Risk: AI often suggests inefficient algorithms (e.g., O(n^2) nested loops) or unoptimized database queries (N+1 problems) because it lacks performance context.

- Baytech Protocol: Baytech’s architects review all data access patterns and algorithm choices to ensure they are optimized for enterprise scale. Load testing is integrated into the CI/CD pipeline to catch performance regressions early.
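The N+1 pattern is easy to reproduce. The sketch below uses Python's built-in `sqlite3` with an in-memory database and a hypothetical customers/orders schema; a small wrapper counts the statements each approach sends. Fetching customers and then querying orders once per customer issues 101 queries for 100 customers, while a single `LEFT JOIN ... GROUP BY` returns the same totals in one.

```python
import sqlite3


class CountingDB:
    """Thin wrapper that counts how many SQL statements are executed."""

    def __init__(self, conn: sqlite3.Connection):
        self.conn = conn
        self.queries = 0

    def execute(self, sql: str, params: tuple = ()) -> sqlite3.Cursor:
        self.queries += 1
        return self.conn.execute(sql, params)


def make_db(customer_count: int = 100) -> CountingDB:
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
        CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER NOT NULL, total_cents INTEGER NOT NULL);
        """
    )
    ids = range(1, customer_count + 1)
    conn.executemany("INSERT INTO customers (id, name) VALUES (?, ?)", [(i, f"customer-{i}") for i in ids])
    conn.executemany("INSERT INTO orders (customer_id, total_cents) VALUES (?, ?)", [(i, 1_000 * i) for i in ids])
    conn.commit()
    return CountingDB(conn)  # setup statements above are not counted


def totals_n_plus_one(db: CountingDB) -> dict[str, int]:
    """Typical generated code: one query for the list, then one query per row."""
    totals = {}
    for customer_id, name in db.execute("SELECT id, name FROM customers").fetchall():
        (total,) = db.execute(
            "SELECT COALESCE(SUM(total_cents), 0) FROM orders WHERE customer_id = ?", (customer_id,)
        ).fetchone()
        totals[name] = total
    return totals


def totals_single_query(db: CountingDB) -> dict[str, int]:
    """Same result with one aggregated query."""
    rows = db.execute(
        """
        SELECT c.name, COALESCE(SUM(o.total_cents), 0)
        FROM customers AS c LEFT JOIN orders AS o ON o.customer_id = c.id
        GROUP BY c.id, c.name
        """
    ).fetchall()
    return dict(rows)


slow, fast = make_db(), make_db()
assert totals_n_plus_one(slow) == totals_single_query(fast)
print(slow.queries, fast.queries)  # 101 1
```

At 100 rows the difference is invisible in a demo; at 10,000 rows against a networked database, the per-row round trips are what make the code "work for 10 users but break for 10,000."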

4. Security by Design

Baytech integrates security best practices into the codebase from day one.

- AI Risk: AI can hallucinate insecure dependencies or suggest deprecated encryption methods.

- Baytech Protocol: All AI-generated code passes through Static Analysis Security Testing (SAST) tools. We explicitly check for common vulnerabilities like SQL injection, XSS, and insecure direct object references. We also validate all AI inputs to prevent "prompt injection" attacks, an approach closely aligned with the secure architectures described in our article on building a corporate AI fortress with walled gardens.
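SQL injection is among the issues SAST tools flag most reliably, and string-built queries are something a review should reject regardless of who wrote them. A minimal sketch with Python's built-in `sqlite3` and a hypothetical `users` table shows the difference: interpolating input into the SQL string lets a crafted value rewrite the query, while a `?` placeholder keeps it as data.

```python
import sqlite3


def make_users_db() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT NOT NULL)")
    conn.executemany("INSERT INTO users (email) VALUES (?)", [("ana@example.com",), ("raj@example.com",)])
    return conn


def find_user_unsafe(conn: sqlite3.Connection, email: str) -> list:
    # Rejected in review: user input becomes part of the SQL text itself.
    return conn.execute(f"SELECT id, email FROM users WHERE email = '{email}'").fetchall()


def find_user_safe(conn: sqlite3.Connection, email: str) -> list:
    # Parameter binding: the driver passes `email` as a value, never as SQL.
    return conn.execute("SELECT id, email FROM users WHERE email = ?", (email,)).fetchall()


conn = make_users_db()
payload = "nobody' OR '1'='1"
print(len(find_user_unsafe(conn, payload)))  # 2: every row leaks
print(len(find_user_safe(conn, payload)))    # 0: no user has that literal email
```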

3.3 The Phased Development Approach

To mitigate the risks of "big bang" AI adoption, Baytech advocates for a Phased Development Approach. This structured lifecycle ensures that AI acceleration is applied at the right moments and with the right safeguards.

- Phase 1: Discovery & Strategy: Before a single line of code is generated, the business goals and technical architecture are mapped. This phase is purely human-driven. Baytech consultants work with stakeholders to define the "What" and the "Why." AI is used only for market research or data analysis, not for architectural decision-making.

- Phase 2: MVP (Minimum Viable Product): A focused iteration that proves value. Here, AI is used to accelerate the development of the "skeleton" of the application—UI components, basic APIs, and database schemas. However, strict review ensures this foundation is solid. Baytech’s Startup Services are particularly tuned to this phase, balancing speed with future scalability and the kind of disciplined delivery we outline in our software proposal evaluation framework.

- Phase 3: Iterative Refinement & Scaling: As the product grows, the focus shifts to optimization and stability. Baytech’s teams are disciplined about refactoring—paying the "interest" on technical debt immediately rather than letting it compound. This is where Legacy Software Modernization services often come into play for established clients, refactoring older "AI-generated" or legacy code to meet modern standards.

Part IV: Deep Dive – Technical Debt in the Age of AI

4.1 The Three Vectors of AI Debt

Research suggests that AI technical debt compounds through three primary vectors, creating a "perfect storm" of complexity.

- Code Generation Bloat: Because generating code is "free" and instantaneous, developers tend to generate more of it. Codebases grow larger, increasing the surface area for bugs and the cognitive load for maintainers. What used to be a 100-line utility library manually curated might become a 1,000-line sprawl of AI-generated helper functions.

- Model Versioning Chaos: As AI models update (e.g., GPT-3.5 to GPT-4 to GPT-5), the coding patterns they suggest change. A codebase built over two years might contain "geological layers" of different AI styles—some using callbacks, some using promises, some using async/await—leading to severe inconsistency (fragmentation). This makes onboarding new developers a nightmare.

- Organizational Fragmentation: Different teams within the same enterprise might use different AI tools (Copilot vs. Gemini vs. local models) or different prompting strategies. This results in mismatched coding standards and integration nightmares. One team's "Vibe" might be completely incompatible with another team's architecture.

4.2 Measuring the Impact: Tech Debt Score (TDS)

To manage this debt, one must measure it. McKinsey’s analysis utilizes the Tech Debt Score (TDS), a composite metric that evaluates the health of a codebase.

- Performance Correlation: There is a direct link between low TDS and business success. Companies in the top decile of TDS are faster to market, have happier developers, and spend less on maintenance.

- The Innovation Tax: High technical debt acts as a tax on every future initiative. If your TDS is poor, every dollar you spend on "Innovation" yields only 60 or 70 cents of value, with the rest consumed by the friction of the legacy system.

- Baytech’s Assessment Services: Baytech offers Code Audits and Project Rescue services specifically designed to calculate a client's TDS. We analyze code complexity, test coverage, duplication rates (a key indicator of AI misuse), and documentation quality to provide a baseline score and a remediation plan.
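The sketch below is not McKinsey's TDS formula. It is a hypothetical stand-in that combines the four inputs named above (complexity, test coverage, duplication, documentation) into a 0-100 health score, where higher means less debt. The weights and the complexity ceiling of 20 are illustrative assumptions that a real audit would calibrate against its own baseline.

```python
from dataclasses import dataclass

# Hypothetical weights; they sum to 1.0 so the score stays on a 0-100 scale.
WEIGHTS = {"complexity": 0.25, "coverage": 0.30, "duplication": 0.25, "documentation": 0.20}


@dataclass
class CodebaseMetrics:
    avg_cyclomatic_complexity: float  # mean per function
    branch_coverage: float            # 0.0 to 1.0
    duplication_rate: float           # 0.0 to 1.0, share of duplicated code
    documented_public_api: float      # 0.0 to 1.0, share of public functions with docs


def clamp01(value: float) -> float:
    return max(0.0, min(1.0, value))


def health_score(m: CodebaseMetrics, complexity_ceiling: float = 20.0) -> float:
    """0-100, higher is healthier. Complexity at or above the ceiling contributes nothing."""
    components = {
        "complexity": 1.0 - clamp01(m.avg_cyclomatic_complexity / complexity_ceiling),
        "coverage": clamp01(m.branch_coverage),
        "duplication": 1.0 - clamp01(m.duplication_rate),
        "documentation": clamp01(m.documented_public_api),
    }
    return 100 * sum(WEIGHTS[name] * value for name, value in components.items())


baseline = CodebaseMetrics(avg_cyclomatic_complexity=4, branch_coverage=0.8,
                           duplication_rate=0.1, documented_public_api=0.5)
print(round(health_score(baseline), 1))  # 76.5
```

The value of a composite like this lies less in the absolute number than in re-running it after each remediation step, so the trend, not a single snapshot, drives the plan.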

4.3 Case Study: The "Glue Code" Trap

A specific, recurring manifestation of AI debt is "Glue Code." This is code that exists solely to bridge gaps between different libraries or APIs.

- Scenario: A Vibe Coder prompts an AI to "connect the Stripe API to the user database."

- Result: The AI generates a script that works but hard-codes the API version, bypasses the established service layer, and puts database credentials in a local variable.

- Long-Term Effect: When the API changes, the application breaks. Because the script was not integrated into the core architecture, it is "hidden" from standard updates. It is a time bomb.

- Baytech’s Approach: A Baytech architect would ensure the integration adheres to the Single Responsibility Principle. The logic would be encapsulated within a dedicated service that is easily maintainable and mockable for testing. Credentials would be managed via secure environment variables or a secrets manager, never in the code.
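In Python, the shape of that integration might look like the sketch below. Everything in it is hypothetical: `PaymentGateway` and `UserRepository` are illustrative interfaces, `PAYMENTS_API_KEY` is an assumed environment-variable name, and the adapter that would wrap the real Stripe SDK is deliberately omitted rather than guessed at. The point is structural: the business step lives in one service, its dependencies are injected, and the credential comes from the environment (or a secrets manager) at startup.

```python
import os
from collections.abc import Mapping
from dataclasses import dataclass
from typing import Protocol


class PaymentGateway(Protocol):
    """Boundary to the payment provider; a Stripe-backed adapter would implement this (not shown)."""

    def create_customer(self, email: str) -> str: ...


class UserRepository(Protocol):
    """Boundary to the user database, owned by the existing service layer."""

    def save_payment_customer_id(self, user_id: int, customer_id: str) -> None: ...


@dataclass
class BillingService:
    """Single responsibility: link an application user to a payment-provider customer."""

    gateway: PaymentGateway
    users: UserRepository

    def link_user_to_payments(self, user_id: int, email: str) -> str:
        customer_id = self.gateway.create_customer(email)
        self.users.save_payment_customer_id(user_id, customer_id)
        return customer_id


def load_payment_api_key(env: Mapping[str, str] = os.environ) -> str:
    """Read the credential from the environment; never hard-code it in source."""
    key = env.get("PAYMENTS_API_KEY")
    if not key:
        raise RuntimeError("PAYMENTS_API_KEY is not set")
    return key


# Test doubles: the service can be exercised without network access or a real database.
class FakeGateway:
    def __init__(self) -> None:
        self.emails: list[str] = []

    def create_customer(self, email: str) -> str:
        self.emails.append(email)
        return f"fake-customer-{len(self.emails)}"


class InMemoryUsers:
    def __init__(self) -> None:
        self.links: dict[int, str] = {}

    def save_payment_customer_id(self, user_id: int, customer_id: str) -> None:
        self.links[user_id] = customer_id


users = InMemoryUsers()
service = BillingService(gateway=FakeGateway(), users=users)
print(service.link_user_to_payments(42, "ana@example.com"))  # fake-customer-1
print(users.links)  # {42: 'fake-customer-1'}
```

When the provider's API version changes, only the adapter behind `PaymentGateway` has to change, and the fakes keep the service's tests independent of that upgrade.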

Part V: Strategic Governance for Visionary CTOs

For CTOs, the question is not "Should we use AI?"—that ship has sailed. The question is "How do we govern AI to prevent debt?" Baytech recommends a strategic framework based on Risk Zoning and Proactive Metrics.

5.1 The Traffic Light Protocol for AI Usage

To manage risk without stifling innovation, CTOs should implement a zoned approach to AI code generation. This protocol categorizes different parts of the codebase based on risk and assigns appropriate AI permissions.

| Zone | Definition | AI Permissibility | Baytech Governance Recommendation |
|---|---|---|---|
| Green Zone | Boilerplate, Unit Tests, Documentation, UI Mockups, Internal Prototypes | High (Autonomous) | Use "Vibe Coding" for speed. Review for style only. AI can generate 80-90% of this content. |
| Yellow Zone | Business Logic, API Integrations, Data Transformation, State Management | Medium (Assisted) | Co-Pilot Mode: AI suggests, Human verifies line-by-line. Strict linting required. Code must be fully understood by the reviewer. |
| Red Zone | Core Security, Auth, Payments, Cryptography, PII Handling | Low (Restricted) | Zero Trust: Manual coding preferred. If AI is used, it must undergo deep security audit and penetration testing. |
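Zoning only works if it is enforced where code enters the repository. A minimal sketch, assuming the zones map onto directory paths: a CI step could classify each changed file and apply the strictest policy found in the change set. The path patterns and policy strings below are hypothetical placeholders for a team's own layout; unmatched paths default to the Yellow Zone so that nothing unknown is treated as safe for autonomous generation.

```python
from fnmatch import fnmatchcase

# Hypothetical repository layout; checked in order, so Red rules win over broader matches.
ZONE_RULES = [
    ("red", ["src/auth/*", "src/payments/*", "src/crypto/*", "*pii*"]),
    ("yellow", ["src/services/*", "src/integrations/*", "src/state/*"]),
    ("green", ["tests/*", "docs/*", "prototypes/*", "*.md"]),
]

REVIEW_POLICY = {
    "green": "style review only",
    "yellow": "line-by-line human review, strict linting",
    "red": "manual coding preferred; security audit and penetration test before merge",
}

SEVERITY = {"green": 0, "yellow": 1, "red": 2}


def zone_for(path: str) -> str:
    # In fnmatch patterns "*" also matches "/", so "src/auth/*" covers nested files.
    for zone, patterns in ZONE_RULES:
        if any(fnmatchcase(path, pattern) for pattern in patterns):
            return zone
    return "yellow"  # unknown paths get assisted mode, never autonomous


def strictest_zone(changed_paths: list[str]) -> str:
    zones = [zone_for(path) for path in changed_paths]
    return max(zones, key=SEVERITY.__getitem__, default="green")


change = ["README.md", "src/services/orders.py", "src/payments/refunds.py"]
zone = strictest_zone(change)
print(zone, "->", REVIEW_POLICY[zone])
```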

5.2 The "Refactoring Ratio"

CTOs must track the ratio of refactoring work to feature work. In a healthy codebase, this ratio should be stable.

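- The Warning Sign: Treat a Tech Debt Ratio (TDR, commonly defined as the estimated cost of remediating known issues relative to the cost of building the code) above 20% as a signal that velocity is about to crash. If code generation metrics are rising while refactoring metrics are falling (as in the GitClear data), you are in a danger zone.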

- The Discipline: Teams using AI should allocate more time, not less, to refactoring. Since code generation is faster, the saved time must be reinvested in code review and cleanup. This is the only way to keep the "interest payments" on Comprehension Debt manageable.
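Both warning signs can be computed from data most teams already have. The sketch below uses one common definition of the Tech Debt Ratio (the one SonarQube uses for its maintainability rating): estimated remediation effort divided by estimated development effort. The 0.5 hours-per-line development estimate and the added/moved line counts in the example are assumptions to replace with your own measurements; "moved" lines follow GitClear's use of moved code as a proxy for refactoring.

```python
TDR_WARNING_THRESHOLD = 0.20  # the 20% line from the text


def tech_debt_ratio(remediation_hours: float, lines_of_code: int, dev_hours_per_line: float = 0.5) -> float:
    """Estimated effort to fix known issues divided by estimated effort to write the code."""
    development_hours = lines_of_code * dev_hours_per_line
    if development_hours <= 0:
        raise ValueError("lines_of_code and dev_hours_per_line must be positive")
    return remediation_hours / development_hours


def generation_outpacing_refactoring(added: tuple[int, int], moved: tuple[int, int]) -> bool:
    """Each argument is (previous_period, current_period) line counts from version-control history.

    True when newly added lines grew while moved (refactored) lines shrank.
    """
    return added[1] > added[0] and moved[1] < moved[0]


ratio = tech_debt_ratio(remediation_hours=1_200, lines_of_code=10_000)
print(f"TDR = {ratio:.0%}, over threshold: {ratio > TDR_WARNING_THRESHOLD}")
print(generation_outpacing_refactoring(added=(40_000, 55_000), moved=(6_000, 4_500)))  # True
```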

- Baytech’s Service: Baytech offers Project Rescue services to intervene when this ratio becomes unsustainable, helping teams pay down debt and re-establish healthy baselines. Many of these rescues start after an aggressive AI rollout that hit the same 25% AI-generated-code milestone we examine in our analysis of enterprise AI coding milestones.

5.3 Establishing an "AI Fortress"

For enterprise clients, specifically in regulated industries like Finance and Healthcare, Baytech advises building an "AI Fortress" or "Walled Garden."

- Data Sovereignty: Ensure that internal code and proprietary algorithms are not used to train public models. Contracts with AI providers must explicitly forbid this.

- Private Models: Deploying private instances of LLMs (e.g., via Azure OpenAI) ensures that the "context" of the codebase remains secure and proprietary.

- Strategic Partnership: This aligns with Baytech’s Partnership Approach, where we act as a strategic advisor on infrastructure and security, not just a coding shop. We help clients navigate the vendor landscape to choose the right, secure AI partners.

Part VI: Comparison – Vibe Coding vs. Baytech Protocol

To provide a clear, side-by-side analysis for decision-makers, we contrast the two methodologies across critical dimensions. This comparison highlights why Baytech’s approach is superior for long-term enterprise value.

6.1 Feature Velocity vs. System Stability

| Dimension | Vibe Coding (AI-Only Approach) | Baytech Clean Code Protocol |
|---|---|---|
| Primary Goal | Speed of implementation (Time-to-Code) | Long-term value & stability (Time-to-Value) |
| Code Structure | Ad-hoc, often repetitive (Copy/Paste) | Modular, reusable, DRY (Don't Repeat Yourself) |
| Documentation | Sparse or hallucinated; rarely updated | Comprehensive, self-documenting, & maintained |
| Testing | "It runs, so it works"; minimal regression testing | Rigorous Unit, Integration, & Regression testing |
| Scalability | Fragile; breaks under load; unoptimized queries | Architected for scale from Day 1; performance tested |
| Security | Reactive; vulnerabilities often missed | Proactive; "Secure by Design" principles; SAST/DAST |
| Maintenance | High cost (Comprehension Debt); "Spaghetti" | Predictable; lower TCO over 3-5 years; Modular |

6.2 The Hidden Cost of "Free" Speed

While Vibe Coding may appear faster in the first week of a project, the "crossing point" typically occurs around month 3. At this stage, the Vibe Coding project hits a wall of complexity—the "Spaghetti Point"—where adding new features breaks existing ones. The velocity drops to near zero as the team spends all their time fighting fires. Conversely, the Baytech project, built on a solid foundation, maintains a steady velocity, eventually overtaking the Vibe Coding project in total throughput and ROI. Understanding this inflection point is critical for leaders weighing short-term gains against long-term outcomes, especially when planning major digital investments like those outlined in our 2026 software investment risk strategies.

Part VII: Future Outlook – The Role of the Human Engineer

As we look toward 2026 and beyond, the role of the human engineer is not disappearing; it is elevating. The "AI Trust Paradox" confirms that as AI becomes more capable, human oversight becomes more valuable, not less.

7.1 From Coders to Architects

The future of software development belongs to those who can orchestrate AI, not just consume it. Baytech’s engineers are transitioning from "bricklayers" to "architects." They define the blueprint, the constraints, and the quality standards, while AI handles the material production. This shift requires higher-level thinking, systems design skills, and deep domain knowledge—attributes that Baytech screens for rigorously in its hiring process.

7.2 The Rise of Agentic AI

We are moving from "Chat" interfaces to "Agentic" workflows, where AI agents act as autonomous team members that can plan, code, and test. However, governance of these agents will be the defining challenge.

- Baytech’s Readiness: Baytech is actively monitoring the "Agentic SDLC," developing protocols to manage fleets of AI agents just as one would manage human junior developers—with code review, access controls, and performance monitoring. We are developing frameworks to ensure that agents operate within strict ethical and architectural boundaries.

7.3 Final Recommendation

For Visionary CTOs, the path forward is clear. Embrace AI for its power, but constrain it with engineering rigor. Do not settle for the "vibe" of software; demand the substance of engineering.

- Audit your current AI usage: Are your devs pasting proprietary code into public chatbots?

- Measure your Debt: Implement metrics like TDS to see if your velocity is real or borrowed.

- Partner with Experts: Leverage Baytech Consulting’s experience to build an AI strategy that scales. Whether you need Dedicated Teams to augment your staff or Startup Services to build an MVP right the first time, Baytech has the protocols in place to ensure success.

Frequently Asked Questions (FAQ)

Q1: Isn't "Vibe Coding" just the future of low-code/no-code? A: While they share similarities in democratizing access, Vibe Coding introduces a unique risk: hidden complexity. Traditional no-code platforms have guardrails and predefined logic blocks; you can't easily break the underlying architecture. Vibe Coding generates raw code that can be insecure, inefficient, or hallucinated. It lacks the safety nets of managed platforms unless coupled with a rigorous review process like Baytech’s. It gives you the power of code with the ease of no-code, which is a dangerous combination without oversight.

Q2: Can we use AI to fix the technical debt it created? A: To an extent. AI tools are improving at refactoring and explanation. However, expecting the tool that created the mess to fully clean it up is risky. It requires a human architect to define what "clean" looks like for that specific system. AI can do the sweeping, but a human must point out the dirt. Furthermore, relying on AI to fix AI code creates a feedback loop of potential hallucinations. A human-in-the-loop is essential for validation.

Q3: How does Baytech ensure its engineers aren't just "Vibe Coding"?

A: Baytech fosters a culture of Craftsmanship. Our engineers are evaluated not just on speed, but on code quality, maintainability, and architectural soundness. We use AI as a force multiplier for our expertise, not a substitute for it. We also enforce strict peer review processes where code is scrutinized for logic and security, regardless of its origin. Our Dedicated Teams operate as an extension of your own, adhering to your standards or helping you elevate them.

Q4: What is the "AI Trust Paradox" mentioned in your reports?

A: The AI Trust Paradox is the phenomenon where developers report high productivity gains from AI tools (saving 10+ hours a week) while simultaneously reporting growing distrust in the accuracy of the output (distrust rising from 31% to 46%). It highlights the tension between speed and reliability. It also serves as a warning: while we are moving faster, we may be moving in the wrong direction if we do not verify our course.

Q5: How does Baytech handle data privacy with AI tools?

A: We treat client data with the highest level of security. We use enterprise-grade agreements with AI providers (ensuring no training on client code), deploy local models where necessary, and strictly sanitize inputs to prevent PII leakage. Our "AI Fortress" strategy ensures your IP remains yours. This is a core part of our Partnership Approach: protecting your long-term assets while delivering immediate value.

Internal Resources & Further Reading

- https://www.baytechconsulting.com/blog/enterprise-software-development-services-2025: A deep dive into building scalable systems and how our services align with modern enterprise needs.

- https://www.baytechconsulting.com/blog/legacy-software-modernization-a-guide-to-unlocking-scalability: Strategies for untangling spaghetti code and reducing technical debt in aging systems.

- https://www.baytechconsulting.com/blog/technical-debt-and-how-it-impacts-your-roi: Understand the financial implications of technical debt and how to model the ROI of clean code.

- https://www.baytechconsulting.com/blog/ai-revolution-2025-building-future-workforce: Learn how AI is reshaping the workforce and why governance and upskilling matter as much as tooling.

- https://www.baytechconsulting.com/landing/ai-solutions: Learn how we build secure, enterprise-grade AI applications that drive real business value.

- https://www.baytechconsulting.com/services: Learn how we can step in to stabilize and refactor projects that have suffered from Vibe Coding or other development failures.

About Baytech Consulting

Baytech Consulting is a premier custom software development and consulting firm based in Irvine, California. With a focus on "Clean Code" and strategic partnership, Baytech helps Visionary CTOs and enterprises build scalable, high-performing software solutions. From AI adoption to legacy modernization, Baytech bridges the gap between technical innovation and business value. Our Partnership Approach ensures we are not just a vendor, but a dedicated ally in your digital transformation journey.

Contact Us regarding your next project or to conduct a Technical Debt Assessment. We are ready to help you navigate the AI Trust Paradox and build software that lasts.

About Baytech

At Baytech Consulting, we specialize in helping businesses build scalable, efficient, and high-performing software that evolves with their needs. Our MVP-first approach helps our clients minimize upfront costs and maximize ROI. Ready to take the next step in your software development journey? Contact us today to learn how we can help you achieve your goals with a phased development approach.

About the Author

Bryan Reynolds is an accomplished technology executive with more than 25 years of experience leading innovation in the software industry. As the CEO and founder of Baytech Consulting, he has built a reputation for delivering custom software solutions that help businesses streamline operations, enhance customer experiences, and drive growth.

Bryan’s expertise spans custom software development, cloud infrastructure, artificial intelligence, and strategic business consulting, making him a trusted advisor and thought leader across a wide range of industries.