AI-Native SDLC: A Strategic Blueprint for CTOs

January 30, 2026 / Bryan Reynolds

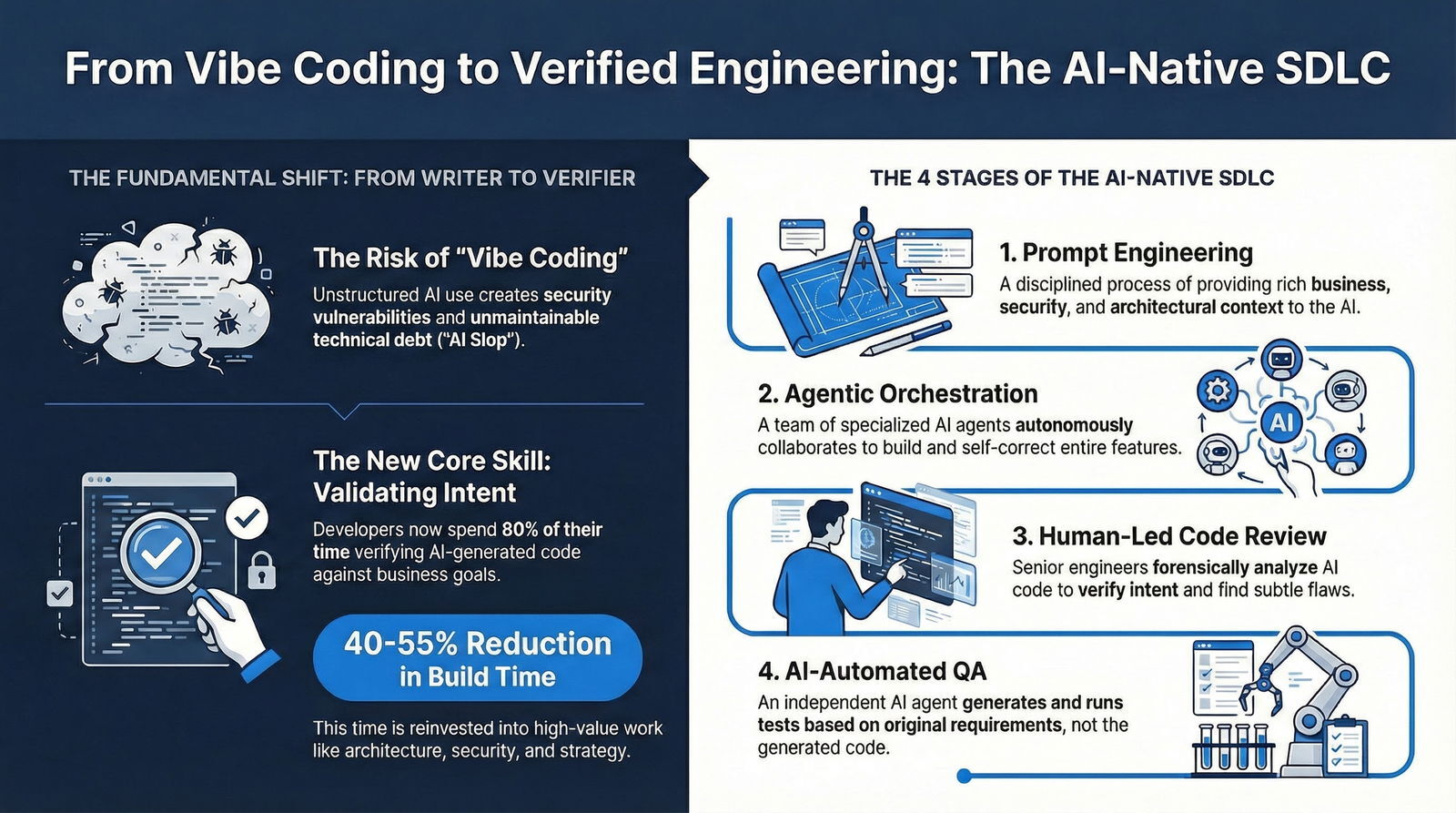

Executive Summary: The Industrialization of Generative Code

The global software development landscape is currently navigating its most significant inflection point since the abstraction of machine code into high-level languages. We are witnessing the effective death of the traditional "Write-Run-Test" loop—a manual, labor-intensive cycle that has defined engineering for fifty years—and the birth of an AI-Native Software Development Life Cycle (SDLC). While viral concepts like "Vibe Coding"—the practice of casually prompting AI to build apps through natural language—capture the imagination of the hobbyist and the startup founder, they pose an existential risk of technical debt, security vulnerability, and architectural drift to the enterprise.

For visionary Chief Technology Officers (CTOs) and Product Managers, the challenge of this era is not merely adopting AI tools to speed up typing. It is a fundamental restructuring of how software is conceived, architected, and delivered. The future belongs to organizations that can successfully transition their engineering teams from the manual labor of writing syntax to the high-level discipline of validating intent.

This report provides an exhaustive, expert-level analysis of this new lifecycle. It details how the ad-hoc nature of generative AI is systematized into a rigorous, four-stage workflow: Prompt Engineering, Agentic Orchestration, Human-Led Code Review, and AI-Automated QA. Drawing on extensive industry data, emerging academic research, and the operational frameworks of Baytech Consulting, we demonstrate how this shift reduces "Build" phase duration by approximately 40% while simultaneously increasing the time allocated to "Architecture" and "Strategy."

We explore the implementation of these stages within robust enterprise ecosystems like Azure DevOps and Kubernetes, arguing that operational maturity—specifically through Baytech’s "Tailored Tech Advantage" and "Rapid Agile Deployment"—is the only viable defense against the rising tide of "AI slop." This is not a speculative forecast; it is a blueprint for the modern digital factory.

1. Introduction: The Evolution of the "Build"

1.1 The "Vibe Coding" Phenomenon vs. Enterprise Reality

In early 2025, Andrej Karpathy, a founding figure in modern AI and former Director of AI at Tesla, coined the term "Vibe Coding". The concept is seductive in its simplicity: a developer (or even a non-technical creator) describes what they want in plain English, "giving in to the vibes," and allows a Large Language Model (LLM) to handle the implementation details. "I just see stuff, say stuff, run stuff, and copy paste stuff, and it mostly works," Karpathy noted, describing a workflow where the human forgets the code even exists.

For a weekend project, a hackathon, or a rapid prototype, this is revolutionary. It democratizes creation, lowering the barrier to entry and allowing for rapid experimentation. Platforms like Replit have embraced this, allowing users to generate functional applications from a single prompt. However, for an enterprise managing complex, long-lived systems—where uptime, security, and scalability are non-negotiable—"Vibe Coding" is a dangerous misnomer. It implies a lack of rigor that, if applied to production environments, invites disaster.

The enterprise reality is distinct. We are not seeing the end of engineering; we are seeing the elevation of engineering. The role of the human developer is shifting from the manual laborer laying bricks (writing syntax) to the architect and site foreman (designing systems and supervising autonomous agents). The "vibe" is not enough; the "structure" is paramount.

1.2 The Crisis of Intent: Why "Vibe" Fails at Scale

The core problem with unstructured "Vibe Coding" in a professional setting is the degradation of intent preservation. When a developer manually writes code, there is a direct cognitive link between the business requirement ("Process a refund") and the logic implementation (if (balance > 0) processRefund()). The developer understands the edge cases because they built the logic path by path.

In a generative model, that link is severed. The LLM is a probabilistic engine, a "glorified guesser" that predicts the next token based on statistical likelihood, not logical understanding. It may generate code that is syntactically perfect and functionally plausible but subtly incorrect—perhaps it misses a race condition in the refund logic or fails to check for a specific compliance flag required by the finance team.

Without a structured lifecycle, this leads to "Shadow AI"—ungoverned scripts and code snippets entering the repository without proper vetting. This accumulation of plausible-but-unverified code creates a new form of technical debt, often referred to as "AI Slop", which creates a brittle infrastructure that is impossible to maintain because no human fully understands how it works.

1.3 The AI-Native SDLC: A Systematized Approach

To harness the speed of AI without sacrificing the stability of engineering, the traditional SDLC (Waterfall or Agile) must evolve. The traditional model relies on human labor for every step: requirements gathering, coding, unit testing, integration, and deployment. The bottleneck has historically been the human typing speed and cognitive load required to translate business logic into syntax.

The AI-Native SDLC inverts this. The "Build" phase—the actual generation of code—is becoming commoditized and automated. The value shifts to the edges:

- The Inputs (Strategy & Prompting): Defining what to build with extreme precision.

- The Outputs (Review & QA): Verifying that what was built is safe, secure, and accurate.

At Baytech Consulting, this is not theoretical. It is the operational reality we deploy for clients in finance, healthcare, and high-tech sectors. The lifecycle has evolved into four distinct, integrated stages:

- Stage 1: Prompt Engineering (The Ideation & Architecture Phase)

- Stage 2: Agentic Orchestration (The Construction Phase)

- Stage 3: Human-Led Code Review (The Quality Assurance Phase)

- Stage 4: AI-Automated QA (The Validation Phase)

This report maps these stages, providing a blueprint for leaders who need to move beyond the hype and implement a sustainable, high-velocity development machine.

2. The Core Philosophy: From "Writing Code" to "Validating Intent"

2.1 The Fundamental Shift in Cognitive Load

To understand the AI-Native SDLC, one must first appreciate the fundamental shift in the developer's cognitive task. For fifty years, "programming" meant learning the syntax of a machine to manually translate human intent (e.g., "I want a user login page") into machine instructions (e.g., public class UserController...). The developer was the translator.

Generative AI acts as a universal translator. It creates the syntax instantly. However, because LLMs are non-deterministic, the output for the same prompt can vary. This creates a "Crisis of Intent."

When a developer writes a line of code manually, they (usually) understand why they wrote it. When an AI generates 500 lines of code in seconds, that link between intent and implementation is weak. The code might compile; it might even run. But does it actually do what the business needs it to do, securely and efficiently?

2.2 Validating Intent as the New Primary Skill

In this new paradigm, the primary skill of the senior engineer is no longer writing the code—it is Validating Intent.

- Old World: The developer spends 80% of their time implementing the logic and 20% verifying it works.

- New World: The AI implements the logic in near-real-time. The developer spends 80% of their time verifying that the implementation matches the architectural intent, checking for subtle regressions, security holes, and "hallucinations".

This shift requires a higher level of seniority. A junior developer can copy-paste code from ChatGPT ("Vibe Coding"), but they often lack the experience to spot the subtle flaw in the authorization logic that leaves the database exposed. This is why Baytech emphasizes Human-Led Code Review as a non-negotiable stage. As noted in industry discussions, "AI can generate code, but it can't preserve institutional reasoning".

2.3 Operational Maturity: The Baytech Differentiator

Many organizations are currently in the "Wild West" phase of AI adoption—developers are secretly using tools like ChatGPT to write code, pasting it into corporate repositories without governance. This is "Shadow AI".

Baytech’s approach transforms this ad-hoc usage into a systematized process. By defining clear stages for the AI-Native lifecycle, we ensure that the speed of AI is balanced with the rigor of engineering discipline. This demonstrates Operational Maturity—we are not just reacting to the trend; we have built a factory that runs on it.

This maturity is built on two pillars:

- Tailored Tech Advantage: We do not use generic prompts. We build proprietary context libraries for our clients. If a client is in the healthcare sector, our prompt engineering framework automatically injects HIPAA compliance constraints into every code generation request.

- Rapid Agile Deployment: We integrate AI into a disciplined Agile process, ensuring that the velocity of code generation matches the velocity of testing and deployment. This builds on our broader DevOps efficiency approach, not a one-off experiment.

3. Stage 1: Prompt Engineering (The New "Write")

In the AI-Native SDLC, the "Write" phase is replaced by Prompt Engineering. However, this is not merely "chatting" with a bot. It is a disciplined engineering practice often referred to as Context Engineering.

3.1 Beyond the Chatbot: Context Architecture

The quality of AI-generated output is strictly determined by the quality of its input (context). "Garbage in, garbage out" still applies, but now it happens at the speed of light.

Effective Prompt Engineering at the enterprise level involves constructing a "Context Architecture" that feeds the AI not just the immediate request, but the surrounding constraints. A simple prompt like "Build a login page" will result in generic, insecure code. A Baytech-engineered prompt involves a complex payload:

- Project Context: The file structure, existing libraries, and specific version dependencies (e.g., "Use React 18 with TypeScript 5.0").

- Business Context: The user persona, the business goal, and the acceptance criteria.

- Architectural Constraints: "Use the existing AuthService class," "Implement using the repository pattern," "Adhere to the Tailwind CSS design system defined in tailwind.config.js."

- Security Context: "Ensure all inputs are sanitized using the OWASP guidelines," "Do not use dangerouslySetInnerHTML."
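The four context layers listed above can be pictured as a small data structure that is rendered into one prompt. What follows is a minimal sketch, not Baytech's actual tooling: the `PromptContext` class and `build_prompt` function are hypothetical names introduced for illustration, and the example constraints are the ones quoted in the list.

```python
from dataclasses import dataclass, field


@dataclass
class PromptContext:
    """Hypothetical container for the four context layers described above."""
    project: list[str] = field(default_factory=list)
    business: list[str] = field(default_factory=list)
    architecture: list[str] = field(default_factory=list)
    security: list[str] = field(default_factory=list)


def build_prompt(request: str, ctx: PromptContext) -> str:
    """Render the request plus every non-empty context layer as one prompt."""
    sections = [
        ("Project context", ctx.project),
        ("Business context", ctx.business),
        ("Architectural constraints", ctx.architecture),
        ("Security context", ctx.security),
    ]
    lines = [f"Task: {request}"]
    for title, items in sections:
        if items:  # skip layers with nothing to say
            lines.append(f"\n{title}:")
            lines.extend(f"- {item}" for item in items)
    return "\n".join(lines)


ctx = PromptContext(
    project=["Use React 18 with TypeScript 5.0"],
    architecture=["Use the existing AuthService class"],
    security=["Do not use dangerouslySetInnerHTML"],
)
prompt = build_prompt("Build a login page", ctx)
print(prompt)
```

Keeping the layers as structured data rather than free text makes it possible to review, version, and reuse them like any other engineering artifact.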

This elevates the "Prompt Engineer" to a role similar to a Technical Architect. They must understand the system well enough to describe it accurately to the AI.

3.2 The Baytech "Context Library" Strategy

This is where Baytech's Tailored Tech Advantage comes into play. We do not rely on developers remembering to add these constraints. We build proprietary context libraries for our clients.

For example, when working with a client in the construction estimating sector, our prompt library includes specific definitions for their risk-scoring models. When a developer asks the AI to "update the bid calculation," the system automatically injects the mathematical formulas and business rules governing that calculation into the prompt context.

This prevents the AI from generating generic, "tutorial-style" code that doesn't fit the client's specific ecosystem. It ensures that even the first draft of the code is aligned with the client's "Operational DNA".
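One way to picture the automatic injection is a lookup keyed on trigger phrases. The sketch below is an assumption about how such a library could work, not a description of Baytech's system: `CONTEXT_LIBRARY`, `inject_context`, and the placeholder rules are all hypothetical.

```python
# Hypothetical context library: trigger phrase -> rules to inject.
# The rules are placeholders, not real client business rules.
CONTEXT_LIBRARY = {
    "bid calculation": [
        "Apply the client's risk-scoring model before computing any bid total.",
        "Round only the final bid, never intermediate values.",
    ],
    "patient": [
        "Treat all patient fields as protected health information.",
    ],
}


def inject_context(request: str, library: dict[str, list[str]]) -> str:
    """Append every rule set whose trigger phrase appears in the request."""
    lowered = request.lower()
    rules = [
        rule
        for trigger, rule_set in library.items()
        if trigger in lowered
        for rule in rule_set
    ]
    if not rules:
        return request  # nothing relevant, send the request unchanged
    bullet_list = "\n".join(f"- {rule}" for rule in rules)
    return f"{request}\n\nMandatory business rules:\n{bullet_list}"


enriched = inject_context("Update the bid calculation", CONTEXT_LIBRARY)
print(enriched)
```

A production system would more plausibly use retrieval over indexed documentation than substring matching, but the principle is the same: the developer does not have to remember the rules.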

3.3 Advanced Prompting Techniques for High-Fidelity Generation

We employ advanced prompting techniques to ensure reliability and reduce the "hallucination rate":

- Chain-of-Thought (CoT): We instruct the AI to "think step-by-step" and explain its logic before generating the code. Research shows this significantly reduces logical errors in complex tasks.

- One-Shot and Few-Shot Learning: We provide the AI with examples of "good code" from the client's existing codebase. If the client prefers a specific way of handling API errors, we show the AI an example of that pattern so it can mimic the style.

- Plan Mode (Pseudo-Code First): Before generating the full syntax, we ask the AI to generate a high-level plan or pseudo-code. This allows the human architect to validate the approach before the AI wastes tokens generating the implementation. This "measure twice, cut once" approach saves time and computational resources.
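The plan-first technique in particular lends itself to a short illustration. This is a minimal sketch under stated assumptions: `Model` stands in for any LLM client call, `fake_model` is a deterministic stub so the example runs without an API, and `generate_with_plan` is a hypothetical helper name.

```python
from typing import Callable, Optional

Model = Callable[[str], str]  # stand-in for any LLM client call


def generate_with_plan(
    task: str, model: Model, approve: Callable[[str], bool]
) -> Optional[str]:
    """Ask for a plan first; request code only once the plan is approved."""
    plan = model(f"Think step-by-step and outline a plan (no code) for: {task}")
    if not approve(plan):
        return None  # a rejected plan costs one short call, not a full build
    return model(f"Implement this approved plan.\nTask: {task}\nPlan:\n{plan}")


def fake_model(prompt: str) -> str:
    """Deterministic stub so the sketch runs without any API."""
    if "outline a plan" in prompt:
        return "1. Validate input\n2. Call AuthService\n3. Return token"
    return "def login(): ..."


code = generate_with_plan(
    "Add login endpoint", fake_model, approve=lambda plan: "AuthService" in plan
)
rejected = generate_with_plan(
    "Add login endpoint", fake_model, approve=lambda plan: False
)
print(code, rejected)
```

In practice the `approve` callback would be a human architect reading the plan, which is the point of the gate.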

3.4 Integration with IDEs

This stage is integrated directly into the developer's environment (VS Code or Visual Studio 2022). Baytech engineers leverage tools like GitHub Copilot and Cursor, configured with custom instructions. This ensures that the "Vibe Coding" happening at the individual developer's desk is actually "Guided Engineering" constrained by the organization's standards and broader DevOps toolchain choices.

4. Stage 2: Agentic Orchestration (The New "Run/Integrate")

While Prompt Engineering handles the generation of individual functions or files, Agentic Orchestration manages the complexity of building entire systems. This is the "Construction Phase" of the AI-Native lifecycle.

4.1 From Copilots to Autonomous Agents

A "Copilot" (like GitHub Copilot) is a passive assistant that suggests lines of code as you type. An AI Agent is an active entity that can reason, plan, and execute multi-step tasks autonomously.

Agentic Orchestration is the management of these agents. It is the layer where we coordinate multiple specialized AI agents to work together. Instead of one generalist AI trying to do everything, we deploy a team of specialist agents:

- The Architect Agent: Breaks down a high-level user story into technical tasks.

- The Coder Agent: Writes the code for a specific task.

- The Reviewer Agent: Critiques the code against style guides and linting rules.

- The Test Agent: Generates unit tests for the code.

4.2 The Orchestration Workflow Architecture

At Baytech, we leverage frameworks like Microsoft's AutoGen, Semantic Kernel, and the Azure AI Agent Service to build these workflows. The architecture typically runs on Azure Kubernetes Service (AKS) to ensure scalability and isolation.

The workflow proceeds as follows:

- Task Decomposition: The "Manager Agent" (or Orchestrator) receives a feature request (e.g., "Add a Stripe payment gateway"). It analyzes the request and breaks it down into sub-tasks: modify the Postgres database schema, update the Backend API (C#/.NET), and build the Frontend UI (React).

- Delegation: The Manager assigns the DB task to the "Database Specialist Agent," the API task to the "Backend Agent," and the UI task to the "Frontend Agent."

- Collaboration & Tool Use: The agents "talk" to each other. The Backend Agent shares the new API schema with the Frontend Agent to ensure the UI sends the correct data structure. These agents have access to tools: they can run CLI commands, read files, and check documentation.

- Execution & Loop: The agents interact with the codebase. If the Coder Agent writes code that fails to compile, the "Compiler Agent" feeds the error log back to the Coder Agent, which then self-corrects and retries. This "self-healing" loop happens without human intervention.
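The "self-healing" loop in the last step can be sketched in a few lines. This is an illustration only and does not use AutoGen or Semantic Kernel: `stub_coder` is a hypothetical stand-in for a Coder Agent, and Python's built-in `compile()` plays the role of the "Compiler Agent."

```python
from typing import Callable, Optional

Coder = Callable[[str, Optional[str]], str]  # (task, last_error) -> source code


def compile_check(source: str) -> Optional[str]:
    """Play the 'Compiler Agent': return an error message, or None on success."""
    try:
        compile(source, "<generated>", "exec")
        return None
    except SyntaxError as exc:
        return f"line {exc.lineno}: {exc.msg}"


def self_healing_build(
    task: str, coder: Coder, max_attempts: int = 3
) -> tuple[str, int]:
    """Feed compile errors back to the coder until the code compiles."""
    error: Optional[str] = None
    for attempt in range(1, max_attempts + 1):
        source = coder(task, error)
        error = compile_check(source)
        if error is None:
            return source, attempt
    raise RuntimeError(f"still failing after {max_attempts} attempts: {error}")


def stub_coder(task: str, last_error: Optional[str]) -> str:
    """Stub agent: the first draft has a syntax error, the retry fixes it."""
    if last_error is None:
        return "def refund(balance:\n    return balance > 0"
    return "def refund(balance):\n    return balance > 0"


source, attempts = self_healing_build("write refund check", stub_coder)
print(attempts)
```

The `max_attempts` cap matters: an unbounded retry loop is how an agent quietly burns tokens on a task it cannot solve, so a failed loop should escalate to a human rather than keep trying.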

4.3 Why Orchestration Matters for CTOs

This stage is the engine of the 40% reduction in build time. It allows for parallel processing. While a human developer can only focus on one file at a time, an agentic system can update the database, the API, and the frontend simultaneously.

However, this power comes with risk. Without orchestration, agents can "hallucinate" conflicting changes. This is why Baytech's "Human-Led" approach is vital. We do not let agents commit directly to the main branch. They commit to a feature branch, which then triggers the next phase: Human Review.

The orchestration layer is also where we implement Governance. We can set rules within the Orchestrator Agent—for example, "Never allow a change to the User table without flagging it for a Senior DBA review." This brings the "Tailored Tech Advantage" into the automation layer itself and fits neatly alongside broader AI-driven software development strategies.
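A governance rule of this kind can be expressed as data that the orchestrator checks before accepting a change. The sketch below is hypothetical: `GovernanceRule`, `RULES`, and `required_reviews` are illustrative names, and the regular expression is a simplified assumption about what a change to the User table looks like in a SQL diff.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class GovernanceRule:
    """Hypothetical rule: a pattern in a proposed change and who must review it."""
    pattern: str
    required_reviewer: str


RULES = [
    # Flags ALTER/DROP statements against a table named User or Users.
    GovernanceRule(r"\b(?:ALTER|DROP)\s+TABLE\s+\"?Users?\b", "Senior DBA"),
]


def required_reviews(diff_text: str, rules: list[GovernanceRule]) -> set[str]:
    """Return the reviewers a proposed change must be routed to."""
    return {
        rule.required_reviewer
        for rule in rules
        if re.search(rule.pattern, diff_text, re.IGNORECASE)
    }


flagged = required_reviews('ALTER TABLE "User" ADD COLUMN mfa boolean;', RULES)
unflagged = required_reviews("ALTER TABLE Orders ADD COLUMN note text;", RULES)
print(flagged, unflagged)
```

Because the rules live outside the agents' prompts, an agent cannot talk itself out of them.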

5. Stage 3: Human-Led Code Review (The New "Quality Gate")

If Prompt Engineering is the gas pedal, Human-Led Code Review is the steering wheel. It is the most critical phase of the AI-Native SDLC, yet it is the one most often neglected by "Vibe Coding" enthusiasts.

5.1 The Trust Paradox

Baytech’s internal research and broader market analysis highlight a "Trust Paradox" in 2025: adoption of AI tools is near-universal, yet trust in their output is plummeting.

- 46% of developers do not trust the accuracy of AI tools.

- 66% of developers report spending more time fixing subtle AI bugs than writing code from scratch.

This "AI Slop"—code that looks correct at a glance but contains subtle logical flaws, inefficiencies, or security vulnerabilities—is the new technical debt. It is the result of using AI without a structured review process, a concern we examine in depth in our analysis of the AI code revolution and productivity paradox.

5.2 The Shift in Review Dynamics

In a traditional code review, the reviewer looks for syntax errors and logic flaws. In an AI-Native review, the reviewer acts as a forensic analyst. They are looking for different types of errors:

- Hallucinations: Calls to libraries that don’t exist or methods that were deprecated years ago.

- Security Gaps: Valid syntax that introduces SQL injection or XSS vulnerabilities because the AI didn't understand the specific security context of the application.

- Business Logic Drift: Code that is technically perfect but solves the wrong problem because the prompt was slightly ambiguous.

- Context Blindness: The AI might introduce a new library that conflicts with the existing dependency tree, or write code that violates the project's architectural pattern (e.g., putting database logic in the controller).

5.3 The Baytech Review Protocol

To combat this, Baytech employs a rigorous review protocol:

- Seniority Requirement: AI-generated code is reviewed by senior engineers, not juniors. Juniors often lack the intuition to spot "confident but wrong" AI code. They may be impressed by the complexity of the generated solution and assume it is correct. A senior engineer knows that complexity is often a sign of failure.

- Intent Verification: The reviewer does not just read the code; they verify it against the original intent (the User Story). They ask, "Does this code fulfill the promise of the prompt?"

- Automated Pre-Review: Before a human sees the code, we run it through static analysis tools (like SonarQube) to catch basic syntax and security issues. This ensures high-paid senior engineers aren't wasting time on trivial errors.

- "Red Teaming" the Code: We encourage reviewers to actively try to break the AI-generated logic. Because AI often optimizes for the "happy path," humans must rigorously test the edge cases and failure modes.

This stage is where we deliver the Tailored Tech Advantage. By enforcing strict human oversight, we ensure that the speed of AI doesn't compromise the enterprise-grade quality our clients expect. We turn "Vibe Coding" into "Verified Engineering."

6. Stage 4: AI-Automated QA (The New "Test")

The final stage of the AI-Native SDLC is AI-Automated QA. Testing has traditionally been a bottleneck, often done manually or with brittle automated scripts that break whenever the UI changes. AI transforms this into a self-healing, adaptive process.

6.1 The "Two-Agent" System: Separation of Concerns

Just as we use AI to write the application code, we use AI to write the tests. However, we follow a strict separation of concerns: The AI agent that writes the code should never write the tests for that code.

If the same agent does both, it is "marking its own homework". It will likely share the same blind spots and logical fallacies in both the implementation and the test, leading to passing tests that hide critical bugs.

At Baytech, we use a separate "QA Agent" (often running on a different model or with a different prompt context) to generate the test cases based on the original User Story, not the generated code. This ensures independent validation of the intent, and aligns well with our broader focus on operationalizing QA for profit and risk reduction.

6.2 Self-Healing Tests and "Intent-Based" Testing

One of the biggest pain points in DevOps is maintaining test scripts. A simple change in a button's CSS class or ID can break a Selenium script, causing the build to fail even though the application works perfectly.

AI-driven testing tools (like those integrated into Azure DevOps or specialized tools like TestRigor) use "Computer Vision" and semantic understanding to test. They don't look for div #submit-btn; they look for "a button that looks like a submit button".

If the underlying ID changes, the AI adapts, "healing" the test automatically. This drastically reduces the maintenance burden and allows for Rapid Agile Deployment. We can deploy faster because our regression suite is robust and resilient.
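The idea behind a self-healing locator can be shown without any vision model. The sketch below swaps in a much simpler technique, a semantic fallback on tag and visible text, and is not how TestRigor or Azure DevOps tooling works internally. The `Element` dictionaries are a simplified stand-in for DOM nodes, and `find_submit` is a hypothetical helper.

```python
from typing import Optional

Element = dict[str, str]  # simplified stand-in for a DOM node


def find_submit(
    elements: list[Element], preferred_id: str = "submit-btn"
) -> tuple[Optional[Element], str]:
    """Try the brittle ID first, then fall back to what the element means."""
    for el in elements:
        if el.get("id") == preferred_id:
            return el, "id"
    for el in elements:
        is_button = el.get("tag") == "button" or el.get("type") == "submit"
        if is_button and "submit" in el.get("text", "").lower():
            return el, "semantic"  # the test 'heals' instead of failing
    return None, "not_found"


old_page = [{"tag": "button", "id": "submit-btn", "text": "Submit"}]
new_page = [
    {"tag": "a", "id": "help", "text": "Help"},
    {"tag": "button", "id": "btn-primary-7f3", "text": "Submit order"},
]

_, old_strategy = find_submit(old_page)
healed, new_strategy = find_submit(new_page)
print(old_strategy, new_strategy)
```

Returning the strategy alongside the element lets the pipeline report that a test healed itself, so the stale ID can still be cleaned up later.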

6.3 Automated Test Case Generation from Requirements

We leverage tools that ingest the raw Requirements (User Stories/Tickets) from Azure DevOps and automatically generate structured test cases. This closes the loop:

- Prompt: Human defines intent.

- Code: AI Agent builds implementation.

- Test: Independent AI Agent builds tests based on the Prompt, not the Code.

- Verify: The Test Agent runs against the Code Agent's work.

This triangulation ensures that the final product aligns with the business goal, not just the code's internal logic. It validates that the software behaves as the business intended, not just as the developer coded.
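The difference between testing against intent and testing against the code can be made concrete with the refund example from earlier in this report. In this minimal sketch the acceptance criteria are hypothetical values a QA agent might derive from a "Process a refund" user story, and `can_refund` stands in for the Coder Agent's output.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class AcceptanceCriterion:
    """One checkable statement taken from the user story, not from the code."""
    description: str
    balance: float
    expect_refund: bool


# Hypothetical criteria derived from the story, independent of any implementation.
CRITERIA = [
    AcceptanceCriterion("positive balance is refunded", 25.0, True),
    AcceptanceCriterion("zero balance is not refunded", 0.0, False),
    AcceptanceCriterion("negative balance is not refunded", -5.0, False),
]


def can_refund(balance: float) -> bool:
    """Stand-in for the Coder Agent's implementation under test."""
    return balance > 0


def verify(
    implementation: Callable[[float], bool], criteria: list[AcceptanceCriterion]
) -> list[str]:
    """Return the description of every criterion the implementation violates."""
    return [
        c.description
        for c in criteria
        if implementation(c.balance) != c.expect_refund
    ]


failures = verify(can_refund, CRITERIA)
buggy_failures = verify(lambda balance: balance >= 0, CRITERIA)
print(failures, buggy_failures)
```

A test written by reading the buggy `balance >= 0` implementation would likely assert that a zero balance is refunded, and pass. The criteria derived from the story catch it.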

6.4 Integration with Azure DevOps Pipelines

This entire QA process is integrated into our Azure DevOps Pipelines. When a pull request is created:

- The pipeline triggers a build.

- An ML model predicts the likelihood of build success based on historical data.

- The automated test suite runs, prioritized by AI to run the most relevant tests first.

- If a test fails, the AI analyzes the logs and suggests a fix to the developer.

This creates a continuous feedback loop that catches defects early, preventing them from reaching production.
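Test prioritization does not have to start with a trained model. As a rough sketch, the ranking below uses a plain heuristic (overlap with changed files, then recent failure rate) in place of the ML-based prioritization described above. `SuiteEntry` and `prioritize` are hypothetical names, and the sample data is invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SuiteEntry:
    name: str
    covered_files: frozenset[str]
    recent_failure_rate: float  # 0.0 to 1.0


def prioritize(tests: list[SuiteEntry], changed_files: set[str]) -> list[str]:
    """Order tests so those touching changed files (then flaky ones) run first."""
    def score(entry: SuiteEntry) -> tuple[int, float]:
        overlap = len(entry.covered_files & changed_files)
        return (overlap, entry.recent_failure_rate)

    return [entry.name for entry in sorted(tests, key=score, reverse=True)]


suite = [
    SuiteEntry("test_login", frozenset({"api/auth.py"}), 0.30),
    SuiteEntry("test_checkout", frozenset({"api/payments.py"}), 0.10),
    SuiteEntry("test_profile_ui", frozenset({"ui/profile.tsx"}), 0.05),
]
order = prioritize(suite, changed_files={"api/payments.py"})
print(order)
```

Running the most relevant tests first shortens the time to the first meaningful failure, which is what the developer waiting on the pull request actually cares about.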

7. The Baytech Strategic Edge: Tailored Tech & Rapid Agile

The AI-Native SDLC is not a commodity; it is a capability that must be built. Baytech Consulting differentiates itself by wrapping this new lifecycle in two core proprietary frameworks: Tailored Tech Advantage and Rapid Agile Deployment.

7.1 Tailored Tech Advantage: The Anti-Commodity

In an era where "anyone can code," the code itself becomes less valuable. The value lies in the System Architecture and Business Alignment.

Tailored Tech Advantage is our philosophy that software should be a perfect reflection of your unique business model, not a generic wrapper around an LLM.

- Custom Context Libraries: As mentioned, we build prompt libraries specific to your industry (e.g., specific risk-scoring models for construction bidding).

- Hybrid Architectures: We know when to use AI and when not to. We integrate AI agents into robust, legacy-compatible microservices architectures. We use Harvester HCI and Rancher for managing Kubernetes clusters, ensuring that your AI workloads are orchestrated with the same rigor as your transactional systems.

- Proprietary Knowledge Integration: We train the AI on your documentation and your code patterns, so the output feels like it was written by your best engineer.

All of this is grounded in a broader view of how predictive and generative AI transform business value across sales, marketing, and product.

7.2 Rapid Agile Deployment: The Velocity Engine

AI increases the speed of coding, but without an agile process, that just creates a "traffic jam" at the testing and deployment phase.

Rapid Agile Deployment is our methodology for matching the process velocity to the coding velocity.

- Shorter Sprints: We move from 2-week sprints to 1-week or even continuous flow, enabled by faster build times.

- Fixed Time/Cost, Variable Scope: We use the speed of AI to iterate on scope dynamically. If AI saves us 3 days on a feature, we reinvest that time immediately into the next highest-priority item in the backlog.

- Continuous Feedback: AI allows us to prototype a working MVP in days, not months. This means we get user feedback earlier, reducing the risk of building the wrong product.

This approach also supports better partnerships with software vendors and delivery teams, because cadence and expectations are clear from the outset.

8. The Economic Case: ROI & Resource Reallocation

For the CFO and Strategic Head of Sales, the shift to an AI-Native SDLC is a financial imperative. The data suggests a massive potential for efficiency gains, but only if managed correctly.

8.1 The "40% Build Reduction" Reality

Industry data indicates that AI-assisted development can reduce the time spent on the "Build" phase (coding and unit testing) by 40% to 55%.

- Pinterest reported a 6x improvement in build phase velocity.

- Publicis Sapient data suggests a 50-70% reduction in engineering time for specific tasks.

- Studies show that developers using AI coding assistants complete tasks 56% faster.

8.2 Reinvesting the Dividend: The Strategy Shift

At Baytech, we do not view this 40% saving as a reason to cut headcount. We view it as a Strategy Dividend. We reinvest this time into:

- Architecture: Building more robust, scalable systems that can handle future growth.

- User Experience (UX): Spending more time refining the "vibe" and flow of the application to ensure it delights users, connecting directly to our dedicated UX design services.

- Security: Conducting deeper penetration testing and threat modeling to ensure the application is bulletproof.

This shift allows us to deliver a product that is not just "done faster," but is better aligned with your business goals. We move the needle from "Output" (lines of code) to "Outcome" (business value).

8.3 Cost Structure: CAPEX to OPEX?

There is a subtle shift in cost structure. While labor hours on coding decrease, the compute costs (inference costs for LLMs, agent hosting on Azure) increase. However, this trade-off is highly favorable. The cost of a developer hour ($100+) significantly outweighs the cost of even millions of tokens ($10-20). This improves the unit economics of software production, allowing for more experimentation and "shots on goal" for the same budget.
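The trade-off is easy to check with back-of-the-envelope arithmetic. The sketch below uses the figures quoted above ($100 per developer hour, and $20 read as a price per million tokens, which is an assumption about the intended unit). The hours saved and token volume are hypothetical inputs, not measured results.

```python
def net_saving(
    hours_saved: float,
    hourly_rate: float,
    tokens_used: int,
    price_per_million_tokens: float,
) -> float:
    """Labor cost avoided minus inference cost incurred, in dollars."""
    labor = hours_saved * hourly_rate
    inference = tokens_used / 1_000_000 * price_per_million_tokens
    return labor - inference


def break_even_tokens(hourly_rate: float, price_per_million_tokens: float) -> float:
    """Tokens that cost the same as one developer hour."""
    return hourly_rate / price_per_million_tokens * 1_000_000


# Hypothetical feature: 3 developer hours saved, 2 million tokens consumed.
saving = net_saving(3, 100, 2_000_000, 20)
tokens_per_hour = break_even_tokens(100, 20)
print(saving, tokens_per_hour)
```

Under these assumptions, one developer hour buys about five million tokens, so an agentic workflow has to be very wasteful before inference costs cancel out the labor saved.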

9. Risks: The Shadow of AI

Operational maturity requires an honest assessment of risk. The AI-Native SDLC is not without its perils, and any CTO deploying these agents must be aware of them.

9.1 The "Lazy Coder" and Skill Atrophy

There is a long-term risk that junior developers, relying too heavily on AI, will fail to develop the "mental muscle" needed to understand complex systems. If an AI writes the hard logic, the junior never learns why it works. This "skill atrophy" is a threat to the future of the industry.

Baytech mitigates this by enforcing "AI-Assisted, Not AI-Replaced" policies. We use AI to automate the mundane, but we require engineers to understand the why behind every line. We treat code review as a mentorship opportunity, where seniors quiz juniors on the AI-generated code to ensure they understand it. This people-first approach mirrors our broader thinking on building the future AI-enabled workforce.

9.2 Data Privacy and IP Leakage

AI models can inadvertently be trained on insecure code patterns. If not filtered, they can suggest vulnerable code. Furthermore, sending proprietary code snippets to public LLMs (like standard ChatGPT) poses a data leakage risk.

Baytech’s Tailored Tech Advantage includes a "Secure AI Gateway." We use enterprise-grade instances of models (via Azure OpenAI Service) that guarantee zero data retention—your code is never used to train the public model. We also implement "Guardrails" that scan all AI output for secrets (API keys) and vulnerabilities before it even reaches the developer.
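A guardrail that scans AI output for secrets can start as a handful of patterns. The sketch below is illustrative and not the actual Secure AI Gateway: `SECRET_PATTERNS` and `scan_for_secrets` are hypothetical names, the patterns are a small sample, and a production scanner would rely on a maintained rule set.

```python
import re

# Illustrative patterns only; a production scanner would use a maintained rule set.
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key_block": re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
    "hardcoded_api_key": re.compile(
        r"(?i)\bapi[_-]?key\s*[:=]\s*['\"][^'\"]{16,}['\"]"
    ),
}


def scan_for_secrets(text: str) -> list[str]:
    """Return the names of every secret pattern found in generated output."""
    return sorted(
        name for name, pattern in SECRET_PATTERNS.items() if pattern.search(text)
    )


# AKIAIOSFODNN7EXAMPLE is the placeholder key used in AWS documentation.
findings = scan_for_secrets('client = connect(key="AKIAIOSFODNN7EXAMPLE")')
clean = scan_for_secrets("print('hello')")
print(findings, clean)
```

Running the scan on the model's response, before it is shown to the developer, means a leaked credential never reaches the editor, let alone the repository.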

9.3 The "Black Box" Problem

As AI agents become more autonomous, there is a risk that the system becomes a "Black Box" that no one understands. If an agentic workflow breaks, debugging it can be incredibly difficult if there are no logs of the agent's "thought process."

We solve this through Observability. We log every prompt, every agent decision, and every tool execution. We treat the agent's activity logs as part of the system documentation, ensuring that we can always trace why a decision was made.
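As a minimal sketch of what such logging might look like, the example below records each prompt, decision, and tool execution as a structured event tied to a trace ID. `AgentEvent` and `AgentLog` are hypothetical names, and the events themselves are invented for illustration.

```python
import json
import time
from dataclasses import asdict, dataclass, field


@dataclass
class AgentEvent:
    agent: str
    kind: str  # "prompt", "decision", or "tool_call"
    detail: str
    trace_id: str  # ties every event back to one feature request
    timestamp: float = field(default_factory=time.time)


class AgentLog:
    """Append-only record of what each agent did and why."""

    def __init__(self) -> None:
        self._events: list[AgentEvent] = []

    def record(self, event: AgentEvent) -> None:
        self._events.append(event)

    def trace(self, trace_id: str) -> list[AgentEvent]:
        """Reconstruct the ordered history of one request."""
        return [e for e in self._events if e.trace_id == trace_id]

    def to_jsonl(self) -> str:
        """Serialize as JSON Lines for shipping to a log store."""
        return "\n".join(json.dumps(asdict(e)) for e in self._events)


log = AgentLog()
log.record(AgentEvent("Manager", "decision", "split into DB, API, UI tasks", "feat-42"))
log.record(AgentEvent("Backend", "tool_call", "dotnet build", "feat-42"))
log.record(AgentEvent("Frontend", "prompt", "build payment form", "feat-43"))
steps = [(e.agent, e.kind) for e in log.trace("feat-42")]
print(steps)
```

The trace ID is the important design choice: it lets an engineer replay the full chain of agent reasoning for a single feature request instead of searching through interleaved output.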

10. Conclusion: The Operational Maturity Mandate

The transition to an AI-Native SDLC is inevitable. The question is not if you will adopt it, but how.

Will you allow "Vibe Coding" to create a tangled mess of unverified "AI Slop" in your codebase? Or will you embrace a structured, disciplined approach that treats AI as a powerful engine within a rigorous engineering chassis?

At Baytech Consulting, we have chosen the latter. By formalizing the stages of Prompt Engineering, Agentic Orchestration, Human-Led Review, and AI-Automated QA, we deliver software that is faster, smarter, and strategically aligned. This is not just technology; it is operational maturity. It is the difference between a prototype and a product.

Next Steps for the Visionary CTO

- Audit Your AI Usage: Identify where "Shadow AI" exists in your organization today.

- Define Your Context: Begin building your "Context Libraries"—the rules and constraints that define your business.

- Partner for Maturity: Engage with a partner like Baytech who can bring the Tailored Tech Advantage and Rapid Agile Deployment frameworks to your organization, and help you avoid the security pitfalls highlighted in our analysis of vibe coding’s impact on enterprise security.

Ready to transform your development lifecycle?

- Learn how we prevent project derailment: https://www.baytechconsulting.com/blog/preventing-scope-creep-software-projects

- Understand the risks we mitigate: https://www.baytechconsulting.com/blog/the-ai-trust-paradox-software-development-2025

- Discover our unique approach: https://www.baytechconsulting.com/blog/custom-software-competitive-advantage

Frequently Asked Questions

Q: Does "Vibe Coding" mean I don't need senior engineers anymore?

A: No, it means you need them more than ever. While AI can replace the manual labor of writing syntax (the "junior" task), it cannot replace the architectural judgment, security awareness, and business context that a senior engineer provides. The role shifts from "builder" to "reviewer" and "orchestrator," requiring higher-level operational maturity.

Q: How does Baytech’s approach differ from just using GitHub Copilot?

A: GitHub Copilot is a tool; Baytech provides a system. Copilot helps an individual write code faster. Baytech’s Tailored Tech Advantage and Agentic Orchestration frameworks manage the entire lifecycle, from ensuring the prompt aligns with your business goals to validating the security of the output and automating the testing. We wrap the tool in a process that guarantees enterprise-grade quality and aligns with your long-term software investment risk strategy.

Q: Will the AI-Native SDLC lower the cost of my project?

A: It creates efficiency (speed), but the primary value is usually realized in velocity and quality rather than a direct reduction in total budget. The 40% time savings in the "Build" phase is typically reinvested into better Architecture, Strategy, and more rigorous Testing. You get a much better, more robust product to market significantly faster for a similar investment level.

Q: Is my proprietary data safe if we use AI to write code?

A: Yes, but only if you use an Enterprise-grade approach. Using public, free tools (like consumer ChatGPT) puts your IP at risk. Baytech utilizes private, enterprise instances of models (via Azure OpenAI Service) with strict "zero data retention" policies, ensuring your code and business logic never leave your secure environment to train public models.

About Baytech

At Baytech Consulting, we specialize in guiding businesses through this process, helping you build scalable, efficient, and high-performing software that evolves with your needs. Our MVP-first approach helps our clients minimize upfront costs and maximize ROI. Ready to take the next step in your software development journey? Contact us today to learn how we can help you achieve your goals with a phased development approach.

About the Author

Bryan Reynolds is an accomplished technology executive with more than 25 years of experience leading innovation in the software industry. As the CEO and founder of Baytech Consulting, he has built a reputation for delivering custom software solutions that help businesses streamline operations, enhance customer experiences, and drive growth.

Bryan’s expertise spans custom software development, cloud infrastructure, artificial intelligence, and strategic business consulting, making him a trusted advisor and thought leader across a wide range of industries.