Unlocking 2026: The Future of AI-Driven Software Development

January 06, 2026 / Bryan Reynolds

Executive Summary: The "Willing but Reluctant" Reality

The software development industry currently stands at a precarious and transformative inflection point. As we traverse the fiscal landscape of 2025 and look toward 2026, the narrative surrounding Artificial Intelligence in software engineering has fundamentally shifted. We have moved past the initial phase of breathless hype—characterized by utopian predictions of ten-fold productivity gains and the "end of coding"—into a nuanced, complex, and data-driven reality check. The initial promise that AI would act simply as a force multiplier for speed has evolved into a realization that we are witnessing a complete industrial transformation of the software delivery lifecycle.

For executive leaders at B2B firms—particularly those in high-stakes sectors such as finance, healthcare, and enterprise software—understanding this landscape is no longer merely a question of whether to adopt AI tools. Adoption is effectively a foregone conclusion. The challenge now lies in managing the systemic volatility, quality assurance risks, and workflow bottlenecks that these tools introduce. The data suggests a distinct paradox: while individual developer velocity has undeniably increased, organizational throughput often faces new, unforeseen impediments. We are witnessing the "Industrialization of Code," where the historical scarcity of syntax generation has been replaced by a new scarcity: verification, architectural coherence, and human judgment.

This report serves as an exhaustive analysis of the impact of AI on software developer productivity in the 2025–2026 cycle. It synthesizes data from tens of thousands of developers, major cloud infrastructure providers, and extensive ecosystem surveys to answer the critical question: Is AI actually making us faster, or is it just making us busier? By examining the friction between adoption and trust, the migration of bottlenecks, and the rise of agentic workflows, we provide a strategic roadmap for navigating this new era of software engineering.

1. The State of Adoption: Ubiquity Without Unanimity

The widespread integration of AI into the developer workspace represents arguably the fastest tooling adoption curve in the history of software engineering, outpacing even the adoption of Git or the transition to cloud computing. However, the nature of this adoption has changed significantly between early 2024 and late 2025. It is no longer a story of experimentation; it is a story of saturation, yet one marked by profound ambivalence.

1.1 The Saturation Point: A New Baseline

According to the comprehensive 2025 Stack Overflow Developer Survey, AI usage has effectively saturated the market, establishing a new baseline for what constitutes a modern development environment. Approximately 84% of respondents are now either currently using or planning to use AI tools in their development process, a significant leap from 76% in the previous year. This data point indicates that AI assistance is no longer a competitive differentiator in terms of access; it is table stakes. A development team without access to Large Language Model (LLM) assistance is now operating at a structural disadvantage regarding raw text generation speed.

Jellyfish’s "2025 AI Metrics in Review" corroborates this widespread penetration, reporting that 90% of engineering teams have integrated AI tools into their workflows. This ubiquity spans all levels of experience, dismantling the early assumption that AI would be primarily a crutch for juniors or a luxury for seniors. While "Early Career Devs" (1–5 years of experience) do show the highest daily usage rate at 55.5%, professional developers across the board are integrating these tools into their daily rituals.

The tooling market itself reflects this rapid consolidation and fierce competition. While GitHub Copilot retains a dominant market position (cited as the primary tool of choice by 42% of respondents in engineering management surveys), it is facing aggressive competition from next-generation "Agentic" IDEs. Tools like Cursor have surged in popularity, capturing nearly 40% of the AI-assisted pull request market by October 2025. That dramatic rise underscores developer demand for tools that do more than autocomplete: tools that understand context.

1.2 The Trust Crisis and the "Almost Right" Phenomenon

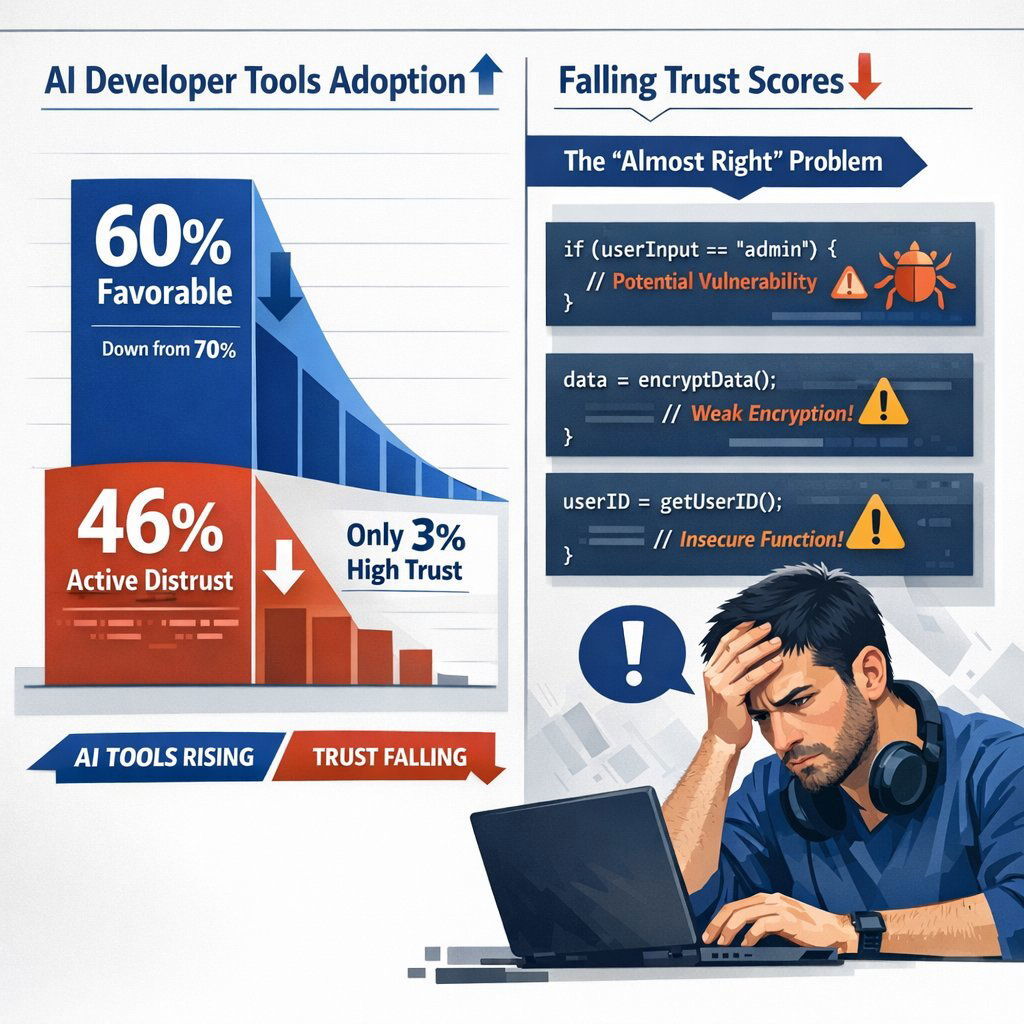

Despite these impressive adoption numbers, a deeper look at the data reveals a startling "Adoption-Trust Paradox." While usage is high, developer sentiment has notably soured. Favorable views toward AI tools have dropped from over 70% in the 2023–2024 period to just 60% in 2025. This decline in sentiment amidst rising adoption suggests that developers feel compelled to use these tools by market pressure or corporate mandate, even as they harbor significant reservations about their reliability.

The specific metrics regarding trust are alarming for any CTO concerned with code quality: 46% of developers actively distrust the accuracy of AI tools, and only 3% report "high trust" in the output. This lack of trust stems from the fundamental nature of Large Language Models: they are probabilistic engines, not logic engines, producing the statistically likely continuation rather than reasoning from semantic understanding. The result is the widespread phenomenon of "Almost Right" solutions: code that looks syntactically plausible and adheres to the project's style guide, but contains subtle logical flaws, security vulnerabilities, calls to deprecated APIs, or references to libraries that do not exist at all (hallucinations).

Sixty-six percent of developers cite dealing with these "almost right" solutions as their primary frustration. The cognitive load required to debug a subtle error in code one did not write is often higher than the effort required to write the code from scratch. This has led to a rejection of the "Vibe Coding" narrative, the popular media idea that one can simply "vibe" one's way to an application via natural language prompts without technical knowledge. Nearly 72% of professional developers explicitly state that "vibe coding" plays no part in their professional work. The industry is collectively realizing that as AI lowers the barrier to writing code, it sharply raises the burden of reading and debugging it.

1.3 The Emergence of the "Reluctant User" Persona

We are seeing the emergence of a new demographic: the "reluctant user." These are not Luddites; they are pragmatic professionals who use AI because the competitive velocity of the market demands it, yet they guard their high-accountability tasks jealously. They utilize AI as a powerful autocomplete for boilerplate and documentation, but they restrict its access to the critical path of architectural decision-making.

Data from the Stack Overflow survey highlights this defensive posture:

- 76% of developers refuse to use AI for deployment and monitoring tasks.

- 69% refuse to use it for project planning.

- 75% cite "lack of trust" as the primary reason they would still seek a human arbitrator in a future dominated by AI.

This segmentation of tasks suggests that while AI has conquered the keyboard, it has not yet conquered the whiteboard. Developers are happy to let AI handle the implementation details of a function, but they are unwilling to cede control over the why and the how of the broader system. For a firm like Baytech Consulting, which prides itself on "Tailored Tech Advantage," this distinction is vital. It reinforces the value of human expertise in architectural design and strategic oversight, even as the tactical execution becomes increasingly automated.

2. The Productivity Reality Check: Velocity vs. Value

The most contentious debate in the 2025 technology landscape revolves around the measurement of AI's impact. If every developer is typing faster and committing more code, why are features not necessarily shipping to customers at a proportionally faster rate? The answer lies in the critical distinction between individual task velocity and systemic value delivery.

2.1 The Surge in Individual Output

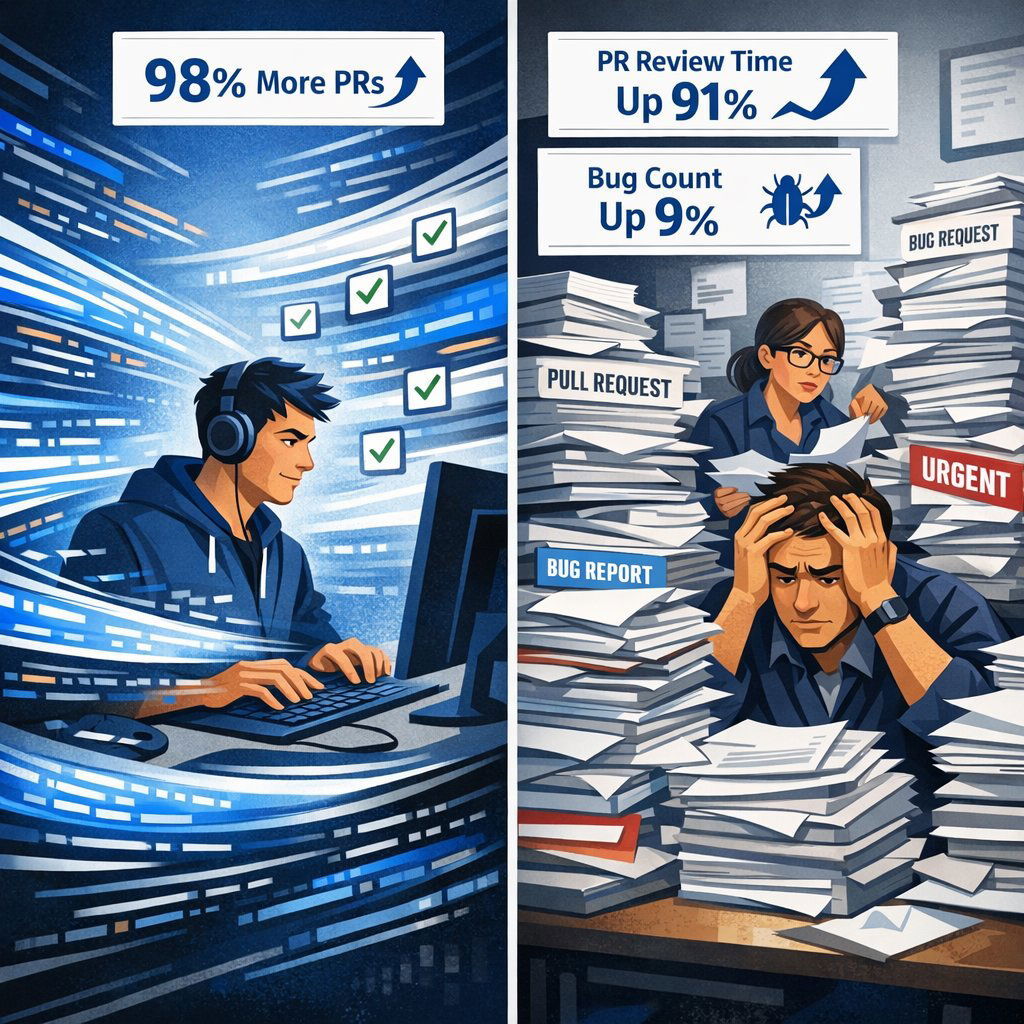

At the micro-level—the level of the individual contributor—the gains in raw output are undeniable and substantial. Data from Index.dev’s extensive study of over 10,000 developers reveals that AI-assisted engineers finished 21% more tasks and created 98% more pull requests (PRs) per person compared to their non-AI counterparts. This represents a near-doubling of the artifact generation rate.

Jellyfish data mirrors this finding, showing a 113% increase in merged PRs per engineer for teams moving from 0% to 100% adoption. Furthermore, the median cycle time—the time from the first commit to deployment—was reduced by 24%, dropping from 16.7 hours to 12.7 hours. On the surface, these metrics paint a picture of runaway success. However, this explosion in output has created a "Productivity Paradox." The volume of code is increasing, but the capacity of the system to absorb, verify, and maintain that code has remained largely constant.

2.2 The Bottleneck Migration: A Systemic Analysis

The AWS enterprise strategy team highlights a critical systems theory concept: software delivery is a value stream. When you optimize one part of a stream (coding) by 30–40% without adjusting the rest of the system, you inevitably shift the bottleneck downstream. The efficiency gains in the "Coding" phase have effectively flooded the downstream phases of "Review" and "Quality Assurance."
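To make the bottleneck argument concrete, here is a minimal Python sketch of a two-stage value stream in which coding feeds a review queue. The daily rates (10 PRs written and reviewed per day, with coding then sped up 35%) are hypothetical numbers chosen for illustration, not data from the AWS analysis, and the `ValueStream` class is an illustrative name only.

```python
from dataclasses import dataclass


@dataclass
class ValueStream:
    """Hypothetical two-stage pipeline: the coding stage feeds a review queue."""

    prs_written_per_day: float   # output of the coding stage
    prs_reviewed_per_day: float  # capacity of the review stage

    def simulate(self, days: int) -> tuple[float, float]:
        """Return (total PRs shipped, PRs still waiting for review) after `days` days."""
        backlog = 0.0
        shipped = 0.0
        for _ in range(days):
            backlog += self.prs_written_per_day                 # new PRs join the queue
            reviewed = min(backlog, self.prs_reviewed_per_day)  # review capacity caps throughput
            backlog -= reviewed
            shipped += reviewed
        return shipped, backlog


before = ValueStream(prs_written_per_day=10, prs_reviewed_per_day=10)
after = ValueStream(prs_written_per_day=13.5, prs_reviewed_per_day=10)  # coding 35% faster

print(before.simulate(20))  # (200.0, 0.0)
print(after.simulate(20))   # (200.0, 70.0)
```

Shipped output is identical in both runs. The faster coding stage only grows the review backlog by 3.5 PRs per day, which is the bottleneck migration in miniature: throughput is set by the slowest stage, not the fastest.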

The following table contrasts the metrics of a Traditional Workflow against the emerging AI-Augmented Workflow, illustrating where the pressure points have shifted:

| Metric | Traditional Workflow | AI-Augmented Workflow (2025) | % Change | Implication |
|---|---|---|---|---|
| PR Volume / Dev | Baseline (1.36 PRs) | 2.9 PRs | +113% | Massive increase in review inventory. |
| PR Review Time | Baseline | Significant increase | +91% | Reviewers are overwhelmed; the bottleneck shifts here. |
| Average PR Size | Baseline | Larger, more complex | +150% | AI encourages verbose code generation. |
| Bug Count | Baseline | Increased defect rate | +9% | "Almost Right" code introduces subtle bugs. |
| Debugging Time | Standard | Longer than writing | Not quantified (high) | Shift from "Creation" to "Fixing" mode. |

The Review Gridlock: Because developers are generating nearly double the number of PRs, the burden on code reviewers has effectively doubled. Index.dev reports that PR review times increased by 91% in 2025. Senior engineers, who typically shoulder the burden of code review, are finding themselves drowning in a sea of AI-generated code. This code requires intense scrutiny because of the "Almost Right" problem; a reviewer cannot trust the AI's logic implicitly and must verify every line, often without the context of having written it.

The Quality Tax: The volume of code is up, but so is the noise. Average PR sizes increased by 150%, accompanied by a 9% rise in bug counts. This suggests that while we are shipping code faster, we are also shipping defects faster. The "Change Failure Rate" (a key DORA metric) is the canary in the coal mine. Teams that prioritize velocity over rigor are seeing their stability metrics degrade, trading long-term reliability for short-term speed.

2.3 The Counter-Intuitive "Senior Developer Slowdown"

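Perhaps the most counterintuitive and significant finding of the 2025–2026 cycle is that AI can actually slow down expert developers. In a randomized controlled trial, METR found that experienced open-source developers working on real issues in large, mature repositories they already knew well took 19% longer to complete tasks when allowed to use AI tools, even though they believed afterward that AI had sped them up.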

This phenomenon can be explained by Cognitive Load Theory. Senior developers operate on deep, internalized mental models of the entire system architecture. When they write code manually, they are simultaneously verifying it against that mental model—the creation and verification processes are coupled. When they utilize AI, the creation is decoupled; the AI produces the code, and the expert must then switch context to "Reviewer Mode." They must reverse-engineer the AI's logic, check for subtle hallucinations, and then integrate it. For an expert, the "verification cost" of this process often exceeds the "creation cost" of simply writing the code themselves. This underscores that AI is not a uniform accelerator; its impact is highly dependent on the user's expertise and the task's complexity.

2.4 DORA Metrics in the AI Era

The industry-standard DORA (DevOps Research and Assessment) metrics are being stretched by these new realities.

- Deployment Frequency is generally up, driven by the ease of code generation.

- Lead Time for Changes is fluctuating—while the "coding" portion is faster, the "waiting for review" portion is expanding.

- Change Failure Rate is the metric to watch. With the reported rise in bugs, this metric acts as the primary counter-balance to velocity.

- Mean Time to Restore (MTTR) may increase if the bugs introduced by AI are subtle, logic-based errors that are difficult to trace, rather than simple syntax errors.
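For teams establishing a baseline, the four metrics above can be computed from ordinary deployment records. The sketch below assumes a hypothetical `Deployment` record with first-commit, deploy, and restore timestamps; what counts as a "failed" change (incident, rollback, hotfix) is a team-level definition, and restore time is approximated from deployment rather than from detection.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass
class Deployment:
    first_commit_at: datetime               # earliest commit included in the change
    deployed_at: datetime                   # when the change reached production
    failed: bool = False                    # caused an incident, rollback, or hotfix
    restored_at: Optional[datetime] = None  # when service was restored, if it failed


def hours(delta: timedelta) -> float:
    return delta / timedelta(hours=1)


def dora_metrics(deploys: list[Deployment], window_days: int) -> dict[str, float]:
    """Compute the four DORA metrics over a reporting window."""
    if not deploys:
        raise ValueError("need at least one deployment")
    failures = [d for d in deploys if d.failed]
    restore_times = [hours(d.restored_at - d.deployed_at)
                     for d in failures if d.restored_at is not None]
    return {
        "deployments_per_day": len(deploys) / window_days,
        "median_lead_time_h": median(hours(d.deployed_at - d.first_commit_at) for d in deploys),
        "change_failure_rate": len(failures) / len(deploys),
        # Approximation: restore time is measured from deployment, not from detection.
        "mean_time_to_restore_h": sum(restore_times) / len(restore_times) if restore_times else 0.0,
    }


t0 = datetime(2025, 6, 2, 9, 0)
deploys = [
    Deployment(t0, t0 + timedelta(hours=10)),
    Deployment(t0, t0 + timedelta(hours=12)),
    Deployment(t0, t0 + timedelta(hours=14), failed=True, restored_at=t0 + timedelta(hours=16)),
    Deployment(t0, t0 + timedelta(hours=20)),
]
print(dora_metrics(deploys, window_days=2))
# {'deployments_per_day': 2.0, 'median_lead_time_h': 13.0, 'change_failure_rate': 0.25, 'mean_time_to_restore_h': 2.0}
```

Tracking change failure rate next to deployment frequency in the same report keeps the velocity number from being read in isolation.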

Leading organizations are recognizing the insufficiency of these velocity-focused metrics and are moving toward more holistic economic measurements like "GDP-B" (GDP-Benefits) and "CTS-SW" (Cost to Serve Software) to capture the true picture of engineering health.

3. The Economic Impact: ROI and The J-Curve

As AI subscriptions become a significant line item in IT budgets—with tools like GitHub Copilot and Cursor charging premium rates—executives are increasingly asking a hard question: "We are paying for thousands of licenses. Where is the return?" The answer is not immediate; it follows a classic economic pattern known as the Productivity J-Curve.

3.1 The J-Curve Effect

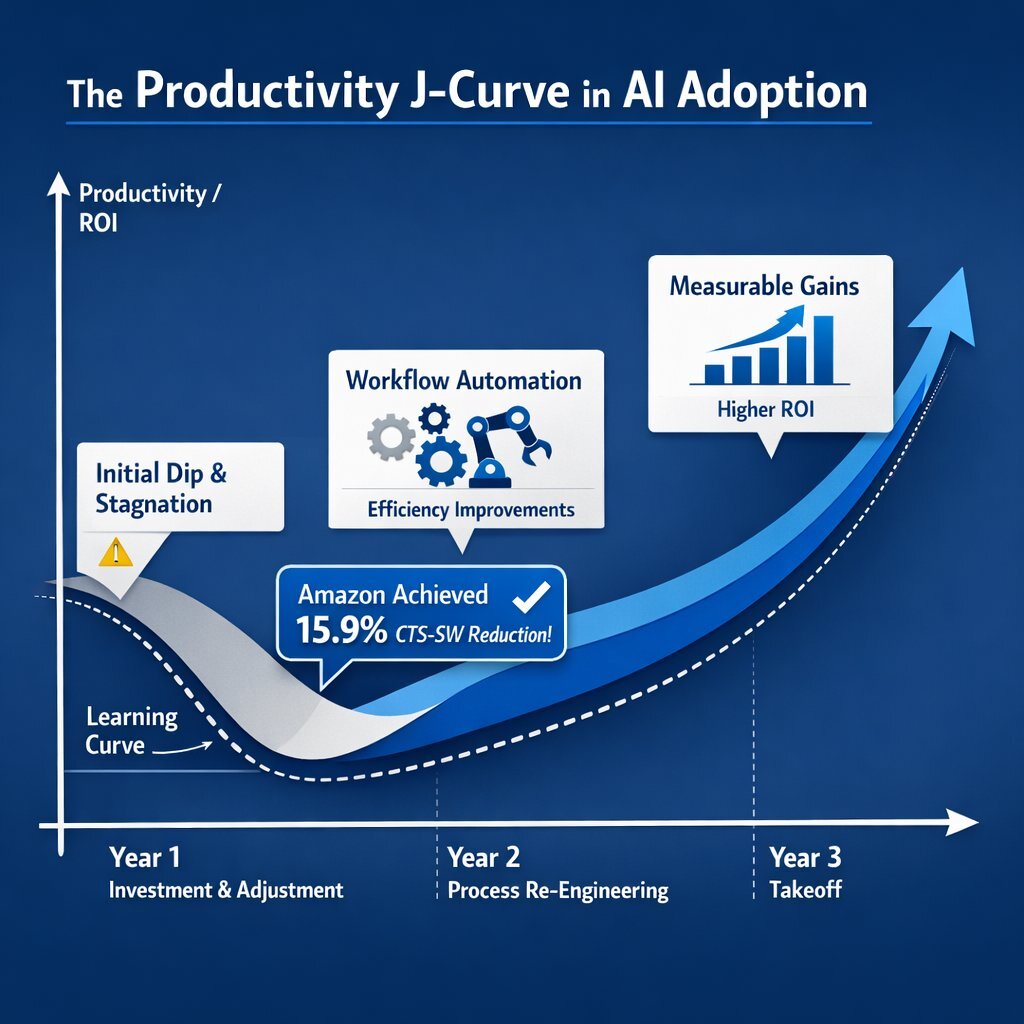

As described by economist Erik Brynjolfsson and highlighted in McKinsey’s analysis, the introduction of a general-purpose technology like AI typically causes an initial productivity dip or stagnation before the takeoff. This "J-Curve" phenomenon occurs because the technology itself is not enough; organizations must invent new processes to harness it.

- Phase 1 (Investment & Adjustment): This is where many organizations sit in 2025. Teams adopt tools, fight with "almost right" code, and struggle with new review bottlenecks. Productivity feels flat or chaotic. The "Cost to Serve" might actually increase due to license fees and cloud costs from inefficient code.

- Phase 2 (Process Re-engineering): Companies realize they cannot just add AI to old workflows. They begin to automate testing, implement AI-based reviewers to filter low-quality PRs, and restructure teams to optimize for review capacity rather than coding capacity.

- Phase 3 (Takeoff): The system realigns. This is where the measurable gains appear. Amazon, for example, saw a 15.9% reduction in Cost to Serve Software (CTS-SW) year-over-year in 2024 after systematically optimizing their developer experience.5

3.2 Measuring What Matters: CTS-SW

To escape the trap of vanity metrics like "Lines of Code" or "PRs Merged," AWS has pioneered the Cost to Serve Software (CTS-SW) framework as a superior alternative for measuring developer productivity.

Definition: CTS-SW is calculated as the total cost of delivering a unit of software (including developer salaries, infrastructure costs, and tooling fees) divided by the number of delivery units (e.g., successful deployments or completed features).

Why it works: This metric forces the organization to look at the entire delivery pipeline.

- If AI helps developers write code 50% faster, but that code is inefficient and doubles cloud infrastructure costs, CTS-SW will rise, correctly signaling a negative ROI.

- If AI increases feature output but leads to a spike in customer support tickets due to bugs, the "operational costs" included in CTS-SW will capture that negative impact.
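A minimal sketch of the CTS-SW calculation under the definition above, with operational costs included as described in the second scenario. The cost categories follow the text; every dollar figure and unit count is hypothetical, chosen only to reproduce the two scenarios.

```python
from dataclasses import dataclass


@dataclass
class PeriodCosts:
    """Costs for one reporting period (all figures hypothetical, in dollars)."""
    salaries: float
    infrastructure: float
    tooling: float
    operations: float    # e.g., support and incident handling
    delivery_units: int  # e.g., successful deployments or completed features

    def cts_sw(self) -> float:
        total = self.salaries + self.infrastructure + self.tooling + self.operations
        return total / self.delivery_units


baseline = PeriodCosts(1_000_000, 200_000, 0, 100_000, delivery_units=100)
# Scenario 1: coding is faster, but deliveries stay flat (review bottleneck)
# while inefficient code doubles cloud spend and AI licenses add tooling cost.
bloated = PeriodCosts(1_000_000, 400_000, 50_000, 100_000, delivery_units=100)
# Scenario 2: 20% more features ship, but a bug spike drives up support costs.
buggy = PeriodCosts(1_000_000, 200_000, 50_000, 700_000, delivery_units=120)

for name, period in [("baseline", baseline), ("bloated", bloated), ("buggy", buggy)]:
    print(name, period.cts_sw())
# baseline 13000.0
# bloated 15500.0
# buggy 16250.0
```

In both scenarios the headline output either held steady or rose, yet the cost per delivered unit went up, which is exactly the signal CTS-SW is meant to surface.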

The ROI Reality: Amazon used this framework to illustrate, in a hypothetical banking scenario, how upgrading developer experience and tooling (including AI) could generate a 10x ROI by avoiding $20 million in costs.5 This shifts the conversation from "How fast are we typing?" to "How efficiently are we delivering value?"

4. Emerging Architectures: The Shift to Agentic Workflows

As we look toward 2026, the "Copilot" era—characterized by a human typing and an AI suggesting completions—is rapidly giving way to the "Agentic" era. In this new paradigm, the human sets the goal, and the AI executes a multi-step plan to achieve it.

4.1 From Chatbots to Agents: The Levels of Autonomy

Current usage is dominated by Level 1 and Level 2 workflows, but Level 3 is the frontier for 2026.

- Level 1 (AI Workflows): The developer prompts a chat window. The AI outputs text. The developer copies and pastes. This is the "Chatbot" model.

- Level 2 (Router Workflows - The 2025 Standard): Tools like Cursor and Windsurf act as "Routers." They have read/write access to the file system and can make limited decisions about which files to edit. They are "Context Aware" and can see the whole repository, making them significantly more effective than isolated chat windows.

- Level 3 (Autonomous Agents - The 2026 Frontier): These systems utilize advanced reasoning patterns to plan and execute tasks. They can independently plan a multi-step task, execute it, encounter an error, debug the error, and retry—all without human intervention.
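The Level 3 loop of execute, encounter an error, debug, and retry can be sketched in a few lines. This is a hedged illustration, not any vendor's agent: Python's built-in `compile()` stands in for the compiler or test runner the agent calls as a tool, and `fake_model` is a hypothetical stand-in for the LLM call that receives the previous error as feedback.

```python
from typing import Callable, Optional


def check_syntax(source: str) -> Optional[str]:
    """Tool: compile the draft and return an error message, or None if it compiles."""
    try:
        compile(source, "<agent-draft>", "exec")
        return None
    except SyntaxError as exc:
        return f"SyntaxError on line {exc.lineno}: {exc.msg}"


DraftFn = Callable[[str, Optional[str]], str]  # (task, last error) -> new draft


def run_agent(task: str, generate: DraftFn, max_attempts: int = 3) -> tuple[str, int]:
    """Execute, observe the error, and retry without a human in the loop."""
    feedback: Optional[str] = None
    for attempt in range(1, max_attempts + 1):
        draft = generate(task, feedback)  # execution step (the model call)
        feedback = check_syntax(draft)    # grounding step (the tool call)
        if feedback is None:
            return draft, attempt
    raise RuntimeError(f"gave up after {max_attempts} attempts; last error: {feedback}")


def fake_model(task: str, feedback: Optional[str]) -> str:
    """Hypothetical stand-in for an LLM: the first draft is broken, the retry is fixed."""
    if feedback is None:
        return "def add(a, b)\n    return a + b\n"  # missing colon
    return "def add(a, b):\n    return a + b\n"


code, attempts = run_agent("write add(a, b)", fake_model)
print(attempts)  # 2
```

The `max_attempts` cap matters in practice: without it, an agent that cannot fix its own error loops indefinitely, so the failure path hands control back to a human.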

4.2 Key Workflow Patterns for 2026

Enterprises are standardizing on specific agentic patterns to ensure reliability and mitigate the "Almost Right" problem.

- Reflection Pattern: This pattern forces the AI to critically evaluate its own output. The AI generates a draft, then a secondary "Critic" agent reviews it for errors, style violations, or logical gaps before showing it to the human. This mimics the human writing process (drafting vs. editing) and can catch hallucinations and other errors before they reach a human reviewer.

- Tool Use Pattern: Agents are given access to "tools" like compilers, linters, and database connections. Instead of just guessing code, the agent can try to run the code. If the compiler throws an error, the agent reads the error, fixes the code, and retries. This "Grounding" in reality is a massive leap forward in reliability.

- Planning Pattern: For complex features, an "Architect Agent" breaks the high-level goal into sub-tasks (e.g., "Update DB schema," "Create API endpoint," "Update Frontend") and delegates them to specialized "Worker Agents."
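The Planning and Reflection patterns compose naturally: an architect decomposes the goal, workers draft each sub-task, and a critic filters drafts before a human sees them. In this minimal sketch the three sub-task names come from the text above; `architect`, `worker`, and `critic` are hypothetical deterministic stubs standing in for model calls, and the critic's rules are illustrative only.

```python
from dataclasses import dataclass, field


@dataclass
class Draft:
    subtask: str
    code: str
    issues: list[str] = field(default_factory=list)


def architect(goal: str) -> list[str]:
    """Planning step. Hypothetical fixed decomposition; a real planner would call a model."""
    return ["Update DB schema", "Create API endpoint", "Update Frontend"]


def worker(subtask: str) -> Draft:
    """Hypothetical worker agent; here it leaves the frontend task unfinished."""
    code = "# TODO" if subtask == "Update Frontend" else f"# implementation for: {subtask}"
    return Draft(subtask, code)


def critic(draft: Draft) -> Draft:
    """Reflection step: rule-based checks stand in for a second 'critic' model."""
    if "TODO" in draft.code:
        draft.issues.append("unfinished placeholder")
    if not draft.code.strip():
        draft.issues.append("empty draft")
    return draft


def orchestrate(goal: str) -> tuple[list[Draft], list[Draft]]:
    """Plan, delegate, critique; only clean drafts are surfaced to the human."""
    reviewed = [critic(worker(task)) for task in architect(goal)]
    ready = [d for d in reviewed if not d.issues]
    rework = [d for d in reviewed if d.issues]
    return ready, rework


ready, rework = orchestrate("Add order history page")
print([d.subtask for d in ready])   # ['Update DB schema', 'Create API endpoint']
print([d.subtask for d in rework])  # ['Update Frontend']
```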

4.3 The Rise of Specification-Driven Development

A significant trend accompanying the rise of agents is the return to Specification-Driven Development. Tools like Kiro are pioneering this approach. Instead of vague natural language prompts ("Make the button look nice"), developers write structured specifications (requirements, acceptance criteria, schema definitions). The AI then generates the implementation plan and the code to match the spec.

This signifies a return to engineering discipline: the AI handles the implementation, but the human must handle the precise specification. For B2B firms, this is a crucial insight. The role of the developer is not disappearing; it is shifting from "Code Writer" to "Technical Product Owner." The ability to write clear, unambiguous specifications becomes the primary skill set.
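One way to picture a structured specification is as data plus executable acceptance criteria that any generated implementation must pass. The sketch below is an assumption for illustration and does not reflect Kiro's actual spec format; `EMAIL_SPEC` and `generated_is_valid_email` are hypothetical, the latter deliberately "almost right".

```python
from dataclasses import dataclass
from typing import Callable

Impl = Callable[[str], bool]  # the function the AI is asked to implement


@dataclass(frozen=True)
class Criterion:
    description: str
    check: Callable[[Impl], bool]


@dataclass(frozen=True)
class Spec:
    requirement: str
    acceptance: tuple[Criterion, ...]

    def verify(self, impl: Impl) -> list[str]:
        """Return the acceptance criteria the implementation fails (empty list = passes)."""
        return [c.description for c in self.acceptance if not c.check(impl)]


# Hypothetical spec written by the developer before any code is generated.
EMAIL_SPEC = Spec(
    requirement="is_valid_email(s) accepts simple addresses and rejects malformed ones",
    acceptance=(
        Criterion("accepts user@example.com", lambda f: f("user@example.com")),
        Criterion("rejects a missing @", lambda f: not f("user.example.com")),
        Criterion("rejects an empty local part", lambda f: not f("@example.com")),
    ),
)


def generated_is_valid_email(s: str) -> bool:
    """Stand-in for AI output that is 'almost right': it misses the empty local part."""
    return "@" in s and "." in s.split("@")[-1]


print(EMAIL_SPEC.verify(generated_is_valid_email))  # ['rejects an empty local part']
```

The human effort goes into the acceptance criteria; the spec then catches the subtle miss mechanically instead of relying on a reviewer to spot it.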

5. The Human Element: Engineering Management in the AI Era

The introduction of AI is not just a technological shift; it is a cultural and managerial upheaval. For Baytech Consulting and similar firms, the key differentiator in the market will not be the tools themselves—which are available to everyone—but how they manage the human side of this transition.

5.1 The "Turing Trap" and Augmentation

Economist Erik Brynjolfsson warns of the "Turing Trap": the mistake of trying to use AI to replicate human-level performance exactly (Automation), rather than using it to extend human capabilities (Augmentation).

- Automation Strategy: Replacing a junior developer with an AI agent. This offers immediate cost savings but risks a long-term "hollowing out" of the talent pipeline and potential quality degradation.

- Augmentation Strategy: Giving a junior developer an AI "Coach" that explains the codebase, suggests best practices, and automates toil. This focuses on capacity building and upskilling.

The data supports augmentation. Studies in comparable knowledge-work settings (such as customer-support call centers) showed that while AI helped everyone, it helped lower-skilled workers the most, narrowing the performance gap between novices and experts and significantly lowering turnover. In software, this suggests AI can be a powerful onboarding tool, potentially allowing new hires to become productive contributors in weeks rather than months.

5.2 Burnout and The "Always On" Developer

While AI removes the toil of boilerplate code, it increases the "cognitive density" of the work. Developers are spending less time on "easy" tasks (which provide a mental break) and more time on hard debugging, architectural review, and complex problem-solving.

- Context Switching: AWS notes that teams switching between too many contexts (now exacerbated by the rapid generation of AI tasks) deliver 40% less work and double their defect rate.5

- Satisfaction as a Metric: Team health metrics are now a critical leading indicator of productivity. If developers feel they are becoming "janitors" for AI-generated code—cleaning up messes rather than building features—burnout will spike, and turnover will follow.

6. Strategic Roadmap for B2B Leaders

Based on the 2025–2026 data, the following strategic roadmap offers a path for B2B executives to maximize AI impact while mitigating the associated risks.

Phase 1: Audit & Baselines (Months 1–3)

- Metric Shift: Stop counting lines of code or commit volume. Establish a baseline for CTS-SW and Cycle Time. Understand your true cost of delivery.

- Trust Survey: Deploy an internal survey modeled on Stack Overflow’s trust metrics. Do your developers trust their tools? If not, why? Address the "Almost Right" frustration early.

- Bottleneck Identification: Analyze your PR queues. If PR review time is greater than 24 hours or is trending upward, you have an "AI flooding" problem that needs immediate process intervention.
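The bottleneck check can be automated from PR timestamps exported from your Git host. In this sketch the 24-hour threshold comes from the text; treating "trending upward" as three consecutive weekly increases is an assumption, as are the function names and the sample data.

```python
from datetime import datetime, timedelta
from statistics import median


def weekly_median_wait_hours(prs: list[tuple[datetime, datetime]]) -> list[float]:
    """prs holds (opened_at, first_review_at) pairs; returns one median per ISO week, oldest first."""
    by_week: dict[tuple[int, int], list[float]] = {}
    for opened, first_review in prs:
        year, week, _ = opened.isocalendar()
        by_week.setdefault((year, week), []).append((first_review - opened) / timedelta(hours=1))
    return [median(waits) for _, waits in sorted(by_week.items())]


def review_flood_alert(weekly: list[float], threshold_h: float = 24.0, trend_weeks: int = 3) -> bool:
    """Flag if the latest weekly median exceeds the threshold, or if it rose every week recently."""
    recent = weekly[-trend_weeks:]
    rising = len(recent) == trend_weeks and all(a < b for a, b in zip(recent, recent[1:]))
    return weekly[-1] > threshold_h or rising


def pr(day: int, wait_h: float) -> tuple[datetime, datetime]:
    opened = datetime(2025, 3, day, 9, 0)
    return opened, opened + timedelta(hours=wait_h)


weekly = weekly_median_wait_hours([pr(3, 4), pr(4, 8), pr(10, 10), pr(17, 12), pr(18, 18)])
print(weekly)                      # [6.0, 10.0, 15.0]
print(review_flood_alert(weekly))  # True: under 24h, but rising three weeks in a row
```

Medians are used rather than means so that a single forgotten PR does not trigger the alert on its own.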

Phase 2: Workflow Re-engineering (Months 4–6)

- Automated Review Gates: Implement AI-based PR reviewers (using tools like Codium or specialized GitHub Actions) to act as a first line of defense. These tools should catch syntax errors, style violations, and basic bugs before a human reviewer sees the code.

- Specification Enforcement: Move toward Specification-Driven Development. Require structured prompts or written specs for any AI-generated feature. This forces developers to think through the "What" before asking the AI for the "How."

- Tooling Evaluation: Look beyond standard copilots. Evaluate Agentic IDEs (like Cursor or Windsurf) that support context-aware file editing.
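The automated review gate and spec enforcement can share one pre-review check that runs in CI before a human reviewer is assigned. This is a sketch under stated assumptions: the 400-line limit, the `Spec:` line convention in the PR description, and the `PullRequest` fields are all hypothetical, and the code does not use any particular review tool's API.

```python
import re
from dataclasses import dataclass

MAX_CHANGED_LINES = 400  # hypothetical team limit; tune to your own review capacity
SPEC_REF = re.compile(r"^Spec:\s*\S+", re.MULTILINE)  # hypothetical PR-description convention


@dataclass
class PullRequest:
    description: str
    lines_added: int
    lines_deleted: int
    lint_errors: int  # e.g., the count reported by your linter in CI


def pre_review_gate(pr: PullRequest) -> list[str]:
    """Return reasons to send the PR back before a human reviewer is assigned."""
    reasons = []
    if pr.lint_errors:
        reasons.append(f"{pr.lint_errors} lint error(s)")
    if pr.lines_added + pr.lines_deleted > MAX_CHANGED_LINES:
        reasons.append(f"diff exceeds {MAX_CHANGED_LINES} changed lines; split it up")
    if not SPEC_REF.search(pr.description):
        reasons.append("no 'Spec:' reference in the description")
    return reasons


ok = PullRequest("Add retry to payment client\nSpec: docs/specs/retry.md", 120, 30, 0)
too_big = PullRequest("quick fix", 600, 50, 2)
print(pre_review_gate(ok))            # []
print(len(pre_review_gate(too_big)))  # 3
```

A size cap addresses the PR-size inflation directly: smaller diffs are cheaper to verify, which is where the scarce reviewer time goes.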

Phase 3: The Agentic Leap (Months 7+)

- Internal RAG Agents: Build "Retrieval-Augmented Generation" (RAG) agents that retrieve from your specific internal documentation, legacy code, and architectural decision records at query time, rather than being trained on them. This creates an institutional memory that AI can access.

- Talent Reallocation: Explicitly reallocate the time saved from coding. If coding takes 30% less time, invest that time in System Architecture, User Research, and Security Audits. Do not simply expect 30% more features; expect better, more secure features.
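The core of a RAG agent is retrieval followed by prompt augmentation; the model itself is not retrained. This minimal sketch uses bag-of-words cosine similarity as a stand-in for the embedding model and vector store a production system would use, and the documents in `DOCS` are hypothetical.

```python
import math
import re
from collections import Counter


def vectorize(text: str) -> Counter:
    """Bag-of-words term counts; a stand-in for an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: dict[str, str], k: int = 2) -> list[str]:
    """Return the names of the k documents most similar to the query."""
    q = vectorize(query)
    ranked = sorted(docs, key=lambda name: cosine(q, vectorize(docs[name])), reverse=True)
    return ranked[:k]


def build_prompt(query: str, docs: dict[str, str], k: int = 2) -> str:
    """Augment the question with retrieved context; the model itself is never retrained."""
    context = "\n\n".join(f"[{name}]\n{docs[name]}" for name in retrieve(query, docs, k))
    return f"Answer using only the context below.\n\n{context}\n\nQuestion: {query}"


# Hypothetical internal knowledge base (ADR = architectural decision record).
DOCS = {
    "ADR-007": "We use PostgreSQL for all transactional data. MongoDB is not approved.",
    "ADR-012": "All public APIs are versioned under /v1 and /v2 URL prefixes.",
    "ONBOARDING": "New hires get laptop access on day one.",
}
print(retrieve("Which database should the new service use for transactional data?", DOCS, k=1))
# ['ADR-007']
```

Because knowledge lives in the document store, updating an ADR updates the agent's answers immediately, with no retraining step.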

Conclusion: The New Engineering Discipline

By 2026, the question "Does AI increase productivity?" will likely be viewed as too simplistic to be useful. The data confirms that AI increases output volume and individual speed. However, without a corresponding evolution in workflows, testing protocols, and management strategies, this speed creates friction, resulting in a system that spins faster but travels no further.

The winners of this era will not be the companies that generate the most code. They will be the companies that build the best filtering and verification systems—organizations that can verify, integrate, and deploy AI-generated solutions with high confidence. The role of the software developer is transitioning from "writer of syntax" to "architect of intent" and "verifier of logic".

For firms like Baytech Consulting, this shift serves as the ultimate validation of the "Tailored Tech Advantage." In a world where code generation is a commodity, the value lies in the custom crafting of the system that directs that code toward a specific business outcome. The human element—the ability to understand the business problem, design the solution, and verify the AI's execution—remains the irreplaceable core of software engineering.

Frequently Asked Questions

Q: Will AI replace junior developers in 2025–2026?

A: No, but it will fundamentally change their job description. The data shows that "Early Career Devs" are actually the heaviest users of AI tools (55.5% daily usage).1 They are not being replaced; they are being "supercharged." However, the traditional entry-level tasks (writing simple functions, boilerplate, or basic unit tests) are increasingly handled by AI. Junior developers must now learn to be "editors" and "reviewers" much earlier in their careers. Mentorship programs must adapt to focus on code reading and architectural thinking from day one.

Q: Why can AI make senior developers slower?

A: Senior developers operate on deep, internalized mental models of the system. AI breaks their "flow" by forcing them to verify code that looks right but might be subtly wrong. A controlled study showed experts were 19% slower on complex tasks because the "verification cost" of checking AI code was higher than the cost of writing it themselves.4 The cognitive load of reverse-engineering an AI's logic is significant.

Q: What is the most important metric to track for AI success?

A: Cost to Serve Software (CTS-SW). This metric captures the holistic picture of the value stream. If AI makes coding cheaper but testing and cloud hosting more expensive (due to code bloat or inefficiencies), CTS-SW will reveal the negative ROI. Do not rely on "Lines of Code" or "PRs merged" as primary success metrics, as these are easily inflated by AI without adding business value.5

Recommended Reading

- https://newsletter.pragmaticengineer.com/p/how-tech-companies-measure-the-impact-of-ai

- https://aws.amazon.com/blogs/enterprise-strategy/measuring-the-impact-of-ai-assistants-on-software-development/

- https://jellyfish.co/blog/2025-ai-metrics-in-review/

About Baytech

At Baytech Consulting, we specialize in guiding businesses through this process, helping you build scalable, efficient, and high-performing software that evolves with your needs. Our MVP-first approach helps our clients minimize upfront costs and maximize ROI. Ready to take the next step in your software development journey? Contact us today to learn how we can help you achieve your goals with a phased development approach.

About the Author

Bryan Reynolds is an accomplished technology executive with more than 25 years of experience leading innovation in the software industry. As the CEO and founder of Baytech Consulting, he has built a reputation for delivering custom software solutions that help businesses streamline operations, enhance customer experiences, and drive growth.

Bryan’s expertise spans custom software development, cloud infrastructure, artificial intelligence, and strategic business consulting, making him a trusted advisor and thought leader across a wide range of industries.