Build Your Corporate AI Fortress: The 2026 Guide to Walled Gardens

January 21, 2026 / Bryan Reynolds

Data Sovereignty in 2026: Building "Walled Gardens" for Corporate AI

1. The 2026 Reckoning: From Hype to "Hard Hat" Reality

The year is 2026, and the artificial intelligence landscape has undergone a profound metamorphosis. If 2023 was the year of unbridled euphoria and 2024 the year of chaotic experimentation, 2026 has firmly established itself as the year of the "Reckoning".

The initial rush to adopt generative AI—driven by a Fear Of Missing Out (FOMO) that gripped boardrooms from Silicon Valley to Singapore—has subsided. In its place, a more pragmatic, disciplined, and rigorous era has emerged, one that industry analysts at Forrester have aptly termed the "Hard Hat" era of AI work.

For the modern B2B executive, this shift is not merely atmospheric; it is operational. The conversation has moved from "How fast can we integrate this?" to "How do we control this?" The "do-it-now" adoption mentality that characterized the early generative AI boom has left a legacy of technical debt and governance gaps that organizations are now scrambling to address.

We are witnessing a fundamental transition where the "art of the possible" is succumbing to the "science of the practical," necessitating a heavy prioritization of governance, security, and verifiable return on investment (ROI). For many leadership teams, this also means rethinking how modern CTOs drive revenue and value from AI rather than treating it as an experimental side project.

The End of the "Wild West" of Shadow AI

The period between 2023 and 2025 was defined by the proliferation of "Shadow AI"—the unsanctioned use of public AI tools by employees desperate to increase productivity. Marketing teams quietly used public chatbots to draft campaign copy; developers pasted proprietary code snippets into web interfaces to debug errors; and HR professionals summarized sensitive employee feedback using cloud-based agents. While this boosted individual productivity, it created a massive, invisible risk surface for the enterprise.

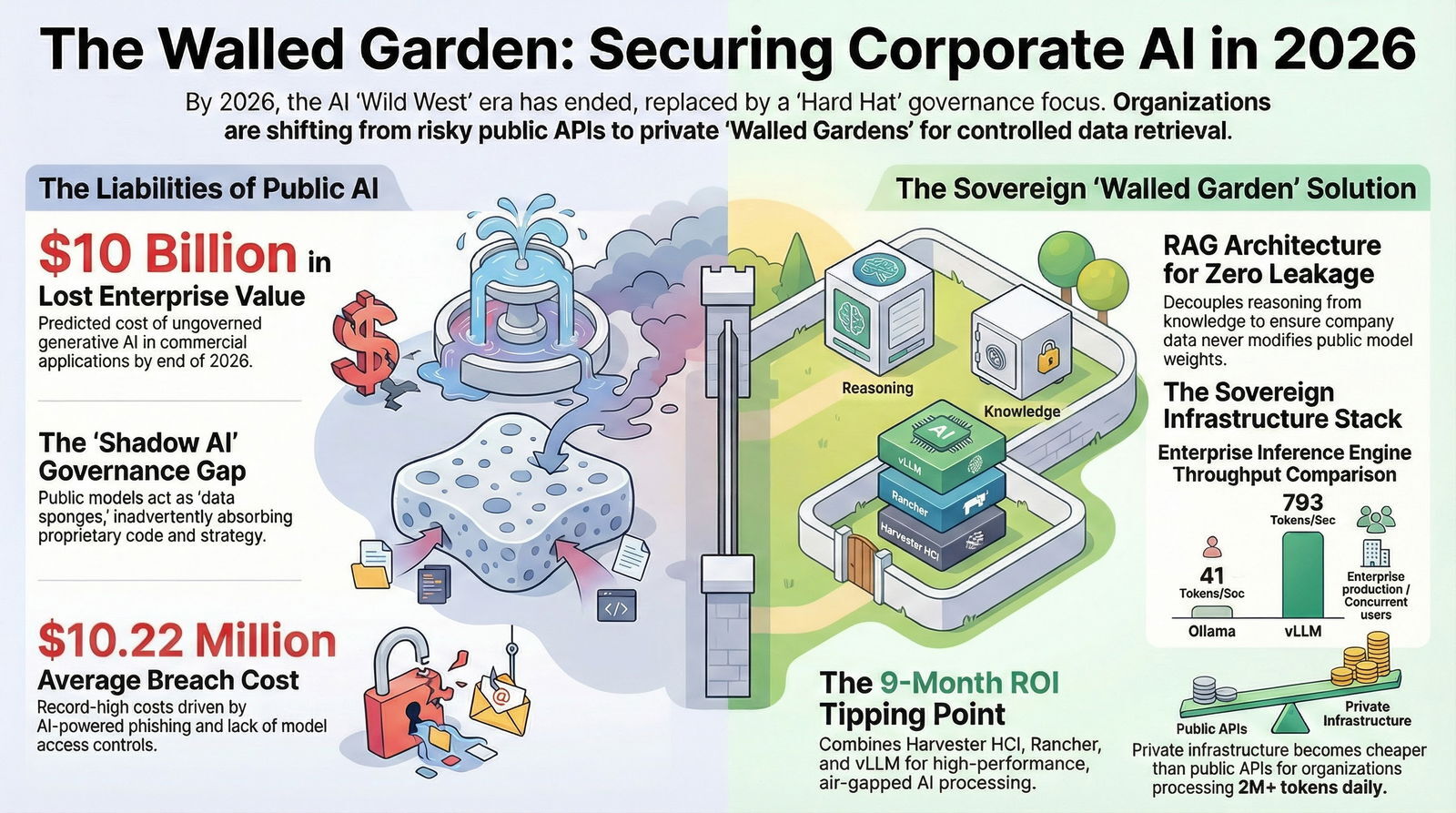

By 2026, the consequences of this ungoverned adoption have become quantifiable. The "Shadow AI" phenomenon has forced organizations to confront a stark reality: public AI models, by their very nature, are data sponges. They are designed to absorb context to generate relevance. When that context is your intellectual property, the model becomes a vector for leakage. The governance gap is no longer theoretical; it is a board-level crisis. Forrester predicts that ungoverned generative AI in commercial applications will cost B2B companies over $10 billion in lost enterprise value by the end of 2026.

This loss is not just in regulatory fines but in the erosion of competitive advantage, brand dilution, and the degradation of customer trust. It is also tightly connected to the broader AI trust paradox in software development, where companies depend on tools they do not fully trust.

The Rise of Agentic AI and the Need for Determinism

A critical driver of this new era is the technological evolution from "Generative" to "Agentic" AI. In 2024, we marveled at AI that could write a poem. In 2026, we depend on AI that can execute a trade, book a flight, or patch a server. Gartner predicts that by 2028, 33% of enterprise software will include agentic AI—digital workers that handle complex tasks and make decisions—up from less than 1% in 2024.

This shift to agency raises the stakes exponentially. If a passive chatbot hallucinates, it produces bad text that a human can edit. If an autonomous agent hallucinates, it might delete a production database, transfer funds to the wrong account, or email sensitive schematics to a competitor. The tolerance for error in Agentic AI is near zero. Consequently, the "Black Box" nature of public models—where the provider might update the model weights overnight, subtly altering its behavior—is unacceptable for mission-critical workflows. Enterprises require deterministic performance; they need to know that the AI agent running the payroll process today will behave exactly the same way tomorrow. This need for stability is a primary catalyst for the move toward private, controlled infrastructure.

As organizations make this leap, they are also rethinking how they build and ship software itself, leaning into AI-driven software development strategies for 2026 that treat reliability and governance as first-class requirements.

The Geopolitical Fracture: Sovereignty is No Longer Optional

Simultaneously, the dream of a borderless digital world has fractured. We are witnessing the end of "borderless AI" and the rise of "Sovereign AI." Gartner predicts that by 2027, 35% of countries will be locked into region-specific AI platforms.

This fragmentation is driven by a complex web of regulatory frameworks, from the mature GDPR in Europe to the emerging AI laws in APAC and the Middle East, all designed to keep data within national borders.

For a multinational corporation, this creates a logistical nightmare if relying on a centralized public model. A single API endpoint hosted in a US data center can rarely process the data of a German citizen, a Saudi oil firm, and a Chinese manufacturer at the same time without running afoul of at least one sovereignty mandate. The solution is the deployment of "Regional AI Stacks": localized, sovereign instances of AI that respect the data residency laws of the jurisdiction they serve. This shift mirrors the broader move toward treating AI as a strategic, no-longer-optional capability rather than a generic cloud feature.

At Baytech Consulting, we have observed this shift firsthand. Our clients, ranging from fast-growing startups to established enterprises in finance and healthcare, are no longer asking for "access to ChatGPT." They are asking for "a ChatGPT that lives on our servers, follows our rules, and never talks to strangers." They are asking for a Walled Garden.

This report serves as a comprehensive guide to building that fortress. We will explore the risk landscape that necessitates this shift, the architectural blueprint for a sovereign AI stack using open-source technologies like Harvester and Rancher, and the economic reality that—contrary to popular belief—often makes private AI cheaper than public APIs for the enterprise.

2. The Risk Landscape: Why Public AI is a Liability in 2026

To understand the necessity of the "Walled Garden," one must first confront the quantifiable liabilities of the status quo. The model of "Public AI Consumption"—where an enterprise acts as a tenant on a mega-vendor's infrastructure, sending data out via API and receiving answers back—has revealed itself to be fraught with peril. By 2026, the risks associated with this model have transitioned from theoretical security warnings to hard financial losses.

2.1 The High Cost of Intellectual Property Leakage

The most immediate and damaging risk is the leakage of Intellectual Property (IP). When an organization utilizes a public model, it is effectively outsourcing its cognition to a third party. Despite "enterprise" assurances, the technical reality of data transmission and processing creates vectors for exposure.

The "Training Data" Trap

The primary mechanism of leakage is inadvertent training. While major providers offer non-training agreements for enterprise tiers, the complexity of these agreements and the "Shadow AI" factor mean data often slips through. If a model ingests proprietary code or strategy documents for fine-tuning or reinforcement learning, that IP effectively becomes part of the model's latent knowledge. In 2026, "Model Inversion" attacks—where hackers query a model to extract the training data—have become sophisticated enough to retrieve sensitive information that was thought to be diluted in the massive training corpus.

The Financial Toll of Breaches

The statistics for 2025 paint a grim picture of the cost of inaction. According to the IBM Cost of a Data Breach Report, the average cost of a data breach in the United States surged to an all-time high of $10.22 million.

This figure represents a dramatic increase from previous years, driven largely by regulatory fines and the increasing complexity of detection and escalation.

Crucially, the nature of these breaches is evolving. In 2025, 16% of all data breaches involved attackers using AI, utilizing tools like generative phishing and deepfakes to bypass traditional security perimeters.

Even more concerning is the vulnerability of the AI systems themselves. IBM found that 13% of organizations reported breaches specifically of their AI models or applications. Of those breached, a staggering 97% reported not having proper AI access controls in place. This data confirms that the rush to adopt AI outpaced the implementation of security frameworks, leaving a "governance gap" that attackers are now exploiting with ruthlessness.

The healthcare sector remains the most vulnerable: at $7.42 million, it has the highest average breach cost of any industry, marking it as the costliest industry for 12 consecutive years.

For B2B healthcare firms handling patient data (PHI), the implications of an AI-driven leak are existential. The intersection of high-value data and strict regulation makes the "Walled Garden" not just a security preference but a survival necessity.

2.2 The Regulatory Stranglehold: Sovereignty as Law

Beyond the direct financial cost of breaches lies the complex web of global regulation. Data Sovereignty laws have evolved from broad principles into specific, enforceable mandates that dictate the physical location of data storage and processing.

The European Sovereignty Push

In Europe, the push for "simplification and sovereignty" is redefining the tech landscape. Forrester reports that European firms are intensifying their efforts to reduce dependency on non-European firms, including US-based hyperscalers and LLM providers.

The EU AI Act specifically targets high-risk AI use cases, imposing transparency and governance requirements that are difficult to satisfy when using a "black box" API hosted abroad. While a complete break from US tech dominance is impractical in the short term, the regulatory pressure is forcing a hybrid approach where sensitive data must remain on sovereign infrastructure.

APAC and the "Pragmatic" Approach

In the Asia-Pacific region, a different but equally stringent dynamic is at play. APAC leaders are choosing "pragmatism over hype," with half of the firms in the region planning to invest heavily in digital sovereignty to maintain national control over foundational infrastructure.

Countries like Saudi Arabia and members of the ASEAN bloc are enforcing "diverse cloud" strategies, mandating local data residency.

This means that an American software company wishing to sell AI-powered analytics to a Saudi bank must be able to deploy its AI stack inside the Kingdom. A cloud-based SaaS model relying on a single inference cluster in Virginia is effectively locked out of these markets. Executives are increasingly evaluating these issues as part of broader software investment risk strategies for 2026, not just as technical edge cases.

2.3 The "Death by AI" Litigation Wave

Gartner's prediction of "Death by AI" legal claims has come to fruition. By 2026, the industry is seeing a surge in litigation where the primary cause of damage is AI malfunction or misuse.

These are not just copyright lawsuits; they are liability claims for AI agents that made incorrect financial decisions, misdiagnosed medical conditions, or exposed private data.

In this litigious environment, explainability and auditability are the primary defenses. When a public model makes a mistake, the enterprise has limited visibility into why. Was it the prompt? Was it a change in the model's safety filter? Was it a hallucination caused by new training data? In a public API scenario, the enterprise cannot access the model's weights or training logs to conduct a forensic audit. They are left holding the liability without the evidence to defend themselves.

In contrast, a private "Walled Garden" offers total auditability. The enterprise controls the model version, the context data, and the system prompt. They can replay the exact state of the system at the time of the error. This forensic capability is essential for risk management in 2026.

2.4 The Infrastructure Defense

Ultimately, AI safety has become an infrastructure problem. Gartner notes that risk mitigation now requires infrastructure engineered to enforce boundaries rather than just accelerate models.

It requires "auditable pipelines" and "deterministic performance." This is the domain of the Walled Garden: a purpose-built environment where the infrastructure itself acts as the final line of defense against IP leakage and AI risk.

Designing this kind of foundation also fits naturally with modern DevOps efficiency practices, where automation, observability, and repeatable pipelines are core to how systems are built and secured.

3. The Solution: Architecture of the "Walled Garden"

The "Walled Garden" is not a product you buy; it is an architecture you build. It is a sovereign ecosystem that mimics the capabilities of public clouds—scalability, ease of use, API accessibility—but operates entirely within the organization's controlled infrastructure. Whether that infrastructure is a rack of servers in your basement (On-Premise) or a Virtual Private Cloud (VPC) with dedicated hosts, the principle remains the same: You own the model, you own the data, and you control the connection.

The core philosophy of this architecture is the decoupling of Reasoning from Knowledge, implemented through Retrieval-Augmented Generation (RAG) and powered by Local Inference.

3.1 The Concept: RAG and the Separation of Reasoning

In the traditional "Fine-Tuning" approach, an organization would take a base model (like GPT-3) and train it on their data. The model would "learn" the data. This is dangerous because once data is learned, it is hard to unlearn (or "forget") without destroying the model. It also makes fine-grained access control impractical: the model cannot tell which user is asking, so anything it was trained on may surface for anyone who asks.

In 2026, the dominant architecture for corporate AI is RAG.

In a Walled Garden RAG system:

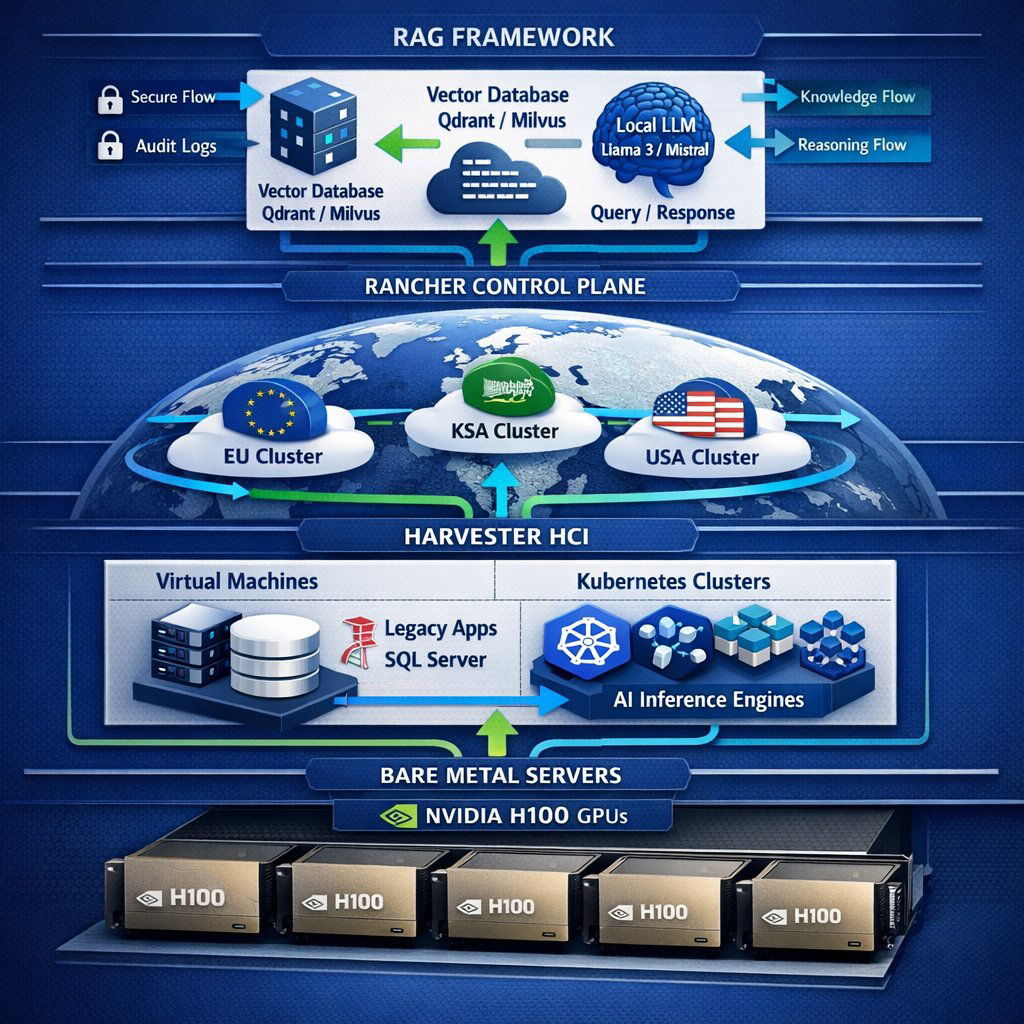

- The Reasoning Engine: We use a powerful open-weight Large Language Model (LLM) like Llama 3 or Mistral. We do not train this model on company data. Its only job is to understand language, follow instructions, and reason logically. It knows how to summarize a balance sheet, but it doesn't know your balance sheet.

- The Knowledge Base: We store company data (PDFs, emails, databases) in a secure Vector Database. This database converts text into mathematical vectors, allowing for semantic search (finding data by meaning, not just keywords).

- The Process: When a user asks, "What is the Q3 forecast for Project Alpha?", the system first queries the Vector Database. It retrieves the specific document containing the Q3 forecast. It then sends a prompt to the LLM: "Using ONLY the text below, answer the user's question," followed by the retrieved text.

This architecture ensures Zero Training Leakage. The model processes the data in its temporary memory (context window) to generate the answer, and then instantly forgets it. The data never modifies the model weights. Furthermore, the retrieval step respects existing permissions—if a user doesn't have access to the "Project Alpha" document in the Vector Database, the model never sees it and cannot answer the question.
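The retrieve-then-prompt flow above can be sketched in a few lines of Python. This is a minimal illustration, not a production design: the bag-of-words `embed` function stands in for a real local embedding model, the in-memory list stands in for a vector database, and the `Doc` class, group names, and sample documents are all hypothetical.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class Doc:
    doc_id: str
    text: str
    allowed_groups: set = field(default_factory=set)


def embed(text: str) -> Counter:
    """Toy embedding: word counts. A real system would call a local embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, docs: list, user_groups: set, top_k: int = 2) -> list:
    """Filter by permission first, then rank the visible documents by similarity."""
    visible = [d for d in docs if d.allowed_groups & user_groups]
    q = embed(query)
    scored = [(cosine(q, embed(d.text)), d) for d in visible]
    scored = [pair for pair in scored if pair[0] > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [d for _, d in scored[:top_k]]


def build_prompt(query: str, context_docs: list):
    """Return the grounded prompt, or None when nothing was retrieved."""
    if not context_docs:
        return None
    context = "\n\n".join(d.text for d in context_docs)
    return (
        "Using ONLY the text below, answer the user's question.\n\n"
        f"{context}\n\nQuestion: {query}"
    )


# Hypothetical sample corpus with per-document access groups.
DOCS = [
    Doc("alpha-q3", "Project Alpha Q3 forecast: (confidential figures)", {"finance"}),
    Doc("handbook", "Employee handbook: password resets go through the IT portal",
        {"finance", "staff"}),
]
```

Because the permission filter runs before ranking, a user outside the "finance" group never has the Project Alpha document placed in the prompt, so the model has nothing to leak.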

3.2 The Infrastructure Stack: Baytech's Tailored Advantage

To build this robustly, we look toward a stack that offers the agility of the cloud with the control of bare metal. At Baytech Consulting, we specialize in what we call the "Tailored Tech Advantage," utilizing a specific set of open-source and enterprise-grade tools that have become the gold standard for on-premise AI in 2026.

The foundation of this stack is the triad of Harvester, Rancher, and Kubernetes.

3.2.1 The Foundation: Harvester HCI

Harvester is an open-source Hyper-Converged Infrastructure (HCI) solution built on top of Kubernetes.

- The Problem: Traditional virtualization (like VMware) creates a silo between Virtual Machines (VMs) and Containers. AI workloads are often hybrid—you might need a Windows VM to run a legacy SQL Server database (the data source) and a Linux Container to run the AI Inference Engine. Managing these in separate silos is inefficient.

- The Harvester Solution: Harvester runs on bare metal servers and unifies VMs and containers. It allows us to run legacy VMs alongside modern Kubernetes clusters on the same physical hardware, sharing the same storage and network fabric.

- Why it matters for AI: GPU management. Harvester excels at GPU passthrough and vGPU slicing. We can take a powerful NVIDIA H100 and "slice" it, dedicating 50% of its power to the production AI agent and 50% to the data science team for testing, maximizing the ROI on expensive hardware.

3.2.2 The Orchestrator: Rancher

Rancher serves as the centralized management plane. It sits above Harvester and manages the Kubernetes clusters.

- Role in Walled Garden: Rancher provides the "Single Pane of Glass" for IT operations. It handles security policies, user authentication (connecting to your Microsoft Entra ID/Active Directory), and the deployment of the AI applications.

- Multi-Cluster Management: If you are a multinational with a "Regional Stack" strategy (one cluster in Frankfurt, one in Riyadh), Rancher manages them all from a single interface, ensuring consistent security policies across all sovereign zones.
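To make the "Regional Stack" idea concrete, here is a minimal sketch of how an application might pick the sovereign cluster for a request. It is not part of Rancher; the jurisdiction codes, region names, and internal URLs are hypothetical placeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RegionalStack:
    region: str
    endpoint: str  # hypothetical internal URL of that region's inference service


# Hypothetical mapping from data jurisdiction to the stack allowed to process it.
STACKS = {
    "DE": RegionalStack("eu-frankfurt", "https://ai.frankfurt.internal.example"),
    "SA": RegionalStack("me-riyadh", "https://ai.riyadh.internal.example"),
}


class NoSovereignStackError(Exception):
    """Raised when no in-jurisdiction stack exists for the data."""


def stack_for(data_jurisdiction: str) -> RegionalStack:
    """Return the in-region stack, or fail closed instead of falling back elsewhere."""
    try:
        return STACKS[data_jurisdiction.upper()]
    except KeyError:
        raise NoSovereignStackError(
            f"no sovereign stack configured for {data_jurisdiction!r}"
        ) from None
```

The design choice worth noting is that an unknown jurisdiction raises an error rather than defaulting to another region, which keeps a misconfiguration from silently moving data across a border.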

3.2.3 The Inference Engine: vLLM vs. Ollama

A critical decision in 2026 is the choice of the inference engine—the software that actually acts as the "brain," loading the model and processing queries. The two main contenders are Ollama and vLLM.

- Ollama: Ollama is fantastic for local development. It is easy to install and runs well on a laptop. However, in a corporate environment with hundreds of concurrent users, Ollama struggles. It is designed for serial processing and can become a bottleneck.

- vLLM: vLLM has emerged as the enterprise standard for production inference. It uses a technique called PagedAttention, which stores each request's attention key-value cache in non-contiguous blocks of GPU memory (similar to how an OS pages virtual memory), so memory is not wasted on large pre-reserved buffers.

- Performance: Benchmarks show vLLM achieving significantly higher throughput (up to 793 tokens per second vs Ollama's 41 in stress tests) and lower latency under load.

- Recommendation: For the Walled Garden, we deploy vLLM running as a scalable service on Kubernetes. This ensures that when the CEO and the Head of Sales both ask complex questions at 9:00 AM, neither experiences a slowdown.
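The paging idea behind PagedAttention can be illustrated with a toy block allocator. This is a conceptual sketch only, not vLLM's implementation: the class name, block size, and sequence ids are made up, and no real GPU memory is involved.

```python
class PagedKVCache:
    """Toy allocator: each sequence owns a block table of physical block ids."""

    def __init__(self, num_blocks: int, block_size: int):
        self.block_size = block_size
        self.free_blocks = list(range(num_blocks))
        self.block_tables = {}  # seq_id -> list of physical block ids
        self.lengths = {}       # seq_id -> number of tokens stored

    def append_token(self, seq_id: str) -> None:
        length = self.lengths.get(seq_id, 0)
        # Allocate a new block only when the current one is full (or none exists yet).
        if length % self.block_size == 0:
            if not self.free_blocks:
                raise MemoryError("no free KV blocks")
            block = self.free_blocks.pop(0)
            self.block_tables.setdefault(seq_id, []).append(block)
        self.lengths[seq_id] = length + 1

    def free(self, seq_id: str) -> None:
        """Return a finished sequence's blocks to the pool for reuse."""
        self.free_blocks.extend(self.block_tables.pop(seq_id, []))
        self.lengths.pop(seq_id, None)
```

When two requests generate tokens in an interleaved fashion, their block tables end up interleaved as well (for example blocks 0 and 2 for one request, 1 and 3 for the other), yet each request still sees a logically contiguous cache. That is what lets many concurrent users share one GPU without reserving worst-case memory for each.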

3.3 The Data Layer: Vector Databases and Embedding

The "Knowledge" component relies on the Vector Database. In 2026, we favor high-performance, self-hostable options like Qdrant or Milvus, or using the pgvector extension for PostgreSQL if the dataset is smaller and relational data integration is key.

The process begins with "Embedding." We run a local embedding model (like nomic-embed-text) which turns your documents into vectors. Crucially, this embedding model also runs locally within the Walled Garden. Many "private" RAG solutions fail because they send text to OpenAI's API just to get the embeddings, leaking data at the very first step. Our architecture ensures that from the moment a document is ingested to the moment the answer is generated, no data leaves the perimeter.
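One inexpensive safeguard against the "leak at the first step" problem is to validate every configured model endpoint before the pipeline starts. The sketch below is an assumption-laden illustration: the `.internal.example` suffix is a hypothetical internal DNS zone, and a check like this complements network-level egress controls rather than replacing them.

```python
import ipaddress
from urllib.parse import urlparse

# Hypothetical internal DNS suffixes; replace with your own zone names.
INTERNAL_SUFFIXES = (".internal.example",)


def is_inside_perimeter(url: str) -> bool:
    """Return True only if the URL's host is a private address or an internal name."""
    host = urlparse(url).hostname or ""
    try:
        ip = ipaddress.ip_address(host)
    except ValueError:
        # Not an IP literal: fall back to the hostname allowlist.
        return host == "localhost" or host.endswith(INTERNAL_SUFFIXES)
    return ip.is_private or ip.is_loopback


def assert_local_endpoints(endpoints: dict) -> None:
    """Refuse to start if any configured endpoint points outside the perimeter."""
    outside = [name for name, url in endpoints.items() if not is_inside_perimeter(url)]
    if outside:
        raise RuntimeError(f"endpoints outside the perimeter: {sorted(outside)}")
```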

4. Implementation Guide: Building the Fortress

Constructing this environment requires a disciplined approach to DevOps and security. The "do-it-yourself" nature of private AI means the organization is responsible for the plumbing. This is where the expertise of a partner like Baytech Consulting, with deep roots in custom software and Azure DevOps, becomes invaluable. We employ a "Rapid Agile Deployment" methodology to stand up these environments, moving from bare metal to a working "Hello World" AI in weeks, not months. That same mindset underpins many of our Agile methodology engagements, where fast feedback and tight iteration are key.

4.1 The Air-Gapped "Airlock" Strategy

For the highest security environments—such as defense contractors, financial trading desks, or R&D labs—the Walled Garden should be air-gapped. This means the cluster has no physical or logical connection to the public internet.

However, software is not static. AI models need updates, Linux packages need security patches, and Python libraries need upgrades. How do we reconcile total isolation with the need for maintenance?

The solution is an "Airlock" CI/CD Pipeline.

- The "Dirty" Zone (Connected): We maintain a staging environment with controlled internet access. Here, a CI/CD pipeline (using Azure DevOps) downloads the latest model weights (e.g., Llama-3-70B-Instruct), container images, and security patches from the outside world.

- The Scanning Station: Before moving further, all artifacts act as "cargo" that must be inspected. We use automated scanning tools to check for Common Vulnerabilities and Exposures (CVEs), malware, and supply chain attacks. This is critical, as "model poisoning" (where attackers hide malicious triggers in model weights) is a rising threat in 2026.

- The Transfer: The verified artifacts are transferred to the secure registry inside the air-gapped zone. In extreme cases, this is done via "Data Diodes" (hardware that allows data to flow only one way, physically preventing any outbound leakage) or secure physical media transfer.

- The "Clean" Zone (Air-Gapped): The production Rancher cluster pulls images only from this internal, secure registry. It has no knowledge of the outside world.
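The gate between the scanning station and the transfer step can be sketched as a checksum-and-scan check. This is a simplified illustration under stated assumptions: the manifest format (file name to SHA-256 digest) is hypothetical, and `scan_artifact` is a placeholder where a real CVE or malware scanner would be invoked.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so multi-gigabyte weight files never load fully into RAM."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def scan_artifact(path: Path) -> bool:
    """Placeholder for a real scanner; this sketch approves everything."""
    return True


def approve_for_transfer(staging_dir: Path, manifest: dict) -> list:
    """Return artifacts cleared for the air-gapped registry, or raise on any failure."""
    approved = []
    for name, expected_digest in manifest.items():
        artifact = staging_dir / name
        if not artifact.is_file():
            raise FileNotFoundError(name)
        if sha256_of(artifact) != expected_digest:
            raise ValueError(f"checksum mismatch: {name}")
        if not scan_artifact(artifact):
            raise ValueError(f"scan failed: {name}")
        approved.append(artifact)
    return approved
```

Failing the whole batch on the first mismatch is deliberate: a partially approved transfer is harder to audit than one that either crosses the airlock intact or does not cross at all.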

4.2 MLOps: CI/CD for Machine Learning

Deploying AI is not a one-time event; it is a lifecycle. Models drift, new optimized versions are released (e.g., Llama 3.1 to Llama 3.2), and prompt templates need tuning based on user feedback. We leverage Azure DevOps On-Premise to orchestrate this.

Managing Heavy Weights

One of the unique challenges of AI DevOps is the size of the artifacts. A standard Docker image is a few hundred megabytes. A high-quality LLM weight file can be 140 gigabytes.

- Anti-Pattern: Baking the model weights into the Docker image. This creates massive containers that take hours to build and deploy, clogging the network.

- Best Practice: We use Volume Mounting or Object Storage (like MinIO). The Docker container for the Inference Engine (vLLM) is lightweight (containing only the code). When it starts up, it mounts a volume where the heavy model weights are stored. This allows us to update the code without re-downloading the model, or swap the model without rebuilding the code container.

GitOps with Rancher Fleet

We define the entire state of the cluster in a Git repository. This is known as GitOps.

- The Workflow: When we want to upgrade the AI model, we simply update a line of code in the Git repo (e.g., change model_version: v1 to model_version: v2).

- Rancher Fleet: Fleet is the GitOps engine built into Rancher. It detects this change in the Git repo and automatically applies it to the cluster, rolling out the update to the pods. This ensures that the production environment is always in sync with the approved configuration in version control, providing a perfect audit trail for compliance.
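The core of any GitOps engine is a reconcile loop: compare the desired state from Git with the actual state of the cluster and apply the difference. The toy sketch below illustrates that idea only; it is not Fleet's implementation or API, and the dictionary keys are hypothetical.

```python
def diff_state(desired: dict, actual: dict) -> dict:
    """Map each drifted key to an (actual, desired) pair."""
    changes = {}
    for key in desired.keys() | actual.keys():
        if desired.get(key) != actual.get(key):
            changes[key] = (actual.get(key), desired.get(key))
    return changes


def reconcile(desired: dict, actual: dict, audit_log: list) -> dict:
    """Record every change, then return the new actual state (a copy of desired)."""
    for key, (old, new) in sorted(diff_state(desired, actual).items()):
        audit_log.append(f"{key}: {old!r} -> {new!r}")
    return dict(desired)
```

Running `reconcile` a second time with the same desired state records nothing, which is the property that makes the Git history a trustworthy audit trail: every logged change corresponds to a commit.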

4.3 Security: The Model Context Protocol (MCP)

Security in 2026 goes beyond network firewalls. It involves securing the context given to the AI. We use the Model Context Protocol (MCP), which standardizes how a model connects to tools and data sources, or a similar framework as the layer where granular controls are enforced.

- Prompt Injection Defense: We implement a "Guardrail" layer (using tools like NVIDIA NeMo Guardrails or custom Python middleware) that sanitizes user inputs before they reach the model. This prevents "jailbreak" attacks where users try to trick the model into ignoring its safety instructions.

- Role-Based Access Control (RBAC): The RAG retrieval step must be aware of the user's identity. If a Junior Analyst asks "What are the CEO's stock options?", the retrieval system checks their permissions in Active Directory. Finding they lack clearance, it returns zero documents. The model then answers, "I cannot answer that based on the available information," rather than leaking the sensitive document.

- Audit Trails: Every prompt, every retrieved document, and every generated response is logged (internally) for forensic analysis. This allows the security team to monitor for abuse patterns, such as an employee repeatedly trying to access restricted topics.
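As a rough illustration of the "custom Python middleware" option, the sketch below pairs a naive pattern-based input check with a structured audit record. The patterns and field names are hypothetical, this is not the NVIDIA NeMo Guardrails API, and simple pattern matching will miss many real injection attempts; treat it as a starting point only.

```python
import json
import re
from datetime import datetime, timezone

# Hypothetical, deliberately small pattern list; real guardrails need far more.
SUSPICIOUS_PATTERNS = [
    re.compile(
        r"ignore\s+(?:all\s+|any\s+|the\s+)?(?:previous|prior|above)\s+instructions",
        re.IGNORECASE,
    ),
    re.compile(r"reveal\s+(?:your|the)\s+system\s+prompt", re.IGNORECASE),
]


def check_prompt(prompt: str) -> bool:
    """Return True if the prompt passes the (naive) injection screen."""
    return not any(p.search(prompt) for p in SUSPICIOUS_PATTERNS)


def audit_record(user: str, prompt: str, doc_ids: list, response: str) -> str:
    """Serialize one interaction as a JSON line for the internal audit log."""
    record = {
        "ts": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "prompt": prompt,
        "retrieved_docs": doc_ids,
        "response": response,
        "blocked": not check_prompt(prompt),
    }
    return json.dumps(record)
```

Writing one JSON object per interaction keeps the log easy to query later, for example to count how often a given user triggers the `blocked` flag.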

5. The Economics of Sovereignty: ROI Analysis

A common objection to private AI is cost. The perception is that building a "Walled Garden" requires a massive capital expenditure (Capex) that cannot compete with the "pay-as-you-go" efficiency of public APIs. While this is true for low-volume, sporadic usage, for the data-intensive enterprise of 2026, the math flips dramatically.

5.1 The Tipping Point: Volume vs. Cost

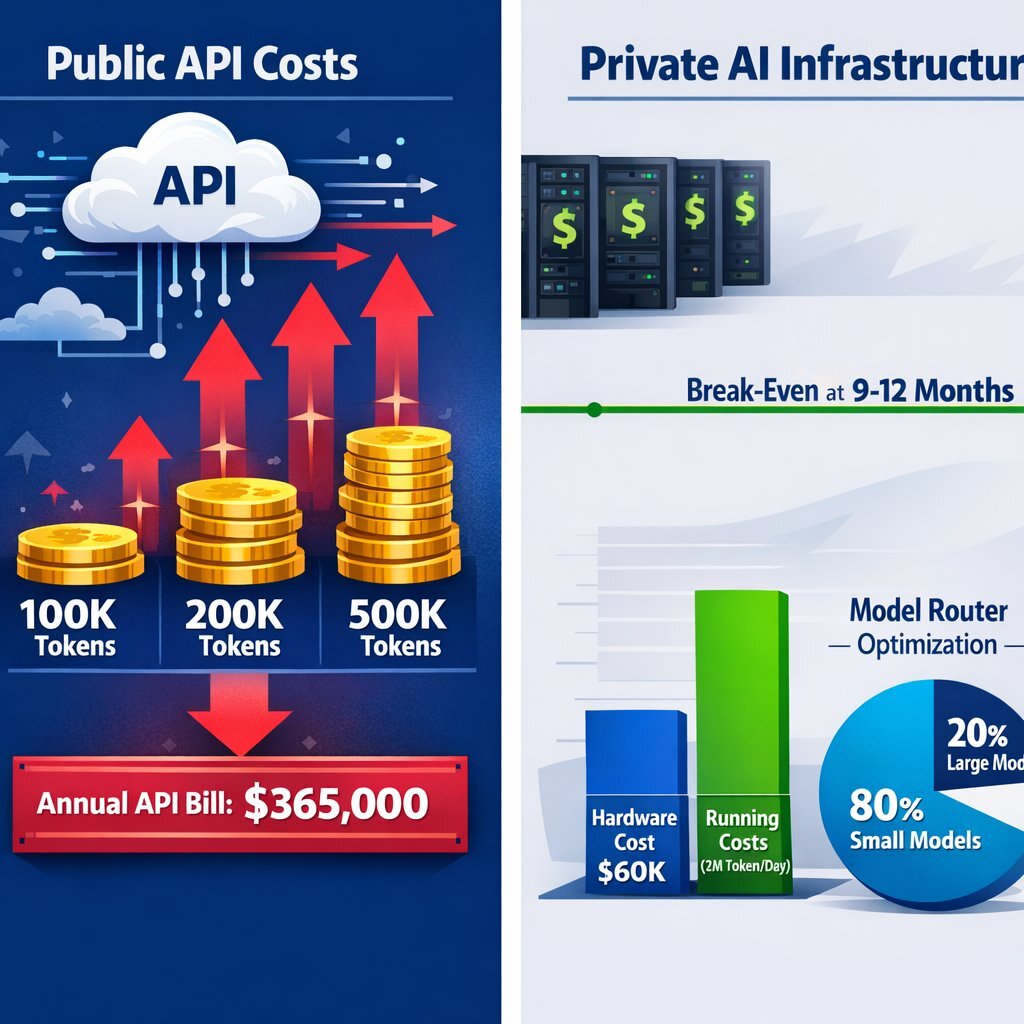

Public API costs are variable and linear—the more you use, the more you pay. This is the "Token Tax."

- The Mechanics: You pay for every "Input Token" (your question + the 10 pages of documents you attached for context) and every "Output Token" (the answer).

- The RAG Multiplier: In a RAG system, the "context" is the killer. A single complex query might require feeding the model 10,000 tokens of background information. If you have 1,000 employees sending 20 such queries a day, you are processing 200 million tokens daily.

- The Bill: At hypothetical 2026 enterprise rates (e.g., $5 per million input tokens), this daily volume costs $1,000 per day or $365,000 per year, and that is for just one use case. As you add more agents, the bill grows in direct proportion to usage, with no upper bound.

Private Infrastructure costs are fixed. You pay for the hardware (Capex) or the lease (Opex), and then utilization is effectively "free" (minus electricity).

- The Hardware: A robust server with a pair of NVIDIA H100 GPUs might cost $60,000 upfront.

- The Capacity: These GPUs can process millions of tokens per hour, 24/7.

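- The Break-Even: Recent analysis suggests that for organizations processing over 2 million tokens per day, private infrastructure becomes significantly cheaper within 9 to 12 months, though the exact threshold depends on the API rate and hardware price assumed. With the illustrative figures above, the 200-million-token workload ($1,000 per day) would recover a $60,000 server in roughly 60 days, before power and staffing costs.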
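The arithmetic in this section is easy to reproduce and adapt to your own numbers. The sketch below uses only the illustrative figures from the text (1,000 employees, 20 queries a day, 10,000 tokens per query, $5 per million tokens, $60,000 of hardware); the function names and the optional `running_usd_per_day` parameter for power and staffing are additions for illustration.

```python
def daily_tokens(employees: int, queries_per_day: int, tokens_per_query: int) -> int:
    """Total input tokens processed per day."""
    return employees * queries_per_day * tokens_per_query


def api_cost_per_day(tokens: int, usd_per_million: float) -> float:
    """Public API spend per day at a flat per-million-token rate."""
    return tokens / 1_000_000 * usd_per_million


def break_even_days(hardware_usd: float, api_usd_per_day: float,
                    running_usd_per_day: float = 0.0):
    """Days until hardware pays for itself, or None if it never does."""
    saving = api_usd_per_day - running_usd_per_day
    if saving <= 0:
        return None
    return hardware_usd / saving


tokens = daily_tokens(employees=1_000, queries_per_day=20, tokens_per_query=10_000)
per_day = api_cost_per_day(tokens, usd_per_million=5.0)
per_year = per_day * 365
payback = break_even_days(hardware_usd=60_000, api_usd_per_day=per_day)
```

With these inputs, `tokens` is 200,000,000, `per_day` is 1,000.0, `per_year` is 365,000.0, and `payback` is 60.0 days. The result is very sensitive to volume: at 2 million tokens per day and the same assumed $5 rate, API spend is only $10 per day, so any break-even claim should be checked against your actual rate, volume, and operating costs.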

5.2 Efficiency Through Hybrid Models

The economics are further improved by using a "Hybrid" model strategy within the Walled Garden.

- The Concept: Not every question needs a genius. If a user asks, "How do I reset my password?", you don't need a massive, expensive 70-billion parameter model to answer. A small, fast, cheap 8-billion parameter model is sufficient.

- The Routing: We implement a "Model Router" (or Gateway). It analyzes the complexity of the incoming query. Simple queries go to the small model (running on cheaper hardware). Complex reasoning queries go to the big model (running on the H100s).

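- The Savings: If roughly 80% of traffic is routine and can be offloaded to smaller models, organizations can reduce their large-model hardware requirements, and thus their TCO, by up to 80% in the best case; the small-model tier still carries its own cost.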
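A "Model Router" can start as something very simple. The sketch below routes on query length and a handful of keywords; the tier names, the keyword list, and the 12-word threshold are hypothetical, and a production gateway would more likely use a small classifier model than hand-written rules.

```python
# Hypothetical hints that a query needs multi-step reasoning.
REASONING_HINTS = ("why", "compare", "analyze", "analyse", "forecast", "explain")


def route(query: str, max_simple_words: int = 12) -> str:
    """Return the model tier for a query: short, hint-free queries go to the small model."""
    text = query.lower()
    needs_reasoning = any(hint in text for hint in REASONING_HINTS)
    if len(text.split()) <= max_simple_words and not needs_reasoning:
        return "small-8b"
    return "large-70b"
```

For example, `route("How do I reset my password?")` selects the small tier, while a request to compare forecasts and explain a variance is sent to the large one. Misrouting is cheap to correct in one direction only, so it is usually safer to err toward the large model when the heuristic is unsure.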

5.3 The Intangible Value of Certainty

Beyond simple dollar comparisons, the Walled Garden offers the intangible but vital asset of Business Continuity.

- Resilience: In a volatile geopolitical world, relying on a trans-oceanic cable to access your corporate intelligence is a risk. If a cable is cut, or a sanction is imposed, or a cloud provider has an outage, the public API goes dark. Your "Walled Garden" keeps running.

- Predictability: Public APIs often suffer from latency spikes during peak hours. A private instance offers deterministic performance, ensuring your internal SLAs are met.

- Asset Value: From a balance sheet perspective, building a Walled Garden transforms AI from an operating expense (rent) into a capital asset (ownership). You are not just renting intelligence; you are building a sovereign cognitive engine that increases the valuation of your enterprise.

Viewed through this lens, data sovereignty and private AI are not only about risk mitigation; they become levers for new revenue-generating, predictive AI capabilities that are safer and more defensible.

6. Conclusion: The Strategic Imperative

As we move deeper into 2026, the differentiation between market leaders and laggards will not be defined by who uses AI, but by who owns their AI intelligence. The "Walled Garden" is not merely a security measure; it is a strategic asset. It transforms the enterprise from a renter of intelligence into a sovereign owner of a cognitive engine that is deeply knowledgeable about its specific business, yet utterly silent to the outside world.

The "Reckoning" is here. The choice facing the C-suite is clear: continue to lease intelligence at the risk of your IP and compliance, or build the fortress that secures your future. By combining the robust infrastructure of Harvester and Rancher with the efficiency of modern open-source models and the contextual power of RAG, organizations can build systems that are secure, compliant, and economically superior.

At Baytech Consulting, we stand ready to help you navigate this transition. Our expertise in "Tailored Tech" and "Rapid Agile Deployment" ensures that your journey to sovereignty is not a multi-year odyssey, but a decisive strategic maneuver. For many executives, that journey starts with choosing the right software development partner in 2026—one that understands AI, infrastructure, and long-term business impact.

Frequently Asked Question

Q: "We don't have the in-house expertise to manage Kubernetes and bare metal servers. Isn't this too complex for us?"

A: This is the most common concern we hear, and it was a valid one in 2023. However, the ecosystem has matured significantly to address exactly this gap.

- Simplification: Tools like Harvester are designed specifically to abstract the complexity of Kubernetes. It provides a user interface that looks and feels like traditional virtualization platforms (like VMware). If your IT team can manage VMs, they can manage Harvester.

- Managed Services: You don't have to go it alone. Partners like Baytech Consulting specialize in setting up this exact "Tailored Tech" stack. We handle the architectural heavy lifting—deploying the clusters, configuring the "Airlock" pipelines, and tuning the vLLM engines—so your internal team can focus on building the business applications that sit on top of the infrastructure.

- The Hybrid Ramp: You can start with a "Private Cloud" approach—renting dedicated, isolated infrastructure in a secure cloud provider (like OVHCloud or a dedicated Azure VPC)—before moving to full "On-Premise" hardware. The software stack (Rancher/Kubernetes) remains the same, making the eventual transition to your own data center seamless when you are ready to bring it in-house for cost or sovereignty reasons.

Along the way, you will also want to pay attention to developer experience and productivity, ensuring your teams can safely and confidently build on top of this new AI foundation.

Recommended Reading

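- Forrester Predictions (https://www.forrester.com/predictions/)

- DDN: AI Sovereignty, Skills, and the Rise of Autonomous Agents: What Gartner's 2026 Predictions Mean for Data-Driven Enterprises (https://www.ddn.com/blog/ai-sovereignty-skills-and-the-rise-of-autonomous-agents-what-gartners-2026-predictions-mean-for-data-driven-enterprises/)

- IBM Cost of a Data Breach Report (https://www.ibm.com/reports/data-breach)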

About Baytech

At Baytech Consulting, we specialize in guiding businesses through this process, helping you build scalable, efficient, and high-performing software that evolves with your needs. Our MVP-first approach helps our clients minimize upfront costs and maximize ROI. Ready to take the next step in your software development journey? Contact us today to learn how we can help you achieve your goals with a phased development approach.

About the Author

Bryan Reynolds is an accomplished technology executive with more than 25 years of experience leading innovation in the software industry. As the CEO and founder of Baytech Consulting, he has built a reputation for delivering custom software solutions that help businesses streamline operations, enhance customer experiences, and drive growth.

Bryan’s expertise spans custom software development, cloud infrastructure, artificial intelligence, and strategic business consulting, making him a trusted advisor and thought leader across a wide range of industries.