Unlocking Speed: How Full-Stack JavaScript Accelerates Your Tech Team in 2026

January 19, 2026 / Bryan Reynolds

Executive Summary: The "Universal Language" Gamble

For the modern Chief Technology Officer (CTO) or VP of Engineering, the architectural landscape has shifted dramatically over the last decade. The once-rigid demarcation between the browser and the server has eroded, giving rise to the "Universal Application" or "Isomorphic JavaScript" paradigm. The allure of Full-Stack JavaScript—often realized through stacks like MEAN (MongoDB, Express, Angular, Node.js) or MERN (MongoDB, Express, React, Node.js)—is theoretically irresistible. It promises the holy grail of software engineering efficiency: a unified language for client and server, a single mental model for developers, and the ability to move talent fluidly across the stack.

It suggests a world where the friction of context switching vanishes, and data flows in native JSON from the database to the DOM without transformation. It offers the enticing possibility of "Rapid Agile Deployment," a core tenet of modern software delivery, where feature teams can own a slice of functionality from the database schema to the CSS pixel.

However, the transition from a polyglot environment (e.g., Java/Spring or C#/.NET on the backend, React/Angular on the frontend) to a pure JavaScript architecture is not merely a syntactic shift; it is a fundamental change in concurrency models, architectural philosophy, and team governance. It requires a recalibration of how organizations view performance, security, and long-term maintainability.

This report provides an exhaustive, expert-level analysis of this architectural paradigm. We move beyond the surface-level "pros and cons" to explore the second-order effects of this decision on Total Cost of Ownership (TCO), scalability, and long-term maintainability. We analyze why giants like Netflix and PayPal made the switch, where the model breaks down for CPU-intensive workloads, and how TypeScript has emerged as the non-negotiable safety layer for the enterprise. Drawing on industry data, engineering benchmarks, and the operational realities of custom software development, we answer the critical question: Is the velocity gained by a single language worth the structural discipline required to maintain it at scale?

1. The Business Case for Isomorphic JavaScript

The primary driver for adopting Node.js on the backend is rarely raw computational performance; it is organizational velocity. In the high-stakes world of B2B technology, where time-to-market often dictates market share, the efficiency of the engineering team is a critical KPI. The traditional siloed environment, characterized by the "impedance mismatch" between backend and frontend teams, creates a drag coefficient on delivery that many organizations can no longer afford.

1.1 The Velocity of Unification and Impedance Mismatch

In a traditional architecture, the backend and frontend are often separated not just by network protocols, but by conceptual chasms. A backend engineer working in Java or C# thinks in terms of classes, rigid schemas, and synchronous threading models. A frontend engineer working in JavaScript thinks in terms of events, asynchronous flows, and dynamic objects.

When these two worlds meet, friction occurs. The backend engineer must map a database entity (e.g., a SQL row) to a server-side object (POJO), serialize that object into JSON, and send it over the wire. The frontend engineer must then parse that JSON, validate it, and hydrate it into a client-side state model. Every new feature requires a handshake agreement on API contracts, data types, and error handling. If the backend team changes a field name from userId to user_id, the frontend breaks. This translation layer is a breeding ground for bugs and a bottleneck for velocity.

In a full-stack JavaScript environment, particularly one using a document store like MongoDB, this friction is dramatically reduced. The data remains in a JSON-like structure (BSON in MongoDB, JSON in Node.js, JSON objects in React) throughout its entire lifecycle. This "isomorphic" nature allows for rapid prototyping and iteration. For startups and enterprises launching Minimum Viable Products (MVPs), this speed is a competitive moat. Developers can write a database query, an API endpoint, and a React component in the same session, often reusing validation logic or type definitions across the boundary.
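As a rough illustration of reusing type definitions and validation across the boundary, the sketch below assumes a small shared module imported by both the API handler and the React client. The names `UserDto` and `parseUserDto` are hypothetical and not taken from any library. With this in place, a rename such as `userId` to `user_id` shows up as a compile-time or validation error rather than a silent break.

```typescript
// Hypothetical shared module, imported by both server and client code.
interface UserDto {
  userId: string;
  email: string;
}

// Runtime guard: JSON from the wire is `unknown` until it has been checked.
function parseUserDto(input: unknown): UserDto {
  if (typeof input !== "object" || input === null) {
    throw new Error("UserDto must be an object");
  }
  const record = input as Record<string, unknown>;
  if (typeof record.userId !== "string" || typeof record.email !== "string") {
    throw new Error("UserDto requires string fields userId and email");
  }
  return { userId: record.userId, email: record.email };
}

// Server side: serialize a typed value. Client side: validate what arrives.
const outgoing: UserDto = { userId: "u-1", email: "a@example.com" };
const received = parseUserDto(JSON.parse(JSON.stringify(outgoing)));
console.log(received.userId);
```

The runtime guard matters because TypeScript types are erased at compile time; the interface alone does not protect the client from a payload that drifted.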

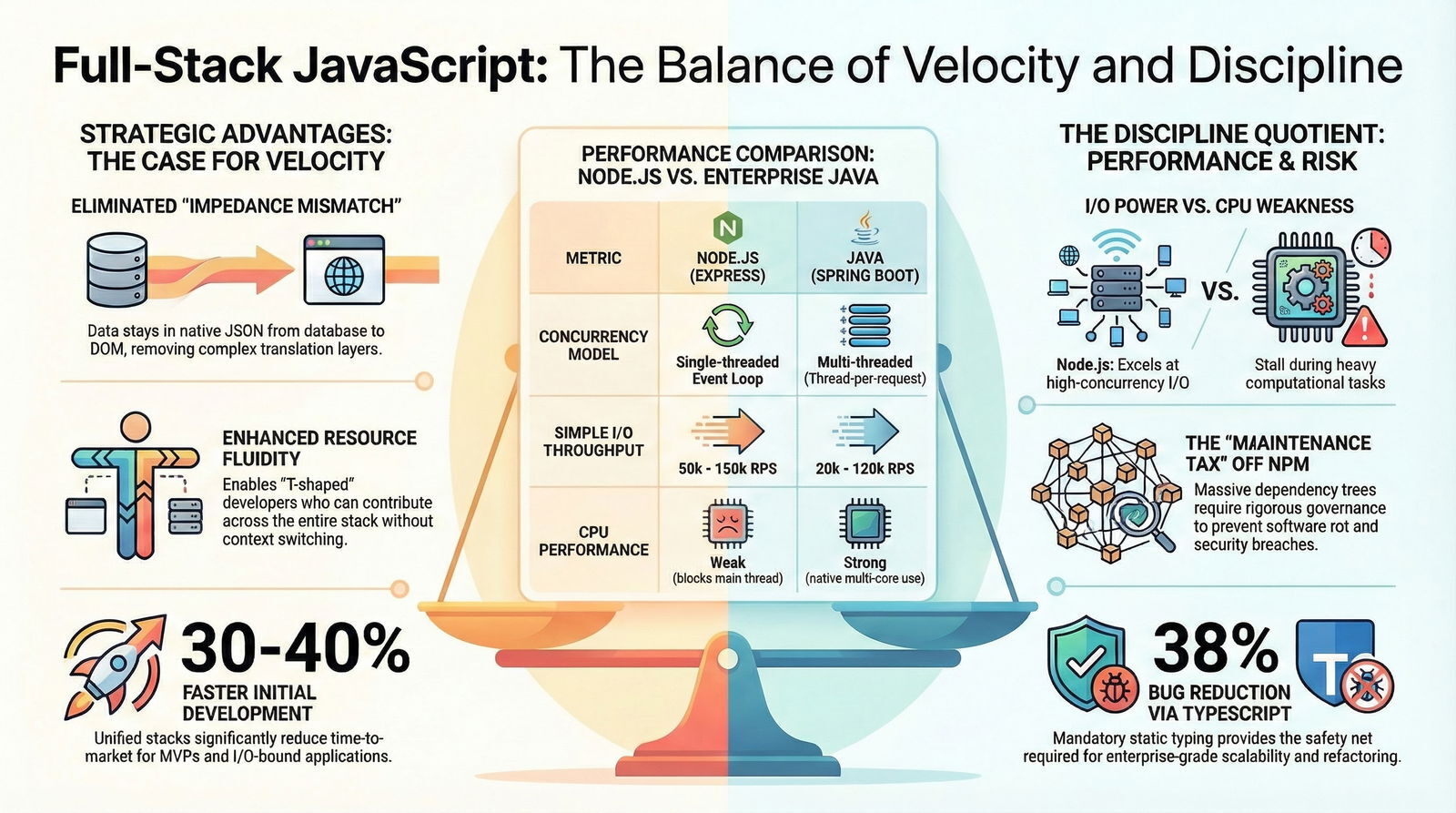

The diagram above illustrates this fundamental shift. In the polyglot world, the developer essentially serves as a translator between two disparate nations—the Kingdom of Java and the Republic of JavaScript. In the unified world, they speak a common tongue. This is not just a matter of convenience; it is a matter of cognitive load. By reducing the number of syntax rules, build tools, and ecosystems a developer must keep in their working memory, we free up mental capacity for solving business problems rather than wrestling with boilerplate.

1.2 Resource Fluidity and the "Full-Stack" Myth

One of the most touted benefits of a unified language is the ability to move engineers between frontend and backend tasks as sprint priorities shift. In a polyglot team, a bottleneck in the Java backend leaves React developers idle, or worse, blocked. In a Node.js environment, frontend developers can theoretically pick up backend tickets, and vice versa.

However, a nuanced view reveals a risk that experienced CTOs must navigate carefully: the "Full-Stack" myth. Knowing JavaScript syntax does not equate to understanding backend systems architecture. A frontend developer might be proficient in ES6 syntax and React hooks but lack experience with database normalization, concurrency management, ACID transactions, or security compliance.

Strategic Insight: The "Full-Stack" benefit is best realized not by expecting every developer to be an expert in everything, but by lowering the barrier to entry for contribution. A frontend developer can fix a backend bug in a Node.js resolver without setting up a complex JDK environment or learning Maven. A backend developer can tweak a UI component without struggling through a webpack configuration they don't understand. This "T-shaped" skill distribution, in which developers have deep expertise in one area but broad capability in others, improves team agility even if true full-stack mastery remains rare.

This fluidity supports the "Rapid Agile Deployment" methodology championed by firms like Baytech Consulting. When the entire team speaks the same technical language, code reviews become more effective because every team member can read and understand every pull request, regardless of whether it touches the database or the DOM. This collective ownership of the codebase fosters a higher quality bar and faster resolution of cross-cutting concerns.

1.3 The Talent Pool Reality and Hiring Economics

JavaScript is the most widely used programming language in the world, dominating the Stack Overflow Developer Survey for over a decade. By standardizing on JavaScript, organizations tap into the largest possible talent pool. This is crucial for rapid scaling.

The democratization of coding education has largely centered on the web stack. Bootcamps and online courses overwhelmingly favor the MERN stack as the primary curriculum for new developers. This means the pipeline of junior to mid-level JavaScript talent is robust and continuous. For a growing B2B SaaS company, this accessibility allows for rapid team augmentation.

However, the ubiquity of JavaScript creates a "noise" problem in hiring. Because the barrier to entry is low, the market is flooded with developers who may know how to use a framework but lack foundational engineering principles. Finding senior engineers capable of architecting scalable Node.js systems—those who understand the event loop mechanics, memory management, and distributed systems patterns—remains a significant challenge for hiring managers.

This contrasts with the Java or .NET talent pools. While perhaps smaller in absolute numbers and perceived as "less trendy," the developers in these ecosystems often come from a background of enterprise application development. They are frequently well-versed in design patterns, strong typing, and system architecture by virtue of the languages' constraints. The hiring strategy for a Node.js team, therefore, requires a more rigorous technical assessment to filter for engineering maturity amidst the volume of applicants.

2. Technical Deep Dive: The Node.js Runtime Architecture

To understand the strategic risks and benefits of full-stack JavaScript, one must look under the hood. The fundamental difference between Node.js and its enterprise competitors (Java Spring Boot, .NET) lies in its runtime architecture. Node.js operates on a single-threaded, event-driven, non-blocking I/O model, a design choice that dictates its performance profile. If you want a more detailed side-by-side, see our guide to software-driven enterprise architecture and QA.

2.1 The Event Loop: Feature and Bug

In a traditional blocking architecture (e.g., older Apache/PHP setups or classic Java servlets), the server employs a "thread-per-request" model. When a client connects, the server spawns a new thread (or grabs one from a pool) to handle that request. If the request involves reading from a database, that thread sits idle, blocking system resources, waiting for the database to respond. This model is robust but resource-intensive. As concurrency rises, the server creates more threads, consuming memory (stack space) until it hits a ceiling. At high loads, the server spends more CPU cycles context-switching between thousands of threads than actually processing requests.

Node.js takes a radically different approach. It runs a single main thread, the Event Loop, that handles all client connections. When an I/O operation occurs (e.g., "select * from users"), Node.js does not block. Instead, it hands the task to libuv, which relies on the operating system's asynchronous I/O facilities (or a small internal thread pool for operations that lack them), and immediately moves on to process the next request. When the database returns data, a callback function is placed in a queue, and the Event Loop picks it up as soon as it is free.

The Consequence: This makes Node.js exceptionally fast and lightweight for I/O-bound applications. It can handle tens of thousands of concurrent connections with a minimal memory footprint because it isn't carrying the overhead of thousands of threads. This is ideal for real-time applications: chat systems, collaborative tools (like Trello or Google Docs), streaming services, and single-page application (SPA) APIs where the server mostly acts as a high-speed router between the client and the database.

The Risk: The Achilles' heel of this model is CPU-bound work. Because there is only one thread, if a request requires heavy calculation—such as image processing, complex sorting, cryptographic hashing, or massive JSON parsing—that single thread is blocked. The Event Loop stops spinning. No other requests can be processed, effectively hanging the server for all users until that one calculation finishes. A single poorly written regular expression or an infinite loop can bring a production Node.js cluster to its knees.
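The blocking behavior described above can be reproduced in a few lines. The sketch below is illustrative only: `blockFor` and `measureTimerDelay` are hypothetical helper names, and the busy-wait loop stands in for real CPU-bound work such as hashing or image resizing. A timer scheduled for 10 ms cannot fire until the synchronous work releases the thread.

```typescript
// Busy-wait to simulate CPU-bound work (hashing, image resizing, a bad regex).
function blockFor(ms: number): number {
  const start = Date.now();
  while (Date.now() - start < ms) {
    // Intentionally spin: nothing else can run on this thread meanwhile.
  }
  return Date.now() - start;
}

// Schedule a timer for `timerMs`, then block synchronously for `blockMs`.
// Resolves with how long the timer actually took to fire.
function measureTimerDelay(timerMs: number, blockMs: number): Promise<number> {
  return new Promise((resolve) => {
    const scheduledAt = Date.now();
    setTimeout(() => resolve(Date.now() - scheduledAt), timerMs);
    blockFor(blockMs); // the timer callback cannot run until this returns
  });
}

measureTimerDelay(10, 200).then((elapsed) => {
  console.log(`10 ms timer fired after ~${elapsed} ms`);
});
```

In a server, every pending request is in the same position as that timer: it waits for the longest synchronous task ahead of it.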

2.2 Performance Benchmarks: Node.js vs. The Enterprise Titans

When comparing Node.js to Spring Boot (Java), the benchmarks reveal a clear dichotomy based on workload type. It is not a question of "which is faster," but "which is faster for what?"

Concurrency and Memory Efficiency: Node.js generally achieves higher concurrency with lower resource usage for simple requests. In benchmarks involving simple REST endpoints (CRUD operations), Node.js can handle ~50,000 to 150,000 requests per second (RPS) on a standard 16-core server. Spring Boot, particularly with the traditional Tomcat server, typically handles ~20,000 to 50,000 RPS in similar configurations, though its reactive stack (WebFlux) is closing this gap.

The memory difference is stark. A Node.js connection might consume a few kilobytes of heap, whereas a Java thread stack can consume megabytes. For a startup running on limited cloud infrastructure, Node.js allows for more users per dollar of hardware.

Computation and Throughput: However, as task complexity increases, the tables turn. For complex business logic, data transformation, or heavy computation, the Java Virtual Machine (JVM) is superior. The JVM's Just-In-Time (JIT) compiler is incredibly mature, optimizing code paths over time to near-native performance. Furthermore, Java's multi-threaded model naturally utilizes all CPU cores for a single request if necessary, whereas Node.js requires awkward workarounds (like clustering or worker threads) to break out of its single-core prison.

| Metric | Node.js (Express/Fastify) | Java (Spring Boot / WebFlux) | Insight |
|---|---|---|---|
| Concurrency Model | Single-threaded Event Loop | Multi-threaded (Thread per request) | Node handles high connection counts with significantly lower RAM overhead. |
| I/O Performance | Excellent (Non-blocking) | Good (getting better with Virtual Threads) | Node excels at "pass-through" architecture (API Gateways). |
| CPU Performance | Weak (blocks the loop) | Strong (utilizes multi-core natively) | Spring Boot is superior for computation-heavy logic and complex processing. |
| Startup Time | Very Fast (< 1 second) | Slower (JVM warm-up) | Node is ideal for Serverless/Lambda functions where cold starts degrade UX. |
| Throughput (RPS) | ~50k - 150k RPS | ~20k - 120k RPS | Node creates higher throughput for simple queries; Java wins on complex processing. |

Data Synthesis: Research suggests that for 80% of web applications—which are primarily fetching data from a database and sending it to a client—Node.js offers a performance profile that is not only adequate but superior in terms of resource efficiency. However, for the 20% of applications that perform heavy lifting, Node.js can become a liability without careful architectural segmentation.

The chart above demonstrates this "Performance Crossover." Notice how Node.js (in green) dominates the "Simple I/O" category—this is its sweet spot. But as we move right towards "CPU Heavy," the blue bars of Spring Boot take the lead. This visual should serve as a primary heuristic for your architectural decisions: What is the nature of your workload?

3. The Ecosystem Dilemma: NPM vs. Stability

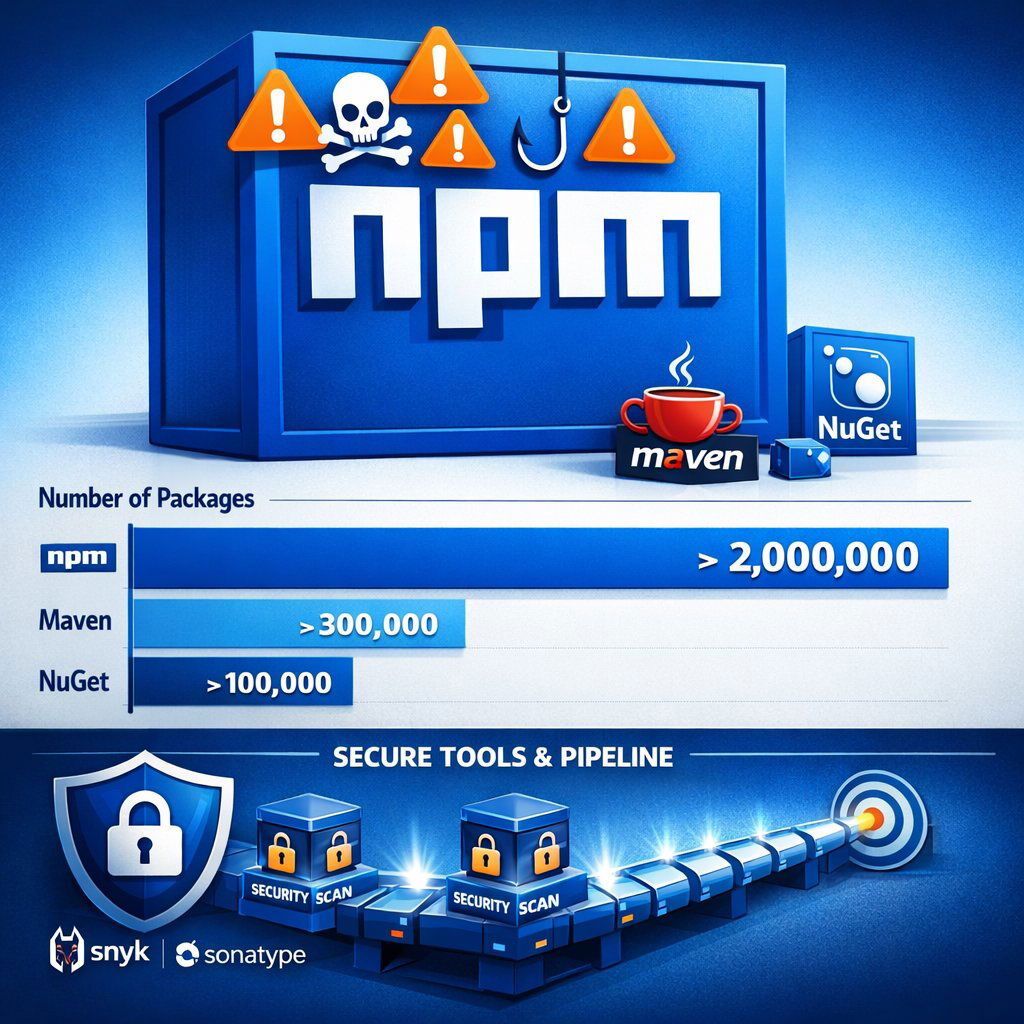

A technology stack is more than just a language; it is the library of solutions available to solve problems. The Node.js ecosystem, driven by the Node Package Manager (npm), is a double-edged sword of massive proportions. It is the largest software registry in the world, with over 2 million packages, dwarfing the repositories of Java (Maven) and .NET (NuGet).

3.1 The Velocity of Open Source

For a startup or agile enterprise team, npm is a phenomenal accelerator. Features that would take weeks to build from scratch—or require heavy, opinionated frameworks in .NET or Django—can be installed in seconds via npm install. The "lego block" philosophy of the community encourages small, single-purpose modules. Need to format a date? There’s a library for that. Need to validate an email? There are twenty libraries for that.

The sheer volume of activity means that for any third-party service (Stripe, Twilio, AWS, OpenAI), the Node.js SDK is likely a first-class citizen, released on day one, and updated frequently. This vibrancy ensures that a Node.js team is rarely blocked waiting for vendor support.

3.2 "Dependency Hell" and the Maintenance Tax

However, this granularity creates significant maintenance overhead. A typical enterprise Node.js application may have over 1,000 dependencies, once the transitive dependency tree (dependencies of dependencies) is accounted for. This introduces several critical risks:

1. Software Rot and Churn: The JavaScript ecosystem moves at breakneck speed. Libraries fall out of favor, maintainers abandon projects, and breaking changes are frequent. "Software rot" sets in faster in Node.js than perhaps any other ecosystem. A perfectly functioning application today might be unbuildable in 18 months because a deprecated dependency has been removed from the registry or is incompatible with a new Node.js version. This imposes a "Maintenance Tax"—a persistent need for developer hours spent just to keep the build green, without adding any business value.

2. Supply Chain Security: The openness of npm makes it a prime target for bad actors. Unlike the more curated gardens of NuGet or Maven Central, npm has historically had a lower barrier to entry for publishing. In Q1 2025 alone, over 17,000 malicious packages were discovered in open-source repositories, a number that doubled year-over-year. Attacks often involve "typosquatting" (publishing a package whose name closely resembles a popular one, e.g., a near-miss spelling of react-dom) or "repojacking" (taking over abandoned packages to inject malware).

Comparison: In contrast, the Java (Maven) and .NET (NuGet) ecosystems tend to favor larger, more stable standard libraries maintained by foundations (Apache, Eclipse) or corporations (Microsoft). While they are not immune to vulnerabilities (the Log4Shell incident being a prime example), the frequency of risk from "trivial packages"—tiny libraries that do one small thing and introduce a vector for attack—is significantly lower.

The chart above illustrates the overwhelming scale of NPM compared to its counterparts. While this scale represents "choice," it also represents "surface area" for attacks. CTOs must implement rigorous governance: using tools like Snyk or Sonatype Nexus to scan dependencies, locking dependency versions (package-lock.json), and perhaps even running a private npm proxy to vet packages before they enter the corporate environment.
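As one small piece of such governance, a pre-install check can flag package names that are suspiciously close to trusted ones. The sketch below is a simplified illustration, not a replacement for a scanner like Snyk: the `TRUSTED_PACKAGES` allowlist, the `findLookalike` helper, and the distance threshold of 2 are all assumptions chosen for the example.

```typescript
// Classic dynamic-programming Levenshtein edit distance.
function editDistance(a: string, b: string): number {
  let prev = Array.from({ length: b.length + 1 }, (_, j) => j);
  for (let i = 1; i <= a.length; i++) {
    const curr = [i];
    for (let j = 1; j <= b.length; j++) {
      const cost = a[i - 1] === b[j - 1] ? 0 : 1;
      curr[j] = Math.min(prev[j] + 1, curr[j - 1] + 1, prev[j - 1] + cost);
    }
    prev = curr;
  }
  return prev[b.length];
}

// Hypothetical allowlist; a real one would come from your private registry.
const TRUSTED_PACKAGES = ["react", "react-dom", "express", "lodash"];

// Returns the trusted name that `candidate` nearly matches, or null.
function findLookalike(candidate: string, maxDistance = 2): string | null {
  if (TRUSTED_PACKAGES.includes(candidate)) return null;
  for (const trusted of TRUSTED_PACKAGES) {
    if (editDistance(candidate, trusted) <= maxDistance) return trusted;
  }
  return null;
}

console.log(findLookalike("raect-dom")); // flags the near-match to "react-dom"
```

A check like this would typically run in CI against newly added entries in package.json, alongside a committed lockfile and automated vulnerability scanning.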

4. The TypeScript Pivot: Enterprise Safety Net

Early criticisms of Node.js focused on JavaScript's lack of type safety. In a large codebase, JavaScript's dynamic typing and implicit type coercion meant that a variable could change from a string to a number without warning, leading to runtime errors that were difficult to debug. For the enterprise CTO, TypeScript is the answer. It is no longer an optional add-on; it is the industry standard for scalable JavaScript development.

4.1 The Standardization of TypeScript

According to the State of JS 2024 survey, the industry is "firmly in the TypeScript era," with 67% of respondents writing more TypeScript than plain JavaScript. Major tech companies like Airbnb, Slack, and Stripe have migrated millions of lines of code to TypeScript to improve maintainability. It effectively acts as a "compile-time" check for JavaScript, catching errors before the code ever runs.

4.2 Measurable ROI on Maintainability

The return on investment (ROI) for TypeScript is quantifiable and significant. Airbnb's engineering analysis estimated that 38% of bugs in their codebase were preventable with TypeScript.

- Refactoring Confidence: In a large dynamic codebase, refactoring is terrifying. Changing a function signature might break code in a dozen unknown places. Static typing allows IDEs to safely rename variables and refactor complex logic across thousands of files, alerting the developer immediately to any breaks. This capability alone changes the lifecycle of a legacy application from "do not touch" to "maintainable".

- Self-Documentation: Type definitions serve as living documentation. A developer interacting with an API knows exactly what data structure to expect: a User object has an id (string) and email (string), but age is optional. This reduces the need to constantly consult external docs or read source code to understand how to use a function.
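The User shape mentioned above might be written as follows. This is a minimal sketch: the `number` type for `age` and the `describeUser` helper are assumptions added for illustration.

```typescript
// The shape described above: `id` and `email` are required, `age` is optional.
interface User {
  id: string;
  email: string;
  age?: number; // assumed to be a number; the optional marker is the point here
}

function describeUser(user: User): string {
  // Under strict mode the compiler requires the optional field to be checked.
  const agePart = user.age !== undefined ? `, age ${user.age}` : "";
  return `${user.id} <${user.email}>${agePart}`;
}

console.log(describeUser({ id: "u-1", email: "a@example.com" }));
// describeUser({ id: 1, email: "a@example.com" }); // compile error: id must be a string
```

An editor can surface this definition on hover or autocomplete, which is what makes the type act as documentation at the call site.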

Strategic Recommendation: Adopting Node.js in 2026 without TypeScript is professional negligence for any application intended to last more than six months. It bridges the gap between the flexibility of JS and the rigor of C#/Java, providing the safety net required for enterprise-grade software.

5. Scalability and Architecture at Scale

The "single-threaded" limitation of Node.js requires a specific architectural approach to scale. You cannot simply "throw more RAM" at a single Node.js process (Vertical Scaling) and expect it to handle CPU load. Scaling must be horizontal and architectural.

5.1 The Microservices Fit

Node.js is architecturally predisposed to Microservices. Because Node.js services are lightweight and start quickly, they are ideal candidates for containerization (Docker) and orchestration (Kubernetes).

- The Pattern: Instead of a monolithic backend, the application is broken into small, discrete services. A "User Service," "Order Service," and "Notification Service" can all run independently.

- The "Strangler Fig": For legacy modernization, Node.js is often used to build a "Strangler Fig" layer—a new API gateway that sits in front of a legacy Java/monolith system. This gateway handles the modern frontend requirements and gradually replaces backend functionality endpoint by endpoint, allowing for risk-mitigated migration.
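The routing decision at the heart of a Strangler Fig gateway can be sketched as a small pure function. Everything named here is hypothetical: the two base URLs, the `migratedPrefixes` list, and `resolveUpstream` are placeholders, and a real gateway would wrap this decision in an HTTP proxy.

```typescript
// Hypothetical upstream base URLs; replace with real service addresses.
const LEGACY_BASE = "http://legacy.internal:8080";
const MODERN_BASE = "http://node-api.internal:3000";

// Path prefixes that have already been migrated to the new Node.js service.
const migratedPrefixes: string[] = ["/api/orders", "/api/notifications"];

// Match whole path segments so "/api/orders-archive" is not caught by "/api/orders".
function matchesPrefix(path: string, prefix: string): boolean {
  return path === prefix || path.startsWith(prefix + "/");
}

// Decide which backend should serve a request path.
function resolveUpstream(path: string): string {
  const migrated = migratedPrefixes.some((p) => matchesPrefix(path, p));
  return (migrated ? MODERN_BASE : LEGACY_BASE) + path;
}

console.log(resolveUpstream("/api/orders/42"));
console.log(resolveUpstream("/api/users/7"));
```

Migrating another endpoint then amounts to adding one prefix to the list, and rolling back amounts to removing it.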

5.2 Handling CPU-Bound Tasks: The "Offloading" Pattern

When a Node.js application must perform CPU-intensive work (e.g., generating a PDF report, processing data analytics, resizing images), the architecture must offload this work to prevent blocking the event loop for other users.

- Worker Threads: Node.js now supports Worker Threads to execute JavaScript in parallel on separate threads, with the option of sharing memory through SharedArrayBuffer. This helps with medium-load tasks within the same instance.

- Polyglot Microservices: A more robust, enterprise-grade pattern is to use Node.js for the I/O-heavy API layer and offload the heavy computation to a microservice written in a language suited for it, such as Go, Rust, or Python. This "best tool for the job" approach retains the benefits of Node.js for the user-facing responsiveness while mitigating its computational weakness.

The diagram above illustrates this critical pattern. The "Main Thread" (in green) remains free to accept new user requests because the heavy lifting is diverted to the "Offload Path." This ensures that a user trying to log in isn't waiting for another user's PDF to generate.
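A minimal sketch of the Worker Threads variant of this offload path is shown below, using Node's built-in `node:worker_threads` module. The summation loop is a stand-in for real CPU-heavy work, and `sumInWorker` is a hypothetical helper name. The worker body is passed inline with `eval: true` only to keep the example in a single file; a production setup would normally point the worker at its own module.

```typescript
import { Worker } from "node:worker_threads";

// Worker body as plain JavaScript; it runs on a separate thread.
const workerSource = `
  const { parentPort, workerData } = require("node:worker_threads");
  let sum = 0;
  for (let i = 1; i <= workerData.n; i++) sum += i; // stand-in for heavy work
  parentPort.postMessage(sum);
`;

// Runs the computation off the main thread and resolves with the result.
function sumInWorker(n: number): Promise<number> {
  return new Promise((resolve, reject) => {
    const worker = new Worker(workerSource, { eval: true, workerData: { n } });
    worker.once("message", resolve);
    worker.once("error", reject);
    worker.once("exit", (code) => {
      if (code !== 0) reject(new Error(`Worker exited with code ${code}`));
    });
  });
}

// The event loop stays free to serve other requests while the worker runs.
sumInWorker(1_000_000).then((total) => console.log(`sum = ${total}`));
```

Spawning a worker per request has its own cost, so a small reusable pool of workers is a common refinement once this pattern is adopted.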

5.3 Case Studies: Netflix, Uber, and PayPal

The industry's giants provide validation for this model.

- Netflix: Netflix’s transition to Node.js was driven by the need to unify their stack. They achieved a 70% reduction in startup time compared to their previous Java stack. By using Node.js for their "edge" services (the API layer that devices talk to), they empowered frontend engineers to own the data fetching logic, decoupling them from backend teams.

- Uber: Uber adopted Node.js for its massive real-time dispatching system due to its ability to handle asynchronous I/O (thousands of driver pings per second). However, as their systems matured and required more complex computation, they didn't dogmatically stick to one tool. They introduced Go for high-throughput, high-performance services, creating a polyglot ecosystem where Node.js handles the I/O and orchestration, and Go handles the computation.

- PayPal: PayPal reported that their Node.js apps were built twice as fast with fewer people compared to their Java apps, with 33% fewer lines of code and 40% fewer files. This drastic reduction in code volume correlates directly to lower maintenance costs and higher agility.

6. Total Cost of Ownership (TCO) Analysis

When evaluating the financial implications, the experienced executive must look beyond the hourly rate of developers to the total lifecycle cost of the software.

6.1 Development Phase (Node.js Advantage)

Node.js typically wins on Time-to-Market and initial development costs. The abundance of open-source packages and the ability to use the same language on the frontend and backend can reduce initial development time by 30-40% compared to verbose languages like Java. For a custom software project—where time is billed or burn rate is constant—this efficiency translates to significant savings or a richer feature set for the MVP.

6.2 Maintenance Phase (Java/.NET Advantage)

However, the TCO equation shifts over time. The "churn" of the Node ecosystem requires continuous maintenance.

- Dependency Management: A Node.js project requires regular auditing of package.json to fix breaking changes and security vulnerabilities. This is an ongoing operational expense.

- Strictness: Java and .NET’s rigid structures (interfaces, dependency injection containers) enforce a consistency that pays off in years 3-5 of a project’s life. A "loose" Node.js project without TypeScript can become a legacy nightmare that is expensive to refactor.

Baytech Consulting Perspective: We observe that the most successful Node.js projects are those that invest early in "Enterprise Rigor"—TypeScript, automated linting, CI/CD pipelines, and strict code reviews. Without this governance, the initial speed gains are lost to technical debt in the long run.

6.3 Infrastructure Costs

Infrastructure costs for Node.js are typically lower for I/O-bound applications due to its efficient memory model. Hosting a Node.js app on Azure App Service or Azure Container Apps (serverless containers) can be more cost-effective than provisioning large VMs for heavy Java application servers. The lightweight nature of Node.js containers allows for denser packing on Kubernetes nodes, optimizing cloud spend.

7. Industry-Specific Considerations

7.1 Fintech & High-Precision Data

Fintech applications face a unique challenge with JavaScript: math. JavaScript's Number type is a binary floating-point value (IEEE 754 double precision), so many decimal fractions cannot be represented exactly. This leads to infamous results such as 0.1 + 0.2 evaluating to 0.30000000000000004 instead of 0.3.

- The Risk: In a banking ledger or trading application, such precision errors are unacceptable.

- The Solution: Fintech apps using Node.js must strictly use arbitrary-precision libraries like Big.js or Decimal.js for all monetary math. Alternatively, the "polyglot" approach is often favored here: Node.js handles the customer-facing dashboard and API, while a backend service in Java, C#, or Go handles the ledger and transaction processing.
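To make the precision problem concrete without pulling in a dependency, the sketch below swaps in a different technique from the libraries named above: it stores money as integer minor units (cents). The `toCents` and `formatCents` helpers are hypothetical and handle only two-decimal currencies; Big.js or Decimal.js remain the better fit when arbitrary precision or division is needed.

```typescript
// Parse a decimal string such as "19.99" into integer cents.
function toCents(amount: string): number {
  const match = /^(-?)(\d+)(?:\.(\d{1,2}))?$/.exec(amount);
  if (!match) throw new Error(`Invalid amount: ${amount}`);
  const [, sign, whole, fraction = ""] = match;
  const cents = Number(whole) * 100 + Number(fraction.padEnd(2, "0"));
  if (!Number.isSafeInteger(cents)) throw new Error("Amount out of range");
  return sign === "-" ? -cents : cents;
}

// Format integer cents back into a two-decimal string.
function formatCents(cents: number): string {
  const sign = cents < 0 ? "-" : "";
  const abs = Math.abs(cents);
  return `${sign}${Math.floor(abs / 100)}.${String(abs % 100).padStart(2, "0")}`;
}

console.log(0.1 + 0.2 === 0.3); // false: binary floating point cannot hold 0.1 exactly
console.log(formatCents(toCents("0.10") + toCents("0.20"))); // prints 0.30
```

Amounts are parsed from strings rather than from Number literals so that no floating-point value ever enters the calculation.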

7.2 Healthcare & HIPAA

Node.js is fully capable of being HIPAA compliant—compliance is about process and data protection, not the language itself. However, the risk lies in the supply chain.

- The Risk: A healthcare app might pull in a third-party npm package that is not HIPAA compliant or has a vulnerability exposing patient data (PHI).

- The Solution: Strict containment. Healthcare Node.js apps should run in isolated environments (containers) with locked-down dependency trees and automated vulnerability scanning (SCA) tools integrated into the pipeline. Role-Based Access Control (RBAC) must be implemented meticulously, often utilizing middleware like Passport.js but backed by robust identity providers.
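The RBAC requirement above can be sketched as a deny-by-default permission check. The roles, permission strings, and `POLICY` table below are invented for illustration and carry no compliance meaning; in practice the check would sit in middleware behind an identity provider.

```typescript
type Role = "clinician" | "billing" | "admin";
type Permission = "phi:read" | "phi:write" | "invoice:read";

// Hypothetical policy table; real policies come from compliance requirements.
const POLICY: Record<Role, readonly Permission[]> = {
  clinician: ["phi:read", "phi:write"],
  billing: ["invoice:read"],
  admin: ["phi:read", "invoice:read"],
};

// Deny by default: access is granted only when explicitly listed.
function isAllowed(role: Role, permission: Permission): boolean {
  return POLICY[role].includes(permission);
}

console.log(isAllowed("billing", "phi:read")); // false
console.log(isAllowed("clinician", "phi:write")); // true
```

Because roles and permissions are union types, a misspelled role or permission is rejected by the compiler rather than silently denied or granted at runtime.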

8. Conclusion: The Strategic Verdict

The decision to adopt Full-Stack JavaScript is not a binary choice between "good" and "bad"; it is a strategic choice between Velocity and Convention.

Adopt Full-Stack JavaScript (Node.js + React) if:

- Time-to-Market is critical: You need an MVP or a product iteration rapidly.

- Workload is I/O Bound: Your app is heavy on API calls, real-time data, chats, or streaming.

- Team Flexibility is a Goal: You want to break down silos between frontend and backend developers.

- You Commit to TypeScript: You are willing to enforce strict typing and tooling to maintain quality.

Stick to Java/Spring or .NET if:

- Computation is the Core: Your app performs heavy financial modeling, image processing, or AI model inference on the server.

- Stability > Speed: You are building a banking core or a legacy system where stability for 10 years is more important than shipping features next week.

- Regulatory Environment is Extreme: The mature, slower-moving ecosystems of Java/.NET offer a slightly smaller attack surface for supply chain vulnerabilities.

The "Hybrid" Future

For many enterprise CTOs, the answer is not "either/or" but "and." The modern enterprise architecture often features a robust Node.js API Gateway handling user traffic, authentication, and aggregation—delivering the speed and developer experience of the full stack—while orchestrating calls to specific backend microservices (in Java, Go, or Python) that handle the heavy lifting.

This approach leverages the strengths of Baytech’s "Tailored Tech Advantage"—using the right tool for the specific component, rather than forcing a monolith into a single language.

Actionable Next Steps

- Audit Your Workload: Classify your application's tasks. Are they waiting for databases (I/O) or crunching numbers (CPU)? For advanced teams, explore predictive analytics using Predictive AI for smart workload classification.

- Mandate TypeScript: If you choose Node.js, forbid vanilla JavaScript for the backend. The safety net is non-negotiable.

- Secure the Supply Chain: Implement tools like Snyk or Sonatype to scan npm dependencies automatically in your CI/CD pipeline.

- Prototype the Architecture: Before rewriting a monolith, use the "Strangler Fig" pattern to build a Node.js microservice for a single high-traffic feature to test team adaptability—see our detailed analysis on AI-powered process modernization and risk for guidance.

Full-stack JavaScript is a powerful accelerator for the digital business. Managed with discipline, it transforms engineering teams from siloed workers into product creators. Managed poorly, it creates a fragile web of dependencies. The difference lies not in the language, but in the leadership.

Further Reading

- Netflix: Making Netflix.com Faster

- PayPal: Node.js at PayPal

- Uber: https://www.uber.com/blog/tech-stack-part-one-foundation/


About the Author

Bryan Reynolds is an accomplished technology executive with more than 25 years of experience leading innovation in the software industry. As the CEO and founder of Baytech Consulting, he has built a reputation for delivering custom software solutions that help businesses streamline operations, enhance customer experiences, and drive growth.

Bryan’s expertise spans custom software development, cloud infrastructure, artificial intelligence, and strategic business consulting, making him a trusted advisor and thought leader across a wide range of industries.