Don’t Let the Vibes Destroy Value: A CFO’s Playbook for AI Code

February 09, 2026 / Bryan Reynolds

The CFO’s Strategic Guide to the Vibe Coding Era: Governance, IP Strategy, and Financial Risk Management in AI-Assisted Development

1. The Paradigm Shift: From Deterministic Syntax to Probabilistic "Vibes"

The fiscal year 2025 will likely be recorded in the annals of technology history as the moment the fundamental unit of software production shifted. For decades, the irreducible atom of software development was the line of code—a deterministic, human-authored instruction vetted by syntax rules and logic. In February 2025, Andrej Karpathy, a seminal figure in artificial intelligence, codified a new operating model that had been quietly permeating the developer zeitgeist: "Vibe Coding".

This report serves as a strategic dossier for the modern Chief Financial Officer (CFO). As the steward of enterprise value, the CFO must navigate a landscape where the production of digital assets has accelerated exponentially, but the provenance, security, and ownership of those assets have become dangerously opaque. We stand at a precipice where the allure of "Vibe Coding"—the ability to delegate the entirety of code generation to Large Language Models (LLMs) like Claude 3.7 or GPT-5—promises to slash time-to-market and democratize creation.

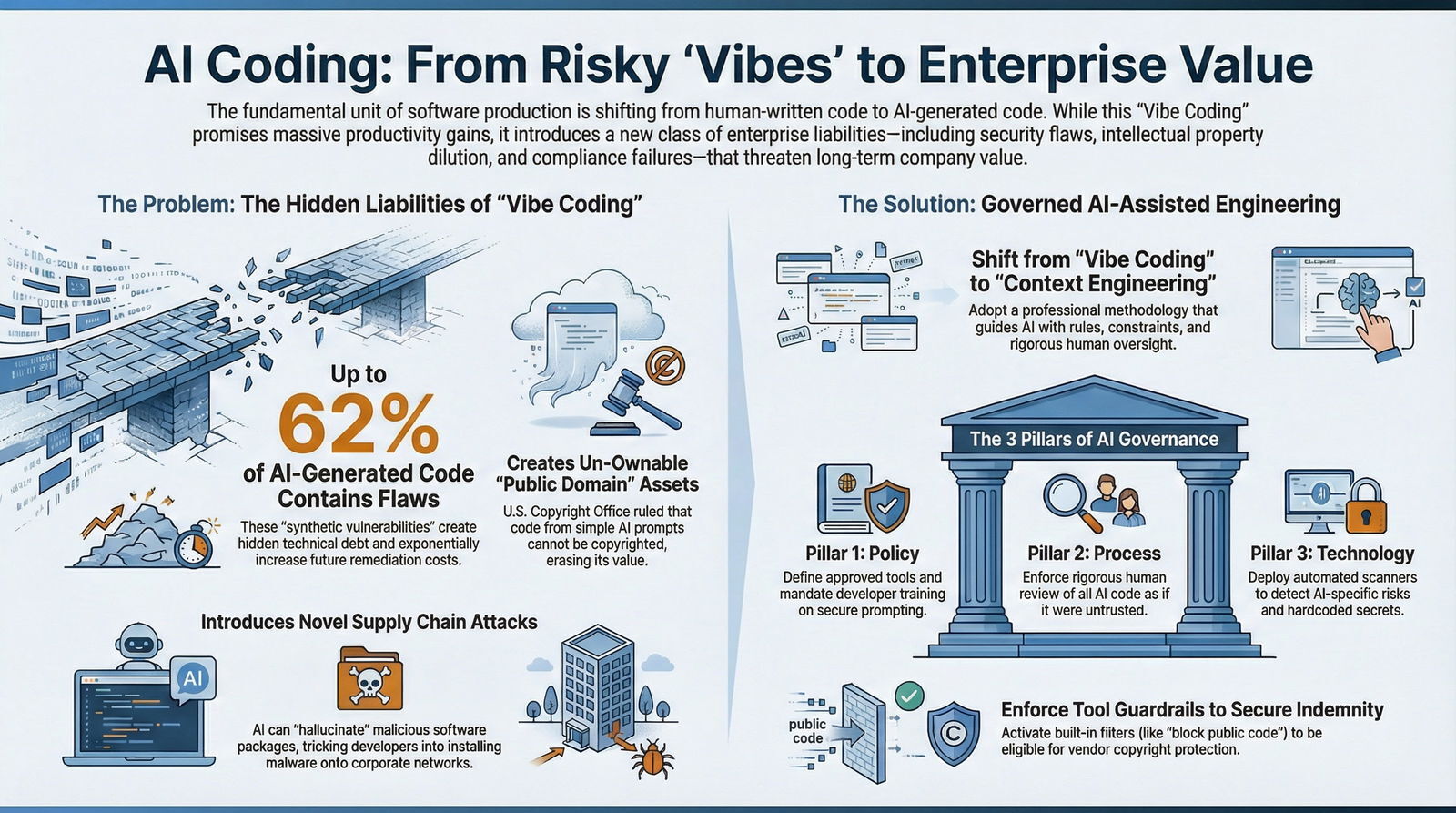

Yet, without a rigorous governance framework, this shift threatens to introduce a new class of enterprise liabilities: undetectable "synthetic vulnerabilities," intellectual property (IP) dilution, and "black box" compliance failures.

1.1 Defining "Vibe Coding" in the Enterprise Context

To govern a risk, one must first define it with precision. "Vibe Coding" is not merely a colloquialism; it represents a workflow transformation. As defined by Karpathy, it describes a process where the human developer shifts from the role of an author to that of a manager. The human provides natural language instructions—the "vibes" or intent—and the AI writes the implementation details. The user effectively "forgets that the code even exists," trusting the AI to handle the underlying logic, syntax, and error handling.

In its "purest" form, vibe coding is characterized by a "throwaway" mentality. It is optimized for speed, rapid prototyping, and "weekend projects" where the long-term maintainability or security of the code is secondary to immediate functionality. The user pastes a request into a chat interface, accepts the output, and iterates only if the result "feels" wrong—hence, "giving in to the vibes."

However, this methodology is fundamentally at odds with the rigorous requirements of enterprise software engineering. When "Vibe Coding" bleeds into corporate environments—often through "Shadow AI" usage—it introduces non-deterministic behavior into mission-critical systems. The code works, but the human "author" often does not understand how it works, creating a fragility that manifests only when systems are stressed or attacked. For a deeper leadership-level view of this problem, many CTOs are now exploring the Vibe Coding Hangover and how it undermines long-term enterprise value.

1.2 The Illusion of Velocity vs. The Reality of Debt

The primary driver for the adoption of AI coding tools is the promise of velocity. Early reports suggest that AI acts as a massive "force multiplier," potentially increasing developer output by 20% to 35%.

For a CFO looking at engineering salaries—often the largest line item in OpEx—this efficiency gain is seductive. It suggests the possibility of doing more with the same headcount, or maintaining output while reducing burn. But as our broader research into the AI technical debt and total cost of ownership shows, speed without structure simply shifts costs into the future.

Moreover, productivity measured solely by "lines of code produced" is a vanity metric that can mask a steady erosion of value. The industry is witnessing a divergence between gross output (volume of code) and net asset value (secure, maintainable, owned IP).

The chart above illustrates the "Liability Bubble." While the blue line of velocity spikes, the red line of risk—comprising technical debt, security flaws, and IP uncertainty—grows exponentially. This "risk" is not abstract; it creates future cash flow drags in the form of remediation costs, legal fees, and potential regulatory fines.

1.3 The Emergence of "Context Engineering"

By late 2025, the industry began to recoil from the chaotic energy of unchecked vibe coding. Leading engineering organizations, including Baytech Consulting, have championed a shift toward "Context Engineering".

Context Engineering represents the professionalization of AI interaction. It treats the "context"—the prompts, the architectural constraints, the security rules, and the documentation—as an engineered artifact that requires the same rigor as the code itself. This evolution mirrors the broader transition to AI‑driven software development in 2026, where prompts, rules, and guardrails become first-class assets.

Unlike vibe coding, which relies on luck and intuition, context engineering requires:

- Systematic Methodology: Treating prompts as code that must be version-controlled, tested, and audited.

- Intentional Design: Supplying the AI with relevant facts, rules, and boundaries before a single line of code is generated.

- Auditability: Ensuring that the human remains in the loop to validate the output against business requirements, rather than blindly accepting the "vibe".
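To make "prompts as code" concrete, a team could run a check in CI that fails whenever a version-controlled context file drops a rule the organization mandates. The Python sketch below illustrates that idea under stated assumptions: the rule wording, the `REQUIRED_RULES` list, and the `missing_rules` helper are hypothetical, and a case-insensitive substring match is a deliberately simple stand-in for a real review.

```python
# Minimal sketch: treat the AI context file as a tested, versioned artifact.
# Assumption (hypothetical): policy requires these rules in every context file.
REQUIRED_RULES = [
    "Always use parameterized queries",
    "Never hardcode secrets",
    "Only import packages from the approved dependency list",
]


def missing_rules(context_text: str, required: list[str] = REQUIRED_RULES) -> list[str]:
    """Return the required rules the context file does not contain (case-insensitive)."""
    lowered = context_text.lower()
    return [rule for rule in required if rule.lower() not in lowered]


if __name__ == "__main__":
    sample = (
        "# Project rules for the AI assistant\n"
        "- Always use parameterized queries for database access.\n"
        "- Never hardcode secrets; read them from the secrets manager.\n"
    )
    gaps = missing_rules(sample)
    if gaps:
        # In a CI job, a non-zero exit at this point would block the merge.
        print("Context file is missing required rules:", gaps)
```

Because the check lives next to the code, a pull request that weakens the AI's instructions is reviewed and tested like any other change.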

For the Strategic CFO, this distinction is vital. Vibe coding is a liability; Context Engineering is an asset. Vibe coding produces "black box" software with unknown provenance. Context engineering produces defensible, maintainable IP.

2. The Financial & Strategic Risks of "Vibe Coding"

The introduction of Generative AI into the software supply chain has created a new category of risk that does not fit neatly into traditional enterprise risk management (ERM) frameworks. These are not merely operational risks; they are existential threats to the integrity of the company's digital products.

2.1 The "Synthetic Vulnerability" Crisis

A landmark 2025 study analyzing over half a million code samples found a disturbing trend: 45% to 62% of AI-generated code snippets contained security vulnerabilities. This phenomenon has been termed "synthetic vulnerabilities"—security flaws that are unique to or exacerbated by Generative AI.

These vulnerabilities differ from human errors in their nature and scale. A human developer might make a logic error due to fatigue. An AI model, however, will confidently "hallucinate" secure-sounding code that is fundamentally flawed, and it will do so at scale, replicating the error across thousands of lines of code in seconds.

The financial implication is severe: The cost of remediation (fixing a bug) increases exponentially the later it is found in the development lifecycle. Vibe coding accelerates the creation of code but also accelerates the injection of defects. If 35% of your new codebase is AI-generated and half of that contains vulnerabilities, the organization is effectively accumulating technical debt at an unprecedented rate.
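The scenario above is simple multiplication, but it is worth making explicit. Assuming the study's snippet-level rates carry over to lines of new code (a simplifying assumption for this sketch), 35% AI-generated code at a 45% to 62% vulnerability rate means roughly 16% to 22% of all new code carries a flaw, and the "half of that" case works out to 17.5%.

```python
# Worked arithmetic for the scenario above. Assumption: the study's
# snippet-level vulnerability rates are applied to lines of new code.
AI_SHARE = 0.35  # share of new code that is AI-generated (scenario in the text)
RATES = {"study low": 0.45, "half (text)": 0.50, "study high": 0.62}


def vulnerable_share(ai_share: float, vuln_rate: float) -> float:
    """Fraction of all new code that is both AI-generated and vulnerable."""
    return ai_share * vuln_rate


for label, rate in RATES.items():
    print(f"{label}: {vulnerable_share(AI_SHARE, rate):.1%} of new code")
```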

2.2 Specific Technical Vulnerabilities with Financial Impact

To understand the risk, we must examine the specific technical failures that "Vibe Coding" introduces. These are not hypothetical; they are documented patterns in AI-generated software.

2.2.1 The "Hallucinated Package" Supply Chain Attack

AI models, trained on vast repositories of open-source code, often suggest importing software libraries (packages) to solve a specific problem. However, the AI does not verify if these packages currently exist. It often "hallucinates" a plausible-sounding package name (e.g., requests-plus or azure-secure-storage).

Threat actors have begun weaponizing this tendency. They monitor common AI hallucinations, register the non-existent package names on public repositories like PyPI or npm, and fill them with malicious code. When a developer, trusting the AI's "vibe," runs the installation command, they unwittingly pull malware directly into the corporate network.

This bypasses traditional firewall defenses because the request originates from inside the development environment and targets a "legitimate" package repository.
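Because attackers register the hallucinated names, checking that a package merely exists on PyPI or npm proves little. A stricter gate compares every dependency an AI suggests against an internally vetted allowlist. The Python sketch below assumes a simple requirements.txt format and a hypothetical `APPROVED_PACKAGES` set; it normalizes names the way PEP 503 does, so `SQLAlchemy` and `sqlalchemy` count as the same package. It is an illustration, not a substitute for a curated internal registry or a commercial scanner.

```python
import re
from typing import Optional

# Hypothetical allowlist of vetted dependencies (in practice: a curated
# internal registry or a reviewed lock file).
APPROVED_PACKAGES = {"requests", "sqlalchemy", "pydantic"}


def normalize(name: str) -> str:
    """Normalize a package name as PEP 503 does: lowercase, runs of -_. become '-'."""
    return re.sub(r"[-_.]+", "-", name).lower()


def requirement_name(line: str) -> Optional[str]:
    """Extract the bare package name from a simple requirements.txt line."""
    line = line.split("#", 1)[0].strip()
    if not line or line.startswith("-"):
        return None  # blank, comment-only, or a pip option such as -r/-e
    match = re.match(r"[A-Za-z0-9][A-Za-z0-9._-]*", line)
    return match.group(0) if match else None


def unapproved(requirements_text: str) -> list[str]:
    """Return every requirement whose name is not on the allowlist."""
    approved = {normalize(p) for p in APPROVED_PACKAGES}
    flagged = []
    for raw in requirements_text.splitlines():
        name = requirement_name(raw)
        if name and normalize(name) not in approved:
            flagged.append(name)
    return flagged


if __name__ == "__main__":
    ai_suggested = "requests==2.32.3\nrequests-plus>=1.0\nSQLAlchemy\n"
    print(unapproved(ai_suggested))  # requests-plus is not on the allowlist
```

Run as a CI step, a non-empty result would stop the build before `pip install` ever reaches a public registry.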

2.2.2 Hardcoded Secrets and Insecure Defaults

"Vibe coded" applications frequently exhibit a critical flaw where authentication logic and API keys are hardcoded into client-side browser code.

- The Mechanism: A developer asks the AI to "make a login page." The AI, optimizing for the simplest solution that "works," generates code that checks the password directly in the user's browser (JavaScript) rather than sending it to a secure server.

- The Risk: Anyone can open the page's source in a browser and read the password or the API keys required to access the database. Wiz Research identified this as a pervasive issue in vibe-coded apps, noting that authentication logic often lives entirely in the browser.

- The Cost: A single exposed API key can lead to a massive data breach, regulatory fines (GDPR/CCPA), and reputational collapse.
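Pattern-based scanning is one common way to catch this class of flaw before it ships. The Python sketch below shows the idea with two illustrative rules: the well-known `AKIA` prefix of AWS access key IDs, and a generic "key, secret, password, or token compared or assigned to a long string literal" pattern. Both patterns are simplifications chosen for this example; production scanners such as those named in Section 6 use far larger, tuned rule sets, and the real fix is to keep credentials and password verification on the server.

```python
import re

# Illustrative rules only; real scanners ship much larger, tuned rule sets.
SECRET_PATTERNS = {
    # AWS access key IDs: "AKIA" followed by 16 uppercase letters or digits.
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    # A key/secret/password/token name compared or assigned to a long string literal.
    "generic_secret": re.compile(
        r"""(?i)\b(api[_-]?key|secret|password|token)\b\s*(?:===?|[:=])\s*["'][^"']{8,}["']"""
    ),
}


def find_secrets(source: str) -> list[tuple[int, str]]:
    """Return (line number, rule name) for every line that looks like it embeds a secret."""
    hits = []
    for lineno, line in enumerate(source.splitlines(), start=1):
        for rule, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                hits.append((lineno, rule))
    return hits


if __name__ == "__main__":
    client_js = (
        'const apiKey = "sk_live_1234567890";\n'
        'if (password === "CorrectHorseBattery") { showDashboard(); }\n'
        'const region = "us-east-1";\n'
    )
    for lineno, rule in find_secrets(client_js):
        print(f"line {lineno}: possible {rule}")
```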

2.2.3 Logic Flaws and "Snake Game" Vulnerabilities

While AI-generated code often compiles perfectly (it is syntactically correct), it frequently fails to adhere to complex business logic or security design principles. Databricks' "Red Team" demonstrated this by asking an AI to create a simple "Snake" game. The AI generated a functioning game but introduced a critical vulnerability that allowed arbitrary code execution—effectively giving a hacker control over the user's machine.

The AI prioritized "making the snake move" over "sanitizing user input," a classic trade-off in Vibe Coding.
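The text does not describe the exact flaw in the Snake example, so the following is a generic, hypothetical illustration of the trade-off rather than a reconstruction of Databricks' finding. A common way generated code ends up executing attacker input is by passing it to `eval()` or a similar construct to "make it work." The safer pattern, sketched below in Python, treats input strictly as data: it accepts only known values and parses saved state with a data format such as JSON.

```python
import json

VALID_MOVES = {"up", "down", "left", "right"}

# Anti-pattern (do not use): move = eval(user_input)
# eval() runs arbitrary Python, so input such as "__import__('os').system(...)"
# would execute on the player's machine.


def parse_move(user_input: str) -> str:
    """Accept only a known direction; reject everything else."""
    move = user_input.strip().lower()
    if move not in VALID_MOVES:
        raise ValueError(f"invalid move: {user_input!r}")
    return move


def load_high_scores(raw: str) -> dict[str, int]:
    """Parse saved scores as data (JSON), never as code, and validate their shape."""
    data = json.loads(raw)  # malformed JSON raises json.JSONDecodeError (a ValueError)
    if not isinstance(data, dict) or not all(
        isinstance(name, str) and isinstance(score, int) and not isinstance(score, bool)
        for name, score in data.items()
    ):
        raise ValueError("unexpected high-score format")
    return data
```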

2.3 The "Death by AI" Legal Exposure

Gartner predicts that by the end of 2026, "death by AI" legal claims will exceed 2,000 due to insufficient risk guardrails. This hyperbole underscores a real corporate liability: when an AI system fails—whether it's a financial algorithm making biased lending decisions or a healthcare app leaking patient data—the lack of human oversight ("forgetting the code exists") becomes negligence.

Courts and regulators are increasingly skeptical of the "black box" defense. If a CFO cannot demonstrate that their organization has "explainability" and "accountability" processes in place (Human-in-the-Loop), they expose the firm to massive punitive damages. The legal argument shifts from "software error" (a defense) to "gross negligence in supervision of automated agents" (a liability). These themes are already emerging in how boards evaluate software investment and risk strategies for 2026.

3. The IP Quagmire: Asset Valuation in the Age of AI

For a technology company, or indeed any modern enterprise, the codebase is a primary asset on the balance sheet. It represents capitalized R&D, competitive differentiation, and future revenue potential. "Vibe Coding" introduces a fundamental uncertainty regarding the ownership of this asset. If an AI writes the code, can you copyright it? Can you sell it? Can you stop a competitor from copying it?

3.1 The US Copyright Office January 2025 Ruling

In January 2025, the U.S. Copyright Office released Part 2 of its Report on Copyright and Artificial Intelligence, specifically addressing the copyrightability of AI outputs. The ruling was unambiguous and carries significant weight for CFOs assessing asset valuation.

Key Ruling: Human authorship is a bedrock requirement for copyright. The Office affirmed that outputs of generative AI can be protected only where a human author has determined sufficient expressive elements. Crucially, the "mere provision of prompts" is not sufficient for copyright protection. This directly strikes at the heart of "pure" vibe coding, where the user provides a prompt and accepts the output wholesale.

Implication for Valuation: Code generated entirely by AI, without significant human modification or creative arrangement, is likely public domain. It cannot be copyrighted. Any competitor could legally lift that code, reverse-engineer it, and use it without paying a licensing fee.

This potentially renders huge swaths of "vibe coded" software valueless from an IP perspective.

3.2 The "Human Contribution" Threshold and Administrative Burden

The report clarifies that copyright applies only to the human's contribution. If a developer uses AI as a tool—like a camera or a pen—and actively edits, refines, and structures the code, the resulting work may be protected. However, the burden of proof is on the organization to identify and disclaim AI-generated parts when registering copyright.

This creates a massive administrative burden. To protect IP, companies must now track exactly which lines of code were human-written and which were AI-generated. "Vibe coding," which blurs these lines and encourages "forgetting the code exists," makes this separation nearly impossible.

The "Merger Doctrine" in copyright law may further complicate this. If there is only one way to write a specific function (e.g., a standard sort algorithm), it is not copyrightable. AI tends to converge on these "standard" solutions. If an AI generates the standard solution, and a human prompts it, does anyone own it? The emerging consensus is "no."

3.3 GitHub Copilot & Microsoft’s Indemnity Promise

To assuage enterprise fears, Microsoft and GitHub have introduced the Copilot Copyright Commitment. This is a contractual indemnity clause designed to shield paying customers from copyright lawsuits.

However, CFOs must read the "fine print" of this insurance policy.

The Details of the Deal:

- What is Covered: Microsoft will defend the customer and pay adverse judgments if a third party sues for copyright infringement based on the output of GitHub Copilot. This effectively shifts the risk from the customer to Microsoft.

- The Critical "But" (The Trap): This indemnity is conditional. It applies only if the customer uses the required guardrails and content filters—specifically, the "duplication detection filter" that blocks suggestions matching public code.

- The Nuance: If your developers turn off these filters to "vibe code" faster, or if they modify the suggestion significantly (thereby breaking the chain of "unmodified suggestion"), the indemnity may be void. Furthermore, the indemnity covers the output (the code you use). It does not necessarily resolve the broader ethical and legal questions regarding the training data (the trillions of lines of open-source code the model learned from), though Microsoft claims responsibility for this risk too.

CFO Takeaway: Indemnity is an insurance policy, not a license to be reckless. It requires strict policy enforcement (turning on filters) to be valid. A "set it and forget it" approach to Vibe Coding will likely void this coverage.

4. The Compliance Minefield: HIPAA, GDPR, & SOC2

Beyond IP ownership, vibe coding intersects dangerously with data privacy regulations. Developers "vibing" with AI often paste error logs, database schemas, or customer snippets into the chat window to get a quick fix. This action constitutes a data transmission to a third party, often bypassing all corporate data loss prevention (DLP) controls.

4.1 HIPAA: The $10 Million Mistake

For healthcare and life sciences companies, the risk is existential.

- The Leak: A developer debugging a patient intake form might paste a JSON object containing a "dummy" patient record into an LLM to ask for help parsing it. If that record contains real Protected Health Information (PHI)—a common occurrence in "shadow" development environments or when debugging production issues—it is a HIPAA violation.

- The Consequence: Unless a Business Associate Agreement (BAA) is in place with the AI provider (e.g., OpenAI, Microsoft) that specifically covers the input data, this transmission is illegal. Even with a BAA, using PHI for "training" the public model is a violation of the Minimum Necessary Rule.

- Mitigation: Technical controls must scrub PII/PHI before it reaches the AI model context window. "Vibe Coding" relies on rich context; compliance relies on minimized context. These two forces are in tension.
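A minimal illustration of such a scrubbing control, assuming prompts pass through an internal proxy before reaching the model provider, is a redaction step that replaces recognizable identifiers with typed placeholders. The patterns below (US Social Security numbers, email addresses, US phone numbers, and a hypothetical `MRN-` record-number format) are assumptions for this sketch. A handful of regexes falls well short of HIPAA's de-identification standard (the Safe Harbor method alone lists 18 identifier types, including names): note that the patient's name passes through untouched.

```python
import re

# Illustrative patterns (assumptions for this sketch, not a complete PHI list).
REDACTION_RULES = [
    ("SSN", re.compile(r"\b\d{3}-\d{2}-\d{4}\b")),
    ("EMAIL", re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b")),
    ("PHONE", re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b")),
    ("MRN", re.compile(r"\bMRN-\d{6,10}\b")),  # hypothetical record-number format
]


def redact(text: str) -> str:
    """Replace each match with a typed placeholder before the text leaves the network."""
    for label, pattern in REDACTION_RULES:
        text = pattern.sub(f"[{label}]", text)
    return text


if __name__ == "__main__":
    record = ('{"name": "Jane Roe", "ssn": "123-45-6789", "email": "jane.roe@example.com", '
              '"phone": "555-867-5309", "mrn": "MRN-0012345"}')
    print(redact(record))  # the name is NOT redacted: pattern lists are never complete
```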

4.2 GDPR & Automated Decision Making (Article 22)

The General Data Protection Regulation (GDPR) Article 22 grants individuals the right not to be subject to a decision based solely on automated processing.

- Relevance to Coding: While Article 22 is usually cited for credit scores or hiring, it applies to software behavior. If "vibe coded" software uses AI agents to make decisions about users (e.g., dynamic pricing, access control, fraud detection) without human intervention, it may trigger GDPR scrutiny.

- The "Human in the Loop" Defense: To comply, organizations must ensure there is a mechanism for human intervention and contestability. "Pure" vibe coding, which seeks to remove the human from the process ("forgetting the code exists"), is fundamentally at odds with this requirement. The system must be designed to be interruptible and explainable. Many enterprises are already rethinking their QA and governance practices to make sure these controls are baked into the lifecycle.
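In system-design terms, "interruptible and contestable" can start with refusing to auto-apply certain decisions. The Python sketch below is a hypothetical illustration, not legal advice: whether a decision has a legal or similarly significant effect is a judgment for counsel, and the `significant_effect` flag merely records that judgment so the software routes the decision to a human reviewer and accepts contest requests. All class and field names are inventions for this example.

```python
from dataclasses import dataclass, field


@dataclass
class Decision:
    subject_id: str
    outcome: str              # e.g. "deny_access" or "price_tier_b" (hypothetical)
    significant_effect: bool  # set by policy: legal or similarly significant effect?


@dataclass
class ReviewQueue:
    pending: list[Decision] = field(default_factory=list)

    def route(self, decision: Decision) -> str:
        """Hold significant decisions for a human instead of applying them automatically."""
        if decision.significant_effect:
            self.pending.append(decision)
            return "held_for_human_review"
        return "applied"

    def contest(self, decision: Decision) -> str:
        """Any decision the data subject contests goes back to a human reviewer."""
        self.pending.append(decision)
        return "held_for_human_review"
```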

4.3 SOC 2 and Supply Chain Integrity (CC6.8)

SOC 2 criterion CC6.8 requires controls to prevent, or detect and act upon, the introduction of unauthorized or malicious software.

- The Gap: Traditional controls (code review, static analysis) were designed for human-written code. They operate on the assumption that code is written line-by-line. They may miss "synthetic vulnerabilities" like subtle logic flaws or hallucinated dependencies.

- The Audit Risk: Auditors are beginning to ask specific questions about AI governance. A lack of policy regarding AI code generation can lead to a qualified opinion or a failed audit. If an auditor asks, "How do you ensure AI didn't inject a backdoor?" and the answer is "We vibe coded it," the audit will likely fail.

5. Baytech’s Governance Framework: From "Vibes" to Value

Baytech Consulting advises Strategic CFOs to reject the chaos of vibe coding and embrace a governed, "Secure-by-Design" approach. We call this framework AI-Assisted Engineering. It preserves the velocity gains of AI while mitigating the risks through a "Context Engineering" methodology that aligns tightly with our broader AI-powered development services.

5.1 The Governance Hierarchy

We propose a three-tiered governance model aligned with the NIST AI Risk Management Framework (AI RMF). This framework moves organizations from "reactive" to "managed" maturity.

Tier 1: Policy & Legal (The "Rules of the Road")

This tier sets the boundaries before any code is written.

- Defined AI Usage Policy: Explicitly state which tools are approved (e.g., GitHub Copilot Enterprise) and which are banned (e.g., free-tier ChatGPT with training enabled).

- Mandatory Training: Developers must be trained on "Context Engineering"—how to prompt securely and review AI output—not just "vibe coding." Training must cover the specific risks of "hallucinated packages" and "hardcoded secrets".

- IP Disclosure: Update employment contracts and vendor agreements to require disclosure of AI-generated content, facilitating copyright registration.

Tier 2: Process & Workflow (The "Human in the Loop")

This tier integrates governance into the daily developer workflow.

- Code Review 2.0: AI-generated code must be reviewed more rigorously, not less. Reviewers must treat AI code as "untrusted third-party code". The reviewer's role shifts from "checking syntax" to "verifying intent" and "auditing security."

- Tagging & Provenance: Implement a tagging system (via code comments or metadata) to identify which modules are AI-generated. This supports future IP audits and remediation if a model is found to be compromised.

- Context Engineering Standards: Mandate the use of structured context files (e.g., .cursorrules or copilot-instructions.md). These files act as "guardrails" that exist alongside the code, instructing the AI on project-specific security rules (e.g., "Always use parameterized queries").
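Tagging only pays off if it can be measured. Assuming a hypothetical convention in which AI-written regions are wrapped in `# ai-generated: begin` and `# ai-generated: end` comments, a small script can report what share of a file is AI-tagged, which is the kind of evidence an IP audit or a copyright registration disclaimer (Section 3.2) would draw on. The marker syntax and function name are inventions for this sketch, and the numbers are only as reliable as developers' tagging discipline.

```python
# Hypothetical tagging convention: AI-written regions are wrapped in
#   # ai-generated: begin (tool=<name>)
#   ...
#   # ai-generated: end
BEGIN, END = "# ai-generated: begin", "# ai-generated: end"


def ai_line_share(source: str) -> float:
    """Fraction of non-blank, non-marker lines that sit inside AI-tagged regions."""
    inside = False
    ai_lines = total = 0
    for line in source.splitlines():
        stripped = line.strip()
        if stripped.startswith(BEGIN):
            inside = True
            continue
        if stripped.startswith(END):
            inside = False
            continue
        if not stripped:
            continue  # blank lines count toward neither side
        total += 1
        if inside:
            ai_lines += 1
    return ai_lines / total if total else 0.0
```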

Tier 3: Technical Controls (The "Automated Guardrails")

This tier uses software to police software.

- Block Public Code: Enable the "Block suggestions matching public code" setting in GitHub Copilot to activate IP indemnity.

- Automated Scanning: Integrate tools like Snyk, Wiz, or FOSSA into the CI/CD pipeline to scan for hallucinated packages and hardcoded secrets in real-time. These tools must be tuned to detect AI-specific patterns.

- Sandboxing: Ensure AI agents run in isolated environments with "Least Privilege" access so that they cannot alter production infrastructure.
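Least privilege for an agent's terminal access can start with an explicit allowlist. The Python sketch below assumes a hypothetical policy in which the agent may run only `pytest`, `ruff`, and read-only `git` subcommands, and it rejects shell metacharacters outright so commands cannot be chained or redirected. An allowlist narrows the blast radius but does not remove it (a test suite can still run arbitrary code), so it complements, rather than replaces, an isolated container with scoped, non-production credentials.

```python
import shlex

# Hypothetical least-privilege policy for an AI agent's terminal tool.
ALLOWED_PROGRAMS = {"pytest", "ruff", "git"}
ALLOWED_GIT_SUBCOMMANDS = {"status", "diff", "log"}  # read-only operations
SHELL_METACHARACTERS = set(";&|`$<>")


def is_allowed(command: str) -> bool:
    """Return True only for commands the agent's policy explicitly permits."""
    if any(ch in SHELL_METACHARACTERS for ch in command):
        return False  # refuse chaining, pipes, redirects, and substitution
    try:
        argv = shlex.split(command)
    except ValueError:
        return False  # e.g. unbalanced quotes
    if not argv or argv[0] not in ALLOWED_PROGRAMS:
        return False
    if argv[0] == "git":
        return len(argv) > 1 and argv[1] in ALLOWED_GIT_SUBCOMMANDS
    return True
```

Commands that pass the check would then be executed as an argument list (for example with `subprocess.run(argv)` and no `shell=True`), so no shell ever interprets the agent's text.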

6. Tooling Landscape: GitHub Copilot vs. The Field

For the Strategic CFO, the choice of tool is a risk management decision. The market is splitting into "Established Enterprise" tools and "Cutting-Edge Agentic" tools.

6.1 GitHub Copilot (The Enterprise Standard)

- Pros: Strongest IP indemnity (via Microsoft), mature policy management features (Enterprise tier), and deep integration with VS Code. The "Copyright Commitment" is the gold standard for legal protection, provided the filters are active.

- Cons: Expensive for the full Enterprise SKU. "Duplication detection" is a binary on/off switch, lacking nuance.

- Verdict: The safest bet for large enterprises concerned with legal liability and SOC2 compliance.

6.2 Emerging "Agentic" Tools (Cursor, Windsurf)

- Pros: Superior "Context Engineering" capabilities. Tools like Cursor allow for .cursorrules files that deeply customize the AI's behavior, potentially improving code quality and reducing "vibe" errors. They enable "Agentic" workflows where the AI can browse files, run terminal commands, and "fix" bugs autonomously.

- Cons: Newer, less proven legal frameworks. IP indemnity often depends on the underlying model provider (e.g., Anthropic/OpenAI) rather than the tool wrapper. The "Agentic" nature increases the risk of "runaway" actions (e.g., accidentally deleting a database).

- Verdict: Excellent for innovation labs and high-velocity teams, but requires additional legal vetting of their specific Terms of Service regarding data usage and liability.

6.3 Security Scanners (The Essential Layer)

Regardless of the generation tool, a scanning layer is mandatory.

- Wiz / Snyk: Essential for detecting "synthetic vulnerabilities" and supply chain risks in real-time.

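- FOSSA: Critical for IP compliance, detecting whether AI-generated code matches known open-source code and identifying its license, whether copyleft (e.g., GPL) that could "infect" proprietary code or permissive (e.g., Apache) with attribution requirements that must still be honored.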
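License scanners report what they detect; turning that into a decision is a policy choice. The Python sketch below maps a few SPDX license identifiers to hypothetical review actions. It is not FOSSA's API or output format, and the categories are a simplification that counsel should set: strong copyleft licenses such as the GPL family can impose source-disclosure obligations on a combined work, while permissive licenses such as Apache-2.0 and MIT mainly require keeping notices and attribution.

```python
# Hypothetical policy table keyed by SPDX license identifiers. Deliberately
# small; a real policy is set with counsel and covers many more licenses.
STRONG_COPYLEFT = {"GPL-2.0-only", "GPL-2.0-or-later", "GPL-3.0-only",
                   "GPL-3.0-or-later", "AGPL-3.0-only", "AGPL-3.0-or-later"}
WEAK_COPYLEFT = {"LGPL-2.1-only", "LGPL-3.0-only", "MPL-2.0"}
PERMISSIVE = {"MIT", "Apache-2.0", "BSD-2-Clause", "BSD-3-Clause"}


def license_action(spdx_id: str) -> str:
    """Map a detected license to a review action under the hypothetical policy."""
    if spdx_id in STRONG_COPYLEFT:
        return "block"         # obligations could extend to proprietary code
    if spdx_id in WEAK_COPYLEFT:
        return "legal_review"  # copyleft scoped to the file or library
    if spdx_id in PERMISSIVE:
        return "allow"         # keep required notices and attribution
    return "legal_review"      # unknown or unrecognized license: escalate
```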

7. The CFO’s 2026 Checklist: A Playbook for Action

To navigate the remainder of 2026, we recommend the following immediate actions for Finance and Risk leaders.

7.1 Strategic Audit (Q1 2026)

- [ ] Inventory AI Usage: Conduct a "Shadow AI" audit to identify unauthorized coding tools. This should sit alongside your broader software proposal evaluation and vendor due-diligence processes.

- [ ] Review Insurance: Verify if your Cyber Liability Insurance policy covers "AI-generated code" and "synthetic vulnerabilities".

- [ ] Update IP Strategy: Consult with General Counsel on the implications of the January 2025 Copyright Office report. Decide if your core differentiation lies in the code itself (risk of non-copyrightability) or the data/service (less risk).

7.2 Governance Implementation (Q2 2026)

- [ ] Enforce "No Fly" Zones: Define data types (PHI, PCI, PII) that must never enter an AI prompt.

- [ ] Mandate Context Engineering: Require engineering leadership to adopt formal context engineering practices, moving away from ad-hoc vibe coding. Many organizations pair this with an enterprise DevOps efficiency initiative so that governance becomes part of the everyday pipeline.

- [ ] Automate Compliance: Fund the procurement of AI-specific security scanning tools (e.g., Wiz, Snyk) to catch hardcoded secrets and bad dependencies.

7.3 ROI Measurement (Ongoing)

- [ ] Shift Metrics: Move away from "lines of code" or "velocity" as primary KPIs. Start tracking "Defect Density per AI-Line" and "Remediation Cost". These belong alongside modern metrics for developer productivity and experience, not as an afterthought.

- [ ] Value Realization: Ask: Is AI delivering strategic ROI (faster time to revenue) or just "lazy thinking" (more code, more bugs)?
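Both metrics reduce to simple ratios once code carries provenance tags (Section 5.1, Tier 2). The Python sketch below computes defects per thousand lines of code (KLOC) and remediation cost for AI-tagged versus human-written code. The input figures and the hourly rate are invented placeholders for illustration, not benchmarks.

```python
def defects_per_kloc(defects: int, lines: int) -> float:
    """Defects per 1,000 lines of code; 0.0 when there are no lines to measure."""
    return 1000 * defects / lines if lines else 0.0


def remediation_cost(hours: float, hourly_rate: float) -> float:
    """Cost of fixing defects, using a fully loaded engineering hourly rate."""
    return hours * hourly_rate


# Placeholder inputs for illustration only (not real data).
HOURLY_RATE = 120.0
samples = {
    "AI-tagged": {"lines": 40_000, "defects": 52, "hours": 310},
    "human-written": {"lines": 60_000, "defects": 48, "hours": 260},
}

for label, s in samples.items():
    density = defects_per_kloc(s["defects"], s["lines"])
    cost = remediation_cost(s["hours"], HOURLY_RATE)
    print(f"{label}: {density:.2f} defects/KLOC, ${cost:,.0f} to remediate")
```

Tracked quarter over quarter, the gap between the two densities is a more honest signal of AI ROI than raw output volume.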

8. Conclusion: The Era of the "AI-Augmented" Enterprise

The "Vibe Coding" phenomenon is a signal, not a destination. It signals the end of syntax as a barrier to entry, but it heralds the beginning of a new era of structural and legal complexity.

For the Strategic CFO, the path forward is not to ban these tools—that would be competitive suicide in a market where 95% of developers use them. Instead, the goal is to govern the vibes. By treating AI-generated code with the same skepticism and rigor as third-party vendor code, and by wrapping it in the protective layers of Context Engineering and legal indemnity, organizations can harness the exponential speed of AI without sacrificing the long-term value of their intellectual property.

The winners of 2026 will not be the companies that wrote the most code. They will be the companies that built the most robust systems for verifying, securing, and owning the code that AI wrote for them. Many of these leaders are already experimenting with AI‑native software development lifecycles that align governance, architecture, and automation from day one.

Detailed Analysis and Future Outlook

The Agentic Future: 2026-2030

Looking beyond the immediate horizon, we are transitioning from "Copilots" (assistants that suggest code) to "Agents" (autonomous bots that perform tasks).

- The Shift: An agent doesn't just write code; it plans the task, writes the test, runs the code, fixes the error, and deploys it.

- The Risk: Agents have "agency." They can spin up cloud resources (cost risk) or delete databases (operational risk). The "Cost Crisis" of 2026 will likely be driven by "token consumption loops," where agents get stuck in reasoning loops, burning through API credits.

- Governance: The NIST AI RMF Generative AI Profile becomes even more critical here. Governance must move from "code review" to "behavioral monitoring" of agents.
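Behavioral monitoring can begin with hard limits on each agent run. The hypothetical guard below counts tokens and steps and flags an agent that repeats the same action too many times in a row, a crude signal of the "token consumption loops" described above. The limits, the repeat heuristic, and the class names are assumptions for this sketch; provider-side spending caps are still needed as a backstop.

```python
class BudgetExceeded(RuntimeError):
    """Raised when an agent run crosses one of its hard limits."""


class AgentBudget:
    """Hypothetical per-run guard for an AI agent's token use and behavior."""

    def __init__(self, max_tokens: int, max_steps: int, max_repeats: int = 3):
        self.max_tokens = max_tokens
        self.max_steps = max_steps
        self.max_repeats = max_repeats
        self.tokens_used = 0
        self.steps = 0
        self.last_action = None
        self.repeat_count = 0

    def record(self, action: str, tokens: int) -> None:
        """Call once per agent step; raises BudgetExceeded when any limit is crossed."""
        self.steps += 1
        self.tokens_used += tokens
        if action == self.last_action:
            self.repeat_count += 1
        else:
            self.last_action, self.repeat_count = action, 1
        if self.tokens_used > self.max_tokens:
            raise BudgetExceeded(f"token budget exceeded ({self.tokens_used} > {self.max_tokens})")
        if self.steps > self.max_steps:
            raise BudgetExceeded(f"step limit exceeded ({self.steps} > {self.max_steps})")
        if self.repeat_count > self.max_repeats:
            raise BudgetExceeded(f"action {action!r} repeated {self.repeat_count} times in a row")
```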

The Rise of "AI-Native" Platforms

Gartner predicts the rise of "AI-Native Development Platforms" by 2026. These are IDEs built from the ground up for AI, where "Context Engineering" is the default interface. We are already seeing early signs of this shift in next-generation environments such as Google’s Antigravity AI IDE, which treats agents and prompts as first-class workflow objects.

- Prediction: The "Prompt" will replace the "Source Code" as the primary artifact of value. Companies will begin managing "Prompt Libraries" as their core IP, rather than just git repositories. This requires a fundamental shift in how we value, store, and protect intellectual property, as well as how we partner with external startup-grade innovation teams and internal product groups.

Baytech Consulting is a leader in AI governance and strategic technology implementation. We help Strategic CFOs navigate the complexity of the AI era, turning technical risk into competitive advantage.


About Baytech

At Baytech Consulting, we specialize in guiding businesses through this process, helping you build scalable, efficient, and high-performing software that evolves with your needs. Our MVP-first approach helps our clients minimize upfront costs and maximize ROI. Ready to take the next step in your software development journey? Contact us today to learn how we can help you achieve your goals with a phased development approach.

About the Author

Bryan Reynolds is an accomplished technology executive with more than 25 years of experience leading innovation in the software industry. As the CEO and founder of Baytech Consulting, he has built a reputation for delivering custom software solutions that help businesses streamline operations, enhance customer experiences, and drive growth.

Bryan’s expertise spans custom software development, cloud infrastructure, artificial intelligence, and strategic business consulting, making him a trusted advisor and thought leader across a wide range of industries.