How Grok Code Fast 1 Is Changing AI-Powered Software Development

August 28, 2025 / Bryan Reynolds

The artificial intelligence landscape is no longer a distant frontier; it is a fiercely competitive arena where tech giants are locked in a relentless arms race. The battle has moved beyond general-purpose chatbots and into the realm of highly specialized, industry-disrupting tools. Nowhere is this transformation more profound than in software development, a sector being fundamentally reshaped by AI's potential to automate, accelerate, and innovate. In this dynamic environment, Elon Musk's xAI has made a decisive move, introducing Grok Code Fast 1. This is not merely another entry in a crowded field; it is a strategic play designed to challenge the market on three critical fronts: unprecedented speed, massive scale, and disruptive economics.

For business leaders and technology executives, the emergence of such a tool raises immediate and pressing questions. Is this a fleeting trend or a foundational shift in how software is built? What is the tangible business case for adopting yet another AI model? And how does it stack up against the established offerings from OpenAI, Anthropic, and Google? This executive briefing is designed to answer these critical questions, providing a clear analysis of what Grok Code Fast 1 is, why it represents a significant strategic opportunity, and how your organization can intelligently integrate it to build a durable competitive advantage.

What Exactly is xAI's Grok Code Fast 1?

To understand the strategic importance of Grok Code Fast 1, it's essential to look beyond the surface-level function of code completion. This model represents a significant step toward a new paradigm of AI assistance, one built around the concept of agentic workflows. This is a move away from passive tools that simply suggest the next line of code and toward more autonomous assistants capable of understanding and executing complex, multi-step development tasks. For an executive, this is the most crucial concept to grasp: the goal is no longer just to write code faster, but to automate entire segments of the development process.

This capability is built on a foundation of powerful and intentionally designed technical specifications. By translating these specifications into their business implications, we can see the clear value proposition xAI is bringing to the market.

Architecture: Cost-Efficient Power Through Specialization

Grok Code Fast 1 is built on a 314-billion-parameter Mixture-of-Experts (MoE) architecture. Unlike a traditional monolithic model, which can be thought of as a single, massive brain that must be fully engaged for every query, an MoE architecture functions more like a team of specialized consultants. When a coding task is submitted, the model routes the request to the most relevant "experts" within its network, activating only a fraction of its total parameters to generate a response.

For a business, this architectural choice has two profound benefits. First, it leads to higher-quality, more specialized outputs because the task is handled by the part of the model best trained for it. Second, it's vastly more computationally efficient. By activating only a portion of the model, xAI can deliver immense power at a lower operational cost—a saving that is passed directly to the customer through its aggressive pricing model.
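The routing idea described above can be sketched in a few lines of Python. This is a minimal illustration of top-k expert gating in general, not xAI's implementation: the eight toy experts, the gate scores, and the choice of k = 2 are all assumptions made for the example.

```python
import math

def softmax(scores):
    """Turn raw gate scores into probabilities (numerically stable)."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_top_k(gate_scores, k=2):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    probs = softmax(gate_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    # Renormalize so the selected experts' weights sum to 1.
    return [(i, probs[i] / norm) for i in top]

def moe_forward(x, experts, gate_scores, k=2):
    """Run only the selected experts and mix their outputs by router weight."""
    return sum(w * experts[i](x) for i, w in route_top_k(gate_scores, k))

# Eight toy "experts" (hypothetical); each just scales its input.
experts = [lambda x, f=f: f * x for f in range(1, 9)]
# Hypothetical router scores for one input.
gate_scores = [0.1, 2.0, 0.3, 1.5, 0.0, -1.0, 0.2, 0.4]

selected = route_top_k(gate_scores, k=2)
active_fraction = len(selected) / len(experts)  # share of experts that ran
```

In this toy setup only two of the eight experts do any work for a given input, which is the source of the compute savings the section describes. Real MoE layers route per token inside the network and learn the gate scores during training.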

Context Window: Project-Wide Awareness in a Single Pass

The model features a massive 256,000-token context window. In practical terms, this is the model's "working memory." A context window of this size is equivalent to hundreds of pages of technical documentation or tens of thousands of lines of code. This allows the AI to ingest and comprehend an entire codebase, a complex project file, or extensive API documentation in a single pass.

The strategic business advantage is a dramatic reduction in context-related errors. Many AI coding assistants struggle when they lack the full picture, generating code that is syntactically correct but functionally flawed because it conflicts with another part of the application. With its expansive memory, Grok Code Fast 1 can generate solutions with a holistic understanding of the project's architecture, dependencies, and constraints, leading to higher-quality first-draft code and reducing the time developers spend on debugging and rework.
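A rough way to check whether a given codebase fits in that window is sketched below. Only the 256,000-token figure comes from the article; the tokens-per-line average and the output reserve are assumptions for illustration, and real token counts depend on the tokenizer and the code itself.

```python
CONTEXT_WINDOW_TOKENS = 256_000  # figure quoted in the article
ASSUMED_TOKENS_PER_LINE = 10     # assumption: rough average for source code
ASSUMED_OUTPUT_RESERVE = 16_000  # assumption: tokens held back for the reply

def estimate_tokens(line_count, tokens_per_line=ASSUMED_TOKENS_PER_LINE):
    """Estimate how many tokens a codebase of line_count lines occupies."""
    return line_count * tokens_per_line

def fits_in_context(line_count, reserve=ASSUMED_OUTPUT_RESERVE):
    """True if the code plus the reserved reply budget fits in one window."""
    return estimate_tokens(line_count) + reserve <= CONTEXT_WINDOW_TOKENS

def max_lines(reserve=ASSUMED_OUTPUT_RESERVE,
              tokens_per_line=ASSUMED_TOKENS_PER_LINE):
    """Largest line count that still leaves room for the reply."""
    return (CONTEXT_WINDOW_TOKENS - reserve) // tokens_per_line
```

Under these assumptions a 20,000-line project fits in a single pass and a 30,000-line project does not, which is consistent with the "tens of thousands of lines" estimate above.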

Speed: The Engine of Development Velocity

Grok Code Fast 1 operates at an impressive processing speed of up to 92 tokens per second, making it one of the fastest coding models currently available. This raw speed translates directly into a more fluid and uninterrupted workflow for developers. The near-instantaneous feedback loop accelerates the iterative cycle of writing, testing, and refining code. For the business, this is a direct lever on project timelines. Faster iteration means faster prototyping, faster feature development, and ultimately, a faster time-to-market for new products and services.
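To put the throughput figure in concrete terms, the arithmetic can be sketched as follows. The 92 tokens-per-second rate is the peak figure quoted above; the response sizes are hypothetical, and the estimate ignores network latency and time to first token.

```python
TOKENS_PER_SECOND = 92  # peak figure quoted in the article

def generation_seconds(output_tokens, tokens_per_second=TOKENS_PER_SECOND):
    """Time to stream a response of output_tokens at a steady rate."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return output_tokens / tokens_per_second

# Hypothetical response sizes, chosen only for illustration.
examples = [("small function", 150), ("unit-test file", 600), ("large module", 2_000)]
for label, tokens in examples:
    print(f"{label}: ~{generation_seconds(tokens):.1f} s")
```

Even the largest of these hypothetical responses completes in well under half a minute at the quoted rate, which is what keeps the write-test-refine loop short.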

Reasoning Traces: Building Enterprise Trust Through Transparency

Perhaps one of its most strategically important features is the model's ability to output "visible reasoning traces." This directly confronts the "black box" problem that makes many enterprises hesitant to adopt AI for mission-critical tasks. The model does not just deliver a block of code; it can also articulate the step-by-step logic it followed to arrive at that solution.

This transparency is far more than a convenience for developers. For an enterprise, it's a critical enabler of trust, governance, and auditability. In regulated industries such as finance or healthcare, the ability to understand why an AI made a particular coding decision is non-negotiable. These reasoning traces provide an auditable trail that can be reviewed by senior engineers and compliance officers alike. This feature de-risks the adoption of AI in development workflows, making it a more palatable and defensible choice for organizations where security and accountability are paramount. It transforms the AI from an opaque oracle into a transparent and steerable partner.

Why Should My Business Care About This New Model? The Business Case for Speed and Economy

While the technical specifications are impressive, the decision to invest in a new technology ultimately rests on its ability to deliver tangible business value. The case for Grok Code Fast 1 is built on three powerful pillars: accelerating business velocity, unlocking the productivity of high-value talent, and introducing a disruptive economic model that changes the calculus of AI adoption.

Pillar 1: Radically Accelerating Your Time-to-Market

The direct connection between development speed and business success is undeniable. By integrating AI into the end-to-end software product development life cycle (PDLC), organizations can significantly shorten the journey from initial strategy to final deployment. Grok Code Fast 1, with its combination of high-speed generation and a large context window, is purpose-built to act as a powerful accelerator.

Its practical applications directly target the most time-consuming parts of the development process. Teams can leverage the model for rapid prototyping of new ideas, instantly generating the foundational boilerplate code for new features, and automating the creation of essential but tedious unit tests. Each of these use cases shaves valuable hours and days off the development cycle, allowing teams to conduct market testing faster, respond more swiftly to user feedback, and ultimately deliver value to customers sooner.

Pillar 2: Unlocking Senior Developer Productivity and Innovation

The most significant return on investment from AI coding assistants comes not from replacing developers, but from augmenting them. Your senior engineers are your most valuable and expensive technical resource. Every hour they spend on routine, repetitive tasks—such as writing boilerplate code, debugging simple errors, or generating documentation—is an hour not spent on the complex, high-value work that drives innovation, such as system architecture, strategic problem-solving, and mentoring junior talent.

Grok Code Fast 1 acts as a force multiplier for your entire engineering organization. It empowers junior developers by providing them with an expert pair programmer, helping them learn best practices and overcome common hurdles. Simultaneously, it offloads the mundane tasks from senior developers, freeing their cognitive capacity to focus on the challenges that require human creativity and deep experience. This aligns with the modern view of AI's role in the workforce: it's not about eliminating jobs but about elevating them, making every member of the team more productive and strategically focused. If you're looking to dive deeper into building an agile, high-performing engineering culture, the right AI approach can be a game changer.

Pillar 3: A Disruptive Economic Model for AI-Powered Development

With pricing set at an exceptionally competitive $0.20 per million input tokens and $1.50 per million output tokens, xAI has made a clear strategic statement. This pricing is not just incrementally lower than its competitors; it is an order of magnitude cheaper than some of the premium, high-reasoning models on the market. This aggressive economic model is perhaps the most disruptive aspect of Grok Code Fast 1's entry.

This pricing strategy signals a maturation of the AI coding assistant market. For years, businesses have been faced with a choice: either use a powerful but expensive frontier model for all tasks or forgo the benefits of AI. Grok Code Fast 1 introduces a viable, cost-effective "utility" tier. It challenges the assumption that a single, top-of-the-line AI "sledgehammer" is needed for every nail.

This enables a far more sophisticated and cost-efficient AI strategy for businesses. Organizations can now adopt a portfolio approach, using a fast, economical model like Grok Code Fast 1 for the vast majority (perhaps 80%) of routine, day-to-day coding tasks. The expensive, high-reasoning models from providers like Anthropic or OpenAI can then be reserved for the 20% of tasks that are architecturally complex or mission-critical, where their advanced capabilities justify the premium cost. This tiered strategy allows businesses to maximize the productivity benefits of AI across the entire development workflow while maintaining strict control over costs.
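The economics of the portfolio approach can be estimated with a weighted average. The sketch below uses the blended per-million-token figures from the comparison matrix in this article (about $0.85 for Grok Code Fast 1 and about $45 for the most expensive premium option listed); it assumes the 80/20 split is measured in token volume rather than task count, which is a simplification.

```python
# Blended $ per 1M tokens, taken from the article's comparison matrix.
FAST_RATE = 0.85      # Grok Code Fast 1
PREMIUM_RATE = 45.00  # most expensive premium option listed in the matrix

def portfolio_rate(fast_share, fast_rate=FAST_RATE, premium_rate=PREMIUM_RATE):
    """Average $ per 1M tokens when fast_share of token volume uses the fast tier."""
    if not 0.0 <= fast_share <= 1.0:
        raise ValueError("fast_share must be between 0 and 1")
    return fast_share * fast_rate + (1.0 - fast_share) * premium_rate

mixed = portfolio_rate(0.80)          # 80% fast tier, 20% premium tier
all_premium = portfolio_rate(0.0)     # everything on the premium tier
savings = 1.0 - mixed / all_premium   # fraction saved versus all-premium

print(f"mixed: ${mixed:.2f}/1M, all premium: ${all_premium:.2f}/1M, savings: {savings:.0%}")
```

With these inputs the mixed portfolio averages roughly $9.68 per million tokens, about 78% less than routing everything to the premium tier. The premium 20% still dominates the bill, so the size of that share is the number worth managing.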

How Does Grok Code Fast 1 Stack Up Against the Giants?

In the competitive landscape of 2025, declaring a single "best" AI coding model is a futile exercise. The market has evolved to a point of specialization, where the optimal choice depends entirely on the specific requirements of the task at hand. Is the priority raw speed for rapid prototyping, deep reasoning for debugging a critical security flaw, or massive context for analyzing a legacy monolith? A modern AI strategy is not about finding a single winner, but about building a versatile toolkit and knowing which tool to deploy for each job. For more on emerging multi-model strategies, you may want to explore our recent analysis "Grok 4: Is It Really the World’s Most Powerful AI? An Honest B2B Analysis."

To that end, the following matrix provides a strategic, at-a-glance comparison of Grok Code Fast 1 against its primary competitors from OpenAI, Anthropic, and Google, focusing on the metrics that matter most to business and technology leaders.

| Metric | xAI Grok Code Fast 1 | OpenAI GPT-4.1 / GPT-5 | Anthropic Claude 4.1 / 3.5 Sonnet | Google Gemini 2.5 Pro |
|---|---|---|---|---|
| Context Window (Scale) | 256K Tokens | Up to 1M Tokens | 200K Tokens | 1M Tokens |
| Performance (SWE-Bench) | Not Available (Proxy: Grok 4 is 58.6%) | High (54.6% for 4.1) | Industry Leading (65% for Sonnet 4) | High (46.8% - 63.8%) |
| Speed (Tokens/Sec) | Industry Leading (~92 tps) | Slower (~75 tps for Grok 4) | Slower | Moderate |
| Cost (Blended $/1M Tokens) | Industry Leading (~$0.85) | High (~$5.63 for GPT-5) | Premium (~$45 for Opus 4.1) | Moderate-High (~$5.63) |
| Key Differentiator | Extreme Speed & Economy | Balanced Performance & Ecosystem | Complex Reasoning & Safety | Massive Context & Google Integration |
| Best For... | Rapid Prototyping, Boilerplate Code, Cost-Sensitive Scale | General Purpose Development, Agentic Workflows | Mission-Critical Logic, Debugging Complex Bugs, Refactoring Legacy Systems | Analyzing Entire Code Repositories, Multimodal Inputs |
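The matrix does not say how its blended cost figures are derived. The Grok Code Fast 1 figure is consistent with a simple 50/50 average of the input and output prices quoted earlier ($0.20 and $1.50 per million tokens); that reading is an inference rather than something the source states. The sketch below shows the calculation and how the effective rate shifts with a different input-to-output mix.

```python
INPUT_PRICE = 0.20   # $ per 1M input tokens (quoted in the article)
OUTPUT_PRICE = 1.50  # $ per 1M output tokens (quoted in the article)

def blended_rate(input_share, input_price=INPUT_PRICE, output_price=OUTPUT_PRICE):
    """Effective $ per 1M tokens when input_share of all tokens are input tokens."""
    if not 0.0 <= input_share <= 1.0:
        raise ValueError("input_share must be between 0 and 1")
    return input_share * input_price + (1.0 - input_share) * output_price

def request_cost(input_tokens, output_tokens,
                 input_price=INPUT_PRICE, output_price=OUTPUT_PRICE):
    """Dollar cost of a single request at per-million-token prices."""
    return (input_tokens * input_price + output_tokens * output_price) / 1_000_000

even_mix = blended_rate(0.5)     # equal input and output volume
input_heavy = blended_rate(0.9)  # hypothetical: 90% of tokens are input
big_prompt = request_cost(200_000, 4_000)  # hypothetical large-context request
```

An even mix reproduces the roughly $0.85 shown in the matrix. Coding workloads that send large amounts of context and receive short replies are input-heavy, so their effective rate would be lower than the blended figure suggests.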

Narrative Analysis of the Competitive Landscape

The data in the matrix reveals four distinct strategic positions in the market:

- Grok's Niche: The Speed Demon and Economic Champion. Grok Code Fast 1 has carved out a clear and compelling niche. It is the undisputed leader where velocity and cost-efficiency are the paramount concerns. While user reports suggest it may struggle with highly complex or nuanced tasks compared to its premium rivals, it excels at the high-volume, everyday coding activities that form the bulk of a developer's workload. It is the ideal tool for startups, agile development teams focused on rapid iteration, and large enterprises looking to deploy AI assistance at scale without incurring prohibitive costs.

- Anthropic's Fortress: The Master Architect. Anthropic's Claude models, with their industry-leading scores on the SWE-Bench benchmark (which measures a model's ability to solve real-world GitHub issues), have established themselves as the premier tools for complex reasoning. They are the "master architects" of the AI world, best deployed for high-stakes tasks where precision and deep logical understanding are non-negotiable. This includes debugging intricate legacy code, refactoring mission-critical systems, or architecting complex new functionalities.

- OpenAI's All-Rounder: The Swiss Army Knife. The GPT series from OpenAI continues to be the powerful and versatile workhorse of the AI industry. With a mature and deeply integrated ecosystem (most notably through GitHub Copilot), strong performance across a wide range of benchmarks, and a well-understood developer experience, it remains a reliable and powerful choice for general-purpose development. It strikes a formidable balance between reasoning, speed, and capability, making it a default choice for many organizations. Their strides in AI-driven security and DevSecOps also contribute to their continued prominence in enterprise strategy.

- Google's Data Powerhouse: The Digital Librarian. Google's Gemini 2.5 Pro distinguishes itself with its colossal 1-million-token context window and its seamless integration into the vast Google Cloud ecosystem. This makes it the "digital librarian" of the group, uniquely suited for tasks that require ingesting and reasoning over enormous volumes of information. Its ability to analyze an entire code repository or thousands of pages of documentation in one go unlocks new possibilities for large-scale code analysis, migration projects, and comprehensive technical discovery.

What Is the Smartest Way to Integrate Grok Code Fast 1 into Our Workflow?

Successfully adopting a new AI tool requires more than simply providing access; it demands a deliberate strategy that encompasses technology, process, and culture. For leaders looking to harness the power of Grok Code Fast 1, a phased, thoughtful approach will maximize benefits while mitigating risks. If you're just getting started, our practical guide on enterprise AI integration is a helpful read.

Step 1: Gaining Access - The GitHub Copilot Gateway

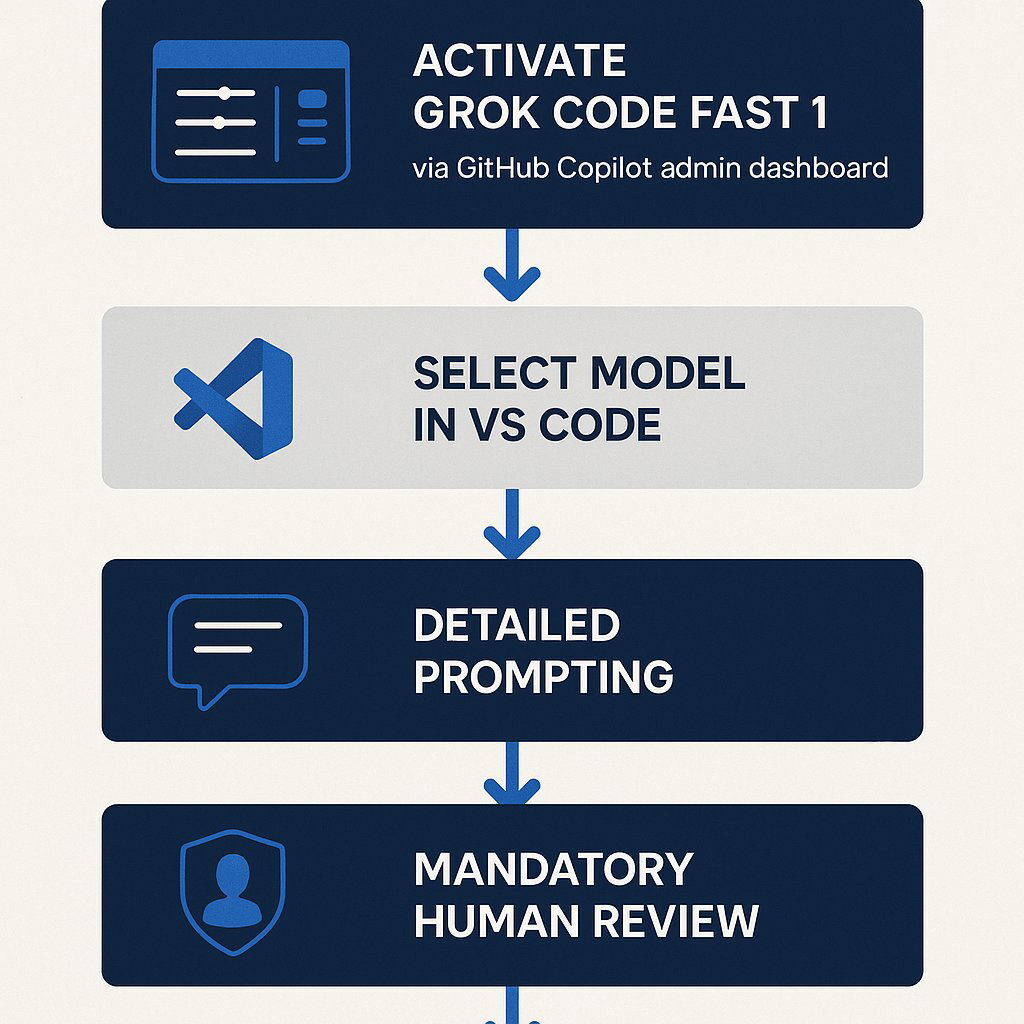

The primary and most seamless path for enterprise adoption of Grok Code Fast 1 is through its integration with GitHub Copilot. The process for enabling access differs slightly based on your organization's plan:

- For Enterprise and Business Plans: Access is centrally managed. A designated administrator must first navigate to the organization's GitHub Copilot settings and explicitly enable the "Grok Code Fast 1 policy." Once this policy is activated, the model will become available as a selectable option in the model picker within Visual Studio Code for every developer in the organization.

- For Individual and Pro Plans: Developers have more direct control. They can opt in to use the model directly from the model picker in their VS Code editor. Alternatively, for those with a direct xAI API key, the model can be accessed via the "Bring Your Own Key" (BYOK) functionality.

It is also worth noting that xAI and GitHub are offering a complimentary access period for the model (excluding BYOK usage) until 2 p.m. PDT on September 2, 2025, providing a no-cost window for evaluation and pilot programs.

Step 2: A Playbook for Strategic Adoption

Simply "turning on" the tool is insufficient. A successful integration requires a clear playbook that guides its use and establishes best practices.

- Define Clear Use Cases: Not all tasks are created equal. Direct your teams to leverage Grok Code Fast 1 for the tasks where its strengths in speed and economy provide the greatest advantage: generating boilerplate code, writing unit tests, creating documentation, and performing simple, well-defined refactoring. Reserve more complex architectural or security-sensitive tasks for higher-reasoning models or, critically, for your senior human engineers.

- Establish Prompting Standards: The adage "garbage in, garbage out" is magnified with AI. The quality of the model's output is directly proportional to the clarity and context of the input prompt. Encourage your teams to develop a habit of writing specific, detailed, and context-rich prompts. This may involve providing examples of the desired output, specifying the programming languages and frameworks to be used, and outlining any constraints.

- Mandate a "Human-in-the-Loop" Review Process: This is the most critical rule of enterprise AI adoption. Under no circumstances should AI-generated code be committed to a production codebase without a thorough review by a qualified human developer. AI is a powerful assistant, but it is not infallible. It can produce code that is subtly flawed, insecure, or inefficient. Human oversight remains the ultimate backstop for quality and security. For organizations handling sensitive assets or AI governance, such oversight is essential.

- Integrate Validation into the Workflow: The speed of AI generation creates a risk of pushing changes forward without proper validation. As one expert noted, "Automation is the fastest way to make mistakes at speed." Make rigorous testing—both automated and manual—a non-negotiable step in any workflow that involves AI-generated code. The AI can even assist in this process by helping to generate test plans and checklists.
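The playbook above can be encoded as lightweight team tooling. The following is a minimal sketch under stated assumptions: the task categories, function names, and prompt layout are hypothetical and would need to be adapted to your own workflow.

```python
# Hypothetical task categories where the fast, economical tier is a good fit.
FAST_TASKS = {"boilerplate", "unit_tests", "documentation", "simple_refactor"}

def choose_tier(task_type):
    """Send routine, well-defined work to the fast tier; escalate everything else."""
    return "fast" if task_type in FAST_TASKS else "premium_or_senior_engineer"

def build_prompt(task, language, framework=None, constraints=(), example=None):
    """Assemble a specific, context-rich prompt from structured fields."""
    parts = [f"Task: {task}", f"Language: {language}"]
    if framework:
        parts.append(f"Framework: {framework}")
    if constraints:
        parts.append("Constraints:")
        parts.extend(f"- {c}" for c in constraints)
    if example:
        parts.append("Example of desired output:")
        parts.append(example)
    return "\n".join(parts)

def ai_change_may_merge(human_approved, tests_passed):
    """AI-generated changes need both a human approval and passing tests."""
    return human_approved and tests_passed

prompt = build_prompt(
    task="Write unit tests for the parse_invoice function",
    language="Python",
    framework="pytest",
    constraints=["Cover empty input", "Do not call external services"],
)
```

Unknown task types fall through to the escalation path by default, which mirrors the guidance to reserve complex or security-sensitive work for higher-reasoning models or senior engineers.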

Step 3: Fostering the Right Culture - Augmentation, Not Replacement

Finally, address the cultural implications of this technology head-on. It's natural for engineering teams to have questions or concerns about how AI will impact their roles. Leadership must communicate a clear and consistent message: these tools are here to augment human ingenuity, not replace it. The role of the modern software engineer is evolving from that of a manual coder to that of a system architect and a pilot, guiding powerful AI tools to construct sophisticated systems more efficiently than ever before. This is a shift that empowers developers, freeing them from mundane work to focus on the creative and strategic challenges where they add the most value. For a closer look at how AI and modern workflows are changing developer roles, visit our guide to AI-powered blueprints for software teams.

Conclusion: The Agentic Shift is Here—Secure Your Competitive Edge

The launch of xAI's Grok Code Fast 1 is more than just the arrival of a new product; it is a clear signal of where the industry is headed. Its disruptive combination of extreme speed and aggressive economics is forcing a re-evaluation of how businesses approach AI in software development. It marks the definitive end of the era where a single, expensive AI model was the only viable option and ushers in a new age of strategic, multi-model orchestration.

The core takeaway for business leaders is this: the new competitive differentiator is not simply whether you use AI, but how intelligently you deploy a portfolio of AI tools. The ability to match the right model to the right task—using a fast, economical tool like Grok Code Fast 1 for high-volume work and reserving premium, high-reasoning models for critical challenges—is the key to simultaneously maximizing development velocity, optimizing costs, and ensuring the highest standards of quality. This is the dawn of the agentic era, where AI assistants are becoming increasingly autonomous and capable. If you're seeking further context on orchestrating a multi-model AI environment, don't miss our in-depth playbook on moving beyond AGI hype to real business value.

Navigating this complex, fast-evolving landscape of AI tools requires more than just a new subscription; it requires a new strategy. Choosing the right models, integrating them effectively into your workflows, and upskilling your team to leverage them is a significant undertaking that will define the technology leaders of the next decade.

Baytech Consulting specializes in helping technology leaders develop and implement these forward-thinking AI strategies. We can help you build a customized AI adoption playbook that maximizes your development velocity, optimizes costs, and secures your competitive edge in the age of agentic software development. Contact us today to schedule a strategic AI readiness assessment.

Supporting Links

- Official Announcement: Grok Code Fast 1 is rolling out in public preview for GitHub Copilot

- Benchmark Deep Dive: SWE-bench (https://www.swebench.com/)

- Strategic Context: McKinsey: How an AI-enabled software product development life cycle will fuel innovation

About Baytech

At Baytech Consulting, we specialize in guiding businesses through the software development process, helping you build scalable, efficient, and high-performing software that evolves with your needs. Our MVP-first approach helps our clients minimize upfront costs and maximize ROI. Ready to take the next step in your software development journey? Contact us today to learn how we can help you achieve your goals with a phased development approach.

About the Author

Bryan Reynolds is an accomplished technology executive with more than 25 years of experience leading innovation in the software industry. As the CEO and founder of Baytech Consulting, he has built a reputation for delivering custom software solutions that help businesses streamline operations, enhance customer experiences, and drive growth.

Bryan’s expertise spans custom software development, cloud infrastructure, artificial intelligence, and strategic business consulting, making him a trusted advisor and thought leader across a wide range of industries.