AI Agents in Software Development: Executive Guide to TCO, ROI & Top Tools for 2025

September 09, 2025 / Bryan Reynolds

The Executive's Guide to AI Agents in Software Development: TCO, ROI, and the Tools Shaping 2025

The discourse surrounding Artificial Intelligence is saturated with hyperbole, making it difficult for technology leaders to distinguish between fleeting trends and fundamental strategic shifts. This briefing, prepared by the expert team at Baytech Consulting, is designed to cut through that noise. It provides a clear, business-focused analysis of AI agents in software development, moving beyond technical jargon to answer the questions that matter most to the C-suite: How will these tools really impact team performance, the budget, and the company's competitive edge?

This guide follows a direct, question-and-answer format, tackling the most pressing concerns executives have about this transformative technology. It provides data-driven answers on what these tools do, what they truly cost, and what returns can be realistically expected. The central thesis is this: Adopting AI agents is no longer an experimental venture but a strategic necessity for building and maintaining a high-velocity engineering organization. The critical decision is not whether to adopt, but how to integrate these tools to maximize return on investment (ROI) while proactively mitigating inherent risks.

What Are AI Agents, and Why Should My Leadership Team Care Now?

At an executive level, AI agents in the Software Development Lifecycle (SDLC) should be understood as intelligent, goal-driven entities that go far beyond simple code completion. Unlike earlier tools, they can reason, plan, and execute complex, multi-step tasks, fundamentally altering the nature of software creation. To navigate this landscape, it is crucial to recognize the two distinct tiers of agents emerging in the market.

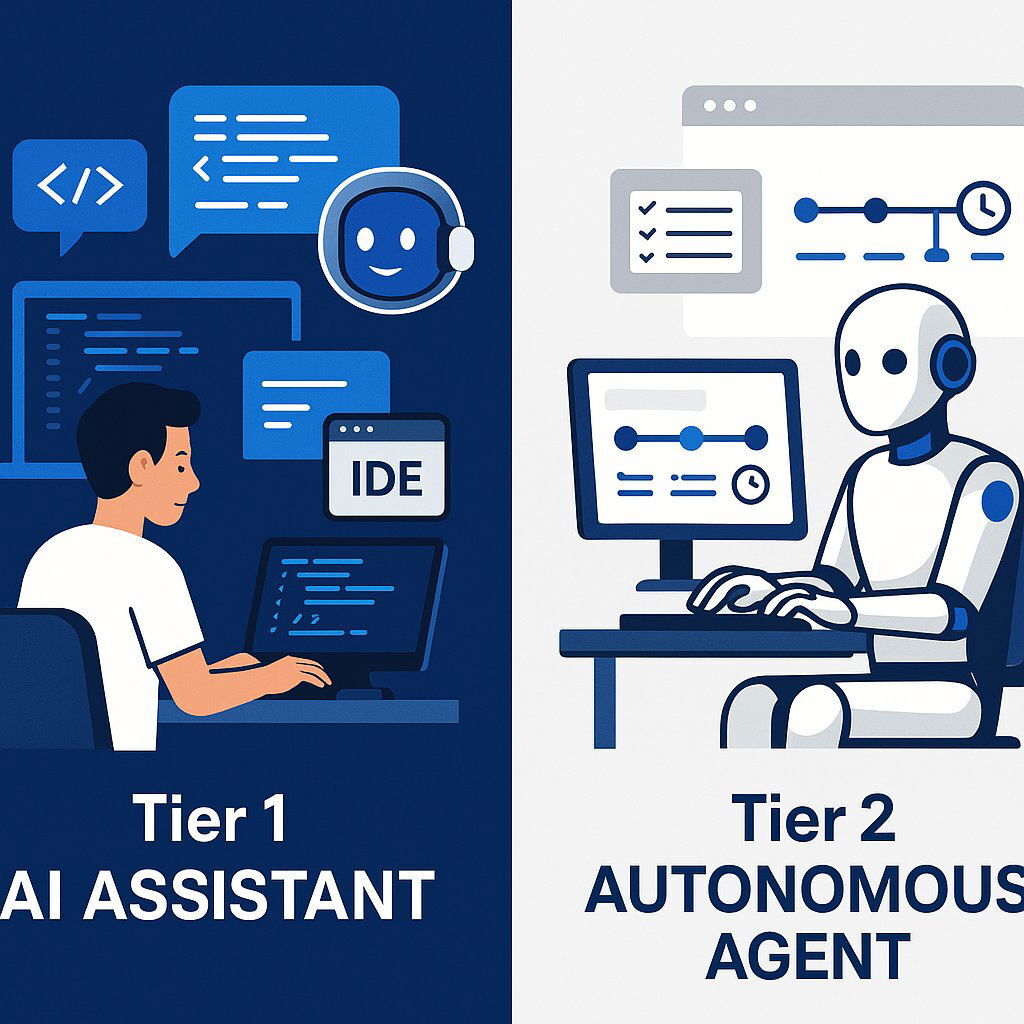

The Evolution: From Assistant to Autonomous Actor

Tier 1: AI Assistants (The "Pair Programmer")

This category includes market-leading tools like GitHub Copilot and Amazon Q Developer. These assistants integrate directly into a developer's Integrated Development Environment (IDE), acting as a "pair programmer" that augments their existing workflow. Their primary function is to enhance the productivity of an individual developer by suggesting code, answering contextual questions about the codebase, and automating repetitive micro-tasks such as writing unit tests or generating documentation.

Tier 2: Autonomous AI Agents (The "AI Engineer")

This tier represents a paradigm shift, led by groundbreaking tools like Devin from Cognition Labs. These agents operate at a higher level of abstraction. Instead of assisting a developer, they are capable of taking a complete task, such as a feature request or a bug report, and autonomously planning and executing the entire development workflow. This includes setting up the development environment, researching documentation using an integrated browser, writing the code, running tests, and submitting a finished pull request for human review.

The emergence of these autonomous agents signals a fundamental evolution in how technical work is managed. The conversation is expanding from simply making individual developers faster (augmentation) to creating a new class of digital team members that can be assigned entire work packages (delegation). Initially, AI assistants like GitHub Copilot optimized an existing process: a human developer writing code. Autonomous agents like Devin introduce a new process: a manager assigning a ticket directly to an AI. This transforms the role of the human engineer from a "doer" of all tasks to an "orchestrator" and "reviewer" of AI-completed work, allowing them to operate at a higher level of abstraction. This is not merely a tooling change; it is an organizational design change that requires new management methodologies, performance metrics, and career development paths for hybrid teams of human and AI engineers.

The Strategic Imperative

Leadership teams must act now because the competitive landscape is being reshaped by early adopters. Organizations leveraging these tools are accelerating their time-to-market, improving product quality, and, most importantly, freeing their most valuable senior talent to focus on high-impact innovation and complex architectural challenges. With Gartner predicting that over 70% of organizations will have incorporated AI into their applications by 2025, inaction is a direct threat to competitive viability.

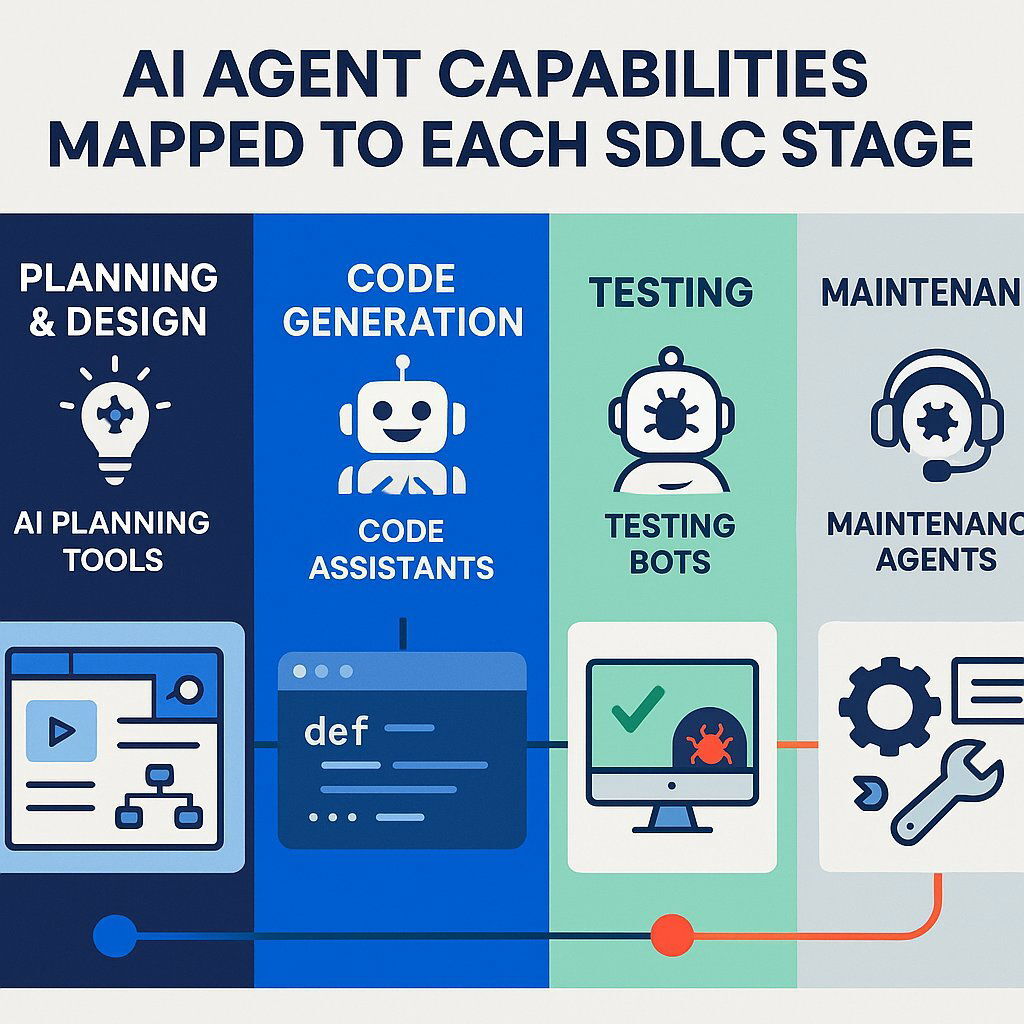

What Can These Tools Actually Do Across Our Software Development Lifecycle?

To make the impact of AI tangible, it is useful to map its capabilities to each stage of the SDLC. These tools are not a monolithic solution but a portfolio of capabilities that can be applied to specific bottlenecks within the value stream.

A. Planning & Design

AI is transforming project management from a reactive, historical-reporting function into a proactive, predictive discipline. AI-powered project management tools like Wrike and Monday.com use machine learning to analyze historical project data, enabling them to provide predictive risk assessments, intelligent resource forecasting, and automation of routine administrative tasks.

For example, an AI agent can analyze past sprints and identify that a planned feature is at high risk of delay due to its dependencies on a specific, overloaded team. It can then recommend reallocating a senior engineer with the precise skills needed to mitigate that risk before it materializes, ensuring projects stay on track.

B. Code Generation & Development

This is where the distinction between AI assistants and autonomous agents becomes most clear.

- The AI Pair Programmer: Tools like GitHub Copilot, Amazon Q, and Tabnine excel at the micro-level of development. A developer can use a natural language prompt like, "Generate a Python function to upload a file to an S3 bucket," and the tool will produce the necessary code snippet instantly. These tools also accelerate the modernization of legacy codebases by translating code between languages and providing context-aware completions that adhere to modern best practices.

- The Autonomous Engineer: An agent like Devin operates at the macro-level. A product manager can assign Devin a Jira ticket that reads, "Add S3 upload functionality to the user profile page." Devin will then autonomously create a step-by-step plan, use its browser to research the latest AWS S3 API documentation, write the full feature in its own sandboxed editor, execute commands in its terminal to test the implementation, and finally submit a complete, test-passing pull request for a human engineer to review and merge.
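As a rough illustration of the kind of snippet the pair-programmer prompt above might yield, here is a minimal sketch. It assumes the boto3 AWS SDK is installed and that AWS credentials are already configured; the function name `upload_file_to_s3` and its parameters are hypothetical, and actual assistant output will vary by tool and context.

```python
import os


def upload_file_to_s3(local_path, bucket, key=None, client=None):
    """Upload a local file to an S3 bucket and return the object key used.

    `client` is any object exposing boto3's S3 `upload_file(Filename, Bucket, Key)`
    method. When omitted, a default boto3 client is created (assumption: boto3
    is installed and AWS credentials are configured in the environment).
    """
    if not os.path.isfile(local_path):
        raise FileNotFoundError(local_path)
    if key is None:
        # Default the object key to the file's base name.
        key = os.path.basename(local_path)
    if client is None:
        # Imported lazily so the function can be exercised without AWS access.
        import boto3
        client = boto3.client("s3")
    client.upload_file(local_path, bucket, key)
    return key
```

Even for a snippet this small, a human reviewer would still be expected to check error handling, permissions, and naming conventions before merging, which is the review role described above.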

C. Testing & Quality Assurance

The massive acceleration of code generation with AI creates a downstream "quality tax" on QA and security teams, who are now faced with validating a much higher volume of code. This creates a new bottleneck unless testing and security are also augmented with AI. The tools across the SDLC are not standalone solutions but part of a necessary, symbiotic ecosystem. To realize the full end-to-end benefit of AI in development, organizations must also invest in AI-powered testing and security platforms.

AI-native testing platforms like Mabl are addressing this challenge head-on. Instead of a QA engineer spending days writing brittle test scripts, they can provide a user story in plain language, such as, "A user should be able to log in, add an item to their cart, and complete the checkout process." The AI testing agent then autonomously generates, executes, and maintains the corresponding end-to-end tests. Crucially, these platforms feature "self-healing" tests that automatically adapt to minor UI changes and provide autonomous root cause analysis that explains why a test failed, drastically reducing the manual effort of test maintenance. For a broader look at how these trends are shaping delivery and risk, explore Agentic SDLC: The AI-Powered Blueprint Transforming Software Development.

D. Debugging & Maintenance

AI agents are also streamlining the often time-consuming process of fixing bugs.

- AI-Powered Debugging: Within the IDE, an AI assistant can analyze complex stack traces, suggest potential fixes for bugs, and identify security vulnerabilities in real-time as the developer is coding.

- Autonomous Bug Fixing: An autonomous agent like Devin has demonstrated the ability to take a bug report directly from a GitHub issue, independently set up an environment to reproduce the bug, and then code, test, and submit the fix without any human intervention.

A C-Suite Comparison: The Top AI Agent Tools for the Enterprise

For executive decision-makers, selecting the right tool requires a focus on criteria that matter at the enterprise level: security, governance, scalability, and integration. The following table provides a strategic overview of the leading tools in 2025.

Table 1: 2025 AI Agent Tool Landscape for the Enterprise

| Tool | Category | Ideal For... | Key Enterprise Features | Pricing Model |
|---|---|---|---|---|
| GitHub Copilot Enterprise | AI Pair Programmer | General developer productivity & in-IDE assistance across the organization. | Codebase indexing, centralized policy management, IP indemnity, audit logs. | Per user/month. |
| Amazon Q Developer (Pro) | AI Pair Programmer | AWS-centric development teams needing deep integration with AWS services. | AWS service integration, security scanning, custom code recommendations. | Per user/month. |
| Tabnine Enterprise | AI Pair Programmer | Organizations with strict IP/privacy needs or those requiring air-gapped deployments. | Self-hosting options, models trained only on permissively licensed code. | Per user/month. |
| Devin | Autonomous AI Engineer | Specialized teams focused on backlog reduction, complex refactors, and migrations. | Autonomous task execution, deep integration with Jira/Slack, sandboxed environment. | Usage-based (ACU) / Team Subscription. |
| Mabl | AI-Native Testing Platform | QA teams looking to automate end-to-end testing and reduce test maintenance overhead. | Low-code test creation, auto-healing tests, autonomous failure analysis. | Platform Subscription. |

Deep Dive 1: GitHub Copilot Enterprise - The De Facto Standard for Developer Augmentation

GitHub Copilot is the most mature and widely adopted solution for establishing a baseline of AI-augmented productivity across an entire engineering organization. Its business value lies in its enterprise-grade features. Codebase-aware chat allows Copilot to understand an organization's private code, providing highly relevant and contextual suggestions. Centralized policy management, detailed audit logs, and crucial IP indemnification provide the governance and legal protection that large enterprises require. Furthermore, its deep integration with the broader GitHub ecosystem (e.g., automated pull request summaries) and Azure DevOps makes it a natural fit for organizations already invested in the Microsoft stack. If you're considering modernizing your current delivery workflows and cloud-native platforms alongside AI-powered tools, explore our executive guide on EHR modernization and cloud-native strategies.

Deep Dive 2: Devin - The Vanguard of Autonomous Engineering

Devin should not be viewed as a Copilot replacement, but as a new category of specialized tool. Its value is in tackling specific, high-effort, low-creativity workstreams that are often a major source of technical debt and developer burnout. This includes legacy code migrations, repetitive data engineering tasks, and clearing backlogs of well-defined feature requests or bug fixes.

The most compelling proof of its enterprise-scale ROI comes from the Nubank case study. Nubank faced a multi-year, 1,000-engineer project to refactor a massive, monolithic system. By delegating the repetitive refactoring tasks to Devin, they compressed the project timeline into a matter of weeks, achieving a 12x efficiency gain and over 20x cost savings. However, it is important to be pragmatic about Devin's current capabilities. It operates primarily through a Slack and Jira-based workflow and has documented limitations in handling ambiguous tasks, requirements that demand high creativity, or tasks that depend on deep, unstated business context. For a more foundational discussion of how custom solutions can address legacy and complexity issues, see How Custom Software Solves Manufacturing’s Supply Chain Crisis.

What's the Real Cost? A Practical Breakdown of Total Cost of Ownership (TCO)

The true investment in AI agents is like an iceberg: the visible subscription fee is only a small fraction of the Total Cost of Ownership (TCO). A strategic budget must account for direct, implementation, and hidden operational costs.

A. Direct Costs (The License Fees)

The per-user, per-month subscription fees for the leading AI assistants are relatively straightforward:

- GitHub Copilot Enterprise: $39

- Amazon Q Developer Pro: $19

- Tabnine Enterprise: $39

Devin employs a different model based on consumption. Its "Agent Compute Units" (ACUs) are tied to task complexity and compute time, not just the number of users. The team plan starts at $500 per month, which includes 250 ACUs.
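To make the two pricing models concrete, the following is a minimal sketch that annualizes the per-seat prices listed above and models Devin's consumption-based plan. The function names are hypothetical, and the overage rate for ACUs beyond the included 250 is an assumption supplied by the caller, since the figures above do not state it.

```python
# Per-user monthly list prices quoted above (USD).
MONTHLY_PRICE_PER_USER = {
    "GitHub Copilot Enterprise": 39,
    "Amazon Q Developer Pro": 19,
    "Tabnine Enterprise": 39,
}

# Devin team plan as quoted above: $500 per month including 250 ACUs.
DEVIN_TEAM_MONTHLY_FEE = 500
DEVIN_INCLUDED_ACUS = 250


def annual_seat_cost(tool, developers):
    """Annual license cost for a per-seat tool."""
    return MONTHLY_PRICE_PER_USER[tool] * developers * 12


def devin_monthly_cost(acus_used, overage_rate_per_acu):
    """Monthly Devin cost: base fee plus any ACUs beyond the included 250.

    ASSUMPTION: overage is billed linearly at `overage_rate_per_acu`.
    This is a simplification for budgeting, not a statement of vendor terms.
    """
    extra_acus = max(0, acus_used - DEVIN_INCLUDED_ACUS)
    return DEVIN_TEAM_MONTHLY_FEE + extra_acus * overage_rate_per_acu


if __name__ == "__main__":
    for tool in MONTHLY_PRICE_PER_USER:
        print(f"{tool}: ${annual_seat_cost(tool, 100):,} per year for 100 developers")
    implied = DEVIN_TEAM_MONTHLY_FEE / DEVIN_INCLUDED_ACUS
    print(f"Implied price per included ACU: ${implied:.2f}")
```

The practical difference for budgeting is that seat-based costs scale with headcount, while ACU-based costs scale with how much work is delegated, so the latter needs a usage forecast rather than a seat count.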

B. Implementation & Enablement Costs (The Investment to Unlock Value)

- Training & Onboarding: Data shows that structured training improves adoption outcomes by 40%. Organizations should budget $50-$100 per developer for hands-on workshops, creating internal documentation, and establishing a program of internal champions to drive best practices.

- Administrative & Governance Overhead: Significant "soft costs" are incurred as legal, security, and management teams spend time vetting tools, establishing usage policies (e.g., filtering suggestions that match public code), and monitoring adoption and compliance. This can easily represent an initial investment of $5,000 or more for a mid-sized organization.

C. Hidden Operational Costs (The Long Tail)

- Increased QA & Security Scrutiny: As discussed, higher code velocity increases the surface area for bugs and vulnerabilities. This may necessitate new investment in automated security tools or more time allocated from the security team to review AI-generated code. To better understand how hidden costs can snowball without the right approach, see the cautionary tale in ChatGPT 5: From Death Star to Easy-Bake Oven.

- Change Management & Productivity Dip: An initial, temporary dip in productivity is expected as teams adapt their workflows and learn the art of effective prompting.

- Potential for Skill Atrophy: A critical long-term cost is the risk of junior developers not learning core debugging and problem-solving fundamentals due to an over-reliance on AI. This requires a strategic investment in new mentorship models and training programs focused on architectural thinking and systems design.

The chart below illustrates a sample TCO breakdown, highlighting that licensing fees often account for less than half of the true first-year cost.

Chart 1: TCO Breakdown for a 100-Developer Team (Annual Estimate)

![Chart 1](https://i.imgur.com/kY7wV2c.png)

What's the Payback? Calculating the ROI of AI Agent Adoption

The true ROI of AI agents must be measured across three pillars: Productivity, Quality, and People (Developer Experience).

A. Productivity & Speed

The most widely cited metric is that developers complete tasks up to 55% faster when using AI assistants. This raw number translates into tangible business outcomes: faster time-to-market for new features, quicker resolution of customer-facing bugs, and increased overall development throughput. Real-world case studies validate this, with organizations like Allpay achieving a 25% increase in delivery volume and Bancolombia seeing a 30% increase in code generation after adoption. For practical insights on improving software testing ROI within this context, see B2B Software Testing: How to Avoid Over- or Under-testing and Maximize ROI.

B. Quality & Reliability

A common fear is that increased speed will come at the expense of quality. However, data from a large-scale study conducted with Accenture shows the opposite. Teams using GitHub Copilot saw a 15% increase in their pull request merge rate and an 84% increase in successful builds, indicating that the code being submitted was of higher quality and passed review more often. This directly impacts the bottom line through fewer production bugs, lower change failure rates (a key DORA metric), reduced costly rework, and higher customer satisfaction.

C. Developer Experience & Retention

This is a critical, often underestimated, component of ROI. In a highly competitive talent market, a superior Developer Experience (DevEx) is a powerful strategic advantage. The data is compelling: 90% of developers report feeling more fulfilled in their job, 73% find it easier to stay in a "flow state," and 87% report less mental effort spent on repetitive tasks when using AI tools. Higher job satisfaction leads directly to lower attrition, reducing the significant costs associated with recruiting, hiring, and onboarding new engineers.

To make this tangible, the following framework can be used to build a preliminary business case for adoption.

Table 2: A Simple Framework for Calculating Your Potential ROI

| Input | Your Value | Calculation | Result |
|---|---|---|---|
| (A) Number of Developers | 100 | | |
| (B) Fully-Loaded Annual Developer Salary | $150,000 | | |
| (C) Annual TCO per Developer (from Chart 1) | $888 | | |
| (D) Estimated Weekly Time Savings (hours) | 2 | | |
| (E) Total Annual TCO | | (A) x (C) | $88,800 |
| (F) Hourly Developer Cost | | (B) / 2080 hours | $72.12 |
| (G) Annual Productivity Gain per Developer | | (D) x 50 weeks x (F) | $7,212 |
| (H) Total Annual Productivity Gain | | (G) x (A) | $721,200 |
| (I) Net Annual ROI | | (H) - (E) | $632,400 |
| (J) ROI Multiple | | (I) / (E) | 7.1x |
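For teams that want to plug in their own inputs, the arithmetic in Table 2 can be sketched as a small function. This is a minimal illustration, not a financial model: the names `estimate_roi` and `RoiEstimate` are hypothetical, and the hourly cost is rounded to cents so that the results line up with the table's figures.

```python
from dataclasses import dataclass


@dataclass
class RoiEstimate:
    total_tco: float           # (E)
    hourly_cost: float         # (F)
    gain_per_developer: float  # (G)
    total_gain: float          # (H)
    net_gain: float            # (I)
    roi_multiple: float        # (J)


def estimate_roi(developers, loaded_salary, tco_per_developer,
                 weekly_hours_saved, working_weeks=50, hours_per_year=2080):
    """Reproduce rows (E) through (J) of Table 2 for the given inputs."""
    total_tco = developers * tco_per_developer
    # Rounded to cents, matching how the table reports the hourly cost.
    hourly_cost = round(loaded_salary / hours_per_year, 2)
    gain_per_developer = round(weekly_hours_saved * working_weeks * hourly_cost, 2)
    total_gain = round(gain_per_developer * developers, 2)
    net_gain = round(total_gain - total_tco, 2)
    roi_multiple = net_gain / total_tco
    return RoiEstimate(total_tco, hourly_cost, gain_per_developer,
                       total_gain, net_gain, roi_multiple)


if __name__ == "__main__":
    result = estimate_roi(developers=100, loaded_salary=150_000,
                          tco_per_developer=888, weekly_hours_saved=2)
    print(f"Net annual gain: ${result.net_gain:,.0f}")
    print(f"ROI multiple: {result.roi_multiple:.1f}x")
```

Because the result is most sensitive to the weekly time savings in row (D), it is worth rerunning the estimate with a conservative value (for example, one hour per week) before presenting a business case.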

What Are the Risks, and How Do We Mitigate Them? (The Baytech Consulting Approach)

A pragmatic approach requires directly addressing the risks that cause executive hesitation. The common thread through all mitigation strategies is the necessity of a strong, well-defined governance framework. Successful adoption is less about the technology itself and more about the maturity of the organization's engineering culture and practices. To go deeper into managing complex digital risks, compliance, and governance frameworks in enterprise environments, see Digital Transformation in Hazardous Waste Management: Compliance, Strategy & ROI.

Risk 1: Data Security & IP Protection

- The Fear: "Will our proprietary source code be used to train a public AI model?"

- The Mitigation: Enterprise-grade tools like GitHub Copilot Enterprise and Tabnine Enterprise provide explicit contractual guarantees that they do not train on customer code. Features such as self-hosting options (Tabnine) and robust, configurable privacy controls are non-negotiable requirements for any enterprise deployment. Governance policies must be established to enforce these settings across the organization.

Risk 2: Integration with Legacy Systems

- The Fear: "Our tech stack is complex. Will these tools create more problems than they solve?"

- The Mitigation: This is a valid and common challenge. The recommended approach is a phased rollout, starting with a pilot team working on more modern services to build momentum and identify integration friction points. This is an area where an experienced partner can provide immense value in bridging the gap between modern AI tools and entrenched legacy systems.

Risk 3: Quality Control & Over-Reliance

- The Fear: "Will my team start shipping low-quality, AI-generated 'spaghetti code' that becomes a maintenance nightmare?"

- The Mitigation: The solution is process governance. AI must be positioned as an assistant, not a replacement for sound engineering principles. This requires maintaining rigorous human oversight in code reviews and pairing AI code generation with AI-powered testing platforms to handle the increased volume. For junior developers, new mentorship programs must be implemented that focus on understanding the "why" behind the code, not just on generating it quickly.

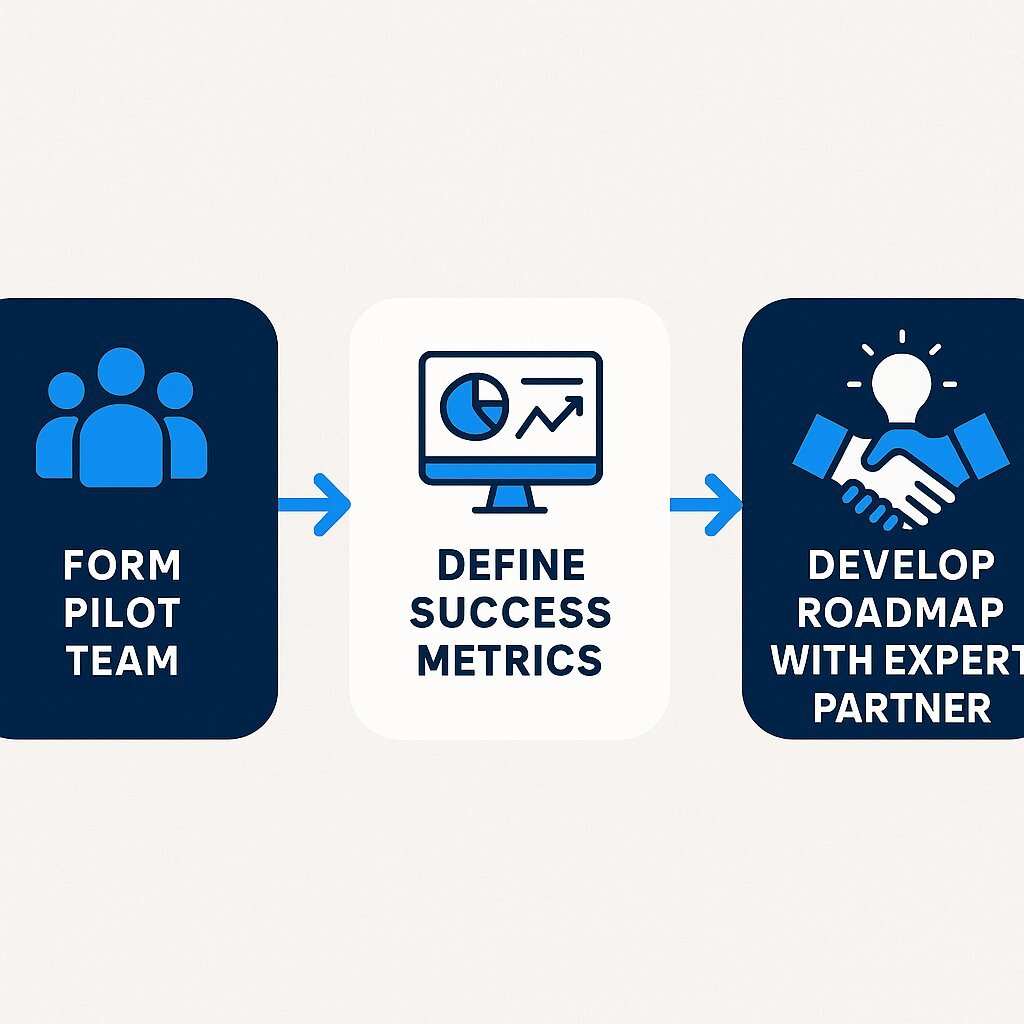

Conclusion: Your Next Steps to Building an AI-Powered Engineering Organization

The strategic adoption of AI agents is a pivotal step towards creating a more productive, innovative, and resilient engineering organization. The data clearly shows that when implementation is planned and executed thoughtfully, the ROI is substantial. The path forward involves a deliberate, measured approach.

Three Actionable Next Steps:

- Form a Pilot Team: Assemble a small, cross-functional team of 5-10 developers (mixing senior and junior talent) to trial a tool like GitHub Copilot Business for a defined 6-8 week period. This approach aligns with best practices from successful enterprise adoptions. If you'd like to see how leadership strategies for adoption and organizational change can set the foundation for a successful tech transformation, check out Why Manufacturing Teams Resist Software—and How to Overcome It.

- Define Success Metrics Beyond Speed: Use a balanced framework like DORA or the DX Core 4 to measure the pilot's impact. Track not just cycle time, but also change failure rate, deployment frequency, and developer satisfaction scores against a clear baseline established before the pilot begins.

- Develop a Strategic Roadmap with an Expert Partner: This is a complex organizational transformation. Partnering with a firm like Baytech Consulting can provide the necessary expertise to conduct an organizational readiness assessment, select the right portfolio of AI tools for specific needs, and build the governance and training programs essential for a successful, scalable, enterprise-wide rollout.
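For the second step, two of the DORA measures named above (deployment frequency and change failure rate) can be computed from a simple deployment log and compared against the pre-pilot baseline. The sketch below is illustrative only: the `Deployment` record and its fields are hypothetical, and a real pilot would pull this data from the team's CI/CD and incident tooling.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Deployment:
    day: date
    caused_failure: bool  # True if the deploy led to an incident, rollback, or hotfix


def deployment_frequency(deployments, period_days):
    """Average number of production deployments per week over the period."""
    return len(deployments) / (period_days / 7)


def change_failure_rate(deployments):
    """Share of deployments that caused a production failure (0.0 to 1.0)."""
    if not deployments:
        return 0.0
    failures = sum(1 for d in deployments if d.caused_failure)
    return failures / len(deployments)


if __name__ == "__main__":
    # Hypothetical four-week windows before and during the pilot.
    baseline = [Deployment(date(2025, 1, d), d == 7) for d in (3, 7, 14, 21)]
    pilot = [Deployment(date(2025, 3, d), d == 12) for d in (2, 5, 9, 12, 16, 19, 23, 26)]
    for label, log in (("baseline", baseline), ("pilot", pilot)):
        print(label,
              f"{deployment_frequency(log, 28):.1f} deploys/week,",
              f"{change_failure_rate(log):.0%} change failure rate")
```

Developer satisfaction, the third measure mentioned, is usually gathered through surveys rather than logs, so it would sit alongside these numbers rather than be derived from them.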

Supporting Articles:

- For a deeper dive into the data: GitHub Copilot & Accenture Research

- For a balanced view on implementation hurdles: AI in Software Development: Challenges and Opportunities

- For a forward-looking strategic perspective: AI agents' impact on software engineering

About Baytech

At Baytech Consulting, we specialize in guiding businesses through this process, helping you build scalable, efficient, and high-performing software that evolves with your needs. Our MVP-first approach helps our clients minimize upfront costs and maximize ROI. Ready to take the next step in your software development journey? Contact us today to learn how we can help you achieve your goals with a phased development approach.

About the Author

Bryan Reynolds is an accomplished technology executive with more than 25 years of experience leading innovation in the software industry. As the CEO and founder of Baytech Consulting, he has built a reputation for delivering custom software solutions that help businesses streamline operations, enhance customer experiences, and drive growth.

Bryan’s expertise spans custom software development, cloud infrastructure, artificial intelligence, and strategic business consulting, making him a trusted advisor and thought leader across a wide range of industries.