What is Nano Banana, and Why Should Your Business Care?

September 10, 2025 / Bryan Reynolds

Introduction: What is Nano Banana, and Why Should Your Business Care?

In the hyper-competitive landscape of artificial intelligence, it is rare for a new technology to emerge with such force that it reshapes the leaderboard overnight. Yet, in mid-2025, that is precisely what happened. A mysterious, unbranded AI image model codenamed "nano banana" appeared on LMArena, the industry's most respected public benchmark for AI models. Without any corporate fanfare, it began systematically outperforming every established player in head-to-head user tests, quickly rising to the #1 global rank for image editing. The tech world was buzzing: Who was behind this powerful new tool?

The answer came in late August 2025, when Google DeepMind revealed that "nano banana" was the public alias for its latest and most advanced AI model: Gemini 2.5 Flash Image. This strategic "guerrilla launch" was a masterstroke. By allowing the model to build a reputation based purely on its superior, unbiased performance, Google established its credibility before the official announcement. It was not just another corporate claim of advancement; it was a market-proven reality. This approach signaled immense confidence in the product's capabilities and immediately captured the attention of industry leaders.

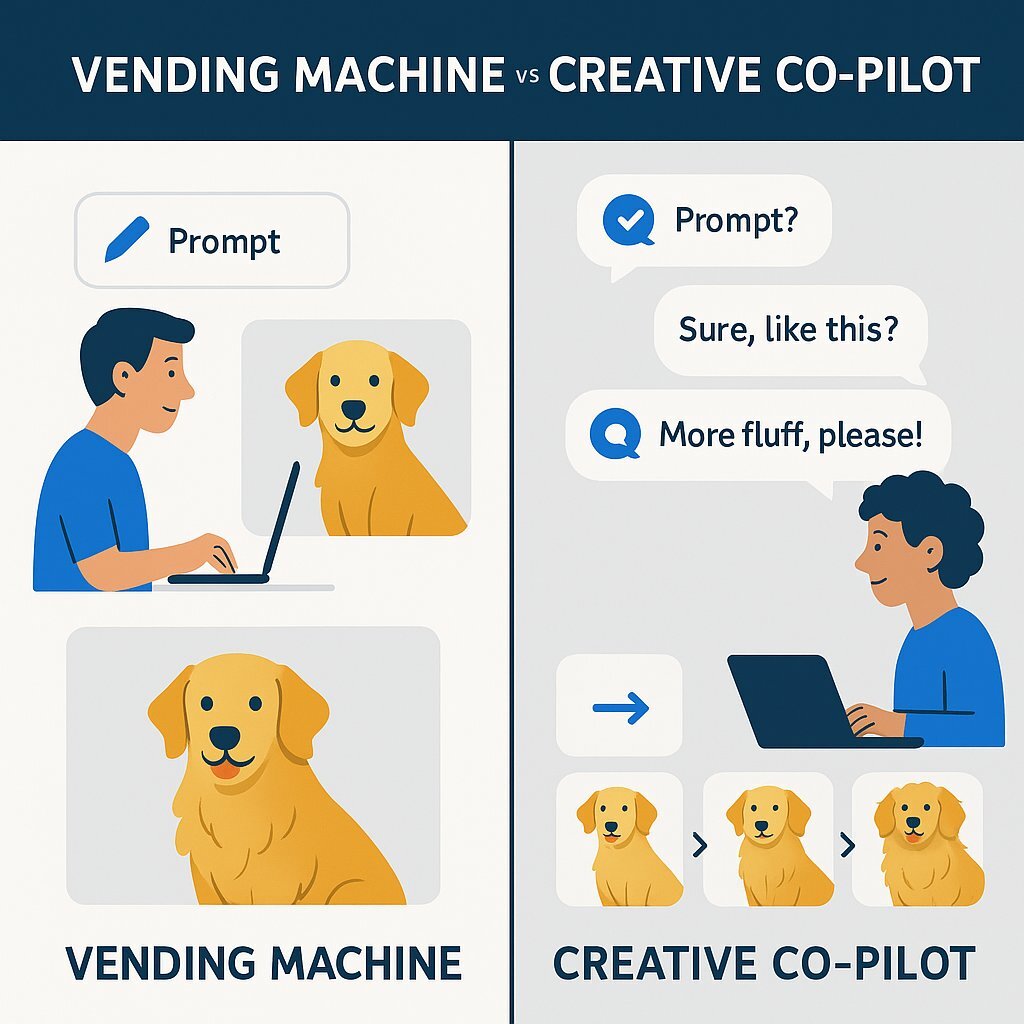

For executives, the key question is not just what this technology is, but why it matters for their business. Gemini 2.5 Flash Image represents a fundamental shift in how organizations can create and manipulate visual content. It moves beyond the traditional "vending machine" model of generative AI—where a text prompt is inserted and a static image is dispensed—to a "creative co-pilot" model. This is a tool that engages in a conversational dialogue, understands iterative commands, and functions less like a simple generator and more like a creative partner with visual reasoning capabilities.

This report provides a comprehensive executive briefing on Gemini 2.5 Flash Image. It will deconstruct its game-changing features, provide a data-driven comparison against its primary competitors, explore tangible business applications across key industries, and offer a strategic guide for leveraging this powerful new asset for competitive advantage.

A New Class of AI: The Key Capabilities Defining Gemini 2.5 Flash Image

The excitement surrounding Gemini 2.5 Flash Image is rooted in a set of core capabilities that directly address the most significant pain points of previous-generation AI image tools. These features are not merely incremental improvements; they represent a new class of functionality that unlocks previously impractical workflows for businesses.

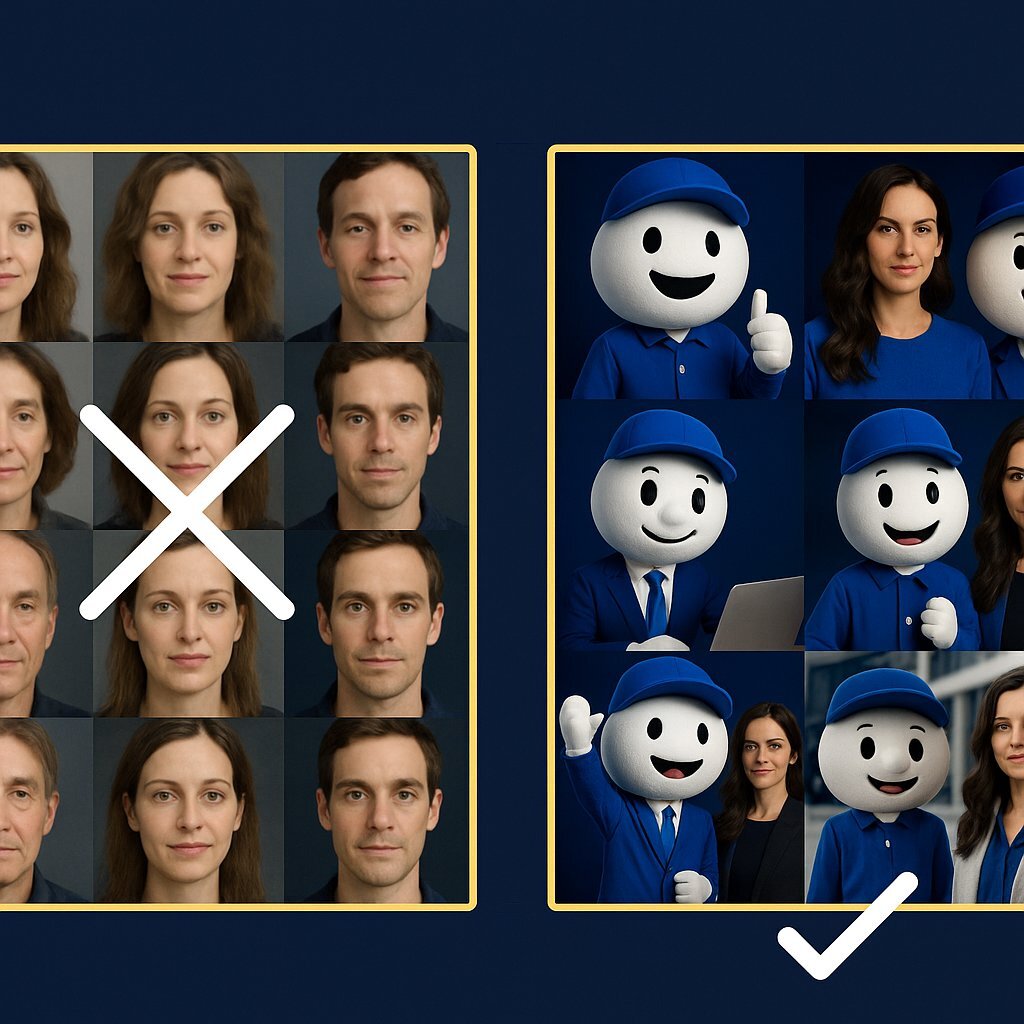

The End of Inconsistent AI Art: Mastering Character Consistency

A persistent and critical failure of AI image models has been their inability to maintain the likeness of a person, character, or branded object across multiple generations. For any professional use case—from a marketing campaign featuring a mascot to an e-commerce site showing a product from different angles—this inconsistency rendered AI-generated visuals unreliable. An image might be 90% correct, but the subtle "morphing" of facial features or product details made it unusable for professional storytelling.

Gemini 2.5 Flash Image was engineered specifically to solve this problem. It boasts a reported 95%+ character consistency rate, a figure that fundamentally changes the calculus for businesses. This means a user can upload a photo of a person and confidently place them in different outfits, change their location, or even see how they would appear in another decade, all while preserving their core identity. The same applies to branded characters or even pets; the model can add a tutu to a specific chihuahua without changing the dog's unique features. For businesses, this breakthrough unlocks the ability to create consistent brand assets, character-driven marketing campaigns, and uniform product imagery at scale and with high fidelity.

Your Creative Co-Pilot: Conversational, Multi-Turn Editing

The traditional workflow for AI image creation has been a frustrating cycle of one-shot prompts. If an image was not perfect, the user had to start over, tweaking keywords and hoping for a better result. Gemini 2.5 Flash Image replaces this with a dynamic, conversational process known as multi-turn editing.

This capability allows users to generate an initial image and then refine it through a series of simple, natural language commands. For example, a real estate professional could start with an image of an empty room and then conversationally direct the AI: "Paint the walls a light sage green," followed by "Now, add a large, floor-to-ceiling bookshelf on the back wall," and finally, "Place a modern leather sofa in the center of the room." The AI understands each command in context, altering specific parts of the image while preserving the rest.
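The multi-turn pattern described above can be sketched in a few lines of Python. This is an illustrative sketch only: `EditSession`, `EditFn`, and `fake_edit` are hypothetical names, and `fake_edit` is a stand-in that does not call any real model. The point it shows is that each instruction is applied to the latest image rather than the original, so edits accumulate.

```python
from dataclasses import dataclass, field
from typing import Callable, List

# Hypothetical signature for a model call: (current image bytes, instruction) -> new image bytes.
EditFn = Callable[[bytes, str], bytes]


@dataclass
class EditSession:
    """Tracks one image as it is refined over several conversational turns."""
    image: bytes
    edit_fn: EditFn
    history: List[str] = field(default_factory=list)

    def apply(self, instruction: str) -> bytes:
        # Each turn edits the latest image, not the original, so changes accumulate.
        self.image = self.edit_fn(self.image, instruction)
        self.history.append(instruction)
        return self.image


def fake_edit(image: bytes, instruction: str) -> bytes:
    """Stand-in for a real model call: it only appends the instruction to the bytes."""
    return image + b"|" + instruction.encode("utf-8")


session = EditSession(image=b"empty-room", edit_fn=fake_edit)
for step in [
    "Paint the walls a light sage green",
    "Add a large, floor-to-ceiling bookshelf on the back wall",
    "Place a modern leather sofa in the center of the room",
]:
    session.apply(step)
```

In a real integration, `fake_edit` would be replaced by whatever function wraps the team's chosen model endpoint; keeping it injectable makes the session logic testable without network access.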

This interactive dialogue is made possible by the model's underlying architecture. It is a natively multimodal model, meaning it was trained from the ground up to process text and images in a single, unified step. It does not just translate words to pixels; it possesses a form of visual reasoning that allows it to understand complex, compositional instructions. This architectural distinction is what separates it from pure image generators and elevates it to a visual reasoning partner.

It is important to note that, as a newly released technology, it is not flawless. Some users have reported an "image loop" behavior where the model acknowledges a request for a minor edit but returns an identical image. This is a known issue in the early rollout, often triggered by prompts that are too subtle. Workarounds include using more descriptive and distinct edit instructions or starting a new chat session to reset the model's context.
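One way to apply the first workaround in an automated workflow is to check whether the returned image is identical to the input and, if so, retry once with a more descriptive instruction. The sketch below is an assumption about how a team might do this, not a documented feature: `edit_with_retry` and `stub_edit` are hypothetical, and the byte-level hash comparison only catches exactly identical files (a re-encoded but visually unchanged image would not be detected).

```python
import hashlib
from typing import Callable, Tuple


def fingerprint(image: bytes) -> str:
    """SHA-256 digest of the raw image bytes."""
    return hashlib.sha256(image).hexdigest()


def edit_with_retry(
    image: bytes,
    instruction: str,
    edit_fn: Callable[[bytes, str], bytes],
    fallback_instruction: str,
) -> Tuple[bytes, bool]:
    """Return (result, retried). Retries once if the first result is byte-identical."""
    result = edit_fn(image, instruction)
    if fingerprint(result) != fingerprint(image):
        return result, False
    # The model returned the same bytes: retry with a more distinct instruction.
    return edit_fn(image, fallback_instruction), True


def stub_edit(image: bytes, instruction: str) -> bytes:
    """Hypothetical stand-in that ignores short (too subtle) instructions."""
    if len(instruction) < 30:
        return image
    return image + b"+edited"


result, retried = edit_with_retry(
    b"img",
    "brighten slightly",
    stub_edit,
    "Increase overall brightness so the walls look noticeably lighter",
)
```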

More Than the Sum of its Parts: Multi-Image Fusion & Style Transfer

Gemini 2.5 Flash Image introduces powerful capabilities for blending and remixing visual concepts. Its multi-image fusion feature allows users to upload and combine up to three separate images into a new, seamless visual. For instance, a marketer could take a photo of a new product, an image of a scenic background, and a picture of a brand ambassador, and then prompt the AI to merge them into a single, cohesive advertisement. This moves beyond simple background replacement to a more intelligent blending of elements, lighting, and perspective.

Complementing this is a sophisticated style transfer capability. The model can isolate the aesthetic of one image—its color palette, texture, or artistic style—and apply it to an object in another. A fashion designer could, for example, take the intricate pattern from a butterfly's wings and apply it to a dress, or apply the texture of flower petals to a pair of rain boots, generating instant, novel design concepts. For creative teams, these features are powerful tools for brainstorming, prototyping, and creating unique composite visuals that would traditionally require hours of skilled work in software like Adobe Photoshop.

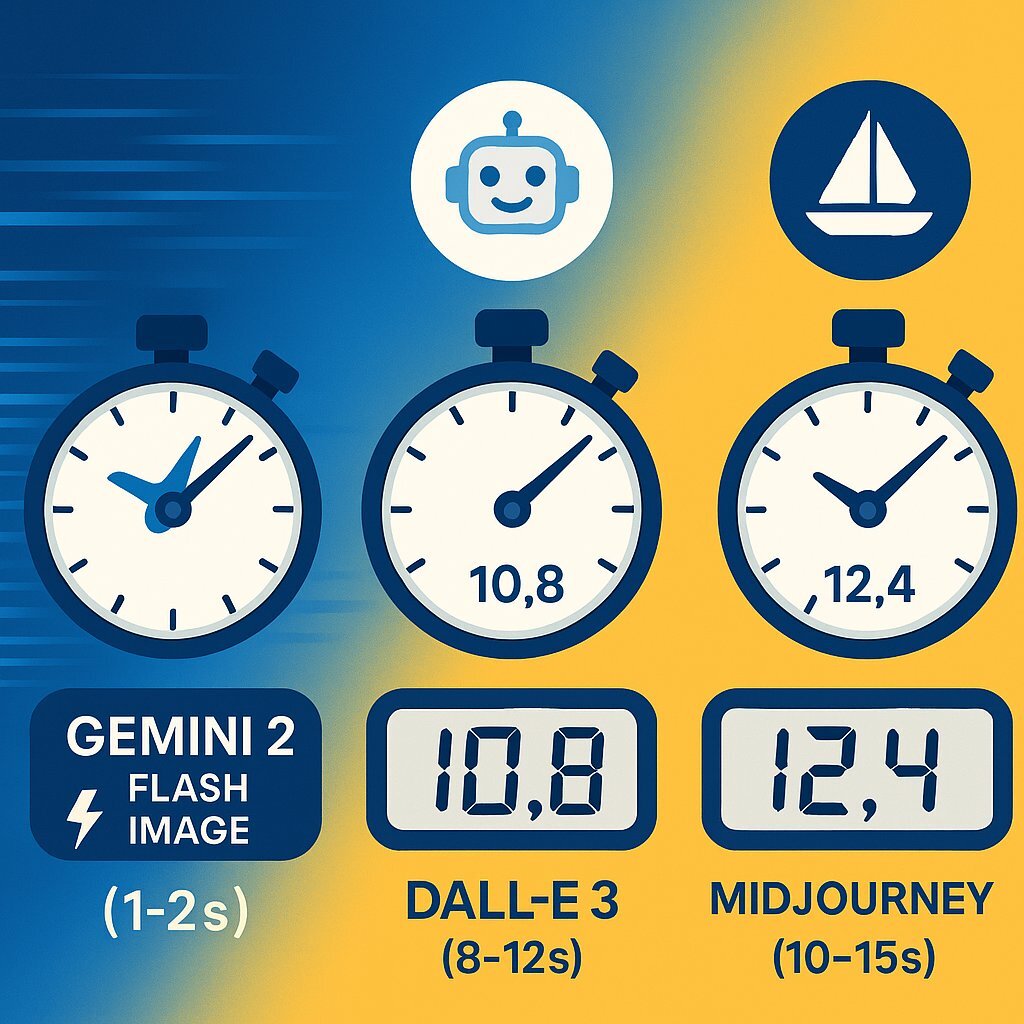

The "Flash" Advantage: Speed, Efficiency, and Real-Time Workflows

The "Flash" in the model's name is not just marketing; it signifies a core design principle of speed and efficiency, engineered for the kind of rapid, interactive workflows that businesses require. While competing models can take anywhere from 8 to 15 seconds to generate a high-quality image, Gemini 2.5 Flash Image delivers results in an average of 1-2 seconds.

This dramatic reduction in latency transforms the user experience from a passive waiting game into a real-time creative session. This speed is a direct result of its advanced technical architecture. The model is a sparse Mixture-of-Experts (MoE) model, which allows it to activate only a relevant subset of its parameters for any given task, making it highly efficient. This software architecture runs on Google's custom-designed Tensor Processing Units (TPUs), hardware specifically optimized for the massive computational demands of training and running large-scale AI models. This synergy of hardware and software is what enables the near-instantaneous generation that makes conversational, multi-turn editing a fluid and productive process.
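To make the "activate only a relevant subset" idea concrete, here is a toy top-k routing sketch in plain Python. It is a generic illustration of sparse Mixture-of-Experts routing, not a description of Google's implementation: the experts are simple functions, and the gate scores are fixed inputs rather than the output of a learned router.

```python
import math
from typing import Callable, List, Sequence, Tuple


def softmax(xs: Sequence[float]) -> List[float]:
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(gate_logits: Sequence[float], k: int) -> List[Tuple[int, float]]:
    """Pick the k highest-scoring experts and renormalize their weights to sum to 1."""
    top = sorted(range(len(gate_logits)), key=lambda i: gate_logits[i], reverse=True)[:k]
    weights = softmax([gate_logits[i] for i in top])
    return list(zip(top, weights))


def moe_forward(
    x: float,
    experts: Sequence[Callable[[float], float]],
    gate_logits: Sequence[float],
    k: int = 2,
) -> float:
    # Only the k selected experts are evaluated; the rest are skipped entirely.
    return sum(w * experts[i](x) for i, w in top_k_route(gate_logits, k))


experts = [lambda x: x + 1, lambda x: x * 2, lambda x: x ** 2, lambda x: -x]
gate_logits = [0.1, 2.0, 2.0, -1.0]  # toy scores; experts 1 and 2 win
output = moe_forward(3.0, experts, gate_logits, k=2)
```

With four experts and `k=2`, only half of the expert functions run for this input, which is the source of the efficiency gain the paragraph describes.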

The Competitive Landscape: How Nano Banana Stacks Up

The release of Gemini 2.5 Flash Image has directly challenged the established leaders in the high-quality AI image generation market, primarily OpenAI's DALL-E 3 (most commonly accessed via ChatGPT) and the independent powerhouse, Midjourney. While each model possesses unique strengths, Gemini's focus on solving core operational pain points for businesses—consistency and iterative control—has carved out a distinct and powerful position.

An analysis of the competitive landscape reveals not a single "best" model, but rather a market that is segmenting to serve different user needs and workflows. Midjourney continues to excel as a tool for artists and creatives seeking stunning, stylized, and often serendipitous visual output. DALL-E 3 remains a highly accessible and reliable utility for generating literal interpretations of prompts quickly. Gemini 2.5 Flash Image, however, is optimized for scalable, repeatable, and controlled business operations.

The choice of tool is therefore becoming a strategic decision based on the specific business function. A creative agency might use Midjourney for a high-concept brand launch, DALL-E 3 for rapid-fire social media content, and Gemini 2.5 Flash Image to execute a large-scale e-commerce product catalog update that demands absolute consistency. Understanding these distinctions is key for any executive looking to build a modern, efficient creative technology stack. If you want to deepen your understanding of the underlying terminology and best practices shaping Agile, DevOps, and CI/CD in this new era of creative automation, check out our executive guide to Agile, DevOps, CI/CD, & business software jargon.

The following table provides a data-driven comparison of the three leading models across the metrics most relevant to business users, based on performance benchmarks and expert analysis.

| Feature / Metric | Google Gemini 2.5 Flash Image (Nano Banana) | OpenAI DALL-E 3 (via ChatGPT) | Midjourney v6 |
|---|---|---|---|
| Primary Strength | Conversational Editing & Character Consistency | Prompt Adherence & Ease of Access | Artistic Style & Creative Output |
| Character Consistency | ~95% (Game-changing reliability for branding) | ~65% (Noticeable variations) | ~70% (Better, but still inconsistent) |
| Average Speed | 1-2 seconds (Enables real-time workflows) | 8-12 seconds | 10-15 seconds |
| Editing Capability | Excellent: Multi-turn conversational editing, multi-image fusion, inpainting. | Good: Inpainting within ChatGPT, but less interactive. | Very Good: Vary Region, zoom, pan, remixing tools. |
| Prompt Interpretation | Excellent: Understands narrative and complex natural language commands. | Very Good: Highly literal and follows instructions precisely. | Good: More artistic and interpretive, can add its own creative spin. |
| Access / Interface | Gemini App, Google AI Studio, Vertex AI, API | Integrated into ChatGPT Plus, Microsoft Copilot | Web interface and Discord |
| Cost (API / Base Plan) | ~$0.039 per image (via API) | Included in ChatGPT Plus subscription (~$20/mo) | Starts at $10/month subscription |
| Best For... | Marketing, E-commerce, Product Design, Storytelling | Quick, literal visuals, concept generation, integrated text/image chat | High-concept art, mood boards, cinematic and stylized visuals |

From Concept to Conversion: Practical Business Applications Across Industries

The true value of any new technology is measured by its ability to solve real-world problems and create tangible business value. Gemini 2.5 Flash Image's advanced capabilities translate directly into powerful applications across a wide range of industries, empowering teams to work faster, more creatively, and more efficiently. If you want a practical perspective on how digital transformation can supercharge marketing, product engagement, and patient experience, look into our guide on how custom digital platforms drive ROI and engagement—you’ll see AI is just one piece of a bigger puzzle.

Marketing & Advertising: The End of the Creative Bottleneck

For marketing and advertising teams, the model is a force multiplier. The ability to generate hyper-personalized ad creatives at scale is a primary use case. A team can create a core visual and then instantly generate dozens of variations tailored to different audience segments, platforms, or A/B tests—a process that would traditionally take days or weeks. The model's character consistency ensures that brand mascots or spokespeople remain uniform across every iteration of a campaign. This operational efficiency is why major industry players like advertising giant WPP and creative software leader Adobe have already begun integrating Gemini 2.5 Flash Image into their enterprise platforms, validating its readiness for high-stakes professional workflows. For a Marketing Director, this means drastically reducing campaign time-to-market and the high costs associated with photography and graphic design, while empowering their teams to iterate on creative concepts in real time.

E-commerce & Retail: A Revolution in Product Visualization

The e-commerce sector stands to benefit enormously from the model's consistency and editing prowess. A standout application is dynamic product photography. A retailer can take a single, clean shot of a product and use Gemini 2.5 Flash Image to place it into hundreds of different lifestyle scenes, backgrounds, or contexts, creating a rich and diverse set of visuals for product pages and social media without the expense of a physical photoshoot. Furthermore, its multi-image fusion capability can power more realistic virtual try-on experiences, allowing a customer to upload a photo of themselves and see how a piece of clothing might look. For a Head of Sales, this translates directly to higher conversion rates through more engaging, personalized, and high-quality product visuals, all while significantly reducing the logistical complexity and cost of traditional product photography.

Real Estate & Interior Design: Selling the Vision, Instantly

In real estate, selling a property is often about selling a vision. Gemini 2.5 Flash Image is a powerful tool for bringing that vision to life. Agents can use it for virtual staging, transforming photos of empty rooms into fully furnished, appealing living spaces. They can also show a single property in different conditions—visualizing it during different seasons, with different paint colors, or with various landscaping options—to help potential buyers connect emotionally with the space. The model's ability to work with visual templates also allows for the rapid creation of uniform, high-quality listing cards for an entire portfolio of properties. This empowers real estate professionals to market properties more effectively and accelerate the sales cycle by providing clients with compelling, customized visualizations. To explore how the right AI tools can make or break efficiency in these high-stakes business environments, see our deep dive on xAI Grok Code Fast 1 and its impact on rapid industry transformation.

Gaming & Software Development: Accelerating the Creative Pipeline

For CTOs and development leads in the gaming and software industries, the model can dramatically accelerate the creative pipeline. Development teams can use it for the rapid generation of concept art for characters, environments, and in-game assets, allowing for more creative exploration in the early stages of a project. The character consistency feature is invaluable for creating detailed character sheets and ensuring that a character looks the same in promotional materials as they do in the game itself. It can also be used to quickly design and iterate on UI/UX elements, icons, and other graphical assets.

For technical leaders, the real power lies in integration. The Gemini API allows these capabilities to be embedded directly into custom development workflows. For example, integrating the API into an enterprise application management and DevOps pipeline can automate the generation of placeholder assets or UI mockups, streamlining the entire application management process. A partner with expertise in agile deployment, such as Baytech Consulting, can help teams build these integrations quickly, turning a powerful standalone tool into a seamless and automated part of their software development lifecycle.

Putting Nano Banana to Work: A Strategic Guide for Businesses

Adopting a new technology effectively requires a clear understanding of how to access it, how to use it, and how to govern it. This section provides a strategic guide for business leaders looking to implement Gemini 2.5 Flash Image within their organizations.

How to Access Gemini 2.5 Flash Image & What it Costs

Google has made the model accessible through several channels, catering to different needs from individual experimentation to enterprise-scale deployment:

- For Individuals & Experimentation: The most straightforward way to test the model's capabilities is through the Gemini App (available on web and mobile), which provides a user-friendly interface for generating and editing images.

- For Developers & Prototyping: Google AI Studio offers a web-based environment for developers to prototype with the model, test prompts, and even build simple AI-powered applications without extensive coding.

- For Enterprise & Integration: For production use cases, the model is available through the Gemini API and Google's Vertex AI platform. These channels provide the tools needed to integrate the model's capabilities into custom applications, internal workflows, and enterprise-grade systems. Want to see how modern cloud-native architectures are changing the game for enterprises? Dive into our executive blueprint for business agility in cloud-native adoption.

The pricing model is transparent and based on usage, which is particularly appealing for CFOs and budget managers. When accessed via the API, the cost is $30 per 1 million output tokens. A typical image generation or editing task consumes approximately 1,290 tokens, which translates to a predictable cost of about $0.039 per image. This clear, per-unit cost allows for easy comparison against existing creative expenditures, such as stock photo subscriptions or freelance designer fees.
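The per-image figure follows directly from the two numbers quoted above, and the same arithmetic can be reused for budgeting. The short sketch below uses only the article's figures ($30 per 1 million output tokens, roughly 1,290 tokens per image); the function names and the example volumes are illustrative assumptions.

```python
import math

PRICE_PER_MILLION_OUTPUT_TOKENS_USD = 30.0  # figure quoted in the article
TOKENS_PER_IMAGE = 1290                     # approximate figure quoted in the article


def cost_per_image(
    tokens_per_image: int = TOKENS_PER_IMAGE,
    price_per_million: float = PRICE_PER_MILLION_OUTPUT_TOKENS_USD,
) -> float:
    """Cost in USD of one generated or edited image."""
    return tokens_per_image * price_per_million / 1_000_000


def monthly_cost(images_per_month: int) -> float:
    """Projected monthly API spend in USD for a given image volume."""
    return images_per_month * cost_per_image()


def images_for_budget(budget_usd: float) -> int:
    """How many whole images a fixed budget covers at the per-image rate."""
    return math.floor(budget_usd / cost_per_image())


per_image = cost_per_image()        # 1,290 * 30 / 1,000,000 = 0.0387, i.e. about $0.039
catalog_run = monthly_cost(10_000)  # hypothetical 10,000-image catalog refresh
```

A finance team could swap in its own monthly volume or an existing line item (for example, a stock photo subscription) to see where per-image pricing comes out ahead.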

Prompting 101 for Leaders: Guiding Your Team to Success

While the model is powerful, the quality of the output is still heavily influenced by the quality of the input. Leaders can guide their teams to achieve superior results by promoting a few key principles of effective prompting:

- The Golden Rule: Describe the Scene, Don't Just List Keywords. The model's greatest strength is its deep understanding of natural language. A narrative, descriptive paragraph will almost always produce a more coherent and higher-quality image than a simple list of disconnected words like "dog, park, sun, happy." Encourage teams to write prompts that tell a story.

- Think Like a Photographer for Realism. To achieve photorealistic results, prompts should include photographic terminology. Mentioning camera angles ("close-up portrait"), lens types ("captured with an 85mm lens for soft background bokeh"), lighting conditions ("illuminated by soft golden hour light"), and mood ("the overall mood is serene and masterful") will guide the model toward a more professional and realistic output. For CTOs intrigued by how prompt engineering and efficient AI tool usage tie into real business outcomes, our article on AI agents and automation in software development is a must-read.

- Be Explicit for Business Assets. When creating assets like icons, logos, or stickers for use in other materials, it is crucial to be explicit. Prompts should specify the style ("a kawaii-style sticker") and, most importantly, include the phrase "The background must be white" to ensure the asset is generated on a clean, transparent-ready background for easy integration.
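Teams that generate many prompts may find it useful to turn these principles into small templates so that every prompt is descriptive by default. The helpers below are a hypothetical sketch (the function names and the sentence structure are assumptions, not an official prompt format); they assemble a narrative photo prompt from photographic details and an asset prompt that always ends with the explicit white-background instruction.

```python
def build_photo_prompt(scene: str, shot: str, lens: str, lighting: str, mood: str) -> str:
    """Compose a narrative, photography-style prompt instead of a keyword list."""
    return (
        f"A photorealistic {shot} of {scene}, captured with {lens}, "
        f"illuminated by {lighting}. The overall mood is {mood}."
    )


def build_asset_prompt(style_phrase: str, subject: str) -> str:
    """Compose a prompt for icons, logos, or stickers with an explicit background rule."""
    return f"{style_phrase} of {subject}. The background must be white."


photo_prompt = build_photo_prompt(
    scene="a golden retriever resting in a sunlit park",
    shot="close-up portrait",
    lens="an 85mm lens for soft background bokeh",
    lighting="soft golden hour light",
    mood="serene",
)
sticker_prompt = build_asset_prompt("A kawaii-style sticker", "a smiling coffee cup")
```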

Governance and Responsible AI: Building with Confidence

With great power comes great responsibility. As businesses adopt AI image generation, establishing strong governance and ethical guidelines is paramount. Google has built trust and safety features directly into the model. All images created or edited with Gemini 2.5 Flash Image include an invisible SynthID digital watermark, an imperceptible signal embedded in the image itself that is designed to survive common image manipulations and allows the content to be identified as AI-generated. Images generated in the public Gemini app also include a visible watermark.

Beyond these built-in features, businesses must address the key ethical considerations of using this technology. These include navigating the ambiguous legal landscape around copyright for AI-generated works, actively monitoring for and mitigating bias that may be inherited from the model's training data, and preventing the creation of misleading or deceptive content that could harm the brand's reputation. For a strategic framework on AI governance and protecting your digital assets, see our executive guide to AI Governance, Asset Management, and risk.

The strategic imperative for leaders is to be proactive. This involves creating clear internal policies for the acceptable use of AI image tools, establishing a review process to ensure generated content aligns with brand values and is free from bias, and maintaining transparency with customers and stakeholders about the use of AI-generated visuals.

Ultimately, the return on investment from this technology is shifting from a simple cost-cutting exercise to a more profound opportunity for value creation. While initial use cases focused on replacing stock photography or reducing designer hours, the true potential of Gemini 2.5 Flash Image lies in its ability to unlock new revenue-generating activities. Hyper-personalizing marketing at scale, rapidly A/B testing visual strategies, and creating entirely new forms of engaging content were previously infeasible due to time and cost constraints. This technology removes those barriers, turning AI image generation from a simple efficiency tool into a strategic engine for growth, innovation, and deeper customer engagement. Want to avoid the trap of over-configuring your software and increasing long-term costs? Check out our insights on configuration complexity and balancing flexibility with maintainability.

Conclusion: The Bottom Line and Your Next Move

Google's Gemini 2.5 Flash Image, aka "Nano Banana," is more than just an incremental update in the AI arms race. Its arrival marks a pivotal moment where generative AI for images has matured from a fascinating novelty into a practical, scalable, and indispensable business tool. By solving the critical, long-standing challenges of character consistency and iterative editing, Google has delivered a model uniquely suited for the operational demands of modern enterprises.

The key takeaway for business leaders is that this technology's unique combination of consistency, conversational control, and real-time speed makes it a formidable asset for any organization that relies on visual content to market products, communicate with customers, or develop new ideas. It democratizes high-level creative capabilities, empowering teams across the organization to produce professional-quality visuals with unprecedented efficiency.

To capitalize on this opportunity, executives should consider the following actionable next steps:

- Encourage Experimentation. The best way to understand the model's potential is to use it. Task a marketing, design, or product team with a small pilot project using the free versions available in the Gemini App or Google AI Studio. This hands-on experience will provide invaluable insights into its capabilities.

- Identify a High-Impact Use Case. Analyze current creative workflows and pinpoint one key business process—such as product photography for e-commerce, ad creative generation, or concept art for a new application—where this technology could deliver the most significant and immediate return on investment.

- Explore API Integration. For CTOs and technology leaders, the strategic endgame is integration. Begin evaluating the Gemini API and consider how its capabilities could be embedded into proprietary systems to automate and scale the high-impact use case identified in step two. To accelerate this process, consider engaging a technology partner with proven expertise in DevOps efficiency, agile deployment, and custom software development to design and implement a solution that maximizes business value.

The era of AI as a creative co-pilot has arrived. The organizations that move decisively to integrate these new capabilities into their strategic and operational fabric will be the ones to define the next generation of visual communication and customer engagement.


About Baytech

At Baytech Consulting, we specialize in guiding businesses through this process, helping you build scalable, efficient, and high-performing software that evolves with your needs. Our MVP-first approach helps our clients minimize upfront costs and maximize ROI. Ready to take the next step in your software development journey? Contact us today to learn how we can help you achieve your goals with a phased development approach.

About the Author

Bryan Reynolds is an accomplished technology executive with more than 25 years of experience leading innovation in the software industry. As the CEO and founder of Baytech Consulting, he has built a reputation for delivering custom software solutions that help businesses streamline operations, enhance customer experiences, and drive growth.

Bryan’s expertise spans custom software development, cloud infrastructure, artificial intelligence, and strategic business consulting, making him a trusted advisor and thought leader across a wide range of industries.