Cloud-Native Architecture: The Executive Blueprint for Business Agility

September 03, 2025 / Bryan Reynolds

The Executive's Guide to Cloud-Native Architecture: Beyond the Hype to Business Reality

Introduction: The Agility Gap - Is Your Technology Holding Your Business Back?

Imagine this scenario: a nimble competitor launches a game-changing new feature and captures market share in a matter of weeks. Meanwhile, your own product roadmap is measured in quarters, constrained by a rigid, slow-moving technology infrastructure. This isn't a failure of your engineering team; it's a symptom of a deeper, more strategic problem: the "Agility Gap." This gap, the chasm between the relentless pace of market demands and your organization's ability to respond, is one of the greatest threats to established businesses today. It's a business velocity problem, and its roots lie in how your digital engine is built.

For years, technology has been treated as a cost center, a complex system to be managed and maintained. But in the modern digital economy, technology is the business. The ability to innovate, adapt, and deliver value to customers at speed is paramount. This requires a fundamental shift in thinking, away from the monolithic, slow-release cycles of the past and toward a new model designed for the speed of the cloud.

This new model is called "cloud-native." It's more than a technical buzzword; it's a strategic business framework for building and running applications that are engineered to close the Agility Gap. It's about re-architecting your company's core capabilities for speed, resilience, and unprecedented scale.

Navigating this transformation is a significant undertaking, filled with complex technical, financial, and organizational questions. This guide, brought to you by the experts at Baytech Consulting, is designed to cut through the noise. We will provide the clear, business-focused answers that executives need to make informed, strategic decisions about this critical journey. We will address the questions you are—and should be—asking, from the fundamental "what is it?" to the crucial "what's the real cost?" and "why can't we just do this ourselves?"

1. "So, What Exactly Is a Cloud-Native Architecture in Plain English?"

One of the most common misconceptions among business leaders is that "cloud-native" simply means running existing applications on a cloud provider's servers. This is a critical misunderstanding. Moving your old systems to the cloud without changing their architecture is like putting a horse-drawn carriage on a highway—you're in a new environment, but you're not equipped to take advantage of its speed.

Core Concept: It's an Approach, Not a Place

Cloud-native is not a destination; it's a modern approach to building and running software applications that fully leverages the unique advantages of the cloud computing delivery model. It's about designing systems from the ground up to be flexible, scalable, and resilient, embracing automation and the dynamic nature of cloud infrastructure. This approach contrasts sharply with traditional, on-premises infrastructure, which operates under a very different set of constraints.

The Monolith vs. Microservices Analogy: The LEGO® Castle

To grasp the strategic importance of this shift, consider an analogy. For decades, most business applications have been built as "monoliths."

- The Monolith (The Pre-Built Castle): Imagine a large, intricate castle model, molded from a single piece of plastic. It's impressive, but it's also rigid and brittle. If you want to change one of the towers, you have to be incredibly careful not to crack the entire structure. Testing any change is a slow, painstaking process because a small modification in one area could have unintended consequences anywhere else. Deploying an update means replacing the entire castle at once—a high-risk, all-or-nothing event. This is how traditional applications work: a single, massive codebase where all components are tightly coupled.

- Microservices (The LEGO® Castle): Now, imagine building that same castle with individual LEGO bricks. Each brick represents a small, independent service—a "microservice"—that performs a single business function. You might have a brick for 'user authentication,' another for 'payment processing,' and a third for 'product search'. Each brick is a self-contained unit. You can replace the 'payment' brick with an upgraded version, or add more 'search' bricks to handle increased traffic, all without disturbing the rest of the castle. This modularity is the architectural heart of cloud-native agility.

The Enabling Technologies (Explained for Executives)

This modular, microservices-based approach is made possible by a set of core technologies that work in concert to automate and manage complexity.

- Containers (The Standardized Shipping Box): A container is a standardized package that holds a microservice (a single LEGO brick) and everything it needs to run: its code, runtime, system libraries, and other dependencies. Think of it like a standardized shipping container. It doesn't matter what's inside; the container itself has standard dimensions and fittings, so it can be moved by any crane and placed on any ship. This largely solves the age-old problem of "it worked on my developer's machine but broke in production." Because the same container image runs on a laptop, in testing, and in live production, the service behaves consistently across environments, leading to far more reliable and predictable releases.

- Orchestration (The Port Authority): When your application consists of hundreds or even thousands of these container "ships," you need a sophisticated system to manage the logistics. This is where an orchestration platform like Kubernetes comes in. Kubernetes acts as the automated "port authority" for your entire application fleet. It decides which server "dock" to place each container in, automatically replaces any container that fails, monitors their health, and scales the number of containers up or down based on real-time demand. This powerful infrastructure automation is what allows cloud-native systems to be self-healing and dynamically scalable.

- CI/CD (The Automated Assembly Line): Continuous Integration and Continuous Delivery (CI/CD) is the automated factory assembly line that builds, tests, and deploys your software. In a traditional model, deploying new code is a manual, risky, and infrequent event. With a CI/CD pipeline, the moment a developer commits a change, an automated process kicks in. It builds the code, runs it through a battery of quality and security checks, packages it into a container, and deploys it to production—often without any human intervention. This automation transforms the release process from a months-long ordeal into a routine, low-risk event that can happen multiple times a day, enabling a pace of innovation that was previously unimaginable.
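To make the assembly-line idea concrete, here is a minimal Python sketch of a pipeline that runs its stages in order and stops at the first failure, so nothing is deployed unless every earlier check passed. The `Stage` and `run_pipeline` names and the stage list are hypothetical; real pipelines are defined in a CI/CD system's own configuration, not like this.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Stage:
    """One step on a hypothetical automated assembly line."""
    name: str
    action: Callable[[], bool]  # returns True when the step succeeds


def run_pipeline(stages: List[Stage]) -> Tuple[bool, List[str]]:
    """Run stages in order and stop at the first failure.

    Returns (released_to_production, names_of_completed_stages).
    """
    completed: List[str] = []
    for stage in stages:
        if not stage.action():
            # A failed check blocks every later stage, including deployment.
            return False, completed
        completed.append(stage.name)
    return True, completed


if __name__ == "__main__":
    pipeline = [
        Stage("build", lambda: True),
        Stage("unit-tests", lambda: True),
        Stage("security-scan", lambda: False),  # simulate a failed scan
        Stage("package-container", lambda: True),
        Stage("deploy-production", lambda: True),
    ]
    released, done = run_pipeline(pipeline)
    print(released, done)  # False ['build', 'unit-tests']
```

Because deployment is simply the last stage, a change that fails any earlier check never reaches production; that ordering is what lets frequent releases stay low-risk.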

The Cultural Shift from Projects to Products

Adopting these technologies is only half the story. The most profound change that cloud-native brings is a fundamental shift in the organization's operating model. It forces a move away from a traditional "project" mindset and toward a "product" mindset.

In the monolithic world, work is organized into projects with a beginning and an end. A development team builds a feature, "throws it over the wall" to a quality assurance team, who then hands it off to an operations team to run and maintain. This creates silos, diffuses responsibility, and slows everything down with handoffs and long "release trains" where everyone has to wait for the slowest component.

The cloud-native model shatters these silos. An application is organized around business capabilities, with small, autonomous teams owning their microservice from conception to retirement. The "inventory management" team, for example, is composed of developers, operations specialists, and security experts who are collectively responsible for the entire lifecycle of the inventory microservice. They are empowered to choose the best technologies for their specific job and can release updates independently, at their own pace, without waiting for or disrupting any other team.

This structure creates a direct line of sight between the engineering team and the business value they deliver. They are no longer just closing tickets in a project plan; they are continuously improving a living business product. This fosters a culture of ownership, accountability, and expertise that is the true engine of agility. This cultural transformation is not a side effect; it is a prerequisite for success, and it requires active sponsorship and understanding from the executive level.

2. "That's Interesting, But Why Should My Business Invest in This? What's the Real ROI?"

Understanding the "what" is important, but the executive imperative is to understand the "why." A cloud-native transformation is a significant investment of capital and focus. The justification must be grounded in tangible, measurable business outcomes that create a clear return on investment (ROI). The benefits are not just technical improvements; they are strategic advantages that directly impact revenue, risk, and competitive positioning.

Benefit 1: Accelerating Time-to-Market (Winning the Agility Race)

The single most compelling benefit of cloud-native architecture is speed. By breaking down monolithic applications into independent microservices, development teams can work in parallel without tripping over each other. The team working on a new recommendation engine can iterate and deploy daily, while the team managing the billing system maintains its own steady, stable release cadence. This parallelization of effort dramatically shortens development cycles.

When combined with the automated CI/CD pipeline, this architectural freedom becomes a strategic weapon. The ability to move an idea from concept to production in days or hours, rather than months or quarters, is a profound competitive advantage. It allows a business to respond to market changes, launch new products, and incorporate customer feedback at a velocity that legacy competitors simply cannot match. This isn't just about being faster; it's about creating a business that learns and adapts in real-time.

Benefit 2: Building Unbreakable Systems (Maximizing Uptime and Trust)

In a digital-first world, downtime is no longer a technical inconvenience; it is a direct loss of revenue and customer trust. Traditional monolithic applications have a critical vulnerability: a single point of failure. A bug in a minor, non-critical feature can bring the entire system crashing down.

Cloud-native systems are designed for failure. The modular nature of microservices isolates faults. If the 'product review' service encounters an error, it may temporarily become unavailable, but the core functions of browsing, searching, and purchasing remain fully operational. The blast radius of any single failure is contained, preserving the overall user experience.
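The fault-isolation idea can be sketched in a few lines of Python: the page treats product data as essential but reviews as optional, so an error from the review service degrades the page instead of breaking it. The function and exception names are hypothetical, and in practice this pattern is usually combined with timeouts and circuit breakers.

```python
from typing import Callable, Dict, List


class ServiceUnavailable(Exception):
    """Raised by a (hypothetical) service client when the service cannot respond."""


def render_product_page(
    product_id: str,
    get_product: Callable[[str], Dict],
    get_reviews: Callable[[str], List[str]],
) -> Dict:
    """Build a product page: product data is essential, reviews are optional."""
    # Core function: if the catalog itself fails, let the error propagate.
    page = {"product": get_product(product_id), "reviews": None, "notice": None}
    try:
        page["reviews"] = get_reviews(product_id)
    except ServiceUnavailable:
        # Contain the failure: render the page without reviews instead of erroring.
        page["notice"] = "Reviews are temporarily unavailable."
    return page


if __name__ == "__main__":
    def catalog(pid: str) -> Dict:
        return {"id": pid, "name": "Widget"}

    def broken_reviews(pid: str) -> List[str]:
        raise ServiceUnavailable("review service is down")

    page = render_product_page("sku-1", catalog, broken_reviews)
    print(page["product"]["name"], page["reviews"], page["notice"])
    # Widget None Reviews are temporarily unavailable.
```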

Furthermore, the Kubernetes orchestration layer provides powerful self-healing capabilities. It constantly monitors the health of every container in the system. If a container becomes unresponsive, Kubernetes automatically terminates it and spins up a healthy replacement, often resolving the issue before a human operator is even alerted. This shifts the operational posture from reactive firefighting to automated, proactive resilience, leading to significantly higher uptime and building the foundation of a reliable, trustworthy digital presence.
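Kubernetes achieves this through controllers that repeatedly compare the desired state (for example, "run three healthy copies of this service") with the observed state and act to close the difference. The Python below is a simplified, hypothetical model of that reconciliation idea, not Kubernetes code: it drops unhealthy replicas and starts replacements until the desired count is met.

```python
import itertools
from dataclasses import dataclass
from typing import List

_next_id = itertools.count(1)


@dataclass
class Replica:
    name: str
    healthy: bool = True


def start_replica(service: str) -> Replica:
    """Hypothetical stand-in for scheduling a fresh container on some server."""
    return Replica(name=f"{service}-{next(_next_id)}")


def reconcile(service: str, desired: int, running: List[Replica]) -> List[Replica]:
    """One pass of a simplified control loop that converges on `desired` healthy replicas."""
    # Drop (terminate) every replica that failed its health check.
    kept = [r for r in running if r.healthy]
    # Scale down if more replicas are running than desired.
    kept = kept[:desired]
    # Start replacements until the desired count is reached.
    while len(kept) < desired:
        kept.append(start_replica(service))
    return kept


if __name__ == "__main__":
    fleet = [Replica("checkout-a"), Replica("checkout-b", healthy=False), Replica("checkout-c")]
    fleet = reconcile("checkout", desired=3, running=fleet)
    print([r.name for r in fleet])  # ['checkout-a', 'checkout-c', 'checkout-1']
```

A real controller runs this comparison continuously, which is why the same mechanism that replaces a crashed container also restores lost capacity without a human stepping in.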

Benefit 3: Achieving True Scalability (Paying for What You Need, When You Need It)

Traditional infrastructure planning is a guessing game. Businesses must provision enough server capacity to handle their absolute peak demand—like a Black Friday sale—which means that for the other 364 days of the year, a huge portion of that expensive hardware sits idle and underutilized.

Cloud-native architecture largely eliminates this waste through automated, granular scalability. Instead of scaling an entire monolithic application, you can scale individual microservices based on their specific, real-time load. If a marketing campaign drives a massive spike in traffic to your product landing pages, Kubernetes can automatically add dozens of new containers for the 'product-catalog' service to handle the load. At the same time, back-end services like 'order-fulfillment' might remain at their normal capacity. Once the traffic spike subsides, the extra containers are automatically removed. This resource-optimized scaling keeps the computing power you use, and pay for, closely matched to actual demand instead of sized for peak load all year.
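Kubernetes' Horizontal Pod Autoscaler documents its core rule as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, skipping changes when the ratio is within a tolerance (0.1 by default). The Python below is a simplified sketch of that rule; it omits parts of the real controller such as handling of not-yet-ready pods, missing metrics, and scale-down stabilization windows, and the replica counts, CPU readings, and the cap of 50 are hypothetical.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 50,   # hypothetical cap; set per service
    tolerance: float = 0.1,   # Kubernetes' default tolerance
) -> int:
    """Simplified HPA rule: ceil(current_replicas * current_metric / target_metric)."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: don't churn
    desired = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, desired))


# Hypothetical readings, with a target of 60% average CPU per replica.
print(desired_replicas(4, 180, 60))   # 12: traffic spike on 'product-catalog'
print(desired_replicas(3, 62, 60))    # 3: 'order-fulfillment' stays put
print(desired_replicas(12, 10, 60))   # 2: spike over, scale back down
```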

Benefit 4: Optimizing Costs and Resources (Shifting from CapEx to Value-Driven OpEx)

The financial model of cloud-native is fundamentally different from, and more advantageous than, traditional IT. It represents a strategic shift from large, infrequent Capital Expenditures (CapEx) on physical hardware to usage-based Operational Expenditures (OpEx) that rise and fall with demand. This frees up capital, improves cash flow, and allows for greater financial flexibility.

However, the most significant cost optimization is not in hardware savings, but in the productivity of your most valuable and expensive resource: your engineering talent. By automating away the manual toil of infrastructure management, patching, and deployments, cloud-native platforms free developers to focus on what they do best: writing code that creates business value. When engineers can spend their time innovating on new features rather than managing servers, their productivity skyrockets, and the entire business accelerates.

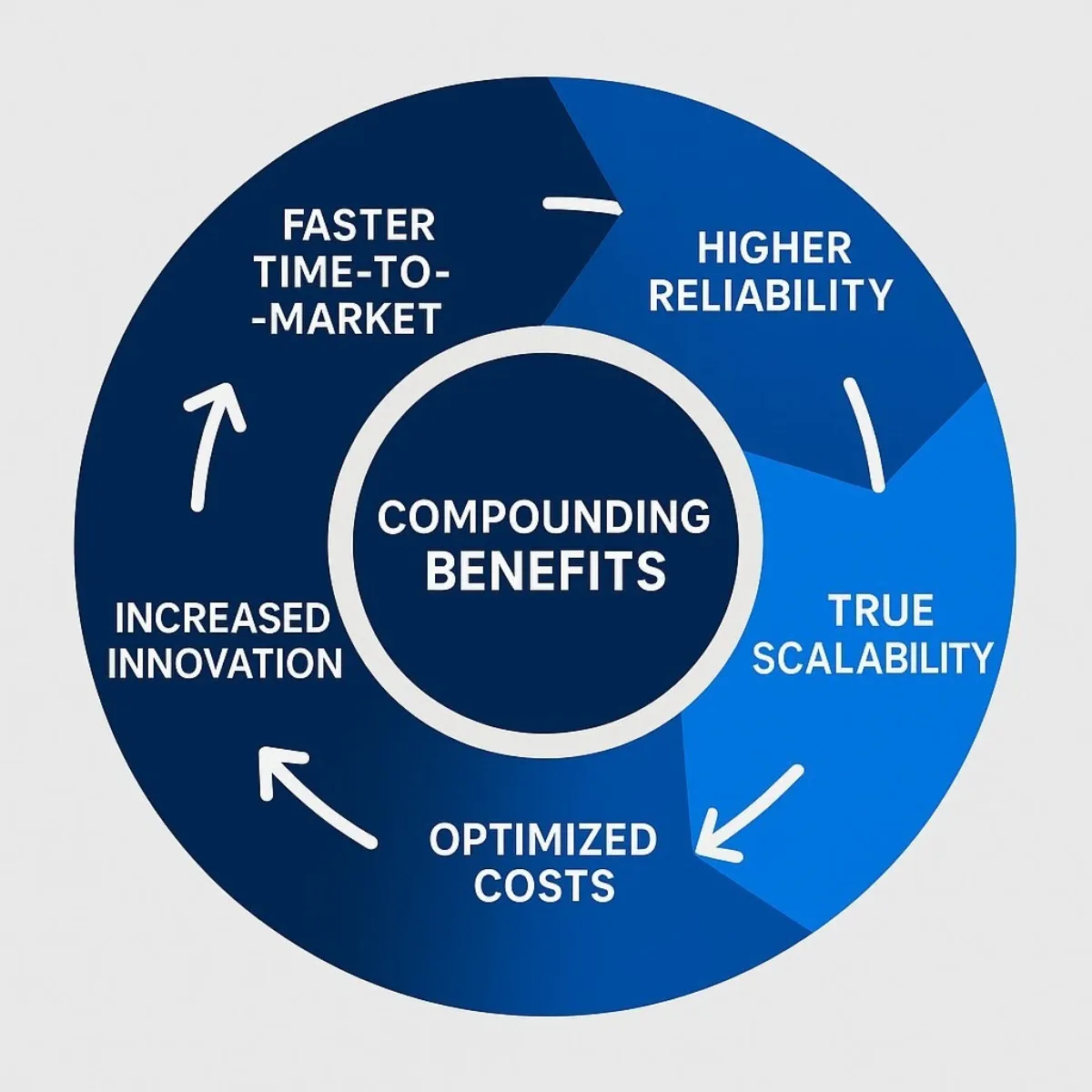

The Compounding Value Chain of Cloud-Native Benefits

It is a mistake to view these benefits in isolation as a simple checklist. They are deeply interconnected and create a powerful, self-reinforcing virtuous cycle: a flywheel for business growth.

The analysis begins with the understanding that each benefit enables the next. The accelerated time-to-market allows for the rapid and continuous iteration that is essential for building a superior customer experience. A business cannot claim to be customer-centric if it takes six months to respond to feedback or fix a critical bug. The high reliability and uptime of these systems form the bedrock of trust upon which that customer experience is built; frequent outages quickly erode customer loyalty, no matter how good the features are. True, granular scalability ensures that the experience remains fast and responsive even during unpredictable demand spikes, preventing lost sales and customer frustration.

This superior, reliable, and scalable customer experience directly drives key business metrics: higher conversion rates, increased customer retention, and greater lifetime value. The financial gains from these improvements, combined with the cost optimizations from the pay-as-you-go model and increased developer productivity, free up capital and resources. This capital can then be reinvested back into the engineering teams to fund further innovation, which starts the cycle all over again at an even higher velocity. This is the compounding value chain of cloud-native: it's not just an IT project, but a strategic investment that transforms the technology organization from a cost center into a powerful engine for sustainable business growth.

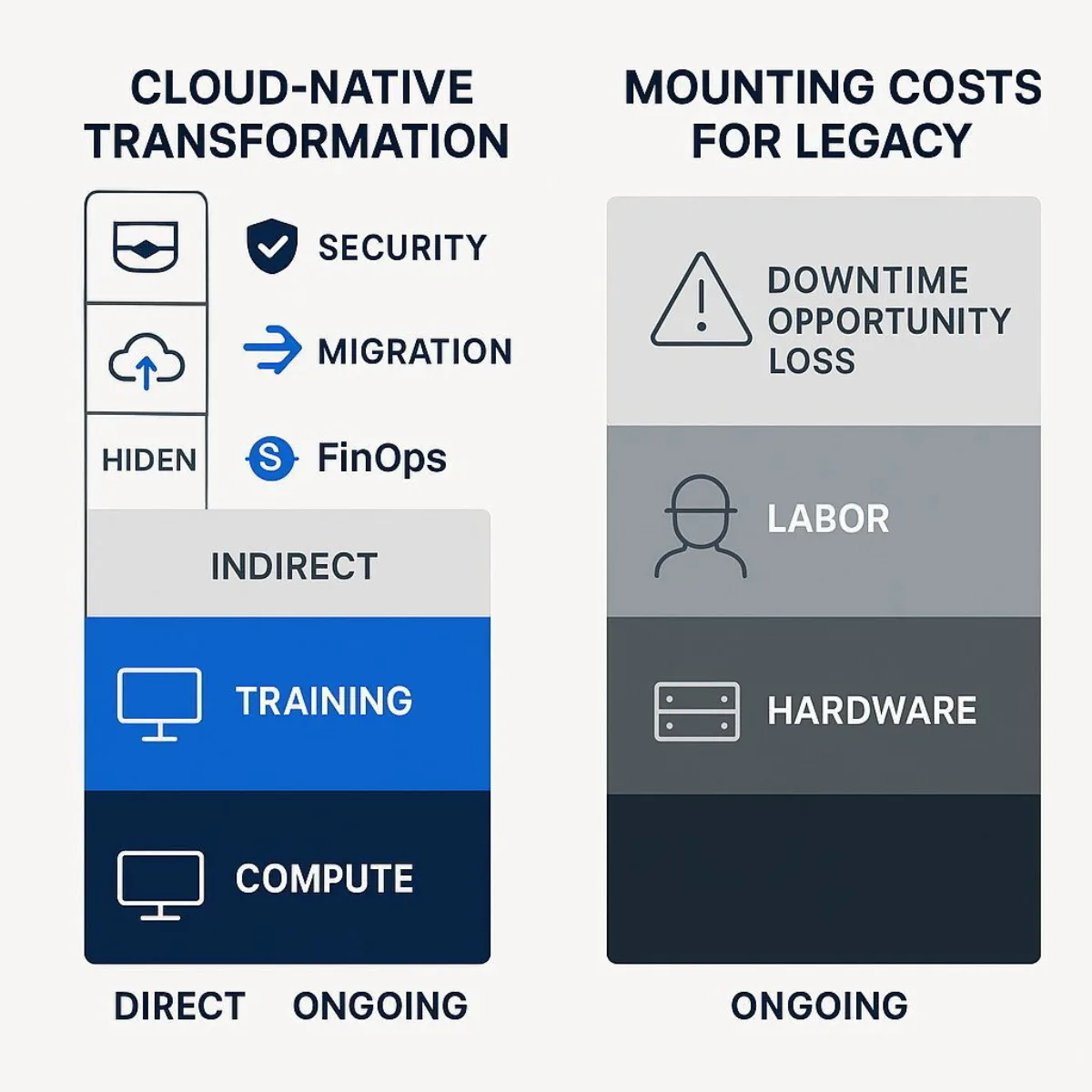

3. "This Sounds Complex and Expensive. What Are the Real Costs I Need to Consider?"

The benefits of a cloud-native architecture are compelling, but a responsible executive must ask the hard questions about cost. A move of this magnitude is not trivial, and the price tag extends far beyond the monthly cloud provider bill. A simplistic comparison of a server invoice to a cloud subscription is a recipe for financial surprise and strategic failure.

Introducing Total Cost of Ownership (TCO): The Only Metric That Matters

To make a sound financial decision, the analysis must encompass the Total Cost of Ownership (TCO) over a multi-year horizon, typically three to five years. TCO provides a comprehensive financial model that accounts for all direct, indirect, visible, and hidden costs associated with both maintaining your current environment and transitioning to a new one.

This is not a simple accounting exercise. Building a credible TCO model requires a deep understanding of both technology and finance. It's a critical strategic planning phase where a partner like Baytech Consulting can provide invaluable expertise, helping your organization move beyond sticker-price comparisons to a clear-eyed analysis of the long-term strategic investment.

Deconstructing the TCO of a Cloud-Native Transformation

A comprehensive TCO model for a cloud-native transformation includes several distinct cost categories.

- Direct Cloud Costs (The Visible Part): These are the line items that appear on your monthly bill from providers like AWS, Azure, or Google Cloud. They are the most visible but are often only a fraction of the total cost. This category includes:

  - Compute Costs: The virtual machines or serverless functions that run your containers.

  - Storage Costs: The persistent disks, object storage, and databases that hold your application data.

  - Data Transfer Costs: Fees for moving data into, out of, and between cloud services and regions.

  - Managed Service Fees: Charges for services like managed Kubernetes, databases, and monitoring tools.

- Transitional & Indirect Costs (The Hidden Part): This is where most organizations underestimate the true cost. These are the one-time and ongoing expenses required to enable the transformation.

  - Migration Costs: This is the significant, one-time investment required to move from your current state to the cloud-native model. It includes the labor costs for strategic planning, data migration, and, most importantly, refactoring or rewriting applications to work effectively as microservices.

  - Personnel & Training Costs: This is arguably the largest and most frequently overlooked cost. Your existing team will need new skills to operate in a cloud-native world. This requires a substantial investment in training programs, certifications, and potentially hiring new talent with deep expertise in Kubernetes, cloud security, and DevOps practices.

  - Security & Compliance Tooling: The shared responsibility model in the cloud means that while the provider secures the underlying infrastructure, your organization is responsible for securing your applications and data on that infrastructure. This necessitates investment in a new class of cloud-native application protection platforms (CNAPPs) and security tools designed for dynamic, containerized environments.

  - FinOps & Governance: The dynamic, pay-as-you-go nature of the cloud requires a new financial discipline known as FinOps (Cloud Financial Management). This involves investing in tools and personnel dedicated to monitoring cloud usage, forecasting costs, optimizing spend, and preventing budget overruns, which can occur quickly in an unmanaged environment.
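A TCO model of this kind can start as a small spreadsheet-style calculation. The Python sketch below separates one-time transitional costs from recurring ones and totals them over three- and five-year horizons; the category names follow the list above, but every dollar figure is an invented placeholder, not a benchmark.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class CostModel:
    """Hypothetical TCO inputs in dollars, grouped like the categories above."""
    one_time: Dict[str, float] = field(default_factory=dict)
    annual_recurring: Dict[str, float] = field(default_factory=dict)

    def tco(self, years: int) -> float:
        """One-time costs plus `years` worth of recurring costs (no discounting)."""
        return sum(self.one_time.values()) + years * sum(self.annual_recurring.values())


# Placeholder figures for illustration only; replace with your own estimates.
cloud_native = CostModel(
    one_time={
        "migration_and_refactoring": 400_000,
        "initial_training_and_hiring": 80_000,
    },
    annual_recurring={
        "compute": 120_000,
        "storage": 30_000,
        "data_transfer": 20_000,
        "managed_service_fees": 25_000,
        "security_and_compliance_tooling": 40_000,
        "finops_and_governance": 35_000,
        "ongoing_training": 20_000,
    },
)

for horizon in (3, 5):
    print(horizon, cloud_native.tco(horizon))  # 3 1350000, then 5 1930000
```

Keeping one-time and recurring costs separate makes the effect of the horizon visible: the migration investment is spread over more years in a five-year view. A fuller model would also discount future cash flows.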

The TCO of Not Migrating (The True Cost of the Status Quo)

A complete TCO analysis must also honestly assess the full cost of doing nothing. The status quo is not free; it carries its own significant and often escalating costs.

- Hardware & Data Center Costs: The recurring capital expenditures for server refresh cycles, escalating software licensing and maintenance fees, and the operational costs of power, cooling, physical security, and data center real estate.

- Operational Labor: The fully loaded salaries of the IT staff dedicated to manual, low-value tasks like server provisioning, patching, troubleshooting hardware failures, and "keeping the lights on."

- The Cost of Downtime & Slow Performance: This is a critical and often unmeasured cost. Every hour of system downtime or degraded performance has a direct, quantifiable impact on the bottom line through lost revenue, decreased employee productivity, and potential SLA penalties.

- Opportunity Cost: The most significant, albeit intangible, cost of all. What is the financial impact of being six months slower to market than your competitors with a new product? What is the value of the market share lost to a more agile rival? This is the true cost of maintaining a slow, brittle infrastructure.
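The quantifiable parts of the status quo can be estimated with simple arithmetic. The Python sketch below adds hardware, licensing, operational labor, and expected downtime into an annual figure. Every input is a placeholder to replace with your own data, and opportunity cost is deliberately left out because it resists direct measurement.

```python
def annual_downtime_cost(
    downtime_hours: float,
    revenue_per_hour: float,
    productivity_loss_per_hour: float = 0.0,
    sla_penalties: float = 0.0,
) -> float:
    """Yearly cost of outages: lost revenue and productivity per hour, plus SLA penalties."""
    return downtime_hours * (revenue_per_hour + productivity_loss_per_hour) + sla_penalties


def status_quo_annual_cost(
    hardware_and_facilities: float,
    licensing_and_maintenance: float,
    operational_labor: float,
    downtime_cost: float,
) -> float:
    """Yearly cost of keeping the current environment; opportunity cost is left out."""
    return hardware_and_facilities + licensing_and_maintenance + operational_labor + downtime_cost


# Placeholder figures for illustration only.
downtime = annual_downtime_cost(12, 25_000, productivity_loss_per_hour=5_000, sla_penalties=50_000)
yearly = status_quo_annual_cost(200_000, 150_000, 300_000, downtime)
print(downtime, yearly, 5 * yearly)  # 410000 1060000 5300000
```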

Cloud TCO is an Investment in Business Agility, Not an IT Expense

When the TCO analysis is performed correctly, it reveals a profound strategic truth. The traditional, on-premises model is not a stable asset; it is a depreciating liability with high, often hidden, operational costs and significant business risk. Conversely, the cloud-native model should not be viewed as a simple IT expense to be minimized. It is a strategic investment that transforms the IT infrastructure from a cost center into a primary enabler of business value and agility.

The line items that appear as "costs" in the cloud TCO—such as personnel training and migration expenses—are, in fact, the very investments required to unlock the high-value benefits of developer productivity and business agility. The financial conversation must therefore shift. It is not about comparing the cost of old servers to the cost of new cloud services. It is about a strategic reallocation of the corporate budget: shifting funds away from the low-ROI activity of maintaining brittle legacy systems and toward the high-ROI activity of building a fast, flexible, and resilient platform for future growth. The question for the executive team is not "How do we cut our IT budget?" but rather, "How do we invest in the technology foundation that will accelerate our entire business?"

4. "Can't We Do This Cheaper? Why Not Just Rent Servers and Have Our Team Build It with Kubernetes?"

This is the most astute question an executive can ask. It cuts directly to the core of the build-versus-buy decision and forces a conversation about value, cost, and, most importantly, risk. The answer is not about which option appears cheaper on a monthly invoice, but which path delivers a superior Total Cost of Ownership and a more manageable risk profile for the business.

Defining the Paths

There are two primary ways to implement a Kubernetes-based, cloud-native platform:

- Path A: The Managed Path: This involves using a managed Kubernetes service from a major cloud provider, such as Amazon Elastic Kubernetes Service (EKS), Azure Kubernetes Service (AKS), or Google Kubernetes Engine (GKE). In this model, the cloud provider takes on the immense complexity of installing, managing, securing, and scaling the Kubernetes "control plane"—the system's brain—for you.

- Path B: The DIY Path: This involves renting raw computing power, typically in the form of bare metal servers from a hosting provider, and having your internal team install, configure, secure, and manage the entire open-source Kubernetes platform from scratch. This is effectively building your own private cloud.

The Deceptive Simplicity of the DIY Cost Model

On the surface, the DIY path can seem financially attractive. A powerful bare metal server might be rented for as little as $150 per month. In contrast, the managed path involves paying for the underlying cloud virtual machines plus a management fee for the Kubernetes control plane, which is typically around $0.10 per hour, or about $72 per cluster per month, for providers like AWS and Google. At first glance, the costs may seem comparable or even favor the DIY approach.

This is a dangerous illusion. This simple comparison ignores the single largest cost driver in any technology initiative: expert human labor. The true TCO of the DIY path is hidden in the massive, ongoing operational burden that the organization must assume.
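The gap between the visible and the true cost shows up in a back-of-the-envelope calculation. The Python sketch below uses placeholder inputs: six rented servers at the $150 figure above, and three engineers at an assumed fully loaded cost of $240,000 per year each. Substitute your own numbers.

```python
from typing import Dict


def diy_monthly_cost(
    servers: int,
    rent_per_server: float,
    engineers: int,
    loaded_cost_per_engineer_per_year: float,
) -> Dict[str, float]:
    """Split the DIY path's monthly cost into the visible invoice and the labor behind it."""
    visible = servers * rent_per_server
    labor = engineers * loaded_cost_per_engineer_per_year / 12
    return {"visible": visible, "labor": labor, "total": visible + labor}


# Placeholder inputs: six servers at $150 each, three engineers at an assumed $240k/year.
print(diy_monthly_cost(6, 150, 3, 240_000))
# {'visible': 900, 'labor': 60000.0, 'total': 60900.0}
```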

The Detailed Comparison: Managed vs. Self-Hosted Kubernetes

| Feature | Managed Kubernetes (e.g., AWS EKS, Azure AKS) | Self-Hosted Kubernetes (DIY on Rented Servers) |
|---|---|---|
| Upfront Cost | Low. Costs are pay-as-you-go for resources consumed, plus a small, predictable management fee. | Moderate to High. Includes server rental fees, but also the significant labor cost of the initial platform design, installation, and hardening. |
| Visible Monthly Cost | Transparent and predictable: compute and storage usage plus a flat management fee (e.g., ~$72 per cluster). | Deceptively low: the server rental fee (e.g., $150+ per server) is the only visible cost. |
| Hidden Monthly Cost | Minimal. The pricing models are designed for transparency. | Extremely High. This is the dominant cost factor. It includes the fully loaded salaries of a dedicated team of 3-5+ elite-level engineers specializing in Kubernetes, cloud networking, storage, and security. |
| Operational Overhead | Low. The cloud provider is responsible for the 24/7 management of the complex control plane, including security patching, version upgrades, availability, and scaling. | Extremely High. Your internal team is 100% responsible for everything. This includes constant monitoring, urgent security patching, complex version upgrades, disaster recovery planning and testing, and troubleshooting deep system-level issues. |
| Required Expertise | Moderate. Your team needs standard DevOps skills to build automation and deploy applications on the platform. | Elite-Level. This requires a team of scarce and expensive specialists with deep, nuanced expertise in Kubernetes internals, etcd management, Linux kernel tuning, software-defined networking, and distributed storage systems. |
| Time to Production | Fast (Weeks). The foundational platform is provisioned in minutes. Your team can immediately begin focusing on deploying your business applications. | Slow (Months, or longer). A significant amount of time, often 6 months or more, is spent just building, testing, and hardening the underlying platform before a single business application can be deployed. |
| Security & Compliance | Shared Responsibility Model. The provider secures the underlying platform and offers compliance attestations (e.g., PCI DSS) and HIPAA-eligible services that accelerate your compliance efforts. | 100% Your Responsibility. The risk of critical security misconfigurations in networking, access control, or container security is extremely high and falls entirely on your team. A single mistake can lead to a catastrophic breach. |
| Scalability & Reliability | Enterprise-grade. These services are designed for massive scale and high availability, backed by a formal Service Level Agreement (SLA) with financial service credits from the provider. | Entirely dependent on the quality of your team's architecture and their 24/7 operational vigilance. There is no external SLA; the business assumes all risk of downtime. |
| Focus of Your Team | Business Value. Your best and brightest engineers are focused on building and improving your company's revenue-generating applications. | Infrastructure Plumbing. Your best and brightest engineers are consumed with the complex, non-differentiating task of "keeping the lights on" for the Kubernetes platform itself. |
| True TCO | Predictable OpEx. For the vast majority of businesses, the TCO is significantly lower when factoring in the avoided costs of specialized labor, operational drag, and risk mitigation. | Deceptively low infrastructure cost, but a far higher TCO once the true costs of specialist salaries, lost productivity, delayed time-to-market, and the financial risk of downtime and security breaches are included. |

The Managed Fee is an Insurance Policy on Complexity and Risk

The ~$72 per month management fee is not a "cost" in the traditional sense. It is the price an organization pays to strategically transfer an enormous, complex, and high-stakes operational burden to a world-class expert—the cloud provider.

Consider the true cost of the alternative. A single senior Kubernetes engineer can command a salary well into six figures. A full team required to properly manage a production-grade DIY platform 24/7 can easily cost over a million dollars per year in fully loaded salary expenses. At roughly $864 per cluster per year (12 months at $72), that cost alone would pay the management fees for more than a thousand managed clusters.
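The arithmetic behind this comparison is short enough to check directly. Taking the approximately $72 per cluster per month fee and an assumed annual team cost of $1,000,000, the Python below computes how many clusters' management fees one year of that team would cover. The function name is hypothetical.

```python
MANAGED_FEE_PER_CLUSTER_PER_MONTH = 72  # approximate control-plane fee cited above


def clusters_covered(annual_team_cost: float,
                     monthly_fee: float = MANAGED_FEE_PER_CLUSTER_PER_MONTH) -> int:
    """How many clusters' yearly management fees one year of DIY team cost would pay for."""
    annual_fee_per_cluster = monthly_fee * 12
    return int(annual_team_cost // annual_fee_per_cluster)


print(clusters_covered(1_000_000))  # 1157
```

This compares only the control-plane fee; worker-node compute is paid on both paths and is left out here.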

Furthermore, the DIY path forces the business to internalize 100% of the operational risk. What is the cost to the business of a multi-day outage caused by a failed Kubernetes upgrade? What is the financial and reputational damage of a data breach stemming from a subtle network policy misconfiguration? These are not hypothetical scenarios; they are well-known failure modes in complex, self-managed environments.

The managed service fee is, therefore, best understood as an insurance policy. It is a fixed, predictable cost that offloads a variable, unpredictable, and potentially catastrophic set of risks and operational burdens. For a company whose core business is not selling Kubernetes platforms, the managed path typically provides a dramatically superior risk-adjusted ROI. It allows your most valuable people to stop worrying about undifferentiated infrastructure plumbing and focus exclusively on activities that create a competitive advantage for your business.

5. "We're Convinced. What Should We Be Doing Right Now to Get Started?"

Making the strategic decision to adopt a cloud-native architecture is a critical first step. The next is to translate that decision into a clear, actionable plan. A successful transition requires careful planning, executive sponsorship, and a phased, iterative approach that delivers value quickly while managing risk.

The Strategic Imperative: This is a Business Transformation, Not an IT Project

The single most common failure mode in cloud adoption is treating it as a technology project to be delegated solely to the IT department. A cloud-native transformation fundamentally changes how a business builds and delivers value. As such, it demands executive buy-in and deep, cross-functional alignment between business leadership, IT, and finance from day one. The goals, budget, and metrics for success must be defined and owned by the entire leadership team.

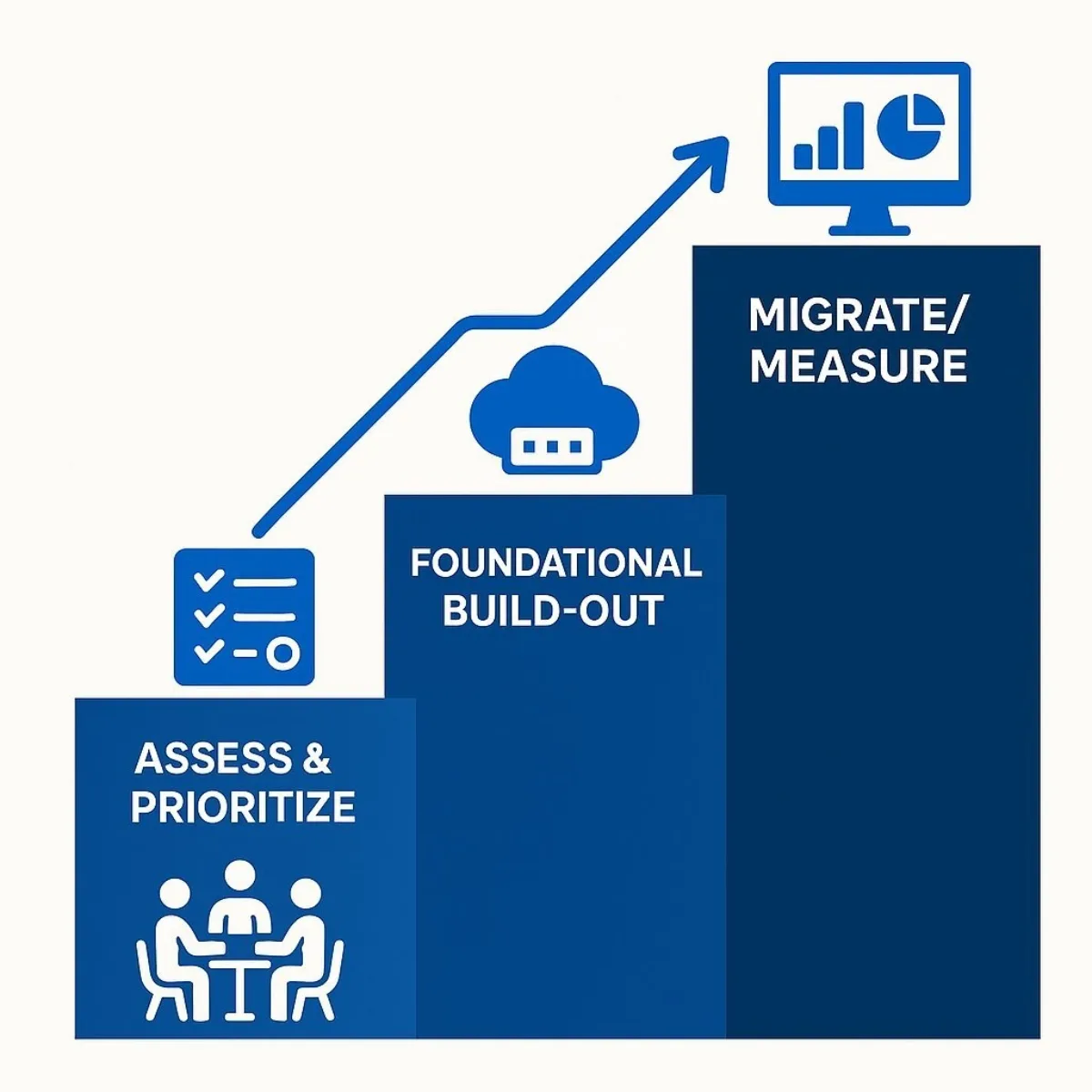

Baytech's Phased Approach to Cloud-Native Adoption

A structured, phased approach is essential to navigate the complexity of this journey. At Baytech Consulting, we guide our clients through a proven four-phase model designed to build momentum and ensure that technology investments are always tied to business outcomes.

Phase 1: Strategy & Alignment (The 'Why')

- Action: The journey begins not with technology, but with strategy. The first step is a dedicated workshop that brings together key business and technology leaders. The primary goal is to define the core business objectives driving the transformation. Are you aiming to reduce your product release cycle from six months to two weeks? Do you need to improve system uptime to meet the demands of a new enterprise client segment? Are you trying to lower the cost of customer acquisition by enabling faster feature experimentation?

- Output: A concise, documented cloud adoption strategy. This document is not a technical blueprint; it is a business plan that explicitly links specific technology initiatives to measurable business outcomes and Key Performance Indicators (KPIs).

Phase 2: Assess & Prioritize (The 'What' and 'Where')

- Action: With clear business goals established, the next step is to conduct a thorough assessment of your current application portfolio. Not all applications are created equal, and not everything needs to be rewritten as microservices immediately. Applications should be categorized based on their business impact, technical complexity, and readiness for the cloud.

- Identify a Pilot Project: The key to this phase is to select a single, ideal candidate for an initial pilot project. The best pilot is a workload that is strategically important and will demonstrate clear value, but is not so complex or mission-critical that it introduces excessive risk. A successful first project builds organizational momentum, provides invaluable hands-on learning, and creates a powerful success story.

- Output: A prioritized application migration roadmap that outlines the sequence and approach for modernizing your portfolio, along with a clearly defined scope and success criteria for the initial pilot project.
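To make the categorization step concrete, here is a minimal Python sketch of scoring a portfolio on business impact, technical complexity, and cloud readiness, then picking a pilot that is valuable but not mission-critical. The 1 to 5 scales, the weighting in `priority_score`, the `max_complexity` cutoff, and the application names are all hypothetical assumptions for illustration. A real assessment would weigh many more factors and involve human judgment.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class App:
    name: str
    business_impact: int    # 1 (low) to 5 (high)
    complexity: int         # 1 (simple) to 5 (very complex)
    cloud_readiness: int    # 1 (not ready) to 5 (ready to containerize)
    mission_critical: bool  # True if an outage would halt core revenue


def priority_score(app: App) -> int:
    """Hypothetical weighting: impact counts double, readiness helps, complexity hurts."""
    return 2 * app.business_impact + app.cloud_readiness - app.complexity


def prioritize(portfolio: List[App]) -> List[App]:
    """Order the portfolio from highest to lowest score; this ordering is the roadmap."""
    return sorted(portfolio, key=priority_score, reverse=True)


def pick_pilot(portfolio: List[App], max_complexity: int = 3) -> Optional[App]:
    """Return the top-ranked app that is neither mission-critical nor too complex."""
    for app in prioritize(portfolio):
        if not app.mission_critical and app.complexity <= max_complexity:
            return app
    return None


# Illustrative portfolio; names and scores are made up.
portfolio = [
    App("billing-monolith", business_impact=5, complexity=5, cloud_readiness=2, mission_critical=True),
    App("customer-portal", business_impact=4, complexity=3, cloud_readiness=4, mission_critical=False),
    App("reporting-service", business_impact=3, complexity=2, cloud_readiness=4, mission_critical=False),
    App("legacy-fax-gateway", business_impact=1, complexity=4, cloud_readiness=1, mission_critical=False),
]

if __name__ == "__main__":
    for app in prioritize(portfolio):
        print(f"{app.name}: {priority_score(app)}")
    pilot = pick_pilot(portfolio)
    print("Pilot:", pilot.name if pilot else "none found")
```

Note that the highest-impact system (the billing monolith) is deliberately excluded from the pilot because it is mission-critical. This mirrors the guidance that the first project should demonstrate value without introducing excessive risk.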

Phase 3: Foundational Build-Out (The 'How')

- Action: This is the phase where an expert partner like Baytech Consulting provides immense value by accelerating the process and preventing costly mistakes. Together, we design and construct your secure and scalable "landing zone" in the cloud. This is the foundational platform upon which all your future cloud-native applications will run. It includes setting up the core cloud network, implementing robust identity and access management controls, establishing security guardrails and policies, and building the automated CI/CD pipelines that will enable rapid, reliable deployments.

- Output: A secure, scalable, and fully automated cloud foundation, built according to best practices and ready to host your first cloud-native application.
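"Security guardrails and policies" are often implemented as automated checks that run before anything is deployed into the landing zone. The Python sketch below illustrates that idea in plain code. The `Resource` model, the required tag names, and the three rules are hypothetical examples and are not tied to any cloud provider's API. In practice, teams typically express such rules in their provider's native policy service or in dedicated policy-as-code tooling wired into the CI/CD pipeline.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class Resource:
    name: str
    kind: str                                          # e.g. "bucket", "vm", "database"
    tags: Dict[str, str] = field(default_factory=dict)
    public: bool = False                               # reachable from the internet?
    encrypted: bool = True                             # encrypted at rest?


# A guardrail inspects one resource and returns a violation message, or None if it passes.
Guardrail = Callable[[Resource], Optional[str]]

REQUIRED_TAGS = ("owner", "cost-center")  # hypothetical tagging policy


def require_tags(r: Resource) -> Optional[str]:
    missing = [t for t in REQUIRED_TAGS if t not in r.tags]
    return f"{r.name}: missing tags {missing}" if missing else None


def no_public_buckets(r: Resource) -> Optional[str]:
    if r.kind == "bucket" and r.public:
        return f"{r.name}: public storage buckets are not allowed"
    return None


def require_encryption(r: Resource) -> Optional[str]:
    return None if r.encrypted else f"{r.name}: encryption at rest is required"


GUARDRAILS: List[Guardrail] = [require_tags, no_public_buckets, require_encryption]


def evaluate(resources: List[Resource]) -> List[str]:
    """Run every guardrail against every resource and collect all violations."""
    violations = []
    for r in resources:
        for check in GUARDRAILS:
            message = check(r)
            if message is not None:
                violations.append(message)
    return violations


# Hypothetical resources a team is about to deploy.
planned = [
    Resource("orders-db", "database", {"owner": "payments", "cost-center": "cc-42"}),
    Resource("marketing-assets", "bucket", {"owner": "marketing"}, public=True),
    Resource("batch-vm", "vm", {"owner": "data", "cost-center": "cc-7"}, encrypted=False),
]

if __name__ == "__main__":
    problems = evaluate(planned)
    for p in problems:
        print("BLOCKED:", p)
    # In a CI/CD pipeline, a non-empty list would fail the deployment step.
    print("OK to deploy" if not problems else f"{len(problems)} violation(s) found")
```

Because each guardrail is an independent function, new policies can be added to the list without touching the evaluation loop. This is one reason that codified guardrails scale better than manual review as more applications move onto the platform.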

Phase 4: Migrate, Modernize & Measure (The 'Proof')

- Action: With the foundation in place, the team executes the pilot project migration. This might involve "lifting and shifting" an existing application into a container to run on Kubernetes, or it could involve refactoring a key piece of a large monolith into its first independent microservice.

- Measure Relentlessly: As soon as the pilot is live, begin tracking its performance against the business KPIs defined in Phase 1. Has the deployment frequency increased? Have the application's stability and uptime improved? What is the new, granular cost profile of this workload? Consider real-world performance metrics, which are discussed in depth in our AI engineer effectiveness breakdown.

- Output: A successful pilot application running in production, a set of documented learnings and best practices that will inform future migrations, and a data-backed business case that proves the value of the cloud-native model to the rest of the organization.
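The three questions above translate into simple arithmetic once the raw data is collected. The Python sketch below compares a baseline period on the old platform with the pilot's first weeks, computing deployments per week, availability as a percentage of total minutes, and infrastructure cost per day. The `PeriodStats` structure and every figure in it are invented for illustration. Real measurements would come from your deployment tooling, monitoring, and cloud billing data.

```python
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass
class PeriodStats:
    days: int                # length of the measurement window
    deployments: int         # production deployments during the window
    downtime_minutes: float  # unplanned downtime during the window
    infra_cost: float        # infrastructure spend attributed to this workload

    def deploys_per_week(self) -> float:
        return self.deployments / (self.days / 7)

    def availability_pct(self) -> float:
        # Share of all minutes in the window during which the service was up.
        total_minutes = self.days * 24 * 60
        return 100 * (1 - self.downtime_minutes / total_minutes)

    def cost_per_day(self) -> float:
        return self.infra_cost / self.days


def compare(before: PeriodStats, after: PeriodStats) -> Dict[str, Tuple[float, float]]:
    """Pair each KPI as (before, after) so the change is easy to report."""
    return {
        "deploys_per_week": (before.deploys_per_week(), after.deploys_per_week()),
        "availability_pct": (before.availability_pct(), after.availability_pct()),
        "cost_per_day": (before.cost_per_day(), after.cost_per_day()),
    }


# Made-up figures: 12 weeks on the old platform vs. the first 4 weeks of the pilot.
baseline = PeriodStats(days=84, deployments=3, downtime_minutes=241.92, infra_cost=8400.0)
pilot = PeriodStats(days=28, deployments=12, downtime_minutes=20.16, infra_cost=2240.0)

if __name__ == "__main__":
    for kpi, (old, new) in compare(baseline, pilot).items():
        print(f"{kpi}: {old:.2f} -> {new:.2f}")
```

Normalizing every metric to a per-week, per-day, or percentage basis lets windows of different lengths be compared fairly, which matters because a pilot's early track record is usually much shorter than the baseline it replaces.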

The Journey is Iterative, Not a Big Bang

The principles of agility and rapid iteration that define cloud-native architecture should also define the adoption journey itself. The most successful transformations are not massive, multi-year, "boil the ocean" projects that delay any realization of value until the very end. This "big bang" approach is a relic of the monolithic world; it is slow, incredibly risky, and often fails under its own weight.

The superior strategy is to build a "flywheel" of modernization. The successful pilot project provides the initial push. It delivers tangible value quickly, which builds political capital and organizational confidence. The lessons learned from that first migration make the second one faster, cheaper, and less risky. The success of the second project further accelerates the third. This iterative process creates a repeatable "migration factory" model, allowing the organization to progressively and safely modernize its entire portfolio over time. It is the embodiment of applying agile principles to the transformation itself, creating a cycle of compounding returns on the initial investment in the foundational platform and your team's skills.

Conclusion: Building Your Future-Ready Enterprise

The transition to a cloud-native operating model is no longer a niche technical trend; it is a business imperative. It is the strategic answer to closing the "Agility Gap," empowering organizations to innovate faster, operate with greater reliability, and adapt to a constantly changing market with a speed and flexibility that were once the exclusive domain of digital-native startups.

This journey is about far more than technology. It is a transformation that touches every aspect of the business, from financial models and operational processes to organizational structure and culture. The path is complex, with significant strategic, financial, and cultural challenges to navigate. The question for business leaders today is not if their organization will need to adapt to this new reality, but how they will lead the transition to capture the immense value and avoid the common pitfalls.

In a transformation of this magnitude, expert guidance is not a luxury; it is a critical success factor. A trusted partner can provide the strategic clarity, technical expertise, and hands-on execution support needed to turn ambition into reality.

If you are ready to move from questions to a clear, actionable strategy for your cloud-native journey, let's have a conversation. Baytech Consulting helps leaders like you build the business case, navigate the complexity, and execute the transformation that will define your company's future.

Supporting Articles

- Gartner: https://www.gartner.com/en/infrastructure-and-it-operations-leaders/topics/cloud-strategy

- Cloud Native Computing Foundation (CNCF): https://www.cncf.io/reports/state-of-cloud-native-development-q1-2025

About Baytech

At Baytech Consulting, we specialize in guiding businesses through this process, helping you build scalable, efficient, and high-performing software that evolves with your needs. Our MVP-first approach helps our clients minimize upfront costs and maximize ROI. Ready to take the next step in your software development journey? Contact us today to learn how we can help you achieve your goals with a phased development approach.

About the Author

Bryan Reynolds is an accomplished technology executive with more than 25 years of experience leading innovation in the software industry. As the CEO and founder of Baytech Consulting, he has built a reputation for delivering custom software solutions that help businesses streamline operations, enhance customer experiences, and drive growth.

Bryan’s expertise spans custom software development, cloud infrastructure, artificial intelligence, and strategic business consulting, making him a trusted advisor and thought leader across a wide range of industries.