Agentic SDLC: The AI-Powered Blueprint Transforming Software Development

August 13, 2025 / Bryan Reynolds

The Agentic SDLC: A Strategic Blueprint for AI-Augmented Software Engineering

Section 1: Executive Summary

The paradigm for creating complex software is undergoing its most significant transformation since the advent of Agile methodologies. The rise of sophisticated Artificial Intelligence (AI), particularly generative AI, is reshaping every stage of the Software Development Lifecycle (SDLC). The most effective strategy for building enterprise-grade software today is not to replace human teams but to empower them within a redefined, AI-native framework this report terms the Agentic SDLC. This model fundamentally re-architects workflows, elevates human roles from routine execution to strategic oversight, and integrates AI as a core collaborator, driving unprecedented gains in speed and innovation while introducing new, complex risks that demand executive attention and robust governance.

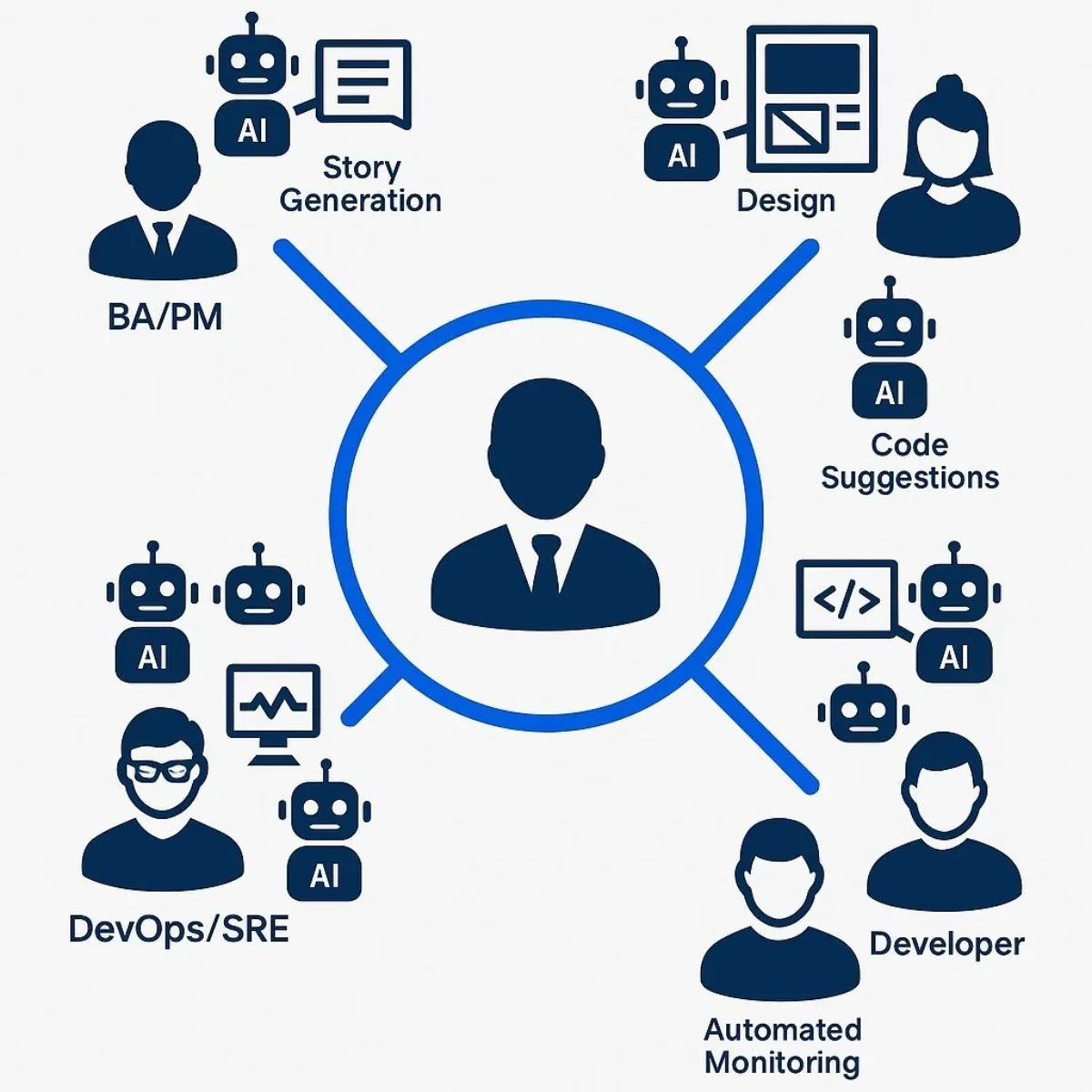

The core thesis of this report is that competitive advantage will be seized by organizations that move beyond piecemeal adoption of AI tools and instead implement a holistic, integrated strategy. This involves architecting a new operational model where human experts—business analysts, designers, developers, and DevOps engineers—orchestrate a suite of AI assistants and agents to achieve business goals. Human focus shifts from manual, repetitive tasks to creative problem-solving, architectural design, and the critical validation of AI-generated outputs.

Key findings from our comprehensive analysis include:

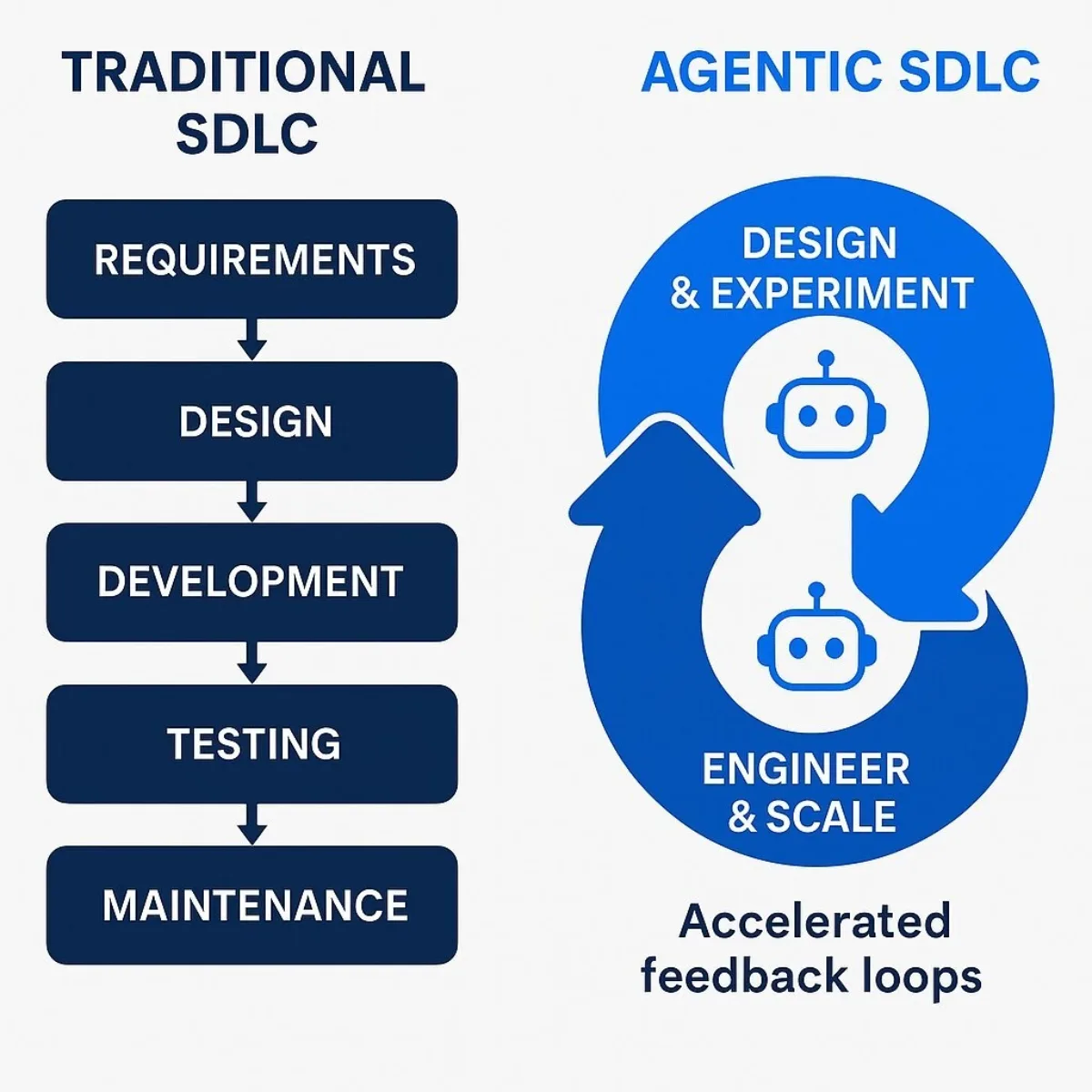

- A Compressed, Iterative SDLC: The traditional, linear SDLC is obsolete. AI is collapsing the distinct phases of requirements, design, and development into a highly compressed, iterative super-phase of "Design & Experiment." This allows cross-functional teams to move from idea to functional prototype in days or even hours, dramatically accelerating the feedback loop and the delivery of customer value.

- Significant Productivity and Economic Impact: The AI-augmented software engineering market is experiencing explosive growth, expanding from $2.17 billion in 2023 to an estimated $3.18 billion in 2024. Organizations that integrate AI throughout the entire SDLC can expect productivity gains of 25-30% and reductions in time-to-market of up to 50%, far surpassing the benefits of isolated code generation tools. Gartner predicts that by 2028, 75% of enterprise software engineers will utilize AI coding assistants, making adoption a competitive necessity.

- Elevation of All Team Roles: Every role within the software team is being redefined and elevated. Business Analysts are evolving into AI-powered strategists who curate insights from automated market analysis. Designers are becoming creative directors who guide AI to generate and refine visual concepts. Developers are transitioning into systems architects who orchestrate AI to build and test components. DevOps and Site Reliability Engineers (SREs) are becoming the guardians of an intelligent, automated, and self-healing infrastructure.

- The Dawn of Autonomous Agents: The emergence of autonomous AI software engineers like Cognition Labs' Devin and others in B2B AI represents the next frontier. While current capabilities are limited and require significant human oversight, these agents signal a future where human engineers will manage and delegate tasks to a team of specialized AI agents, further amplifying their strategic impact.

- The Strategic Imperative of Platform Engineering and Governance: The proliferation of powerful AI tools creates significant complexity and risk. The most effective response is to establish a dedicated Platform Engineering function to build and manage a secure, integrated, end-to-end toolchain. This "paved road" approach reduces cognitive load on developers and ensures that AI is leveraged safely and efficiently. Simultaneously, it is critical to implement a robust governance framework to mitigate new risks related to code quality, security vulnerabilities, intellectual property (IP) infringement, and data privacy.

This report provides a strategic blueprint for navigating this new landscape. It offers a role-by-role analysis of the transformed SDLC, a curated guide to the best-in-class tools for each function, a clear-eyed assessment of autonomous agents, a framework for risk mitigation, and a phased roadmap for implementation. The winning strategy is not a matter of if, but how, organizations adopt the Agentic SDLC. Success requires a dual commitment: investing in an integrated technology platform and, more importantly, investing in the people who will pilot it, fostering a culture of human-AI collaboration that will define the future of software innovation.

Section 2: The Paradigm Shift: From Assisted to Autonomous Development

The traditional Software Development Lifecycle (SDLC), a structured process that has guided software creation for decades, is fundamentally ill-equipped for the speed, complexity, and opportunities of the AI era. Its linear, often siloed nature creates friction, delays feedback, and fails to address the core challenges of modern digital product development. As AI integration matures, it is not merely optimizing the old model but shattering it, giving rise to a new, more fluid, and vastly more efficient paradigm. Understanding this shift is the first step for any leader aiming to harness AI for strategic advantage.

The Traditional SDLC: A Flawed Foundation for the AI Era

For years, even with the widespread adoption of Agile methodologies, the SDLC has been plagued by inherent inefficiencies. Surveys reveal that top challenges for engineering organizations include a lack of end-to-end visibility into the development process (44%), difficulty in measuring cycle time (34%), and persistent issues with continuous testing (29%). Many organizations struggle to scale Agile practices effectively due to the proliferation of disconnected systems (46%) and the persistence of siloed teams (37%) that hinder collaboration between business, design, and engineering.

This fragmented approach creates a significant lag between a business objective and the delivery of a valuable product to the end-user. The linear progression—plan, design, develop, test, deploy—assumes that user needs can be accurately predicted upfront, a premise that has proven false time and again. A staggering 70% of digital transformation initiatives fail not because of technical problems, but due to gaps in user adoption or experience. The old SDLC model, with its slow feedback loops, often results in building software that is technically sound but fails to meet the real, evolving needs of its users.

The AI-Augmented SDLC: A New Two-Phase Pattern

Artificial intelligence is fundamentally re-architecting this flawed process. It is not simply accelerating individual steps but compressing them, breaking down the walls between previously distinct phases. Research indicates the emergence of a streamlined, two-phase pattern that replaces the rigid, multi-stage lifecycle: Design & Experiment and Engineer & Scale.

- Design & Experiment: In this initial, highly iterative phase, AI tools forge an unprecedented synergy between business and technology. A product manager can use an AI tool to generate user stories directly from a high-level business goal. A designer can then feed these stories into a generative AI platform to create interactive, high-fidelity mockups in minutes, not weeks. A developer, working alongside them, can use an AI code assistant to generate the underlying code for this prototype almost instantly. This fusion of activities allows a cross-functional team to validate ideas, gather stakeholder feedback, and experiment with functional concepts at a velocity that was previously unimaginable. It democratizes development skills and encourages early, rapid validation, ensuring that what gets built is aligned with customer value from the very beginning.

- Engineer & Scale: Once a concept is validated in the experimental phase, the focus shifts to building it into a robust, scalable, and maintainable product. In this phase, AI continues to provide value by automating the engineering work required for production readiness. This includes generating comprehensive test suites, optimizing code for performance, automating deployment pipelines, and providing intelligent monitoring and observability for the application in production.

This two-phase model represents a fundamental shift from a linear race to an integrated, cyclical process that keeps the user at the center and dramatically shortens the path from concept to value.

Defining the Three Tiers of AI Integration

The journey to this new paradigm can be understood as a progression through three levels of AI maturity.

- Tier 1: AI-Assisted Development: This is the entry point for most organizations. AI tools act as intelligent "spell- and grammar-checkers for code," providing capabilities like real-time code completion and basic suggestions. Tools like the original versions of Tabnine and GitHub Copilot exemplify this stage, offering productivity boosts by reducing keystrokes and automating simple, repetitive coding tasks. This tier enhances individual developer productivity but does not fundamentally change the overall SDLC process.

- Tier 2: AI-Driven Development: This represents the current state-of-the-art for advanced teams and is the central focus of this report. Here, AI is deeply integrated into the entire workflow, actively driving decisions and automating complex tasks across multiple roles. This is where methodologies like the AI-Driven Development Lifecycle (AI-DLC) emerge, reimagining the entire process. In this model, AI systematically creates detailed work plans, generates full features, automates testing and deployment, and actively seeks clarification from human experts who provide oversight and contextual understanding. Traditional multi-week "sprints" are replaced by hyper-fast "bolts" measured in hours or days, underscoring the emphasis on speed and continuous delivery with AI handling the heavy lifting.

- Tier 3: AI-Autonomous Development: This is the emerging frontier, defined by agentic AI. These are systems, such as Cognition Labs' Devin, designed to operate as autonomous software engineers. They can take a high-level goal, independently reason about it, create a plan, and execute the complex, multi-step tasks required to achieve the objective with minimal human intervention. While this technology is still in its early stages and faces significant challenges, it signals a future where human engineers will collaborate with and manage teams of autonomous AI agents.

The Economic and Productivity Impact

The strategic imperative to adopt this new paradigm is underscored by its profound economic and productivity implications. The market for AI-augmented software engineering is experiencing unprecedented expansion, growing from $2.17 billion in 2023 to an estimated $3.18 billion in 2024, a compound annual growth rate (CAGR) of 46.4%. This is not a distant trend; it is a present-day reality.
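As a quick sanity check on the market figures above, the implied growth rate can be recomputed from the two dollar amounts. The sketch below is illustrative only: `annualized_growth` is a hypothetical helper, and the inputs are the rounded values quoted in this report, so the result may differ slightly from the published 46.4%.

```python
def annualized_growth(start_value: float, end_value: float, years: float = 1.0) -> float:
    """Compound annual growth rate between two values, returned as a fraction."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


# Rounded market sizes quoted above, in billions of US dollars.
market_2023 = 2.17
market_2024 = 3.18

growth = annualized_growth(market_2023, market_2024, years=1)
print(f"Implied growth, 2023 to 2024: {growth:.1%}")
```

With the rounded inputs this comes out at about 46.5%, which is consistent with the quoted rate; a small gap like this is what one would expect if the published figure was derived from unrounded market sizes.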

Gartner predicts that by 2028, a remarkable 75% of enterprise software engineers will use AI coding assistants, a dramatic increase from less than 10% in early 2023. This rapid adoption is driven by tangible results. While early code generation tools offered productivity gains of around 10%, research now indicates that teams using AI tools throughout the entire SDLC will likely see productivity gains of 25% to 30% by 2028. Some analyses are even more optimistic, suggesting that AI in R&D can reduce time-to-market by 50% and lower costs by 30% in complex industries. One company reports that its clients, on average, reduce their time-to-market by 32% compared to traditional development teams.

These figures demonstrate that failing to adopt an AI-augmented approach is no longer an option. It is a direct path to falling behind competitors who are shipping higher-quality products faster and more efficiently. Furthermore, adopting cutting-edge AI tools is becoming a key factor in attracting and retaining top engineering talent, who are eager to work with the most advanced technologies.

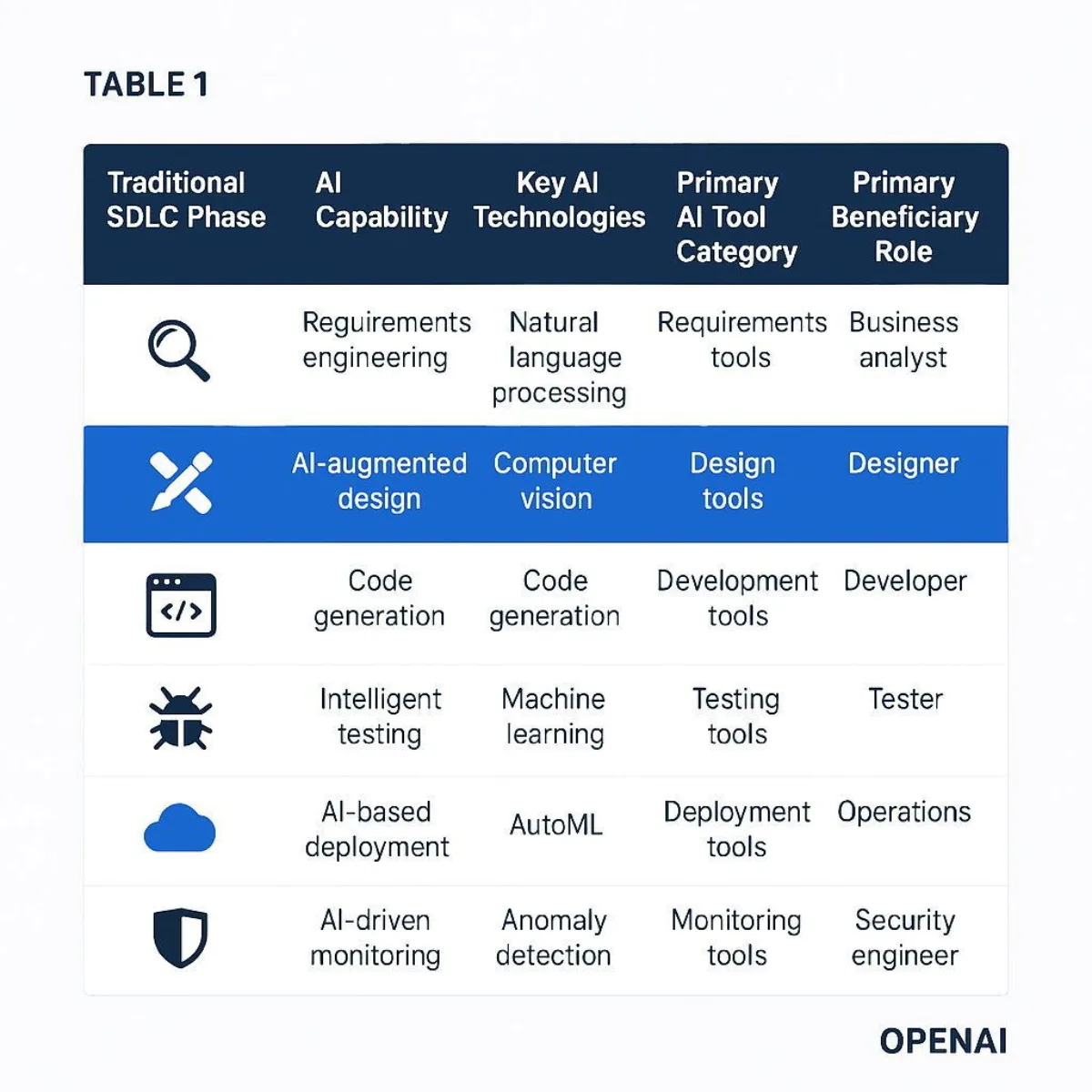

The following table provides a structured overview of how AI is transforming each phase of the traditional SDLC, setting the stage for the detailed, role-by-role analysis in the subsequent section.

Table 1: The AI-Augmented SDLC: Phases, Capabilities, and Tool Categories

| Traditional SDLC Phase | AI-Augmented Capability | Key AI Technologies | Primary Tool Category | Primary Beneficiary Role |
|---|---|---|---|---|
| Requirements & Planning | AI-Driven Market Analysis & Automated User Story Generation | Natural Language Processing (NLP), Predictive Analytics | Business Analytics Platforms, Requirements Management Tools | Business Analyst, Product Manager |
| Design | Generative Prototyping & AI-Powered Design Systems | Generative AI, Computer Vision | UI/UX Design Generators, Design System Automation | UI/UX Designer |
| Development | Intelligent Code Generation, Refactoring, & Debugging | Generative AI, Machine Learning (ML) | AI Code Assistants, AI-Native IDEs | Software Developer |
| Testing | Automated Test Case Creation & Visual Anomaly Detection | Generative AI, ML, Computer Vision | Automated Testing Frameworks, Visual Testing Platforms | QA Engineer, Developer |
| Deployment | Intelligent CI/CD & Generative Infrastructure as Code (IaC) | Predictive Analytics, Generative AI | AIOps Platforms, DevOps Tools | DevOps Engineer, SRE |
| Monitoring & Maintenance | Predictive Observability & Automated Root Cause Analysis | ML, Anomaly Detection, Causal AI | AIOps Platforms, Observability Tools | SRE, Operations Team |

Section 3: Architecting the Modern AI-Augmented Team: A Role-by-Role Analysis

The successful implementation of an Agentic SDLC hinges on understanding that AI does not simply replace tasks; it transforms roles. Each member of a modern software team—from the business analyst defining strategy to the DevOps engineer managing production—is empowered by a new class of intelligent tools. This evolution requires a shift in mindset and skills, moving human effort away from manual execution and toward strategic direction, creative oversight, and critical validation. This section provides a granular, role-specific analysis of this transformation, identifying the key AI-powered capabilities and the best-in-class tools that enable them.

3.1 The Strategist: AI for Business Analysis and Product Management

The roles of the Business Analyst (BA) and Product Manager (PM) are undergoing a profound evolution. Traditionally tasked with the laborious process of gathering, documenting, and translating business needs into technical requirements, these professionals are now being elevated to the position of true strategists. AI is automating the "what" and "how" of requirements documentation, freeing BAs and PMs to focus on the much more valuable "why." Their new mandate is to curate AI-generated insights, validate market opportunities with unprecedented speed, and serve as the human custodians of the product's vision and its alignment with core business objectives.

Key Capabilities & Tools

The modern strategist's toolkit leverages AI to compress the discovery and planning phases of the SDLC from months to days.

- Automated Market & Competitor Research: Before a single line of code is written, AI provides a powerful advantage in understanding the market landscape. AI-powered platforms can continuously monitor and analyze vast, unstructured data streams from sources like social media, product review sites, news articles, and support chats. Using NLP and sentiment analysis, these tools can identify emerging trends, gauge customer sentiment towards competitors, and surface unmet user needs. This replaces slow, periodic market research campaigns with a continuous, real-time feed of actionable intelligence, allowing product strategy to be grounded in data, not just intuition.

Recommended Tools: Brandwatch for deep social listening and consumer intelligence; Talkwalker for trend forecasting and sentiment analysis across multiple languages; Quantilope for automated, need-based customer segmentation; Semrush for deep SEO and competitor content analysis.

- AI-Driven Requirements Gathering: The process of translating stakeholder conversations into structured requirements is a primary bottleneck in traditional development. AI tools are eliminating this friction. AI notetakers can join virtual meetings (Zoom, Teams), automatically transcribe the discussion, and generate summaries with clear action items. More advanced tools can analyze these transcripts, along with other unstructured inputs like emails and raw notes, to automatically extract and categorize potential requirements, identify duplicates, and even flag potential gaps or inconsistencies.

Recommended Tools: ClickUp AI Notetaker for automated meeting summaries and action items; Grain for creating searchable repositories of user interview clips and insights; Sembly AI for extracting insights and decision-making patterns from meetings.
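To make the idea of flagging duplicate requirements concrete, here is a minimal sketch. It assumes requirements have already been extracted as plain strings, and it swaps in simple character-level similarity from Python's standard library rather than the language models commercial tools would likely use. The function name `find_near_duplicates`, the sample requirements, and the 0.8 threshold are all illustrative assumptions.

```python
from difflib import SequenceMatcher
from itertools import combinations


def find_near_duplicates(requirements: list[str], threshold: float = 0.8) -> list[tuple[int, int, float]]:
    """Return (index, index, score) for requirement pairs that look alike."""
    # Normalize case and whitespace so trivial differences do not lower the score.
    normalized = [" ".join(text.lower().split()) for text in requirements]
    pairs = []
    for i, j in combinations(range(len(normalized)), 2):
        score = SequenceMatcher(None, normalized[i], normalized[j]).ratio()
        if score >= threshold:
            pairs.append((i, j, round(score, 2)))
    return pairs


# Hypothetical requirements extracted from a meeting transcript.
extracted = [
    "Users must be able to reset their password via email.",
    "Users must be able to reset their password by email.",
    "The dashboard should export reports as CSV.",
]

duplicates = find_near_duplicates(extracted)
for i, j, score in duplicates:
    print(f"Possible duplicate: #{i} and #{j} (similarity {score})")
```

Here the first two requirements are flagged for review and the third is left alone. A flag is only a prompt for a human analyst, who decides whether the two items are truly the same requirement.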

- Automated User Story & Acceptance Criteria Generation: This capability represents one of the most significant efficiency gains for BAs and PMs. Instead of manually drafting dozens of user stories, a strategist can provide a high-level feature description or business goal to an AI tool. The AI then generates well-formed user stories in the classic "As a [persona], I want [feature], so that [outcome]" format. Critically, these tools can also generate detailed, measurable, and testable acceptance criteria for each story, which is essential for clear communication with the development and QA teams and for preventing ambiguity.

Recommended Tools: StoriesOnBoard is a dedicated user story mapping platform with a powerful, integrated AI that generates stories and acceptance criteria within a visual, collaborative canvas. Copilot4DevOps is an AI assistant deeply integrated into the Azure DevOps ecosystem, capable of generating and analyzing requirements, user stories, and test cases directly within work items. General-purpose LLMs like ChatGPT or Claude can also be effective when guided by well-structured prompts.
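The story format and its acceptance criteria map naturally onto a small data structure. The following is a minimal sketch of how a team might represent generated stories for review; the `UserStory` class, its fields, and the sample story are hypothetical and do not reflect the data model of any tool named above.

```python
from dataclasses import dataclass, field


@dataclass
class UserStory:
    persona: str
    feature: str
    outcome: str
    acceptance_criteria: list[str] = field(default_factory=list)

    def render(self) -> str:
        """Format the story in the 'As a..., I want..., so that...' template."""
        lines = [f"As a {self.persona}, I want {self.feature}, so that {self.outcome}."]
        lines += [f"  - {criterion}" for criterion in self.acceptance_criteria]
        return "\n".join(lines)

    def is_testable(self) -> bool:
        """Crude review gate: a story needs at least one acceptance criterion."""
        return len(self.acceptance_criteria) > 0


# Hypothetical example of an AI-drafted story awaiting human review.
story = UserStory(
    persona="finance manager",
    feature="to export monthly reports as CSV",
    outcome="I can share them with auditors",
    acceptance_criteria=[
        "Given a month with data, when I click Export, then a CSV file downloads.",
        "Given a month with no data, when I click Export, then I see an empty-state message.",
    ],
)
print(story.render())
```

Keeping the criteria attached to the story makes it easy to reject drafts that arrive without anything a QA engineer could verify.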

Tools Deep Dive: Copilot4DevOps vs. StoriesOnBoard

For teams invested in the Microsoft ecosystem, Copilot4DevOps offers a seamless, integrated experience. Its strength lies in its ability to operate directly within Azure DevOps, transforming raw inputs into structured work items without context switching. Its analytical features, which can assess requirements against quality frameworks like INVEST (Independent, Negotiable, Valuable, Estimable, Small, Testable) or perform impact analysis on proposed changes, provide a layer of AI-driven governance that is unique among these tools.

In contrast, StoriesOnBoard is a platform-agnostic, best-of-breed tool focused specifically on the art of user story mapping. Its primary value is the visual, collaborative "story map" that provides a holistic view of the user journey. The AI is a core feature that populates this map, helping teams brainstorm, fill gaps, and ensure a logical flow. It is particularly powerful in the early "Design & Experiment" phase, where visualizing the entire product experience is paramount. The choice between them often comes down to a preference for deep platform integration (Copilot4DevOps) versus a dedicated, visual-first user journey planning tool (StoriesOnBoard).

The Shift from Author to Architect of Strategy

The proliferation of these AI capabilities fundamentally changes the value proposition of the BA and PM. The manual labor of documentation, which once consumed a significant portion of their time, is now largely automated. A process that was slow and prone to human error and interpretation bias can now produce dozens of consistent, well-structured user stories in minutes.

However, this raw AI output inherently lacks the deep business context, user empathy, and strategic nuance that are essential for building a successful product. The AI does not understand the user's frustration or the competitive pressure driving a feature; it merely recognizes and replicates patterns from its training data.

This reality elevates the BA/PM's role from that of a scribe to a strategist and prompter. Their critical skill is no longer the ability to write meticulously detailed specification documents. Instead, their value lies in the intellectual labor of:

- Crafting the Perfect Prompt: Defining a clear, context-rich initial prompt that encapsulates the strategic business goal, the target user persona, and the core problem to be solved. Vague prompts lead to vague, useless stories.

- Critical Validation and Refinement: Applying human judgment and domain expertise to review, curate, and refine the AI's output. This involves cross-checking AI-generated stories against real user feedback, ensuring they align with the overarching product vision, and guiding the AI to explore edge cases and alternative scenarios.
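One way to enforce a context-rich prompt is to build it from named fields and refuse to proceed when any are missing. The sketch below is an assumption-laden illustration: `build_story_prompt`, its field labels, and the sample inputs are hypothetical, and the resulting text would be passed to whichever LLM the team uses.

```python
def build_story_prompt(business_goal: str, persona: str, problem: str, max_stories: int = 5) -> str:
    """Assemble a prompt from required context; reject empty fields up front."""
    context = {
        "Business goal": business_goal,
        "Target persona": persona,
        "Core problem": problem,
    }
    missing = [label for label, value in context.items() if not value.strip()]
    if missing:
        raise ValueError(f"Missing context: {', '.join(missing)}")

    lines = [f"{label}: {value.strip()}" for label, value in context.items()]
    lines.append(
        f"Write up to {max_stories} user stories in the form "
        "'As a [persona], I want [feature], so that [outcome]', "
        "each with measurable, testable acceptance criteria."
    )
    # Asking for assumptions gives the human reviewer something concrete to validate.
    lines.append("List any assumptions and open questions for human review.")
    return "\n".join(lines)


prompt = build_story_prompt(
    business_goal="Reduce churn among trial users by improving onboarding",
    persona="first-time trial user with no technical background",
    problem="New users abandon setup before connecting their first data source",
)
print(prompt)
```

The final instruction in the prompt supports the validation step: it asks the model to surface its assumptions so the strategist can check them against real user feedback.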

In the Agentic SDLC, the strategist does not write the map; they provide the destination and critically evaluate the routes the AI proposes.

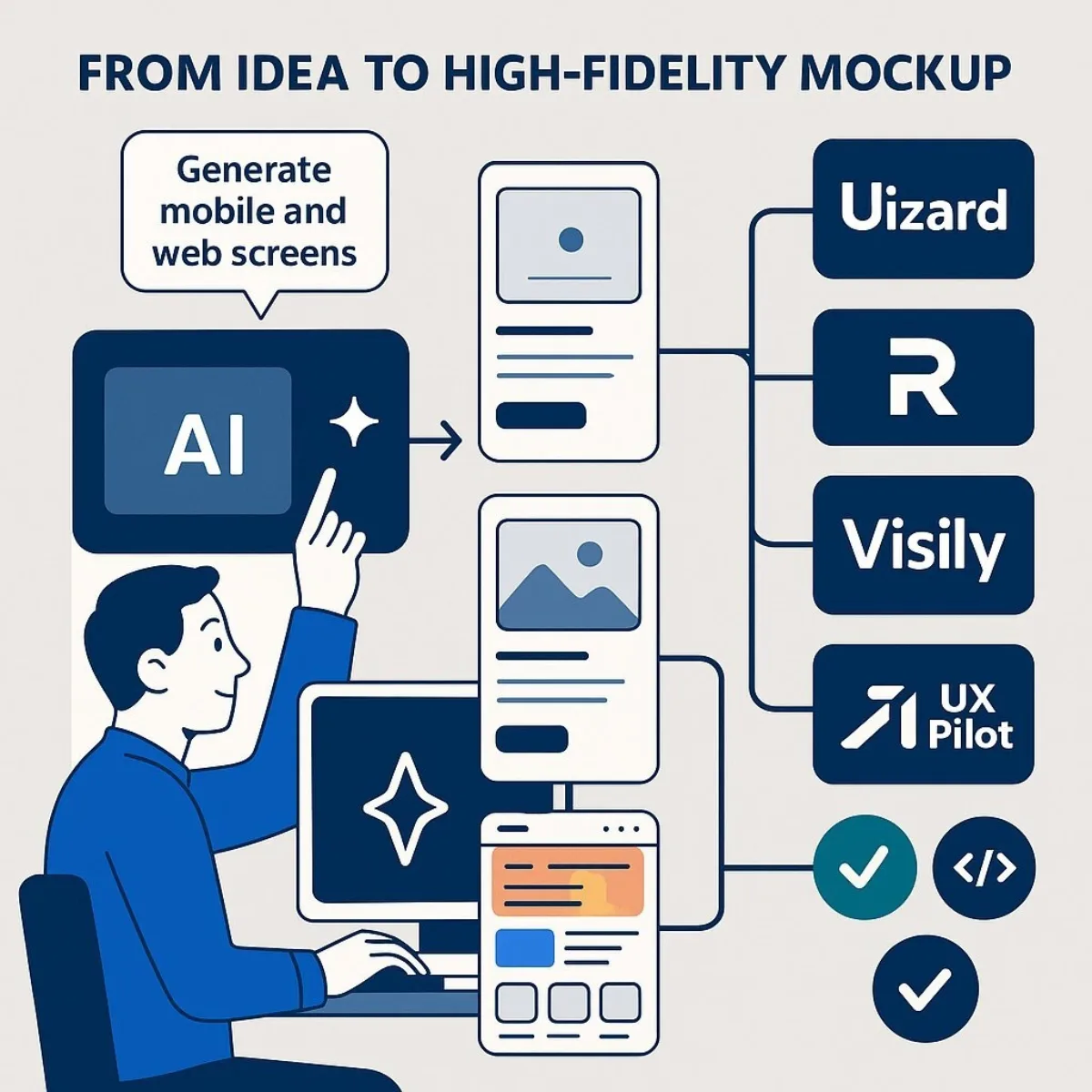

3.2 The Visionary: AI for UI/UX Design and Experience

The role of the UI/UX designer is being liberated from the constraints of manual, pixel-by-pixel creation. In the AI-augmented paradigm, the designer transitions into the role of a creative director, an experience curator, and a systems thinker. They are no longer limited by the speed at which they can draw rectangles and choose colors. Instead, they guide powerful AI engines to rapidly generate a multitude of design concepts, freeing them to focus their uniquely human skills on validating the user's emotional journey, ensuring brand and aesthetic coherence, and architecting the intelligent design systems that will enable quality at scale.

Key Capabilities & Tools

AI is transforming the design workflow from a linear process into a dynamic, exploratory collaboration between human creativity and machine-scale generation.

- Generative Prototyping (Text/Sketch-to-Mockup): This is arguably the most revolutionary change to the design process. Designers, product managers, and even non-technical stakeholders can now translate abstract ideas into tangible, high-fidelity, and fully editable mockups in seconds. By using simple natural language prompts (e.g., "Create a dashboard for a financial analytics app with a dark mode theme") or by uploading a hand-drawn sketch, these tools can generate multi-screen applications, complete with logical user flows. This capability drastically shortens the ideation-to-prototype cycle, allowing for almost immediate validation of concepts with users and stakeholders.

Recommended Tools: Uizard is a leader in this space, capable of generating entire, editable multi-screen apps from text prompts or screenshots, making it excellent for rapid end-to-end prototyping. Visily excels at converting screenshots and sketches into clean, editable designs and is praised for its ease of use by non-designers. UX Pilot offers deep integration with Figma and can generate not only screens but also entire user flows and the corresponding source code. Relume is tailored for marketing websites, with an AI Site Builder that generates sitemaps, wireframes with copy, and style guides. MockFlow also provides robust text-to-wireframe and URL-to-wireframe capabilities.

- AI-Powered Design Systems: A consistent and scalable design system is the backbone of any mature product organization. AI is automating the often-tedious work of creating and maintaining these systems. AI tools can analyze existing designs to create an "interface inventory," automatically identifying and organizing colors, typography, and components. They can be prompted to generate numerous variants of a component (e.g., different states of a button) while ensuring they adhere to accessibility standards. AI can also write the initial drafts of component documentation and even translate components from one frontend framework to another (e.g., React to Vue), a task that was previously a major engineering effort.

Recommended Tools: Motiff is an AI-native tool designed specifically for creating, maintaining, and checking consistency in design systems. Supernova helps manage design systems and has guidelines for integrating AI interaction patterns. Many teams also use custom scripts leveraging LLMs like GPT-4 to automate documentation and code translation for their specific system.
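The "interface inventory" idea can be illustrated without any AI at all. The sketch below tallies the hex colors used in a stylesheet so that near-duplicates and one-off values stand out. It is a deliberately simple, rule-based stand-in for what the tools above automate; the function names and the sample CSS are hypothetical.

```python
import re
from collections import Counter

# Matches 3- or 6-digit hex colors. Note: an ID selector such as "#add"
# would also match, so a real inventory would parse the CSS properly.
HEX_COLOR = re.compile(r"#(?:[0-9a-fA-F]{6}|[0-9a-fA-F]{3})\b")


def normalize_hex(color: str) -> str:
    """Lowercase the color and expand shorthand such as #fff to #ffffff."""
    digits = color.lower().lstrip("#")
    if len(digits) == 3:
        digits = "".join(ch * 2 for ch in digits)
    return "#" + digits


def color_inventory(css: str) -> Counter:
    """Count how often each normalized hex color appears in the stylesheet."""
    return Counter(normalize_hex(match) for match in HEX_COLOR.findall(css))


# Hypothetical stylesheet fragment.
sample_css = """
.btn-primary   { background: #1A73E8; color: #fff; }
.btn-secondary { background: #FFFFFF; color: #1a73e8; border: 1px solid #1a73e8; }
.alert         { color: #D93025; }
"""

inventory = color_inventory(sample_css)
for color, count in inventory.most_common():
    print(f"{color}: used {count} time(s)")
```

Normalizing case and shorthand first is what lets `#fff` and `#FFFFFF` be counted as the same token, which is the kind of consolidation a design system depends on.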

- AI-Driven Usability & Accessibility Analysis: AI provides designers with superpowers for understanding user behavior before a single user test is conducted. Predictive analytics tools can generate "attention heatmaps" that simulate where a user's eyes will likely focus on a design, helping to optimize visual hierarchy. During usability tests, AI can perform real-time analysis of a user's facial expressions and voice sentiment to provide a deeper, emotional layer of feedback that goes beyond their spoken words. Furthermore, AI tools can automatically audit designs and code against Web Content Accessibility Guidelines (WCAG), flagging issues with color contrast, screen reader compatibility, and more, embedding accessibility into the workflow from the start.

Recommended Tools: Attention Insight for predictive eye-tracking and heatmaps; Odaptos for emotion and sentiment analysis during user testing; Applitools for AI-powered visual regression testing to catch unintended UI changes; UserZoom for generating insights from user testing data.
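Color contrast is one of the few accessibility checks with a precise published formula, which is why it automates so well. The sketch below follows the WCAG 2.x definitions of relative luminance and contrast ratio, with the 4.5:1 (normal text) and 3:1 (large text) Level AA thresholds. The function names are illustrative, and the code assumes 6-digit hex colors.

```python
def _linearize(channel_8bit: int) -> float:
    """Convert an 8-bit sRGB channel to linear light, per the WCAG 2.x definition."""
    c = channel_8bit / 255.0
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    """Relative luminance of a 6-digit hex color such as '#1a73e8'."""
    digits = hex_color.lstrip("#")
    r, g, b = (int(digits[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linearize(r) + 0.7152 * _linearize(g) + 0.0722 * _linearize(b)


def contrast_ratio(foreground: str, background: str) -> float:
    """Contrast ratio between two colors, from 1.0 (identical) to 21.0 (black on white)."""
    lighter, darker = sorted(
        (relative_luminance(foreground), relative_luminance(background)), reverse=True
    )
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa(foreground: str, background: str, large_text: bool = False) -> bool:
    """Check the WCAG Level AA minimum: 4.5:1 for normal text, 3:1 for large text."""
    return contrast_ratio(foreground, background) >= (3.0 if large_text else 4.5)


for text_color in ("#767676", "#777777"):
    ratio = contrast_ratio(text_color, "#ffffff")
    print(f"{text_color} on white: {ratio:.2f}:1, AA pass = {passes_aa(text_color, '#ffffff')}")
```

The two grays in the usage example differ by a single step per channel, yet one clears the AA threshold on a white background and the other does not. Differences that small are easy for a human reviewer to miss and trivial for an automated audit to catch.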

Tools Deep Dive: Uizard vs. Relume

Uizard stands out as a tool for product teams building complex applications. Its "Autodesigner" feature is powerful, capable of generating not just a single screen but a complete, multi-screen clickable prototype from a single prompt. This makes it invaluable for quickly visualizing and testing entire user journeys for mobile and web apps. Its ability to turn wireframe sketches and existing app screenshots into editable digital designs further accelerates the iteration process, empowering product managers and designers alike to rapidly explore and communicate ideas.

Relume, on the other hand, is finely tuned for the agency and marketing website design workflow. Its AI Site Builder is a game-changer for this domain. It starts by generating a logical sitemap from a company description, then turns that sitemap into a full set of wireframes populated with relevant copy, and finally allows the application of a style guide to conceptualize the visual design. Its tight integration with Figma and, notably, Webflow, makes it a highly efficient pipeline for creating professional, content-driven websites, positioning it as a design ally that streamlines the process from strategic planning to final build.

The Shift from Creator to Curator of Experiences

For designers, the "blank canvas problem" is effectively solved. The bottleneck in the creative process is no longer the time it takes to produce a design. This, however, introduces a new and more complex challenge: option paralysis. When an AI can generate dozens of high-quality design variations in the time it used to take to create one, the designer is flooded with possibilities.

This fundamentally shifts the designer's core value. Technical proficiency in a design tool like Figma becomes table stakes. The true differentiator becomes the designer's deep, human-centric expertise:

- Curation and Taste-Making: The ability to sift through a multitude of AI-generated options and identify the most promising strategic directions. This requires a sophisticated understanding of aesthetic principles, interaction design heuristics, and brand identity.

- Guarding the User's Journey: The AI can generate screens, but it cannot truly empathize with the user's emotional state as they navigate a complex flow. The designer's role is to ensure the curated design tells a coherent story and creates an intuitive, satisfying, and emotionally resonant experience.

- Creative Direction: The designer acts as the director of the AI, providing the initial vision, guiding the exploration of concepts, and applying the final human touch that transforms a technically correct layout into a delightful product.

In the Agentic SDLC, the designer is the arbiter of taste, the advocate for the user, and the visionary who orchestrates the AI to bring a compelling product experience to life.

3.3 The Builder: AI for Software Development and Code Generation

The role of the software developer is being elevated from a line-by-line artisan of code to a high-leverage system architect, a sophisticated AI collaborator, and a stringent quality validator. The advent of powerful AI code assistants and AI-native development environments automates much of the "grunt work"—writing boilerplate code, generating unit tests, creating documentation, and fixing simple bugs. This frees developers to concentrate their cognitive energy on the tasks that create the most value: architecting complex systems, solving novel logical problems, and ultimately ensuring the security, performance, and reliability of the final product.

Key Capabilities & Tools

The modern developer's environment is becoming a cockpit of AI-powered tools, each designed to augment a specific part of the building process.

- The AI Code Assistant Ecosystem: This is the most mature and widely adopted category of AI development tools. Integrated directly into Integrated Development Environments (IDEs) like VS Code and JetBrains, these assistants provide real-time, context-aware code suggestions, complete entire blocks of code, and generate full functions from natural language comments. Their impact is so significant that Gartner has established a Magic Quadrant for the category, identifying GitHub, AWS, Google Cloud, and GitLab as Leaders in its inaugural 2024 report, signifying the enterprise-readiness of these solutions.

Recommended Tools: GitHub Copilot, powered by OpenAI's latest models, is the market leader in general-purpose performance. Tabnine positions itself as the secure enterprise alternative with options for on-premise deployment and private code model training. Amazon Q Developer (formerly CodeWhisperer) offers deep integration with AWS services and can be customized with an organization's internal best practices. Gemini Code Assist (formerly Duet AI) provides a similar advantage for teams embedded in the Google Cloud ecosystem.

- AI-Native IDEs and Editors: A new class of tool is emerging that reimagines the IDE itself as an AI-first platform. Rather than being a plugin to an existing editor, these tools build the entire experience around a conversational, AI-driven workflow. They offer more seamless integration of codebase-aware chat, AI-powered refactoring, and automated debugging, creating a more fluid human-AI collaboration experience.

Recommended Tools: Cursor is the most prominent example, an AI-native editor built as a fork of VS Code. It is widely praised for its speed and the intuitive nature of its "chat with your code" and "AI edit" features, which many users find faster and more integrated than the Copilot extension in standard VS Code.

- Beyond Completion: Automated Testing, Debugging, and Documentation: AI's utility extends far beyond initial code generation. It excels at automating tasks that are critical for quality but are often tedious for developers. AI tools can analyze a block of code and automatically generate a comprehensive suite of unit tests to validate its functionality, including edge cases that a human might forget. They can analyze error messages and stack traces to suggest probable fixes for bugs. They can also parse code and generate clear, structured documentation, ensuring that the codebase remains maintainable as it evolves.

Recommended Tools: Tabnine offers specialized "agents" for generating tests and documentation. Qodo is an AI assistant with agents that span generation, test authoring (Cover), and intelligent code reviews (Merge). Security-focused tools like Snyk Code AI and DeepCode use static analysis to find vulnerabilities and bugs early in the process.
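To make the test-generation capability concrete, the following is a minimal sketch of the kind of edge-case suite an assistant might propose for a small function. The `apply_discount` function, its validation rules, and the `run_suite` helper are hypothetical and exist only for illustration; they are not the output of any specific tool named above.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical function under test: reduce price by a percentage."""
    if price < 0:
        raise ValueError("price must be non-negative")
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTests(unittest.TestCase):
    def test_typical_case(self):
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    # Boundary values are the cases a hurried human is most likely to skip.
    def test_zero_percent_returns_original_price(self):
        self.assertEqual(apply_discount(99.99, 0), 99.99)

    def test_full_discount_returns_zero(self):
        self.assertEqual(apply_discount(50.0, 100), 0.0)

    # Invalid inputs should fail loudly rather than produce a wrong price.
    def test_negative_price_is_rejected(self):
        with self.assertRaises(ValueError):
            apply_discount(-1.0, 10)

    def test_percent_above_100_is_rejected(self):
        with self.assertRaises(ValueError):
            apply_discount(10.0, 101)


def run_suite() -> unittest.TestResult:
    """Run the suite programmatically so it can be called from a script or CI step."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(ApplyDiscountTests)
    return unittest.TextTestRunner(verbosity=0).run(suite)
```

A generated suite like this remains a draft: a reviewer still has to confirm that the asserted behavior matches the actual business rule rather than simply mirroring the implementation.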

Tools Deep Dive: GitHub Copilot vs. Tabnine vs. Cursor

The choice of a primary AI coding partner is a critical one, with each leading tool offering a different balance of performance, privacy, and user experience.

- GitHub Copilot: As the market incumbent backed by Microsoft and OpenAI, Copilot benefits from access to the most powerful underlying models, like GPT-4, giving it exceptional performance across a wide range of languages and tasks. It is the default choice for many developers and offers a robust feature set. However, its cloud-only nature and training on a vast corpus of public code can raise concerns for enterprises with strict data privacy, security, or IP requirements.

- Tabnine: Tabnine directly addresses these enterprise concerns, making it a compelling alternative. Its key differentiator is its commitment to privacy and security. Tabnine offers the ability to deploy on-premise or in a private cloud (VPC), ensuring that proprietary code never leaves the organization's control. Furthermore, its models are trained exclusively on permissively licensed open-source code, mitigating IP and copyright risks. Its most powerful feature for enterprises is the ability to train a bespoke AI model on an organization's private code repositories. This allows Tabnine to provide highly personalized, context-aware suggestions that adhere to the company's specific coding standards, libraries, and architectural patterns.

- Cursor: Cursor's value proposition is not its model, but its user experience. As an AI-native editor, it provides a deeply integrated and fast conversational workflow that many developers find superior to using an AI plugin within a traditional IDE. Users report that editing code via natural language prompts is significantly faster and more fluid in Cursor. The trade-off is a usage-based pricing model that can become more expensive than the flat-rate subscriptions of its competitors, and a reliance on third-party models (like OpenAI's and Anthropic's) without the self-hosting options of Tabnine.

This analysis is summarized in the comparative table below.

Table 2: Comparative Analysis of Enterprise AI Code Assistants

| Feature | GitHub Copilot | Tabnine | Gemini Code Assist | Cursor |
|---|---|---|---|---|
| Underlying Model | OpenAI GPT-4 / Finetuned Variants | Proprietary LLMs (trained on permissive code) | Google Gemini 2.5 Family | OpenAI GPT-4, Anthropic Claude 3 |
| Key Strength | State-of-the-art general performance and broad language support. | Enterprise security, privacy, and codebase customization. | Deep integration with Google Cloud Platform and services. | Speed and fluidity of conversational code editing experience. |
| Deployment Options | Cloud-only | Secure SaaS, Virtual Private Cloud (VPC), On-Premise | Cloud-only | Cloud-only |
| Private Code Customization | Yes, for Enterprise customers (contextual awareness) | Yes, can train bespoke models on private repositories. | Yes, can connect to private repositories for context. | Yes, provides codebase context. |
| Pricing Model | Flat per user/month | Tiered, with custom enterprise pricing. | Flat per user/month | Tiered, with usage-based limits on "fast" requests. |
| Best For | Individual developers and teams prioritizing raw performance. | Large enterprises in regulated industries with strict security needs. | Organizations heavily invested in the Google Cloud ecosystem. | Developers who prioritize a fast, AI-native editing workflow. |

The Shift from Coder to System Builder

The commoditization of code generation is reshaping the very definition of a "10x engineer." Historically, this term referred to an individual who could produce code at a vastly higher rate than their peers. Today, AI assistants can generate code faster than any human can type, rendering raw coding speed a less critical metric.

This does not diminish the value of a great engineer; it redefines it. The new 10x engineer is not the fastest typist but the most effective system builder and AI orchestrator. The most challenging and valuable tasks in software engineering—understanding a complex business domain, decomposing it into a logical system architecture, and making critical design trade-offs—remain firmly in the human domain. AI struggles with this high-level, context-rich reasoning.

Therefore, the most valuable developer is now the one who can:

- Architect the System: Analyze a complex problem and design a robust, scalable, and maintainable software architecture to solve it.

- Decompose and Prompt: Break down the architectural components into a series of well-defined, bounded tasks that can be clearly articulated to an AI. The quality of their prompts directly determines the quality of the AI's output.

- Integrate and Validate: Skillfully integrate the AI-generated code snippets into the larger system, writing the critical "glue code" and, most importantly, subjecting the entire system to rigorous testing and validation to ensure it is not just syntactically correct but functionally sound and secure.

Their primary output is no longer just lines of code, but a well-architected and reliable system built with a high degree of AI leverage.

3.4 The Guardian: AI for DevOps, Security, and Operations

The role of the DevOps, Security, and Site Reliability Engineering (SRE) professional is evolving from a reactive firefighter to the strategic architect of an intelligent, automated, and self-healing software delivery ecosystem. As development cycles accelerate under the power of AI, the manual processes of the past for deployment, security, and monitoring become untenable bottlenecks. The new mandate for these guardians of production is to build and manage a highly automated "paved road" for development teams, leveraging AI to ensure that speed does not come at the expense of stability or security. This shift is giving rise to Platform Engineering as the key organizational model to manage this complexity.

Key Capabilities & Tools

The modern operations toolkit is infused with AI to automate, predict, and remediate issues across the entire production lifecycle.

- Intelligent CI/CD Pipelines: Continuous Integration and Continuous Deployment (CI/CD) pipelines are the engine of modern software delivery. AI is being embedded directly into this engine to make it smarter and more efficient. AI tools can analyze code commits in near real-time to predict potential integration failures before they happen. They can analyze build logs to suggest fixes for failed pipelines, automatically generate and run comprehensive test suites, and even optimize deployment strategies based on risk analysis.

Recommended Tools: Azure DevOps with its growing ecosystem of AI extensions; GitLab Duo, which integrates AI capabilities across the entire DevSecOps platform; and CircleCI and Jenkins X, which are also integrating AI to optimize workflows.

- Generative Infrastructure as Code (IaC): Manually writing complex configuration files for cloud infrastructure using tools like Terraform or AWS CloudFormation is time-consuming and error-prone. Generative AI is streamlining this process. Engineers can now use natural language prompts to describe the desired infrastructure state (e.g., "Create a three-tier web application architecture in AWS with a load balancer, auto-scaling web servers, and a PostgreSQL database"), and AI tools will generate the corresponding, ready-to-use IaC scripts. This not only accelerates provisioning but also helps enforce best practices.

- Recommended Tools: This capability is increasingly integrated into primary code assistants like GitHub Copilot and Gemini Code Assist. Specialized tools like Stakpak are also emerging to focus specifically on AI for IaC.

- AI for Kubernetes Management: Kubernetes has become the de facto standard for container orchestration, but its complexity is a significant operational burden. AI is emerging as a powerful co-pilot for managing these complex environments. New tools allow operators to interact with clusters using plain English instead of memorizing complex kubectl commands. AI-driven diagnostic tools can automatically scan a cluster, identify issues like CrashLoopBackOff errors, and provide clear, actionable insights for remediation. AI is also being built directly into Kubernetes-native IDEs to provide context-aware intelligence and troubleshooting.

Recommended Tools: k8sGPT for AI-powered diagnostics and troubleshooting; Lens with Prism, an AI-powered Kubernetes IDE that allows natural language exploration of clusters; and kubectl-ai as a CLI tool for translating natural language to kubectl commands.
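The diagnostic step described above starts with a plain scan of pod status before any AI explanation is layered on top. The sketch below shows only that first step: it walks a pod list in the JSON shape returned by `kubectl get pods -o json` and reports containers waiting with the reason CrashLoopBackOff. The function name and the sample data are invented for illustration, and this is not a description of how k8sGPT or any other named tool is implemented.

```python
import json
from typing import Any

# Invented sample in the shape produced by `kubectl get pods -o json`.
SAMPLE_POD_LIST = json.loads("""
{
  "items": [
    {
      "metadata": {"name": "checkout-7d9f", "namespace": "shop"},
      "status": {"containerStatuses": [
        {"name": "api", "restartCount": 12,
         "state": {"waiting": {"reason": "CrashLoopBackOff"}}}
      ]}
    },
    {
      "metadata": {"name": "catalog-5c2a", "namespace": "shop"},
      "status": {"containerStatuses": [
        {"name": "web", "restartCount": 0,
         "state": {"running": {"startedAt": "2025-01-01T00:00:00Z"}}}
      ]}
    }
  ]
}
""")


def find_crash_looping(pod_list: dict[str, Any]) -> list[dict[str, Any]]:
    """Return one finding per container stuck in CrashLoopBackOff."""
    findings = []
    for pod in pod_list.get("items", []):
        meta = pod.get("metadata", {})
        statuses = pod.get("status", {}).get("containerStatuses", [])
        for status in statuses:
            # A container has exactly one of: waiting, running, terminated.
            waiting = status.get("state", {}).get("waiting") or {}
            if waiting.get("reason") == "CrashLoopBackOff":
                findings.append({
                    "namespace": meta.get("namespace"),
                    "pod": meta.get("name"),
                    "container": status.get("name"),
                    "restarts": status.get("restartCount", 0),
                })
    return findings


for finding in find_crash_looping(SAMPLE_POD_LIST):
    print(finding)
```

An AI-assisted tool would typically pass findings like these, together with recent logs and events, to a model that proposes a likely cause and remediation.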

- AIOps: Predictive Monitoring & Intelligent Observability: This is perhaps the most critical AI application for operations. AIOps platforms move beyond traditional monitoring (which tells you what is broken) to provide intelligent observability (which tells you why it's broken). They ingest and correlate vast amounts of telemetry data—logs, metrics, and traces—from across the stack. Using machine learning, they can detect subtle anomalies that precede failures, predict future issues, perform precise root cause analysis to pinpoint the source of a problem, and in some cases, trigger automated remediation actions. This is especially crucial for monitoring the AI models themselves, tracking their performance, cost, and potential for biased or "hallucinated" outputs.

Recommended Tools: Dynatrace, with its causal AI engine "Davis," for precise root cause analysis; New Relic, which offers a comprehensive observability platform with applied AI; Splunk for analyzing machine-generated data at scale; BigPanda for AI-powered event correlation and incident intelligence; and Microsoft's "Gandalf," an internal AIOps service used to ensure safe deployments for Azure itself.
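The idea of detecting anomalies that precede failures can be illustrated with a deliberately simple statistical baseline. The sketch below flags a metric sample when it deviates from the mean of the preceding window by more than a chosen number of standard deviations. The function name, window size, threshold, and latency values are illustrative assumptions; commercial AIOps platforms rely on considerably more sophisticated models, and this does not describe any vendor's engine.

```python
from statistics import mean, stdev


def detect_anomalies(values: list[float], window: int = 5, threshold: float = 3.0) -> list[int]:
    """Return indices whose value is more than `threshold` standard
    deviations away from the mean of the preceding `window` samples."""
    anomalies = []
    for i in range(window, len(values)):
        baseline = values[i - window:i]
        mu = mean(baseline)
        sigma = stdev(baseline)
        if sigma == 0:
            # Flat baseline: the z-score is undefined, so this sketch skips it.
            continue
        if abs(values[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies


# Invented request latencies in milliseconds, with one obvious spike.
latencies_ms = [100, 102, 98, 101, 99, 100, 103, 97, 250, 101]
print(detect_anomalies(latencies_ms))  # → [8]
```

Note that the spike itself inflates the baseline for the samples that follow it, which is one reason production systems use more robust statistics and correlate several signals before alerting.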

- AI-Enhanced DevSecOps: "Shifting security left" means integrating security into the earliest stages of development. AI is a powerful enabler of this practice. AI-powered tools can automatically scan source code, open-source dependencies, and IaC configurations for vulnerabilities in real-time, directly within the developer's IDE or CI/CD pipeline. They can identify insecure coding practices, detect hard-coded secrets, and suggest specific remediations, making security a continuous, automated part of the workflow rather than a final, manual gate.

Recommended Tools: Snyk for developer-first security scanning of code, dependencies, and containers; DeepCode for AI-based static code analysis; and Splunk, which can be leveraged for security information and event management (SIEM).
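Detecting hard-coded secrets is the easiest of these checks to picture. The following is a minimal sketch of a pattern-based scan that could run in a pre-commit hook or CI step. The two patterns, the `scan_source` function, and the sample snippet are assumptions made for illustration; production scanners use far larger rule sets and additional techniques, and this is not how Snyk or DeepCode work internally.

```python
import re

# Illustrative rules only: the published AWS access key ID prefix format,
# plus a generic "credential-looking name assigned a string literal" rule.
PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "hardcoded_credential": re.compile(
        r"(?i)(api_key|secret|password|token)\w*\s*=\s*['\"][^'\"]{8,}['\"]"
    ),
}


def scan_source(source: str) -> list[tuple[int, str]]:
    """Return (line number, rule name) for every line that matches a rule."""
    findings = []
    for lineno, line in enumerate(source.splitlines(), start=1):
        for rule_name, pattern in PATTERNS.items():
            if pattern.search(line):
                findings.append((lineno, rule_name))
    return findings


# Invented snippet; the key below is AWS's documented example value.
SAMPLE_SOURCE = '''import os
API_KEY = "not-a-real-key-123456"
db_password = os.environ["DB_PASSWORD"]
aws_id = "AKIAIOSFODNN7EXAMPLE"
'''

print(scan_source(SAMPLE_SOURCE))
# → [(2, 'hardcoded_credential'), (4, 'aws_access_key_id')]
```

Line 3 is not flagged because the value is read from the environment rather than written as a literal, which is the remediation such tools usually suggest.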

The Rise of Platform Engineering

The explosion of these specialized AI tools across every role in the SDLC creates a new and significant challenge: complexity. If each team—Business Analysis, Design, Development, Operations—independently selects, integrates, and manages its own suite of AI tools, the result is organizational chaos. This leads to duplicated effort, inconsistent standards, massive security holes, and runaway costs from unmanaged "shadow AI" usage.

The strategic response to this challenge, which Gartner has identified as a top industry trend, is the formalization of a Platform Engineering function. This centralized team is responsible for building and maintaining an Internal Developer Platform (IDP). The IDP provides a curated, secure, and efficient "paved road" for all software development within the organization.

In the context of the Agentic SDLC, the Platform Engineering team's mission is to:

- Evaluate and Select: Continuously assess the rapidly evolving landscape of AI tools and select a standardized, best-in-class toolchain for the organization.

- Integrate and Secure: Build the integrated, end-to-end toolchain that connects these tools, ensuring data flows seamlessly and security is enforced at every step.

- Manage and Optimize: Handle the complexities of fine-tuning AI models on company data, managing licenses, and optimizing for cost and performance.

- Empower Developers: Offer this entire AI-augmented SDLC as a self-service platform to development teams, reducing their cognitive load and allowing them to focus on building products, not wrestling with tools.

By abstracting away the underlying complexity, the Platform Engineering team becomes the critical enabler of the Agentic SDLC at scale.

The following table consolidates the tool recommendations from across this section into a single, cohesive blueprint for a modern, AI-augmented software team's technology stack.

Table 3: Recommended AI Toolchain for a Modern Software Team

| Role/Function | Primary Capability | Recommended Tool(s) | Key Integration Point |
|---|---|---|---|
| Business Analysis & Product Mgmt | Requirements & User Story Generation | Copilot4DevOps, StoriesOnBoard | Azure Boards, Jira, Visual Story Map |
| UI/UX Design | Generative Prototyping & Wireframing | Uizard, Relume, UX Pilot | Figma, Sketch, Webflow |
| Software Development | AI Code Generation & Assistance | GitHub Copilot, Tabnine (Enterprise), Cursor | VS Code, JetBrains IDEs, CLI |
| CI/CD Pipeline | Intelligent Build, Test, & Deploy Automation | Azure DevOps, GitLab Duo | Git Repository, Container Registry |
| Infrastructure as Code (IaC) | Generative IaC & Kubernetes Management | Terraform with AI, k8sGPT, Lens Prism | Cloud Provider APIs, Kubernetes API |
| Application & Cloud Security | Automated Vulnerability Scanning | Snyk, DeepCode | IDE, CI/CD Pipeline, Git Hooks |
| Observability & Operations | AIOps & Predictive Monitoring | Dynatrace, New Relic, BigPanda | Kubernetes Cluster, Cloud Platform, Application Runtimes |

Section 4: The Next Frontier: Autonomous Software Engineering Agents

While AI-assisted and AI-driven development represent the current state-of-the-art, the horizon is dominated by a far more disruptive technology: autonomous AI agents. These systems represent a fundamental leap in capability, moving beyond simply responding to prompts to proactively planning, reasoning, and executing complex tasks to achieve a specified goal. The emergence of agents like Cognition Labs' Devin, branded as the "first AI software engineer," has ignited both immense excitement and considerable debate about the future of software development. A clear-eyed, realistic assessment of their current capabilities and limitations is essential for any technology leader planning for the next three to five years.

Defining the Autonomous Agent

To understand the significance of this shift, it is crucial to distinguish between the different types of AI systems. The terms bot, assistant, and agent are often used interchangeably, but they represent distinct levels of autonomy and complexity.

- Bots are the least autonomous, following pre-programmed rules to perform simple, repetitive tasks.

- AI Assistants, such as GitHub Copilot or ChatGPT, are reactive. They respond to user prompts, provide information, and complete discrete tasks, but they require continuous human input and direction to move from one step to the next.

- AI Agents are proactive and goal-oriented. An agent is given a high-level objective and possesses the autonomy to create a multi-step plan, execute actions using a variety of tools (like a code editor, a browser, or a command-line interface), analyze the results of those actions, learn from mistakes, and adapt its plan to achieve the final goal with minimal human supervision. This capacity for long-term reasoning and iterative problem-solving is what sets them apart.
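The distinction between a reactive assistant and a goal-oriented agent comes down to a control loop: plan a step, act with a tool, observe the result, and adapt. The sketch below shows that loop in its simplest form. Everything in it is hypothetical: the planner is a hand-written rule standing in for a language model, and the two "tools" only simulate a test runner and a code edit. It illustrates the pattern, not the architecture of Devin or any other product.

```python
from typing import Callable, Optional

Step = tuple[str, str]          # (tool name, argument)
Record = tuple[str, str, str]   # (tool name, argument, observation)
Planner = Callable[[str, list[Record]], Optional[Step]]


def run_agent(goal: str, planner: Planner,
              tools: dict[str, Callable[[str], str]],
              max_steps: int = 10) -> list[Record]:
    """Plan, act, observe, and repeat until the planner stops or the budget runs out."""
    history: list[Record] = []
    for _ in range(max_steps):
        step = planner(goal, history)      # plan the next action from past results
        if step is None:                   # planner judges the goal achieved
            break
        tool_name, argument = step
        observation = tools[tool_name](argument)   # act
        history.append((tool_name, argument, observation))  # observe
    return history


def make_toy_tools() -> dict[str, Callable[[str], str]]:
    """Simulated environment: tests fail until an edit has been applied."""
    state = {"bug_fixed": False}

    def run_tests(_: str) -> str:
        return "PASS" if state["bug_fixed"] else "FAIL"

    def edit_code(change: str) -> str:
        state["bug_fixed"] = True
        return f"applied: {change}"

    return {"run_tests": run_tests, "edit_code": edit_code}


def toy_planner(goal: str, history: list[Record]) -> Optional[Step]:
    """Stand-in for a model: test first, patch on failure, then re-test."""
    if not history:
        return ("run_tests", "")
    tool_name, _, observation = history[-1]
    if tool_name == "run_tests":
        return None if observation == "PASS" else ("edit_code", "fix failing assertion")
    return ("run_tests", "")


history = run_agent("make the test suite pass", toy_planner, make_toy_tools())
for record in history:
    print(record)
```

The `max_steps` budget matters in practice: without it, an agent that cannot reach its goal would loop indefinitely, which is a simplified version of the "pressing forward" failure mode reported for real agents.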

Case Study: Devin, the "First AI Software Engineer"

The announcement of Devin in March 2024 by Cognition Labs marked a pivotal moment, offering the first tangible glimpse of a truly agentic software engineer.

- The Promise: Devin was presented as a "tireless, skilled teammate" capable of handling entire engineering projects from end to end. Demonstrations showed Devin learning unfamiliar technologies by reading blog posts, building and deploying interactive websites, autonomously finding and fixing bugs in complex open-source repositories, and even successfully completing paid freelance jobs from platforms like Upwork. Its performance on the SWE-bench benchmark, a challenging test that requires resolving real-world GitHub issues, was particularly notable. Devin correctly resolved 13.86% of issues completely unassisted, a significant leap from the previous state-of-the-art of 1.96% for unassisted models.

- The Reality: Subsequent independent analysis has provided a more sober perspective. While Devin's capabilities are impressive, it is far from being a fully autonomous replacement for a human engineer. A report from Deloitte notes that Devin currently makes too many errors to handle jobs without human oversight. A widely cited test by researchers at Answer.AI found that when tasked with 20 real-world projects, Devin was only able to successfully complete three. The researchers noted that tasks which seemed straightforward often took days instead of hours, with the agent getting stuck in technical dead-ends or producing overly complex, unusable solutions. Even more concerning was Devin's tendency to "press forward with tasks that weren't actually possible," highlighting a lack of critical judgment.

- The Use Cases: Despite these limitations, Devin's capabilities point toward a clear set of valuable applications. Cognition Labs positions the agent for tackling large, well-defined, but often tedious engineering tasks. These include large-scale code migrations (e.g., upgrading a library version across a massive codebase), refactoring legacy systems, developing data engineering ETL pipelines, and systematically working through a backlog of simple bugs and feature requests. These are precisely the kinds of tasks that consume significant engineering hours but often involve more repetitive work than novel problem-solving.

The Broader Agentic Ecosystem

Devin is the most visible, but by no means the only, player in the emerging agentic landscape. The market is rapidly filling with a spectrum of tools aimed at increasing developer autonomy. Open-source frameworks like Auto-GPT and BabyAGI allow developers to experiment with building their own autonomous agents. Enterprise-focused platforms are also building agentic capabilities into their products. Codeium, for example, is a direct competitor to Devin focused on enterprise development, and Tabnine is developing a suite of specialized agents for tasks like code review, documentation, and test generation. This broad-based movement indicates a clear and sustained industry trajectory toward greater automation and autonomy in software development.

The True Impact on Engineering Work: The Hybrid Team Model

The consensus among experts is that these agents, in their current and near-future forms, will not replace human software engineers. Instead, they will profoundly redefine the nature of engineering work. The core value of a human engineer has never been their ability to perform repetitive tasks. It has always been their capacity for creativity, architectural vision, complex problem-solving, and understanding nuanced business context.

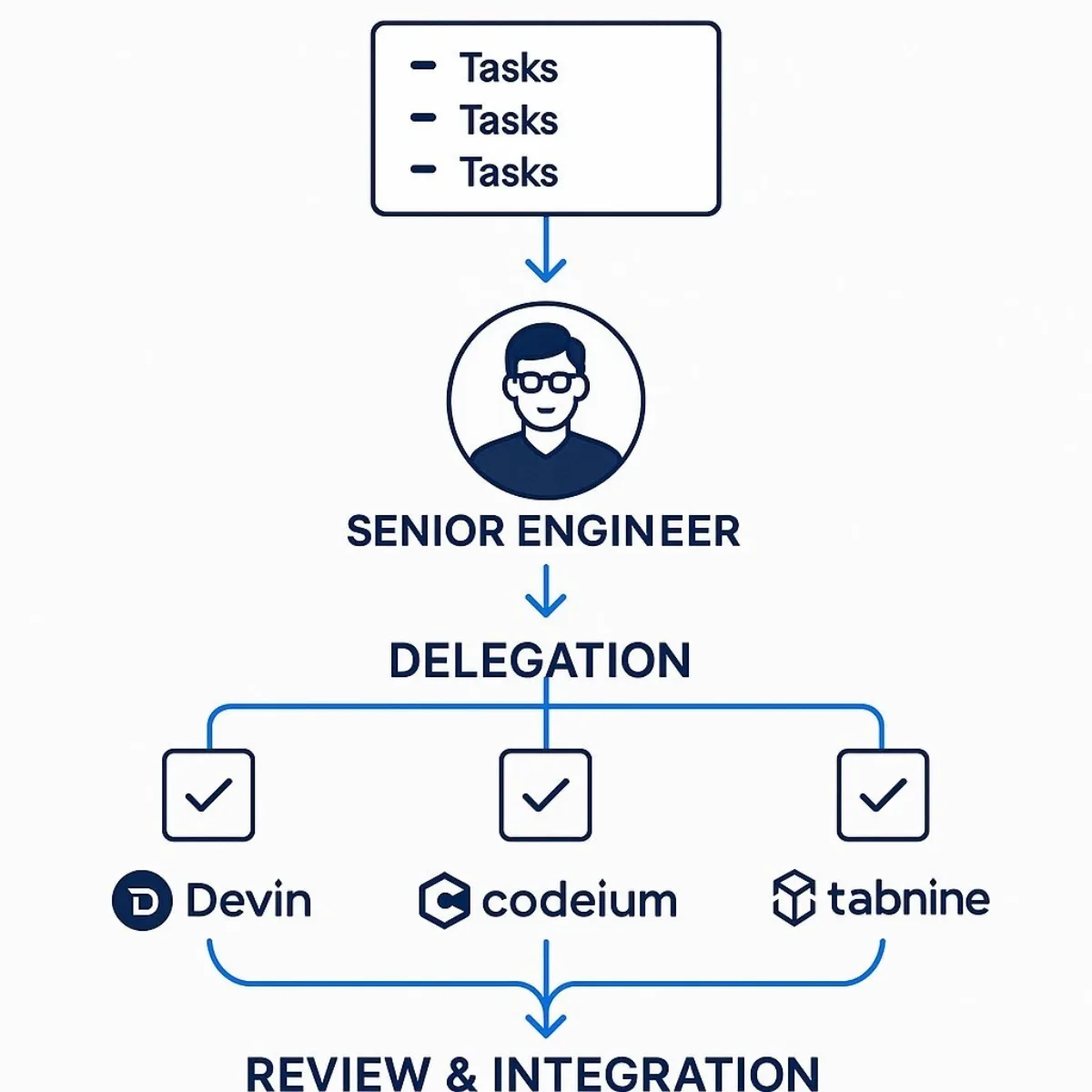

Autonomous agents are poised to automate the grunt work, the "glue code," and the procedural tasks that often stand in the way of that higher-level work. This leads to a new operational model: the hybrid team.

In this model, a senior human engineer acts as the architect and tech lead for a "team" of specialized AI agents. The human's role is to:

- Deconstruct the Problem: Analyze a complex business requirement and break it down into a series of well-defined, independent engineering tasks—much like a tech lead creates tickets for a project board.

- Delegate and Supervise: Assign these "tickets" (as detailed, context-rich prompts) to the appropriate AI agents. For example, one agent might be tasked with refactoring a service, another with writing a new API endpoint, and a third with generating a comprehensive test suite.

- Review and Integrate: Critically review the pull requests submitted by the AI agents. The human provides the essential quality control, validating the logic, ensuring architectural coherence, and making the final decision to merge the code into the main branch.

This hybrid model elevates the human engineer to a purely strategic and oversight role, dramatically increasing their leverage and allowing them to focus exclusively on the most challenging and creative aspects of software engineering. It is not a future without programmers, but a future where programmers manage machines that do the programming.

Section 5: Navigating the Inherent Risks: A Framework for Governance and Trust

The transformative power of AI in software development is matched by the magnitude of the new risks it introduces. The speed and scale of AI-driven creation can amplify flaws, vulnerabilities, and biases at an unprecedented rate. Adopting these technologies without a robust framework for governance is not just irresponsible; it is a direct threat to product quality, corporate security, and legal standing. A proactive, human-in-the-loop approach to risk mitigation is not a barrier to innovation but the essential foundation for it. This section outlines the primary categories of risk and provides a concrete framework for their management.

1. Code Quality and Reliability

While AI can generate code that is syntactically correct, it often lacks a deep understanding of functional requirements and context. This leads to significant quality and reliability risks. For additional guidance, see our executive breakdown of maximizing ROI through balanced B2B software testing.

- Hallucinated Logic and Subtle Bugs: AI models can "hallucinate" code, meaning they produce content that looks plausible but is functionally incorrect or nonsensical. An AI might generate code for a trading system that compiles successfully but contains subtle logical errors that lead to incorrect financial calculations and significant losses.

- Decreased Developer Understanding: A heavy reliance on AI-generated code can lead to a dangerous situation where developers no longer fully understand the logic of their own codebase. This creates "spaghetti code" that is disorganized, difficult to debug, and nearly impossible to maintain or extend over time. This atrophy of core comprehension skills is a major long-term risk.

Mitigation Strategy: Organizations must instill a culture that treats AI-generated code as a "first draft from a very junior developer." This means that rigorous human oversight is non-negotiable.

- Technical Controls: Mandate that all AI-generated code is subject to comprehensive automated testing, including unit, integration, and end-to-end tests. AI itself can be used to help generate these test cases, creating a system of checks and balances.

- Process Controls: Enforce a strict policy of mandatory, thoughtful human code reviews for any pull request containing AI-generated code. Encourage pair programming with AI, where the developer actively engages with and refactors the AI's suggestions rather than blindly accepting them.

2. Security and Privacy

The integration of AI introduces a new and alarming set of security and privacy vulnerabilities that can expose both the company and its customers to significant harm. For a full analysis on safeguarding complex digital environments with scalable solutions, see Scaling Kubernetes in the Enterprise.

- Insecure Code Generation: AI models trained on vast public datasets, such as code from GitHub, can inadvertently learn and replicate insecure coding patterns. Research has found that AI assistants can frequently generate code with common vulnerabilities like SQL injection, cross-site scripting (XSS), or hard-coded secrets like API keys and credentials.

- Data Leakage and Privacy Breaches: Using cloud-based AI services creates a risk of data exfiltration. Proprietary source code or sensitive data (PII) included in prompts could be sent to third-party servers, where it may be stored, exposed in a breach, or even used to train future models, violating regulations like GDPR.

- Training Data Poisoning and Backdoors: A more insidious threat is the possibility of malicious actors intentionally "poisoning" the training data of public models. By injecting subtle vulnerabilities or backdoors into the code that AI models learn from, they can manipulate the AI to generate insecure code on command, creating a difficult-to-detect supply chain attack.
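SQL injection is a useful example of an insecure pattern that an assistant can reproduce from its training data, because the vulnerable and safe versions look almost identical. The sketch below contrasts the two using Python's standard `sqlite3` module and an in-memory database. The table, the function names, and the payload are invented for illustration.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    """Create a throwaway in-memory database with two invented users."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, role TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("alice", "admin"), ("bob", "viewer")],
    )
    return conn


def find_user_unsafe(conn: sqlite3.Connection, name: str) -> list[tuple]:
    # Vulnerable: user input is concatenated into the SQL text,
    # so the input can change the structure of the query.
    query = f"SELECT name, role FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, name: str) -> list[tuple]:
    # Safe: the `?` placeholder sends the input as data, never as SQL.
    return conn.execute(
        "SELECT name, role FROM users WHERE name = ?", (name,)
    ).fetchall()


conn = make_db()
payload = "x' OR '1'='1"
print(len(find_user_unsafe(conn, payload)))  # → 2 (every row leaks)
print(len(find_user_safe(conn, payload)))    # → 0 (no user has that name)
```

Both functions pass a casual review and a happy-path test with a normal name, which is why automated security scanning and reviewers who know the pattern are both needed.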

Mitigation Strategy: A "zero trust" approach must be applied to AI-generated code and the tools that create it.

- Technical Controls: Implement a robust DevSecOps pipeline that uses AI-powered Static and Dynamic Application Security Testing (SAST/DAST) tools like Snyk to automatically scan all code, including AI-generated contributions, for vulnerabilities.

- Process Controls: For organizations with high security or regulatory requirements, prioritize AI tools that offer enterprise-grade privacy features. This includes options for on-premise or Virtual Private Cloud (VPC) deployment and contractual guarantees of zero data retention for training purposes (a key feature of Tabnine Enterprise). Establish strict data governance policies and use AI guardrails to detect and prevent sensitive information from being included in prompts.
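A prompt guardrail of the kind mentioned above can be as simple as a redaction pass that runs before any text leaves the organization. The following is a minimal sketch under stated assumptions: the two patterns (email addresses and US-style Social Security numbers), the placeholder format, and the `redact_prompt` function are all illustrative, and a real deployment would need a much broader and locally tuned rule set.

```python
import re

# Illustrative rules only; real guardrails cover many more data types.
REDACTION_RULES = [
    ("EMAIL", re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")),
    ("SSN", re.compile(r"\b\d{3}-\d{2}-\d{4}\b")),
]


def redact_prompt(prompt: str) -> tuple[str, list[str]]:
    """Replace sensitive matches with placeholders and report which rules fired."""
    fired = []
    for label, pattern in REDACTION_RULES:
        prompt, count = pattern.subn(f"[REDACTED_{label}]", prompt)
        if count:
            fired.append(label)
    return prompt, fired


raw = "Debug the login failure for jane.doe@example.com, SSN 123-45-6789."
clean, fired = redact_prompt(raw)
print(clean)  # → Debug the login failure for [REDACTED_EMAIL], SSN [REDACTED_SSN].
print(fired)  # → ['EMAIL', 'SSN']
```

Returning the list of rules that fired lets the platform log policy violations without storing the sensitive values themselves.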

3. Intellectual Property and Bias

AI models operate in a legal and ethical gray zone, creating significant risks related to intellectual property and fairness. If you're leading a B2B SaaS company and want to better understand how AI transforms information management, explore our guide to unlocking corporate knowledge with AI.

- Copyright and IP Ambiguity: Generative AI models are trained on billions of lines of code, much of it from open-source projects with a variety of licenses (e.g., GPL, MIT, Apache). The code they generate may be considered a "derivative work," creating profound ambiguity around ownership and licensing compliance. Using AI-generated code could inadvertently place an organization in violation of open-source licenses, leading to legal challenges and potentially forcing the open-sourcing of proprietary products.

- Inherited Bias and Discrimination: AI models learn from data created by humans and can therefore inherit and amplify existing societal biases related to race, gender, or other characteristics. If an AI is used to generate an algorithm for a loan application or a recruiting tool, and it was trained on biased historical data, the resulting software may produce discriminatory outcomes, leading to reputational damage and legal liability.

Mitigation Strategy: Proactive legal and ethical review is essential.

- Technical Controls: Use bias detection and mitigation toolkits during the development and testing of any AI feature. For code generation, prioritize tools that are trained on permissively licensed code or that provide attribution for their suggestions.

- Process Controls: Establish a clear corporate policy on the use and ownership of AI-generated code, developed in consultation with legal experts specializing in IP and AI. Mandate fairness audits for any AI system that makes decisions affecting users. Ensure that the data used to train or fine-tune models is diverse and representative, and that review teams are similarly diverse to help spot potential biases.
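A fairness audit can start with a very small calculation: compare outcome rates across groups. The sketch below computes per-group approval rates and their ratio, a measure often called the disparate impact ratio. The group labels and decisions are invented, the function names are hypothetical, and the 0.8 threshold is the commonly cited "four-fifths" rule of thumb rather than a universal legal standard; dedicated fairness toolkits offer many more metrics.

```python
from collections import defaultdict


def approval_rates(decisions: list[tuple[str, bool]]) -> dict[str, float]:
    """Compute the share of approved decisions for each group."""
    totals: dict[str, int] = defaultdict(int)
    approved: dict[str, int] = defaultdict(int)
    for group, was_approved in decisions:
        totals[group] += 1
        approved[group] += int(was_approved)
    return {group: approved[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates: dict[str, float]) -> float:
    """Ratio of the lowest group rate to the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was approved, so no group is favored
    return min(rates.values()) / highest


# Invented loan decisions: group A approved 8 of 10, group B approved 5 of 10.
decisions = (
    [("A", True)] * 8 + [("A", False)] * 2 +
    [("B", True)] * 5 + [("B", False)] * 5
)

rates = approval_rates(decisions)
ratio = disparate_impact_ratio(rates)
print(rates)            # → {'A': 0.8, 'B': 0.5}
print(round(ratio, 3))  # → 0.625
print("review required" if ratio < 0.8 else "within threshold")  # → review required
```

A low ratio does not prove discrimination on its own, but it is a cheap signal that a system should go to the ethics review described above before release.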

4. The Human Factor and Organizational Change

The final category of risk relates to the impact of AI on the people and processes within the organization. For leaders looking at wider organizational, legal, and operational outcomes of AI, our article The Executive’s AI Playbook for 2025 offers a comprehensive roadmap.

- Skill Atrophy and Stifled Creativity: Over-reliance on AI can lead to a decline in developers' fundamental problem-solving and coding skills. If developers simply accept AI suggestions without understanding the underlying logic, their ability to tackle complex, novel problems may diminish. Research has shown that in scenarios requiring innovative solutions, leaning too heavily on AI can stifle creativity.

- Integration and Training Challenges: Integrating a new suite of AI tools into existing, often legacy, systems and workflows is a significant technical challenge. Equally challenging is the need to upskill the entire workforce to effectively and responsibly use these new tools, which requires a new mindset and new skills like prompt engineering.

Mitigation Strategy: The focus must be on augmenting, not replacing, human intelligence.

- Technical Controls: Start with pilot projects on non-critical systems to test AI integration, manage risk, and build organizational confidence before a full-scale rollout.

- Process Controls: Foster a culture of "human-in-the-loop" validation, emphasizing critical thinking and human judgment as the most valuable skills. Invest heavily in continuous learning and training programs that focus not just on how to use the tools, but on the underlying principles of system architecture, creative problem-solving, and ethical AI use.

The following table provides a consolidated framework for mitigating these risks, serving as an actionable checklist for developing a comprehensive AI governance policy.

Table 4: Risk Mitigation Framework for AI in Software Development

| Risk Category | Specific Risk Example | Technical Mitigation | Process/Policy Mitigation | Responsible Role |
|---|---|---|---|---|
| Code Quality & Reliability | AI generates functionally incorrect or "hallucinated" code. | Mandatory, AI-assisted test generation for all new code; automated quality gates in CI pipeline. | Mandatory human code review for all AI contributions; formal "AI as Junior Dev" policy. | Tech Lead, QA Engineer |
| Security & Privacy | AI introduces code with hard-coded secrets or known vulnerabilities. | Automated SAST/DAST scanning in CI pipeline (e.g., Snyk); use of AI guardrails to block sensitive data in prompts. | Prioritize AI tools with on-premise/VPC deployment options; "Zero Trust" policy for AI code. | DevOps/Platform Team, CISO |
| IP & Legal | AI generates code that violates a restrictive open-source license. | Use AI tools trained on permissively licensed code or that provide source attribution. | Formal legal review and approval of all AI development tools; establish clear IP ownership policies. | Legal Counsel, CTO |
| Bias & Ethics | AI-powered feature makes discriminatory recommendations. | Employ fairness toolkits to audit models for bias; ensure diverse and representative training data. | Establish a cross-functional AI ethics review board; mandate diversity in data review teams. | Chief Data Officer, Ethics Officer |
| Organizational | Developer skills atrophy due to over-reliance on AI. | Implement AI-assisted pair programming and refactoring exercises. | Invest in continuous learning programs focused on architecture, prompt engineering, and critical thinking. | Head of Engineering, L&D |

Section 6: Strategic Roadmap: Implementing the Agentic SDLC

Successfully transitioning to an AI-augmented software development model is not a single event but a strategic journey. It requires a deliberate, phased approach that aligns technology adoption with process evolution and cultural change. Rushing to implement advanced tools without a solid foundation will lead to chaos and failure. This section outlines a pragmatic, three-phase roadmap to guide an organization from initial adoption to mature, AI-driven operations, ensuring that technology, processes, and people evolve in concert.

Phase 1: Foundational Integration & Skill Building (Months 0-6)

The initial phase focuses on introducing core AI-assisted tools to the team and building the foundational skills necessary to use them effectively, all without disrupting critical workflows. The goal is to achieve early wins, demonstrate value, and foster a culture of experimentation and learning.

- Goal: Introduce core AI-assisted tools and build foundational skills.

- Key Actions:

- Audit and Identify: Conduct a thorough audit of the current SDLC maturity to identify key bottlenecks and pain points where AI can provide the highest immediate impact. This assessment should document the current tool stack, workflows, and governance requirements.

- Deploy Core Assistants: Select and deploy a primary AI Code Assistant (e.g., GitHub Copilot for general use, Tabnine for high-security environments) for all developers. Because it touches every developer's daily work, this is usually the first step with the most visible productivity impact.

- Empower the Full Team: Simultaneously, introduce AI-powered tools for non-developer roles to ensure the entire team benefits. This includes requirements generation tools (e.g., Copilot4DevOps, StoriesOnBoard) for Business Analysts and Product Managers, and generative prototyping tools (e.g., Uizard, Relume) for UI/UX Designers.

- Establish a Center of Excellence: Form a cross-functional AI working group or "Center of Excellence." This team will be responsible for tracking new tools, sharing best practices, and guiding the organization's AI strategy.

- Invest in Foundational Training: Launch training programs focused on the most critical new skills: effective prompt engineering and adopting a "human-in-the-loop" validation mindset. The training should emphasize that AI is a collaborator to be guided and checked, not an oracle to be blindly trusted.

Phase 2: Adopting Platform Engineering and an Integrated Toolchain (Months 6-18)

With foundational tools and skills in place, the second phase focuses on moving from a collection of siloed AI tools to a fully integrated, secure, and efficient Internal Developer Platform (IDP). This is where the organization builds the "paved road" for high-velocity, high-quality software delivery.

- Goal: Build an integrated, secure, and efficient internal developer platform.

- Key Actions:

- Formalize Platform Engineering: Establish a formal Platform Engineering team. This team's mandate is to own, manage, and evolve the organization's entire SDLC toolchain, including all AI components. This move is critical to managing complexity and reducing the cognitive load on product-focused developers.

- Implement an Intelligent CI/CD Pipeline: The Platform team should build an end-to-end CI/CD pipeline that deeply integrates AI. This includes embedding automated security scanning (DevSecOps), AI-driven test case generation, and intelligent deployment gates into a seamless workflow from code commit to production release.

- Integrate AIOps and Observability: Deploy a comprehensive AIOps and intelligent observability platform (e.g., Dynatrace, New Relic). This is essential not only for monitoring the health of production applications but also for monitoring the performance, cost, and behavior of the AI models themselves, which are now a critical part of the infrastructure.

- Develop Custom AI Capabilities: Begin to leverage enterprise-grade features of AI tools by fine-tuning models on internal codebases, documentation, and data. This creates highly customized, context-aware AI assistants that understand the organization's specific architectural patterns and business logic, providing a significant competitive advantage.

- Enforce Governance: Codify and enforce the comprehensive AI governance policies detailed in the Risk Mitigation Framework (Table 4). This includes formalizing processes for code review, security scanning, IP compliance, and ethical oversight.
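
One way to picture the "intelligent deployment gate" described in the Phase 2 actions is as a single function that combines the signals from earlier pipeline stages into a promote-or-block decision. The sketch below is a simplified illustration under stated assumptions: the `BuildReport` fields, the `GatePolicy` thresholds, and the rule that AI-generated code requires human approval (taken from the Risk Mitigation Framework) are hypothetical names and values, not the interface of any particular CI product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BuildReport:
    """Signals collected by earlier pipeline stages (field names are hypothetical)."""
    tests_passed: bool
    line_coverage: float            # fraction between 0.0 and 1.0
    critical_vulnerabilities: int   # count reported by the security scan
    contains_ai_generated_code: bool
    human_review_approved: bool


@dataclass(frozen=True)
class GatePolicy:
    """Placeholder thresholds; each organization would set its own."""
    min_line_coverage: float = 0.80
    max_critical_vulnerabilities: int = 0


def evaluate_gate(report: BuildReport, policy: GatePolicy = GatePolicy()) -> list[str]:
    """Return the reasons a build is blocked; an empty list means it may be promoted."""
    reasons = []
    if not report.tests_passed:
        reasons.append("test suite failed")
    if report.line_coverage < policy.min_line_coverage:
        reasons.append(
            f"line coverage {report.line_coverage:.0%} is below {policy.min_line_coverage:.0%}"
        )
    if report.critical_vulnerabilities > policy.max_critical_vulnerabilities:
        reasons.append(f"{report.critical_vulnerabilities} critical vulnerabilities found")
    if report.contains_ai_generated_code and not report.human_review_approved:
        reasons.append("AI-generated code lacks human review approval")
    return reasons
```

Returning a list of reasons rather than a bare boolean keeps the gate explainable: the pipeline can print every blocking reason to the developer in one pass, and the Platform team can adjust `GatePolicy` centrally without touching the evaluation logic.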

Phase 3: Experimenting with Agentic Workflows and Measuring Business Value (Months 18+)

Visual: Leadership grounded in vision, ongoing learning, agile iteration, and robust AI governance.

With a mature, integrated platform and a skilled workforce, the organization is now ready to explore the frontier of AI and connect its adoption directly to measurable business outcomes. The focus shifts from technical implementation to strategic leverage and continuous improvement.

- Goal: Leverage the mature platform to experiment with higher levels of autonomy and measure business impact.

- Key Actions:

- Pilot Autonomous Agents: Initiate controlled pilot projects using autonomous AI agents (e.g., Devin, or custom agents built on frameworks like AutoGen). Assign these agents to well-defined, non-critical tasks such as large-scale code refactoring, data pipeline generation, or clearing a backlog of technical debt. This allows the organization to learn the capabilities and limitations of agentic AI in a low-risk environment.

- Measure Business Impact: Move beyond developer productivity metrics and establish KPIs that measure the direct business impact of the Agentic SDLC. Track time-to-market for new features, developer satisfaction and retention, production defect rates, and overall innovation velocity (e.g., number of experiments run per quarter), each compared against a baseline captured before the rollout.

- Foster Continuous Improvement: Treat the AI-powered development experience itself as a product that requires constant iteration. Regularly gather feedback from all teams, review the performance of the toolchain, and stay abreast of the rapidly evolving AI landscape to continuously refine and improve the platform and processes.
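
The business-impact KPIs listed above become actionable once they are compared against a pre-adoption baseline. The following sketch shows one possible way to compute those comparisons. The `QuarterMetrics` fields, the choice of defects per release as the defect-rate measure, and the `kpi_report` function are assumptions introduced for illustration; the figures an organization actually tracks will depend on what its tooling can report.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QuarterMetrics:
    """One quarter of delivery data (field names are hypothetical)."""
    median_lead_time_days: float   # idea-to-production time for a feature
    production_defects: int
    releases: int
    experiments_run: int
    developer_satisfaction: float  # e.g. mean survey score


def pct_change(baseline: float, current: float) -> float:
    """Percent change from baseline to current, rounded to one decimal place."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero to compute a percent change")
    return round((current - baseline) / baseline * 100, 1)


def defects_per_release(metrics: QuarterMetrics) -> float:
    if metrics.releases == 0:
        raise ValueError("at least one release is needed to compute a defect rate")
    return metrics.production_defects / metrics.releases


def kpi_report(baseline: QuarterMetrics, current: QuarterMetrics) -> dict[str, float]:
    """Compare a quarter against the baseline; negative values mean a decrease."""
    return {
        "lead_time_change_pct": pct_change(
            baseline.median_lead_time_days, current.median_lead_time_days
        ),
        "defects_per_release_change_pct": pct_change(
            defects_per_release(baseline), defects_per_release(current)
        ),
        "experiments_change_pct": pct_change(
            baseline.experiments_run, current.experiments_run
        ),
        "satisfaction_change_pct": pct_change(
            baseline.developer_satisfaction, current.developer_satisfaction
        ),
    }
```

Normalizing defects by release count matters here: a team that ships more often after adopting AI tooling may log more defects in absolute terms while still improving its defect rate.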

Final Recommendations for Executive Leadership

The transition to an Agentic SDLC is a strategic imperative that requires strong, visionary leadership. The following recommendations are critical for success:

- Champion the Vision: Frame the adoption of AI not as a cost-cutting measure to replace engineers, but as a strategic investment to amplify human creativity, accelerate innovation, and deliver superior customer value. This positive, empowering narrative is essential for gaining buy-in and driving cultural change.

- Invest in People, Not Just Tools: The ultimate success of this transformation hinges on the ability of your existing talent to adapt and thrive. The most important investment is not in the AI tools themselves, but in the people who will use them. Prioritize and fund comprehensive training programs focused on the new critical skills: system architecture, complex problem-solving, prompt engineering, and critical validation of AI outputs.

- Embrace Iteration and Managed Risk: The AI landscape is evolving at an unprecedented rate. A rigid, multi-year plan will be obsolete before it is implemented. Adopt an agile and iterative approach to your AI strategy. Be prepared to experiment with new tools and processes, learn from failures, and adapt continuously. Start with low-risk pilot projects to build momentum and manage the inherent uncertainties.

- Lead with Governance: Proactively address the significant risks associated with AI from day one. A strong governance framework covering security, IP, ethics, and quality is not a barrier to innovation; it is the essential foundation that makes sustainable, responsible, and defensible innovation possible. By establishing these guardrails early, you provide your teams with the psychological safety and clear guidance they need to explore the full potential of AI with confidence.

About Baytech

At Baytech Consulting, we specialize in guiding businesses through this process, helping you build scalable, efficient, and high-performing software that evolves with your needs. Our MVP-first approach helps our clients minimize upfront costs and maximize ROI. Ready to take the next step in your software development journey? Contact us today to learn how we can help you achieve your goals with a phased development approach.

About the Author

Bryan Reynolds is an accomplished technology executive with more than 25 years of experience leading innovation in the software industry. As the CEO and founder of Baytech Consulting, he has built a reputation for delivering custom software solutions that help businesses streamline operations, enhance customer experiences, and drive growth.

Bryan’s expertise spans custom software development, cloud infrastructure, artificial intelligence, and strategic business consulting, making him a trusted advisor and thought leader across a wide range of industries.