How to Choose the Right AI Coding Tools: Subscription vs. Pay-As-You-Go for CTOs

August 12, 2025 / Bryan Reynolds

The AI Coding Revolution: A CTO's Guide to Choosing Your Tools—Subscription vs. Pay-As-You-Go

Introduction: Navigating the AI Cambrian Explosion

The world of software development is in the midst of a seismic shift, a period of explosive innovation that industry analysts have dubbed the "Cambrian explosion of AI tools". A new category of software—the AI coding assistant or "agent"—has emerged, promising to dramatically accelerate productivity, improve code quality, and redefine the development lifecycle. For B2B technology leaders, from visionary CTOs to strategic CFOs, this presents both a massive opportunity and a significant challenge.

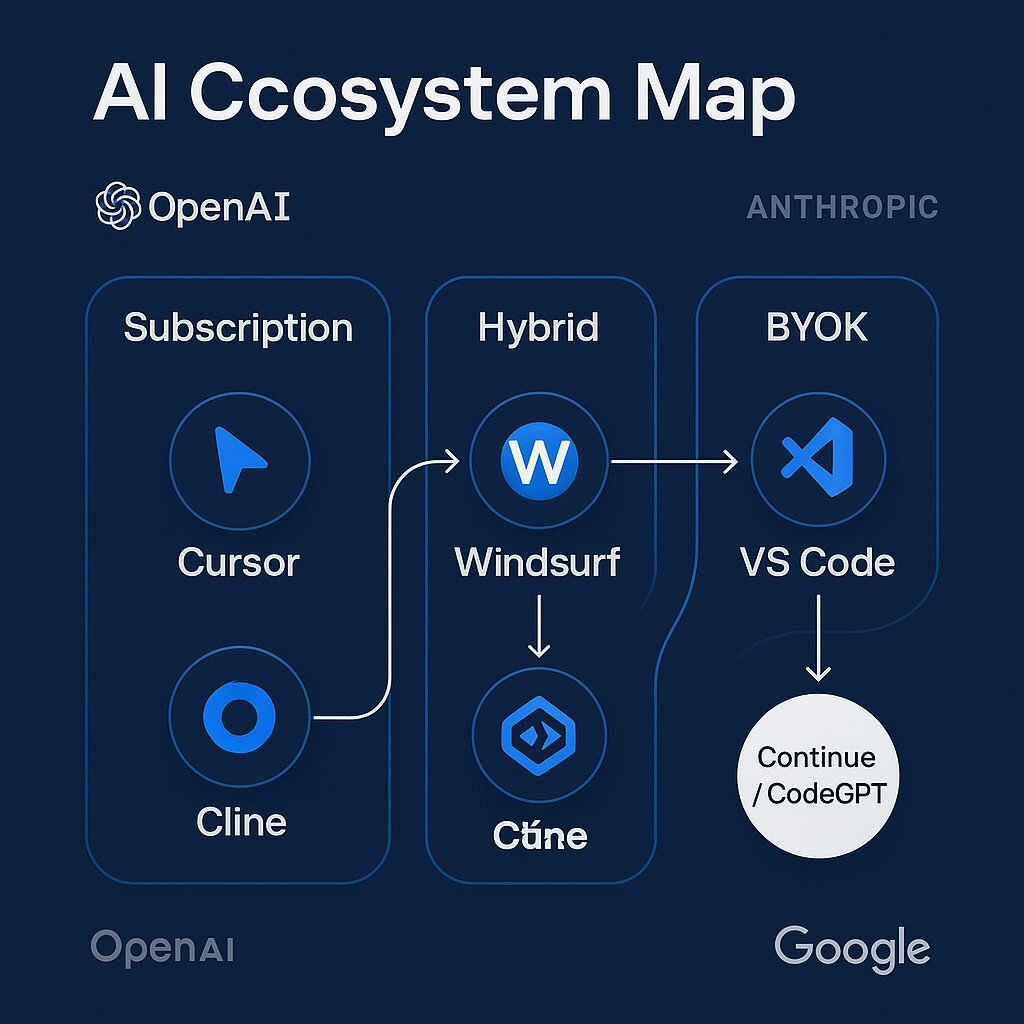

The market is crowded with powerful but confusing options, each with its own philosophy, feature set, and pricing model. This fragmentation has led to a rise in "shadow IT," as developers individually experiment with a dizzying array of tools like Cursor, Windsurf, Cline, and various VS Code extensions, creating overlapping costs and security blind spots for their organizations. Meanwhile, many seasoned developers remain skeptical, questioning whether these tools provide enough value to justify their cost and the disruption to established workflows.

Choosing the right AI coding assistant is no longer a simple technical decision delegated to an engineering manager. It is a critical strategic choice with profound financial, security, and operational implications that will shape your team's performance for years to come. At Baytech Consulting, we navigate this complex landscape daily, both for our enterprise clients and for our own elite engineering teams. We leverage cutting-edge tech stacks—including Azure DevOps, VS Code, Kubernetes, and PostgreSQL—to deliver robust, scalable software, and the strategic integration of AI is central to our process. This guide is born from that experience. It is designed to cut through the marketing hype and provide B2B leaders with a clear, honest framework for making an informed decision.

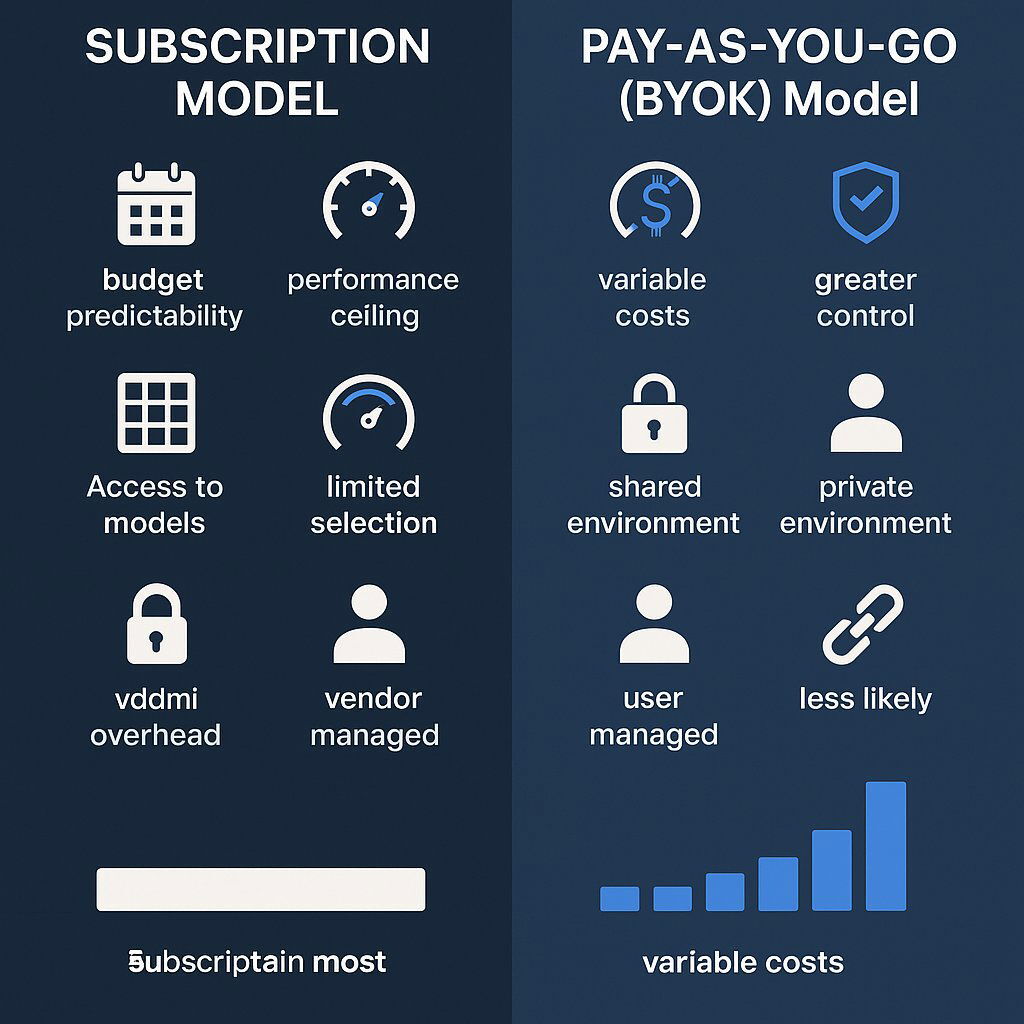

The Fundamental Choice: Predictable Costs vs. Ultimate Flexibility

Before diving into the specifics of any single tool, the most critical decision a leadership team must make revolves around the pricing model. This is the fundamental fork in the road, and it dictates far more than just how you'll be billed. It defines the incentives of your tool provider, the performance ceiling of the AI, and the level of security and control you retain over your most valuable asset: your source code. The choice boils down to two dominant philosophies: the all-inclusive predictability of a subscription versus the raw, unconstrained power of a pay-as-you-go model.

The Subscription Model: The All-Inclusive Resort

The subscription model is, on its face, the simplest and most appealing option, especially for organizations that prioritize predictable budgets. For a flat monthly fee per developer, tools like GitHub Copilot, Cursor, and Windsurf offer access to a suite of AI features. This model is easy to understand, easy to budget for, and easy to sell to a finance department wary of runaway technology costs. It promises an "all-you-can-eat" buffet of AI assistance, with ongoing updates and support often bundled into the price.

However, this alluring simplicity masks a fundamental misalignment of incentives. While you pay a fixed price, the provider's cost is variable, directly tied to the computational resources—measured in "tokens"—that your developers consume with every single query. To protect their profit margins, providers are financially incentivized to minimize their cost-per-request. This can lead to several hidden performance trade-offs:

- Aggressive Context Compression: To keep API calls cheap, the service may aggressively summarize or discard parts of the conversation history and referenced code files. This is the most common cause of user frustration, where the AI agent seems to "forget" key details or instructions midway through a complex task. One developer on a public forum described this exact issue with a subscription-based tool, noting that after a few prompts, context is lost and they must constantly re-add files to the conversation, a clear symptom of the provider's cost-saving measures.

- Lack of Transparency: You often have little visibility into which underlying AI model is being used or how your prompts are being modified behind the scenes. The provider may route your requests to older, cheaper, or less capable models to save money, without informing you.

- Performance Ceilings: The incentive to limit usage prevents the agent from achieving true autonomy. A developer cannot give the tool a complex, 20-step plan and trust it to execute reliably, because the constant context compression will cause it to "lose the plot." Instead, developers are forced to "babysit" the agent, feeding it one small step at a time.

Ultimately, the subscription model creates a zero-sum game between the provider's profitability and the user's access to the AI's full potential. The "predictable cost" that appeals to the CFO can quickly become a "predictable performance ceiling" for the CTO, limiting the tool's ROI and frustrating the very power users you hired to solve your most difficult problems.

The Pay-As-You-Go (BYOK) Model: The Custom-Built Race Car

The alternative is the pay-as-you-go (PAYG) model, most powerfully implemented as "Bring Your Own Key" (BYOK). With this approach, the AI coding tool itself is often free and open-source, and you connect it to an AI provider like OpenAI, Anthropic, or Google using your own API key. You pay the AI provider directly for what you use, measured by the number of input and output tokens your team consumes. Tools like Cline, Continue, and CodeGPT are built around this philosophy.

This model offers unparalleled power, flexibility, and security, but it comes with the trade-off of financial unpredictability.

- Aligned Incentives and Peak Performance: In a BYOK model, the tool developer is no longer responsible for the cost of running the AI. Their sole incentive is to build the most capable, powerful software possible to win your loyalty. This means they encourage large context windows and complex, multi-step reasoning because they know it produces the best results—and you, the user, are in control of the cost. You get unrestricted access to the absolute latest and most powerful AI models the moment they are released, allowing you to switch from GPT-4o to Claude 3.5 Sonnet with a simple dropdown menu.

- Unmatched Security and Control: For any enterprise, but especially those in regulated industries like finance and healthcare, the security implications of BYOK are a game-changer. Your proprietary source code is never sent to the tool provider's servers. The data flows directly from the developer's machine (the client) to the secure infrastructure of the AI provider (e.g., OpenAI). This "Zero Trust by Design" architecture makes tools like Cline, which is 100% open-source and runs entirely client-side, the only viable option for many organizations with strict security and compliance mandates like GDPR or HIPAA. For a comprehensive look at secure AI implementation, see The Replit AI Disaster: A Wake-Up Call for Every Executive on AI in Production.

- Future-Proofing and No Vendor Lock-In: By decoupling the coding tool (the interface) from the AI model (the engine), you avoid vendor lock-in. If a new, revolutionary model is released tomorrow by a different lab, you can adopt it instantly without having to change your team's entire workflow or IDE.

The primary drawback, and it is a significant one, is the potential for unpredictable billing. A single developer working on a complex problem could rack up substantial costs in a short period. This model requires a higher degree of technical sophistication and a commitment to active financial governance, including monitoring, setting usage caps, and training developers on cost-optimization techniques.
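To make the billing risk concrete, here is a minimal sketch of how token-based spend could be estimated. The model names and every figure in `PRICES` are placeholders invented for illustration, not any provider's actual rates, and the helper names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelPrice:
    """USD price per one million tokens (placeholder values, not real rates)."""
    input_per_million: float
    output_per_million: float


# Hypothetical price table; replace with your provider's published rates.
PRICES = {
    "premium-model": ModelPrice(input_per_million=10.0, output_per_million=30.0),
    "budget-model": ModelPrice(input_per_million=0.5, output_per_million=1.5),
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Estimate the USD cost of a single request from its token counts."""
    price = PRICES[model]
    return (
        input_tokens / 1_000_000 * price.input_per_million
        + output_tokens / 1_000_000 * price.output_per_million
    )


def monthly_cost(
    model: str,
    requests_per_day: int,
    input_tokens: int,
    output_tokens: int,
    working_days: int = 21,
) -> float:
    """Project one developer's monthly spend from an average request profile."""
    per_request = request_cost(model, input_tokens, output_tokens)
    return per_request * requests_per_day * working_days


if __name__ == "__main__":
    # An agentic workflow that resends a large context on every turn.
    heavy = monthly_cost("premium-model", 200, 50_000, 2_000)
    light = monthly_cost("budget-model", 200, 50_000, 2_000)
    print(f"premium: ${heavy:.2f}/month, budget: ${light:.2f}/month")
```

Under these assumed prices, the same usage pattern differs in cost by more than an order of magnitude depending on the model, which is why the governance steps later in this guide matter.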

For the CTO, however, BYOK is more than a pricing model; it's a strategic choice. It is a commitment to providing your team with the most powerful tools available, maintaining maximum security and control over your intellectual property, and building a flexible foundation that can adapt to the rapid evolution of AI.

Table 1: Subscription vs. Pay-As-You-Go at a Glance

| Strategic Factor | Subscription Model | Pay-As-You-Go (BYOK) Model |
|---|---|---|
| Budget Predictability | High. Fixed monthly cost per user. | Low. Costs are variable and based on actual usage. |
| Performance Ceiling | High risk. Provider is incentivized to limit usage, potentially capping performance. | Low risk. User has access to the model's full capabilities; performance is limited by budget. |
| Access to Frontier Models | Delayed. Users are dependent on the provider to integrate new, potentially more expensive, models. | Instant. Users can switch to the latest models from any provider immediately upon release. |
| Security & Data Privacy | Moderate to High Risk. Proprietary code is sent to the tool provider's servers for processing. | Low Risk. "Zero Trust" model where code is never seen by the tool provider. Ideal for compliance. |
| Administrative Overhead | Low. Simple procurement and billing process. | High. Requires active cost monitoring, budget caps, and developer training. |
| Vendor Lock-In Risk | High. Workflows are tied to a single provider's ecosystem and their choice of AI models. | Low. The tool is decoupled from the AI model, allowing for easy migration to new platforms. |

The Contenders: A Strategic Breakdown of Today's Top AI Coding Platforms

With a clear understanding of the fundamental trade-offs between pricing models, we can now analyze the leading tools in the market. The most effective development organizations recognize that there is no single "best" tool. Instead, they adopt a "right tool for the right job" mentality, understanding that each platform excels in a different phase of the development lifecycle.

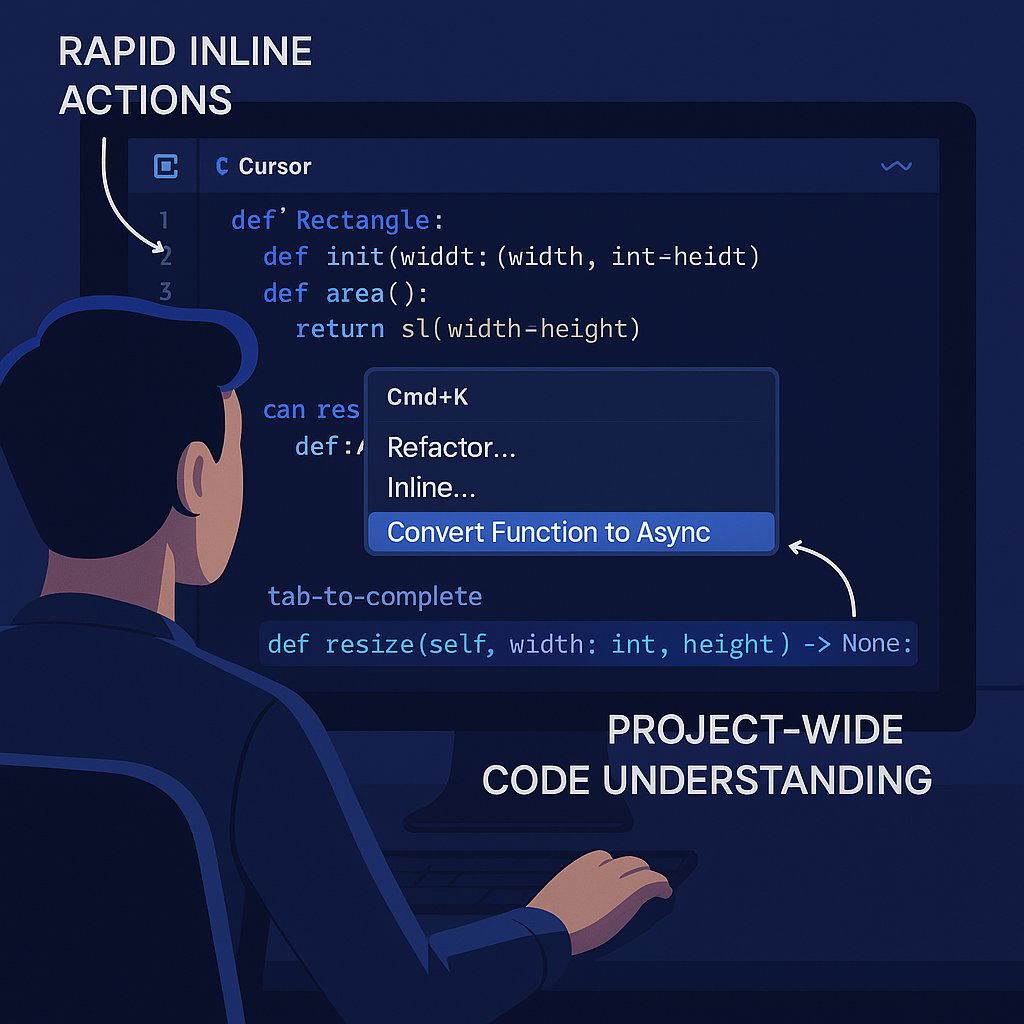

Cursor: The Power User's Daily Driver

- Profile: Cursor is a complete, standalone Integrated Development Environment (IDE) that is "forked" from the popular Visual Studio Code. This means it feels instantly familiar to most developers but is supercharged with deeply integrated AI capabilities. It is designed from the ground up to maximize the day-to-day productivity of a developer engaged in intensive coding.

- Strengths: Cursor's magic lies in its speed and seamless integration. It excels at inline actions, allowing a developer to highlight a block of code and refactor, explain, or generate tests with a simple command (`Cmd+K`). It has a deep, project-wide understanding of the codebase, enabling complex, multi-file edits. Its "tab-to-complete" feature, which intelligently predicts and completes entire blocks of code, is frequently described by users as a game-changing experience that makes them feel at least "2x more productive" than with other tools.

- Pricing & Ideal Use Case: Cursor offers a hybrid model with both a monthly subscription (which includes a generous amount of usage) and a BYOK option for users who need more power or control. It is the ideal daily driver for senior developers whose primary role is writing, debugging, and refining code.

- Weaknesses: As a primarily subscription-based service, it can suffer from the context management issues discussed earlier, where it may "forget" details in a long-running conversation. It is also closed-source, which can be a non-starter for organizations that require the ability to audit their tools.

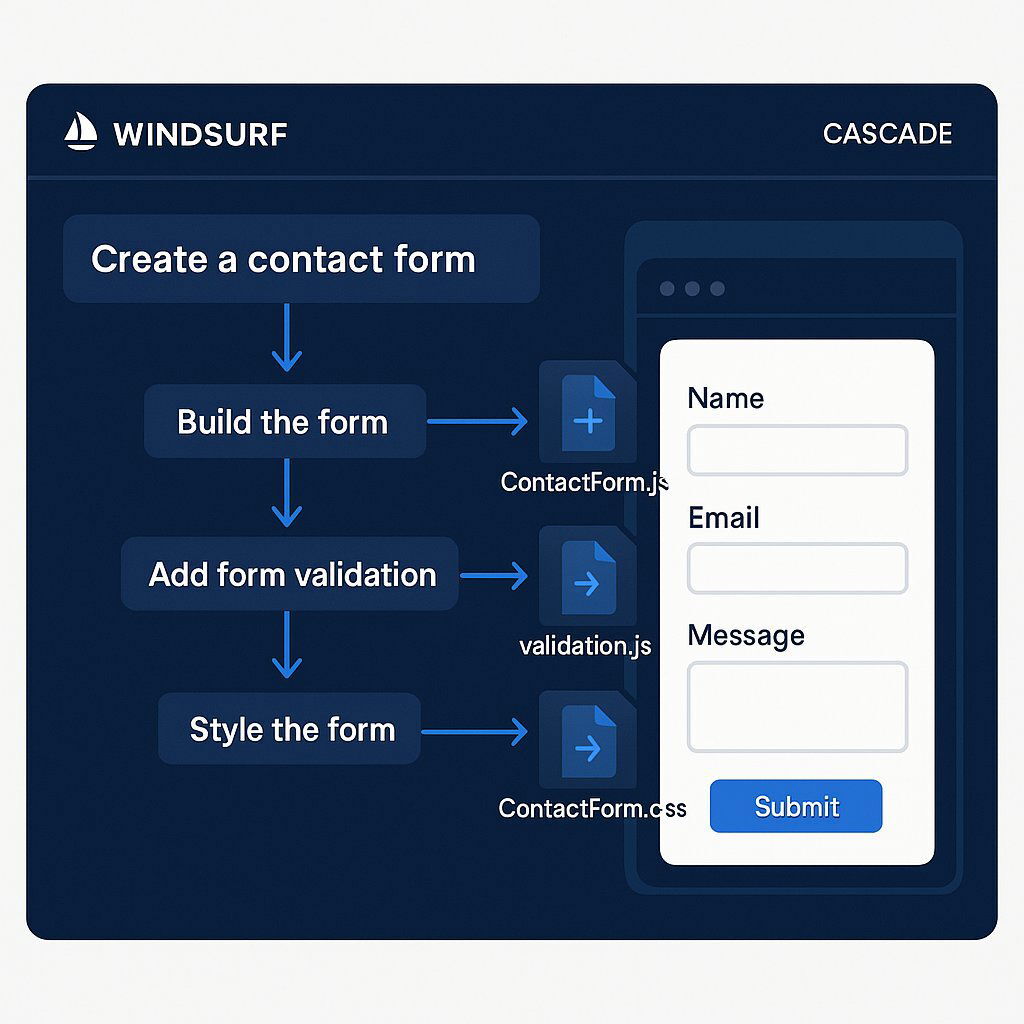

Windsurf: The Autonomous Feature-Builder

- Profile: Windsurf positions itself as an agent-based coding platform. Its flagship feature, "Cascade," is designed to operate with more autonomy than a typical assistant. A developer can give Cascade a high-level goal, and the agent will break it down into a plan of subtasks, execute terminal commands, and create or edit multiple files to achieve the objective.

- Strengths: Windsurf shines in scenarios that involve scaffolding new projects or building out entire features from a prompt. Users praise its clean, intuitive user experience and its ability to take a natural language request and return a fully working web preview, server and all. Its agentic workflow feels like delegating a task rather than pair-programming.

- Pricing & Ideal Use Case: Windsurf uses a subscription model that allocates a certain number of "credits" per month, which are consumed by various AI actions. This is effectively a usage-based model within a predictable subscription container. It's best suited for greenfield development, building minimum viable products (MVPs), and automating the creation of boilerplate code.

- Weaknesses: While it has its own editor, it is not a full-featured IDE in the same way as Cursor or VS Code. Some users report that its agentic system, while powerful, can occasionally produce more errors or "nonsensical" results compared to the more controlled approach of Cursor.

Cline: The Enterprise-Grade Security & Transparency Champion

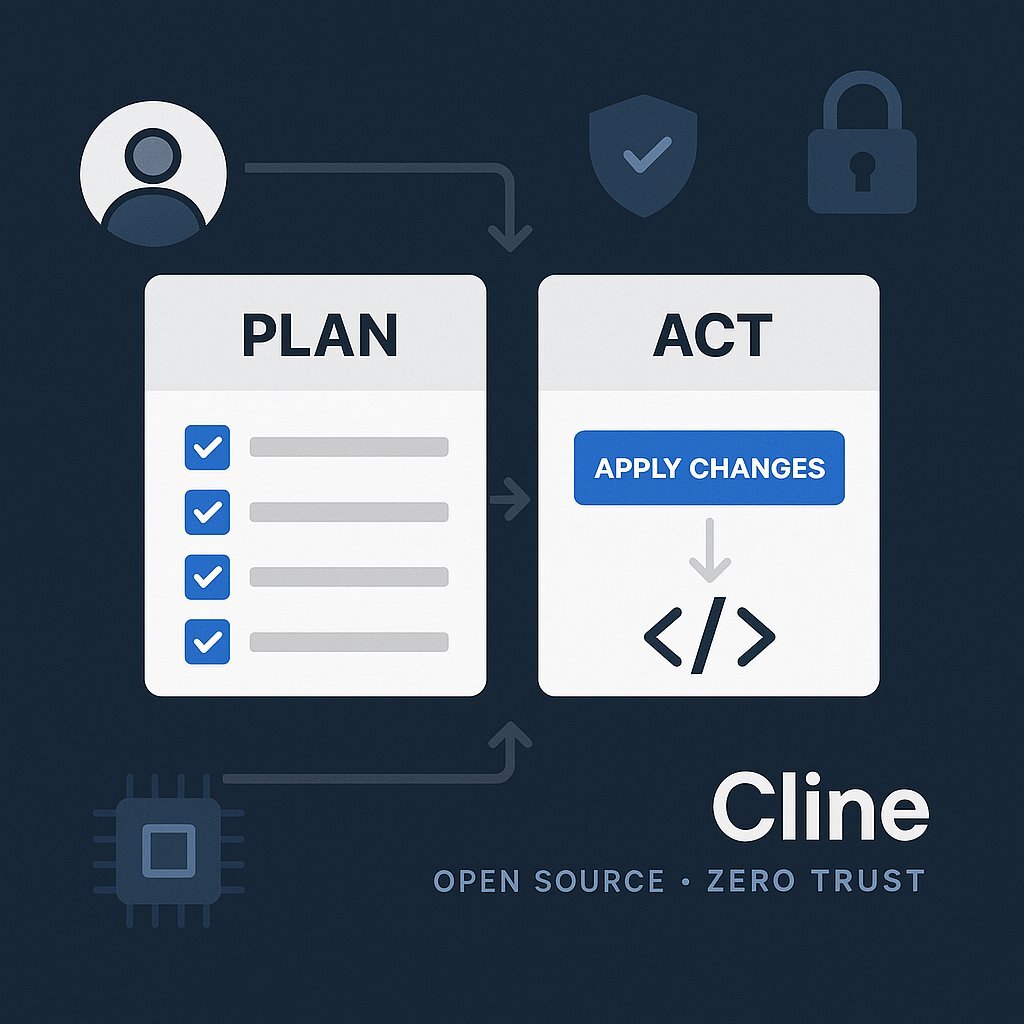

- Profile: Cline is a free, open-source extension for VS Code that operates exclusively on the BYOK model. Its entire philosophy is built on two pillars: security and transparency. It introduces a unique "Plan & Act" workflow. In `Plan` mode, the agent analyzes the codebase and collaborates with the developer to create a detailed, step-by-step implementation strategy. Only after the developer approves the plan does the agent switch to `Act` mode to execute the changes.

- Strengths: Cline's "Zero Trust" client-side architecture is its killer feature for the enterprise. Because the tool is open-source and your code is never sent to Cline's servers, it can be fully audited and deployed in the most security-sensitive environments. The "Plan & Act" mode, while slower, often results in higher-quality output for complex, multi-file tasks because it forces a thorough strategic review before a single line of code is written.

- Pricing & Ideal Use Case: The tool is free; users only pay for the tokens they consume on their own OpenAI, Anthropic, or Google accounts. Cline is the undisputed champion for regulated industries, government contractors, and any organization where data security and auditability are non-negotiable. It is also the best choice for highly complex architectural tasks that benefit from meticulous planning.

- Weaknesses: The power of BYOK comes at a cost. Cline can become expensive if token usage is not carefully managed. It also has a steeper learning curve and lacks some of the polished, "quality-of-life" features of its subscription-based competitors, most notably the predictive tab-completion found in Cursor.

VS Code + Extensions (Continue, CodeGPT): The Customizable Workhorse

- Profile: For many elite development teams, their existing VS Code setup is a highly customized, finely tuned engine of productivity. For them, the best approach is not to replace their IDE, but to augment it with powerful AI extensions that act as a bridge to the latest models.

- Continue: This is a leading open-source, BYOK extension that allows developers to essentially build and share their own custom AI assistants. It's designed for maximum hackability, enabling teams to tailor the AI's behavior, context sources, and rules to their specific projects and internal standards.

- CodeGPT: Another extremely popular extension, CodeGPT connects to a wide array of AI providers and offers a rich feature set, including an "Agent Marketplace" for specialized tasks and a "Knowledge Graph" feature that builds a deep understanding of your entire codebase. It offers a tiered subscription model but also supports a full BYOK mode for enterprise clients.

- Ideal Use Case: This approach is perfect for teams that need the full, unfettered power of a traditional local IDE—with its integrated terminal, world-class debugger, and deep Git integration—but want to layer in state-of-the-art AI capabilities. It offers the ultimate in customizability and control.

Table 2: Feature & Strategic Fit Matrix

| Feature / Factor | Cursor | Windsurf | Cline | VS Code + Continue |
|---|---|---|---|---|
| Full IDE | ✅ (VS Code Fork) | ❌ (Has an editor) | ❌ (VS Code Extension) | ✅ (Native IDE) |
| Autonomous Agent | ⚠️ (Agent mode exists but is less autonomous) | ✅ (Cascade agent plans and executes tasks) | ✅ (Plan & Act modes for controlled autonomy) | ⚠️ (Agent features depend on the extension) |
| Security Model | Closed-Source, Cloud-Based | Closed-Source, Cloud-Based | Open-Source, Zero-Trust (Client-Side Only) | Open-Source, Zero-Trust (Client-Side Only) |
| Primary Business Model | Subscription (with BYOK option) | Subscription (Credit-based) | Pay-As-You-Go (BYOK Only) | Pay-As-You-Go (BYOK Only) |
| Best For (Project Type) | Daily coding, complex refactoring, refinement | Greenfield development, scaffolding, MVPs | Enterprise, regulated industries, complex architectural planning | Augmenting existing, highly customized workflows |
| Key Differentiator | Speed, inline editing, and "magic" tab-completion | Agentic "Cascade" workflow and polished UX | Unmatched security, transparency, and planning capabilities | Maximum customizability and integration with the traditional IDE ecosystem |

The "Price No Object" Stack: How Elite Teams Combine Tools for Maximum Impact

As the AI coding landscape matures, the most sophisticated development organizations are moving beyond the "one tool to rule them all" mindset. They recognize that just as a master carpenter has more than one saw, an elite developer needs a suite of specialized AI assistants. They are pioneering a multi-agent stack, orchestrating different tools for the tasks they perform best. This approach doesn't just add productivity; it multiplies it across the entire development lifecycle.

This new workflow reframes the role of the senior developer from a pure coder into an "orchestrator" of AI agents. Their highest-value activities become strategic planning, precise task delegation, and rigorous quality control. This is a powerful new paradigm for organizing software development, and developers are already sharing workflows online that combine multiple tools to achieve superior results. Here is what that state-of-the-art workflow looks like:

- Phase 1: Planning & Architecture (with Cline): For a new, complex feature, a senior developer or architect begins in a VS Code environment with the Cline extension. They write a high-level prompt describing the desired outcome, business logic, and constraints. Leveraging its BYOK model to access a powerful, large-context model like Claude 3 Opus, Cline analyzes the entire existing codebase, identifies potential dependencies and conflicts, and collaborates with the developer in `Plan` mode to produce a detailed, multi-step implementation strategy. This critical first step ensures the architectural approach is sound before any code is written, preventing costly rework later. For additional guidance on leveraging AI for knowledge work, see Google NotebookLM for Enterprise: Secure, Verifiable AI.

- Phase 2: Scaffolding & Greenfield Development (with Windsurf): With the architectural blueprint from Cline in hand, the task of creating the initial files, boilerplate code, database migrations, and basic UI components is delegated to Windsurf. Its `Cascade` agent is perfectly suited for this type of structured, multi-file creation. The developer can feed it a sub-task from the master plan, such as "Create a new React component for the user dashboard with these three sub-components and a corresponding Storybook file," and Windsurf will execute it, often providing a live preview for immediate validation.

- Phase 3: Core Logic & Intensive Refinement (with Cursor): Once the project skeleton is in place, the developer opens the codebase in the Cursor IDE for the most intensive phase of coding. Here, they leverage Cursor's unparalleled speed and ergonomics. They use the powerful inline editing (`Cmd+K`) to flesh out complex business logic, the intelligent refactoring tools to clean up and optimize the scaffolded code, and the "magic" tab-completion to blaze through repetitive patterns. This is where the developer's deep expertise is combined with the AI's tactical speed to write high-quality, production-ready code.

- Phase 4: Traditional Tooling & Debugging (with VS Code): Throughout the process, the developer seamlessly switches back to a standard VS Code instance (or simply uses the terminal and debugger integrated within Cursor) for tasks where traditional tools still reign supreme. This includes running complex debugging sessions with breakpoints, performing intricate Git operations like interactive rebases, managing local containerized environments with Docker, and deploying to Kubernetes clusters.

This multi-agent approach represents a fundamental shift in how software is built. It allows a single, highly skilled developer to command a team of specialized AI assistants, achieving a level of velocity and quality that was previously unimaginable. For a CTO, this isn't just about buying tools; it's about redesigning workflows and upskilling talent to lead this new paradigm.

Taming the Beast: A CFO's Guide to Managing Pay-As-You-Go Costs

The power of the BYOK model is undeniable, but to a CFO, it can sound like handing developers a blank check. The fear of unpredictable, spiraling costs is the single biggest barrier to adopting this strategically superior model. Success requires a paradigm shift from the passive, set-and-forget world of subscriptions to a new discipline of active cost governance. This is a shared responsibility between engineering and finance, and it transforms cost management from a defensive chore into a competitive weapon. Teams that master these techniques can afford to use the most powerful AI models more frequently and on more complex tasks than their rivals, all while staying on budget. For a broader strategy on integrating AI within your operations, explore How Do We Get Started and Integrate AI?

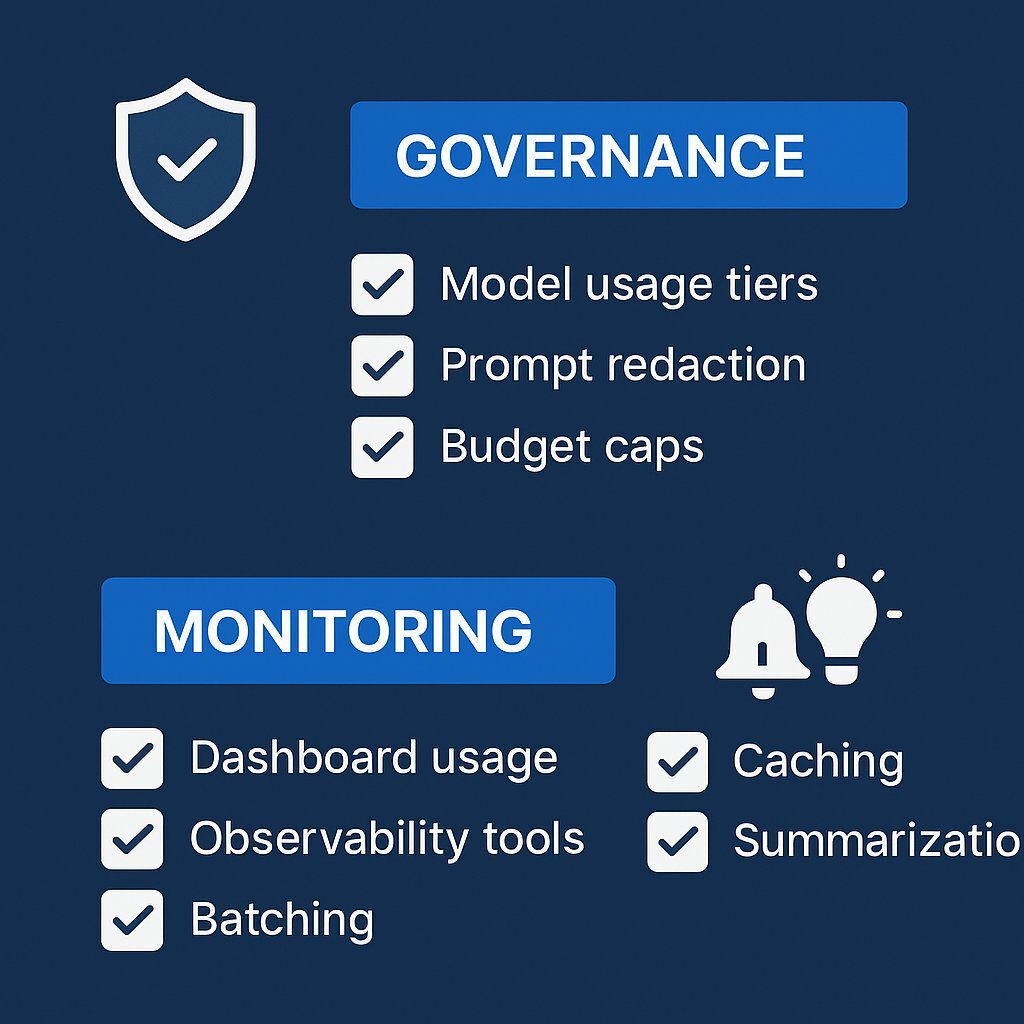

Step 1: Establish Governance and Policies

The foundation of cost control is a clear set of rules. Without them, you are flying blind.

- Tiered Model Usage: Not all tasks require the most powerful (and most expensive) AI model. Establish clear guidelines for your team. For simple, repetitive tasks like writing docstrings or generating boilerplate, mandate the use of cheaper, faster models like GPT-3.5-Turbo or Claude 3 Haiku. Reserve the premium, expensive models like GPT-4o or Claude 3 Opus for high-value, complex tasks like architectural design, critical code refactoring, or summarizing dense technical documentation.

- Safe Prompting Policies: Train your developers on "prompt hygiene". This is both a security and a cost measure. Policies should strictly forbid pasting proprietary code, API keys, customer data, or other sensitive information into prompts. All code should be reviewed and redacted before being used as context, protecting your IP and preventing the accidental consumption of thousands of unnecessary tokens.
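The two policies above could be encoded in tooling rather than left to memory. The following is a minimal sketch under stated assumptions: the task categories, the model labels (illustrative names, not exact API model IDs), and the `sk-` style secret pattern are all hypothetical, and a real redaction policy would need far broader rules.

```python
import re
from enum import Enum


class TaskKind(Enum):
    """Hypothetical task categories a team might define in its usage policy."""
    DOCSTRING = "docstring"
    BOILERPLATE = "boilerplate"
    REFACTOR = "refactor"
    ARCHITECTURE = "architecture"


# Illustrative tier labels; substitute the model IDs your provider exposes.
CHEAP_MODEL = "claude-3-haiku"
PREMIUM_MODEL = "claude-3-opus"

# Only high-value, complex work is routed to the premium tier.
PREMIUM_TASKS = {TaskKind.REFACTOR, TaskKind.ARCHITECTURE}

# Assumed secret format: tokens that look like "sk-..." style API keys.
SECRET_PATTERN = re.compile(r"\bsk-[A-Za-z0-9_-]{16,}\b")


def choose_model(task: TaskKind) -> str:
    """Pick the model tier for a task according to the usage policy."""
    return PREMIUM_MODEL if task in PREMIUM_TASKS else CHEAP_MODEL


def redact_secrets(prompt: str) -> str:
    """Replace anything matching the assumed secret pattern before sending."""
    return SECRET_PATTERN.sub("[REDACTED]", prompt)


if __name__ == "__main__":
    print(choose_model(TaskKind.DOCSTRING))
    print(redact_secrets("client = Client(api_key='sk-abcdefghijklmnop1234')"))
```

Keeping the routing table in one place means the policy can be reviewed and changed by engineering and finance together, without touching individual developers' habits.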

Step 2: Implement Monitoring, Budgeting, and Alerts

You cannot control what you cannot measure. Active monitoring is non-negotiable in a BYOK environment.

- API Provider Dashboards: The first line of defense is the dashboard provided by your AI vendor (e.g., OpenAI, Anthropic). Use it to monitor usage in near real-time. Crucially, set hard spending limits and monthly budgets for your organization and, if possible, for individual developers or projects. This acts as a financial circuit breaker to prevent catastrophic overruns.

- LLM Observability Platforms: For more granular control, integrate a dedicated LLM observability tool. Platforms like LangSmith, OpenLLMetry, and Helicone capture your API calls, either through a proxy or through SDK instrumentation, and give you detailed dashboards that track token usage, cost-per-request, latency, and error rates. Some enterprise-grade tools, like Claude Code, even have built-in support for the OpenTelemetry standard, allowing you to pipe usage data directly into your existing enterprise monitoring systems like Datadog or New Relic.

- Automated Alerts: Use your monitoring tools to set up automated alerts. Configure them to notify a channel in Slack or Teams when daily spending exceeds a certain threshold or when a single developer's usage spikes unexpectedly. This allows you to catch anomalies early before they become significant financial problems.
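The alerting logic described above can be sketched in a few lines. This is an illustrative example only: the `UsageRecord` shape and the thresholds are assumptions, and delivery to Slack or Teams is left out, so the function simply returns the messages a notifier would send.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Iterable, List


@dataclass(frozen=True)
class UsageRecord:
    """One billed request, as it might be exported from a usage dashboard."""
    developer: str
    cost_usd: float


def daily_alerts(
    records: Iterable[UsageRecord],
    team_daily_limit: float,
    per_developer_limit: float,
) -> List[str]:
    """Return alert messages for one day's usage; an empty list means all clear."""
    spend_by_developer = defaultdict(float)
    for record in records:
        spend_by_developer[record.developer] += record.cost_usd

    alerts = []
    total = sum(spend_by_developer.values())
    if total > team_daily_limit:
        alerts.append(
            f"Team spend ${total:.2f} exceeds daily limit ${team_daily_limit:.2f}"
        )
    # Sort so alert order is stable from run to run.
    for developer, spend in sorted(spend_by_developer.items()):
        if spend > per_developer_limit:
            alerts.append(
                f"{developer} spent ${spend:.2f}, above ${per_developer_limit:.2f}"
            )
    return alerts


if __name__ == "__main__":
    today = [
        UsageRecord("alice", 30.0),
        UsageRecord("bob", 5.0),
        UsageRecord("alice", 25.0),
    ]
    for message in daily_alerts(today, team_daily_limit=50.0, per_developer_limit=40.0):
        print(message)
```

A check like this complements, rather than replaces, the hard spending limits set in the provider account: the limit stops the bleeding, while the alert tells you who to talk to.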

Step 3: Optimize Token Usage with Smart Techniques

This is where engineering best practices directly translate into financial savings. Train your team on these token-saving techniques.

- Intelligent Prompt Engineering: Shorter prompts cost less. Teach developers to be concise and eliminate filler words like "please" or "kindly". When sending structured data, use token-efficient formats. Minified JSON is better than formatted JSON, and CSV is often even better, as it eliminates repetitive keys.

- Aggressive Caching: Many queries in a development workflow are repetitive. Implement a caching layer using a tool like Redis to store the responses to common prompts. The first time a developer asks to "generate a unit test for this function," the request goes to the AI. The next time anyone on the team asks the exact same question, the response is served instantly from the cache, costing nothing.

- Request Batching: Instead of sending ten separate, small API requests, it is often more cost-effective to group them into a single, larger request. This avoids resending the same instructions and shared context with every call, and can significantly lower costs for automated tasks.

- Context Summarization: The biggest driver of cost in long, agentic conversations is the ever-growing context window. Instead of sending the entire chat history with every turn, implement a programmatic summarization step. After a few exchanges, a cheaper AI model can be called to summarize the key points of the conversation so far. This summary, rather than the full transcript, is then used as the context for the next turn, drastically reducing input token counts.
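Two of the techniques above, exact-match caching and context summarization, can be sketched as follows. This is a simplified illustration, not a production design: an in-memory dict stands in for Redis, and `call_model` and `summarize` are hypothetical callables that you would wire to your own provider client and to a cheaper summarization model.

```python
import hashlib
from typing import Callable, Dict, List


class PromptCache:
    """Exact-match response cache; a dict stands in for Redis in this sketch."""

    def __init__(self, call_model: Callable[[str, str], str]):
        self._call_model = call_model
        self._store: Dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    def complete(self, model: str, prompt: str) -> str:
        """Return a cached response if this exact (model, prompt) was seen before."""
        key = hashlib.sha256(f"{model}\n{prompt}".encode("utf-8")).hexdigest()
        if key in self._store:
            self.hits += 1
            return self._store[key]
        self.misses += 1
        response = self._call_model(model, prompt)
        self._store[key] = response
        return response


def compact_history(
    messages: List[str],
    summarize: Callable[[List[str]], str],
    keep_recent: int = 4,
) -> List[str]:
    """Replace all but the most recent messages with a single summary message."""
    if len(messages) <= keep_recent:
        return list(messages)
    split = len(messages) - keep_recent
    older, recent = messages[:split], messages[split:]
    return [f"Summary of earlier conversation: {summarize(older)}"] + recent


if __name__ == "__main__":
    def fake_model(model: str, prompt: str) -> str:
        # Stand-in for a real, billed API call.
        return f"[{model}] answer to: {prompt}"

    cache = PromptCache(fake_model)
    cache.complete("cheap-model", "Generate a unit test for parse_date")
    cache.complete("cheap-model", "Generate a unit test for parse_date")
    print(f"hits={cache.hits} misses={cache.misses}")

    history = [f"turn {i}" for i in range(10)]
    print(compact_history(history, lambda older: f"{len(older)} earlier turns"))
```

Note that an exact-match cache only helps when the prompt text is byte-for-byte identical, so it pays off mostly for automated or templated requests; the summarization step trades a small extra call to a cheap model for a much smaller input on every subsequent turn.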

Table 3: Cost Optimization Checklist for BYOK Models

| Category | Checklist Item |
|---|---|
| Governance | Have we defined model-usage tiers (e.g., cheap vs. expensive models for specific tasks)? |
| | Is there a clear policy on redacting sensitive data from prompts? |
| | Are developers trained on writing concise, token-efficient prompts? |
| Monitoring | Are hard spending limits and monthly budgets set in our API provider account? |
| | Is an LLM observability tool (e.g., LangSmith, Helicone) or custom monitoring in place? |
| | Have we configured automated alerts for unusual spending spikes? |
| Optimization | Is a caching strategy (e.g., Redis) in place for repeated queries? |
| | Are we using request batching for automated or high-volume tasks? |
| | Is there a context summarization strategy for long-running agentic conversations? |
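For the "token-efficient prompts" item, the format guidance from Step 3 is easy to check on your own data. The sketch below compares three serializations of the same records; the sample rows are invented, and character count is used only as a rough proxy for token count, since actual tokenization depends on the model.

```python
import csv
import io
import json
from typing import Dict, List

# Invented sample data for illustration.
SAMPLE_ROWS: List[Dict[str, object]] = [
    {"id": 1, "name": "alice", "role": "admin"},
    {"id": 2, "name": "bob", "role": "viewer"},
    {"id": 3, "name": "carol", "role": "editor"},
]


def as_pretty_json(rows: List[Dict[str, object]]) -> str:
    """Human-friendly JSON with indentation and newlines."""
    return json.dumps(rows, indent=2)


def as_minified_json(rows: List[Dict[str, object]]) -> str:
    """JSON with all optional whitespace removed."""
    return json.dumps(rows, separators=(",", ":"))


def as_csv(rows: List[Dict[str, object]]) -> str:
    """CSV with a single header row, so keys are not repeated per record."""
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer, fieldnames=list(rows[0].keys()), lineterminator="\n"
    )
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


if __name__ == "__main__":
    for label, text in [
        ("pretty JSON", as_pretty_json(SAMPLE_ROWS)),
        ("minified JSON", as_minified_json(SAMPLE_ROWS)),
        ("CSV", as_csv(SAMPLE_ROWS)),
    ]:
        print(f"{label}: {len(text)} characters")
```

The gap between formats widens as the number of records grows, because JSON repeats every key for every record while CSV states them once.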

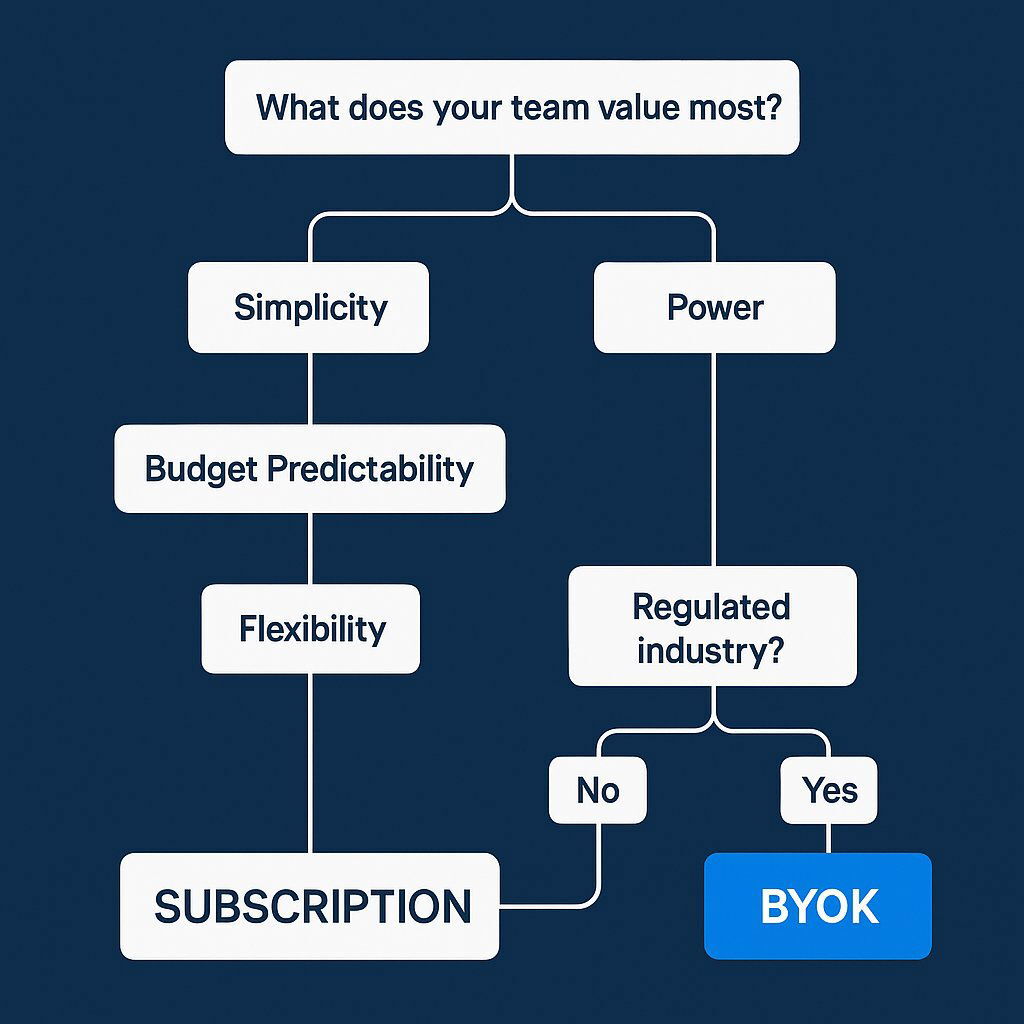

Conclusion: Making the Right Strategic Choice for Your Team

The explosion of AI coding assistants has moved the industry past the question of whether we should use them to the more complex questions of which ones we should use and how we should pay for them. The choice between a predictable subscription and a powerful pay-as-you-go model is not merely a line item on a budget; it is a strategic decision that reflects your organization's priorities regarding simplicity, power, security, and control.

There is no single right answer. The correct path depends entirely on your team's specific context, culture, and risk tolerance. For those building and scaling with startup agility, you may want to reference The Executive’s AI Playbook: Building, Funding & Scaling Startups in 2025 as you weigh your decisions.

Choose the Subscription Model if:

- Your primary goal is simplicity and budget predictability.

- Your finance department requires fixed, forecastable costs.

- Your team values an easy, out-of-the-box experience over ultimate power and customizability.

- Your primary use case is augmenting existing developer workflows with code completion and chat, rather than attempting full task automation.

Choose the Pay-As-You-Go (BYOK) Model if:

- You operate in a regulated industry where security, data privacy, and auditability are paramount.

- Your highest priority is providing your developers with unrestricted access to the most powerful and advanced AI models available.

- You want to avoid vendor lock-in and maintain the flexibility to adapt to a rapidly changing AI landscape.

- You have the organizational maturity and technical discipline to actively govern and manage variable costs.

At Baytech Consulting, we specialize in architecting, building, and managing custom, enterprise-grade applications where performance, security, and efficiency are non-negotiable. For our most demanding client projects and our own internal R&D, we have found that a multi-agent stack, powered by a BYOK model, provides a decisive advantage. We have invested in developing the expertise and governance frameworks required to harness the immense power of this approach while maintaining rigorous cost control.

Navigating this new landscape is a complex undertaking. The choices you make today will have a lasting impact on your team's productivity, your product's quality, and your company's bottom line. If you are looking for a strategic partner to help you design and implement a high-performance, cost-effective AI development strategy, we can help. Together, we can build the right tool stack, the right processes, and the right culture to give your team an enduring competitive edge in the age of AI.

About Baytech

At Baytech Consulting, we specialize in guiding businesses through this process, helping you build scalable, efficient, and high-performing software that evolves with your needs. Our MVP-first approach helps our clients minimize upfront costs and maximize ROI. Ready to take the next step in your software development journey? Contact us today to learn how we can help you achieve your goals with a phased development approach.

About the Author

Bryan Reynolds is an accomplished technology executive with more than 25 years of experience leading innovation in the software industry. As the CEO and founder of Baytech Consulting, he has built a reputation for delivering custom software solutions that help businesses streamline operations, enhance customer experiences, and drive growth.

Bryan’s expertise spans custom software development, cloud infrastructure, artificial intelligence, and strategic business consulting, making him a trusted advisor and thought leader across a wide range of industries.