B2B Software Testing: How to Avoid Over- or Under-testing and Maximize ROI

August 08, 2025 / Bryan Reynolds

Testing Your B2B Software: How Much is Enough, What Are the Real Costs, and How Does Your Project Size Change the Game?

Every business leader building or buying custom software grapples with a fundamental tension. On one side, there's the fear of under-testing—of launching a product riddled with bugs that crash systems, corrupt data, and shatter customer trust. On the other, there's the fear of over-testing—of pouring time and money into a quality assurance (QA) black hole that delays time-to-market and inflates budgets with no clear return. It's the "Goldilocks problem" of software quality: how do you find the approach that's just right?

The solution isn't a magic number or a one-size-fits-all checklist. It's a strategic framework for making risk-based decisions that align your testing efforts with your most critical business goals. This article provides that framework. We'll demystify the real costs of software bugs, define what "enough" testing truly means in a B2B context, and show you how to tailor your strategy to the scale and complexity of your project.

At Baytech Consulting, we specialize in crafting enterprise-grade custom software. We've guided countless B2B leaders through this exact challenge, and our "Rapid Agile Deployment" philosophy is built on a core principle: quality isn't something you test in at the end of a project; it's something you engineer in from the very first line of code.

The True Cost of a Software Bug: Why "Cutting Corners" on Testing is the Most Expensive Decision You Can Make

The most dangerous myth in software development is that testing is a "cost center." In reality, strategic testing is one of the most powerful forms of risk mitigation available to your business. The cost of not testing properly isn't just a line item; it's an existential threat that can manifest in budget overruns, lost revenue, and catastrophic brand damage.

The Exponential Cost of a Single Bug

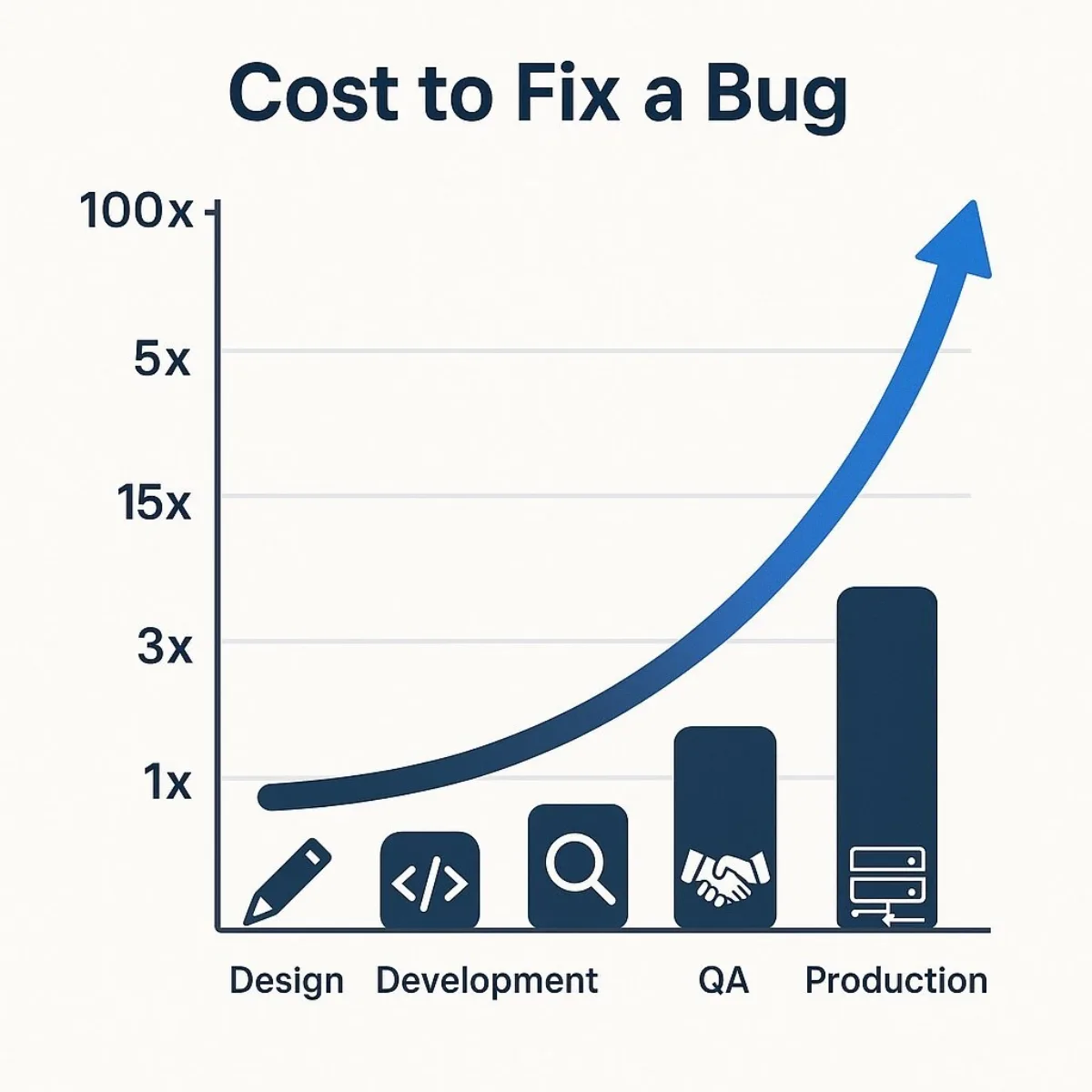

The single most important concept for any executive to understand is that the cost to fix a software defect multiplies exponentially the later it is discovered in the software development lifecycle (SDLC). The numbers are staggering:

- Fixing a bug during the implementation phase is 6 times more expensive than catching it during the initial design phase.

- If that same bug makes it to a formal testing phase, the cost jumps to 15 times higher.

- Once the software is live in production and in the hands of your customers, fixing that bug can be 30 to 100 times more expensive than if it had been caught early.

This exponential curve isn't an exaggeration; it's a reflection of compounding complexity and disruption. A bug found by a developer while they are writing the code is a simple, quick fix. The code is fresh in their mind, and the change impacts no one else. This is a low-cost event.

When that bug moves to a dedicated QA or Staging environment, the cost multiplies. Now, the process involves a QA tester finding and documenting the bug, a developer having to stop their current work and switch context back to older code, implementing a fix, deploying a new build to the testing environment, and the QA tester re-running the test to verify the fix. More people, more process, more time—more cost.

A bug that reaches production is a full-blown business crisis. The cost explodes because it now involves your customer support team fielding angry calls, potential revenue loss from failed transactions or system downtime, your development team dropping everything for emergency "hotfixes," and a full regression test of the entire system to ensure the emergency fix didn't break something else. This is before you even consider the cost of communicating with frustrated customers and the long-term damage to your brand's reputation. Once those indirect costs are counted, the "100x" figure can understate the total business impact.

Table: The Escalating Cost of a Single Bug

For a strategic CFO or non-technical executive, this table provides an at-a-glance summary of the financial imperative for early testing.

| SDLC Stage | Relative Cost to Fix | Activities Involved | Business Impact |
|---|---|---|---|
| Design/Requirements | 1x | Clarify a specification, update a document. | Minimal |
| Development (Unit Test) | ~5x | Developer finds and fixes in their local environment. | Low |
| Integration/QA Testing | ~15x | QA files bug, Dev context-switches, fixes, redeploys, QA re-tests. | Medium (Project Delay) |
| Acceptance (UAT) | ~30x | Stakeholder finds issue, requires cross-team coordination, potential rework. | High (Delayed Launch) |
| Production (Post-Release) | 100x+ | Customers impacted, support tickets, emergency hotfix, brand damage, potential churn. | Critical (Revenue Loss) |
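To make the multipliers in the table concrete, here is a minimal Python sketch that converts them into dollar estimates. The stage keys, the `cost_to_fix` helper, and the $200 baseline are illustrative assumptions, not measured figures.

```python
# Relative cost multipliers transcribed from the table above.
RELATIVE_COST = {
    "design": 1,
    "development": 5,
    "qa": 15,
    "uat": 30,
    "production": 100,
}


def cost_to_fix(stage: str, design_stage_cost: float) -> float:
    """Estimate the cost of fixing one bug discovered at the given stage."""
    return RELATIVE_COST[stage] * design_stage_cost


# Hypothetical baseline: the bug would cost $200 to fix at design time.
baseline = 200.0
for stage in RELATIVE_COST:
    print(f"{stage:<12} ${cost_to_fix(stage, baseline):>10,.2f}")
```

With that assumed baseline, the same defect is estimated at $20,000 once it reaches production, which is the gap early testing is meant to close.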

Real-World Catastrophes: When "Minor" Bugs Create Major Headlines

These abstract costs become terrifyingly real when you look at high-profile failures:

- Healthcare.gov: A project initially budgeted at $93.7 million spiraled to an estimated $1.7 billion. The cause was a combination of poor planning, rushed development, and, critically, inadequate testing. The website crashed within hours of its launch, unable to handle user traffic due to faulty code and login failures, requiring massive, expensive rework.

- Samsung Galaxy Note 7: While a hardware issue was at the core, experts speculate that a software flaw in the battery management system—which should have been caught in testing—played a key role in the device's battery explosions. The result was a global recall and an estimated $17 billion in losses, not to mention immeasurable damage to a flagship brand.

- Knight Capital Group: In a perfect B2B finance example, the trading firm lost $440 million in just 45 minutes. The cause? A manual deployment error activated dormant, untested code on their trading platform. A simple regression test to verify that old, unused features remained inactive could have prevented this catastrophic loss.
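As an illustration of the kind of regression check described in the Knight Capital example, the sketch below asserts that retired features stay switched off in a production configuration. It is not a reconstruction of any real trading system; the flag names, `PRODUCTION_FLAGS`, and `enabled_retired_flags` are hypothetical.

```python
import unittest

# Hypothetical production configuration; flag names are illustrative only.
PRODUCTION_FLAGS = {
    "smart_order_routing": True,
    "legacy_test_strategy": False,  # retired code path that must stay off
}

# Flags for retired features that should never be enabled in production.
RETIRED_FLAGS = {"legacy_test_strategy"}


def enabled_retired_flags(flags: dict[str, bool]) -> set[str]:
    """Return the retired flags that are switched on in the given config."""
    return {name for name in RETIRED_FLAGS if flags.get(name, False)}


class RetiredFeatureRegressionTest(unittest.TestCase):
    def test_retired_features_stay_disabled(self):
        # Fails the build if any dormant feature has been re-activated.
        self.assertEqual(enabled_retired_flags(PRODUCTION_FLAGS), set())
```

Saved to a file, a check like this could run with `python -m unittest` on every deployment candidate, so an accidental re-activation is caught before release rather than in production.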

The Hidden Costs That Don't Show Up on a P&L Immediately

Beyond the immediate cost of a fix, poorly tested software introduces insidious, long-term costs that silently drain your company's resources and potential.

- Technical Debt: Code that is not properly tested is often brittle, poorly structured, and difficult to change. Every new feature takes longer to build because developers have to work around existing flaws, slowing innovation to a crawl. For a closer look at how legacy technical debt accumulates, see our in-depth analysis.

- Customer Churn & Lost Trust: B2B relationships are built on reliability. Your software is part of your customer's critical business operations. If it's buggy, you are directly threatening their ability to do business, making them quick to look for a more stable alternative. The cost to acquire a new customer can be five to seven times higher than retaining an existing one.

- Operational Drag & Support Costs: Your customer support team becomes a permanent fire-fighting crew, and your development team is constantly pulled away from building value-generating features to focus on maintenance and crisis management.

- Reputational Damage: In the B2B world, reputation is everything. Negative reviews and word-of-mouth about an unreliable platform can poison the well for new prospects and strategic partners.

A Practical Guide to the Testing Toolkit: What Your CTO Needs and Your CFO Should Know

To a business leader, the world of software testing can seem like an alphabet soup of jargon. But understanding the purpose and business value of the core testing types and environments is essential for making informed strategic decisions. Think of it like building a secure facility: you need locks on individual doors, guards at the main gate, and a full-scale security drill for the entire compound. Each layer provides a different type of protection.

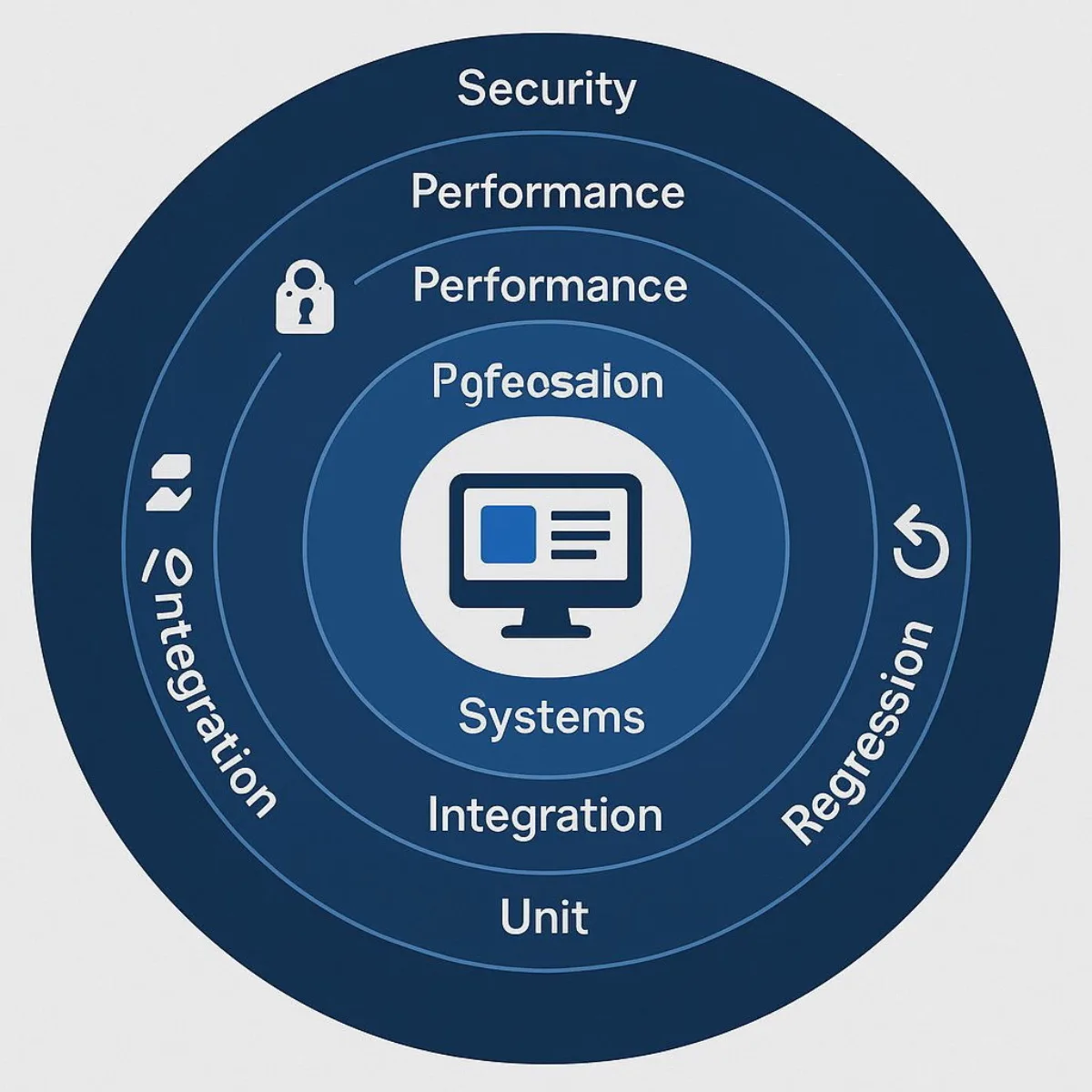

The Layers of Defense: Major Testing Types in Software Development

- Unit Testing: Performed by developers, this is the first line of defense. It tests the smallest individual components of the software (the "units," like a single function or class) in isolation to ensure they work correctly.

  - Business Value: This is the cheapest possible stage to catch a bug, saving immense costs down the line. It also forces a higher standard of code quality from the very beginning, reducing future technical debt.

- Integration Testing: This tests how different modules or services work together. For example, does the "user login" feature correctly communicate with the "customer database"?

  - Business Value: It prevents failures in critical, multi-part workflows that users rely on. In the age of microservices and complex APIs, this is absolutely essential to ensure the system functions as a coherent whole. See our article on eliminating data inconsistency for strategic integration tips.

- System Testing: This phase tests the complete, integrated software system as a whole against the original business requirements.

  - Business Value: This is the ultimate validation: "Does the platform we built actually do what the business and our customers need it to do?" It verifies both functional and non-functional requirements.

- Regression Testing: This crucial process ensures that a new feature or bug fix hasn't accidentally broken any existing functionality. After every change, a suite of regression tests is run to check the status quo.

  - Business Value: It protects your existing customers and revenue streams from disruption. It gives you the confidence to release updates frequently without fear of breaking what already works. For any established B2B product, this is non-negotiable.

- Acceptance Testing (UAT): This is often the final stage before release, where business stakeholders or a select group of actual customers test the software to confirm it meets their needs and is "fit for purpose."

  - Business Value: It's the final business sign-off. It ensures that the software not only works technically but also solves the real-world business problem it was designed to address. It is the last line of defense against releasing a product that misses the mark.

- Performance Testing: This measures how the software performs under stress and load, assessing its speed, stability, and scalability.

  - Business Value: It prevents your application from crashing during peak usage (e.g., at the end of a financial quarter or during a major marketing campaign). A slow, unresponsive platform frustrates users and leads to abandonment. If you're curious about website speed on mobile devices, check our comprehensive guide.

- Security Testing: This is an active attempt to find and exploit vulnerabilities in the software, through methods like penetration testing and vulnerability scanning.

  - Business Value: It protects your business and your customers from data breaches, which carry enormous financial penalties, legal liability, and a complete loss of trust. In B2B, demonstrating robust security is often a prerequisite for closing enterprise deals.
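For readers who have never seen one, a unit test of the kind described at the top of this list can be as small as the sketch below. The `invoice_total` function and its rules are hypothetical, chosen only to show a single "unit" being checked in isolation with Python's standard `unittest` module.

```python
import unittest


def invoice_total(subtotal: float, tax_rate: float) -> float:
    """Unit under test: compute an invoice total, rounded to cents."""
    if subtotal < 0 or tax_rate < 0:
        raise ValueError("subtotal and tax_rate must be non-negative")
    return round(subtotal * (1 + tax_rate), 2)


class InvoiceTotalUnitTest(unittest.TestCase):
    def test_adds_tax_to_subtotal(self):
        self.assertEqual(invoice_total(100.0, 0.08), 108.0)

    def test_rejects_negative_subtotal(self):
        # Invalid input should fail loudly instead of producing a bad invoice.
        with self.assertRaises(ValueError):
            invoice_total(-1.0, 0.08)
```

Because the function touches no database or network, tests like these run in milliseconds, which is why they are the cheapest layer to automate.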

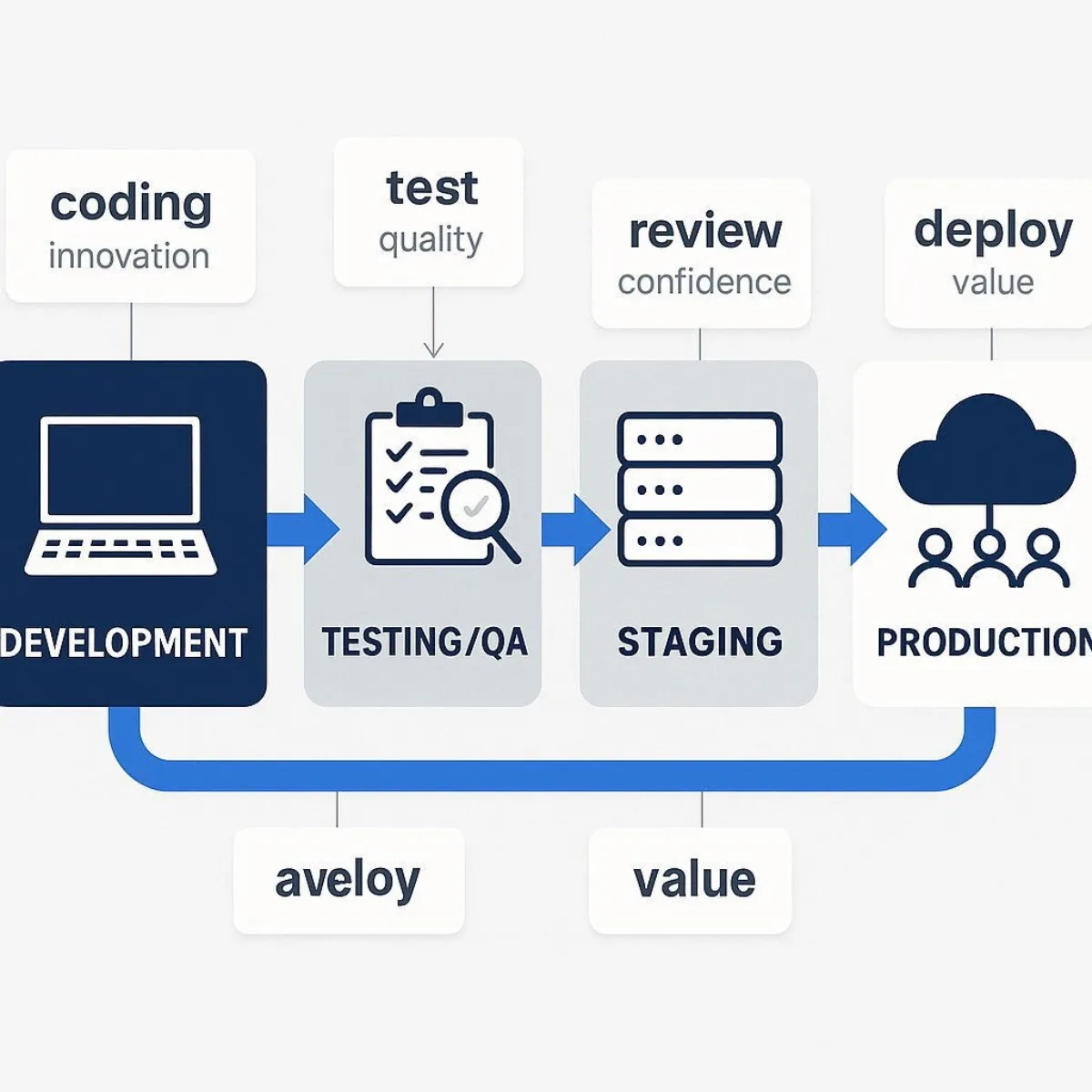

The Proving Grounds: Why Different Test Environments Matter

You can't conduct professional-grade testing on a developer's laptop. A mature software development process relies on a pipeline of distinct environments, each with a specific purpose, to ensure stability and data integrity.

- Development (Dev): This is the developer's personal workshop. It's where code is written and initial unit tests are run. It is, by nature, unstable and constantly changing.

- Testing / QA: This is a stable, controlled environment owned by the QA team. It uses clean, predictable test data, allowing testers to reliably reproduce and verify bugs.

- Staging (Pre-Production): This is arguably the most critical environment in the entire pipeline. It should be an exact replica of your live production environment, from the server configuration to the networking rules.

  - Business Value: Staging is the full dress rehearsal before opening night. It's where you conduct final UAT, performance tests, and security audits in a safe space that mirrors the real world as closely as possible. A production-like rehearsal of the release in staging is the kind of safeguard that could have surfaced the deployment error that cost Knight Capital $440 million.

- Production (Prod): This is the live environment where your customers conduct their business. It must be the most stable, secure, and protected environment of all.
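Keeping Staging a faithful replica of Production is easier when the comparison is automated. The sketch below reports every setting that differs between two environment configurations. The `config_drift` helper and the settings shown are hypothetical; a real check would read them from your own configuration source.

```python
MISSING = "<missing>"  # marker for a setting absent from one environment


def config_drift(staging: dict, production: dict) -> dict:
    """Return {setting: (staging_value, production_value)} for each mismatch."""
    drift = {}
    for key in sorted(staging.keys() | production.keys()):
        staging_value = staging.get(key, MISSING)
        production_value = production.get(key, MISSING)
        if staging_value != production_value:
            drift[key] = (staging_value, production_value)
    return drift


# Hypothetical environment settings, for illustration only.
staging = {"db_engine": "postgres-15", "tls": True, "worker_count": 4}
production = {
    "db_engine": "postgres-15",
    "tls": True,
    "worker_count": 16,
    "rate_limit": 1000,
}

print(config_drift(staging, production))
# {'rate_limit': ('<missing>', 1000), 'worker_count': (4, 16)}
```

An empty result means the two environments agree on every setting that was compared. Anything else is a difference to close or to accept deliberately before trusting a staging test result.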

Table: The B2B Software Testing Landscape

This table connects the "what" (testing type) with the "why" (business purpose) and the "where" (environment) in a single, easy-to-read reference for business leaders.

| Testing Type | Business Purpose | Who Performs It | Typical Environment |
|---|---|---|---|
| Unit Testing | Catch bugs cheaply, ensure code quality | Developers | Development |
| Integration Testing | Verify modules work together (e.g., API calls) | Developers / QA | Integration / QA |
| System Testing | Validate the entire system against requirements | QA / Testers | QA / Staging |
| Regression Testing | Protect existing features from new changes | QA (Often Automated) | QA / Staging |
| Performance Testing | Ensure speed and stability under load | QA / Performance Engineers | Staging |
| Security Testing | Find and fix vulnerabilities before an attack | Security Specialists | Staging |
| Acceptance Testing (UAT) | Final business sign-off before release | Business Stakeholders / End Users | Staging |

Finding the Sweet Spot: How to Avoid "Too Much" Testing with a Risk-Based Strategy

Now we arrive at the central question: "What is too much testing?" The fear of wasting resources is valid. Testing can become a black hole for time and money if approached incorrectly. "Too much" testing occurs when teams attempt to test every feature with the same level of intensity, leading to project delays and bloated budgets without a proportional increase in quality. The obsessive pursuit of metrics like 100% code coverage is a classic example of this anti-pattern; it often leads to writing tests for trivial code while high-risk areas may still be undertested.

The problem is not the act of testing, but the lack of focus. The solution is to trade exhaustive, unfocused testing for intelligent, prioritized testing.

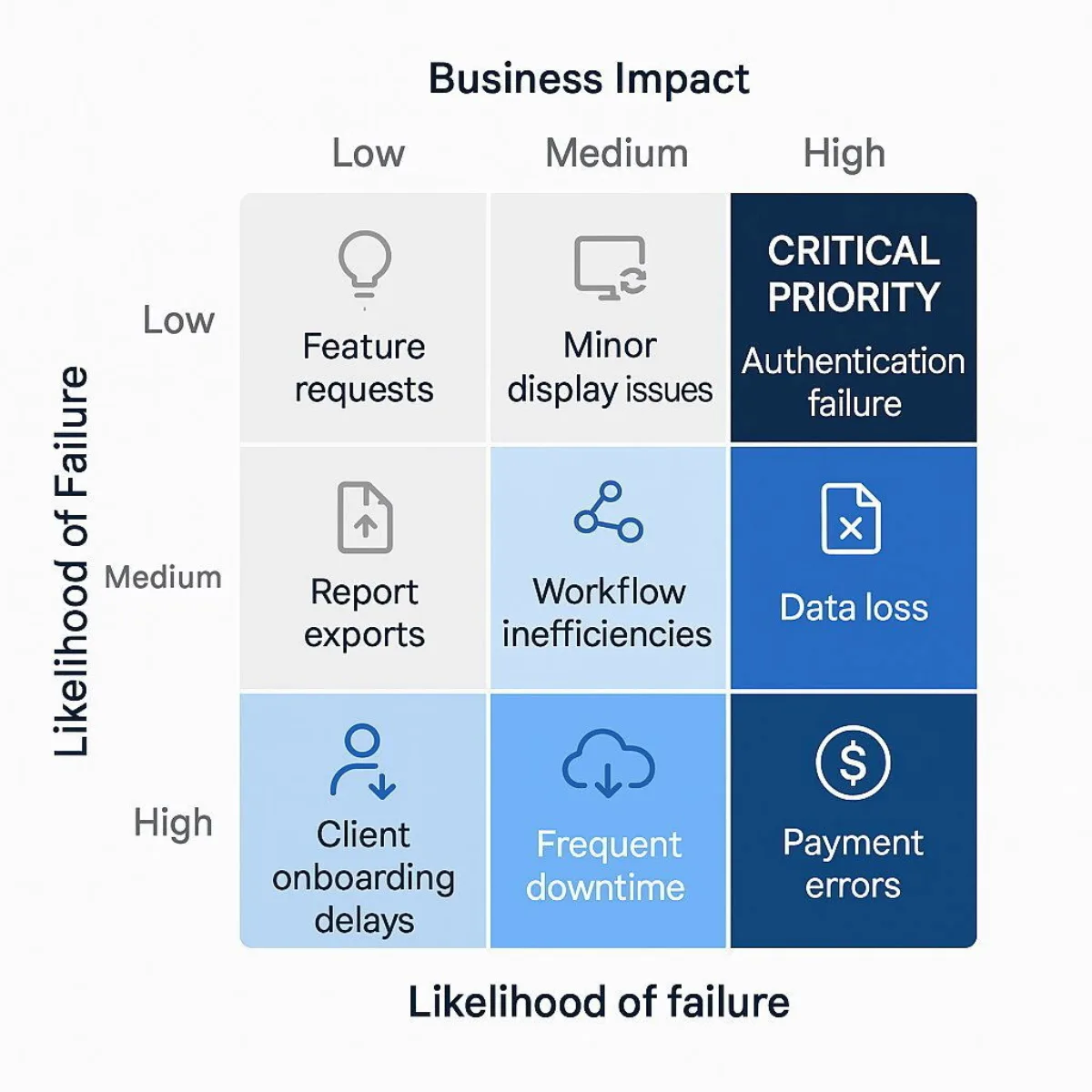

The Solution: Risk-Based Testing (RBT)

Risk-Based Testing (RBT) is the industry-standard methodology for optimizing testing efforts and getting the most value from your QA budget. The concept is simple but incredibly powerful. It prioritizes testing activities based on the level of risk each feature or component poses to the business. Risk is calculated with a straightforward formula:

Risk = Likelihood of Failure × Business Impact of Failure

You then focus your most rigorous and time-consuming testing efforts on the areas of highest risk.

The business value is immediate and profound. RBT directly aligns your QA investment with your business objectives. It ensures your limited testing resources are spent protecting your most critical revenue-generating workflows, your most sensitive data, and your most-used features—not on exhaustively testing the color of a button on the "About Us" page.
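A minimal sketch of this prioritization, assuming risk is scored as likelihood of failure multiplied by business impact, the two inputs this article names. The 1-to-5 scales, the `Feature` class, and the example scores are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Feature:
    name: str
    likelihood: int  # assumed scale: 1 (unlikely to fail) to 5 (very likely)
    impact: int      # assumed scale: 1 (negligible) to 5 (severe)

    @property
    def risk(self) -> int:
        """Risk score = likelihood of failure x business impact."""
        return self.likelihood * self.impact


def rank_by_risk(features):
    """Order features from highest to lowest risk score."""
    return sorted(features, key=lambda f: f.risk, reverse=True)


# Hypothetical scores gathered from engineering and business stakeholders.
FEATURES = [
    Feature("About Us page styling", likelihood=2, impact=1),
    Feature("Payment processing", likelihood=4, impact=5),
    Feature("User authentication", likelihood=3, impact=5),
]

for feature in rank_by_risk(FEATURES):
    print(f"{feature.risk:>2}  {feature.name}")
```

The ranking puts payment processing (20) and authentication (15) far ahead of page styling (2), which is the order in which testing effort would be allocated.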

RBT as a Collaborative Business Process, Not Just a QA Task

For RBT to be effective, it cannot be performed in a QA silo. It requires a structured, collaborative process that brings together expertise from across the business. The two inputs to the risk formula—likelihood and impact—come from different parts of your organization.

- Likelihood of Failure is a technical assessment. Your developers and architects are the best people to identify which parts of the codebase are overly complex, which are built on older technology, or which have a history of being buggy. They can assess the probability that a given feature will fail. To learn more about how modern AI can influence software unpredictability, visit our article on managing non-deterministic AI in production.

- Business Impact of Failure is a business assessment. Your Head of Sales knows which feature is a deal-breaker for enterprise clients. Your Head of Customer Support knows which bugs generate the most angry phone calls. Your CFO knows where financial transactions occur and what the cost of an error would be. They are the ones who can quantify the damage of a potential failure.

A QA analyst cannot determine these things alone. Their role is to facilitate the conversation between all these stakeholders, capture their input, and build a risk profile of the application. This transforms testing from a purely technical, back-office function into a strategic, cross-functional business process. The RBT planning meeting becomes one of the most important sessions in the entire development lifecycle.

A Step-by-Step Guide to Implementing RBT in Your B2B Project

- Step 1: Risk Identification (The Brainstorm). Gather key stakeholders: developers, product managers, sales leaders, and support leads. Ask direct, uncomfortable questions: "What feature, if it failed, would cause us to lose our biggest customer?" "What bug would force us to issue a press release and notify regulators?" "Where does the money change hands in our application?" Document every potential risk.

- Step 2: Risk Analysis & Prioritization (The Matrix). For each risk you've identified, plot it on a simple risk matrix. Assess both its Likelihood of Failure and its Business Impact on a scale (e.g., Low, Medium, High). This will visually categorize your risks into clear priority tiers.

- Step 3: Test Planning & Execution (The Action Plan). Design your testing strategy based on the priorities from the matrix. High-risk items receive the most intense scrutiny: exhaustive manual testing, a full suite of automated tests, performance and security testing, and rigorous UAT. Medium-risk items get standard functional and regression testing. Low-risk items might only get a quick, automated "smoke test" to ensure they haven't completely broken.
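Step 3 can be captured as a simple lookup from risk tier to test activities, which makes the plan easy to review with stakeholders. In this sketch the tier names and activities follow the description in Step 3, while `TEST_PLAN_BY_TIER`, `build_test_plan`, and the example features are hypothetical.

```python
# Test activities per risk tier, following Step 3 above.
TEST_PLAN_BY_TIER = {
    "high": [
        "exhaustive manual testing",
        "full automated test suite",
        "performance testing",
        "security testing",
        "rigorous UAT",
    ],
    "medium": ["functional testing", "regression testing"],
    "low": ["automated smoke test"],
}


def build_test_plan(risk_tiers: dict[str, str]) -> dict[str, list[str]]:
    """Map each feature to the test activities its risk tier calls for."""
    return {
        feature: TEST_PLAN_BY_TIER[tier]
        for feature, tier in risk_tiers.items()
    }


# Hypothetical output of the prioritization exercise in Step 2.
plan = build_test_plan({
    "payment processing": "high",
    "data export": "medium",
    "footer text": "low",
})

for feature, activities in plan.items():
    print(f"{feature}: {', '.join(activities)}")
```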

Chart: A Sample B2B Risk Assessment Matrix

This visual tool can be used in your own organization to drive the RBT conversation and create an actionable plan.

| | Likelihood of Failure: LOW | Likelihood of Failure: MEDIUM | Likelihood of Failure: HIGH |
|---|---|---|---|
| Business Impact: HIGH | MEDIUM PRIORITY e.g., Annual report generation fails. Strategy: Thorough Functional & Regression Testing. | HIGH PRIORITY e.g., User authentication fails. Strategy: Exhaustive Testing (Automated, Security, UAT). | CRITICAL PRIORITY e.g., Payment processing fails. Strategy: Exhaustive Plus (All tests, plus load, stress, and failover). |
| Business Impact: MEDIUM | LOW PRIORITY e.g., Data export has minor formatting errors. Strategy: Standard Functional Testing. | MEDIUM PRIORITY e.g., A secondary feature workflow is slow. Strategy: Functional, Regression, and targeted Performance Testing. | HIGH PRIORITY e.g., Core search functionality returns inaccurate results. Strategy: Exhaustive Functional & Automated Regression Testing. |
| Business Impact: LOW | LOWEST PRIORITY e.g., Typo in the footer text. Strategy: Exploratory Testing Only. Fix if found. | LOW PRIORITY e.g., UI alignment issue on a settings page. Strategy: Basic Automated Check. Log as low priority. | MEDIUM PRIORITY e.g., A frequently used but non-critical UI element is broken. Strategy: Targeted Functional & Automated Testing. |
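The matrix also works as a lookup table that a team can keep next to its backlog. The sketch below transcribes the nine priority cells from the matrix. The `priority` function and its input validation are illustrative assumptions.

```python
LEVELS = ("LOW", "MEDIUM", "HIGH")

# PRIORITY[business_impact][likelihood_of_failure], transcribed from the matrix.
PRIORITY = {
    "HIGH":   {"LOW": "MEDIUM", "MEDIUM": "HIGH",   "HIGH": "CRITICAL"},
    "MEDIUM": {"LOW": "LOW",    "MEDIUM": "MEDIUM", "HIGH": "HIGH"},
    "LOW":    {"LOW": "LOWEST", "MEDIUM": "LOW",    "HIGH": "MEDIUM"},
}


def priority(business_impact: str, likelihood: str) -> str:
    """Look up the testing priority for one identified risk."""
    impact_key = business_impact.upper()
    likelihood_key = likelihood.upper()
    if impact_key not in LEVELS or likelihood_key not in LEVELS:
        raise ValueError(f"levels must be one of {LEVELS}")
    return PRIORITY[impact_key][likelihood_key]


print(priority("high", "high"))  # CRITICAL (payment processing in the matrix)
print(priority("low", "low"))    # LOWEST (footer typo in the matrix)
```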

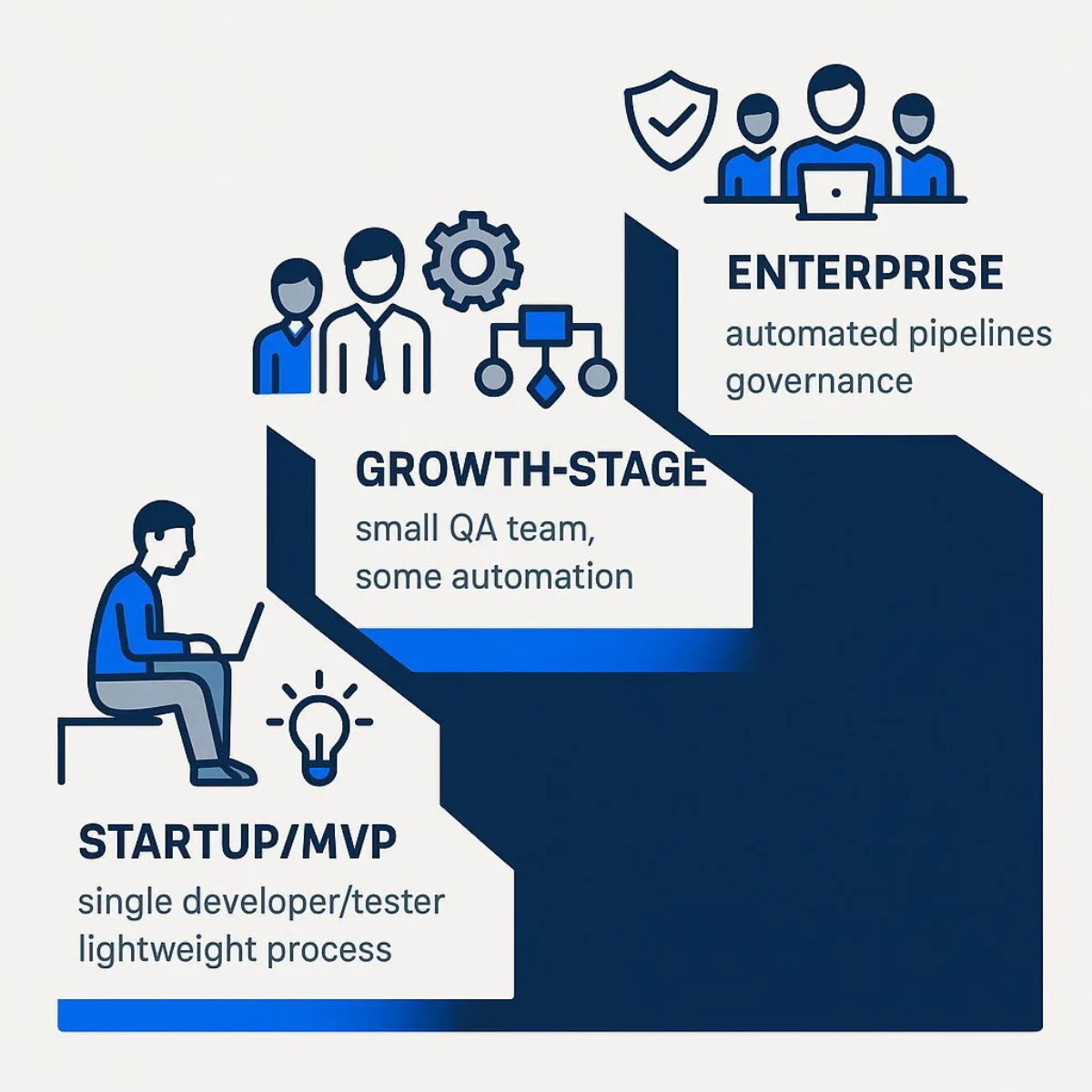

Scaling Your Strategy: Tailoring Your Testing Approach to Project Size

A testing strategy that is perfect for a three-person startup launching an MVP would be dangerously inadequate for an enterprise-level financial platform. Conversely, forcing a startup to adopt the bureaucratic overhead of an enterprise process would kill its agility. Your testing strategy must be tailored to your project's scale, complexity, and risk profile. This evolution is not just about adding more tests; it represents a fundamental shift in process maturity that should mirror your company's growth.

For Small Projects & Startups (e.g., MVP, initial product launch)

- Goal: Speed to market and validating a core business idea. The primary risk is building something nobody wants.

- Strategy: Lean, agile, and informal. The focus is on high-impact exploratory testing and rapid feedback loops over extensive documentation.

- Tactics:

  - Developers are responsible for writing their own unit and integration tests.

  - Testing is often performed by a product manager or a single "QA-minded" team member.

  - The focus is on manually testing the critical "happy path" user workflows.

  - A formal test strategy document is unnecessary overhead; a master test plan or even just a checklist may suffice.

  - Automation is minimal, likely focused only on the single most critical function, like user signup or a core calculation.

For Medium-Sized & Growth-Stage Projects (e.g., scaling a product, adding major features)

- Goal: Maintain high quality while increasing development velocity and feature complexity. The risk shifts to instability and unpredictability.

- Strategy: Introduce structure, dedicated resources, and foundational automation. This is the inflection point where informal processes break down.

- Tactics:

  - Establish a dedicated QA team with clear roles and responsibilities.

  - Invest in test automation for the regression suite. This is the single most important step to enable faster, safer, and more predictable release cycles.

  - Implement a test case management tool (even a simple one) to track test cases, execution results, and bug reports.

  - Formalize the bug lifecycle process so that issues are tracked, prioritized, and resolved systematically.

  - Begin targeted performance and security testing for new, high-risk features.

For Large Enterprise Projects (e.g., mission-critical platforms, regulated industries)

- Goal: Ensure rock-solid stability, security, compliance, and traceability. The cost of failure is immense.

- Strategy: The focus shifts to governance, formal process, and specialization.

- Tactics:

  - A formal Test Strategy document is authored and approved by all key business and technical stakeholders. This document is the constitution for all QA activities.

  - A robust Test Management Platform is non-negotiable. For projects of this scale, managing thousands of test cases, coordinating globally distributed teams, and providing end-to-end traceability from requirement to test result is impossible without a centralized system. This is where the capabilities within platforms like Azure DevOps become essential.

  - Specialized, dedicated teams are formed for Performance Testing, Security Testing, and Test Automation.

  - Strict environment management and release control processes are enforced to protect the integrity of the Staging and Production environments.

  - Comprehensive test coverage is not just a goal but a requirement that must be demonstrated for internal audits and external regulatory compliance.

Calculating the ROI: Proving the Value of Your Testing Investment

To secure the necessary budget for a mature QA process, you must be able to speak the language of the CFO. This means framing testing not as a cost, but as a high-return investment and backing it up with data. If you're looking for a deeper dive on quantifying the ROI of custom software investment, check our full CFO's guide.

Framing the Conversation: Investment vs. Cost

The "Cost of Quality" is a useful model for this conversation. It divides quality-related expenses into two buckets: the Cost of Good Quality (proactive investments) and the Cost of Poor Quality (reactive costs).

- Cost of Good Quality (Investment):

  - Prevention Costs: Training developers, defining clear requirements.

  - Appraisal Costs: The budget for your QA team, testing tools, and environments.

- Cost of Poor Quality (Expense):

  - Internal Failure Costs: The cost to fix bugs found before release.

  - External Failure Costs: The 100x cost to fix bugs found after release, including support costs and lost revenue.

The argument is simple: a strategic investment in the Cost of Good Quality dramatically reduces the much larger and more volatile Cost of Poor Quality.
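The four buckets can be totalled in a few lines, which makes before-and-after comparisons straightforward. This is a sketch only: the `cost_of_quality` helper and every dollar figure are hypothetical placeholders for your own numbers.

```python
def cost_of_quality(prevention: float, appraisal: float,
                    internal_failure: float, external_failure: float) -> dict:
    """Total the Cost of Good Quality and the Cost of Poor Quality."""
    good = prevention + appraisal
    poor = internal_failure + external_failure
    return {"good": good, "poor": poor, "total": good + poor}


# Hypothetical annual figures before and after a QA investment.
before = cost_of_quality(prevention=10_000, appraisal=40_000,
                         internal_failure=60_000, external_failure=400_000)
after = cost_of_quality(prevention=30_000, appraisal=120_000,
                        internal_failure=90_000, external_failure=80_000)

print(before["total"], after["total"])  # 510000 320000
```

In this made-up scenario the Cost of Good Quality triples, yet the total cost of quality falls because external failures shrink faster than the investment grows.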

Calculating the ROI of Test Automation

Test automation, in particular, offers a clear and measurable return on investment. The formula is straightforward:

ROI = (Savings − Investment Costs) / Investment Costs

- Investment Costs:

  - Tooling: Licenses for automation frameworks or cloud testing platforms.

  - Infrastructure: The cost of servers and environments to run the tests, which can be optimized with modern solutions like our on-premise Harvester HCI or scalable OVHCloud servers.

  - Personnel: The one-time cost for engineers to write the automated test scripts and the ongoing cost to maintain them.

- Savings (The Return):

  - Reduced Manual Labor: This is the easiest number to quantify. Calculate the hours your manual testers would have spent running the same repetitive regression suite every single release cycle. Automating this frees them up for higher-value exploratory testing.

  - Faster Time-to-Market: Automation is a key enabler of Continuous Integration/Continuous Deployment (CI/CD). Faster, more frequent releases can be a massive competitive advantage, allowing you to respond to market needs more quickly.

  - Defect Leakage Reduction: Track the number of critical bugs caught by your automation suite that would have otherwise "leaked" into production. Apply the 100x cost multiplier from our first section to quantify the millions of dollars in potential losses you've avoided.
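A worked example, assuming the conventional ROI definition of (savings minus investment) divided by investment. Every figure below is hypothetical, and the sketch only counts the two savings that are easiest to quantify: manual labor and avoided production defects.

```python
def automation_roi(investment: float, savings: float) -> float:
    """Conventional ROI: net gain divided by the amount invested."""
    if investment <= 0:
        raise ValueError("investment must be positive")
    return (savings - investment) / investment


# Hypothetical first-year investment costs.
tooling = 20_000
infrastructure = 10_000
personnel = 90_000
investment = tooling + infrastructure + personnel  # 120,000

# Hypothetical savings.
manual_hours_per_release = 40
releases_per_year = 24
tester_hourly_cost = 60
labor_savings = manual_hours_per_release * releases_per_year * tester_hourly_cost

leaked_bugs_prevented = 3
design_stage_fix_cost = 500
production_multiplier = 100  # the 100x multiplier from the first section
defect_savings = leaked_bugs_prevented * design_stage_fix_cost * production_multiplier

savings = labor_savings + defect_savings  # 57,600 + 150,000 = 207,600

print(f"ROI: {automation_roi(investment, savings):.0%}")  # ROI: 73%
```

Faster time-to-market is deliberately left out because it is harder to price, so the result is best read as a lower bound under these assumptions.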

At Baytech Consulting, our "Tailored Tech Advantage" means we don't just write code; we design systems for testability from day one. By using modern tech stacks and engineering best practices, we ensure that our clients' investment in test automation is as efficient and effective as possible, maximizing their ROI on quality.

B2B Metrics That Tell the Real Story of Quality

Ultimately, the true ROI of a great testing strategy is reflected in your core B2B business metrics. A high-quality, reliable platform directly and positively impacts:

- Trial-to-Paid Conversion Rate: A smooth, intuitive, and bug-free trial experience is one of the most powerful drivers of new customer acquisition. To maximize the value of your platform, consider leveraging AI and machine learning for product enhancement.

- Net Revenue Retention (NRR): Happy customers whose businesses run smoothly on your platform don't churn; they stay, they renew, and they upgrade. Reliability is the bedrock of high NRR.

- Customer Support Costs: Fewer bugs mean fewer support tickets, lower operational overhead, and a support team that can focus on proactive customer success instead of reactive problem-solving.
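For teams that want to track the first two metrics alongside release quality, the sketch below uses their commonly cited definitions, which are assumptions here since this article does not spell them out. NRR is starting recurring revenue plus expansion, minus contraction and churn, divided by starting recurring revenue. Trial-to-paid conversion is converted trials divided by trials started. All figures are hypothetical.

```python
def net_revenue_retention(starting_mrr: float, expansion: float,
                          contraction: float, churned: float) -> float:
    """NRR for a period, measured on the customers present at its start."""
    return (starting_mrr + expansion - contraction - churned) / starting_mrr


def trial_to_paid_rate(trials_started: int, trials_converted: int) -> float:
    """Share of started trials that became paying customers."""
    return trials_converted / trials_started


# Hypothetical monthly figures.
nrr = net_revenue_retention(starting_mrr=100_000, expansion=12_000,
                            contraction=2_000, churned=5_000)
conversion = trial_to_paid_rate(trials_started=200, trials_converted=30)

print(f"NRR: {nrr:.0%}, trial-to-paid: {conversion:.0%}")
```

Plotting these numbers against release dates and defect counts is one way to see whether quality improvements are showing up in revenue.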

Conclusion: From Cost Center to Competitive Advantage

Let's return to the core questions every business leader faces. The answers are not simple, but they are clear.

- What are the costs? The true cost of a software bug is not the hours it takes a developer to fix it. It's the exponential ripple effect of disruption, rework, lost revenue, and damaged trust that can be up to 100 times greater if the bug reaches your customers. Investing in early, continuous testing is the single most effective way to mitigate this enormous financial risk.

- What is "too much"? "Enough" testing is never about volume; it's about focus. "Too much" testing is unfocused testing. By adopting a Risk-Based Testing (RBT) approach, you transform QA from a guessing game into a data-driven strategy, ensuring your most valuable resources are dedicated to protecting your most critical business functions.

- How does project size change the game? Your testing strategy must mature alongside your product and your business. The informal agility that serves a startup will cripple an enterprise, and the formal governance an enterprise requires will suffocate a startup. The key is to consciously evolve your approach as your scale and risk profile change.

Your Actionable Next Step

You can begin this transformation today with one simple action. Schedule a one-hour meeting with your heads of Sales, Customer Support, and Engineering. There is only one item on the agenda: "What are the top five things that could break in our software that would cause the most damage to our business?"

The answers to that question are the foundation of your new risk-based testing strategy.

Building high-quality, enterprise-grade software that drives business value is our passion. If you're ready to turn your quality assurance process from a perceived cost center into a true, measurable competitive advantage, let's have a conversation.

About Baytech

At Baytech Consulting, we specialize in guiding businesses through this process, helping you build scalable, efficient, and high-performing software that evolves with your needs. Our MVP-first approach helps our clients minimize upfront costs and maximize ROI. Ready to take the next step in your software development journey? Contact us today to learn how we can help you achieve your goals with a phased development approach.

About the Author

Bryan Reynolds is an accomplished technology executive with more than 25 years of experience leading innovation in the software industry. As the CEO and founder of Baytech Consulting, he has built a reputation for delivering custom software solutions that help businesses streamline operations, enhance customer experiences, and drive growth.

Bryan’s expertise spans custom software development, cloud infrastructure, artificial intelligence, and strategic business consulting, making him a trusted advisor and thought leader across a wide range of industries.