AI Governance and Asset Management: The Strategic Framework for the Modern Enterprise

September 04, 2025 / Bryan Reynolds

Strategic Imperatives in the AI Era: A Unified Framework for AI Governance and Asset Management

Executive Summary

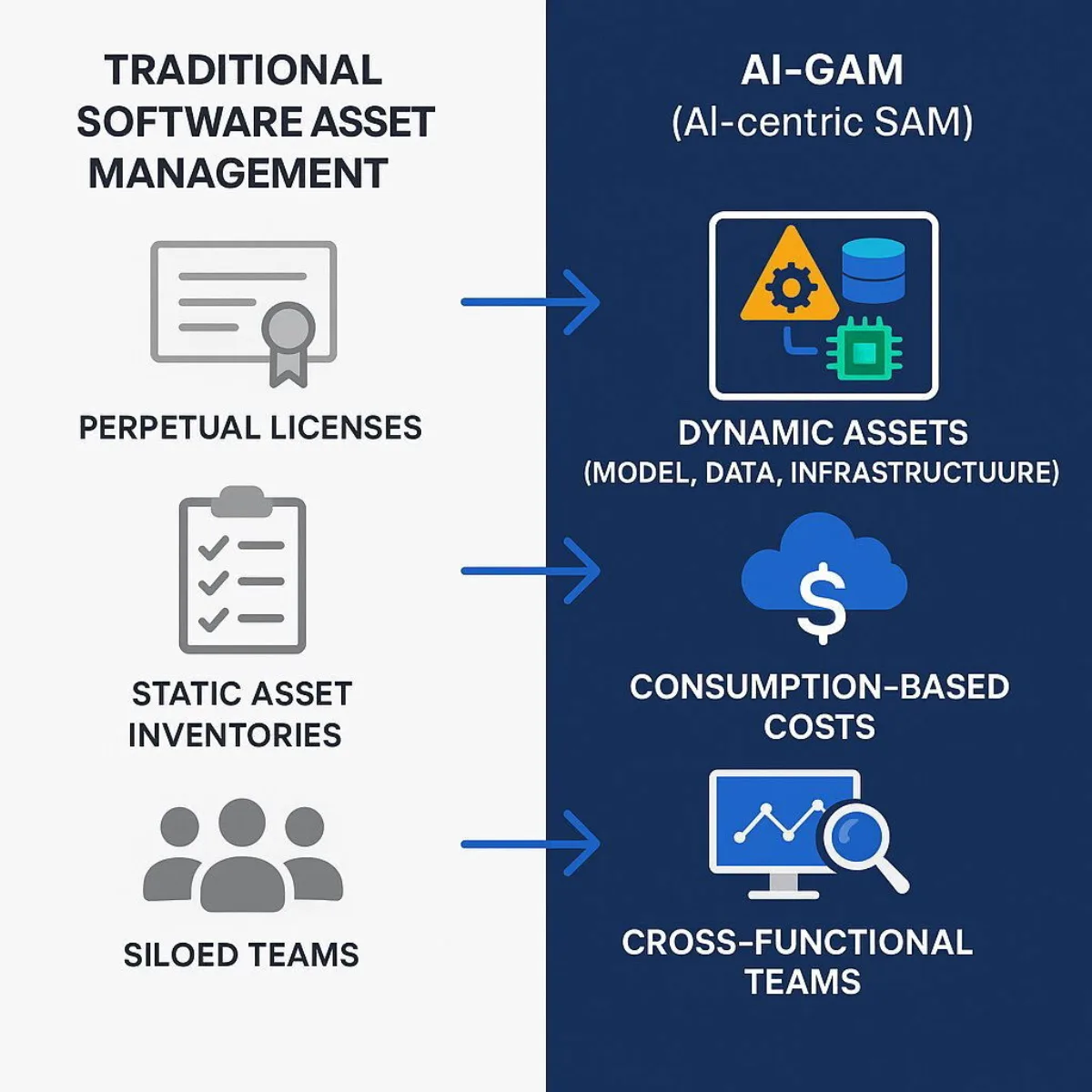

The rapid, widespread deployment of Artificial Intelligence (AI) has created a new, dynamic, and high-value class of software assets. Traditional Software Asset Management (SAM) frameworks, designed for an era of on-premise software and perpetual licenses, are fundamentally insufficient for managing the unique lifecycle, risks, and costs associated with this new paradigm. This report establishes that effective AI Governance is not merely a compliance or ethics exercise but the essential strategic framework for managing these assets, mitigating profound risks, and unlocking their full business value.

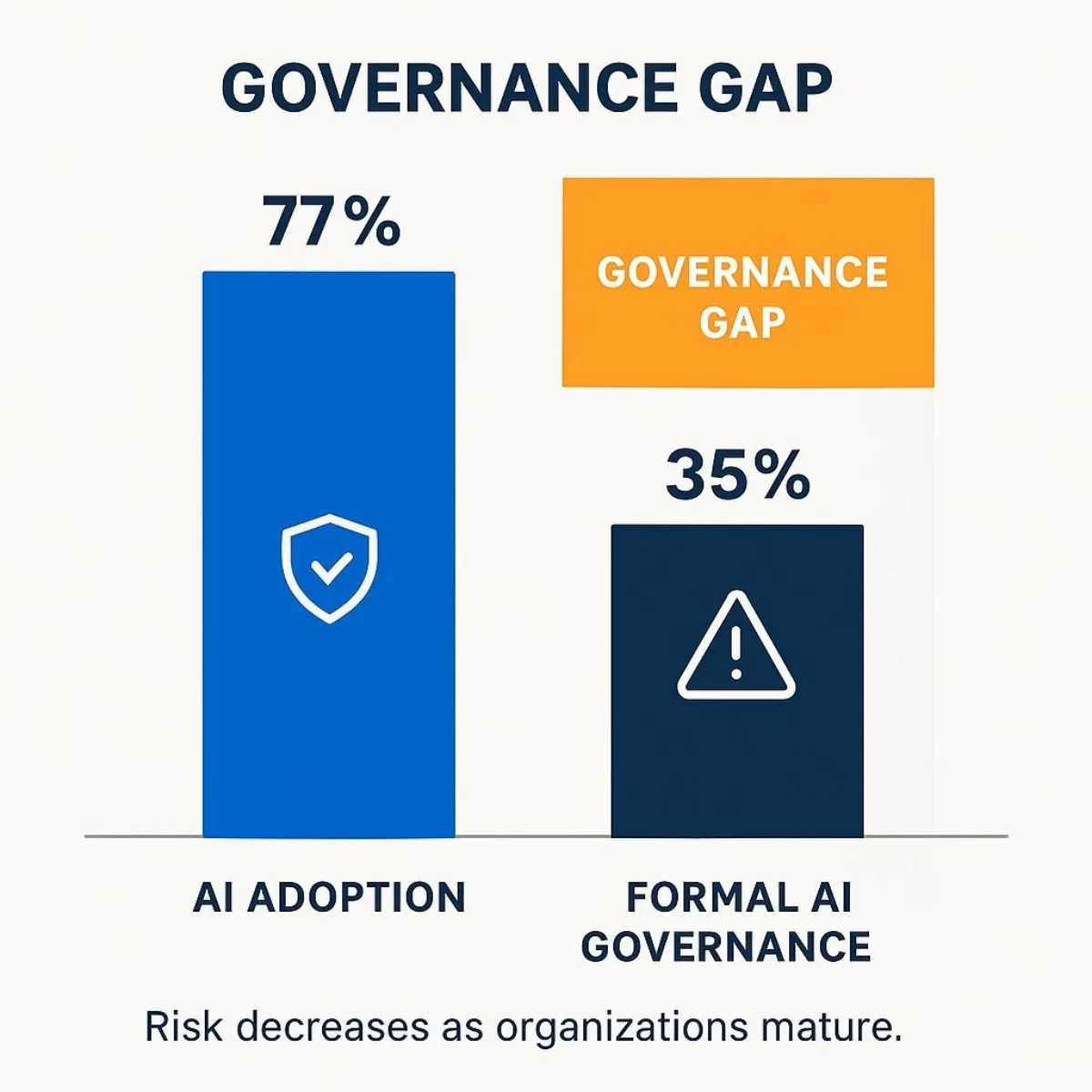

This analysis reveals a critical "governance gap" within the modern enterprise. While AI adoption is accelerating—with 77% of companies actively using or exploring AI technologies—only 35% have a formal governance framework in place. This disparity represents a significant and growing source of unmanaged risk, from regulatory penalties and data breaches to reputational damage and failed investments. Organizations that fail to bridge this gap will find their AI initiatives stagnating, unable to scale beyond isolated experiments due to a lack of trust and control.

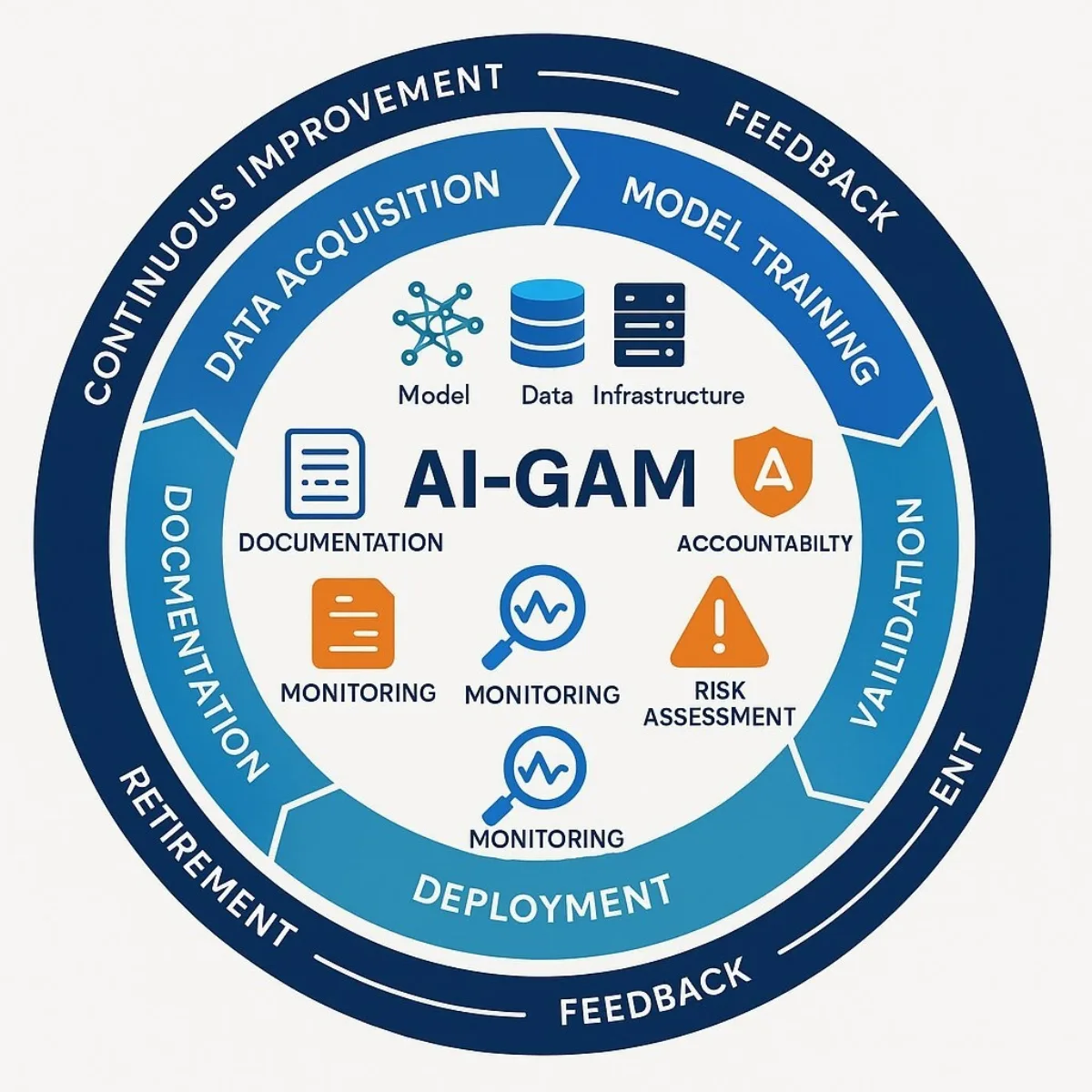

To address this challenge, this report introduces a unified framework—AI Governance and Asset Management (AI-GAM)—that holistically integrates ethical principles, regulatory compliance, and lifecycle management. The AI-GAM model reframes governance from a restrictive cost center to a direct enabler of innovation, providing the necessary guardrails for confident, scalable, and responsible AI deployment. It recognizes that AI assets, comprising models, data, and specialized infrastructure, require a new management paradigm focused on continuous monitoring, data provenance, and consumption-based cost optimization.

The core recommendations of this report advocate for a phased, maturity-based approach to implementing the AI-GAM framework. This journey, moving from a reactive to a proactive and ultimately transformative state, should be championed by a cross-functional governance body that includes representation from legal, IT, data science, and finance. By embedding governance principles directly into the AI asset lifecycle, organizations can build a foundation of trust, ensure regulatory adherence, and create the conditions necessary to realize a sustainable and significant return on their AI investments. This report provides a comprehensive roadmap for leaders to navigate this complex landscape, transforming AI from a source of uncertainty into a resilient, high-performing engine of enterprise value.

Section 1: The New Strategic Imperative: Governing AI as a Core Business Asset

1.1 The Proliferation of AI and the Emergence of a New Asset Class

The integration of Artificial Intelligence into the enterprise is no longer a futuristic concept but a present-day reality and a primary driver of strategic planning. The scale of this transformation is immense; research indicates that 77% of companies are either actively using or exploring the use of AI, and a commanding 83% of business leaders identify AI as a top priority within their strategic business plans. This is not a niche technology confined to research labs but a foundational business platform, with economic projections estimating a $15.7 trillion contribution to the global economy by 2030.

This widespread adoption has given rise to a fundamentally new class of business asset. Unlike traditional software, which is typically a self-contained, executable product, an AI system is a complex and dynamic assemblage of interdependent components. These new assets are composed of:

- Models: The algorithms themselves, which can be proprietary models developed in-house, customized open-source models, or services consumed via third-party Application Programming Interfaces (APIs).

- Data: The vast datasets used for training, testing, and validating these models are themselves primary assets. The quality, lineage, and governance of this data are paramount to the value and reliability of the entire system.

- Infrastructure: The specialized hardware, such as Graphics Processing Units (GPUs), and the elastic cloud services required to train and run AI models constitute a significant and variable cost component that must be actively managed.

- Intellectual Property: The unique configurations, prompts, and workflows developed to make these components work together represent valuable, often proprietary, intellectual property.

The management of this new asset class requires a strategic shift in thinking. These are not static assets to be inventoried once a year; they are dynamic, evolving systems whose performance can drift, whose costs are consumption-based, and whose impact on the business is pervasive.
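To make the composite nature of an AI asset concrete, the Python sketch below records the four components listed above in a single inventory entry. It also reports which components are still missing. The class, field, and enum names (`AIAsset`, `ModelSource`, `missing_components`) are hypothetical illustrations, not a standard schema, and the example asset is invented.

```python
from dataclasses import dataclass, field
from enum import Enum


class ModelSource(Enum):
    """Origin of the model component (the three kinds named above)."""
    PROPRIETARY = "proprietary"
    OPEN_SOURCE = "open_source"
    THIRD_PARTY_API = "third_party_api"


@dataclass
class AIAsset:
    """Hypothetical inventory entry for a composite AI asset."""
    name: str
    model_source: ModelSource
    model_version: str
    datasets: list[str] = field(default_factory=list)        # training/test/validation data IDs
    infrastructure: list[str] = field(default_factory=list)  # e.g. GPU pools, cloud services
    ip_artifacts: list[str] = field(default_factory=list)    # prompts, configurations, workflows

    def missing_components(self) -> list[str]:
        """List the non-model components that have not been recorded yet."""
        components = {
            "data": self.datasets,
            "infrastructure": self.infrastructure,
            "intellectual_property": self.ip_artifacts,
        }
        return [label for label, items in components.items() if not items]


asset = AIAsset(
    name="customer-sentiment",
    model_source=ModelSource.OPEN_SOURCE,
    model_version="2.1.0",
    datasets=["reviews-2024-q4"],
)
print(asset.missing_components())  # ['infrastructure', 'intellectual_property']
```

Flagging the missing components in this way makes an incomplete record visible as soon as an asset is registered. Without it, the gaps would surface only at the next periodic audit.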

1.2 Beyond Compliance: Why Ad-Hoc Governance Fails and Strategic Oversight Succeeds

The power and complexity of AI assets introduce a new spectrum of risks that many organizations are ill-prepared to manage. Without a structured governance framework, companies expose themselves to biased outputs that can lead to discriminatory practices, severe privacy breaches, novel security threats, non-compliance with emerging regulations, and significant reputational damage. These risks are not theoretical; they manifest as a loss of customer and stakeholder trust, internal resistance to AI adoption, and poor strategic decision-making based on flawed, AI-generated insights.

This landscape has created a significant "governance gap." While AI adoption is high, a striking minority of organizations have implemented the necessary oversight. Data shows that only 35% of companies currently have a formal AI governance framework in place. Although 87% of business leaders state they plan to implement AI ethics policies by 2025, this lag between deployment and oversight creates a period of substantial, unmanaged risk.

Many organizations currently operate with an ad-hoc or informal approach to governance, addressing issues reactively as they arise. This approach is dangerously insufficient. Ad-hoc governance is typically siloed within departments, lacks enterprise-wide visibility, and fails to address the systemic nature of AI risk. In contrast, a formal, strategic governance framework is proactive, comprehensive, and integrated across the entire organization. It moves beyond a simple compliance checklist to align AI development and deployment with core business objectives, fostering a culture of responsibility and building the institutional trust necessary for AI to flourish.

The disparity between AI adoption and governance maturity is more than a statistical anomaly; it is an emerging competitive chasm. The logic is straightforward: organizations without robust governance will inevitably encounter AI failures as they attempt to scale their initiatives, whether through biased model outputs, security breaches, or unreliable "hallucinations". These failures will not be isolated incidents. They will erode customer and stakeholder trust, attract the attention of regulators, and force reactive, costly pauses in strategic AI programs, hindering momentum and wasting investment. Conversely, organizations that invest in mature governance can deploy AI with greater confidence and at a larger scale, empowering them to tackle higher-value, higher-risk business problems. This creates a powerful flywheel effect: governed AI fosters trust, which enables faster and safer scaling, which in turn leads to higher and more sustainable ROI, ultimately building a wider and more defensible competitive moat. In this context, governance is not a brake on innovation but the very engine of sustainable, AI-driven growth.

Furthermore, AI assets introduce a form of "systemic risk" to the enterprise that is unfamiliar in the world of traditional software. A bug in a conventional software application is typically contained within that program's functionality. A flawed AI asset, however, can have cascading and unpredictable negative impacts across dozens of business functions simultaneously. For instance, a single customer sentiment model might be used as a service by sales, marketing, product development, and customer support teams. If this shared model "drifts" due to new data or is "poisoned" by a malicious actor, it can silently corrupt decision-making across all dependent functions. This creates a systemic operational risk that permeates the organization. Therefore, managing an AI asset is not like managing a desktop application; it is akin to managing a piece of critical infrastructure, requiring a level of centralized oversight, continuous monitoring, and rigorous lifecycle management that far exceeds the scope of traditional SAM.
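The cascade described above can be sketched as a dependency graph. If each asset lists the assets or business functions that consume its output, a breadth-first traversal returns every function that a drifting or poisoned model could silently affect. The asset and team names below are hypothetical.

```python
from collections import deque

# Hypothetical dependency map: asset -> the assets or business functions that consume it.
CONSUMERS = {
    "customer-sentiment-model": ["sales-forecast", "marketing-targeting", "support-triage"],
    "sales-forecast": ["finance-planning"],
    "marketing-targeting": [],
    "support-triage": ["product-roadmap-insights"],
    "finance-planning": [],
    "product-roadmap-insights": [],
}


def blast_radius(asset: str, consumers: dict[str, list[str]]) -> set[str]:
    """Return every downstream consumer reachable from `asset` (breadth-first)."""
    affected: set[str] = set()
    queue = deque([asset])
    while queue:
        current = queue.popleft()
        for downstream in consumers.get(current, []):
            if downstream not in affected:  # also prevents infinite loops on cycles
                affected.add(downstream)
                queue.append(downstream)
    return affected


print(sorted(blast_radius("customer-sentiment-model", CONSUMERS)))
```

In this toy map, one shared model reaches five downstream functions, including two that never call it directly. That indirect reach is the systemic exposure a central inventory needs to make visible.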

Section 2: Deconstructing AI Governance: Principles, Frameworks, and the Regulatory Horizon

2.1 The Pillars of Trustworthy AI

At the heart of any robust AI governance framework lies a set of core principles. These principles are not abstract ideals but the practical foundation for building AI systems that are safe, effective, and worthy of the trust of stakeholders, from customers and employees to regulators and the public. A synthesis of leading global standards reveals a consensus around several key pillars that must guide the development and deployment of AI.

- Fairness and Bias Mitigation: This principle demands that AI systems be designed and evaluated to prevent discrimination and ensure equitable outcomes for all user groups. It involves using diverse and representative training data, auditing algorithms for hidden biases related to protected attributes like race, gender, or age, and implementing fairness-aware machine learning techniques.

- Transparency and Explainability: Stakeholders must be able to understand how an AI system makes its decisions, especially in high-stakes applications. This principle requires clear documentation of model functionality, data sources, and decision-making logic. For complex models, it involves using explainability techniques to make their outputs interpretable by users and auditors, moving away from the "black box" paradigm.

- Accountability and Oversight: Clear lines of responsibility must be established for the outcomes of AI systems. This principle ensures that identifiable individuals or teams are accountable for the entire AI lifecycle. It necessitates robust human oversight mechanisms, particularly for high-risk applications, to allow for intervention, correction, or shutdown of an AI system when necessary.

- Privacy and Data Protection: AI systems must be designed to respect and protect user privacy. This involves strict adherence to data protection regulations like the EU's General Data Protection Regulation (GDPR), obtaining informed consent for data use, minimizing the collection of personal data, and using anonymization or other privacy-enhancing techniques wherever possible.

- Security and Robustness: AI systems must be technically resilient, reliable, and secure. This principle requires that systems are designed to operate safely under expected conditions and handle unexpected scenarios without producing harmful outcomes. It also involves implementing strong cybersecurity measures to protect systems from adversarial attacks, data breaches, and unauthorized access.

2.2 Comparative Analysis of Global Frameworks

As organizations navigate the implementation of these principles, they are confronted with a growing landscape of regulations, standards, and guidelines. The most prominent of these are not competing but complementary, each serving a different purpose in the global push for responsible AI. Understanding their distinct roles is crucial for developing a comprehensive compliance strategy.

- The EU AI Act: A Risk-Based Mandate: The European Union's AI Act is the world's first comprehensive, binding legal framework for AI. Its core feature is a risk-based classification system that categorizes AI applications into four tiers:

  - Unacceptable Risk: AI practices that are a clear threat to human rights are banned outright. This includes social scoring, cognitive behavioral manipulation, and real-time remote biometric identification in publicly accessible spaces for law enforcement purposes, subject to narrow exceptions.

  - High Risk: A broad category of AI systems that can negatively impact safety or fundamental rights. This includes AI used in critical infrastructure, education, employment, access to essential services, law enforcement, and justice administration. These systems are subject to stringent obligations before and after they are placed on the market, including rigorous risk assessments, high-quality data governance, detailed technical documentation, activity logging, clear user information, human oversight, and high levels of accuracy and cybersecurity.

  - Limited Risk: AI systems like chatbots or those that generate deepfakes are subject to transparency obligations, requiring them to disclose that the content is AI-generated or that the user is interacting with a machine.

  - Minimal Risk: The vast majority of AI applications fall into this category and are not subject to specific legal obligations under the Act.

  Crucially, non-compliance with the EU AI Act carries significant financial penalties. For the most serious violations, such as engaging in a prohibited practice, fines can reach €35 million or 7% of a company's global annual turnover, whichever is higher.

- The NIST AI Risk Management Framework (RMF): A Practical, Voluntary Guide: Developed by the U.S. National Institute of Standards and Technology, the AI RMF provides a voluntary, flexible, and practical framework for organizations to manage the risks associated with AI. It is not a prescriptive law but a guide intended to help organizations cultivate a culture of risk management. The RMF is structured around four core functions that form a continuous cycle:

  - Govern: Implement policies and processes to foster a culture of risk awareness and management.

  - Map: Identify the context and thoroughly understand the potential risks and benefits of an AI system.

  - Measure: Employ quantitative and qualitative tools to analyze, assess, and track AI risks.

  - Manage: Allocate resources to mitigate identified risks and decide on appropriate responses.

  The framework also defines seven key characteristics of trustworthy AI: validity and reliability, safety, security and resilience, accountability and transparency, explainability and interpretability, privacy enhancement, and fairness.

- OECD AI Principles: An Ethical Compass for Global Policy: The Organisation for Economic Co-operation and Development (OECD) has established a set of five intergovernmental principles that form a global ethical standard for trustworthy AI. These principles are not legally binding but serve as a foundational policy framework that has influenced national strategies and regulations worldwide, including the EU AI Act. The five core principles are:

  - AI should benefit people and the planet by driving inclusive growth, sustainable development, and well-being.

  - AI systems should be designed in a way that respects the rule of law, human rights, democratic values, and diversity, and they should include appropriate safeguards, for example enabling human intervention where necessary, to ensure a fair and just society.

  - There should be transparency and responsible disclosure around AI systems to ensure that people understand AI-based outcomes and can challenge them.

  - AI systems must function in a robust, secure, and safe way throughout their lifecycle, and potential risks should be continually assessed and managed.

  - Organizations and individuals developing, deploying, or operating AI systems should be held accountable for their proper functioning in line with the above principles.
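The EU AI Act's tiering can be approximated for first-pass triage only. Each use case is tagged, and the function returns the most severe tier that any tag triggers. The tag names and category sets below are assumptions that cover only the examples mentioned above. An actual classification depends on the Act's annexes and on legal review. The `max_fine_eur` helper applies the "whichever is higher" rule to the top penalty ceiling cited above.

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = 4
    HIGH = 3
    LIMITED = 2
    MINIMAL = 1


# Illustrative subsets of the examples named above; NOT a complete legal mapping.
PROHIBITED = {"social_scoring", "cognitive_behavioral_manipulation"}
HIGH_RISK_AREAS = {"critical_infrastructure", "education", "employment",
                   "essential_services", "law_enforcement", "justice_administration"}
TRANSPARENCY_ONLY = {"chatbot", "deepfake_generation"}


def triage(use_case_tags: set[str]) -> RiskTier:
    """Return the most severe tier triggered by any tag (first-pass triage only)."""
    if use_case_tags & PROHIBITED:
        return RiskTier.UNACCEPTABLE
    if use_case_tags & HIGH_RISK_AREAS:
        return RiskTier.HIGH
    if use_case_tags & TRANSPARENCY_ONLY:
        return RiskTier.LIMITED
    return RiskTier.MINIMAL


def max_fine_eur(global_annual_turnover_eur: int) -> int:
    """Top penalty ceiling: EUR 35 million or 7% of turnover, whichever is higher."""
    return max(35_000_000, global_annual_turnover_eur * 7 // 100)


# A recruiting chatbot is tagged both "chatbot" and "employment"; the stricter tier wins.
print(triage({"chatbot", "employment"}))  # RiskTier.HIGH
print(max_fine_eur(1_000_000_000))        # 70000000
print(max_fine_eur(200_000_000))          # 35000000
```

Ordering the checks from most to least severe keeps the rule simple: a system never falls into a lower tier because one of its tags happens to be benign. Integer arithmetic on the turnover avoids floating-point rounding in the penalty figure.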

| Attribute | EU AI Act | NIST AI Risk Management Framework (RMF) | OECD AI Principles |
|---|---|---|---|
| Legal Status | Mandatory legal framework within the European Union. | Voluntary guidance framework, primarily for U.S. organizations but with global applicability. | Non-binding ethical principles and policy recommendations for member countries. |
| Core Methodology | Risk-Based Classification: AI systems are categorized into Unacceptable, High, Limited, or Minimal risk tiers, with obligations corresponding to the risk level. | Risk Management Lifecycle: A continuous process structured around four functions: Govern, Map, Measure, and Manage. | Principles-Based Ethics: A set of five high-level principles to guide the development of trustworthy and human-centric AI. |
| Key Requirements | For high-risk systems: mandatory conformity assessments, risk management systems, data governance, technical documentation, human oversight, and registration in an EU database. | For organizations: guidance on establishing a risk management culture, identifying and analyzing AI risks, and implementing controls to manage those risks. Defines characteristics of trustworthy AI. | For governments and organizations: recommendations to foster a policy environment that supports responsible AI, including investment in R&D, enabling a digital ecosystem, and international cooperation. |
| Geographic Scope | Applies to providers and deployers of AI systems placed on the EU market or whose output is used in the EU, regardless of where the entity is established. | Developed in the U.S. but designed for global use and alignment with international standards. | Global principles adopted by OECD member countries and adhered to by other nations, forming a basis for international consensus. |
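The four NIST RMF functions can be loosely mapped onto day-to-day practice with a small risk register:

- Govern sets a risk tolerance.
- Map identifies the risks.
- Measure computes a score for each risk.
- Manage chooses a response.

The 1-to-5 likelihood and impact scales, the multiplicative score, the tolerance value, and the example risks are all assumptions. The NIST AI RMF does not prescribe a scoring formula.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    """One entry in a hypothetical AI risk register."""
    description: str
    characteristic: str  # which trustworthy-AI characteristic is at stake
    likelihood: int      # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int          # assumed scale: 1 (negligible) to 5 (severe)

    @property
    def score(self) -> int:
        # Measure: simple likelihood x impact (an assumption; NIST prescribes no formula).
        return self.likelihood * self.impact


# Govern: the organization sets its risk tolerance (illustrative value).
RISK_TOLERANCE = 9

# Map: risks identified for one AI system in its context of use (invented examples).
register = [
    Risk("Training data under-represents older applicants", "fairness", 4, 4),
    Risk("Prompt injection exposes internal documents", "security and resilience", 3, 5),
    Risk("Minor formatting errors in generated summaries", "validity and reliability", 3, 1),
]


def manage(risks: list[Risk], tolerance: int) -> list[tuple[str, str]]:
    """Manage: choose a response for each risk, highest score first."""
    decisions = []
    for risk in sorted(risks, key=lambda r: r.score, reverse=True):
        action = "mitigate" if risk.score > tolerance else "accept and monitor"
        decisions.append((risk.description, action))
    return decisions


for description, action in manage(register, RISK_TOLERANCE):
    print(f"{action:>18}: {description}")
```

Because the register is re-scored whenever likelihood or impact estimates change, this sketch runs as a loop rather than as a one-time assessment. That matches the cyclical intent of the framework.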

2.3 Anticipating Regulatory Evolution: Building a Future-Proof Governance Model

The field of AI and its regulation is in a state of constant flux. New laws are being drafted, and existing frameworks are being updated to keep pace with rapid technological advancements. Attempting to build a governance model that is narrowly tailored to a single piece of legislation is a strategy destined for failure.

A more resilient and future-proof approach is to build an internal governance model that is grounded in the core principles shared across all major global frameworks. The EU AI Act provides specific legal mechanisms, the NIST RMF offers practical guidance, and the OECD Principles set ethical norms. Although these differ in how they are implemented, they share a profound underlying theme: accountability must be embedded throughout the entire AI lifecycle. The EU AI Act explicitly requires that the risk management system for a high-risk AI system operate as a continuous, iterative process throughout the system's entire lifecycle. The NIST RMF's Govern-Map-Measure-Manage functions are inherently cyclical and apply to all stages of development and use. The OECD principles of accountability and robustness imply a continuous, not a one-time, responsibility.

This convergence means that a "one-time" compliance check performed just before deployment is dangerously insufficient. Governance cannot be a static gate that a project passes through; it must be a continuous, dynamic process integrated into the very fabric of AI development and operations. The practical implication for organizations is that they must build a flexible, principles-based system and integrate governance controls directly into their Machine Learning Operations (MLOps) and development pipelines. This ensures that as regulations evolve, the organization's foundational ability to demonstrate fairness, transparency, and accountability remains constant, allowing it to adapt to new specific requirements without needing to redesign its entire governance structure from the ground up.
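One way to integrate governance controls into a pipeline is a gate step that inspects a model's metadata and check results before deployment. The sketch below assumes a hypothetical policy:

- the fields listed in `REQUIRED_FIELDS` must be present;
- the named checks must all pass;
- high-risk models must carry a recorded human approval.

A real pipeline would load these rules from the organization's own policy and asset registry.

```python
# Hypothetical policy: metadata every model must carry before deployment.
REQUIRED_FIELDS = {"owner", "intended_use", "training_data", "risk_tier", "model_card_url"}


def governance_gate(metadata: dict, checks: dict[str, bool]) -> list[str]:
    """Return policy violations for one model; an empty list means the gate passes."""
    violations = [f"missing field: {name}" for name in sorted(REQUIRED_FIELDS - set(metadata))]
    violations += [f"failed check: {name}" for name, passed in sorted(checks.items()) if not passed]
    # Assumed rule: high-risk models also need a recorded human sign-off.
    if metadata.get("risk_tier") == "high" and not metadata.get("human_approval"):
        violations.append("high-risk model lacks human approval")
    return violations


# Example: a high-risk credit model that is not yet ready to ship.
metadata = {
    "owner": "credit-risk-team",
    "intended_use": "loan pre-screening",
    "training_data": "applications-2024-v3",
    "risk_tier": "high",
}
checks = {"bias_audit": True, "robustness_tests": False}

problems = governance_gate(metadata, checks)
for problem in problems:
    print(problem)

# In CI, passing this value to sys.exit() fails the stage and blocks deployment.
exit_code = 1 if problems else 0
```

Keeping the policy as data rather than code means that a new regulatory requirement becomes one more entry in the rules. The gate logic itself does not change.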

Section 3: Reimagining Software Asset Management for the AI Era

3.1 From Perpetual Licenses to Consumption-Based Models: The Economic Shift

For decades, Software Asset Management (SAM) has been a critical IT and finance function, defined as a set of business practices for strategically managing the lifecycle—purchase, deployment, maintenance, use, and disposal—of an organization's software applications. The primary goals of traditional SAM have been to optimize software costs, limit legal and financial risks associated with non-compliance, and ensure that software investments deliver business value. Key elements of a traditional SAM program include maintaining a comprehensive software inventory, monitoring software usage, managing license entitlements to ensure compliance, and streamlining procurement processes.

However, the technological and economic models underpinning the AI era render many of these traditional practices obsolete. The world of on-premise, perpetual licenses tracked via manual audits and spreadsheets is rapidly giving way to a far more dynamic and complex landscape. The proliferation of AI is accelerating a fundamental shift toward subscription-based Software-as-a-Service (SaaS) and, more importantly, consumption-based models where costs are tied directly to usage metrics like API calls, compute hours, or data processed. This economic shift makes static, periodic compliance checks ineffective. Managing these new assets requires real-time monitoring and a proactive, predictive approach to cost management that traditional SAM tools and processes were not designed to handle.
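The sketch below shows why consumption-based pricing calls for continuous monitoring. Each day's cost is derived from metered usage, and alerts fire as cumulative spend crosses budget thresholds. The per-token prices, usage figures, and 80%/100% thresholds are hypothetical placeholders, not any vendor's actual rates.

```python
from dataclasses import dataclass


@dataclass
class UsageRecord:
    """One day of metered usage for a consumption-priced AI service."""
    day: str
    input_tokens: int
    output_tokens: int


# Hypothetical prices in USD per 1,000 tokens; real vendor rates differ and change often.
PRICE_PER_1K_INPUT = 0.5
PRICE_PER_1K_OUTPUT = 1.5


def daily_cost(record: UsageRecord) -> float:
    """Cost of one day's usage under the hypothetical per-token prices."""
    return (record.input_tokens / 1000 * PRICE_PER_1K_INPUT
            + record.output_tokens / 1000 * PRICE_PER_1K_OUTPUT)


def budget_alerts(records: list[UsageRecord], monthly_budget: float,
                  thresholds: tuple[float, ...] = (0.8, 1.0)) -> list[str]:
    """Report the day on which cumulative spend first crosses each budget threshold."""
    alerts, spent, pending = [], 0.0, sorted(thresholds)
    for record in records:
        spent += daily_cost(record)
        while pending and spent >= pending[0] * monthly_budget:
            alerts.append(f"{record.day}: spend {spent:.2f} crossed {pending[0]:.0%} of budget")
            pending.pop(0)
    return alerts


usage = [UsageRecord(f"day-{d}", 300_000, 100_000) for d in range(1, 5)]
for alert in budget_alerts(usage, monthly_budget=1000.0):
    print(alert)
```

With these figures, the 80% alert fires on day 3 and the 100% alert on day 4. A periodic quarterly review would catch the same overrun only after the money had been spent.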

3.2 The AI Asset Lifecycle: A New Management Paradigm

The very definition of a "software asset" must be expanded to encompass the unique components of AI systems. An AI asset is not a single executable file but a composite entity whose value and risk are distributed across its constituent parts. A new management paradigm must account for the distinct lifecycle of these components:

- Models: The algorithmic models are the core of the asset. Managing them involves tracking not just which model is in use, but also its version, its performance metrics over time, and its potential for "drift"—a degradation in accuracy as real-world data changes. The inventory must include a mix of proprietary models, open-source models with varying license obligations, and third-party models accessed via APIs, each with its own cost structure and risk profile.

- Data: The datasets used to train, test, and validate AI models are primary assets of immense value. The adage "garbage in, garbage out" is a fundamental law of AI; therefore, the quality, integrity, lineage, and governance of these data assets are paramount. Managing data as an asset involves documenting its provenance, ensuring it is free from bias, and tracking its usage to comply with privacy regulations.

- Infrastructure: AI models, particularly large language models (LLMs) and deep learning systems, require specialized and costly infrastructure. This includes high-performance hardware like GPUs and consumption-based cloud services. Managing this infrastructure as an asset means moving beyond simple procurement to active monitoring and optimization of compute resources to control spiraling costs.

The lifecycle of these assets is also far more complex and cyclical than that of traditional software. It involves a continuous loop of Data Acquisition, Preprocessing, Model Training, Validation, Deployment, Monitoring, and frequent Retraining or Retirement, all of which must be tracked and managed.
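The cyclical lifecycle above can be enforced as a small state machine that rejects out-of-order moves and keeps a history for audit. The allowed transitions encode an assumed policy; for example, retrained models must be re-validated before redeployment. Each organization will define its own transitions.

```python
from enum import Enum, auto


class Stage(Enum):
    DATA_ACQUISITION = auto()
    PREPROCESSING = auto()
    TRAINING = auto()
    VALIDATION = auto()
    DEPLOYMENT = auto()
    MONITORING = auto()
    RETRAINING = auto()
    RETIREMENT = auto()


# Allowed moves between stages (an assumed policy, not a standard).
TRANSITIONS = {
    Stage.DATA_ACQUISITION: {Stage.PREPROCESSING},
    Stage.PREPROCESSING: {Stage.TRAINING},
    Stage.TRAINING: {Stage.VALIDATION},
    Stage.VALIDATION: {Stage.DEPLOYMENT, Stage.TRAINING},  # failed validation loops back
    Stage.DEPLOYMENT: {Stage.MONITORING},
    Stage.MONITORING: {Stage.RETRAINING, Stage.RETIREMENT},
    Stage.RETRAINING: {Stage.VALIDATION},  # retrained models are re-validated first
    Stage.RETIREMENT: set(),               # terminal stage
}


class ModelLifecycle:
    """Tracks one model's current stage and the full history of moves."""

    def __init__(self, model_name: str) -> None:
        self.model_name = model_name
        self.stage = Stage.DATA_ACQUISITION
        self.history = [self.stage]

    def advance(self, target: Stage) -> None:
        if target not in TRANSITIONS[self.stage]:
            raise ValueError(f"{self.model_name}: cannot move from "
                             f"{self.stage.name} to {target.name}")
        self.stage = target
        self.history.append(target)


churn = ModelLifecycle("churn-model")
for step in (Stage.PREPROCESSING, Stage.TRAINING, Stage.VALIDATION, Stage.DEPLOYMENT,
             Stage.MONITORING, Stage.RETRAINING, Stage.VALIDATION):
    churn.advance(step)

try:
    churn.advance(Stage.RETIREMENT)  # not allowed directly from VALIDATION under this policy
except ValueError as err:
    print(err)  # churn-model: cannot move from VALIDATION to RETIREMENT
```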

3.3 The Role of AI in Supercharging SAM

Paradoxically, the same technology creating these new asset management challenges also offers the solution. AI itself can be a powerful tool for modernizing and automating the SAM function, enabling it to cope with the scale and complexity of the new environment. AI-powered tools can revolutionize asset management by:

- Automating Discovery and Inventory: AI can continuously scan networks, cloud environments, and code repositories to automatically discover and inventory all AI assets, including open-source models and API dependencies that might otherwise go untracked.

- Predicting Consumption and Optimizing Costs: By analyzing usage patterns, AI can predict future cloud consumption and API costs, allowing for more accurate budgeting and proactive optimization of licensing and subscription plans.

- Enhancing Compliance Monitoring: AI can monitor AI systems in real-time for compliance with internal policies and external regulations, flagging potential issues like data misuse or model bias automatically.

- Augmenting Digital Asset Management (DAM): The principles of managing digital media assets are analogous to managing data assets for AI. AI-powered DAM systems use techniques like automated metadata tagging, image recognition, and intelligent rights management to organize and govern large libraries of unstructured data, providing a model for how to manage the vast datasets required for AI training.
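As a minimal stand-in for the consumption forecasting described above, the following sketch fits an ordinary least-squares linear trend to daily spend and projects it forward. This is a deliberately simple technique, and commercial SAM and FinOps tools may use far richer predictive models. The spend figures are invented.

```python
def fit_linear_trend(values: list[float]) -> tuple[float, float]:
    """Ordinary least-squares fit of values against day index 0..n-1; returns (slope, intercept)."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two observations")
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    sxx = sum((x - mean_x) ** 2 for x in range(n))
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(values))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def forecast_spend(values: list[float], days_ahead: int) -> float:
    """Projected total spend over the next `days_ahead` days, extrapolating the trend."""
    slope, intercept = fit_linear_trend(values)
    n = len(values)
    return sum(intercept + slope * (n + k) for k in range(days_ahead))


daily_api_spend = [100.0, 110.0, 120.0, 130.0, 140.0]  # invented USD figures
print(forecast_spend(daily_api_spend, days_ahead=3))   # 480.0
```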

| Dimension | Traditional SAM | AI-Centric SAM (AI-GAM) |
|---|---|---|
| Primary Asset Type | Executable software, installable packages. | Composite Asset: Model + Data + API + Infrastructure. |
| Licensing/Cost Model | Perpetual, per-seat, or per-server licenses with fixed costs. | Consumption-based: Per-token, per-API call, per-compute hour, with variable costs. |
| Primary Risk | Financial penalties from vendor license audits; overspending on unused software. | Biased or harmful outputs, data privacy breaches, security vulnerabilities, model failures, regulatory non-compliance. |
| Compliance Focus | Adherence to legal terms and conditions of software license agreements. | Adherence to ethical principles (fairness, transparency), data privacy laws (GDPR), and AI-specific regulations (EU AI Act). |
| Key Metrics | Number of installations, license utilization rate, cost per license. | Model accuracy, data lineage, model drift, API call volume, cloud compute cost, fairness metrics. |
| Responsible Team | Siloed functions: IT, Procurement, Finance. | Cross-functional team: IT, Legal, Data Science, Risk, Finance (FinOps), Business Units. |

This stark contrast between traditional and AI-centric SAM highlights a critical organizational reality. The ease with which developers and data scientists can now access open-source models and public datasets, often with just a corporate credit card, has created a new and dangerous form of technical debt. This mirrors the historical problem of "shadow IT," where employees used unauthorized software, creating unmanaged security and compliance risks. Today, we face "shadow AI." This proliferation of untracked models and data creates two significant hidden liabilities: "model sprawl" and "data debt."

"Model sprawl" occurs when an organization has no central inventory of the AI models it depends on, the open-source licenses associated with them, or their inherent security vulnerabilities. This lack of visibility makes it impossible to manage risk or cost effectively. "Data debt" is incurred when teams use poorly documented, unvetted, or biased public datasets to train models. This debt may not be immediately apparent, but it will inevitably be paid later in the form of poor model performance, discriminatory outcomes, and costly remediation efforts. This new reality means that the core SAM function of discovery and inventory is more critical than ever. However, to keep pace with the speed of modern AI development, this function must evolve from a periodic, manual audit into an automated, continuous process that is integrated directly into the development lifecycle.
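Continuous discovery ultimately comes down to reconciliation: comparing what scanning finds in use against what the central registry knows about. The sketch below assumes that a discovery step has already produced a dictionary of model names with source and license metadata. The model names, registry contents, and approved-license list are hypothetical.

```python
# Hypothetical output of an automated discovery scan: model name -> metadata.
discovered = {
    "sentiment-bert": {"source": "open_source", "license": "apache-2.0"},
    "support-summarizer": {"source": "third_party_api", "license": "vendor-terms"},
    "resume-ranker": {"source": "open_source", "license": None},  # license never recorded
}
# Hypothetical contents of the central AI asset registry.
registered = {"sentiment-bert", "support-summarizer", "legacy-forecaster"}
# Hypothetical list of licenses the legal team has approved.
APPROVED_LICENSES = {"apache-2.0", "mit", "vendor-terms"}


def reconcile(discovered: dict[str, dict], registered: set[str]) -> dict[str, list[str]]:
    """Compare discovered models with the registry and flag sprawl and license gaps."""
    return {
        # Shadow AI: in use but unknown to the registry.
        "unregistered": sorted(set(discovered) - registered),
        # Registered but no longer observed: candidates for retirement review.
        "not_observed": sorted(registered - set(discovered)),
        # Missing or unapproved licenses: legal review needed.
        "license_review": sorted(name for name, info in discovered.items()
                                 if info["license"] not in APPROVED_LICENSES),
    }


print(reconcile(discovered, registered))
```

Running this on every scan, rather than at an annual audit, keeps both forms of hidden liability visible. Model sprawl appears in the unregistered list, and data debt appears whenever an unvetted asset slips in without a recorded license.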

Section 4: The Synthesis: A Unified Framework for AI Governance and Asset Management (AI-GAM)

4.1 The AI-GAM Conceptual Model

To effectively manage the new class of AI assets and their associated risks, organizations must move beyond separate, siloed functions for governance and asset management. The solution lies in a unified framework—AI Governance and Asset Management (AI-GAM)—where governance principles are not abstract policies but are embedded as tangible controls and metadata throughout the entire AI asset lifecycle.

This model connects the "why" of governance with the "what" and "how" of asset management. For example:

- The principle of Fairness is no longer just a statement in a code of conduct. In the AI-GAM framework, it is enforced through mandatory bias audits during the model validation phase of the lifecycle. It is tracked via continuous drift monitoring post-deployment, with automated alerts flagging when a model's outputs begin to skew against a protected group.

- The principle of Transparency is operationalized by requiring that every model checked into the central asset inventory be accompanied by comprehensive technical documentation, of the kind the EU AI Act mandates for high-risk systems. This includes "model cards" that detail the model's intended use, limitations, and the characteristics of its training data.

- The principle of Accountability is established by assigning clear ownership to each asset in the registry and logging all decisions and interventions via immutable records, ensuring a complete audit trail.

By integrating governance directly into the management of the asset, the AI-GAM framework transforms principles into practice, making responsible AI the default, not the exception.
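The fairness control described for the validation and monitoring phases can be illustrated by computing per-group selection rates and the disparate impact ratio, which is the lowest group rate divided by the highest. The default threshold of 0.8 follows the widely used "four-fifths" rule of thumb. That threshold, the group labels, and the sample outcomes are assumptions, and the appropriate fairness metric depends on the use case.

```python
from collections import defaultdict


def selection_rates(outcomes: list[tuple[str, bool]]) -> dict[str, float]:
    """Share of favorable outcomes per group, from (group, selected) pairs."""
    totals: dict[str, int] = defaultdict(int)
    selected: dict[str, int] = defaultdict(int)
    for group, was_selected in outcomes:
        totals[group] += 1
        selected[group] += int(was_selected)
    return {group: selected[group] / totals[group] for group in totals}


def disparate_impact_check(outcomes: list[tuple[str, bool]],
                           threshold: float = 0.8) -> tuple[float, bool]:
    """Return (ratio, alert), where ratio = lowest group rate / highest group rate."""
    rates = selection_rates(outcomes)
    highest = max(rates.values())
    ratio = min(rates.values()) / highest if highest > 0 else 1.0
    return ratio, ratio < threshold


# Synthetic predictions from a recent post-deployment monitoring window.
window = ([("group_a", True)] * 50 + [("group_a", False)] * 50
          + [("group_b", True)] * 30 + [("group_b", False)] * 70)
ratio, alert = disparate_impact_check(window)
print(f"disparate impact ratio = {ratio:.2f}, alert = {alert}")
```

The same function can serve as a one-time validation gate and as a recurring check over rolling windows of live predictions. In the monitoring role, it flags outputs that begin to skew after deployment.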

4.2 The Central Role of Data Governance

The AI-GAM framework is built upon a non-negotiable foundation: robust data governance. It is a fundamental truth that AI governance is impossible without data governance. An AI system can only be as trusted, reliable, and unbiased as the data that fuels it. Therefore, a significant portion of the AI-GAM effort must be dedicated to managing data as a primary asset.

Key data governance practices essential for AI include:

- Data Quality and Integrity: Implementing processes to ensure that data used for training and inference is accurate, complete, consistent, and fit for purpose. This includes data cleansing, validation, and profiling.

- Data Lineage and Provenance: Maintaining a clear, auditable record of where data comes from, how it has been transformed, and how it is used across different AI models. This is critical for debugging, ensuring reproducibility, and demonstrating compliance.

- Privacy and Security: Applying strict data protection measures, including access controls, encryption, and privacy-enhancing techniques like anonymization and data minimization, to safeguard sensitive information and comply with regulations like GDPR.

- Data Labeling and Annotation: Ensuring that data used for supervised learning is labeled accurately and consistently, as errors in labeling are a primary source of model performance issues.
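Lineage and provenance can be sketched by fingerprinting each dataset version with a SHA-256 content hash and recording its source, its transformation, and its parent versions. This lets any training set be traced back to its raw inputs. The `LineageStore` class and the example datasets are hypothetical. Production lineage tools typically capture this information automatically from the pipelines themselves.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class DatasetVersion:
    """Provenance record for one immutable version of a dataset."""
    name: str
    content_hash: str              # SHA-256 of the dataset bytes
    source: str                    # where the data came from
    transformation: str            # how it was derived ("" for raw ingests)
    parents: tuple[str, ...] = ()  # content hashes of the input datasets


class LineageStore:
    """Hypothetical in-memory lineage registry keyed by content hash."""

    def __init__(self) -> None:
        self._versions: dict[str, DatasetVersion] = {}

    def record(self, name: str, data: bytes, source: str,
               transformation: str = "", parents: tuple[str, ...] = ()) -> DatasetVersion:
        version = DatasetVersion(name, hashlib.sha256(data).hexdigest(),
                                 source, transformation, tuple(parents))
        self._versions[version.content_hash] = version
        return version

    def upstream(self, content_hash: str) -> list[str]:
        """Names of all ancestor datasets, nearest first (breadth-first walk)."""
        names: list[str] = []
        queue = list(self._versions[content_hash].parents)
        seen: set[str] = set()
        while queue:
            parent_hash = queue.pop(0)
            if parent_hash in seen:
                continue
            seen.add(parent_hash)
            parent = self._versions[parent_hash]
            names.append(parent.name)
            queue.extend(parent.parents)
        return names


store = LineageStore()
raw = store.record("crm-export", b"id,age,churned\n1,34,0\n", source="CRM system")
clean = store.record("crm-clean", b"id,age_band,churned\n1,30-39,0\n", source="pipeline",
                     transformation="bucket ages", parents=(raw.content_hash,))
train = store.record("churn-train-v1", b"age_band,churned\n30-39,0\n", source="pipeline",
                     transformation="drop identifiers; train split", parents=(clean.content_hash,))
print(store.upstream(train.content_hash))  # ['crm-clean', 'crm-export']
```

Keying records by content hash means that any silent edit to a dataset produces a new identity. Changed data cannot masquerade as the version that was originally audited.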

4.3 Establishing the AI Governance Office (or Center of Excellence)

Implementing the AI-GAM framework requires a dedicated organizational structure to provide oversight, enforce policies, and drive the initiative forward. This is typically achieved through an AI Governance Office or an AI Center of Excellence. This is not a single individual or department but a cross-functional body with representation from key stakeholders across the enterprise.

The roles and responsibilities within this structure are clearly defined:

- AI Ethics and Compliance Committee: This senior-level body serves as the central governing authority. It is responsible for setting enterprise-wide AI policy, reviewing and approving high-risk use cases, and providing ultimate oversight for the AI-GAM program.

- Legal and Risk Management: This team is responsible for interpreting evolving AI regulations, evaluating legal and compliance risks associated with AI assets, and ensuring that the governance framework aligns with all applicable laws.

- Data Science and Engineering: These technical teams are on the front lines of AI development. Their responsibility is to build and maintain AI models that adhere to the established technical and ethical standards, including requirements for fairness, explainability, and robustness.

- IT, SAM, and FinOps Teams: This group manages the core asset management functions. They are responsible for maintaining the AI asset inventory, tracking consumption-based costs, ensuring the underlying infrastructure is secure and optimized, and managing vendor relationships.

- Finance Department: The finance team plays a crucial role in tracking all AI-related expenditures, measuring the ROI of AI investments, and identifying opportunities for cost savings and financial optimization.

- Business Units: As the ultimate users and beneficiaries of AI systems, business units provide the business context for AI projects, identify valuable use cases, and are ultimately accountable for the business outcomes of the AI they deploy in their operations.

4.4 Technology Enablers for AI-GAM

Implementing AI-GAM at an enterprise scale is not feasible with manual processes or traditional SAM tools. A modern, integrated toolchain is required to automate and enforce the framework's controls:

- AI Model Inventory and Registry: A centralized, database-driven system that acts as the "single source of truth" for all AI models in the organization. It tracks model versions, metadata, documentation, dependencies, ownership, and risk levels.

- Data Lineage and Traceability Solutions: Tools that automatically map the flow of data from its source, through various transformations, into the models that use it, and out to the applications that consume its predictions. This provides critical visibility for compliance and debugging.

- Monitoring and Drift Detection Tools: Specialized technologies that continuously monitor the performance of deployed AI models in real time. They are designed to detect model drift, data drift, and concept drift, automatically alerting teams when a model's performance degrades or its outputs become biased.

- AI-Powered SAM and FinOps Platforms: A new generation of asset management tools that use AI to automate the discovery of cloud and SaaS assets, predict future spend based on usage trends, and provide recommendations for cost optimization.
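To make "drift detection" concrete, the sketch below computes the Population Stability Index (PSI), one widely used statistic for comparing a feature's live distribution against its training-time distribution. It covers data drift only; concept drift generally requires labeled outcomes. Equal-width binning over the reference range and the `eps` floor are simplifying assumptions, and the function name is illustrative rather than taken from any monitoring product.

```python
import math


def population_stability_index(expected: list[float], actual: list[float],
                               bins: int = 10, eps: float = 1e-6) -> float:
    """PSI between a reference sample (e.g. training data) and a live sample.

    Bin edges are equal-width over the reference range; each bin contributes
    (a - e) * ln(a / e), where e and a are the bin proportions.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant feature

    def proportions(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            # Values outside the reference range are clamped into the edge bins.
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # The eps floor avoids log(0) for empty bins.
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


training = [i / 100 for i in range(100)]       # roughly uniform on [0, 1)
live = [0.5 + i / 200 for i in range(100)]     # shifted into the upper half
print(population_stability_index(training, training))  # 0.0
print(population_stability_index(training, live) > 0.25)  # True
```

A commonly cited rule of thumb, not a formal standard, treats a PSI below 0.1 as stable and above 0.25 as a significant shift worth investigating; teams usually tune such thresholds per feature.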

The successful implementation of the AI-GAM framework necessitates a fundamental shift in organizational structure, forcing the convergence of three traditionally separate disciplines: Machine Learning Operations (MLOps), Financial Operations (FinOps), and Governance, Risk, and Compliance (GRC). In the context of AI assets, these are not sequential activities but deeply intertwined, continuous processes. MLOps manages the technical lifecycle of model development, deployment, and monitoring. FinOps manages the variable, consumption-based costs of the cloud infrastructure that AI runs on. GRC sets the policies and ensures adherence to ethical standards and regulations. One cannot effectively manage the risk of a model (GRC) without continuously monitoring its performance in production (MLOps). One cannot manage the cost of a model (FinOps) without understanding its computational requirements and retraining schedule (MLOps). The AI-GAM framework, therefore, acts as the strategic overlay that forces the integration of these functions, breaking down organizational silos to create a unified, real-time view of each AI asset's value, cost, and risk.

Section 5: An Actionable Roadmap for Implementation

5.1 Adopting a Maturity Model Approach

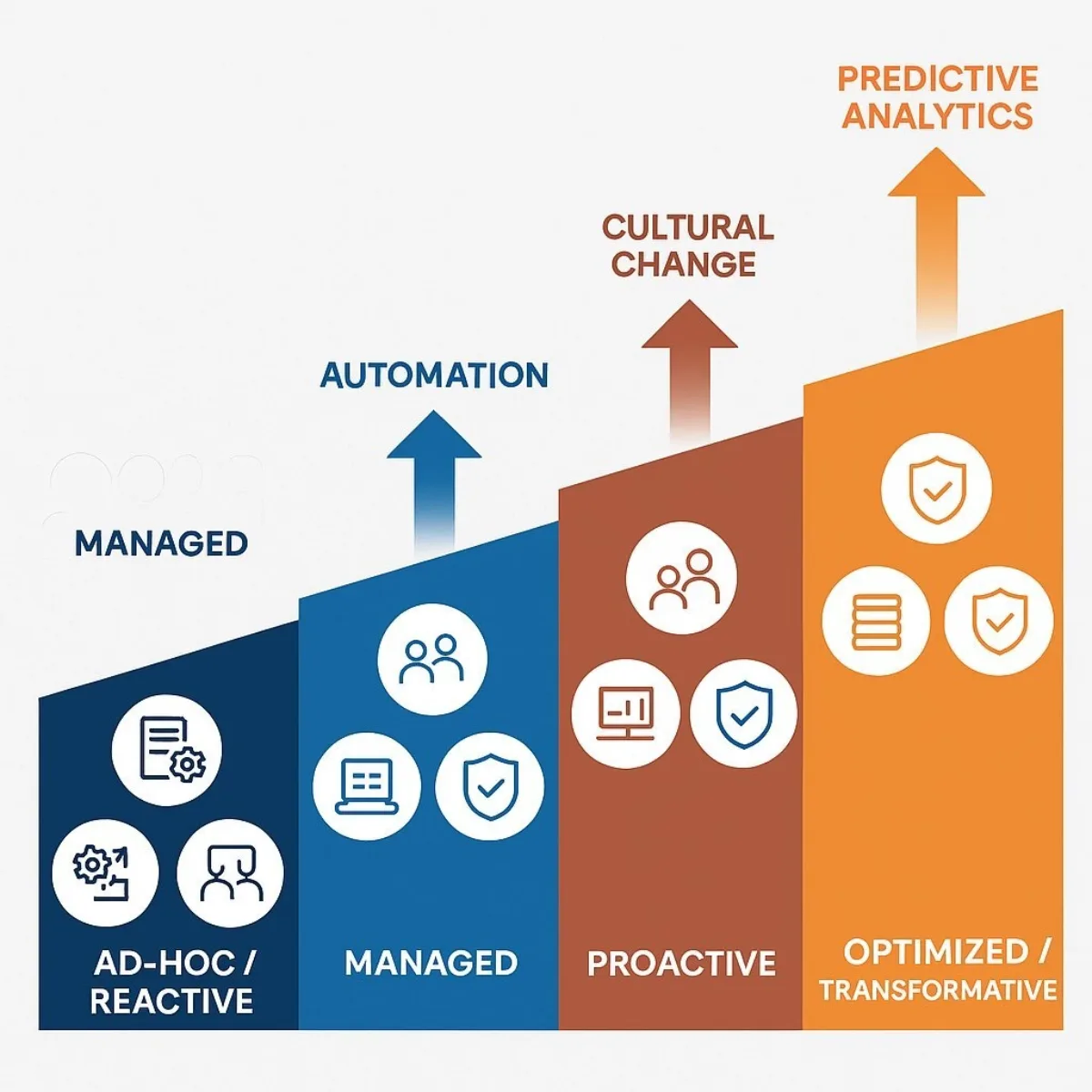

Implementing a comprehensive AI-GAM framework is a significant undertaking that cannot be accomplished overnight. A successful rollout requires a structured, phased approach that builds capabilities incrementally and demonstrates value along the way. Adopting a maturity model is an effective strategy for this journey: it allows an organization to assess its current state, define a clear target state, and map a realistic path between the two. The journey typically moves through four stages: Ad-Hoc/Reactive, Managed, Proactive, and finally Optimized/Transformative, where governance is an automated and strategic enabler of the business. A timeframe of 18 to 24 months is a realistic target for completing the four implementation phases that follow.

5.2 Phase 1: Discovery and Assessment (Months 1-3)

The first phase is about establishing a baseline understanding of the organization's current AI landscape and risk exposure.

- Conduct an AI Asset Inventory: The initial, critical step is to create a comprehensive inventory of all AI systems currently in use or development across the enterprise. This process must be thorough, actively seeking out "shadow AI" projects within business units that may not have gone through formal IT procurement. This inventory should document the system's purpose, data sources, owners, and current status.

- Perform an AI Risk Assessment: With the inventory in hand, conduct a risk assessment to identify high-risk AI applications, such as those used for hiring, credit scoring, or other critical decision-making. Evaluate these systems against potential risks of bias, security vulnerabilities, and privacy infringements.

- Gap Analysis: Cross-reference the inventory and risk assessment against the requirements of key external frameworks (such as the EU AI Act and the NIST AI Risk Management Framework) and internal policies. This gap analysis will identify the most critical areas where the organization is non-compliant or exposed, forming the basis for the work to be done in subsequent phases.
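The three Phase 1 steps can be tied together in a single inventory record. The sketch below is a minimal illustration, assuming a hypothetical policy in which hiring and credit-scoring systems are high-risk and must have a bias assessment, human oversight, and data lineage, and in which any shadow AI discovered outside procurement must go through formal review. The class, field, and control names are all hypothetical.

```python
from dataclasses import dataclass, field

# Hypothetical policy values: which use categories count as high-risk and
# which controls a high-risk system must have before approval.
HIGH_RISK_USES = {"hiring", "credit_scoring"}
REQUIRED_CONTROLS = {"bias_assessment", "human_oversight", "data_lineage"}


@dataclass
class AIAsset:
    """One inventory entry: purpose, data sources, owner, and status."""
    name: str
    purpose: str
    use_category: str
    owner: str
    data_sources: list[str]
    status: str  # e.g. "development" or "production"
    sanctioned: bool = True  # False for shadow AI found outside procurement
    controls: set[str] = field(default_factory=set)

    @property
    def high_risk(self) -> bool:
        return self.use_category in HIGH_RISK_USES

    def gaps(self) -> set[str]:
        """Controls this asset still lacks under the hypothetical policy."""
        missing: set[str] = set()
        if self.high_risk:
            missing |= REQUIRED_CONTROLS - self.controls
        if not self.sanctioned:
            missing.add("formal_review")  # shadow AI must pass governance intake
        return missing


def gap_report(inventory: list[AIAsset]) -> dict[str, set[str]]:
    """Map every asset with open gaps to the controls it is missing."""
    return {asset.name: asset.gaps() for asset in inventory if asset.gaps()}


inventory = [
    AIAsset("resume-screener", "rank job applicants", "hiring", "HR",
            ["ATS exports"], "production", sanctioned=False,
            controls={"data_lineage"}),
    AIAsset("ticket-router", "route support tickets", "customer_support", "IT",
            ["helpdesk logs"], "production"),
]
report = gap_report(inventory)
# report == {"resume-screener": {"bias_assessment", "human_oversight", "formal_review"}}
```

In practice the policy sets would be derived from the external frameworks and internal policies named above rather than hard-coded.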

5.3 Phase 2: Framework Design and Policy Development (Months 4-9)

This phase focuses on building the foundational structures and rules for the AI-GAM program.

- Establish the AI Governance Body: Formalize the creation of the AI Governance Office and its AI Ethics and Compliance Committee. This body must be granted a clear charter, executive sponsorship, and the authority to oversee and enforce AI policy across the organization.

- Develop Core Policies: Draft the foundational documents for the program. This includes an overarching AI Governance Policy that outlines principles and responsibilities, and a more detailed AI Code of Conduct for developers and users. These documents should provide clear, actionable guidelines for data handling, model development standards, acceptable use cases, and transparency requirements.

- Select Technology: Based on the needs identified in Phase 1, evaluate and select the necessary technology enablers for the AI-GAM framework. This includes tools for model inventory and registry, data lineage, and performance monitoring.

5.4 Phase 3: Pilot and Phased Rollout (Months 10-18)

With the framework designed, the next step is to test and refine it in a controlled manner before a full-scale rollout.

- Start Small with Quick Wins: Rather than attempting a "big bang" implementation, select a few high-impact yet manageable AI use cases for a pilot program. Success in these initial projects will be crucial for demonstrating the value of the AI-GAM framework and building internal confidence and momentum.

- Implement Monitoring and Auditing: For the pilot projects, establish the systems and processes for real-time tracking of AI decisions and performance. Conduct the first internal audits of these systems to test the effectiveness of the new governance controls and identify areas for improvement.

- Iterate and Refine: The pilot phase is a critical learning opportunity. Use the findings from the pilot projects to refine policies, streamline processes, and improve the configuration of the supporting technology tools.

5.5 Phase 4: Scaling and Continuous Improvement (Months 19-24+)

The final phase involves expanding the AI-GAM framework to cover the entire enterprise and embedding it into the organization's culture.

- Enterprise-Wide Training: Develop and roll out mandatory training programs on AI ethics, governance policies, and compliance requirements. This training must be tailored to different roles, including developers, data scientists, business users, and executives, to build broad AI fluency.

- Scale the Program: Systematically and gradually bring all remaining AI assets across the organization under the purview of the AI-GAM framework, prioritizing based on risk and business impact.

- Establish a Continuous Improvement Cycle: AI technology and regulations are constantly evolving, so the AI-GAM framework must not be a static document. Establish a regular cadence, for example annually or semi-annually, for the AI Governance Body to review and update the framework, policies, and tools to ensure they remain relevant and effective.

| Domain | Stage 1: Ad-Hoc/Reactive | Stage 2: Managed | Stage 3: Proactive | Stage 4: Optimized/Transformative |
|---|---|---|---|---|
| Policy & Process | No formal AI policies. Governance is inconsistent and project-specific. Processes are undocumented. | Basic acceptable use policies exist. An informal committee may review some projects. Processes are documented but not standardized. | An enterprise-wide AI governance framework and code of conduct are established and enforced. Processes are standardized and integrated into the AI development lifecycle. | Governance is automated and embedded directly into MLOps and CI/CD pipelines. Policies are dynamically updated based on continuous monitoring and regulatory intelligence. |
| People & Culture | AI expertise is siloed. No formal roles for governance. Awareness of AI risks is low and inconsistent. | An informal working group is formed. Some training is offered on an optional basis. Culture is still largely reactive to incidents. | Formal roles and responsibilities are defined within an AI Governance Office. Mandatory, role-based training is implemented. A culture of responsible AI begins to form. | AI fluency and ethical awareness are widespread. AI governance is viewed as a shared responsibility. The organization is recognized as a leader in responsible AI. |
| Technology & Data | AI assets are un-inventoried ("shadow AI"). Tools are disparate and managed by individual teams. Data governance is weak or non-existent. | A manual inventory of major AI projects is maintained (e.g., in a spreadsheet). Some data quality checks are performed. No dedicated governance tools. | A centralized AI asset registry is deployed. Automated tools for monitoring model performance and data lineage are in use. A formal data governance program is in place. | A unified AI-GAM platform provides a real-time, single-pane-of-glass view of all AI assets and their performance, cost, and risk. Governance controls are automated. |
| Risk Management | Risk is addressed only after an incident occurs. No formal risk assessment process for AI. | Basic risk assessments are conducted for major, high-profile AI projects. Risk management is a manual, checklist-based activity. | A formal AI risk management framework (e.g., based on the NIST AI RMF) is adopted. Risk assessment and mitigation are required for all new AI projects. | Predictive risk analytics are used to anticipate potential model failures or ethical issues. Risk management is a continuous, data-driven, and proactive process. |
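The maturity table lends itself to a simple self-assessment: score each domain's current stage, set a target stage, and work on the widest gaps first. The sketch below is a hypothetical scoring helper, not part of the framework itself; treating unassessed domains as Stage 1 and ranking by gap size are assumptions made for illustration.

```python
# Stage numbers follow the four-stage maturity table above.
STAGES = {1: "Ad-Hoc/Reactive", 2: "Managed", 3: "Proactive",
          4: "Optimized/Transformative"}


def maturity_gaps(current: dict[str, int],
                  target: dict[str, int]) -> list[tuple[str, int]]:
    """Return (domain, stages_to_close) pairs, largest gap first.

    Domains missing from `current` are treated as Stage 1 (an assumption).
    """
    for stage in [*current.values(), *target.values()]:
        if stage not in STAGES:
            raise ValueError(f"stage must be 1-4, got {stage}")
    gaps = [(domain, goal - current.get(domain, 1))
            for domain, goal in target.items()]
    # Sort by gap size (descending), then by domain name for a stable order.
    return sorted((g for g in gaps if g[1] > 0), key=lambda g: (-g[1], g[0]))


current = {"Policy & Process": 1, "People & Culture": 2,
           "Technology & Data": 1, "Risk Management": 2}
target = {domain: 3 for domain in current}  # aim for Proactive everywhere
print(maturity_gaps(current, target))
# [('Policy & Process', 2), ('Technology & Data', 2), ('People & Culture', 1), ('Risk Management', 1)]
```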

Section 6: Demonstrating Value: Measuring ROI and Aligning with C-Suite Objectives

6.1 The ROI of AI Governance: From Cost Avoidance to Value Creation

Demonstrating a clear return on investment (ROI) for AI initiatives has proven challenging for many organizations. One study from 2023 found that enterprise-wide AI projects achieved a surprisingly low ROI of just 5.9% on average, despite significant capital investment. However, this figure masks a crucial reality: organizations that successfully mature their AI programs and embed strong governance are seeing far greater returns. More recent research reveals that early adopters of AI are realizing an average ROI of 41%, generating $1.41 in value for every $1 spent. The difference between stagnation and success often lies in the ability of governance to enable confident, responsible scaling.
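Assuming ROI is defined in the usual way as net return over cost, with V the total value generated and C the amount spent, the two figures above compare as follows:

```latex
\mathrm{ROI} = \frac{V - C}{C}, \qquad
\frac{1.41 - 1.00}{1.00} = 0.41 = 41\%, \qquad
\frac{1.059 - 1.00}{1.00} = 0.059 = 5.9\%
```

On this reading, the early adopters net about $0.41 per $1 invested, roughly seven times the $0.059 implied by the 2023 average.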

The ROI of AI governance itself can be difficult to quantify if viewed solely through a traditional financial lens. Its value extends beyond simple cost-benefit calculations. A holistic framework for understanding the ROI of AI ethics and governance considers three distinct pathways to value:

- Direct Economic Return: This includes the tangible, measurable financial benefits. It encompasses cost avoidance, such as preventing regulatory fines (for the most serious violations of the EU AI Act, up to €35 million or 7% of worldwide annual turnover, whichever is higher) and mitigating the financial fallout from operational failures caused by malfunctioning AI. It also includes cost savings from the operational efficiencies that governed AI enables and from optimizing AI spend by eliminating redundant or underperforming assets.

- Enhanced Capabilities: Robust governance, particularly strong data governance, is a direct enabler of better AI. High-quality, well-managed data leads to more accurate, reliable, and powerful AI models. This, in turn, leads to better business intelligence and more informed strategic decision-making across the entire organization.

- Reputational Gain: In an era of increasing public and regulatory scrutiny of AI, a demonstrable commitment to ethical and responsible practices becomes a significant competitive differentiator. Strong governance builds trust with customers, partners, and regulators, which can translate into enhanced brand equity, greater customer loyalty, and new business opportunities.
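The cost-avoidance pathway is easiest to size by computing the maximum regulatory exposure. Under Article 99 of the EU AI Act, the most serious violations (prohibited AI practices) carry fines of up to €35 million or 7% of total worldwide annual turnover, whichever is higher; for SMEs and start-ups the lower of the two applies. The sketch below models only that top tier as a simplified illustration; lower tiers for other violations are not covered, and the function name is hypothetical.

```python
def max_ai_act_fine_eur(worldwide_turnover_eur: float, sme: bool = False) -> float:
    """Upper bound of the fine for the most serious EU AI Act violations.

    The cap is EUR 35 million or 7% of total worldwide annual turnover,
    whichever is higher; for SMEs and start-ups it is whichever is lower.
    Other violation tiers have lower caps and are not modeled here.
    """
    fixed_cap = 35_000_000.0
    turnover_cap = 0.07 * worldwide_turnover_eur
    return min(fixed_cap, turnover_cap) if sme else max(fixed_cap, turnover_cap)


print(max_ai_act_fine_eur(2_000_000_000))          # turnover cap: about EUR 140 million
print(max_ai_act_fine_eur(100_000_000))            # fixed cap: EUR 35 million
print(max_ai_act_fine_eur(100_000_000, sme=True))  # SME rule: about EUR 7 million
```

For large enterprises the turnover-based cap dominates, which is why exposure scales with company size rather than stopping at a fixed amount.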

Ultimately, AI governance is the key to unlocking higher-order value from AI. The initial, most accessible ROI from AI often comes from automating simple, repetitive tasks. The most transformative value, however, comes from applying AI to core strategic decisions, such as market entry strategies, merger and acquisition analysis, or dynamic supply chain optimization. Organizations are often hesitant to entrust AI with such high-stakes decisions because of the significant risks of bias, error, and a lack of explainability. A robust AI-GAM framework directly mitigates these risks by ensuring fairness, transparency, robustness, and human oversight. By building this foundation of institutional trust, governance gives leadership the confidence required to deploy AI in these mission-critical, high-value domains. This reframes the ROI discussion from "How much does governance cost?" to "How much strategic value is inaccessible to us without it?"

6.2 The CFO Perspective

For the Chief Financial Officer, the AI-GAM framework is a critical tool for financial stewardship in the AI era.

- Optimizing Spend and Ensuring Value: The shift to consumption-based pricing for AI services creates significant financial uncertainty. AI-GAM provides the necessary visibility into these variable costs, enabling accurate forecasting and preventing budget overruns. By centralizing data and model management, it helps the organization train more efficient internal models, reducing reliance on expensive third-party APIs. The CFO's primary role is to ensure that massive AI investments translate into tangible business value, not just "science projects," and the governance framework provides the metrics and oversight to track and enforce this.

- Mitigating Financial Risk: The potential for multi-million-dollar fines for non-compliance with AI regulations is a primary concern for the CFO. A strong governance program is the first line of defense against these penalties. It also mitigates the significant financial impact of operational disruptions, lawsuits, and brand damage that can result from the failure of an ungoverned AI system.
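The forecasting point above can be illustrated with a deliberately simple model: fit a straight-line trend to past monthly AI spend and flag forecast months that would breach the budget. A linear trend is an assumption chosen for clarity; real consumption-based bills are often seasonal or jump when new workloads go live, so FinOps platforms typically use richer models. The function names and spend figures are hypothetical.

```python
def forecast_monthly_spend(history: list[float], months_ahead: int = 3) -> list[float]:
    """Project future monthly AI spend with an ordinary least-squares trend line."""
    n = len(history)
    if n < 2:
        raise ValueError("need at least two months of history")
    x_mean = (n - 1) / 2
    y_mean = sum(history) / n
    slope = (sum((x - x_mean) * (y - y_mean) for x, y in enumerate(history))
             / sum((x - x_mean) ** 2 for x in range(n)))
    intercept = y_mean - slope * x_mean
    # Month indices n, n+1, ... are the months after the last observed one.
    return [intercept + slope * (n + k) for k in range(months_ahead)]


def months_over_budget(forecast: list[float], monthly_budget: float) -> list[int]:
    """1-based offsets of forecast months that would exceed the budget."""
    return [i + 1 for i, spend in enumerate(forecast) if spend > monthly_budget]


spend = [10_000, 12_000, 14_000, 16_000]  # hypothetical monthly API bills (USD)
projection = forecast_monthly_spend(spend)
print(projection)                              # [18000.0, 20000.0, 22000.0]
print(months_over_budget(projection, 19_000))  # [2, 3]
```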

6.3 The CTO Perspective

For the Chief Technology Officer, AI-GAM is not a barrier to innovation but a crucial enabler of sustainable technological advancement.

- Enabling Innovation Safely: A well-defined governance framework provides clear "guardrails" for AI development. These guardrails do not stifle creativity; instead, they empower development teams to experiment, innovate, and move quickly, secure in the knowledge that they are operating within safe and acceptable boundaries. This prevents the organization from being paralyzed by the fear of unknown risks.

- Ensuring Scalability and Managing Technical Debt: Governance mandates the technical standards necessary for building scalable, robust, and interoperable AI solutions. It prevents the proliferation of one-off, siloed projects that cannot be integrated into the broader enterprise architecture. Crucially, the asset management component of AI-GAM actively combats the accumulation of "model sprawl" and "data debt," ensuring the long-term health, security, and maintainability of the enterprise AI ecosystem.

6.4 The Head of Sales Perspective

For the Head of Sales and other revenue leaders, governed AI is a powerful engine for growth and customer relationship management.

- Driving Revenue with Trusted AI: AI is transforming sales operations, with powerful applications in predictive forecasting, intelligent lead scoring, personalized deal coaching, and hyper-personalized customer outreach. Case studies report the impact: AI-powered tools have increased sales-ready results by 40%, boosted high-intent leads by 40%, and achieved sales forecasting accuracy of over 80%. Governance ensures that these tools are used effectively and ethically, maximizing their impact.

- Building Customer Trust as a Competitive Advantage: Customers are increasingly aware and skeptical of how companies use AI and their data. A transparent and demonstrable commitment to responsible AI, backed by a strong governance framework, becomes a powerful selling point. It builds the trust that is essential for long-term customer relationships and can be a key differentiator in a crowded market.

- Increasing Sales Team Efficiency: AI can automate many of the time-consuming, non-selling tasks that burden sales teams, such as summarizing meeting notes, drafting follow-up emails, and composing proposals. By freeing up this time, AI allows sellers to focus on what they do best: building relationships and engaging in high-value interactions with clients.

Section 7: Future Outlook: Navigating the Evolving Landscape of AI Assets and Governance

7.1 The Rise of Agentic AI and its Impact on Asset Management

The current landscape of AI, while transformative, is only a precursor to more advanced systems. The emergence of "agentic AI"—autonomous or semi-autonomous software programs that can learn, adapt, and perform complex tasks with minimal human intervention—represents the next frontier. These AI agents will not be passive tools but active participants in business processes, capable of making independent decisions, allocating resources, and interacting with other systems.

This evolution will profoundly impact the AI-GAM framework. The challenge will shift from managing a portfolio of predictive models to overseeing a fleet of autonomous software "employees." This will require new and more sophisticated forms of governance and asset management, including:

- Autonomous Agent Monitoring: How do you track the actions, decisions, and resource consumption of an autonomous agent in real-time?

- Lifecycle Management for Agents: What is the lifecycle of an AI agent? How is it commissioned, trained, updated, and eventually decommissioned?

- Accountability for Autonomous Actions: Who is accountable when an autonomous agent makes a costly error or violates policy?
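One possible starting point for the monitoring and accountability questions is to route every agent action through a gate that records who did what, when, and at what cost, and that refuses actions exceeding a per-agent budget. The sketch below is a hypothetical design, not an established standard; a production version would also log refused attempts, persist the log outside the agent's control, and map each agent to an accountable human owner.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class AgentAction:
    """Immutable audit record for one action an agent was allowed to take."""
    agent_id: str
    action: str
    cost_usd: float
    timestamp: datetime


@dataclass
class AgentMonitor:
    """Append-only action log with a per-agent spending cap (hypothetical)."""
    budgets_usd: dict[str, float]
    log: list[AgentAction] = field(default_factory=list)

    def spent(self, agent_id: str) -> float:
        return sum(a.cost_usd for a in self.log if a.agent_id == agent_id)

    def record(self, agent_id: str, action: str, cost_usd: float) -> bool:
        """Log the action if it fits the agent's budget; refuse it otherwise."""
        budget = self.budgets_usd.get(agent_id, 0.0)  # unregistered agents get no budget
        if self.spent(agent_id) + cost_usd > budget:
            return False  # caller should escalate to a human owner
        self.log.append(AgentAction(agent_id, action, cost_usd,
                                    datetime.now(timezone.utc)))
        return True


monitor = AgentMonitor(budgets_usd={"procurement-agent": 100.0})
print(monitor.record("procurement-agent", "order office supplies", 60.0))  # True
print(monitor.record("procurement-agent", "order new laptops", 50.0))      # False
print(monitor.record("unregistered-agent", "send email", 1.0))             # False
```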

Organizations will need to develop new control mechanisms, advanced auditing capabilities, and clear ethical guardrails to manage the risks and unlock the immense potential of a workforce augmented by AI agents.

7.2 The Future of AI Compliance: Harmonization and Specialization

The global regulatory landscape for AI will continue to mature and evolve. Two parallel trends are likely to emerge. First, there will be a gradual harmonization of regulations around a common set of core principles, largely mirroring those established by the OECD. This will simplify cross-border compliance for multinational corporations. Second, alongside this harmonization, there will be an increase in specialized, industry-specific regulations. Sectors like finance, healthcare, and transportation, where AI failures can have catastrophic consequences, will see the development of more stringent and domain-specific rules.

Organizations that have already adopted a flexible, principles-based AI-GAM framework will be best positioned to adapt to this complex and dynamic regulatory future. Their foundational commitment to fairness, transparency, and accountability will allow them to meet the spirit of new regulations, requiring only tactical adjustments to meet specific legal mechanisms rather than wholesale strategic overhauls.

7.3 Conclusion: Building a Resilient, Responsible, and High-Performing AI-Driven Enterprise

The central argument of this report is that the integration of AI Governance and Software Asset Management is no longer an optional best practice but a foundational business capability for any organization seeking to compete in the 21st century. The creation of the AI-GAM framework is a strategic imperative to manage a new class of powerful, dynamic, and high-value assets.

Ad-hoc approaches to AI are unsustainable and will inevitably lead to costly failures, regulatory penalties, and an erosion of stakeholder trust. A proactive, structured, and enterprise-wide governance program is the only viable path forward. By embarking on the implementation journey outlined in this report—moving from discovery and assessment to a fully scaled and continuously improving program—organizations can bridge the critical governance gap.

Ultimately, companies that master the discipline of AI-GAM will achieve a dual advantage. They will not only mitigate the profound risks associated with this transformative technology but will also build a sustainable competitive edge. By creating a foundation of trust and control, they will empower their teams to harness the full power of AI responsibly, confidently, and effectively, building a more resilient, innovative, and high-performing enterprise.

About Baytech

At Baytech Consulting, we specialize in guiding businesses through this process, helping you build scalable, efficient, and high-performing software that evolves with your needs. Our MVP-first approach helps our clients minimize upfront costs and maximize ROI. Ready to take the next step in your software development journey? Contact us today to learn how we can help you achieve your goals with a phased development approach.

About the Author

Bryan Reynolds is an accomplished technology executive with more than 25 years of experience leading innovation in the software industry. As the CEO and founder of Baytech Consulting, he has built a reputation for delivering custom software solutions that help businesses streamline operations, enhance customer experiences, and drive growth.

Bryan’s expertise spans custom software development, cloud infrastructure, artificial intelligence, and strategic business consulting, making him a trusted advisor and thought leader across a wide range of industries.