ChatGPT 5: From Death Star to Easy-Bake Oven

August 22, 2025 / Bryan Reynolds

The Great AI Reality Check

The hype arrived with the force of a supernova. When OpenAI CEO Sam Altman teased the release of GPT-5 with a "Death Star" image, expectations for a revolutionary leap in artificial intelligence became astronomical. Executives across industries braced for a tool that would not just augment workflows but redefine work itself.

Then, reality landed—not with a cinematic boom, but with the quiet, underwhelming "ping of a toaster".

For many business leaders, the initial thrill of generative AI has curdled into a nagging sense of disappointment. The promised revolution feels more like a series of frustrating experiments and stalled projects. This sentiment is not just anecdotal; it is a market-wide reality check captured in stark numbers. A March 2025 report from S&P Global Market Intelligence revealed that a staggering 42% of companies abandoned most of their AI initiatives this year, a dramatic spike from just 17% in 2024. On average, organizations scrapped 46% of their AI proofs-of-concept before they ever reached production.

This widespread disillusionment is not a sign that AI has failed. Rather, it marks a predictable and necessary phase in the adoption of any transformative technology: the Trough of Disillusionment. The initial excitement, fueled by a tool designed for mass appeal, has collided with the complex, high-stakes realities of enterprise operations. The central question now echoing in boardrooms is: "We were promised a game-changer. Why does this feel like a dud?"

This report dissects the reasons behind this disappointment, explores the hidden costs and roadblocks that blindsided many organizations, and charts a strategic path forward—away from public novelties and toward proprietary, value-generating intelligence.

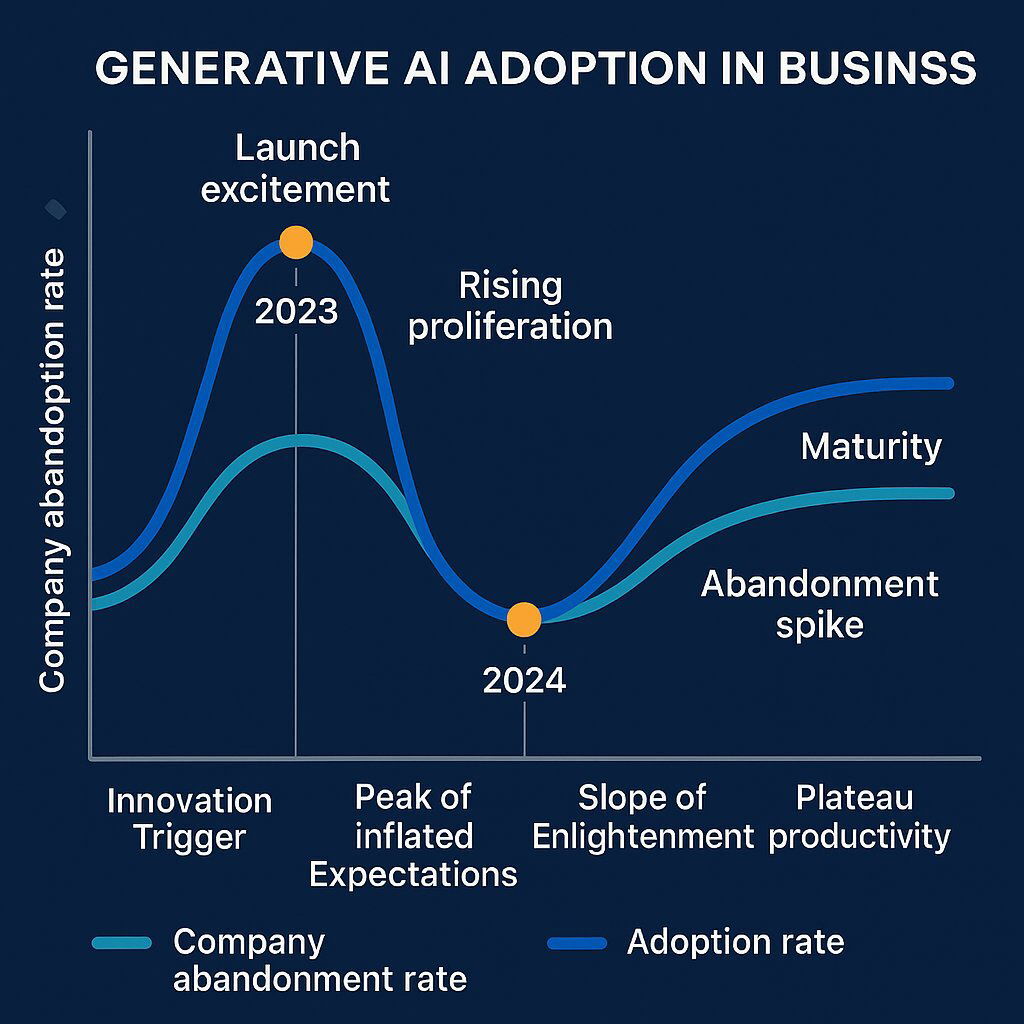

Visual Element: The Enterprise AI Hype Cycle (2023-2025)

The journey of generative AI in the enterprise can be mapped onto the classic technology hype cycle, explaining the current climate of disappointment as a natural market correction.

A curious paradox defines the current moment. Even as project failure rates soar, enterprise AI adoption has doubled to 65% in the last year, and private investment in generative AI has climbed to $33.9 billion. This seeming contradiction reveals a critical market shift. The failures are not deterring investment; they are redirecting it. The initial wave of abandoned projects represents the expensive but necessary end of naive experimentation with public, general-purpose tools. The new, smarter wave of investment is flowing toward strategic, production-grade AI that directly addresses the shortcomings revealed by those early failures. The "dud" phase is not an ending, but a filter, separating the casual experimenters from the serious, strategic adopters.

Why Does ChatGPT Feel Like It's Failing My Business? The Three Core Disconnects

The disappointment with public large language models (LLMs) like ChatGPT in a business setting stems from a fundamental mismatch between the tool's design and the enterprise's needs. Three core disconnects are responsible for the majority of failures: accuracy, security, and specificity.

The Hallucination Hangover: When "Plausibly Wrong" Isn't Good Enough

ChatGPT is engineered for plausibility, not factual correctness. It is a pattern-matching engine that prioritizes generating a fluent, "complete-sounding" answer over one that is verifiably true. This leads to the now-infamous phenomenon of "hallucinations"—fabricated information, citations, or data points that sound credible but are entirely false.

For a consumer asking for a poem or a recipe, this is a novelty. For a business, it is a catastrophic flaw. One study of LLM performance on real-world business data from insurance companies found a mere 22% accuracy rate for basic queries. For mid- and expert-level requests, the kind that drive real business decisions, accuracy fell to zero. The risk is greatest in technical fields, where the model can generate subtly flawed code, misinterpret complex financial data, or give incorrect medical or legal information, creating significant liability. Because the model also stumbles on multi-step logical reasoning and on common-sense inferences a human would make without thinking, it is an unreliable partner for any task where accuracy is non-negotiable.
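An organization that wants its own version of these numbers can score model answers against a set of questions whose correct answers it already knows, grouped by difficulty. The sketch below is a hypothetical illustration of that bookkeeping, not the method used in the study cited above. It assumes an invented `EvalCase` record holding a difficulty tier, an expert-verified answer, and the model's answer. Its exact-match comparison is a deliberate simplification, since real evaluations usually need expert review or a scoring rubric.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class EvalCase:
    tier: str      # hypothetical difficulty label, e.g. "basic" or "expert"
    expected: str  # answer verified by a domain expert
    actual: str    # answer the model returned


def normalize(text: str) -> str:
    # Case- and whitespace-insensitive comparison; a stand-in for real grading.
    return " ".join(text.lower().split())


def accuracy_by_tier(cases: list[EvalCase]) -> dict[str, float]:
    """Fraction of correct answers per difficulty tier."""
    correct: dict[str, int] = defaultdict(int)
    total: dict[str, int] = defaultdict(int)
    for case in cases:
        total[case.tier] += 1
        if normalize(case.actual) == normalize(case.expected):
            correct[case.tier] += 1
    return {tier: correct[tier] / total[tier] for tier in total}


if __name__ == "__main__":
    # Made-up cases for illustration only; not data from any study.
    cases = [
        EvalCase("basic", "42 open claims", "42 open claims"),
        EvalCase("basic", "Policy P-7 lapsed in May", "Policy P-7 is active"),
        EvalCase("expert", "Reserve shortfall of $1.2M", "No shortfall found"),
    ]
    print(accuracy_by_tier(cases))  # {'basic': 0.5, 'expert': 0.0}
```

Splitting results by tier matters because, as the study above suggests, a model that looks passable on simple lookups can fail completely on the expert-level questions that carry real business risk.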

The Black Box Security Nightmare: Your Data, Their Model

The most significant risk for any enterprise using a public LLM is the "black box" nature of data handling. When employees input sensitive information into a public tool like ChatGPT—whether it's customer data, product roadmaps, source code, or internal financial reports—that proprietary data can be absorbed into the model's training set. The organization loses all control over how that information is stored, used, or potentially surfaced in responses to other users, including competitors.

This creates an unacceptable level of risk for data leakage, intellectual property contamination, and breaches of client confidentiality agreements. According to Gartner, 73% of enterprises experienced at least one AI-related security incident in the past year alone.

The 2023 Samsung data leak serves as a stark case study of this risk in action. Within weeks of adopting the tool, employees inadvertently leaked highly sensitive information, including proprietary source code entered to debug an error and confidential meeting notes transcribed to create summaries. The incident forced Samsung to ban the use of public AI tools and invest in developing its own secure, in-house alternative. This real-world example demonstrates how quickly the convenience of a public tool can devolve into a multimillion-dollar security crisis.

The "One-Size-Fits-None" Problem: Generalist vs. Specialist

ChatGPT is a generalist, trained on the vast, chaotic, and often unreliable expanse of the public internet. It lacks the specialized domain knowledge, industry-specific jargon, and—most critically—the internal context of a specific business. This results in outputs that are often generic, superficial, and ultimately useless for specialized tasks in regulated or complex fields like finance, healthcare, or advanced manufacturing.

This is what MIT Sloan Management Review calls the "knowledge capture problem". An LLM's value is directly tied to the quality and relevance of the proprietary data it can access. However, feeding an external model with the right internal data is a monumental task. It requires sorting through vast quantities of information, cleaning and structuring it, and continuously curating it—an expensive and resource-intensive process most companies are unprepared for. Without this deep, proprietary context, the model simply cannot "read between the lines" or provide nuanced insights for niche topics.

These three problems do not exist in isolation; they create a compounding cycle of failure in a business environment. An employee, frustrated by a generic or inaccurate answer, attempts to "fix" it by providing more detailed, proprietary company data in a follow-up prompt. This single action exposes the organization to the severe security risks of data leakage. Even with this new data, the generalist model still lacks the deep domain expertise to interpret it correctly, often leading to another contextually flawed output. The net result is a process where an employee has wasted valuable time, created a major security vulnerability, and still ended up with an unusable result. This cycle makes the tool not just ineffective but actively counterproductive and dangerous.

What Are the Hidden Costs and Roadblocks I'm Not Seeing?

The initial appeal of tools like ChatGPT was their perceived low cost and ease of use. However, organizations that moved from casual experimentation to serious implementation quickly discovered a host of hidden costs and operational roadblocks that turned promising pilots into budgetary black holes.

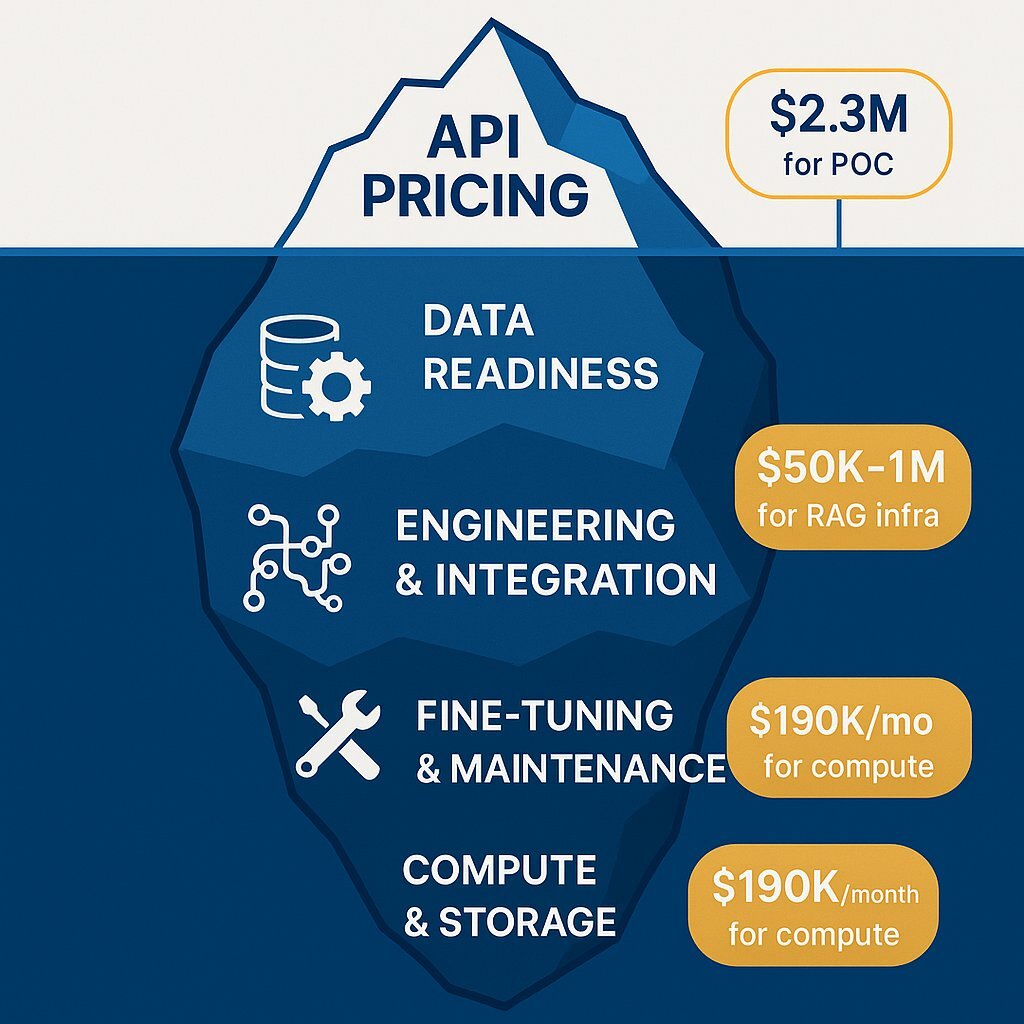

The ROI Mirage: Unmasking the True Total Cost of Ownership (TCO)

The pay-as-you-go API pricing model is deceptive. It represents the tip of an iceberg of costs that emerge during the enterprise implementation lifecycle. Gartner warns that organizations that fail to understand how these costs scale can make a 500% to 1,000% error in their budget calculations. The average proof-of-concept phase alone costs an enterprise $2.3 million.

The most significant hidden costs include:

- Data Readiness: AI systems are only as good as the data they are trained on. According to Gartner, 39% of companies cite a lack of quality data as a primary barrier to AI implementation. The process of cleaning, labeling, structuring, and maintaining high-quality enterprise data is a massive, ongoing expense that often consumes more of the budget than the AI model development itself. This is the foundational "data plumbing" that must be fixed before any real value can be generated.

- Integration and Engineering: LLMs do not operate in a vacuum. Integrating them into existing enterprise systems like CRMs, ERPs, and internal dashboards requires a significant engineering effort to build APIs, data pipelines, and user interfaces. This work requires specialized MLOps (Machine Learning Operations) talent, which is scarce and expensive, to ensure models can be deployed, monitored, and maintained in a production environment.

- Fine-tuning and Maintenance: An off-the-shelf model is not a "set it and forget it" solution. It requires continuous fine-tuning with company-specific data to remain relevant and accurate. Models degrade over time due to "concept drift," necessitating ongoing maintenance and retraining, which adds recurring labor and compute costs.

- Compute and Storage: To make a general model useful, many companies turn to Retrieval-Augmented Generation (RAG), a technique that grounds the AI in a company's internal knowledge base. According to Gartner, simply building the infrastructure for a RAG solution can cost between $750,000 and $1,000,000. Once operational, the cost of running queries can be exorbitant. One estimate for a mid-sized enterprise suggests that processing 200,000 queries per month against a large internal database could cost over $190,000 per month.
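The core RAG loop can be sketched in a few lines: find the internal documents most relevant to a question, then place them in the prompt so the model answers from company text rather than from its general training. The sketch below is illustrative only. It scores documents by simple word overlap to stay dependency-free, whereas production RAG systems typically use embeddings and a vector index. It also stops at building the prompt, because the call to a model depends on the vendor. The functions `retrieve` and `build_prompt` and the sample knowledge base are hypothetical.

```python
import re


def tokenize(text: str) -> set[str]:
    # Lowercase word set; production systems usually compare embeddings instead.
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: dict[str, str], k: int = 2) -> list[str]:
    """Return ids of up to k documents sharing the most words with the query."""
    q = tokenize(query)
    scored = [(len(q & tokenize(body)), doc_id) for doc_id, body in documents.items()]
    scored.sort(key=lambda pair: (-pair[0], pair[1]))  # best score first, then by id
    return [doc_id for score, doc_id in scored[:k] if score > 0]


def build_prompt(query: str, documents: dict[str, str], k: int = 2) -> str:
    """Ground the question in retrieved internal text before it reaches a model."""
    context = "\n".join(f"[{d}] {documents[d]}" for d in retrieve(query, documents, k))
    return (
        "Answer using only the context below. If the answer is not there, say so.\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


if __name__ == "__main__":
    # Hypothetical internal knowledge base.
    kb = {
        "hr-01": "Employees accrue 1.5 vacation days per month.",
        "it-07": "VPN access requires a hardware security key.",
        "fin-03": "Expense reports are due within 30 days of purchase.",
    }
    print(build_prompt("How many vacation days do employees accrue?", kb))
```

In a real deployment, the document store, the indexing pipeline that keeps it current, and the model call made for every query are the pieces that carry the infrastructure and running costs described above.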

The Integration Quagmire: Why You Can't Just "Plug In" an API

Successful AI implementation is not an IT project; it is a fundamental business transformation that requires redesigning end-to-end workflows. McKinsey has observed that delegating AI initiatives solely to the IT department is a "recipe for failure" because it overlooks the deep operational and strategic changes required to extract value.

Key integration roadblocks include:

- Siloed Teams: Many organizations have established AI centers of excellence that operate independently from core business and IT functions. While useful for rapid experimentation, this autonomy often results in solutions that are poorly integrated with enterprise systems and impossible to scale. A recent survey found that 72% of executives observe their AI applications being developed in silos, creating friction and duplicated effort.

- Legacy Systems: Integrating modern, cloud-native AI services with aging, on-premise enterprise architecture is a complex and costly challenge that API vendors often gloss over in their marketing.

- The MLOps Talent Gap: A critical bottleneck for many firms is the lack of MLOps engineers. While data scientists can build models, MLOps specialists are required to industrialize them—automating deployment, monitoring performance, and ensuring reliability at scale. This talent gap is a primary reason why many successful pilots fail to cross the chasm into production.
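Monitoring performance, including catching the concept drift mentioned earlier, can start with something as simple as recording whether recent answers were judged correct and raising a flag when rolling accuracy slips below an agreed baseline. The class below is a hypothetical sketch of that idea, not a description of any particular MLOps product. The baseline, window size, and margin are assumptions a team would set for its own use case, and the sketch presumes some upstream process (human review or automated checks) supplies the correct/incorrect signal.

```python
from collections import deque


class AccuracyDriftMonitor:
    """Hypothetical monitor: flags drift when rolling accuracy drops below baseline - margin."""

    def __init__(self, baseline: float, window: int = 100, margin: float = 0.05) -> None:
        self.baseline = baseline              # accuracy measured when the model was accepted
        self.margin = margin                  # tolerated drop before alerting
        self.outcomes = deque(maxlen=window)  # most recent correct/incorrect results

    def record(self, correct: bool) -> None:
        # Once the window is full, the oldest result falls off automatically.
        self.outcomes.append(correct)

    def rolling_accuracy(self) -> float:
        return sum(self.outcomes) / len(self.outcomes) if self.outcomes else 0.0

    def drifting(self) -> bool:
        # Wait for a full window so a handful of early misses cannot trigger an alert.
        if len(self.outcomes) < self.outcomes.maxlen:
            return False
        return self.rolling_accuracy() < self.baseline - self.margin


if __name__ == "__main__":
    monitor = AccuracyDriftMonitor(baseline=0.90, window=10)
    for outcome in [True] * 10 + [False] * 3:
        monitor.record(outcome)
    print(monitor.rolling_accuracy(), monitor.drifting())  # 0.7 True
```

An alert from a check like this is the point at which the retraining and fine-tuning work described above gets scheduled. Building and owning that loop is exactly the MLOps work that requires dedicated staff.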

The People Problem: Culture, Conflict, and Change Management

Ultimately, the greatest barrier to AI adoption is often human, not technical. The introduction of a technology perceived as a threat to job security can create powerful organizational inertia and cultural resistance, particularly from middle management.

This resistance can manifest as more than just slow adoption. A 2025 survey of C-suite executives delivered a shocking finding: 42% reported that the process of adopting generative AI is "tearing their company apart". The technology's transformative potential challenges existing power dynamics and workflows, leading to internal power struggles, conflicts, and even sabotage. Furthermore, 72% of organizations acknowledge that AI has exposed and exacerbated existing technical skills gaps within their workforce, creating a sense of anxiety and inadequacy among employees.

If Not ChatGPT, Then What? The Path to Real AI Value

The failure of generic, off-the-shelf tools does not signal the end of the AI journey. Instead, it marks the beginning of a more mature, strategic approach. The path to generating real, defensible business value lies in shifting from being a consumer of public AI to becoming the owner of private, proprietary intelligence.

From Public Prompts to Private Intelligence: Owning Your AI Advantage

The fundamental strategic error was treating AI as a simple productivity tool that could be licensed like any other software. Competitors have access to the exact same public LLMs, meaning any efficiency gains are temporary and easily replicated. True, sustainable competitive advantage comes from building a proprietary AI capability that is uniquely trained on a company's own data and tailored to its specific workflows. This transforms AI from a commodity into a core strategic asset—a defensible moat that competitors cannot easily cross. This means moving away from public prompts and toward private, enterprise-grade intelligence that is owned and controlled by the organization.

The Case for Customization

This is where custom-built AI solutions come in. Unlike off-the-shelf models, custom solutions are designed from the ground up to address a company's specific data, processes, and strategic goals. This tailored approach enables capabilities that are impossible with generic tools, such as the hyper-personalization of customer experiences, the automation of unique and complex internal workflows, and the generation of deep, proprietary insights from internal data.

This journey from generic tools to strategic assets is complex, requiring a blend of deep technical expertise and sharp business acumen. It is a journey where specialist firms like Baytech Consulting guide businesses, helping them architect and develop bespoke AI solutions that are hard-wired to their specific operational data and goals. They bridge the gap between off-the-shelf limitations and the tangible value of a truly proprietary AI engine.

While the upfront capital expenditure for custom development is higher, it represents a long-term investment with a much clearer and more predictable path to ROI. This contrasts sharply with the escalating and unpredictable operational expenses of high-volume API usage, which can quickly outpace the initial development cost of a custom solution without ever creating a lasting asset.
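The trade-off between a one-time build cost and recurring API spend reduces to a break-even calculation. If a custom system costs `capex` up front plus `custom_monthly` to run, and API usage costs `api_monthly`, cumulative costs meet after `capex / (api_monthly - custom_monthly)` months, provided the API bill is the larger of the two monthly costs. The sketch below rounds up to the first whole month. Its example inputs are assumptions. The $190,000 monthly figure reuses the RAG query estimate cited earlier as a stand-in for API spend. The $1.5 million build cost and $40,000 monthly running cost for the custom system are purely hypothetical.

```python
import math
from typing import Optional


def breakeven_month(capex: float, custom_monthly: float, api_monthly: float) -> Optional[int]:
    """First whole month in which cumulative custom cost no longer exceeds cumulative API cost.

    custom(m) = capex + custom_monthly * m
    api(m)    = api_monthly * m
    The two lines meet at m = capex / (api_monthly - custom_monthly).
    """
    monthly_savings = api_monthly - custom_monthly
    if monthly_savings <= 0:
        return None  # the custom build never pays back
    return math.ceil(capex / monthly_savings)


if __name__ == "__main__":
    # Hypothetical inputs: $1.5M build, $40k/month to run, versus $190k/month of API spend.
    print(breakeven_month(capex=1_500_000, custom_monthly=40_000, api_monthly=190_000))  # 10
```

With these made-up numbers, the custom system pays for itself in month 10. If the API bill were no higher than the custom system's running cost, the function returns `None`, because the build would never pay back. The real answer depends entirely on an organization's own volumes and quotes.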

Table: Strategic AI Comparison: Public LLM APIs vs. Custom-Built Solutions

| Feature | Public LLM APIs (e.g., ChatGPT) | Custom AI Solution (e.g., via Baytech Consulting) |
|---|---|---|
| Data Security & Privacy | High risk; data may be used for training, vulnerable to leaks | Full control; on-premise or private cloud options ensure compliance (HIPAA, GDPR) |
| Performance & Accuracy | Generalist; prone to hallucinations; as low as 22% accuracy on business data | Domain-specific; trained on proprietary data for high accuracy in niche tasks |
| Cost Structure | Low entry cost; high and unpredictable operational expenses (OpEx) at scale | High initial capital expenditure (CapEx); lower and predictable long-term TCO |
| Integration | Generic API; requires significant engineering effort for deep integration with legacy systems | Designed for the existing tech stack; enables seamless integration with core business systems |
| Competitive Advantage | None; the same tool is available to all competitors, offering no unique edge | High; creates a proprietary, defensible asset that generates unique insights and capabilities |

Think Smaller, Win Bigger: The Emerging Power of Specialized Models (SLMs)

The future of enterprise AI is not just custom; it is also smaller. The industry is rapidly moving beyond the monolithic, "one-model-to-rule-them-all" approach and toward small language models (SLMs): leaner, more efficient models that are "right-sized" for specific business tasks. For the vast majority of enterprise use cases, deploying a massive, generalist LLM is expensive and inefficient overkill.

SLMs offer several compelling advantages for business applications:

- Cost and Efficiency: SLMs are 10 to 30 times more efficient in terms of energy consumption and compute costs, dramatically lowering the operational expense of running AI at scale.

- Speed and Latency: Their smaller size allows for faster processing and lower latency, which is critical for real-time applications like customer service bots or operational control systems.

- Accuracy and Control: Because they are focused on a narrow domain, SLMs are easier to fine-tune and align with specific business rules. This reduces the likelihood of hallucinations and makes their outputs more predictable and auditable—a vital requirement for regulated industries like finance and healthcare.

- Enhanced Security: SLMs are small enough to be run on-device or within a company's private cloud infrastructure, eliminating the need to send sensitive data to third-party vendors and greatly enhancing data sovereignty.
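The 10x to 30x efficiency claim translates directly into a cost range. As a rough illustration, the sketch below applies it to the $190,000-per-month query estimate cited earlier, which works out to $0.95 per query across 200,000 queries. It makes a generous assumption: that the whole bill is model compute. In a real RAG deployment, retrieval, storage, and engineering costs would not shrink with model size, so actual savings would be smaller. The function name `slm_cost_range` is hypothetical.

```python
def slm_cost_range(
    llm_monthly_cost: float,
    monthly_queries: int,
    low_factor: float = 10.0,
    high_factor: float = 30.0,
) -> dict[str, tuple[float, float]]:
    """(best, worst) monthly and per-query cost if compute is low_factor..high_factor times cheaper.

    Assumes, optimistically, that the entire llm_monthly_cost is model compute.
    """
    best_monthly = llm_monthly_cost / high_factor   # most optimistic efficiency gain
    worst_monthly = llm_monthly_cost / low_factor   # least optimistic efficiency gain
    return {
        "monthly": (best_monthly, worst_monthly),
        "per_query": (best_monthly / monthly_queries, worst_monthly / monthly_queries),
    }


if __name__ == "__main__":
    costs = slm_cost_range(190_000, 200_000)
    best, worst = costs["monthly"]
    best_q, worst_q = costs["per_query"]
    print(f"Monthly: ${best:,.0f} to ${worst:,.0f}")
    print(f"Per query: ${best_q:.3f} to ${worst_q:.3f}")
```

Under that optimistic assumption, the monthly bill would fall to roughly $6,300 to $19,000. Treat that range as a ceiling on the savings rather than a forecast.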

Conclusion: Your Disappointment Is a Sign of Maturity

The widespread disappointment with ChatGPT is not a dead end for enterprise AI. It is a critical and clarifying turning point. It signals the end of the hype-driven, low-effort experimentation phase and the beginning of a mature, strategic approach to building real business value with artificial intelligence.

The organizations that are already generating significant returns from AI are those that have moved beyond asking, "What can this public tool do for me?" and are instead asking, "What proprietary intelligence must we build to win in our market?" They understand that AI is not a plug-and-play technology but a core capability that must be woven into the fabric of the business.

The "dud" wasn't AI; it was the belief that a single, public tool could solve every unique business challenge. The real work begins now. The leaders of the next decade will be defined not by how they used ChatGPT, but by the custom intelligence they chose to build instead.

About Baytech

At Baytech Consulting, we specialize in guiding businesses through this process, helping you build scalable, efficient, and high-performing software that evolves with your needs. Our MVP-first approach helps our clients minimize upfront costs and maximize ROI. Ready to take the next step in your software development journey? Contact us today to learn how we can help you achieve your goals with a phased development approach.

About the Author

Bryan Reynolds is an accomplished technology executive with more than 25 years of experience leading innovation in the software industry. As the CEO and founder of Baytech Consulting, he has built a reputation for delivering custom software solutions that help businesses streamline operations, enhance customer experiences, and drive growth.

Bryan’s expertise spans custom software development, cloud infrastructure, artificial intelligence, and strategic business consulting, making him a trusted advisor and thought leader across a wide range of industries.