ChatGPT Ate My Brain: The Hidden Cost of Outsourcing Thinking

August 21, 2025 / Bryan Reynolds

The Executive's Dilemma: Is Your AI Strategy Boosting Productivity or Draining Intelligence?

The New Line Item on Your Risk Register: Cognitive Decline

The pressure on executive leadership to integrate artificial intelligence is no longer a future concern; it is a present-day imperative. Across the B2B landscape, the mandate is clear: adopt AI or risk obsolescence. The productivity gains are not just theoretical; they are documented, substantial, and reshaping entire industries. In manufacturing, companies like General Electric have leveraged predictive AI platforms to slash equipment downtime by a staggering 20%. In enterprise SaaS, firms like Snowflake have seen a 300% surge in target account engagement by deploying AI-driven marketing strategies. With 78% of companies now using AI in at least one business function, the message from the market is unambiguous: AI is the new engine of competitive advantage.

Yet, as organizations race to deploy these powerful tools, a new, less-discussed risk is quietly emerging on the corporate horizon. It is a risk that targets not the balance sheet or the supply chain, but the very cognitive capital of the workforce. The question, once relegated to the realm of science fiction, must now be asked in the boardroom: Does AI rot your brain? This is not a sensationalist headline but a serious strategic inquiry prompted by a wave of new research. For leaders focused on long-term value creation, it represents a critical examination of workforce resilience, innovation capacity, and the hidden costs of unexamined technological adoption.

The catalyst for this urgent conversation is a groundbreaking, if not yet formally peer-reviewed, study from the MIT Media Lab. When a globally respected institution releases findings that participants exclusively using tools like ChatGPT "consistently underperformed at neural, linguistic, and behavioral levels," it transcends academic debate and becomes a flashing indicator on the corporate dashboard. The study's conclusions are stark: prolonged, uncritical use of generative AI was correlated with weaker brain connectivity, diminished memory retention, and a fading sense of ownership over one's work. For business leaders, this research introduces a novel and profound variable into the AI adoption equation.

The implications extend far beyond individual employee well-being. The core issue must be reframed from a health concern into a strategic business risk. The cognitive capabilities of a workforce are not a static asset; they are a dynamic resource that fuels every aspect of the enterprise. When critical thinking, memory, and creativity begin to erode on an individual level, the aggregate effect is a degradation of the organization's collective intelligence. This decline directly translates to a diminished capacity for the very functions that define competitive advantage in a volatile global market: innovation, complex problem-solving, strategic foresight, and organizational adaptability. Therefore, the question of cognitive decline is not a medical one for executives, but a fundamental challenge of talent management, risk assessment, and the long-term viability of their company's intellectual capital.

Understanding "Cognitive Debt": The Science Behind the Headlines

To grasp the strategic implications of AI on the workforce, leaders must first understand the underlying cognitive mechanisms at play. The recent headlines are not born from abstract fears but from observable, measurable phenomena rooted in the brain's fundamental operating principles. Three core concepts are essential: cognitive offloading, neuroplasticity, and digital amnesia.

The Brain's Efficiency-Seeking Mechanisms

Cognitive Offloading is the act of delegating a mental task to an external tool to reduce cognitive load. This is not a new behavior; humans have used calculators to offload mental arithmetic and GPS to offload spatial navigation for decades. However, generative AI represents a quantum leap in the scale and scope of this offloading. It moves beyond simple memory or calculation to offload complex reasoning, analysis, and synthesis. When an employee asks an AI to summarize a report, draft a strategy, or write code, they are not just offloading a task; they are offloading the thinking process itself. For a deeper look at how decades-old business processes are transforming with new technology, see our discussion of using AI to unlock corporate knowledge and eliminate document chaos.

This offloading directly interacts with Neuroplasticity, the brain's lifelong ability to reorganize itself by forming new neural connections. Often described by the maxim "use it or lose it," this principle dictates that neural pathways that are frequently activated become stronger and more efficient, while those that lie dormant weaken and atrophy over time. Just as an unused muscle deteriorates, cognitive skills that are consistently offloaded to AI risk becoming less accessible and robust. The mental effort required for deep thinking, problem-solving, and creative ideation is the very exercise that strengthens the neural circuits responsible for these higher-order functions.

The most immediate and observable consequence of this dynamic is Digital Amnesia. First identified as the "Google Effect," this is the tendency to forget information that is readily available through an external search. Generative AI supercharges this phenomenon. It doesn't just store information; it retrieves, synthesizes, and presents it in a digestible format, further reducing the brain's need to engage in the effortful processes of encoding, consolidation, and recall that build long-term memory.

The MIT Study: A Look Under the Hood

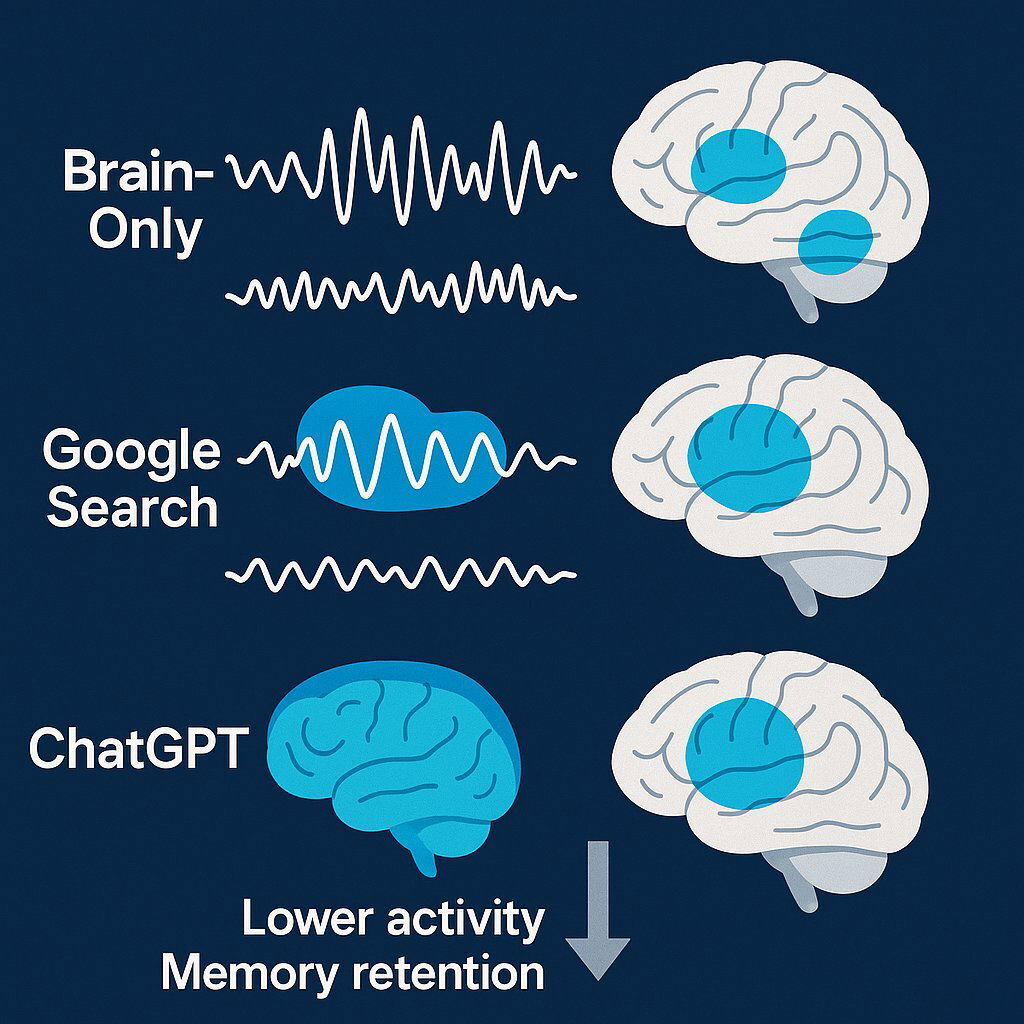

The MIT Media Lab study provided a powerful, real-world demonstration of these principles in action. Researchers divided participants into three groups tasked with writing essays over several months: one group used only their brains, another used Google Search, and the third used ChatGPT. By monitoring brain activity with electroencephalograms (EEGs), they were able to quantify the cognitive cost of AI reliance.

The results were striking. The ChatGPT group exhibited the lowest levels of brain engagement. Specifically, they showed weaker alpha and theta brain waves, which are associated with memory processing, creativity, and deep thought. Measured neural activity in key networks responsible for memory, attention, and executive function decreased over the course of the study. The behavioral outcomes were just as concerning: 83% of the ChatGPT group could not recall key points from the essays they had submitted, and human evaluators described their writing as homogeneous and "soulless".

This led the researchers to coin a term perfectly suited for the boardroom: "Cognitive Debt". It describes the accumulation of long-term cognitive costs—such as dulled critical thinking, impaired memory, and reduced creativity—in exchange for the short-term benefits of speed and efficiency. Like financial debt, the convenience is immediate, but the compounding interest can be debilitating over time.

The Lingering Effects and a Critical Nuance

Two deeper findings from the research demand executive attention. First is the persistence of the cognitive decline. The sluggish brain activity and weaker neural connectivity measured in the AI-only group continued long after the study was completed. When these participants were later asked to perform tasks without AI, their cognitive engagement failed to "catch up" to the levels of those who had consistently relied on their own brains. This suggests the issue is not merely a temporary laziness that can be shaken off, but a potential weakening of neural pathways due to sustained disuse. For businesses, this has profound implications. It means that simply removing an AI tool or conducting a one-off "critical thinking" workshop may be insufficient to reverse the effects. The initial choice of AI strategy and tool design is therefore far more critical than previously understood, as it may be shaping the baseline cognitive capacity of the workforce in a lasting way.

Second, a more nuanced interpretation of the findings offers a path away from alarmism and toward a pragmatic solution. While headlines may suggest permanent "brain rot," a critical analysis of the study proposes a more precise diagnosis: the issue is one of practice, not necessarily permanent impairment. The cognitive circuits of the AI users were not necessarily damaged; they simply were not being activated or "switched on" for the task at hand. The availability of the AI tool removed the need to engage in deep thought. This distinction is crucial. "Impairment" suggests an irreversible condition, while "inactivity" frames the problem as a lack of exercise. This reframes the entire challenge for leadership. It is not an unsolvable neurological crisis but an actionable imperative to design work, workflows, and technology that intentionally create the conditions for rigorous cognitive exercise.

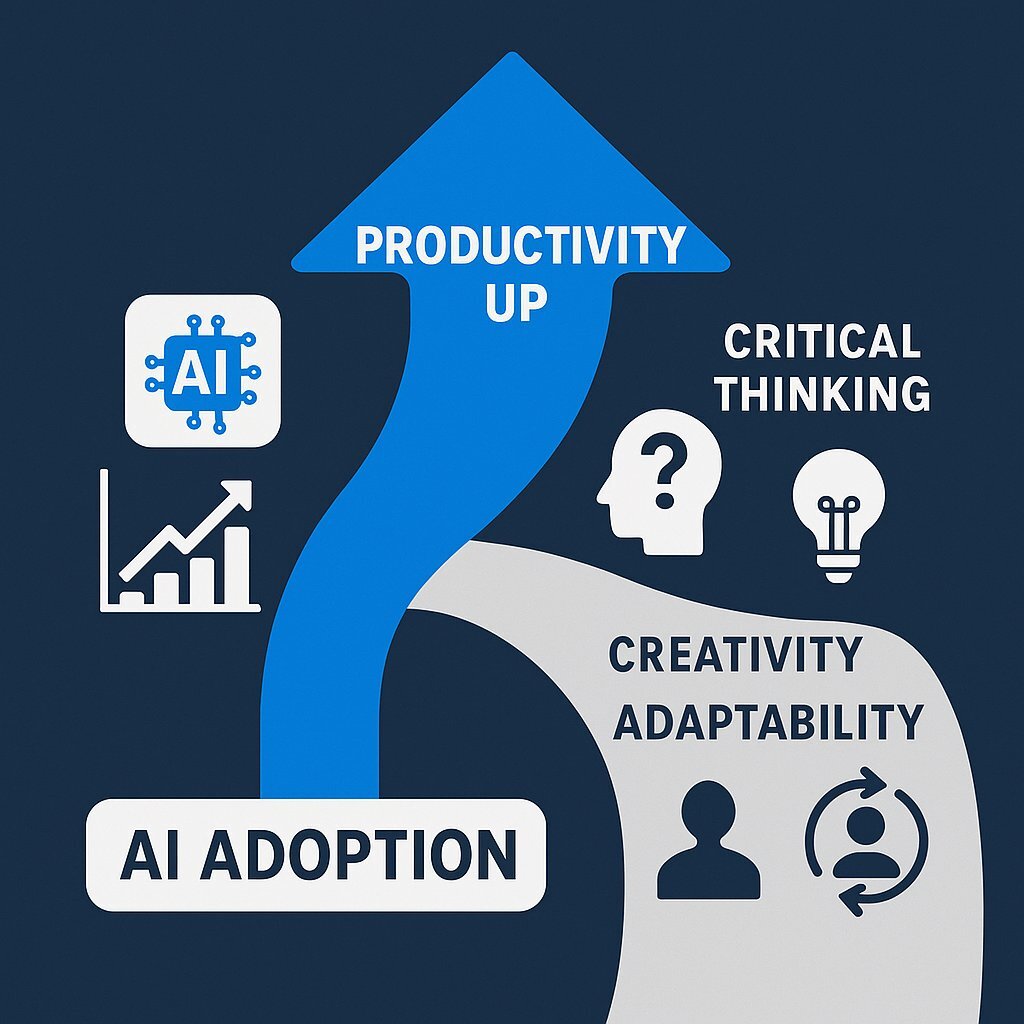

The Productivity Paradox: Are We Automating Our Own Obsolescence?

The central dilemma for modern leadership is a stark productivity paradox. AI tools deliver undeniable gains in efficiency, allowing teams to complete tasks faster and at a greater scale. Yet, this efficiency comes at a "cognitive cost". Organizations investing millions in AI platforms risk simultaneously undermining the very human capabilities—critical thinking, creative problem-solving, and ethical judgment—that are essential for using those tools wisely and maintaining a long-term competitive advantage. This is the high-stakes trade-off that defines the current technological era: the pursuit of short-term productivity may be inadvertently financing the long-term obsolescence of our workforce's core competencies.

The Erosion of Core Business Competencies

The impact is not uniform; it targets the specific higher-order skills that differentiate market leaders from followers. Yet this trend is not limited to AI chatbots or content generation — it extends into data-driven transformation initiatives across every sector. That’s why understanding how executives can successfully integrate AI at scale (without shortchanging talent development) has become a central strategic question.

- Critical Thinking: Over-reliance on AI fosters a culture of passive consumption rather than active analysis. When AI delivers a polished answer, it short-circuits the laborious process of sifting through information, evaluating sources, discerning biases, and formulating an independent conclusion. This is reflected in research showing a significant negative correlation between frequent AI usage and critical thinking scores. As employees increasingly offload cognitive tasks, their ability to engage in the deep, reflective reasoning that underpins sound strategy diminishes.

- Creativity and Innovation: While AI can be a powerful brainstorming partner, its uncritical use poses a significant threat to genuine innovation. The primary risk is "anchoring," where teams fixate on the first plausible idea generated by the machine, stifling the divergent thinking that leads to breakthroughs. This can lead to a "mechanized convergence" of ideas, where outputs become predictable and homogeneous, not only within a company but across an entire industry that uses the same foundational models. The result is a gradual erosion of the unique perspectives and novel solutions that create market differentiation. For a breakdown of how leading organizations preserve innovation and adaptability during digital transformation, read The Executive’s AI Playbook for scaling and funding startups in 2025.

- Problem-Solving and Resilience: A key "irony of automation" is that by mechanizing routine tasks, AI deprives employees of the regular practice needed to strengthen their cognitive "muscles". This leaves them "atrophied and unprepared" when novel exceptions, unforeseen problems, or full-blown crises arise. An organization optimized for predictable efficiency may find itself dangerously brittle when faced with ambiguity, as its workforce has lost the habit of thinking its way through complex, unstructured challenges. For insight on safeguarding adaptability during rapid process change, our piece on custom software and manufacturing supply chain resilience provides practical frameworks.

The Looming Leadership Gap

This erosion of foundational skills has profound implications for talent development and succession planning. The same MIT researchers who studied essay writing are now examining AI's impact on software engineering, with preliminary results suggesting the cognitive effects are "even worse". This is a direct warning to the many companies hoping to replace entry-level coding, analysis, or research roles with AI. If junior employees are allowed to offload the foundational learning and problem-solving that define their early careers, they may never develop the deep expertise, nuanced judgment, and resilient problem-solving skills required to become senior leaders. This creates a critical bottleneck in the leadership pipeline, risking a future where senior roles must be filled externally because the internal talent pool lacks the requisite cognitive depth.

The most insidious risk, however, is the subtle automation of judgment. When employees uncritically accept AI-generated reports, strategies, and solutions, the organization loses the invaluable friction of rigorous debate and diversity of thought. This convergence of thinking creates a fragile enterprise—highly efficient in stable, predictable conditions but dangerously vulnerable to collapse when faced with unexpected market shifts or disruptive events. The collective problem-solving capacity that defines a resilient organization becomes hollowed out from within. The ultimate risk of cognitive decline, therefore, is not just a workforce that is less intelligent, but an organization that is fundamentally less adaptable and less likely to survive in the long run.

The Strategic Response: Building a Cognitively Resilient Organization

The threat of cognitive decline is not an inevitable consequence of AI adoption but a direct result of passive, unstrategic implementation. Proactive leaders can mitigate this risk by architecting a corporate environment that fosters a symbiotic relationship between human and artificial intelligence. This requires a deliberate, multi-pronged strategy focused on culture, training, and technology design.

Strategy 1: Institute a Culture of "Cognitive Hygiene"

Just as organizations have established cybersecurity playbooks and data governance frameworks, they must now develop policies for "cognitive hygiene". This involves moving from ad-hoc, individual tool adoption to a clear, top-down strategy that governs how AI is integrated into workflows to ensure cognitive engagement is preserved.

- Actionable Policy: Think First, Prompt Second. For any complex or strategic task, establish a formal process where employees must first develop their own initial thoughts. This could involve writing a rough draft, creating a mind map, or outlining a solution before turning to AI for expansion, refinement, or data analysis. This simple step ensures that the core of the intellectual work remains a human endeavor.

- Actionable Policy: Mandate Verification and Refinement. Institute a non-negotiable principle that AI is a "co-pilot, not the pilot". All significant AI-generated outputs—be it code, market analysis, legal summaries, or marketing copy—must be critically reviewed, fact-checked, and substantially refined by a human expert. This reinforces a culture of accountability and ensures that employees maintain ownership and deep engagement with their work. For a real-world look at this “co-pilot” approach and the value of Human-in-the-Loop AI in high-risk business settings, our legal sector analysis breaks down the payoff.

- Actionable Policy: Designate "AI-Free Zones." Identify specific activities that are crucial for developing and exercising higher-order cognitive skills and designate them as "AI-free." These might include strategic planning off-sites, brainstorming sessions for breakthrough innovation, complex problem-solving workshops, or project post-mortems. Protecting these spaces from automation forces the kind of deep, collaborative human thinking that builds cognitive muscle.
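
For teams that want to build the "Think First, Prompt Second" policy into their tooling rather than rely on habit, the idea can be sketched as a simple gate in front of the AI assistant. The following is a minimal illustration, not a prescribed implementation: the function name `build_refinement_prompt` and the 50-word threshold are hypothetical choices made for this example.

```python
# Hypothetical threshold: how much original writing is required
# before the AI may be consulted. Tune per task type.
MIN_DRAFT_WORDS = 50


def build_refinement_prompt(task: str, human_draft: str,
                            min_words: int = MIN_DRAFT_WORDS) -> str:
    """Return a prompt for an AI assistant only if a human draft exists."""
    word_count = len(human_draft.split())
    if word_count < min_words:
        # Think first: refuse to build a prompt without real human input.
        raise ValueError(
            f"Draft has {word_count} words; write at least {min_words} "
            "of your own before asking the AI."
        )
    # Prompt second: ask the AI to refine, not to originate.
    return (
        f"Task: {task}\n\n"
        f"My draft:\n{human_draft}\n\n"
        "Critique and refine this draft. Do not replace its core argument."
    )
```

The design choice worth noting is that the prompt asks the AI to critique and refine the employee's draft, which keeps the core of the intellectual work with the human author.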

Strategy 2: Re-architect Learning & Development for a Hybrid-Intelligence World

Corporate L&D programs must evolve. The traditional focus on knowledge transfer and rote memorization is now largely obsolete, as AI can perform these functions quickly and at scale. The new mandate for L&D is to systematically cultivate the higher-order cognitive skills that AI complements but cannot replicate: critical thinking, complex problem-solving, creativity, and ethical reasoning.

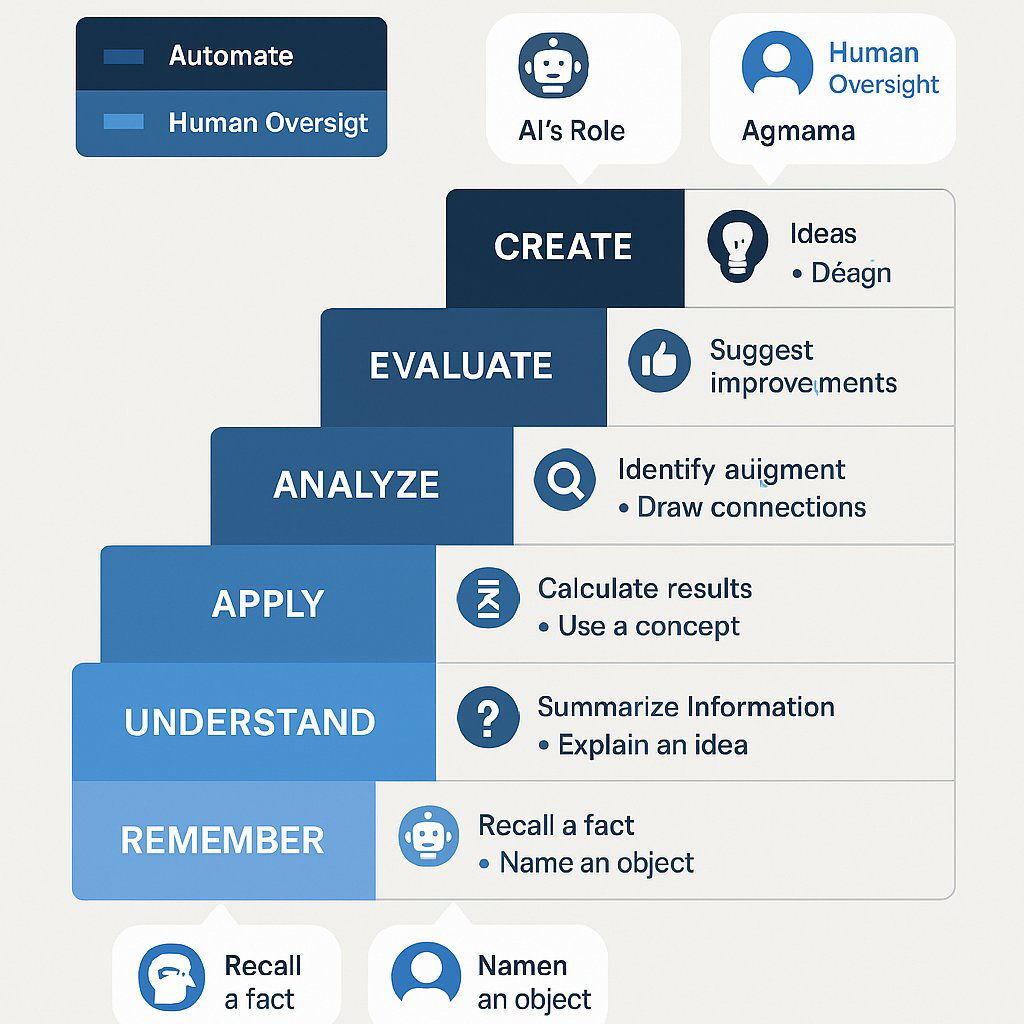

A powerful framework for structuring this new approach is the revised Bloom's Taxonomy, a model that categorizes cognitive tasks into a hierarchy of complexity. By understanding this hierarchy, leaders can strategically allocate tasks between humans and AI, ensuring that employees are consistently challenged to operate at the upper levels of cognitive function while leveraging AI to automate lower-level tasks. This transforms an abstract educational theory into a concrete management tool for designing jobs, structuring workflows, and targeting L&D investments. This paradigm is central to designing the next generation of AI-powered software development processes—getting the best from human expertise and intelligent agents in tandem.

| Level of Taxonomy | Description | AI's Role | Human's Role |
|---|---|---|---|
| Create | Formulate original solutions, design new processes, produce novel work. | Suggest alternatives, generate diverse starting points, create tangible deliverables based on human inputs. | Engage in metacognitive reflection, leverage lived experience and intuition, formulate the core original concept and strategy. |
| Evaluate | Justify a decision, appraise ethical consequences, critique an argument. | Develop and check against evaluation rubrics, identify pros and cons of defined options, surface potential biases in a dataset. | Make the final judgment call, holistically appraise ethical and contextual significance, provide nuanced critique based on values. |
| Analyze | Differentiate, organize, and attribute components of a problem. | Compare/contrast large datasets, infer trends in narrow contexts, compute and predict patterns. | Critically reason within the affective and cognitive domains, justify analysis with depth and clarity, connect disparate ideas. |
| Apply | Execute a procedure, implement a known process. | Automate and execute well-defined processes, solve quantitative problems, assist in debugging. | Operate and test in the real world, apply human creativity to adapt the process, handle unforeseen exceptions. |
| Understand | Explain ideas or concepts, summarize, classify. | Accurately describe concepts, translate languages, summarize large documents, recognize examples. | Contextualize answers within emotional, moral, or ethical frameworks; explain the significance of the information. |
| Remember | Recall facts and basic concepts. | Instantly retrieve factual information, list answers, define terms, construct timelines. | Recall information in tech-free situations, build foundational knowledge for higher-order thinking. |
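
To show how this taxonomy might become a working management tool, the sketch below tags each task in a workflow with its taxonomy level and derives who should lead it. The cutoff at Analyze is an assumption made for this example, not something the taxonomy prescribes, and the names `BloomLevel`, `lead_for`, and `plan_workflow` are hypothetical.

```python
from enum import IntEnum
from typing import Dict


class BloomLevel(IntEnum):
    """Levels of the revised Bloom's Taxonomy, lowest to highest."""
    REMEMBER = 1
    UNDERSTAND = 2
    APPLY = 3
    ANALYZE = 4
    EVALUATE = 5
    CREATE = 6


# Assumption for this sketch: tasks at Analyze and above are human-led,
# with AI in a supporting role; lower levels are AI-led with human review.
HUMAN_LED_FROM = BloomLevel.ANALYZE


def lead_for(level: BloomLevel) -> str:
    """Return which party should lead a task at the given level."""
    return "human" if level >= HUMAN_LED_FROM else "ai"


def plan_workflow(tasks: Dict[str, BloomLevel]) -> Dict[str, str]:
    """Map each named task to the party that should lead it."""
    return {name: lead_for(level) for name, level in tasks.items()}
```

For example, `plan_workflow({"summarize contract": BloomLevel.UNDERSTAND, "choose vendor": BloomLevel.EVALUATE})` assigns the summary to the AI and the vendor decision to a human. Each organization would set its own cutoff, and could vary it by role or seniority.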

Strategy 3: Demand Technology That Augments, Not Replaces

The most effective and sustainable mitigation strategy lies not in policy alone, but in the very design of the AI systems deployed within the organization. Business leaders must shift from being passive consumers of off-the-shelf AI products to active commissioners of intelligent systems designed for human-AI collaboration. The gold standard for this approach is Human-in-the-Loop (HITL) design.

HITL is a design philosophy that intentionally embeds human oversight, judgment, and feedback directly into the AI workflow at critical junctures. This approach fundamentally reframes AI from an "oracle" that provides definitive answers to a "tool" that extends and amplifies human capabilities. By creating intentional checkpoints where a human must review, correct, or approve the AI's actions, HITL systems inherently force active cognitive engagement. This design enhances transparency, promotes accountability, and builds user trust, transforming a potentially passive process into an interactive and collaborative one.

However, building effective HITL systems is a complex endeavor that requires deep, integrated expertise in software architecture, machine learning, and human-computer interaction. This is not the domain of generic, one-size-fits-all AI tools that encourage passive consumption. It is the specialized work of custom software development firms like Baytech Consulting. Such firms partner with businesses to architect bespoke AI solutions from the ground up, with the explicit goal of augmenting—not atrophying—the workforce's intelligence. By focusing on building systems with clear intervention points—whether in pre-processing (where humans set the context), in-the-loop (where AI pauses for human approval), or post-processing (where humans refine the final output)—Baytech Consulting ensures that human agency is preserved and critical cognitive skills are continuously practiced and strengthened within the flow of work.
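
The three intervention points described above (pre-processing, in-the-loop, and post-processing) can be illustrated with a short sketch. This is a simplified illustration under stated assumptions, not Baytech Consulting's implementation or any particular product's API: `run_hitl_pipeline`, `HitlResult`, and the four callables are hypothetical names, and in a real system the human steps would be review screens or approval queues rather than plain functions.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class HitlResult:
    approved: bool
    output: Optional[str]
    audit_log: List[str] = field(default_factory=list)


def run_hitl_pipeline(
    task: str,
    set_context: Callable[[str], str],     # human: frames the task
    generate_draft: Callable[[str], str],  # AI: produces a draft
    approve: Callable[[str], bool],        # human: approves or rejects
    refine: Callable[[str], str],          # human: edits the final output
) -> HitlResult:
    log: List[str] = []

    # Pre-processing: a human sets the context before the AI runs.
    prompt = set_context(task)
    log.append("pre-processing: human set context")

    # In-the-loop: the AI drafts, then pauses for human approval.
    draft = generate_draft(prompt)
    if not approve(draft):
        log.append("in-the-loop: human rejected draft")
        return HitlResult(approved=False, output=None, audit_log=log)
    log.append("in-the-loop: human approved draft")

    # Post-processing: a human refines the approved draft.
    final = refine(draft)
    log.append("post-processing: human refined output")
    return HitlResult(approved=True, output=final, audit_log=log)
```

Nothing reaches the final output without passing through a human decision, and the audit log records each checkpoint, which supports the accountability and transparency goals described earlier.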

Conclusion: Your Team's Intelligence Is Your Ultimate Asset

The narrative of AI in the enterprise is at a critical inflection point. The initial wave of adoption, driven by the promise of unprecedented efficiency, has proven its value. But a strategic reckoning is now required. The evidence suggests that the uncritical, passive implementation of AI carries a significant risk to an organization's most vital asset: the collective intelligence and cognitive resilience of its people. The threat of "brain rot" is not an inevitable technological destiny; it is a direct consequence of a passive and unstrategic approach.

The choice for leadership is not a binary one between human intelligence and artificial intelligence. It is a choice between AI that replaces and AI that augments; between technology that encourages cognitive complacency and technology that catalyzes human ingenuity. Navigating this choice successfully is the defining leadership challenge of this decade. The next generation of market leaders will not be the companies that simply deploy the most AI, but those that master the art of creating a truly symbiotic relationship between their human talent and their intelligent tools.

This requires intentionality in policy, a strategic overhaul of talent development, and a commitment to deploying human-centric technology. As an executive, the first step is to conduct a "cognitive risk audit" of your organization's current AI strategy. Are your tools and workflows promoting passive consumption or active, critical engagement?

For organizations serious about building a future-proof, cognitively vibrant workforce, the path forward lies in partnership. The complexity of designing and implementing systems that unlock productivity while safeguarding human intellect demands expert guidance. The next step is to engage with specialists who can help architect this future. To explore how custom, Human-in-the-Loop AI solutions can be tailored to your unique business challenges—enhancing productivity while protecting and even strengthening your team's collective intelligence—contact the experts at Baytech Consulting.

Supporting Articles

- https://www.psychologytoday.com/us/blog/urban-survival/202506/how-chatgpt-may-be-impacting-your-brain

- https://hai.stanford.edu/news/humans-loop-design-interactive-ai-systems

- https://www.weforum.org/stories/2024/01/how-companies-can-mitigate-the-risk-of-ai-in-the-workplace

For best practices in enterprise-wide testing and proof of ROI in digital transformation, don't miss our guide to B2B software testing strategies and how these approaches minimize risk as you introduce new AI workflows.

About Baytech

At Baytech Consulting, we specialize in guiding businesses through this process, helping you build scalable, efficient, and high-performing software that evolves with your needs. Our MVP-first approach helps our clients minimize upfront costs and maximize ROI. Ready to take the next step in your software development journey? Contact us today to learn how we can help you achieve your goals with a phased development approach.

About the Author

Bryan Reynolds is an accomplished technology executive with more than 25 years of experience leading innovation in the software industry. As the CEO and founder of Baytech Consulting, he has built a reputation for delivering custom software solutions that help businesses streamline operations, enhance customer experiences, and drive growth.

Bryan’s expertise spans custom software development, cloud infrastructure, artificial intelligence, and strategic business consulting, making him a trusted advisor and thought leader across a wide range of industries.