Harnessing Generative AI for Enterprise Success in 2025

December 18, 2025 / Bryan Reynolds

Introduction: The Great Operational Shift

If 2023 was the year of shock and awe, and 2024 was the year of the Proof of Concept (PoC), 2025 has firmly established itself as the year of operational reality. The honeymoon phase with Generative AI (GenAI) is over. Enterprise leaders—from the Visionary CTO to the Strategic CFO—are no longer asking “What can this do?” but rather “How does this fit into our P&L?” and “How do we scale this without breaking our security posture?”

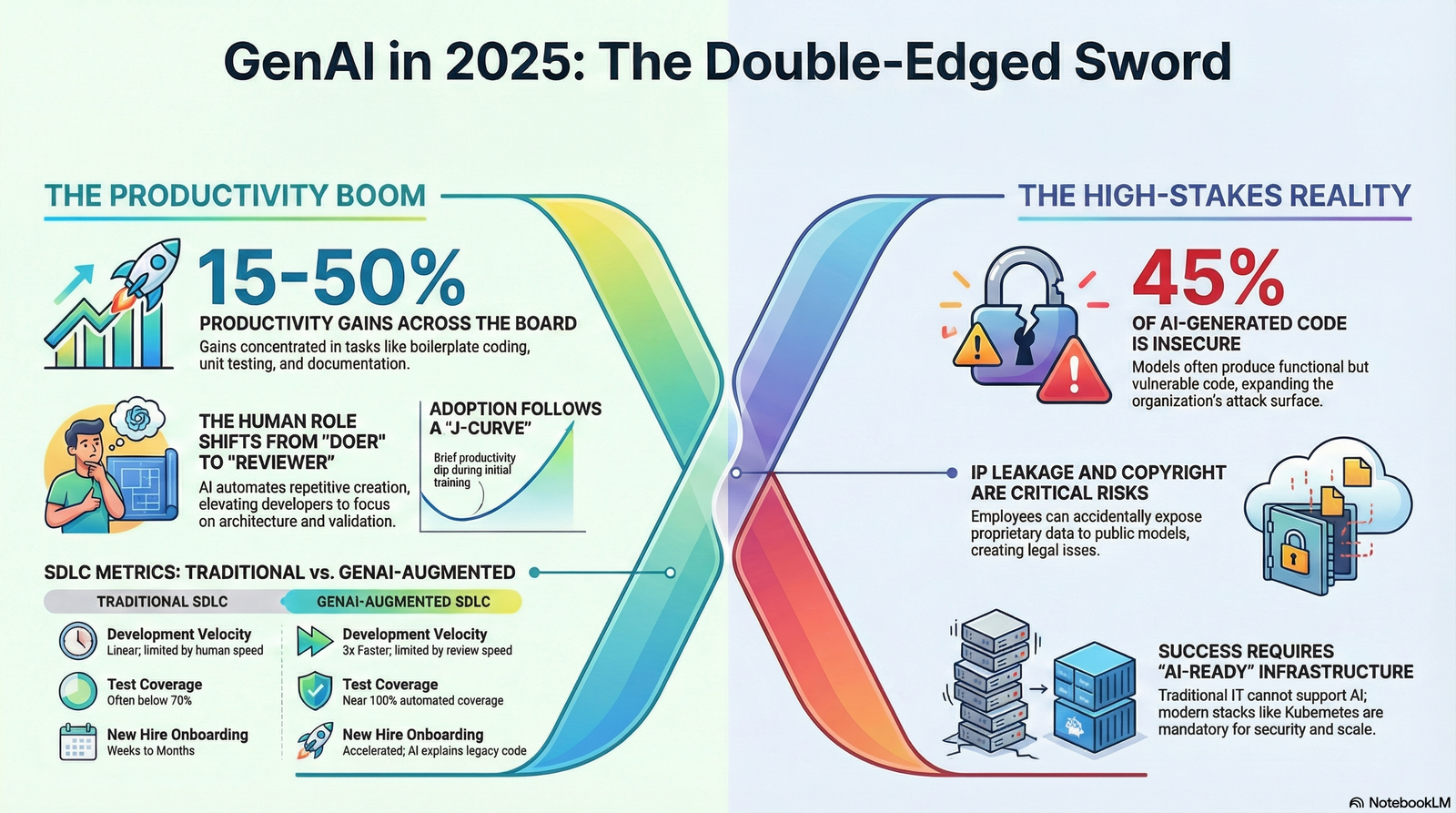

The narrative has shifted from abstract potential to concrete productivity. We are witnessing a fundamental decoupling of output from headcount. Enterprises integrating GenAI across the Software Development Life Cycle (SDLC) are reporting double-digit productivity gains, often exceeding 15% and reaching as high as 50% in specific coding tasks.[1] However, these gains are not evenly distributed. They are concentrated in organizations that have moved beyond using AI as a mere “chatbot” and have re-architected their workflows, infrastructure, and culture to be AI-native.

For firms like Baytech Consulting, where Tailored Tech Advantage and Rapid Agile Deployment are not just buzzwords but operational mandates, this shift represents a new baseline for delivery. We are observing that the integration of tools—ranging from Azure DevOps pipelines to sophisticated Kubernetes orchestrations—is creating a divide between companies that are merely “using AI” and those that are “rebuilt by AI.”

This report provides an exhaustive, expert-level analysis of the state of GenAI in the enterprise in 2025. We will dissect the productivity metrics, the architectural requirements (from Azure DevOps On-Prem to Harvester HCI), the vertical-specific impacts, and the darker realities of security and governance.

The Macro View: A Bifurcated Market

Recent data from Forrester and McKinsey indicates a sharp bifurcation in the market. “High performers”—organizations that have fully integrated AI into their development pipelines—are seeing significantly different results than those merely providing access to tools like ChatGPT.

- Adoption is spiking: A May 2024 Forrester survey revealed that 67% of AI decision-makers planned to increase investment in generative AI, a number that has materialized into substantial budget reallocations in 2025.[2]

- The Productivity Reality: While general productivity gains hover around 15%, specific tasks within the SDLC see much higher efficiency spikes. For instance, documenting code functionality can be completed in half the time, and optimizing existing code (refactoring) can be completed in nearly two-thirds of the time it previously required.[3]

But the statistics only tell half the story. The qualitative shift is in how software is built. The “blank page” problem is solved. Architects and developers are now editors and curators of high-velocity output, shifting the bottleneck from creation to validation.

Part 1: The Productivity Paradox and the New Baseline

Beyond the Hype: Quantifying the Efficiency Gains

The headline statistic—double-digit productivity gains—is compelling, but it requires nuance to understand its application. In 2025, productivity is no longer measured solely by lines of code produced. If it were, we would simply be drowning in boilerplate. The metric has evolved to “velocity of value delivery” or “Sprint Velocity Impact.”

Recent studies reveal that while 92% of Fortune 500 firms have adopted the technology, the depth of that adoption varies.[4]

- The 15% to 50% Range: The reported 15% gain is a conservative average. In specific, repetitive engineering tasks, the gains are far larger. PwC reports that teams adopting GenAI have experienced a 20–50% boost in productivity.[1] This includes tasks like generating unit tests, writing SQL queries for Postgres, or scaffolding React components.

- The Seniority Gap: A critical insight for 2025 is the variance in impact based on developer experience. Senior developers leveraging GenAI act as “architects,” using the tool to multiply their output. Conversely, junior developers (with less than a year of experience) can sometimes see a productivity drop of 7–10% as they struggle to validate the AI’s output or lack the contextual framework to prompt effectively.[3] This “Junior Penalty” is a short-term pain point that necessitates new training protocols.
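Why does a 50% gain on individual tasks show up as something closer to 15% at the team level? Amdahl's law offers one way to reason about it: only the share of time spent on accelerated tasks gets faster. A minimal sketch, assuming a hypothetical time split that does not come from the cited studies:

```python
def overall_speedup(task_mix: list[tuple[float, float]]) -> float:
    """Amdahl-style speedup for a whole workload.

    task_mix holds (share_of_time, task_speedup) pairs whose shares sum to 1.
    A task_speedup of 2.0 means that task now takes half as long.
    """
    if abs(sum(share for share, _ in task_mix) - 1.0) > 1e-9:
        raise ValueError("time shares must sum to 1")
    # The new total time is each share divided by its own speedup.
    new_time = sum(share / speedup for share, speedup in task_mix)
    return 1.0 / new_time


# Hypothetical split: 30% of engineering time goes to tests, docs, and
# boilerplate that AI makes 2x faster; the other 70% (design, review,
# meetings, debugging) is unchanged.
mix = [(0.30, 2.0), (0.70, 1.0)]
print(f"Team-level throughput gain: {overall_speedup(mix) - 1.0:.1%}")
# prints "Team-level throughput gain: 17.6%"
```

Under the same assumed split, even an infinite speedup on that 30% caps the overall gain at about 43% (1 / 0.7), which is why re-architecting workflows matters more than faster autocomplete alone.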

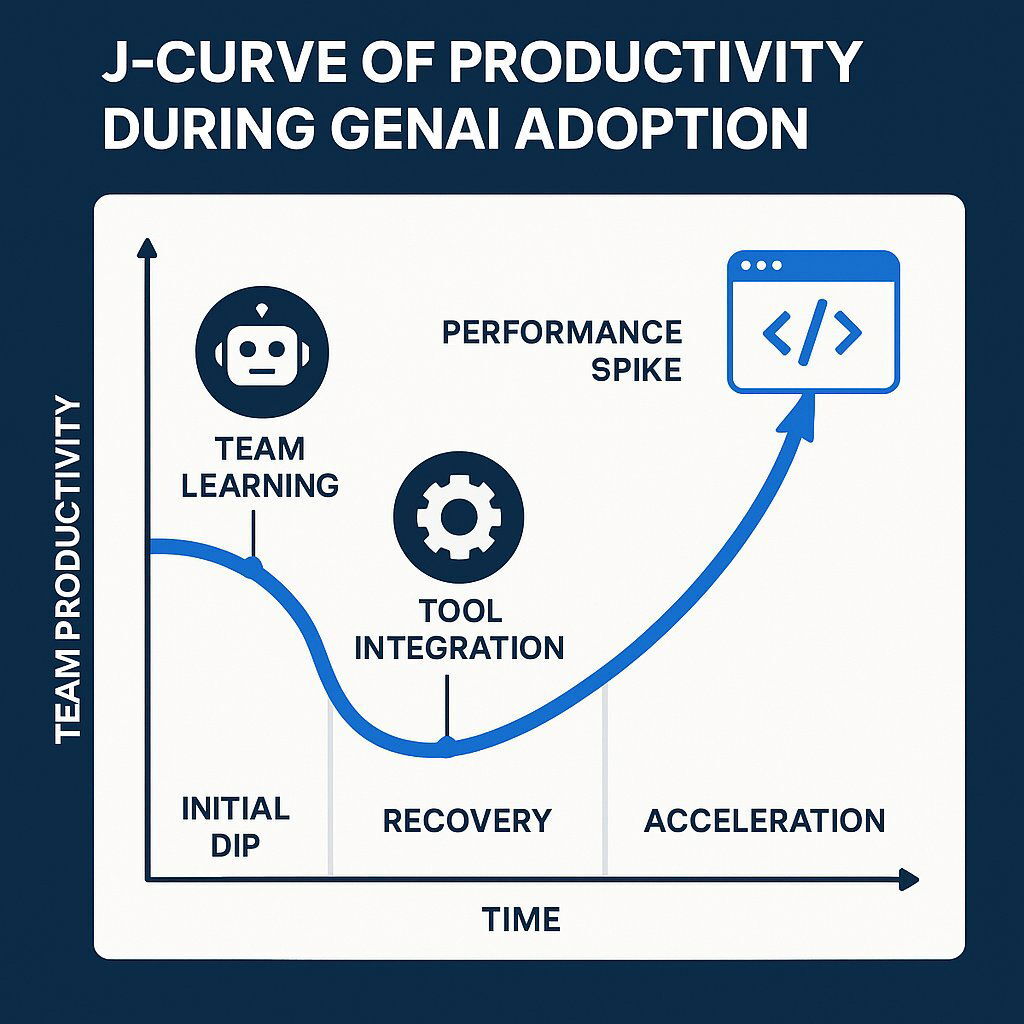

The "J-Curve" of Adoption

Enterprises should not expect an immediate vertical line on their productivity charts. The data suggests a “J-Curve.”

- Phase 1: The Dip. Initial adoption often leads to a slowdown. Teams wrestle with new tools (e.g., configuring the Azure DevOps MCP Server), rewrite prompts, and spend excessive time verifying hallucinated code.[5]

- Phase 2: The Recovery. As “prompt engineering” becomes muscle memory and tools are integrated into the IDE (VS Code/VS 2022), the friction disappears.

- Phase 3: The Acceleration. Once the AI has context of the repository, velocity spikes. Tasks that took days (like writing a comprehensive regression test suite) take hours.

The Three Pillars of GenAI Value

The value proposition has crystallized into three distinct categories:

- Enhanced Creativity and Productivity: This is the most visible layer. GenAI is empowering businesses to produce high-quality content and code at scale, freeing human resources for higher-value architectural tasks. For a marketing director, this means personalized campaigns at scale. For a CTO, it means prototypes in hours, not weeks.[2]

- Cost Efficiency: By automating repetitive processes such as boilerplate code generation, unit test writing, and documentation, companies are reducing operational costs. Reductions in engineering costs of up to 50% have been observed in some aggressive adoption scenarios.[1] This allows consultancies like Baytech to deliver “Enterprise-grade quality” at a velocity that defies traditional cost models.

- Growth and Innovation: High-maturity organizations are using AI not just to cut costs but to spur growth. This includes hyper-personalization in marketing and the development of entirely new software products that were previously too resource-intensive to build. McKinsey reports that high performers are more likely to set “innovation” as a primary KPI for GenAI, moving beyond simple “efficiency”.[7]

Table 1: Traditional vs. GenAI-Augmented SDLC Metrics

Part 2: Revolutionizing the SDLC — Phase by Phase

The Software Development Life Cycle (SDLC) is undergoing its most significant transformation since the advent of Agile methodology. GenAI is not merely an add-on; it is reshaping the definitions of the phases themselves. At Baytech Consulting, we define this as the shift to Rapid Agile Deployment 2.0, where the feedback loops are tightened by machine intelligence.

1. Planning and Architecture: The End of the "Blank Page"

In the planning phase, GenAI acts as a super-analyst. Product Owners and Business Analysts are using AI to convert high-level requirement documents into detailed user stories with acceptance criteria.

Backlog Management with Azure DevOps:

The integration of AI into tools like Azure DevOps is profound. AI agents can now analyze a product backlog, identify dependencies, flag duplicate tickets, and suggest prioritization based on historical velocity data.

- Context-Aware Planning: Using the Azure DevOps Model Context Protocol (MCP) Server, an AI assistant can securely access work items and test plans locally. A Product Owner can prompt, “Get my current sprint work items, then identify which ones might be at risk based on recent commit velocity,” and receive a data-backed risk assessment instantly.[11]

- User Story Generation: Instead of spending hours drafting acceptance criteria, teams use GenAI to generate them from a feature brief. This improves consistency and completeness, reducing the “clarification loops” between devs and product owners.[12]
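Under the hood, an assistant talks to an MCP server using JSON-RPC 2.0 messages. The sketch below builds the kind of `tools/call` request an assistant might send once the session's `initialize` handshake is complete. The tool name `get_current_sprint_work_items` and its arguments are hypothetical placeholders, not the Azure DevOps MCP Server's actual tool catalog; a real client would discover tools through the server's `tools/list` response.

```python
import json
from itertools import count

_request_ids = count(1)  # JSON-RPC requests need unique ids within a session


def make_tool_call(tool_name: str, arguments: dict) -> str:
    """Build a JSON-RPC 2.0 'tools/call' request as used by the Model Context Protocol."""
    message = {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(message)


# Hypothetical tool and argument names, for illustration only.
request = make_tool_call(
    "get_current_sprint_work_items",
    {"project": "ContosoLending", "team": "Payments"},
)
print(request)
```

The server's reply carries the same `id`, so the assistant can match responses to requests before the model reasons over the returned work items.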

Architectural Prototyping:

Before a single line of code is written, architects can use GenAI to simulate system designs. For example, when designing a database schema for a SQL Server or Postgres instance, the AI can suggest normalization strategies or predict potential bottlenecks in API interactions based on the expected load.

2. Development: The "TuringBot" Era

Forrester refers to GenAI coding assistants as “TuringBots.” By 2025, 49% of developers are using these assistants in the coding phase.[13] The integration is deep and pervasive across IDEs like Visual Studio 2022 and VS Code.

Boilerplate and Scaffolding:

The days of manually typing out standard CRUD (Create, Read, Update, Delete) operations are ending. GenAI generates the scaffolding—controllers, models, and views—allowing developers to focus on complex business logic.

- Example: A developer using VS Code can highlight a table schema (for instance, DDL copied from pgAdmin) and ask Copilot to “Generate a .NET Core API controller for this table with Swagger documentation,” cutting a 2-hour task down to 10 minutes.

Legacy Modernization (The Brownfield Scenario):

For firms dealing with “brownfield” scenarios (legacy code), GenAI is a game-changer. It can help refactor monolithic code into microservices and translate older languages (like COBOL or early Java) into modern stacks (like .NET 6+ or Go), often with high accuracy. This transformation aligns directly with current strategies for real-time data processing and architectural modeling.

- Productivity Impact: In these complex environments, GenAI tools have demonstrated a 50% reduction in engineering costs and a 3x increase in development velocity.[1] This is critical for industries like Banking and Insurance, where legacy systems are the norm.

The "Context" Challenge and Solution:

The effectiveness of these tools relies heavily on context. Early iterations struggled because they only “saw” the open file. 2025 tools, such as GitHub Copilot (including GitHub Copilot for Azure) operating in “Agent Mode,” can search across the entire repository to understand how components depend on one another. This means the AI can recognize that changing a variable in the User class will break the Billing service, offering a level of safety that was previously unavailable.[14]
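The “changing User breaks Billing” reasoning boils down to a reverse-dependency walk. A minimal sketch, assuming the dependency graph has already been extracted from the repository; the component names are hypothetical, and an agent could combine this kind of structural analysis with the model's own reading of the code.

```python
from collections import deque


def impacted_components(depends_on: dict[str, set[str]], changed: str) -> set[str]:
    """Return every component that directly or transitively depends on `changed`."""
    # Invert the edges: for each component, record who depends on it.
    dependents: dict[str, set[str]] = {}
    for component, deps in depends_on.items():
        for dep in deps:
            dependents.setdefault(dep, set()).add(component)

    impacted: set[str] = set()
    queue = deque([changed])
    while queue:  # breadth-first walk over the reversed edges
        current = queue.popleft()
        for dependent in dependents.get(current, set()):
            if dependent not in impacted:
                impacted.add(dependent)
                queue.append(dependent)
    return impacted


# Hypothetical repository map: component -> components it imports.
graph = {
    "Billing": {"User", "Invoice"},
    "Invoice": {"User"},
    "Reporting": {"Billing"},
    "Auth": set(),
}
print(sorted(impacted_components(graph, "User")))  # ['Billing', 'Invoice', 'Reporting']
```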

3. Testing and QA: The Rise of Self-Healing Systems

Testing is perhaps the biggest beneficiary of GenAI. Historically, testing has been the bottleneck of Agile—the phase where velocity dies. GenAI reverses this.

Automated Test Generation:

Tools can now generate unit tests, integration tests, and even end-to-end UI tests automatically. This is critical for Agile sprints where testing often lags behind development. Modern testing flows tie closely to expert software consultancy services that specialize in test automation and quality assurance strategies.

- Coverage Metrics: Teams leveraging these tools can approach 100% code coverage, because the AI tirelessly generates edge cases that human testers often miss.[8]

- Efficiency: A 60% reduction in manual test design time has been reported by teams using AI-augmented QA tools.[15]

Predictive Defect Analysis:

AI models analyze commit history to predict which modules are most likely to break. If a developer touches a “hotspot” in the code (an area with frequent historical bugs), the CI pipeline can automatically trigger a more rigorous suite of regression tests for that specific area.[16]
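A simple, non-AI baseline for hotspot detection counts how often each file appears in bug-fix commits. The sketch below assumes commit history has already been exported as dictionaries (for example, from `git log --name-only`); the keyword regex, the threshold, and the file paths are illustrative assumptions, and a model-based predictor would replace or augment the counting step.

```python
import re
from collections import Counter

# Matches common bug-fix wording in commit messages; an assumption, tune per team.
BUGFIX_PATTERN = re.compile(r"\b(fix|bug|hotfix|regression)\b", re.IGNORECASE)


def find_hotspots(commits: list[dict], min_fixes: int = 2) -> set[str]:
    """Return files touched by at least `min_fixes` bug-fix commits."""
    fix_counts: Counter[str] = Counter()
    for commit in commits:
        if BUGFIX_PATTERN.search(commit["message"]):
            fix_counts.update(set(commit["files"]))
    return {path for path, n in fix_counts.items() if n >= min_fixes}


def needs_extended_regression(changed_files: list[str], hotspots: set[str]) -> bool:
    """CI gate: run the heavier regression suite if the change touches a hotspot."""
    return any(path in hotspots for path in changed_files)


history = [
    {"message": "Fix rounding in interest calc", "files": ["loans/interest.py"]},
    {"message": "Add export button", "files": ["ui/export.tsx"]},
    {"message": "Hotfix: interest calc overflow", "files": ["loans/interest.py", "loans/schedule.py"]},
]
hotspots = find_hotspots(history)
print(hotspots)  # {'loans/interest.py'}
print(needs_extended_regression(["loans/interest.py"], hotspots))  # True
```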

SDET Evolution:

The role of the Software Development Engineer in Test (SDET) is evolving. Instead of writing scripts, they are overseeing AI agents that perform exploratory testing. The AI can “play” with the application, clicking through workflows to find unhandled exceptions, effectively performing “Chaos Engineering” at the UI level.[16]

4. Deployment and Operations: AI-Native DevOps

Deployment is no longer just a script; it is an intelligent process. This aligns with the philosophy of Rapid Agile Deployment and parallels the adoption of DevOps practices that accelerate delivery and improve reliability.

Infrastructure as Code (IaC):

GenAI automates the creation of Terraform, Bicep, or Kubernetes YAML scripts. A developer can describe the desired infrastructure (“I need an Azure Kubernetes Service cluster with 3 nodes, an ingress controller, and a connection to an Azure SQL instance”) and the AI generates the corresponding configuration files. This can reduce configuration drift and security misconfigurations.[17]
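Generated IaC still needs guardrails. As one hedged illustration, the check below inspects a Kubernetes Deployment (already parsed from YAML into a dict, for example with PyYAML) for a few common misconfigurations before it is applied. The rules and the example manifest are illustrative assumptions; production teams typically enforce such policies with dedicated tools like OPA Gatekeeper or Kyverno.

```python
def review_deployment(manifest: dict) -> list[str]:
    """Flag a few common misconfigurations in a parsed Kubernetes Deployment."""
    problems: list[str] = []
    spec = manifest.get("spec", {})
    if spec.get("replicas", 1) < 2:  # Kubernetes defaults to 1 replica
        problems.append("fewer than 2 replicas: no redundancy")
    containers = spec.get("template", {}).get("spec", {}).get("containers", [])
    for c in containers:
        name = c.get("name", "<unnamed>")
        image = c.get("image", "")
        # Look for a tag or digest in the last path segment (ignores registry ports).
        last_segment = image.rsplit("/", 1)[-1]
        if ":" not in last_segment or image.endswith(":latest"):
            problems.append(f"{name}: image tag missing or 'latest'")
        if "limits" not in c.get("resources", {}):
            problems.append(f"{name}: no resource limits")
        if c.get("securityContext", {}).get("privileged", False):
            problems.append(f"{name}: runs privileged")
    return problems


# Hypothetical AI-generated manifest, already parsed from YAML.
generated = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "inference-api"},
    "spec": {
        "replicas": 1,
        "template": {"spec": {"containers": [
            {"name": "api", "image": "contoso/inference-api:latest",
             "securityContext": {"privileged": True}},
        ]}},
    },
}
for issue in review_deployment(generated):
    print(issue)
```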

Intelligent Rollbacks:

In modern CI/CD pipelines, AI monitors deployment health in real time. If an anomaly is detected (e.g., a spike in latency or error rates immediately post-deployment), the AI can trigger an automatic rollback before human intervention is even possible. This “Self-Healing Infrastructure” significantly improves uptime metrics.[17]
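The rollback decision itself can be as simple as comparing post-deployment health against a pre-deployment baseline. A minimal sketch, assuming metrics are already collected by the monitoring stack; the thresholds are hypothetical, and an AI-driven system might learn or adapt them rather than hard-code them.

```python
from dataclasses import dataclass


@dataclass
class HealthSnapshot:
    error_rate: float       # fraction of failed requests, e.g. 0.01 = 1%
    p95_latency_ms: float   # 95th-percentile latency in milliseconds


def should_roll_back(baseline: HealthSnapshot, current: HealthSnapshot,
                     max_error_ratio: float = 2.0,
                     max_latency_ratio: float = 1.5,
                     error_floor: float = 0.005) -> bool:
    """Return True when post-deploy health has degraded past the allowed ratios."""
    # The floor stops a tiny baseline error rate from turning any error into a "spike".
    error_limit = max(baseline.error_rate * max_error_ratio, error_floor)
    latency_limit = baseline.p95_latency_ms * max_latency_ratio
    return current.error_rate > error_limit or current.p95_latency_ms > latency_limit


before = HealthSnapshot(error_rate=0.004, p95_latency_ms=180)
after = HealthSnapshot(error_rate=0.021, p95_latency_ms=240)
if should_roll_back(before, after):
    print("Anomaly detected: trigger rollback")  # placeholder for the real pipeline step
```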

Documentation as a Living Artifact:

One of the most persistent pain points in software, outdated documentation, is being addressed. GenAI pipelines now auto-generate technical documentation from the code itself. Every time a PR is merged, the documentation (hosted in wikis or READMEs) is updated to reflect the new API endpoints or logic changes.[2]
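The deterministic half of a living-documentation pipeline extracts the code's public surface so a model has accurate facts to write from. A sketch for a Python codebase using the standard-library `ast` module; the Markdown layout is an assumption, and a CI job (not shown) would run it on merge and hand functions without docstrings to a model for drafting.

```python
import ast


def document_module(source: str) -> str:
    """Render a Markdown summary of the public top-level functions in a module."""
    tree = ast.parse(source)
    lines = ["## API reference", ""]
    for node in tree.body:
        if isinstance(node, ast.FunctionDef) and not node.name.startswith("_"):
            args = ", ".join(arg.arg for arg in node.args.args)
            # Missing docstrings are marked so a model (or a human) can fill them in.
            doc = ast.get_docstring(node) or "TODO: describe (candidate for AI-drafted text)"
            lines.append(f"- `{node.name}({args})`: {doc}")
    return "\n".join(lines)


sample = '''
def create_invoice(customer_id, amount):
    """Create a draft invoice for a customer."""

def _internal_helper():
    pass
'''
print(document_module(sample))
```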

Part 3: The Infrastructure of Intelligence — Stacks and Architecture

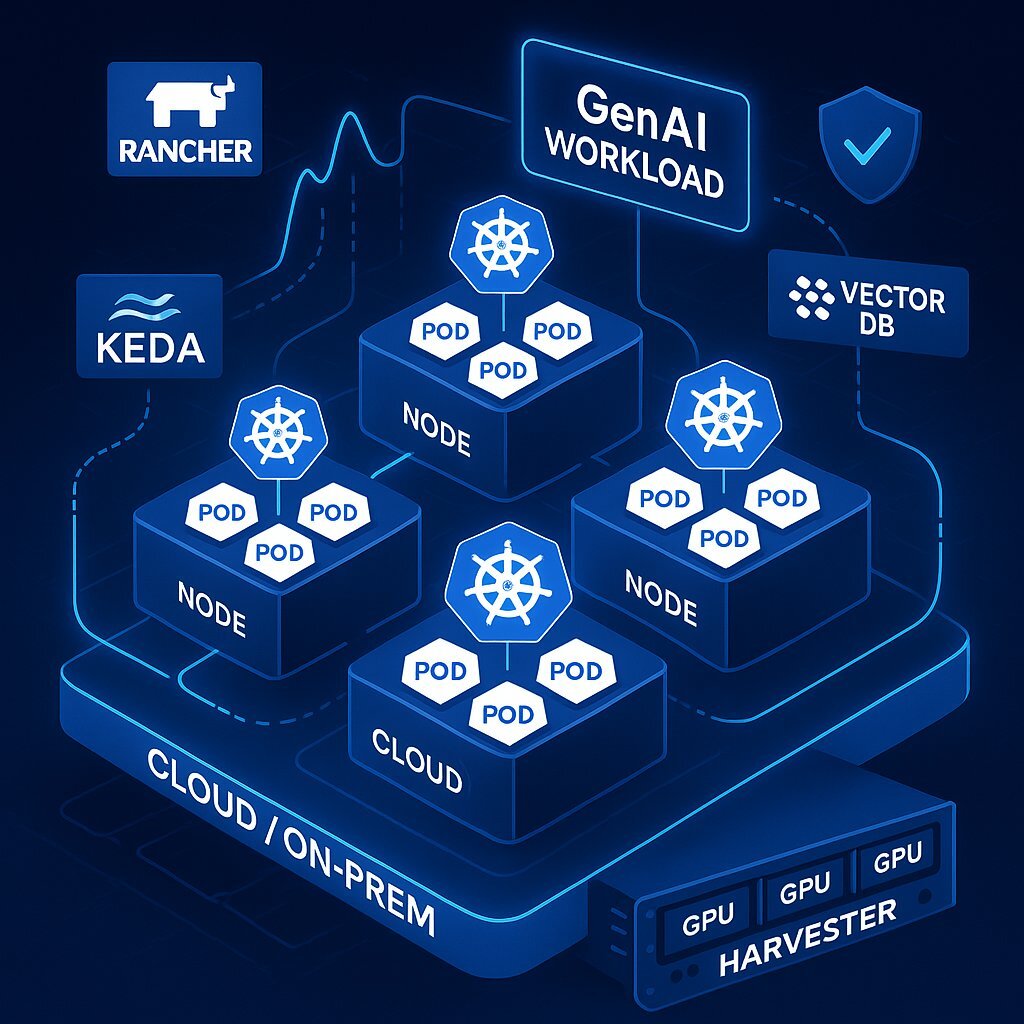

To support this new way of working, the underlying infrastructure must evolve. You cannot run 2025 AI workloads on 2015 infrastructure. Baytech Consulting’s expertise in Azure, Kubernetes, and specialized hardware like Harvester HCI aligns with the market’s direction toward “AI-Ready Infrastructure.”

The Dominance of Kubernetes in the AI Era

As organizations scale their use of GenAI, Kubernetes (K8s) has emerged as the de facto operating system for AI workloads.

- Why Kubernetes? AI models, particularly Large Language Models (LLMs), require massive computational resources that fluctuate wildly. Kubernetes excels at orchestrating these containerized workloads. It distributes workloads across multiple nodes, which is essential for spreading the computational intensity of deep learning training and inference.[18]

- Event-Driven Scaling (KEDA): Standard CPU-based autoscaling often falls short for AI workloads. An inference server might be idle (low CPU) but have a queue of 1,000 prompts waiting. Tools like KEDA (Kubernetes Event-driven Autoscaling) allow infrastructure to scale based on specific AI triggers, such as the number of prompts in a RabbitMQ queue, rather than just CPU usage. This is vital for managing the costs of GPU-bound workloads.[20]

- The “GenAI Stack”: A modern enterprise stack now includes:

  - Vector Databases: pgvector on Postgres (managed via pgAdmin) for RAG implementations.

  - Model Serving: KServe or Knative for serverless inference.

  - Orchestration: Rancher for managing multiple Kubernetes clusters across hybrid environments (On-Prem + Cloud).[21]
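To see why queue-based scaling behaves differently from CPU-based scaling, here is a simplified model of the calculation behind a KEDA RabbitMQ trigger: KEDA exposes the queue length as an external metric, and the Horizontal Pod Autoscaler targets roughly `ceil(queue length / messages per replica)` pods. This is a sketch under stated assumptions: it ignores HPA tolerance, stabilization windows, and KEDA's activation threshold, and the per-replica target of 50 prompts is hypothetical.

```python
import math


def desired_replicas(queue_length: int, target_per_replica: int,
                     min_replicas: int = 0, max_replicas: int = 10) -> int:
    """Approximate the replica count an event-driven autoscaler aims for.

    Mirrors the HPA average-value rule ceil(total / target), clamped to [min, max].
    """
    if queue_length == 0:
        return min_replicas  # event-driven scaling can drop to zero when idle
    wanted = math.ceil(queue_length / target_per_replica)
    return max(min_replicas, min(max_replicas, wanted))


# 1,000 queued prompts; assume each GPU pod comfortably handles ~50 at a time.
print(desired_replicas(1000, 50))  # 10 (capped by max_replicas)
print(desired_replicas(120, 50))   # 3
print(desired_replicas(0, 50))     # 0
```

Note that CPU utilization never appears in the calculation: a pod that is waiting on its GPU still counts toward clearing the queue.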

The Hybrid Cloud Reality: Azure DevOps On-Prem & Data Sovereignty

For enterprises deeply embedded in the Microsoft ecosystem, 2025 brings significant maturity to the toolset, particularly for those with strict data sovereignty requirements (Finance, Government).

Azure DevOps Server 2025:

Azure DevOps Server, the on-premises edition, now supports deeper integration with AI tools without sending code to the public cloud. This allows for a “hybrid” approach where sensitive IP stays on infrastructure the company controls (like OVHcloud dedicated servers or on-prem hardware), while still leveraging AI capabilities.[22]

- Security Advantage: By keeping the “Brain” (the model) local or using private endpoints, companies mitigate the risk of IP leakage.

- The MCP Server: The Azure DevOps Model Context Protocol (MCP) Server is a critical innovation. It runs locally, giving AI assistants authenticated access to work items, PRs, and documentation. Paired with a local model or a private endpoint, this keeps project data away from external model providers, which is a game-changer for secure environments.[11]

Local LLMs vs. Cloud API: The “Buy vs. Build” of 2025

A major trend in 2025 is the repatriation of AI workloads. While OpenAI and Anthropic dominate the cloud, privacy-conscious enterprises are increasingly deploying open-source models (like Llama 3, Mistral, or DeepSeek) locally. This shift is thoroughly explored in our latest analysis of AI trust and automation in software development.

The "Harvester HCI" Advantage:

For a technically sophisticated partner like Baytech, utilizing Harvester HCI (Hyperconverged Infrastructure) enables the creation of a “Private AI Cloud.”

- Performance: Running models on local GPUs (passed through to Kubernetes nodes via Harvester) eliminates the network round trip to an external API provider.

- Cost Control: For high-volume, repetitive tasks (like internal document summarization or basic code completion), a fine-tuned local model can be significantly cheaper than paying per-token API fees to a major provider.

- Privacy: No data leaves the physical server. This is the ultimate selling point for highly regulated industries.[24]
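The cost argument is a break-even calculation: a local model has a roughly fixed monthly cost, while API spend scales with token volume. A minimal sketch with hypothetical prices (substitute your own hardware amortization and provider rates); it treats local cost as flat, which holds only until volume outgrows the hardware.

```python
def monthly_api_cost(tokens_per_month: float, usd_per_million_tokens: float) -> float:
    """Pay-per-token spend for a month."""
    return tokens_per_month / 1_000_000 * usd_per_million_tokens


def break_even_tokens(local_fixed_usd_per_month: float, usd_per_million_tokens: float) -> float:
    """Monthly token volume at which a self-hosted model costs the same as an API."""
    return local_fixed_usd_per_month / usd_per_million_tokens * 1_000_000


# Hypothetical figures: $6,000/month for an amortized GPU server plus power and ops,
# versus a blended API price of $2 per million tokens.
local_monthly = 6_000.0
api_price = 2.0
threshold = break_even_tokens(local_monthly, api_price)
print(f"Break-even: {threshold / 1e9:.1f}B tokens/month")  # Break-even: 3.0B tokens/month
print(monthly_api_cost(5e9, api_price))  # 10000.0, so local wins at 5B tokens/month
```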

Microsoft Foundry:

For those engaging with the cloud, Microsoft Foundry provides a unified platform. It bridges the gap between “model builders” and “app developers,” integrating directly with VS Code. It allows developers to deploy models from a catalog (including OpenAI and Meta models) and test agents within their native environment, streamlining the loop between “coding an agent” and “deploying it to Azure”.[25]

Part 4: Vertical Transformations — Sector-Specific Impacts

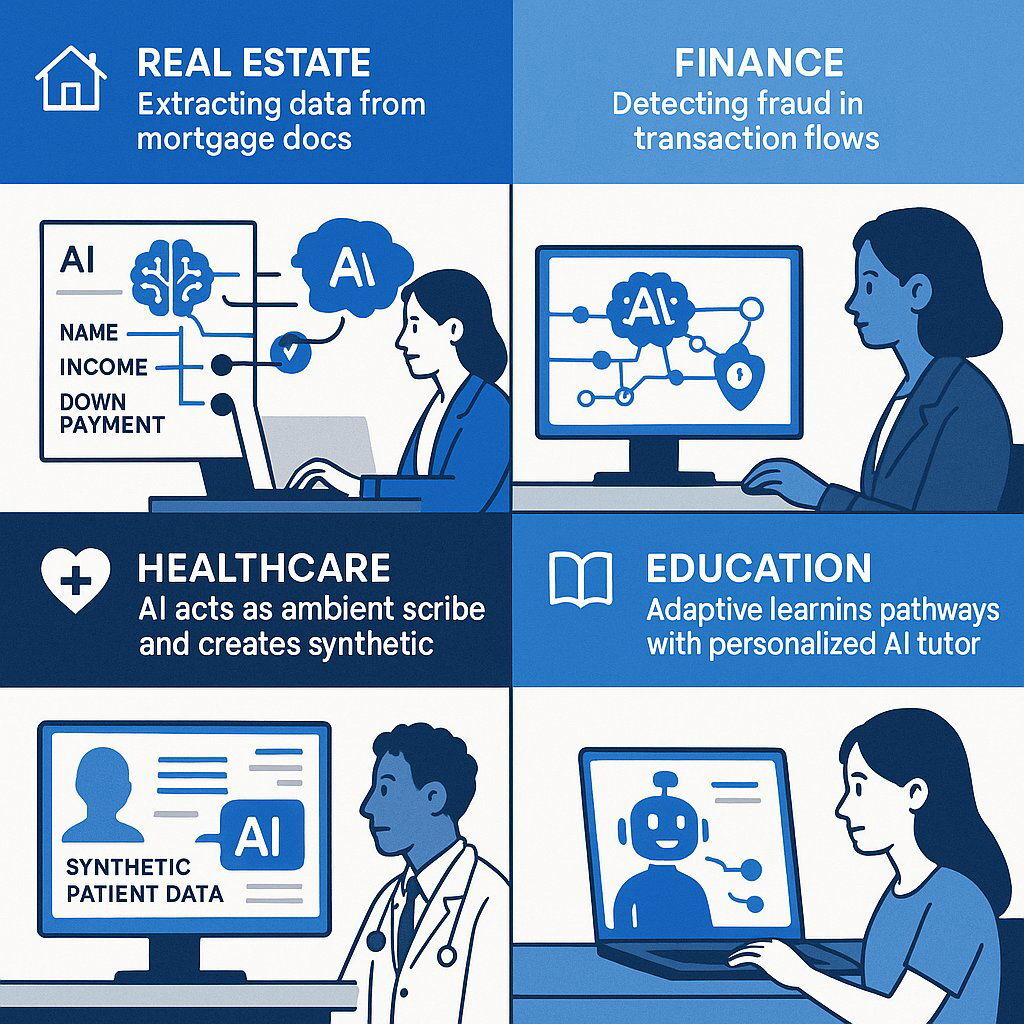

The “Ideal Reader” for this report spans several high-value industries. The impact of GenAI is not generic; it is highly specific to the operational bottlenecks of each vertical. For a deeper view on how AI is transforming individual sectors, see our article on AI in the future of real estate brokerage.

1. Real Estate and Mortgage (PropTech)

The real estate sector, historically slow to adopt tech, is leapfrogging with GenAI. The focus here is on Speed to Close.

- Mortgage Velocity: In mortgage lending, time is the enemy of the deal. GenAI is automating the most friction-heavy parts of the process: underwriting and document processing. AI extracts and verifies data from income statements, tax returns, and credit reports, reducing manual intervention and speeding up loan approvals. This directly addresses the “expensive and inefficient elements of the loan lifecycle”.[26]

- Predictive Default Modeling: GenAI analyzes borrower behavior patterns to predict default risks earlier and with greater nuance than traditional FICO scores. It can even automate personalized collection communications to reduce delinquency rates, moving from “harassment” to “financial counseling”.[28]

- Lease Abstraction: For commercial real estate, parsing 100-page lease agreements is tedious. GenAI agents now extract key dates, clauses, and financial obligations instantly, feeding them into property management systems. This transforms unstructured PDF data into structured SQL data usable for analytics.[29]
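The last step of lease abstraction, turning extracted terms into queryable rows, might look like this. A sketch assuming a typed schema and SQLite from Python's standard library; `extract_lease_terms` is a hypothetical placeholder for the model call, and the field list is illustrative rather than a standard lease-abstract template.

```python
import sqlite3
from dataclasses import dataclass
from datetime import date


@dataclass
class LeaseAbstract:
    tenant: str
    commencement: date
    expiration: date
    monthly_rent_usd: float
    renewal_option: bool


def extract_lease_terms(lease_text: str) -> LeaseAbstract:
    """Hypothetical stand-in for the GenAI extraction step.

    A real pipeline would prompt a model to return these fields as JSON and
    validate them; the values here are hard-coded for illustration.
    """
    return LeaseAbstract("Acme Retail LLC", date(2025, 3, 1), date(2030, 2, 28), 18_500.0, True)


def store(conn: sqlite3.Connection, lease: LeaseAbstract) -> None:
    # A basic sanity check catches one class of extraction error before it reaches analytics.
    if lease.expiration <= lease.commencement:
        raise ValueError("expiration must follow commencement")
    conn.execute(
        "INSERT INTO leases VALUES (?, ?, ?, ?, ?)",
        (lease.tenant, lease.commencement.isoformat(), lease.expiration.isoformat(),
         lease.monthly_rent_usd, int(lease.renewal_option)),
    )


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE leases (tenant TEXT, commencement TEXT, expiration TEXT, "
    "monthly_rent_usd REAL, renewal_option INTEGER)"
)
store(conn, extract_lease_terms("<full lease text>"))
# ISO-8601 date strings sort chronologically, so plain string comparison works.
print(conn.execute("SELECT tenant, expiration FROM leases WHERE expiration < '2031-01-01'").fetchall())
```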

2. Fintech and Finance

Trust and speed are the currency of Fintech. GenAI is optimizing both, specifically in the realm of Compliance and Legacy Modernization. You can get additional insights into strategy and modernization for tech executives in our guide on how CTOs evolve from cost center managers to value creators.

- Compliance as Code: Regulatory compliance (BSA/AML) is being automated. GenAI acts as a “virtual policy expert,” drafting Suspicious Activity Reports (SARs) and ensuring that new software features comply with changing regulations before they are deployed. It can generate reports by synthesizing information from multiple sources, identifying patterns indicative of compliance risks.[30]

- Legacy Code Migration: Many banks still run on decades-old mainframes. GenAI is accelerating the translation of this legacy COBOL code into modern, secure languages. This reduces operational risk and addresses the “talent gap” of retiring mainframe engineers. Using AI to document and explain the logic of legacy systems significantly lowers the risk of migration.[32]

- Fraud Detection: GenAI analyzes transaction datasets in real time to identify anomalies that standard rule-based systems miss. It acts as a constantly evolving immune system for the financial network.[33]
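Rule-based systems flag fixed thresholds; statistical and AI-based systems flag what is unusual for a given customer. The sketch below is a deliberately simple, non-generative baseline (a median-based modified z-score over one account's transaction amounts), not a GenAI model; the sample data is hypothetical and 3.5 is the conventional cutoff for this score.

```python
from statistics import median


def robust_anomalies(amounts: list[float], threshold: float = 3.5) -> list[int]:
    """Return indexes of transactions whose modified z-score exceeds `threshold`.

    Uses the median and the median absolute deviation (MAD), which, unlike the
    mean and standard deviation, are not dragged around by the outliers themselves.
    """
    med = median(amounts)
    mad = median(abs(a - med) for a in amounts)
    if mad == 0:
        return []  # no spread to measure against
    return [i for i, a in enumerate(amounts)
            if 0.6745 * abs(a - med) / mad > threshold]


history = [42.0, 38.5, 51.0, 44.2, 39.9, 47.3, 2_450.0, 41.1]
print(robust_anomalies(history))  # [6]
```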

3. Healthcare and MedTech

The focus here is on Interoperability and Clinician Burnout.

- Interoperability: Healthcare data is notoriously fragmented (HL7, FHIR, custom formats). GenAI is solving the “data silo” problem by mapping disparate data standards automatically. It helps clean and normalize patient data for better analytics, a crucial step for population health management.[34]

- Clinical Documentation: Ambient AI scribes listen to doctor-patient consultations and generate structured clinical notes, saving clinicians hours of paperwork daily. Restoring this “joy of medicine” is a massive selling point and a key driver of adoption.[35]

- Synthetic Data: To test software without violating HIPAA, GenAI generates realistic “synthetic patients,” datasets that statistically resemble real patient populations but contain no PII (Personally Identifiable Information). This allows developers to test edge cases (e.g., a rare disease progression) without risking patient privacy.[34]
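Whether a model proposes the mapping or an engineer writes it, the interoperability target is concrete. Here is a minimal sketch of mapping an HL7 v2 `PID` segment to a FHIR `Patient` resource, covering only four fields (PID-3, PID-5, PID-7, PID-8). It assumes the default `|` and `^` delimiters with no escape sequences or repetitions, and it uses a fictional patient; a production interface engine handles far more.

```python
import json

# HL7 v2 administrative sex codes mapped to FHIR Patient.gender values.
SEX_TO_GENDER = {"M": "male", "F": "female", "O": "other", "U": "unknown"}


def pid_to_fhir_patient(pid_segment: str) -> dict:
    """Map a few fields of an HL7 v2 PID segment to a minimal FHIR Patient resource."""
    fields = pid_segment.split("|")
    if fields[0] != "PID":
        raise ValueError("not a PID segment")
    mrn = fields[3].split("^")[0]                      # PID-3: patient identifier
    family, given = (fields[5].split("^") + [""])[:2]  # PID-5: family^given
    dob = fields[7]                                    # PID-7: YYYYMMDD
    return {
        "resourceType": "Patient",
        "identifier": [{"value": mrn}],
        "name": [{"family": family, "given": [given] if given else []}],
        "birthDate": f"{dob[:4]}-{dob[4:6]}-{dob[6:8]}",
        "gender": SEX_TO_GENDER.get(fields[8], "unknown"),  # PID-8
    }


segment = "PID|1||MRN12345^^^HOSP^MR||Doe^Jane||19800214|F"
print(json.dumps(pid_to_fhir_patient(segment), indent=2))
```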

4. Education and LMS (EdTech)

The “one-size-fits-all” model is dead. The new model is Adaptive Learning, at the heart of the latest software product development services.

- Dynamic Content Generation: Learning Management Systems (LMS) are shifting from static courses to dynamic pathways. If a student struggles with a concept (e.g., algebra), the AI generates new explanations, quizzes, and examples in real time, tailored to that student’s learning style. It is no longer a static library of videos; it is an interactive tutor.[36]

- AI Tutors & Mentors: 24/7 virtual tutors provide Socratic guidance, answering student queries not by giving the answer, but by leading them to it. This democratization of private tutoring is a major equity driver. Leading platforms like Duolingo and Coursera are already leveraging this, but the tech is now accessible to smaller LMS providers via APIs.[37]

- Content Summarization: For higher education, AI tools summarize vast learning materials into flashcards and study guides, helping students focus on comprehension rather than rote memorization.[37]

Part 5: The Dark Side — Security, Governance, and Legal Risks

From an industry analyst’s perspective, it is essential to address the massive risks accompanying this shift. 2025 has revealed that AI-generated code is not inherently secure. In fact, without proper governance, it expands the attack surface, a critical finding discussed in our recent deep dive on AI-driven software security strategies.

The "Insecure Code" Epidemic

A 2025 report by Veracode analyzed code generated by over 100 Large Language Models and found that nearly half of the AI-generated code samples (45%) contained security vulnerabilities; only 55% were secure.[38]

- Lack of Context: AI models often generate code that is functionally correct but insecure because they lack understanding of the broader system architecture and security posture. They might suggest a database query that is vulnerable to SQL injection because they don’t “know” the sanitation libraries used elsewhere in the project.

- The “Sleepy Reviewer” Syndrome: As the volume of code increases, human reviewers experience fatigue. They may glance at AI-generated code, see that it “looks” right (syntax is perfect), and approve it without catching subtle logic flaws or security gaps. This “rubber stamping” is a major vector for bugs.[39]

- Dependency Risks: AI often hallucinates dependencies, suggesting libraries that don’t exist or, worse, names that attackers have registered as malicious look-alike packages (a variant of “typosquatting”).
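The SQL injection case above is easy to reproduce. Both functions below return the right row for ordinary input, which is exactly why the unsafe one can slip past a fatigued reviewer. A minimal sketch using Python's built-in `sqlite3` with a hypothetical `users` table:

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, role TEXT)")
conn.executemany("INSERT INTO users VALUES (?, ?)",
                 [("alice", "admin"), ("bob", "viewer")])

user_input = "nobody' OR '1'='1"


def find_user_unsafe(name: str) -> list[tuple]:
    # Works for normal input, but the string is spliced into the SQL text,
    # so quotes inside `name` change the query itself (SQL injection).
    return conn.execute(f"SELECT name, role FROM users WHERE name = '{name}'").fetchall()


def find_user_safe(name: str) -> list[tuple]:
    # Placeholder binding: the driver passes `name` as data, never as SQL.
    return conn.execute("SELECT name, role FROM users WHERE name = ?", (name,)).fetchall()


print(find_user_unsafe(user_input))  # leaks every row
print(find_user_safe(user_input))    # []
```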

Legal and IP Minefields

- Copyright Infringement: There is a persistent risk that GenAI models might reproduce copyrighted code from their training data. Organizations face potential indemnification issues if their software is found to contain proprietary code from a competitor.[40]

- Data Leakage: The “Samsung Incident” of 2023 (where employees pasted sensitive code into ChatGPT) is still the cautionary tale. In 2025, strict governance is required to ensure that proprietary algorithms or customer PII are not sent to public model training sets.

- Regulatory Bias: In Fintech and Hiring, AI models can inadvertently perpetuate bias. If a mortgage model is trained on historical data that contains bias, the AI will scale that discrimination. “Algorithmic Auditing” is now a legal requirement in a growing number of jurisdictions.[41]
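One common starting point for an algorithmic audit is the adverse impact ratio behind the US “four-fifths rule” of thumb from employment-selection guidelines: each group's approval rate divided by the most-favored group's rate, with values under 0.8 warranting review. A minimal sketch with hypothetical counts; it is a screening heuristic, not a full fairness audit or a legal test for any specific jurisdiction.

```python
def adverse_impact_ratios(approvals: dict[str, tuple[int, int]]) -> dict[str, float]:
    """Each group's selection rate divided by the highest group's selection rate.

    approvals maps group -> (approved, applicants).
    """
    rates = {g: approved / total for g, (approved, total) in approvals.items()}
    best = max(rates.values())
    return {g: rate / best for g, rate in rates.items()}


# Hypothetical model decisions on a held-out audit set.
decisions = {"group_a": (480, 800), "group_b": (270, 600)}
for group, ratio in adverse_impact_ratios(decisions).items():
    flag = "REVIEW" if ratio < 0.8 else "ok"  # four-fifths rule of thumb
    print(f"{group}: {ratio:.2f} {flag}")
```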

Table 2: Risk Mitigation Strategies for 2025

Part 6: Strategic Implementation — The "Buy vs. Build" Decision

For executives reading this report, the question is how to proceed. The “Buy vs. Build” dichotomy has evolved into “Integrate vs. Fine-tune.” To make informed technology investments, explore our guide on software scoping for successful projects.

The "Tailored Tech Advantage"

Off-the-shelf AI tools are powerful, but they are generic. For a company like Baytech, and for our clients, the value lies in Tailored Tech Advantage.

- Commodity AI: Use standard tools (Copilot, ChatGPT Enterprise) for commodity tasks: email, basic coding, meeting summaries. Do not build your own “ChatGPT.”

- Differentiating AI: Build custom solutions for core business IP. This involves:

  - RAG Architectures: Connecting an LLM to your specific company knowledge base (SharePoint, SQL databases) so the AI “knows” your business.

  - Fine-Tuning: Taking a base model and training it on your specific industry data (e.g., a mortgage lender fine-tuning a model on 10 years of their specific underwriting decisions).

  - Agentic Workflows: Building chains of AI agents that can perform multi-step tasks (e.g., “Analyze this lease, extract the dates, update the database, and email the property manager”).
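At its core, the retrieval step of a RAG architecture ranks stored text chunks by vector similarity to the question and feeds the best matches to the model. A minimal in-memory sketch, assuming embeddings already exist (the three-dimensional vectors here are toy values); with pgvector, the same ranking would be a query ordered by the `<=>` cosine-distance operator, e.g. `ORDER BY embedding <=> $1 LIMIT 2`.

```python
import math


def cosine_distance(a: list[float], b: list[float]) -> float:
    """1 - cosine similarity, the measure pgvector's <=> operator computes."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norm


def retrieve(query_vec: list[float], chunks: dict[str, list[float]], k: int = 2) -> list[str]:
    """Return the k knowledge-base chunks closest to the query embedding."""
    return sorted(chunks, key=lambda text: cosine_distance(query_vec, chunks[text]))[:k]


# Toy "embeddings"; real ones come from an embedding model and have
# hundreds or thousands of dimensions.
knowledge_base = {
    "Underwriting requires two years of tax returns.": [0.9, 0.1, 0.0],
    "Office Wi-Fi password rotates monthly.": [0.0, 0.2, 0.9],
    "Self-employed borrowers need a profit-and-loss statement.": [0.8, 0.3, 0.1],
}
query = [0.85, 0.2, 0.05]  # stands in for "What does a self-employed applicant need?"
context = retrieve(query, knowledge_base)
prompt = "Answer using only this context:\n" + "\n".join(context)
print(prompt)
```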

The Agile Adaptation

GenAI accelerates the Agile loop. Sprints that used to be two weeks might compress to one week because coding and testing are faster. However, “Refinement” and “Review” meetings become more critical. The bottleneck moves from doing the work to deciding what work to do. Enhanced product delivery often depends on the partnership model adopted—explore our partnership approach for maximizing these benefits.

- Advice for CTOs: Invest in your “Review Layer.” You need fewer junior coders and more senior architects/code reviewers. The ratio of “Doers” to “Checkers” is shifting.

Conclusion: The Era of the AI-Augmented Enterprise

The state of Generative AI in the enterprise in 2025 is defined by a transition from novelty to necessity. The productivity gains—double-digit percentages across the board—are too significant to ignore. However, realizing these gains requires more than just a software subscription.

It requires a fundamental re-architecting of the SDLC, a commitment to modern infrastructure (Kubernetes, Cloud-Native, Hybrid Cloud), and a rigorous approach to security governance. The companies that win in this era will be those that treat AI not as a magic wand, but as a powerful, volatile engine that requires expert engineering to harness.

For leaders in Fintech, Real Estate, Healthcare, and Tech, the path forward is clear: Move from experimentation to integration. Focus on high-value, custom implementations that leverage your proprietary data. And above all, keep the human in the loop—elevating your teams from “coders” to “system architects.”

At Baytech Consulting, we see this future every day. Through Rapid Agile Deployment and a mastery of the modern AI stack—from Azure DevOps to Kubernetes—we help enterprises navigate this shift, ensuring that the “AI Promise” becomes an “Operational Reality.”

Frequently Asked Question

Q: Enterprises report double‑digit productivity gains (around 15% or more) from integrating generative AI across the SDLC. Is this realistic for my team, and where will we see it first?

A: Yes, gains of 15% to 50% are realistic, but they are not uniform. You will not see a flat 15% speed boost across every minute of the day. Instead, you will see massive acceleration in specific “toil-heavy” areas:

- Boilerplate Coding: 40-50% reduction in time.

- Unit Testing: 50-60% reduction in time (AI writes the tests).

- Documentation: 50%+ reduction in time.

However, you may see a slowdown in code review initially, as your senior engineers adjust to reviewing AI-generated code. The net result is a significant increase in velocity, but it requires upskilling your team to manage AI outputs effectively. Expect the “J-Curve” effect: a slight dip in productivity during the first month of adoption/training, followed by a sharp rise as the team adapts to the “TuringBot” workflow.

Further Reading

- https://www.mckinsey.com/capabilities/mckinsey-digital/our-insights/the-economic-potential-of-generative-ai-the-next-productivity-frontier

- https://www.forrester.com/report/the-state-of-generative-ai-in-the-enterprise-2025/RES180501

- Gartner Hype Cycle for Artificial Intelligence, 2025

About Baytech

At Baytech Consulting, we specialize in guiding businesses through this process, helping you build scalable, efficient, and high-performing software that evolves with your needs. Our MVP-first approach helps our clients minimize upfront costs and maximize ROI. Ready to take the next step in your software development journey? Contact us today to learn how we can help you achieve your goals with a phased development approach.

About the Author

Bryan Reynolds is an accomplished technology executive with more than 25 years of experience leading innovation in the software industry. As the CEO and founder of Baytech Consulting, he has built a reputation for delivering custom software solutions that help businesses streamline operations, enhance customer experiences, and drive growth.

Bryan’s expertise spans custom software development, cloud infrastructure, artificial intelligence, and strategic business consulting, making him a trusted advisor and thought leader across a wide range of industries.