AI Transformation in Law: Executive Playbook for Legal Firms

October 21, 2025 / Bryan Reynolds

The AI Gavel: An Executive's Guide to AI Transformation in the Legal Industry

The legal profession is standing at a technological inflection point. For years, artificial intelligence was a topic reserved for forward-thinking seminars and speculative articles. Today, it is a line item in board meeting agendas and a decisive factor in competitive positioning. The shift is no longer hypothetical; it is happening now, fundamentally altering the business of law. As Raghu Ramanathan, President of Legal Professionals at Thomson Reuters, aptly stated, "we're entering a brave new world in the legal industry... that will redefine conventional notions of how law firms operate, rearranging the ranks of industry leaders along the way".

For legal executives, this new world presents a profound challenge. On one side lies the immense promise of AI-driven efficiency, enhanced service delivery, and unprecedented growth. On the other, significant ethical, security, and operational risks loom large. A performance gap is rapidly widening between firms that have a formal, visible AI strategy and those that do not, creating a new competitive chasm that will define the industry's leaders and laggards for the next decade.

This article is designed to cut through the hype and provide clarity. In keeping with the "They Ask, You Answer" philosophy, it directly addresses the most pressing questions on the minds of legal executives. What is the real state of AI adoption today? Where are firms finding tangible, measurable returns on their investment? What are the non-negotiable risks and ethical landmines that must be navigated? How can a firm build a smart, strategic framework for implementation? And finally, what does the future hold, and how can firms prepare for it?

What Is the Real State of AI Adoption in Law Firms Today?

The current AI landscape in the legal sector is characterized by a fascinating and revealing paradox. While headlines proclaim an AI revolution, many executives observe a more cautious, measured reality within their own firms and among their peers. The data tells a tale of two distinct trends: a groundswell of individual enthusiasm running up against the deliberate, and sometimes slow, pace of official firm-wide implementation.

A Tale of Two Trends: Individual Enthusiasm vs. Firm Caution

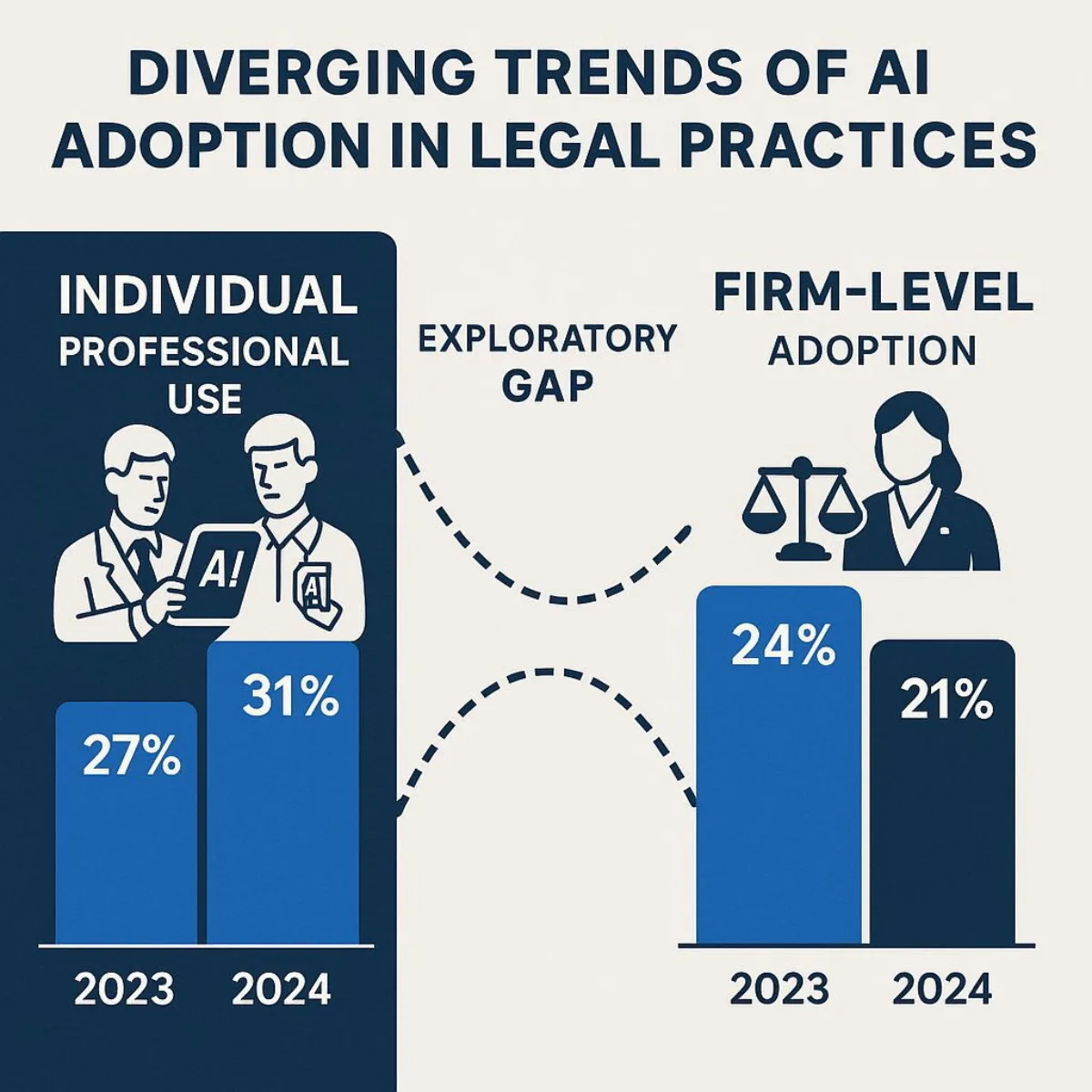

At the grassroots level, legal professionals are actively experimenting with AI. Personal use of generative AI tools for work-related tasks has seen a notable increase, rising from 27% in 2023 to 31% in 2024. This indicates a clear and growing interest among individual lawyers and paralegals in leveraging technology to enhance their personal productivity. They are exploring accessible, often free or low-cost, tools to streamline daily tasks like drafting correspondence and conducting general research.

In stark contrast, official, firm-level adoption of legal-specific AI has dipped slightly, from 24% in 2023 to 21% in 2024. This apparent decline does not signal a retreat from AI. Instead, it highlights a critical "Exploratory Gap": a transitional phase in which firms are moving from informal, ad-hoc usage to more structured, deliberate evaluation. The complexities of enterprise-level deployment (justifying ROI, ensuring data security, navigating ethical guidelines, and developing a coherent strategy) are creating a strategic bottleneck. Firms are shifting from a mindset of "let's try it" to asking, "How do we deploy this safely, ethically, and effectively at scale?"

This period of cautious exploration is where the competitive divide deepens. While employees at some firms experiment individually, their competitors are building scalable, secure, and integrated AI frameworks. The gap is stark: although 80% of legal professionals believe AI will be transformative for their business, only 22% report that their firm has a visible AI strategy in place.

The Great Divide: Firm Size and Practice Area

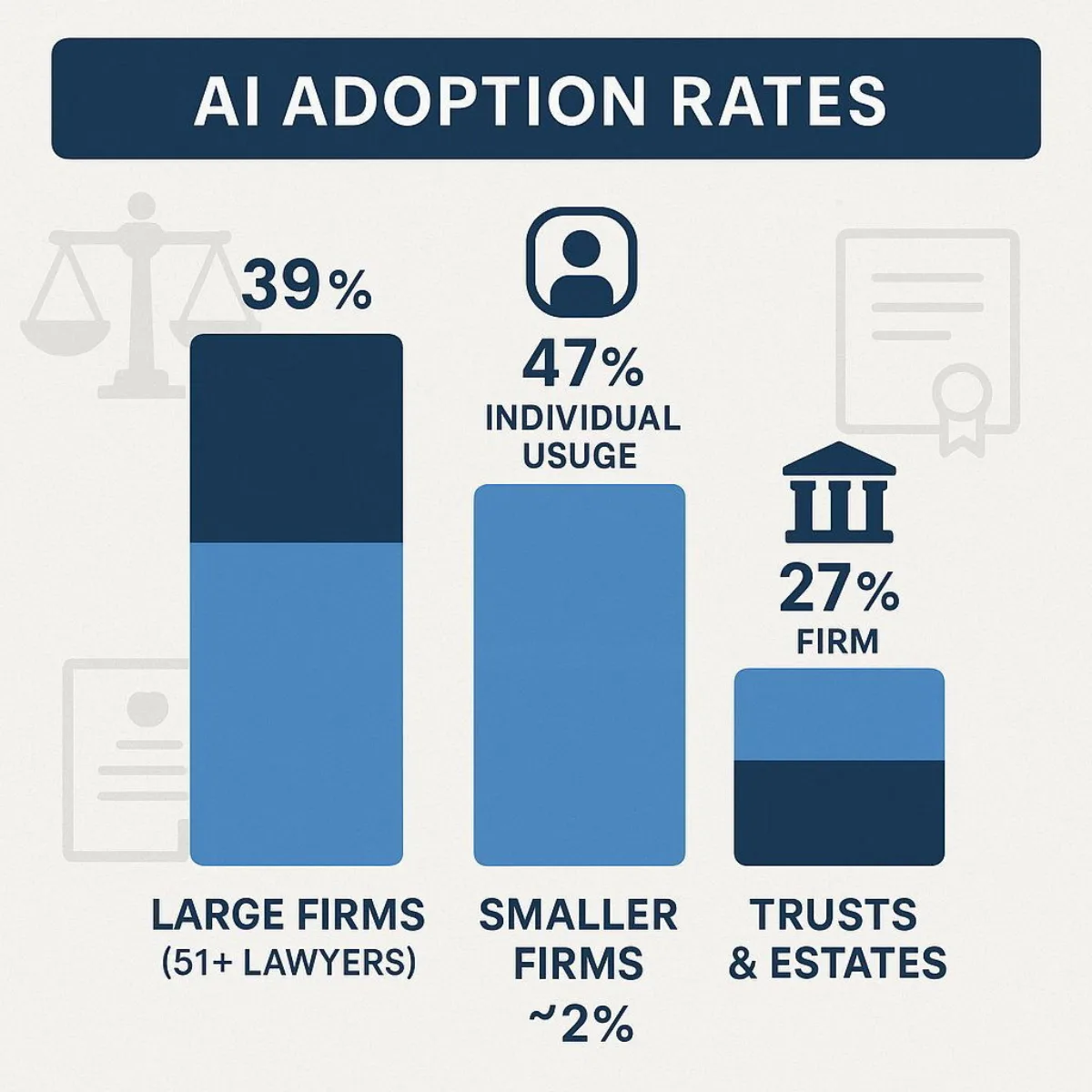

The adoption data also reveals significant disparities based on firm size and area of practice. Large firms are moving more decisively into the AI space. Firms with 51 or more lawyers report a 39% generative AI adoption rate, a figure that is nearly double the approximately 20% rate seen in firms with 50 or fewer lawyers. This technology gap is likely driven by larger firms having greater resources to invest in enterprise-grade solutions and dedicated teams to manage implementation.

Adoption rates also vary significantly across different legal practice areas, providing a market signal as to where AI delivers the most immediate and obvious value. For instance, immigration practitioners lead all other groups in personal AI usage, with 47% incorporating it into their workflows. This high rate of individual adoption is logical; immigration law often involves high-volume, repetitive tasks such as form-filling, drafting standard correspondence, and summarizing client histories—functions where current generative AI tools excel.

However, when it comes to official firm-level adoption, civil litigation firms take the lead at 27%. This likely reflects the nature of their work, which involves processing massive volumes of data for e-discovery, conducting complex legal research, and analyzing case law: areas where sophisticated, enterprise-grade AI tools can deliver a clear and substantial return on investment that justifies the significant financial outlay. In contrast, practice areas that rely more on bespoke client counseling and less on high-volume document processing, such as trusts and estates (18% firm adoption), show lower rates. These patterns point executives to the lowest-hanging fruit for AI implementation: initial pilot programs should target workflows characterized by high-volume, repeatable tasks.

| Metric | 2024 | 2023 |
|---|---|---|
| Individual Professional Use (GenAI) | 31% | 27% |
| Firm-Level Adoption (Legal-Specific AI) | 21% | 24% |
| Adoption Rate: Firms with 51+ Lawyers | 39% | N/A |
| Adoption Rate: Firms with 50 or Fewer Lawyers | ~20% | N/A |
| Firms with a Visible AI Strategy | 22% | N/A |

How Are Leading Firms Using AI to Drive Measurable ROI?

Moving beyond adoption statistics, the crucial question for any executive is whether these technologies deliver tangible value. The evidence from early adopters is compelling, showing that strategic AI implementation is not just an operational upgrade but a powerful driver of productivity, profitability, and competitive advantage. For more on how concepts such as AI, Agile, DevOps, and more directly affect business ROI, see our guide Software Development Jargon Explained: An Executive’s Guide to Agile, DevOps, CI/CD & Microservices.

The Most Common Battlegrounds: Where AI is Deployed First

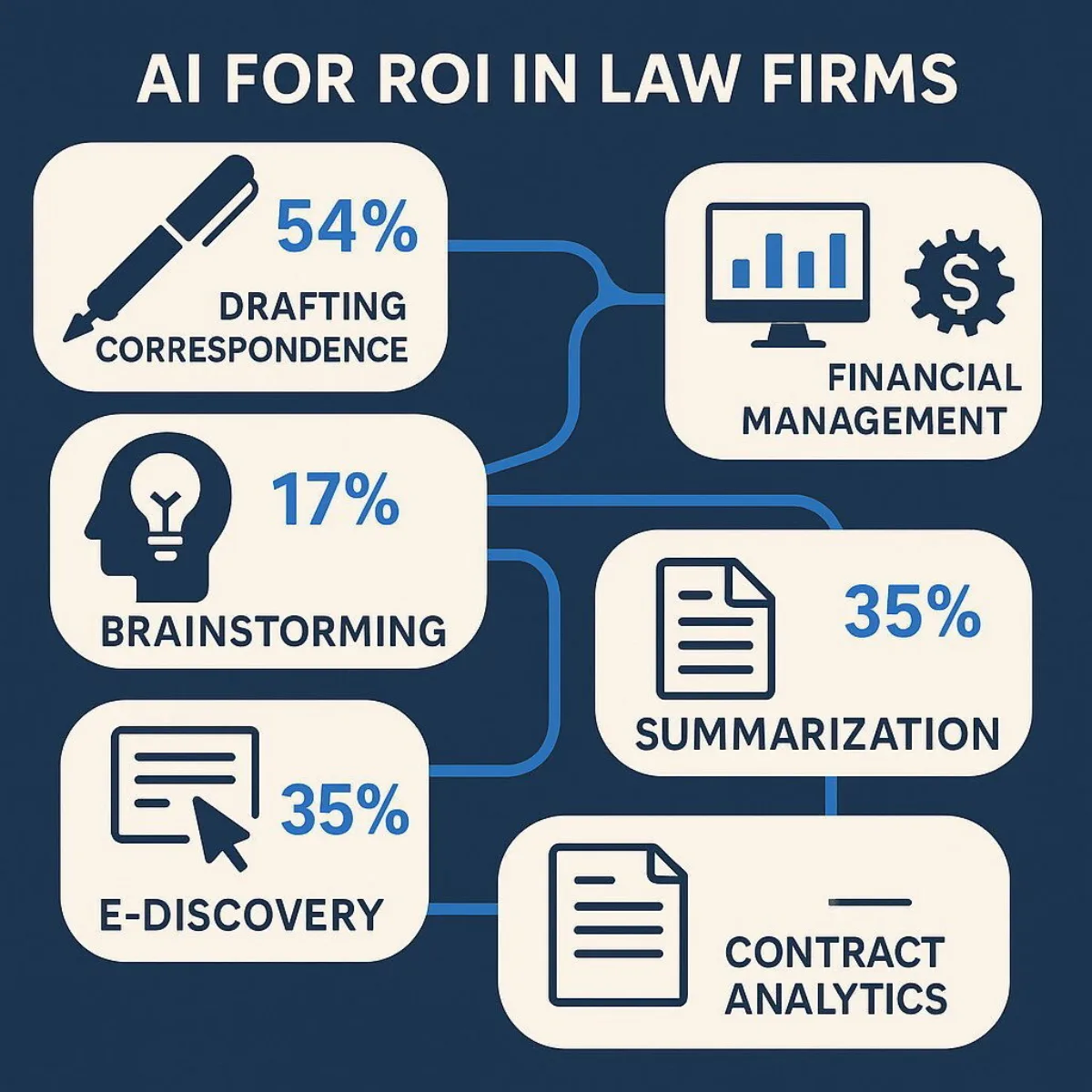

Firms that have embraced AI are applying it to a range of tasks that form the bedrock of legal work. Among legal professionals who use generative AI, the most common applications are drafting correspondence (54%), brainstorming ideas (47%), conducting general research (46%), and summarizing documents (39%). These applications target the time-consuming, often non-billable, work that can bog down legal teams.

Beyond these foundational uses, leading firms are deploying more advanced AI for high-stakes functions such as:

- E-Discovery: AI-powered software can sift through millions of documents and electronically stored information (ESI) at great speed, identifying relevant material far more efficiently than manual review alone. For strategies on scaling high-volume systems securely, refer to Scaling SaaS: Executive Strategies for 1 Million+ Users.

- Contract Analysis: AI tools can review thousands of contracts in minutes, identifying risks, inconsistencies, and deviations from standard language, a process that would traditionally take weeks of associate time.

- Litigation Analytics: Predictive analytics platforms are being used to analyze vast datasets of past cases, judicial rulings, and opposing counsel behavior to inform case strategy, predict outcomes, and allocate resources more effectively.

- Financial Management: A growing number of firms are using AI to analyze their own financial data, gaining insights into profitability, setting more competitive pricing strategies, and optimizing billing processes.
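The deviation check described under Contract Analysis can be illustrated with a deliberately simple sketch. It assumes the firm keeps a playbook of standard clause wording keyed by clause name (the `STANDARD_CLAUSES` dictionary and its contents are hypothetical) and uses Python's standard-library `difflib.SequenceMatcher` as a stand-in for the far more sophisticated models that commercial contract-review tools use. Clauses that are missing, or whose similarity to the standard falls below a chosen threshold, are flagged for a lawyer to review.

```python
from difflib import SequenceMatcher

# Hypothetical firm playbook: clause name -> approved standard wording.
STANDARD_CLAUSES = {
    "governing_law": "This Agreement shall be governed by the laws of the State of New York.",
    "confidentiality": "Each party shall keep the other party's Confidential Information strictly confidential.",
}


def flag_deviations(contract_clauses: dict[str, str], threshold: float = 0.9) -> list[tuple[str, float]]:
    """Return (clause_name, similarity) for clauses that are missing or drift from the standard text."""
    flagged = []
    for name, standard in STANDARD_CLAUSES.items():
        text = contract_clauses.get(name)
        if text is None:
            # Clause absent from the contract: always flag it.
            flagged.append((name, 0.0))
            continue
        # Character-level similarity ratio between 0.0 (unrelated) and 1.0 (identical).
        score = SequenceMatcher(None, standard.lower(), text.lower()).ratio()
        if score < threshold:
            flagged.append((name, round(score, 2)))
    return flagged


if __name__ == "__main__":
    incoming = {
        "governing_law": "This Agreement is governed exclusively by Delaware law.",
        "confidentiality": STANDARD_CLAUSES["confidentiality"],
    }
    print(flag_deviations(incoming))
```

A lexical ratio like this cannot tell whether a change is legally material; it only narrows down where human review should start.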

From Theory to Tangible Returns: Real-World Case Studies

The most persuasive argument for AI is found in the quantifiable results achieved by firms on the front lines of adoption. These case studies demonstrate a clear return on investment:

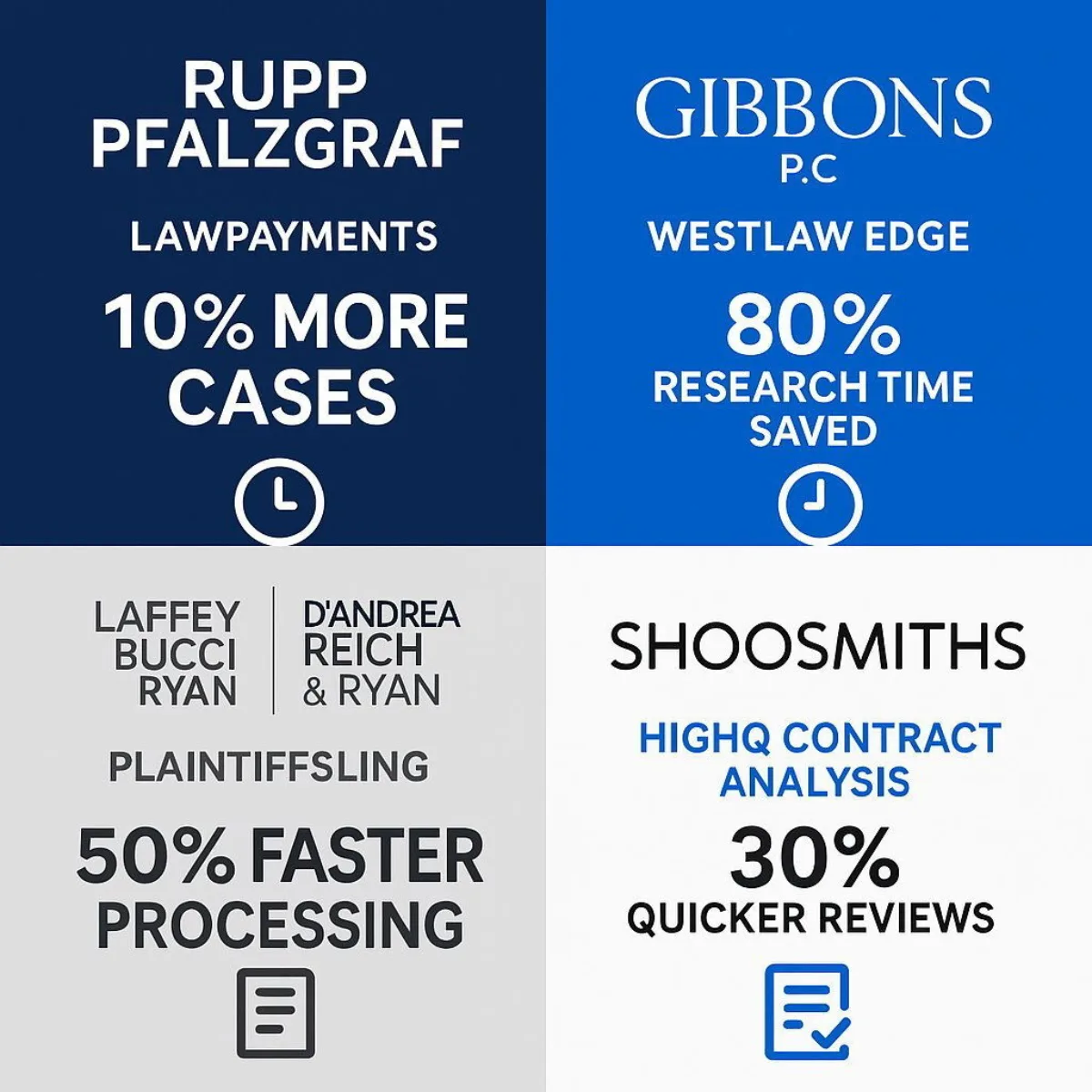

- Rupp Pfalzgraf, a forward-thinking firm, integrated Lexis+ AI into its practice and achieved an impressive 10% increase in attorney caseload capacity. The firm also reported that complex federal court motions now take a quarter of the time they previously required.

- Gibbons P.C. leveraged the same platform to "reach the second level faster" in its casework. The firm found that AI significantly accelerated its ability to identify key issues, allowing attorneys to spend less time on foundational work and more on high-level strategic analysis.

- At Laffey Bucci D'Andrea Reich & Ryan, attorney Guy D'Andrea reported saving up to 80% of the time he previously dedicated to legal research by using the AI-Assisted Research feature in Thomson Reuters Westlaw Precision.

- The law firm Shoosmiths used an AI contract review platform that analyzed complex agreements in approximately 3 minutes with 90% accuracy. For comparison, a qualified lawyer would typically take 4 hours to complete the same task with 86% accuracy.
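The Shoosmiths figures are easier to weigh once converted into a speed ratio and an expected error count. The sketch below does that arithmetic. The source does not define how accuracy was measured, so treating it as the share of issues correctly identified, and the batch size of 100 issues, are assumptions made purely for illustration.

```python
def compare_review(ai_minutes: float, ai_accuracy: float,
                   human_minutes: float, human_accuracy: float,
                   issues: int = 100) -> tuple[float, int, int]:
    """Return (speedup factor, expected AI misses, expected human misses) for a batch of issues."""
    speedup = human_minutes / ai_minutes
    # Assumption: accuracy = fraction of issues correctly identified.
    ai_misses = round(issues * (1 - ai_accuracy))
    human_misses = round(issues * (1 - human_accuracy))
    return speedup, ai_misses, human_misses


# Reported figures: ~3 minutes at 90% accuracy vs. 4 hours (240 minutes) at 86%.
speedup, ai_misses, human_misses = compare_review(3, 0.90, 240, 0.86)
print(f"{speedup:.0f}x faster; misses per 100 issues: AI {ai_misses}, lawyer {human_misses}")
```

Run as written, this prints `80x faster; misses per 100 issues: AI 10, lawyer 14`. Even the faster, more accurate reviewer still misses issues, which is why lawyer supervision of AI output remains necessary.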

The Productivity Multiplier Effect

These individual efficiency gains create a powerful "productivity multiplier effect" across a firm. The time saved is not just a cost reduction; it is a strategic asset that can be reinvested into higher-value activities. Research indicates that AI systems can save lawyers an average of four hours per week, which can translate into significant new billable time over the course of a year. Among individual legal professionals using AI, 65% report saving between one and five hours per week, while another 19% save six or more hours weekly.
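To see how a weekly saving compounds, the sketch below converts hours saved per week into annual hours and an illustrative revenue figure. The 48 working weeks, the 50% share of reclaimed time that becomes billable work, and the $300 hourly rate are hypothetical placeholders, not figures from the research cited above; a firm would substitute its own numbers.

```python
def annual_value(hours_saved_per_week: float,
                 working_weeks: int = 48,       # hypothetical placeholder
                 billable_share: float = 0.5,   # hypothetical: share of saved time that is rebilled
                 hourly_rate: float = 300.0     # hypothetical blended rate, USD
                 ) -> tuple[float, float]:
    """Return (annual hours reclaimed, estimated annual billable value)."""
    annual_hours = hours_saved_per_week * working_weeks
    billable_value = annual_hours * billable_share * hourly_rate
    return annual_hours, billable_value


hours, value = annual_value(4)  # the reported average of four hours per week
print(f"{hours:.0f} hours reclaimed per lawyer per year, roughly ${value:,.0f} if half is billed")
```

Under these assumptions, four hours a week yields 192 hours a year per lawyer; the dollar figure is only as good as the rate and rebilling assumptions fed into it.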

This reclaimed time allows lawyers to focus on the work that truly matters: deepening client relationships, engaging in complex strategic planning, and delivering insightful, expertise-driven guidance. For small and midsize firms, this effect is particularly transformative. AI enables them to increase their caseloads and scale revenue without a proportional increase in overhead, allowing them to compete more effectively against larger, better-resourced rivals. If you'd like to dig further into building predictable ROI and minimizing surprises in technology projects, consider our deep dive on How to Budget for Custom Software in 2026.

| AI Use Case | Primary Function | Reported Productivity Gain / ROI Metric |
|---|---|---|
| Legal Research | Identifying relevant case law, statutes, and precedents. | Up to 80% reduction in research time. |
| Document Review & E-Discovery | Analyzing large volumes of documents to find key information. | Can identify critical evidence missed by human reviewers; accelerates review of millions of documents. |
| Contract Analysis | Reviewing agreements for risks, inconsistencies, and key clauses. | Analysis of complex agreements in ~3 minutes vs. 4 hours for a human lawyer. |
| General Practice Management | Streamlining overall workflows, from drafting to client communication. | 10% increase in attorney caseload capacity; complex motions completed in 25% of the time. |
| Drafting & Summarization | Creating initial drafts of correspondence, memos, and briefs; summarizing documents. | 65% of users save 1-5 hours/week; 19% save 6+ hours/week. |

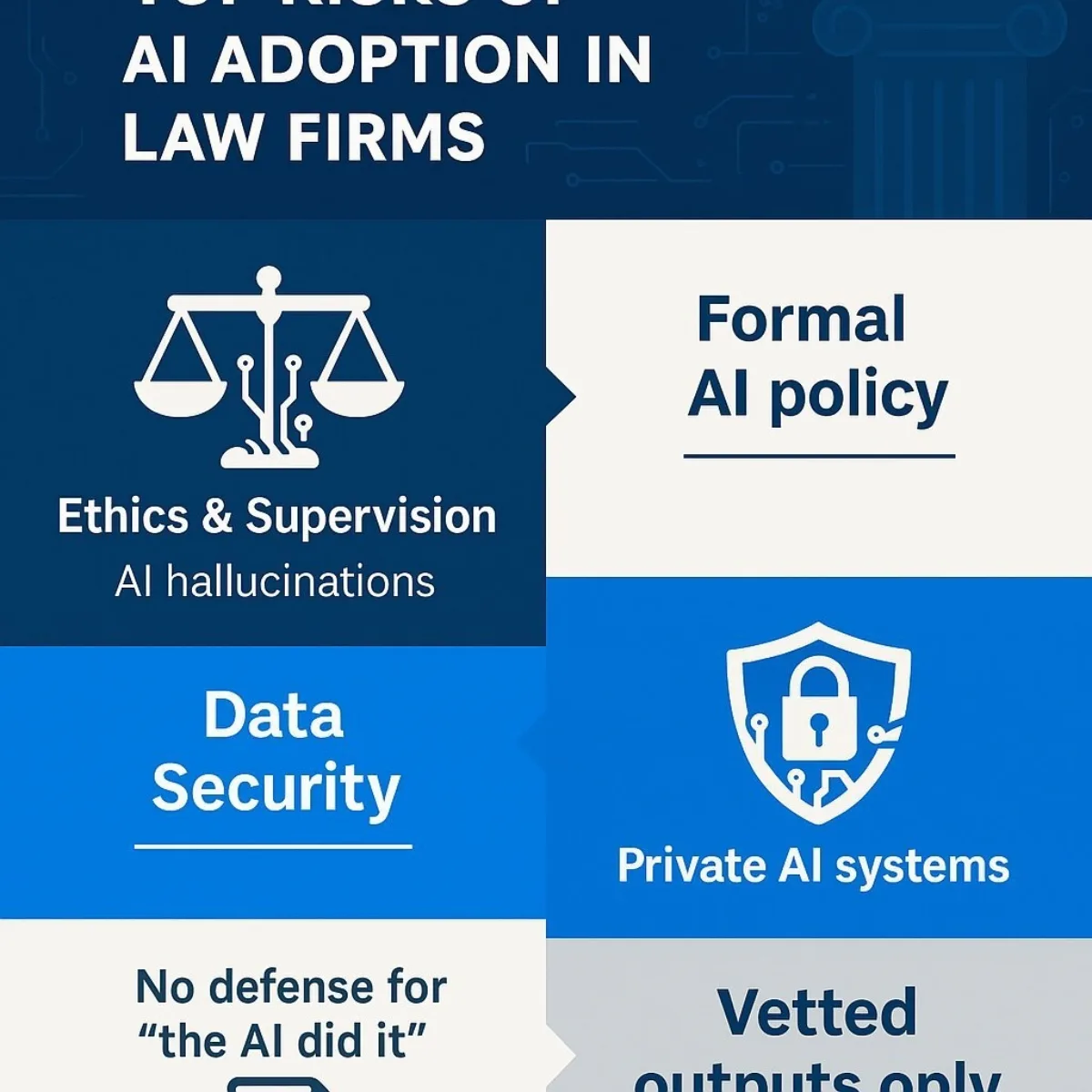

What Are the Critical Risks and Ethical Landmines We Must Navigate?

While the potential returns on AI are substantial, the risks associated with hasty or uninformed implementation are equally significant. For a profession built on principles of diligence, confidentiality, and trust, these challenges are not minor considerations; they are paramount. A successful AI strategy is as much about risk mitigation as it is about technological adoption. Executives must confront three critical areas of risk head-on: ethical compliance, data security, and output accuracy.

Risk 1: Ethical Compliance and the Duty of Supervision

The American Bar Association (ABA) has made it clear that while the tools of legal practice may change, a lawyer's core ethical obligations have not. AI is generally considered "nonlawyer assistance" under ABA Model Rules 5.1 and 5.3. This means that a supervising lawyer is ultimately and fully responsible for the work product generated by an AI system, just as they would be for work produced by a junior associate or paralegal. The defense of "the AI did it" will not stand.

Furthermore, the duty of technological competence, outlined in Comment 8 to ABA Model Rule 1.1, requires lawyers to keep abreast of "the benefits and risks associated with relevant technology," which includes AI. This duty was put into sharp relief by the infamous Mata v. Avianca case, in which a federal court in New York sanctioned attorneys for submitting a brief containing fictitious case citations generated by ChatGPT. The incident serves as a stark cautionary tale: technological ignorance is not a viable defense.
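One practical safeguard against fabricated citations like those in the Avianca case is to treat every citation in an AI-assisted draft as unverified until it matches an authoritative source. The sketch below extracts reporter citations with a regular expression and lists any that are absent from a verified set. The reporter list is intentionally partial, the `verified` set stands in for a lookup against a trusted research database (an assumption), and the citation `123 F.4th 4567` is hypothetical. A match only shows that the citation exists; a lawyer still has to read the authority and confirm it supports the proposition.

```python
import re

# Partial, illustrative list of U.S. reporters: volume, reporter abbreviation, first page.
CITATION = re.compile(
    r"\b(\d{1,4})\s+(U\.S\.|S\. Ct\.|F\.4th|F\.3d|F\.2d|F\. Supp\. 3d|F\. Supp\. 2d)\s+(\d{1,5})\b"
)


def unverified_citations(draft: str, verified: set[str]) -> list[str]:
    """Return citations found in the draft that are not in the verified set."""
    # Rebuild each match as "volume reporter page" with single spaces.
    found = [" ".join(match.groups()) for match in CITATION.finditer(draft)]
    return [cite for cite in found if cite not in verified]


draft = ("See Brown v. Board of Education, 347 U.S. 483 (1954); "
         "Doe v. Example Air, 123 F.4th 4567 (2d Cir. 2022).")  # second case is hypothetical
print(unverified_citations(draft, verified={"347 U.S. 483"}))
```

Here only the hypothetical `123 F.4th 4567` is reported, because it is missing from the verified set.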

Risk 2: Data Security and Client Confidentiality

Perhaps the most immediate and severe risk involves the use of public, consumer-grade generative AI tools. When a lawyer inputs a prompt or uploads a document into many of these public systems, that data can be used to train the AI model and may be retained or shared with third parties. This practice poses an existential threat to the duty of client confidentiality, governed by ABA Rule 1.6.

Guidance from state bars has been unequivocal. The State Bar of California, for example, explicitly warns: "A lawyer must not input any confidential information of the client into any generative AI solution that lacks adequate confidentiality and security protections." This is why many firms are bypassing public tools and partnering with specialist firms like Baytech Consulting to build secure, proprietary AI systems. A custom-built solution, trained on a firm's own private and verified data, can provide the powerful benefits of AI without compromising the non-negotiable duty of client confidentiality. Such systems keep sensitive client information within the firm's secure environment, fully firewalled from the public domain. To safeguard your own organization’s IP, especially when outsourcing development, read our practical checklist: Outsourcing Software Development? The Executive Checklist for IP Protection.

Risk 3: Accuracy, "Hallucinations," and Algorithmic Bias

The reliability of AI-generated output remains a primary concern and a significant barrier to adoption. A well-documented issue with large language models is their tendency to "hallucinate," that is, to generate confident, plausible-sounding, but entirely false information. The Mata v. Avianca case is a prime example of this phenomenon in action. This risk is compounded by technical limitations such as the "context window," the amount of text a model can process at one time. When dealing with long, complex legal documents, a limited context window can impair the AI's ability to maintain coherence and accuracy.
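A common workaround for a limited context window is to split a long document into overlapping chunks, process each one, and then reconcile the results. The sketch below is a minimal version that counts words as a rough proxy for tokens (an assumption; real models measure length with their own tokenizers). Because consecutive chunks share `overlap` words, any passage no longer than the overlap appears whole in at least one chunk. The `max_words` and `overlap` defaults are arbitrary.

```python
def chunk_document(text: str, max_words: int = 1000, overlap: int = 100) -> list[str]:
    """Split text into chunks of at most max_words words, each sharing `overlap` words with the next."""
    if not 0 <= overlap < max_words:
        raise ValueError("overlap must be non-negative and smaller than max_words")
    words = text.split()  # word count is only a rough stand-in for model tokens
    step = max_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # this chunk already reaches the end of the document
    return chunks


sample = " ".join(f"w{i}" for i in range(25))
for chunk in chunk_document(sample, max_words=10, overlap=2):
    print(chunk)
```

Each chunk can then be summarized or analyzed separately, with a final pass that merges the partial results; that merge step is where coherence across the whole document has to be checked.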

A more subtle but equally pernicious risk is that of algorithmic bias. AI models are trained on vast datasets of existing information, and if that data reflects historical or societal biases, the AI will learn and potentially amplify them. An AI tool used for screening potential clients or employees, if trained on biased data, could produce discriminatory outcomes, creating significant legal and reputational liability for the firm.
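For screening tools, one widely used first-pass bias check in U.S. employment contexts is the "four-fifths rule" from the EEOC's Uniform Guidelines on Employee Selection Procedures: a group whose selection rate is less than 80% of the highest group's rate is generally treated as showing possible adverse impact. The sketch below computes those impact ratios. The group labels and counts are hypothetical, and a flag is a signal for further review, not a legal conclusion.

```python
def adverse_impact(selected: dict[str, int], applicants: dict[str, int],
                   threshold: float = 0.8) -> dict[str, tuple[float, bool]]:
    """Map each group to (impact ratio vs. the highest-rate group, flagged?)."""
    rates = {group: selected[group] / applicants[group] for group in applicants}
    best = max(rates.values())
    if best == 0:
        raise ValueError("no group had any selections; impact ratios are undefined")
    return {group: (round(rate / best, 3), rate / best < threshold)
            for group, rate in rates.items()}


# Hypothetical outcomes from an AI screening tool.
print(adverse_impact(selected={"group_a": 50, "group_b": 24},
                     applicants={"group_a": 100, "group_b": 80}))
```

In this example group_b is selected at 30% versus 50% for group_a, an impact ratio of 0.6, so it is flagged.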

These risks underscore a critical distinction that all legal executives must understand: the difference between consumer-grade AI and professional-grade AI. Public tools are trained on the vast, unverified expanse of the open internet. In contrast, professional-grade legal AI solutions, offered by established legal tech vendors, are specifically designed to mitigate these risks. They are trained on curated, reliable legal databases, feature end-to-end encryption to protect client data, and are built to provide verifiable, citable outputs. If you want to explore effective approaches to governing the lifecycle of AI and digital assets within your organization, see AI Governance and Asset Management: The Strategic Framework for the Modern Enterprise.

The choice of technology is therefore a primary risk management decision. The question is not simply "Should we use AI?" but rather, "Which AI architecture—a vetted professional tool or a secure custom-built system—is appropriate for our firm's risk profile and ethical obligations?"

What Is a Practical Framework for Implementing AI Strategically?

Navigating the promise and peril of AI requires more than just purchasing software; it demands a deliberate, strategic approach. Firms that are realizing the greatest returns on their AI investments are those that treat it not as a standalone IT project, but as a core component of their business strategy. The data shows that organizations with a visible, formal AI strategy are 3.5 times more likely to experience a positive ROI compared to those without one. The following five-step framework provides a practical roadmap for executives to move from cautious exploration to strategic implementation. To learn how disciplined scope management underpins successful rollouts—including AI projects—review How to Prevent Scope Creep in Software Projects: Baytech Consulting’s Proven Strategy.

Step 1: Start with Problems, Not Technology

The most common mistake in technology adoption is to lead with the solution—chasing the latest AI tool without a clear understanding of the problem it is meant to solve. Successful implementation begins with an internal assessment to identify specific business pain points. Leaders should ask fundamental questions: What are the two or three most time-consuming, inefficient tasks for our legal teams? Where are the biggest bottlenecks in our workflows? Which administrative burdens are consuming the most partner time? By defining the problem first, the firm can then select the technology that best addresses its unique needs, ensuring that the investment is targeted and effective.
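If the firm's time-entry system can export entries tagged by task type (an assumption; the `(task, hours)` format below is hypothetical), the question "what are our most time-consuming tasks?" reduces to a simple aggregation that gives the assessment a factual starting point.

```python
from collections import defaultdict


def top_pain_points(time_entries: list[tuple[str, float]], n: int = 3) -> list[tuple[str, float]]:
    """Total hours per task type and return the n largest, biggest first."""
    totals: defaultdict[str, float] = defaultdict(float)
    for task, hours in time_entries:
        totals[task] += hours
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)[:n]


# Hypothetical export from a time-entry system.
entries = [
    ("document review", 6.0), ("client email", 1.5), ("document review", 4.0),
    ("legal research", 5.0), ("billing admin", 2.0), ("client email", 1.0),
]
print(top_pain_points(entries))
```

Hours alone do not capture frustration or risk, so the ranking is best treated as an input to the partner conversation rather than a substitute for it.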

Step 2: Develop a Formal AI Governance Policy

Given the significant ethical and security risks, operating without a formal AI policy is untenable. Yet, a staggering 52% of organizations report that they lack GenAI usage policies. A comprehensive governance policy is the foundation of responsible AI adoption. This document should be developed by a cross-functional team of legal, technical, and operational leaders and should clearly define:

- Permissible Use Cases: What AI tools are approved for use and for which specific tasks.

- Ethical Guidelines: Reinforce the lawyer's duty of supervision, competence, and ultimate accountability for all work product.

- Data Security Protocols: Explicitly prohibit the input of confidential client information into non-approved, public AI systems.

- Client Transparency: Establish when and how the firm will disclose its use of AI in client matters, including how efficiency gains will be reflected in billing.
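Parts of such a policy can also be enforced in software, for example by a gateway that sits in front of the firm's AI tools. The sketch below encodes permissible use cases and the confidentiality rule as data and checks each request against them. The tool names and task categories are hypothetical, and in practice deciding whether a request contains confidential information is itself the hard part; here it is simply passed in as a flag.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AIUsagePolicy:
    # Hypothetical policy data: tool name -> task types it is approved for.
    approved_tools: dict[str, frozenset[str]]
    # Tools cleared (e.g., by security review) to receive confidential client data.
    cleared_for_confidential: frozenset[str]

    def check(self, tool: str, task: str, contains_confidential: bool) -> tuple[bool, str]:
        """Return (allowed, reason) for a proposed AI request."""
        if tool not in self.approved_tools:
            return False, f"{tool} is not an approved tool"
        if task not in self.approved_tools[tool]:
            return False, f"{tool} is not approved for {task}"
        if contains_confidential and tool not in self.cleared_for_confidential:
            return False, f"{tool} is not cleared for confidential client information"
        return True, "permitted"


policy = AIUsagePolicy(
    approved_tools={
        "firm-private-assistant": frozenset({"drafting", "summarization", "research"}),
        "public-chatbot": frozenset({"brainstorming"}),
    },
    cleared_for_confidential=frozenset({"firm-private-assistant"}),
)
print(policy.check("public-chatbot", "brainstorming", contains_confidential=True))
```

Keeping the policy as data means the governance team can update approved tools without changing the checking logic.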

Step 3: Vet Technology Partners Rigorously

Not all AI is created equal. The choice of a technology partner is a critical decision that directly impacts a firm's risk exposure and potential for success. When evaluating potential AI solutions, whether off-the-shelf or custom, executives should use a stringent checklist:

- Data Source: Was the tool trained on a reliable, curated legal database, or on the open web?

- Integration: Will the tool connect seamlessly with the firm's existing practice management, billing, and document management systems? A lack of integration is a major barrier to adoption.

- Security and Confidentiality: Does the provider offer end-to-end encryption and a robust, verifiable commitment to data privacy?

- Vendor Expertise: Does the provider have a deep understanding of legal workflows and the specific challenges of the legal profession?
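Evaluation teams often turn a checklist like this into a weighted scorecard so that competing vendors can be compared consistently. The sketch below shows one way to do it. The weights, the 1-to-5 rating scale, and the rule that a low security rating disqualifies a vendor outright are illustrative assumptions for a firm's own evaluation committee to set.

```python
# Illustrative weights (sum to 1.0); each criterion mirrors a checklist item above.
WEIGHTS = {
    "data_source": 0.30,
    "integration": 0.20,
    "security": 0.35,
    "vendor_expertise": 0.15,
}


def score_vendor(ratings: dict[str, int], minimum_security: int = 3) -> float:
    """Weighted score on a 1-5 scale; 0.0 if the security rating fails the hard gate."""
    missing = WEIGHTS.keys() - ratings.keys()
    if missing:
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    if ratings["security"] < minimum_security:
        return 0.0  # confidentiality is non-negotiable, so no other strength compensates
    return round(sum(weight * ratings[name] for name, weight in WEIGHTS.items()), 2)


vendors = {
    "Vendor A": {"data_source": 5, "integration": 3, "security": 5, "vendor_expertise": 4},
    "Vendor B": {"data_source": 4, "integration": 5, "security": 2, "vendor_expertise": 5},
}
for name, ratings in vendors.items():
    print(name, score_vendor(ratings))
```

Vendor B rates highly on integration and expertise but is disqualified by its security rating, reflecting the view that the choice of technology is primarily a risk-management decision.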

Step 4: Commit to Continuous Training and Upskilling

AI is not a simple plug-and-play solution that can be deployed without adequate preparation. Its effective and ethical use requires a firm-wide commitment to continuous education. While there is a significant training gap—with 64% of professionals having received no specific GenAI training—the trend is positive. In 2025, 31% of professionals reported that training was available to them, a notable increase from just 19% in 2024. This training should cover not only the technical aspects of using the tools but also the firm's governance policies and the ethical obligations involved. As AI automates more routine tasks, the skills that will become most valuable are those that are uniquely human: adaptability, critical problem-solving, creativity, and nuanced communication. For insights on mastering post-launch success and continuous software evolution, explore Software Maintenance Mastery: Executive Guide to Post-Launch Success.

Step 5: Establish Metrics and Measure ROI

To justify the investment in AI and guide future strategy, firms must define what success looks like from the outset. This involves establishing clear metrics to measure the return on investment. These metrics can be both quantitative and qualitative, and may include:

- Time saved on specific tasks (e.g., document review, legal research).

- Increased caseload capacity per attorney.

- Faster turnaround times on client matters.

- Reductions in human error.

- Improved case outcomes or client satisfaction scores.
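Measuring these metrics usually means capturing a baseline before a pilot and comparing it with the same measures afterward. The sketch below computes the percentage change for each metric and a simple ROI figure, defined here as (annual benefit minus annual cost) divided by annual cost. All metric names and values are hypothetical, and putting a dollar value on the benefits remains the firm's own judgment call.

```python
def percent_change(baseline: dict[str, float], pilot: dict[str, float]) -> dict[str, float]:
    """Percentage change per metric from baseline to pilot (negative means a decrease)."""
    return {metric: round((pilot[metric] - baseline[metric]) / baseline[metric] * 100, 1)
            for metric in baseline}


def simple_roi(annual_benefit: float, annual_cost: float) -> float:
    """ROI as a fraction: (benefit - cost) / cost, so 0.5 means a 50% return."""
    return (annual_benefit - annual_cost) / annual_cost


# Hypothetical pilot results for one practice group.
baseline = {"research_hours_per_matter": 10.0, "matters_per_attorney": 40, "turnaround_days": 12}
pilot = {"research_hours_per_matter": 6.0, "matters_per_attorney": 44, "turnaround_days": 9}
print(percent_change(baseline, pilot))
print(f"ROI: {simple_roi(annual_benefit=90_000, annual_cost=60_000):.0%}")
```

With these made-up numbers, research time falls 40%, caseload per attorney rises 10%, turnaround improves 25%, and ROI is 50%.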

Measuring and communicating this value is also crucial for client relationships. A surprising 71% of law firm clients do not know whether their outside counsel uses GenAI, despite a majority wanting them to. By tracking and sharing data on AI-driven efficiencies, firms can have more transparent conversations about value and billing, strengthening trust and aligning incentives.

What Does the Future Hold? Preparing for the Next Wave of Agentic AI

While the legal industry is still grappling with the implications of generative AI, the next technological wave is already on the horizon: agentic AI. Understanding this evolution is critical for executives who wish to position their firms not just for today's challenges, but for the paradigm shifts of tomorrow. Learn more about real-world adoption of AI agents—including ROI, readiness, and tool selection—in our focused guide AI Agents in Software Development: Executive Guide to TCO, ROI & Top Tools for 2025.

Defining the Next Frontier: From Generative to Agentic AI

The distinction between generative and agentic AI marks a fundamental leap in capability. Generative AI, such as ChatGPT, is primarily reactive; it responds to specific human prompts to perform a task, acting as a sophisticated assistant. Agentic AI, in contrast, is proactive and autonomous. It can interpret a high-level goal, independently devise a multi-step plan to achieve it, execute the necessary tasks, and adapt its strategy based on the results, all with minimal human intervention.

One can think of it with this analogy: "Generative AI is the intellect; Agentic AI is both the mind and the hands". Where a generative tool might draft a contract clause when asked, an agentic system could be tasked with the broader objective of managing the entire contract lifecycle, from initial drafting to negotiation and final execution.

Agentic AI in Practice: A Glimpse into the Future

To make this concept concrete, consider a complex legal workflow like a private equity transaction. A legal team could assign an AI agent the high-level goal: "Support the legal team through all stages of this transaction to completion." The agent would then autonomously break this objective down into a series of sub-tasks:

- Initiation: It would begin by drafting the Non-Disclosure Agreement (NDA), using the firm's preferred templates and clauses.

- Due Diligence: As documents are uploaded to the data room, the agent would review them, identifying ambiguous language or potential risks.

- Cross-Referencing and Analysis: If it finds an inconsistency in a vendor report, it would not just flag it. It would cross-reference the issue against previously flagged concerns in the sector, consult the firm's internal deal databases to see how similar issues were handled in past transactions, and then propose three distinct clause amendments to mitigate the risk.

- Reporting: Finally, it would generate a concise internal memo for the human lawyers, summarizing the issue, providing a risk assessment, and outlining its recommended approach, complete with supporting precedent.
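The workflow above can be sketched as a task queue in which each step may add follow-up steps based on what it finds, and certain steps pause for a supervising lawyer's approval. This is a heavily simplified illustration: the plan is hard-coded here, whereas a real agentic system would generate and revise it itself (typically with a language model), and every handler function, field name, and document string below is hypothetical.

```python
from collections import deque
from dataclasses import dataclass
from typing import Callable


@dataclass
class Task:
    name: str
    run: Callable[[dict], list]   # updates shared state; returns follow-up Tasks
    needs_review: bool = False    # if True, a lawyer must approve the result before work continues


def run_agent(plan: list, state: dict, approve: Callable[[str, dict], bool]) -> list[str]:
    """Execute tasks in order, inserting follow-ups as they arise; stop if a review is rejected."""
    queue = deque(plan)
    log = []
    while queue:
        task = queue.popleft()
        follow_ups = task.run(state)
        log.append(task.name)
        if task.needs_review and not approve(task.name, state):
            log.append(f"halted: {task.name} rejected")
            break
        # Handle follow-ups next, in the order returned, before resuming the original plan.
        queue.extendleft(reversed(follow_ups))
    return log


# Hypothetical handlers for the private equity example.
def draft_nda(state):
    state["nda"] = "NDA drafted from firm template"
    return []


def propose_amendments(state):
    state["amendments"] = [f"Proposed amendment addressing: {issue}" for issue in state["issues"]]
    return []


def due_diligence(state):
    state["issues"] = [doc for doc in state["data_room"] if "ambiguous" in doc]
    # Adapt the plan: add the amendment step only if something was flagged.
    return [Task("propose_amendments", propose_amendments, needs_review=True)] if state["issues"] else []


def internal_memo(state):
    state["memo"] = f"{len(state['issues'])} issue(s) flagged; amendments attached for review"
    return []


plan = [Task("draft_nda", draft_nda), Task("due_diligence", due_diligence),
        Task("internal_memo", internal_memo)]
state = {"data_room": ["vendor report with ambiguous indemnity language", "standard office lease"]}
print(run_agent(plan, state, approve=lambda name, s: True))
```

The `approve` callback is where the supervising lawyer stays in the loop: if the proposed amendments are rejected, the run halts before the memo is produced.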

Implications for the Legal Profession

The rise of agentic AI represents a significant step toward a true "AI co-worker". These systems will have the capacity to manage complex, multi-stage workflows, further automating tasks and freeing up legal professionals to concentrate on the highest echelons of legal work: strategic judgment, creative problem-solving, nuanced client advocacy, and business development.

The path to adopting such powerful technology must be deliberate and strategic. Firms should begin now by building confidence and familiarity with current AI through low-risk applications, such as using generative tools for deposition summaries or initial contract analysis. This creates the foundational knowledge and internal processes necessary to move toward more sophisticated, integrated implementations of agentic systems in the future. The emergence of agentic AI reinforces the central theme of this technological transformation: the goal is not to replace lawyers, but to augment them. The uniquely human skills of judgment, creativity, empathy, and advocacy will not be rendered obsolete; they will become more valuable than ever before. For a closer look at how to balance flexibility, configuration, and complexity within technology environments (including AI), see The Configuration Complexity Clock: Why Too Much Flexibility Can Hurt Your Business.

Conclusion and Supporting Links

The transformation of the legal industry by artificial intelligence is no longer a distant forecast; it is the present reality. The data clearly shows a profession in transition, marked by a groundswell of individual adoption, cautious but accelerating firm-level investment, and a widening competitive gap between strategic adopters and hesitant observers. For legal executives, the message is unequivocal: AI is now a fundamental component of a modern, competitive law practice.

The path forward, however, is not a simple technology purchase. It is a strategic journey that demands a clear-eyed understanding of the tangible ROI, a rigorous approach to mitigating the profound ethical and security risks, and a commitment to building a culture of continuous learning and adaptation. The firms that will lead the legal industry in the coming decade will be those that move beyond the "exploratory phase" and begin implementing a formal, strategic AI roadmap today. They will be the ones who understand that AI's greatest promise is not the replacement of human lawyers, but the augmentation of their uniquely human expertise. The time for strategic action is now.

Supporting Articles for Further Reading:

- For a deep dive into the latest data: The Future of Professionals: Action Plan for Law Firms 2025

- For a crucial guide on ethics: Generative AI and ABA Ethics Rules

- To understand the next wave of innovation: Agentic AI Use Cases in the Legal Industry

About Baytech

At Baytech Consulting, we specialize in guiding businesses through this process, helping you build scalable, efficient, and high-performing software that evolves with your needs. Our MVP-first approach helps our clients minimize upfront costs and maximize ROI. Ready to take the next step in your software development journey? Contact us today to learn how we can help you achieve your goals with a phased development approach.

About the Author

Bryan Reynolds is an accomplished technology executive with more than 25 years of experience leading innovation in the software industry. As the CEO and founder of Baytech Consulting, he has built a reputation for delivering custom software solutions that help businesses streamline operations, enhance customer experiences, and drive growth.

Bryan’s expertise spans custom software development, cloud infrastructure, artificial intelligence, and strategic business consulting, making him a trusted advisor and thought leader across a wide range of industries.