AI Vibe Coding: Why 45% of AI-Generated Code is a Security Risk for Your Business

September 24, 2025 / Bryan Reynolds

Introduction: The 20-Minute Prototype and the Million-Dollar Breach

In a recent podcast interview, Klarna CEO Sebastian Siemiatkowski, a self-described "business person" who has never formally coded, shared a stunning anecdote. Using an AI-assisted tool, he can now describe a product concept and receive a working prototype in just 20 minutes—a process that previously consumed weeks of his engineering team's time. This is the revolutionary promise of "vibe coding," a trend where high-level ideas are translated into functional software through natural language. It represents a paradigm shift in innovation, promising unprecedented speed, democratized creation, and a direct bridge between business vision and technical execution. Leaders from Google to Meta are embracing this new reality, heralding an era of accelerated development that is reshaping competitive landscapes.

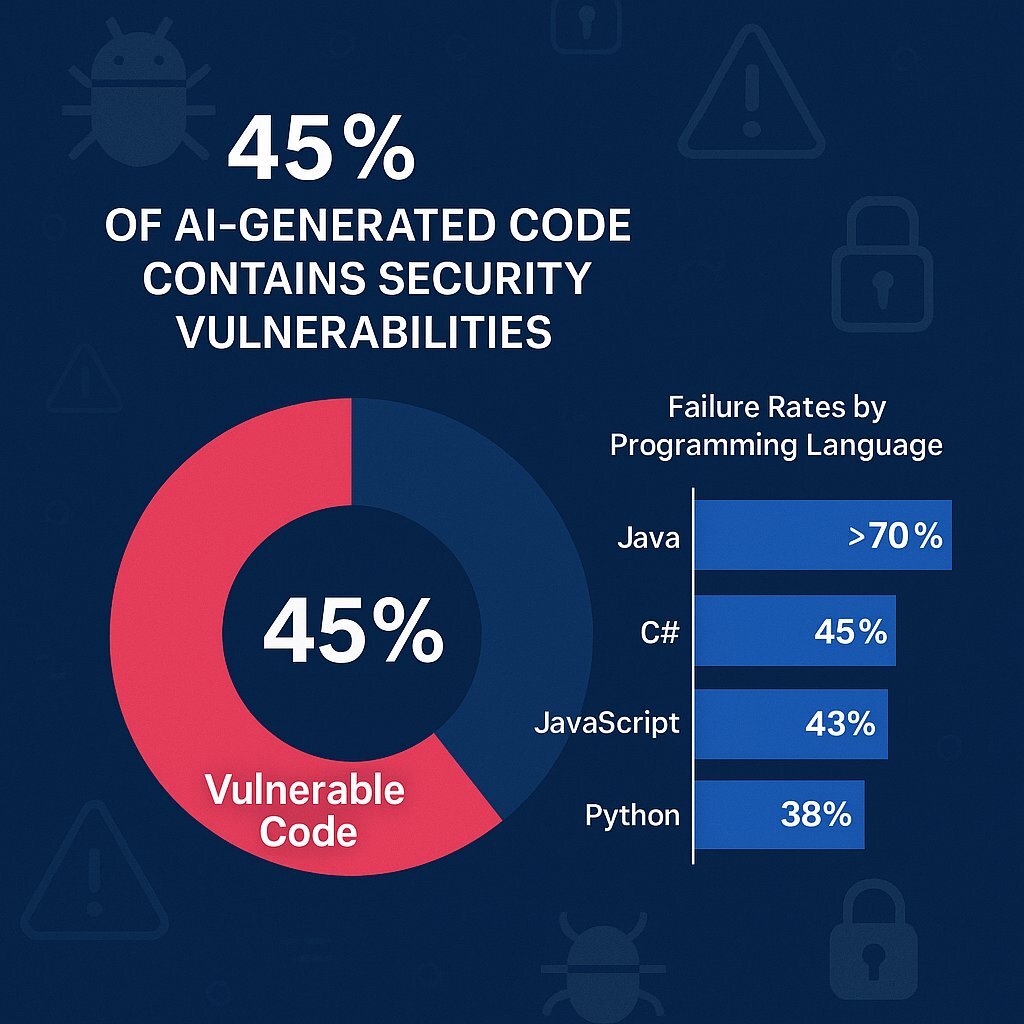

Yet beneath this exciting surface of hyper-productivity lies a dangerous and statistically significant threat. A landmark study, the Veracode 2025 GenAI Code Security Report, delivers a sobering counter-narrative. After analyzing over 100 large language models (LLMs) across 80 distinct coding tasks, the researchers found that 45% of the AI-generated code samples introduced security vulnerabilities. These are not minor bugs; they are often critical flaws, including those listed in the OWASP Top 10, the widely referenced list of the most critical web application security risks.

This creates a central paradox for every modern executive. How does an organization harness the transformative power of AI-driven speed without simultaneously embedding a security time bomb into the core of its products and infrastructure? The allure of the 20-minute prototype is undeniable, but the risk of a million-dollar breach is a catastrophic counterbalance. This report serves as a strategic briefing for business leaders navigating this new terrain. It unpacks the data behind the risks, translates technical flaws into tangible business consequences, and provides an actionable governance framework. In this complex environment, expert guidance is paramount, and firms like Baytech Consulting, which specialize in secure custom software development, are becoming essential partners in ensuring that innovation and security advance in lockstep.

Section 1: What is 'AI Vibe Coding,' and Why is it on Every Leader's Radar?

At its core, "vibe coding" describes the practice of using an AI coding assistant to generate software based on simple, natural language prompts. Unlike traditional coding, which demands precise syntax and upfront architectural planning, vibe coding encourages a more fluid and experimental approach. A user describes the "vibe" or high-level goal (for example, "create a user login page that connects to a database") and the AI handles the complex implementation. The term, popularized by figures like OpenAI co-founder Andrej Karpathy, names a trend that is fundamentally lowering the barrier to software creation, making it accessible to individuals without deep technical expertise.

The reason this has escalated from a developer curiosity to a C-suite conversation is its direct and powerful impact on core business metrics. The high-profile advocacy from industry leaders has cemented its strategic importance.

- Accelerated Innovation at Klarna: CEO Sebastian Siemiatkowski uses vibe coding not to replace his engineers, but to augment their workflow. By independently testing his "half good ideas and half bad ideas," he can present his team with a working prototype, saving them from the time-consuming process of interpreting an abstract vision. This accelerates feedback loops and allows the organization to test market hypotheses with unprecedented speed.

- Scaling Development at Google: Google CEO Sundar Pichai has also spoken about using AI tools for his own projects, noting how casually development can now be approached. More strategically, over 30% of new code written at Google is now created with AI assistance, demonstrating its integration at an enterprise scale.

These examples highlight the tangible business benefits that have captured executive attention: dramatically accelerated time-to-market, an enhanced capacity for innovation through rapid prototyping, and improved collaboration by creating a shared, intuitive language between technical and non-technical stakeholders.

However, this trend signifies more than just a new tool; it represents a profound cultural shift from "engineering-led" to "intent-led" development. Historically, the creation of software was governed by the discipline and training of engineers who understood the deep structural and security implications of their work. Now, a business leader can express an intent, and the AI handles the execution. While this democratizes creation, it also dangerously decouples it from the established practices of secure development. This can create a new form of "strategic shadow IT," where executives and product managers prototype new functionalities using AI tools, potentially embedding insecure code into a project's DNA before it ever enters a formal, security-vetted development lifecycle. The challenge for technology leaders is no longer confined to managing their own teams' code quality; it now extends to influencing and securing the C-suite's powerful new ability to create, demanding a new conversation about shared responsibility for technological risk.

Section 2: How Serious is the Security Problem? A Look at the Data.

The security problem with AI-generated code is not a matter of isolated incidents or occasional errors; it is a systemic and quantifiable issue. The Veracode report's headline statistic—that 45% of AI-generated code samples fail security tests—is the result of a rigorous and wide-ranging study. Researchers designed 80 specific code-completion tasks with known security weaknesses and prompted over 100 different LLMs to complete them, creating a robust dataset for analysis. The findings paint a clear and concerning picture of the current state of AI development.

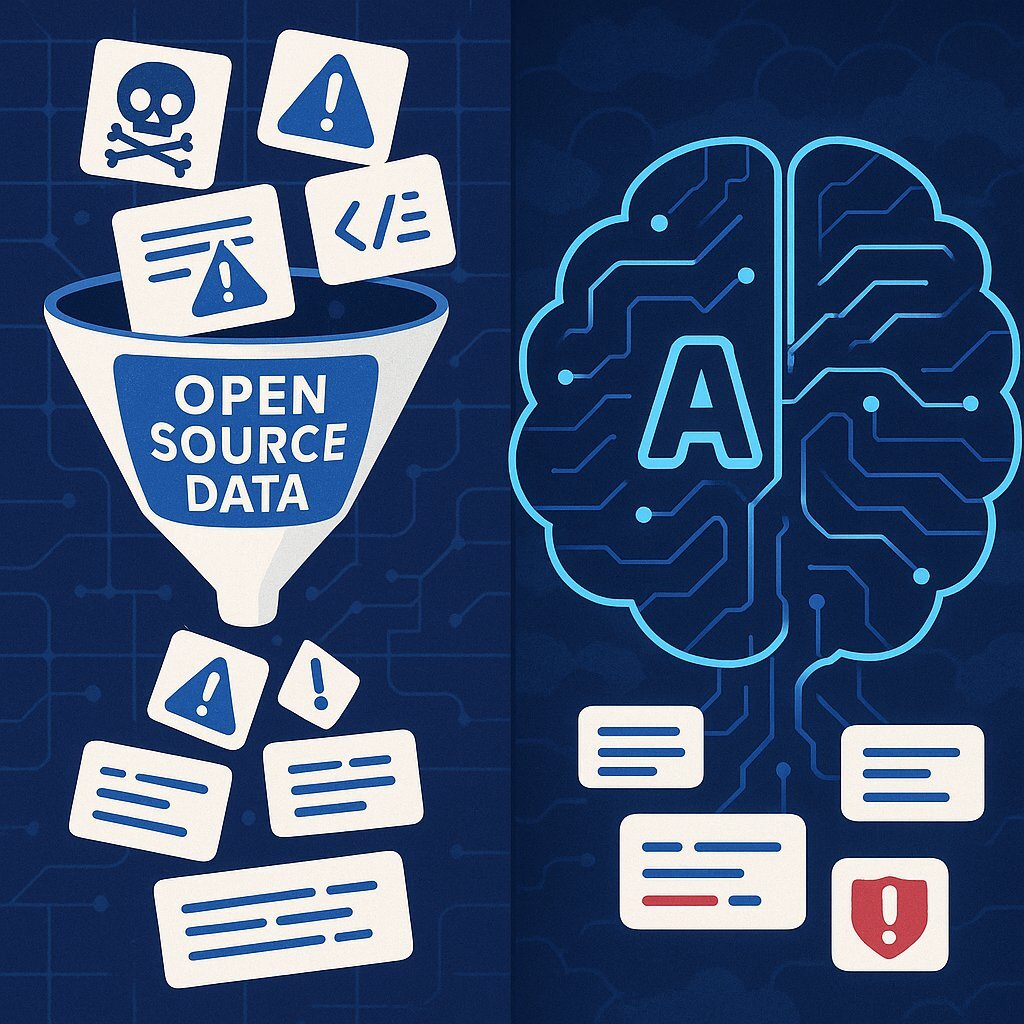

The root cause of this widespread insecurity can be described as a "Garbage In, Gospel Out" phenomenon. Today's foundational LLMs are trained on immense volumes of publicly available code, primarily from open-source repositories like GitHub. This training data is a double-edged sword. While it contains countless examples of high-quality, secure code, it is also rife with outdated libraries, inefficient algorithms, and, most critically, insecure code snippets containing known vulnerabilities. The AI, which operates on pattern matching rather than a true understanding of security principles, learns to replicate these flawed patterns because its primary objective is to produce functionally correct code that matches the patterns it has seen most frequently. When a developer asks for a database query, the AI is just as likely to reproduce a textbook SQL injection flaw as it is a secure, parameterized query, simply because the insecure version has appeared thousands of times in its training data.
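To make the database-query example concrete, here is a minimal sketch of the two patterns side by side. It uses Python's standard `sqlite3` module; the table, the user names, and the function names are hypothetical and chosen only for illustration.

```python
import sqlite3


def build_demo_db() -> sqlite3.Connection:
    """Create an in-memory database with two hypothetical users."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, email TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("alice", "alice@example.com"), ("bob", "bob@example.com")],
    )
    return conn


def find_user_insecure(conn: sqlite3.Connection, name: str) -> list:
    # Vulnerable pattern: user input is spliced directly into the SQL text,
    # so a crafted value can change the meaning of the query.
    query = f"SELECT email FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def find_user_secure(conn: sqlite3.Connection, name: str) -> list:
    # Parameterized pattern: the driver binds the value separately,
    # so it is treated as data and never parsed as SQL.
    return conn.execute(
        "SELECT email FROM users WHERE name = ?", (name,)
    ).fetchall()


conn = build_demo_db()
payload = "nobody' OR '1'='1"
print(len(find_user_insecure(conn, payload)))  # 2: every row is returned
print(len(find_user_secure(conn, payload)))    # 0: no user has that name
```

Both functions are "functionally correct" for ordinary input, which is why the insecure version is easy to accept in review if nobody tests it with hostile input.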

Perhaps the most alarming finding from the research is that this is not a problem that will solve itself with time or scale. Veracode's analysis, which spanned models of varying sizes and release dates, found that while LLMs are steadily improving at writing syntactically correct and functional code, their security performance has remained flat. This indicates a systemic issue with the current approach to training and developing these models, not a simple scaling problem that will be fixed by the next-generation LLM. Relying on future model improvements as a security strategy is therefore untenable.

This systemic flaw creates a dangerous divergence: the functional capabilities of AI are accelerating rapidly, while its security capabilities are stagnant. With AI adoption in software development skyrocketing (some reports indicate 97.5% of companies now use AI in their engineering processes), organizations are producing far more code, far faster than ever before. Since the proportion of that code containing security flaws remains consistently high at around 45%, the absolute volume of new vulnerabilities entering corporate codebases is exploding. This is not merely maintaining a risky status quo; it is actively accumulating a massive "security debt" at machine speed, which will inevitably have to be addressed later at a much higher cost in terms of remediation, breaches, and reputational damage.

The risk is not uniform across all technologies. The Veracode study provides a breakdown of failure rates by programming language, allowing executives to assess their specific risk profile based on their company's technology stack.

| Language | Security Failure Rate |
|---|---|
| Java | >70% |
| C# | 45% |
| JavaScript | 43% |
| Python | 38% |

(Source: Veracode 2025 GenAI Code Security Report)

The extremely high failure rate for Java is particularly concerning for large enterprises, where it is a foundational language for many critical back-end systems. Furthermore, the vulnerabilities being introduced are not obscure edge cases. The study found that LLMs failed to secure code against highly common and dangerous attacks like Cross-Site Scripting (CWE-80) and Log Injection (CWE-117) in 86% and 88% of cases, respectively. This data confirms that AI coding assistants, in their current state, are consistently and repeatedly introducing some of the most critical security risks into the software they generate.
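For readers who want to see what defending against these two weaknesses looks like, the following is a minimal sketch using only Python's standard library. The function names are hypothetical, and real applications would normally rely on a templating engine's auto-escaping and a structured logging setup rather than hand-rolled helpers.

```python
import html


def render_greeting(display_name: str) -> str:
    """Escape user input before placing it in HTML (addresses CWE-80)."""
    return f"<p>Hello, {html.escape(display_name)}!</p>"


def sanitize_for_log(value: str) -> str:
    """Neutralize CR/LF so input cannot forge extra log lines (addresses CWE-117)."""
    return value.replace("\r", "\\r").replace("\n", "\\n")


# A script tag is rendered as inert text instead of executing in the browser.
print(render_greeting("<script>alert(1)</script>"))

# An embedded newline stays on one log line instead of faking a second entry.
print("login failed for user: " + sanitize_for_log("bob\nINFO admin logged in"))
```

The point of the sketch is how small the missing step usually is: the vulnerable version of each function simply omits one call.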

Section 3: What are the Real Business Consequences of a Single AI-Generated Flaw?

The allure of AI-driven development is rooted in a promise of frictionless productivity. However, this perception often masks a "productivity paradox," where the initial speed gains are later offset by significant downstream costs and complexities. Research shows that 95% of developers report spending additional time modifying and fixing code generated by AI. For experienced engineers, this can be particularly frustrating; a recent study found that AI tools actually made them 19% slower because they had to spend time untangling subtle architectural and logic flaws that are far more difficult to diagnose than simple syntax errors. As Itay Nussbaum, a researcher at Apiiro, aptly stated, "AI is fixing the typos but creating the timebombs."

These "timebombs" do not remain technical problems for long. When they detonate, they trigger a cascade of severe business consequences that directly impact the bottom line and strategic health of the organization. The journey from a single line of insecure, AI-generated code to a full-blown corporate crisis is alarmingly short.

- Financial Risk: The most immediate impact is financial. This includes the direct costs of remediating a data breach, paying for forensic investigations, offering credit monitoring to affected customers, and covering potential regulatory fines, which can run into the millions under frameworks like GDPR.

- Legal and Compliance Risk: AI introduces novel legal challenges. Models trained on vast, unvetted datasets may reproduce code snippets that violate open-source licenses or infringe on copyrights, exposing the company to intellectual property disputes. Furthermore, insecure code can lead to non-compliance with industry standards like SOC 2 or ISO 27001, jeopardizing key business certifications and contracts.

- Operational Risk: AI-generated code creates a "comprehension gap," where development teams deploy and maintain code they do not fully understand. This turns critical parts of the application into a "black box," making debugging, maintenance, and future development incredibly difficult and risky. A particularly insidious operational risk is the phenomenon of "hallucinated dependencies," where an AI confidently suggests using a software library that does not exist. Attackers monitor public repositories for these unused names, register them, and fill them with malware. A developer who trusts the AI's suggestion can unknowingly introduce a malicious package directly into the company's software supply chain, creating a persistent backdoor.

- Reputational Risk: In the digital economy, trust is a primary asset. A security breach that exposes customer data or disrupts services can cause irreparable damage to a company's brand and customer loyalty.

It is also crucial to understand that AI is a dual-use technology. While organizations are using it to build software, adversaries are using it to break it. AI-powered tools can scan systems for weaknesses at an unprecedented scale, identify vulnerabilities, and even generate exploit code with minimal human input. This dramatically lowers the barrier to entry for less-skilled attackers and increases the speed and sophistication of cyberattacks, creating a dangerous asymmetry where vulnerabilities are being introduced and exploited faster than ever before.

To provide executives with a clear understanding of these connections, the following table maps common AI-generated technical flaws to their direct business consequences.

| Common AI-Generated Flaw | Technical Risk | Direct Business Consequence |
|---|---|---|
| SQL Injection (CWE-89) | Attacker can manipulate the application's database. | Theft of entire customer database; unauthorized access to sensitive financial data; data corruption. |
| Hardcoded Secrets (CWE-798) | API keys, passwords, and other credentials are embedded directly in the code. | Complete system takeover; fraudulent cloud infrastructure spend; unauthorized access to third-party services and partner data. |
| Missing Input Validation (CWE-20) | The application blindly trusts and processes data supplied by a user or another system. | Data corruption; execution of arbitrary commands on the server; denial-of-service attacks; system instability. |
| Outdated Dependencies | AI suggests using old software libraries that contain publicly known vulnerabilities (CVEs). | Rapid exploitation of well-documented security holes; entry point for broader supply chain attacks. |
| Hallucinated Dependencies | AI suggests a software package that does not exist in public repositories. | Attacker registers the package name and fills it with malware, leading to a severe supply chain breach when a developer installs it. |
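One way a team might guard against the hallucinated-dependency row above is to compare every requested package against an internally approved list before anything is installed. The sketch below assumes a pip-style requirements file; the allowlist contents and the package name `fast-json-utilz` are invented for illustration, and the allowlist approach itself is an assumption rather than something prescribed by the Veracode report.

```python
import re
from typing import List, Optional, Set

# Hypothetical allowlist a security team might maintain.
APPROVED_PACKAGES: Set[str] = {"requests", "flask", "sqlalchemy"}


def package_name(requirement_line: str) -> Optional[str]:
    """Extract the bare package name from one requirements-style line."""
    line = requirement_line.split("#", 1)[0].strip()  # drop comments
    if not line:
        return None
    match = re.match(r"[A-Za-z0-9][A-Za-z0-9._-]*", line)
    return match.group(0).lower() if match else None


def unapproved_packages(requirements_text: str,
                        approved: Set[str] = APPROVED_PACKAGES) -> List[str]:
    """Return the sorted package names that are not on the allowlist."""
    names = (package_name(line) for line in requirements_text.splitlines())
    return sorted({name for name in names if name and name not in approved})


requirements = """
requests==2.32.0
flask>=3.0
fast-json-utilz==1.0   # suggested by an AI assistant, never vetted
"""
print(unapproved_packages(requirements))  # ['fast-json-utilz']
```

A check like this does not prove a package is safe; it only forces an explicit human decision before an unfamiliar name enters the supply chain.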

Section 4: A C-Suite Guide to AI Governance: From Risk to Reward

The widespread adoption of AI in software development is no longer a technical choice but a strategic imperative that demands executive-level oversight. Simply allowing these powerful tools to proliferate without a formal governance model is an abdication of risk management. The goal is not to stifle innovation by banning AI but to create a framework that balances its immense benefits with its inherent risks, enabling the organization to move faster, but safely.

This requires a hybrid or "centaur" approach, where human expertise and machine efficiency are combined to achieve what neither could alone. Research shows that AI is highly effective at generating the first 70% of a feature, scaffolding the boilerplate and initial logic with incredible speed. However, the final 30%, which includes architectural refinement, subtle business logic, and critical security hardening, is often where AI fails and human judgment excels. An effective governance strategy focuses on optimizing this collaboration. To this end, Baytech Consulting advocates for a three-pronged Secure AI Adoption Framework designed for executive implementation.

1. GOVERN: Establish the Rules of Engagement

The foundation of safe AI adoption is a clear set of policies that define how these tools are used within the organization.

- Mandate Human Oversight as Non-Negotiable: The most critical control is ensuring that a qualified human developer reviews and approves every line of AI-generated code before it is merged into a production-bound codebase. AI is a powerful assistant, but it lacks the contextual awareness, understanding of business intent, and critical judgment of an experienced engineer. Human oversight is the last, and most important, line of defense.

- Develop Clear Use Policies: Technology leaders must establish and disseminate clear guidelines covering which AI tools are approved for use, how they can be used, and what data can be shared with them. This is crucial for preventing sensitive intellectual property or customer data from being leaked to third-party AI models, as was allegedly the case in a 2023 incident at Samsung involving ChatGPT. Policies should also address the provenance of AI-generated code to mitigate risks of IP and license violations.

- Cultivate a Culture of Critical Thinking: The default posture toward AI-generated code should be one of "healthy skepticism." CTOs must prioritize hiring for and training developers to possess this trait. In interviews, this can be assessed by presenting ambiguous tasks and observing whether a candidate asks clarifying questions and evaluates code in terms of risk, not just functionality. Developers should be trained to validate and test AI assumptions, not blindly accept them.

2. AUTOMATE: Build a Digital Immune System

Human review, while essential, cannot scale to meet the volume of code generated by AI. It must be augmented by a robust, automated security verification layer that acts as a digital immune system for the codebase.

- Integrate Automated Security Scanning: Mandate the integration of security tools directly into the development workflow. Static Application Security Testing (SAST) tools can analyze source code for vulnerabilities before it is compiled, while Software Composition Analysis (SCA) tools scan for known vulnerabilities in the third-party and open-source libraries that AI frequently incorporates. These tools act as an automated, tireless code reviewer that can catch common AI-generated flaws at scale.

- "Shift Security Left": This automation cannot be an afterthought. Security checks must be embedded early in the software development lifecycle (a practice known as "shifting left"). When a developer commits code, automated scans should run immediately, providing feedback within minutes. This prevents insecure code from ever progressing toward production and treats security flaws like any other bug, to be fixed early when the cost and effort are lowest.
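To give a feel for what an automated scan does on every commit, here is a deliberately tiny sketch built on Python's standard `ast` module. It is a toy illustration only, not a substitute for a real SAST or SCA product: it checks just two patterns (string literals assigned to secret-looking names, and calls to `eval` or `exec`), and the rule set and sample code are hypothetical.

```python
import ast
import re
from typing import List

SECRET_NAME = re.compile(r"(password|secret|api_key|token)", re.IGNORECASE)
RISKY_CALLS = {"eval", "exec"}


def scan_source(source: str) -> List[str]:
    """Return human-readable findings for a piece of Python source code."""
    findings = []  # (line number, message) pairs
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Assign):
            value = node.value
            is_string_literal = (
                isinstance(value, ast.Constant)
                and isinstance(value.value, str)
                and value.value != ""
            )
            for target in node.targets:
                if (is_string_literal and isinstance(target, ast.Name)
                        and SECRET_NAME.search(target.id)):
                    findings.append(
                        (node.lineno, f"possible hardcoded secret in '{target.id}'")
                    )
        elif isinstance(node, ast.Call):
            if isinstance(node.func, ast.Name) and node.func.id in RISKY_CALLS:
                findings.append((node.lineno, f"call to {node.func.id}()"))
    return [f"line {lineno}: {message}" for lineno, message in sorted(findings)]


# Hypothetical snippet of the kind an assistant might produce.
SAMPLE = (
    'import os\n'
    'API_KEY = "sk-test-1234"\n'
    'db_password = os.environ["DB_PASSWORD"]\n'
    'result = eval(user_input)\n'
)
for finding in scan_source(SAMPLE):
    print(finding)
```

In a "shift left" setup, a check of this kind would run automatically when code is committed and block the merge on any finding. Note that the secret read from the environment on the third line is correctly left alone.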

3. EDUCATE: Upskill Your Teams for the AI Era

The introduction of AI redefines the skills that are most valuable in an engineering organization. Governance must be supported by a commitment to upskilling teams for this new reality.

- Train for Secure Prompt Engineering: The quality of AI output is directly proportional to the quality of the input. Developers must be trained to write clear, specific, and context-rich prompts that explicitly include security constraints. A vague prompt like "create a file upload function" is likely to yield insecure code. A secure prompt—"create a secure file upload function in Python that prevents path traversal attacks, validates file types to only allow PNG and JPG, and limits file sizes to 5MB"—is far more likely to produce a safe result.

- Develop AI-Specific Code Review Skills: Human reviewers need to be trained to recognize the common anti-patterns of AI-generated code. These include missing authentication and authorization checks, improper error handling that leaks sensitive information, and subtle logic flaws that may not violate syntax but break security invariants.

- Prioritize Systems Thinking and Architecture: As AI automates the generation of boilerplate code, the strategic value of human engineers shifts upward. The most critical skills are no longer about writing individual functions but about designing durable, scalable systems, understanding the broader business context, and making complex architectural trade-offs. This evolution changes talent management; the role of a senior developer transforms from a primary "creator" of code to a "curator and validator" of AI-generated assets. CTOs must redefine career paths to reward these higher-order skills of review, architecture, and critical judgment.
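The secure file-upload prompt quoted above names three constraints: no path traversal, PNG and JPG only, and a 5MB limit. A minimal sketch of code that satisfies them might look like the following. It uses only the standard library; the function name, the error messages, and the header-byte check are illustrative assumptions, and a production system would add further controls such as malware scanning and randomized storage names.

```python
import tempfile
from pathlib import Path

MAX_BYTES = 5 * 1024 * 1024  # 5 MB limit from the prompt
PNG_MAGIC = b"\x89PNG\r\n\x1a\n"
JPG_MAGIC = b"\xff\xd8\xff"
MAGIC_BY_SUFFIX = {".png": PNG_MAGIC, ".jpg": JPG_MAGIC, ".jpeg": JPG_MAGIC}


def save_upload(upload_dir: Path, filename: str, data: bytes) -> Path:
    """Validate an uploaded file and write it inside upload_dir."""
    if len(data) > MAX_BYTES:
        raise ValueError("file exceeds the 5 MB limit")

    # Keep only the final path component, discarding any directory parts.
    safe_name = Path(filename.replace("\\", "/")).name
    suffix = Path(safe_name).suffix.lower()
    if suffix not in MAGIC_BY_SUFFIX:
        raise ValueError("only PNG and JPG files are allowed")

    # Check the leading bytes so a renamed file is not accepted on name alone.
    if not data.startswith(MAGIC_BY_SUFFIX[suffix]):
        raise ValueError("file content does not match its extension")

    # Defense in depth: confirm the resolved target stays inside upload_dir.
    root = upload_dir.resolve()
    target = (root / safe_name).resolve()
    if target.parent != root:
        raise ValueError("path traversal detected")

    target.write_bytes(data)
    return target


with tempfile.TemporaryDirectory() as tmp:
    fake_png = PNG_MAGIC + b"\x00" * 32  # header only, not a real image
    saved = save_upload(Path(tmp), "../../holiday.png", fake_png)
    print(saved.name)  # holiday.png
```

Each constraint in the prompt maps to one explicit check, which also gives a reviewer a simple checklist to verify against whatever the assistant actually generates.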

Section 5: What This Means for Your Role: An Executive Briefing

The strategic implications of AI-assisted development extend across the entire C-suite. Adopting these technologies safely and effectively requires a coordinated, cross-functional understanding of both the opportunities and the risks. Each executive has a critical role to play in navigating this new landscape.

For the Chief Technology Officer (CTO)

Your role is fundamentally evolving from managing technology to orchestrating a complex human-machine collaboration. The primary challenge is no longer just shipping features on time, but doing so without accumulating a mountain of AI-generated security debt. Your mandate is to establish the robust governance framework detailed in the previous section, championing a culture of "trust but verify" where AI is a powerful tool, not an infallible oracle. You must lead the charge in re-skilling your organization, shifting the focus from raw coding speed to higher-value skills like systems architecture, critical review, and deep business context understanding. In fact, modern scalability strategies now require leaders to build not just faster, but stronger and safer. Success will be measured not just by the velocity of development, but by the resilience and security of the systems you build.

For the Chief Financial Officer (CFO)

AI in software development presents a stark, dual-sided financial ledger. On one side, it offers a compelling return on investment (ROI) through dramatic productivity increases, accelerated time-to-market, and reduced development costs. On the other, a single security breach stemming from an AI-generated flaw can trigger catastrophic financial consequences, including regulatory fines, remediation costs, legal fees, and lost revenue, potentially wiping out any productivity gains. Your role is to scrutinize the business case for AI tool adoption through a lens of risk-adjusted ROI. You must challenge the organization to quantify and mitigate the security risks, ensuring that the pursuit of efficiency does not come at the cost of financial stability and security.

For the Head of Sales

Your team is a primary beneficiary of the speed enabled by AI-driven development. Faster prototyping and development cycles mean new products and features get to market quicker, providing your team with a competitive edge, fresh value propositions for customers, and the ability to respond rapidly to market demands. This can directly translate to increased leads, higher conversion rates, and revenue growth. However, the platform's stability and security are foundational to customer trust. A significant security incident or data breach originating from hastily developed code can destroy that trust overnight, crippling sales efforts and making new customer acquisition exponentially more difficult. Your stake is in advocating for a development process where innovation speed never compromises the security and reliability that your customers depend on. Often, teams solve this balance by prioritizing strong scope management and clear, secure development roadmaps.

For the Marketing Director

AI-powered development enables the rapid iteration of customer-facing applications and digital experiences. This agility allows your team to launch more personalized campaigns, test user interfaces, and deploy new features that enhance customer engagement. The risk you must manage is reputational. Your team spends years and significant resources building a brand promise of trust, reliability, and customer protection. A data breach or service outage caused by insecure, AI-generated code can shatter that brand image in an instant, leading to customer churn and a public relations crisis that can take years to recover from. You must be a vocal advocate within the leadership team for a development culture that places the security of customer data and the safety of the brand at the forefront of all innovation efforts. Guiding the brand safely requires not only speed, but also a rigorous commitment to thorough discovery and risk assessment before major initiatives launch.

Conclusion: Moving from 'Vibe' to Viable—Your Next Steps

AI-assisted software development is not a fleeting trend; it is a fundamental paradigm shift that offers profound competitive advantages to those who master it. The ability to move from idea to prototype in minutes is a force multiplier for innovation. However, the "vibe coding" approach, when pursued without rigorous governance, is a ticking time bomb. The finding that 45% of AI-generated code is insecure is not a hypothetical warning but a statistical reality that demands an immediate and strategic response from leadership.

The path forward is not to reject these powerful tools but to embrace them with intelligence, discipline, and a security-first mindset. This requires a deliberate organizational shift from a purely experimental "vibe" to a structured, secure, and commercially viable implementation. For executives responsible for both growth and governance, the time to act is now.

Here are three immediate next steps to begin this transition:

- Initiate a Risk Assessment: Convene your technology, security, and legal leaders to conduct a formal audit of your organization's current use of AI coding assistants. It is critical to understand what tools are being used (officially and unofficially), what policies are currently in place, and what your potential exposure is based on the data presented in this report.

- Mandate a DevSecOps Review: Task your CTO with a comprehensive review of your software development lifecycle. The explicit goal is to ensure that robust human code review processes and automated security scanning (SAST and SCA) are mandatory for all code being committed to production, regardless of whether it was written by a human or generated by an AI. This is a non-negotiable control.

- Engage an Expert Partner: This is a new and complex challenge that requires specialized expertise. The most effective way to ensure a successful transition is to partner with a specialist firm like Baytech Consulting. An external partner can provide an objective, third-party audit of your AI development practices, help you design and implement a robust governance framework that aligns with your specific business objectives, and ensure your organization can innovate both safely and sustainably.

Supporting Article Links

- Veracode 2025 GenAI Code Security Report: https://futurecio.tech/study-reveals-flaws-and-risks-of-ai-generated-code/

- The Business Case for DevSecOps: https://www.ibm.com/think/topics/ai-in-software-development

- Harvard Business Review: Leading in the Age of AI: https://www.weforum.org/stories/2025/01/elevating-uniquely-human-skills-in-the-age-of-ai/

About Baytech

At Baytech Consulting, we specialize in guiding businesses through this process, helping you build scalable, efficient, and high-performing software that evolves with your needs. Our MVP-first approach helps our clients minimize upfront costs and maximize ROI. Ready to take the next step in your software development journey? Contact us today to learn how we can help you achieve your goals with a phased development approach.

About the Author

Bryan Reynolds is an accomplished technology executive with more than 25 years of experience leading innovation in the software industry. As the CEO and founder of Baytech Consulting, he has built a reputation for delivering custom software solutions that help businesses streamline operations, enhance customer experiences, and drive growth.

Bryan’s expertise spans custom software development, cloud infrastructure, artificial intelligence, and strategic business consulting, making him a trusted advisor and thought leader across a wide range of industries.