Why Quantum Computing Demands a 10-Year Encryption Strategy

October 16, 2025 / Bryan Reynolds

The Ticking Clock on Digital Trust

The entire modern digital economy—from financial transactions and secure communications to intellectual property and national security—is built upon a single, foundational premise: cryptographic trust. This trust is guaranteed by the assumption that certain mathematical problems are prohibitively difficult for even the most powerful classical supercomputers to solve. For decades, this assumption has been the bedrock of our digital world, the invisible force securing data in transit and at rest.

However, a new paradigm of computation is rapidly moving from theoretical physics into reality, and it is poised to shatter this fundamental assumption. Quantum computing does not represent an incremental improvement over classical machines; it is a revolutionary shift that operates on entirely different principles. A sufficiently powerful quantum computer will not just be faster; it will be capable of solving the very mathematical problems that underpin our current public-key encryption, effectively rendering the locks on our digital world obsolete.

The timeline for the arrival of a cryptographically relevant quantum computer (CRQC)—a machine capable of breaking today's standards—is no longer a distant, academic concern. A convergence of expert analysis, hardware development roadmaps, and algorithmic breakthroughs now places this event squarely within a 10-year strategic planning horizon. This is not a future problem. Because of a silent, ongoing threat known as "Harvest Now, Decrypt Later," data stolen today is at risk of future exposure. Consequently, the time for technology leaders to begin planning for a post-quantum cryptographic transition is not in a decade, but now.

This shift represents more than just another vulnerability to be managed. The quantum threat is a foundational risk to the very concept of digital trust. It does not exploit a flaw in a specific software implementation that can be patched; it breaks the underlying mathematical principles upon which our entire security architecture is built. For Chief Technology Officers, Chief Information Officers, and Chief Security Officers, this is not a tactical issue of adding another layer of defense. It is a strategic imperative to plan for the controlled replacement and modernization of a fundamental pillar of the enterprise's security infrastructure. This briefing serves as a strategic guide to the nature of the threat, the emerging solutions, and the immediate, actionable steps required to ensure long-term organizational resilience.

When a New Machine Gets a Master Key: How Quantum Will Break Today's Encryption

The security of our most critical digital infrastructure relies on a clever mathematical concept known as a "trapdoor function," which is the engine behind asymmetric, or public-key, cryptography. Systems like RSA and Elliptic Curve Cryptography (ECC) are designed to be easy to compute in one direction but practically impossible to reverse without a secret key. For RSA, this means it is simple to multiply two extremely large prime numbers to produce the modulus of a public key, but infeasible for any classical computer to factor that modulus back into the original primes and so derive the private key. This asymmetry is the foundation for the security of everything from HTTPS web traffic and VPNs to digital signatures and blockchain transactions. It is also the Achilles' heel that quantum computers are uniquely poised to exploit.
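The trapdoor can be made concrete with a toy example. The sketch below uses deliberately tiny primes (61 and 53) so that the "infeasible" factoring step finishes instantly; real RSA keys use primes hundreds of digits long. The function names are illustrative and not taken from any library.

```python
from math import gcd

def make_keypair(p: int, q: int, e: int = 17):
    """Build a toy RSA key pair from two primes (no padding, not for real use)."""
    n = p * q                      # easy direction: multiply the primes
    phi = (p - 1) * (q - 1)
    assert gcd(e, phi) == 1, "e must be coprime to phi"
    d = pow(e, -1, phi)            # private exponent (Python 3.8+)
    return (n, e), (n, d)

def factor_by_trial_division(n: int):
    """Hard direction: recover the primes. Only feasible here because n is tiny."""
    f = 2
    while f * f <= n:
        if n % f == 0:
            return f, n // f
        f += 1
    raise ValueError("n has no nontrivial factors")

public, private = make_keypair(61, 53)
n, e = public
ciphertext = pow(42, e, n)         # anyone can encrypt with the public key

# An attacker who can factor n rebuilds the private exponent from public data.
p, q = factor_by_trial_division(n)
d_recovered = pow(e, -1, (p - 1) * (q - 1))
recovered = pow(ciphertext, d_recovered, n)
print(recovered)
```

Once the modulus is factored, nothing else stands between the attacker and the private key, which is why an efficient factoring method is fatal to RSA.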

Shor's Algorithm: The Master Key for Public-Key Cryptography

In 1994, mathematician Peter Shor developed a quantum algorithm that can solve the "hard" problems of integer factorization and discrete logarithms—the foundations of RSA and ECC, respectively—at a speed exponentially faster than any known classical algorithm. Shor's Algorithm is, in effect, the master key that unlocks the mathematical trapdoors of modern public-key cryptography.

To understand its power without delving into the complexities of quantum mechanics, consider an analogy. Imagine a classical computer trying to break an RSA key is like searching for two specific, unique grains of sand on a vast beach by examining each grain one by one—a task that would take longer than the age of the universe. A quantum computer running Shor's Algorithm, by contrast, can leverage the principles of superposition and interference to behave more like an instrument that analyzes the patterns of all the waves on the beach simultaneously. By observing how these waves interact and cancel each other out, it can efficiently pinpoint the exact location of the two grains of sand it is looking for. This ability to process immense possibility spaces in parallel is what gives quantum computers their cryptographic-breaking power.
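For readers who want one level more detail than the analogy, the following sketch shows the classical "wrapper" around Shor's Algorithm: factoring reduces to finding the period of a modular exponentiation. Here the period is found by brute force, which is exactly the step a quantum computer would accelerate; the function names and the example values (N = 15, a = 7) are chosen for illustration.

```python
from math import gcd

def find_period(a: int, n: int) -> int:
    """Smallest r > 0 with a**r % n == 1. Brute force stands in for the quantum step."""
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r

def factor_from_period(n: int, a: int):
    """Classical post-processing: turn a period into two factors of n, if possible."""
    g = gcd(a, n)
    if g != 1:
        return g, n // g           # lucky guess: a already shares a factor with n
    r = find_period(a, n)
    if r % 2 == 1:
        return None                # odd period: retry with a different a
    x = pow(a, r // 2, n)
    if x == n - 1:
        return None                # trivial square root: retry with a different a
    return gcd(x - 1, n), gcd(x + 1, n)

print(factor_from_period(15, 7))
```

The brute-force loop in `find_period` takes time that grows with the size of the period, which is astronomically large for real keys. Shor's contribution was a quantum procedure that finds that period efficiently.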

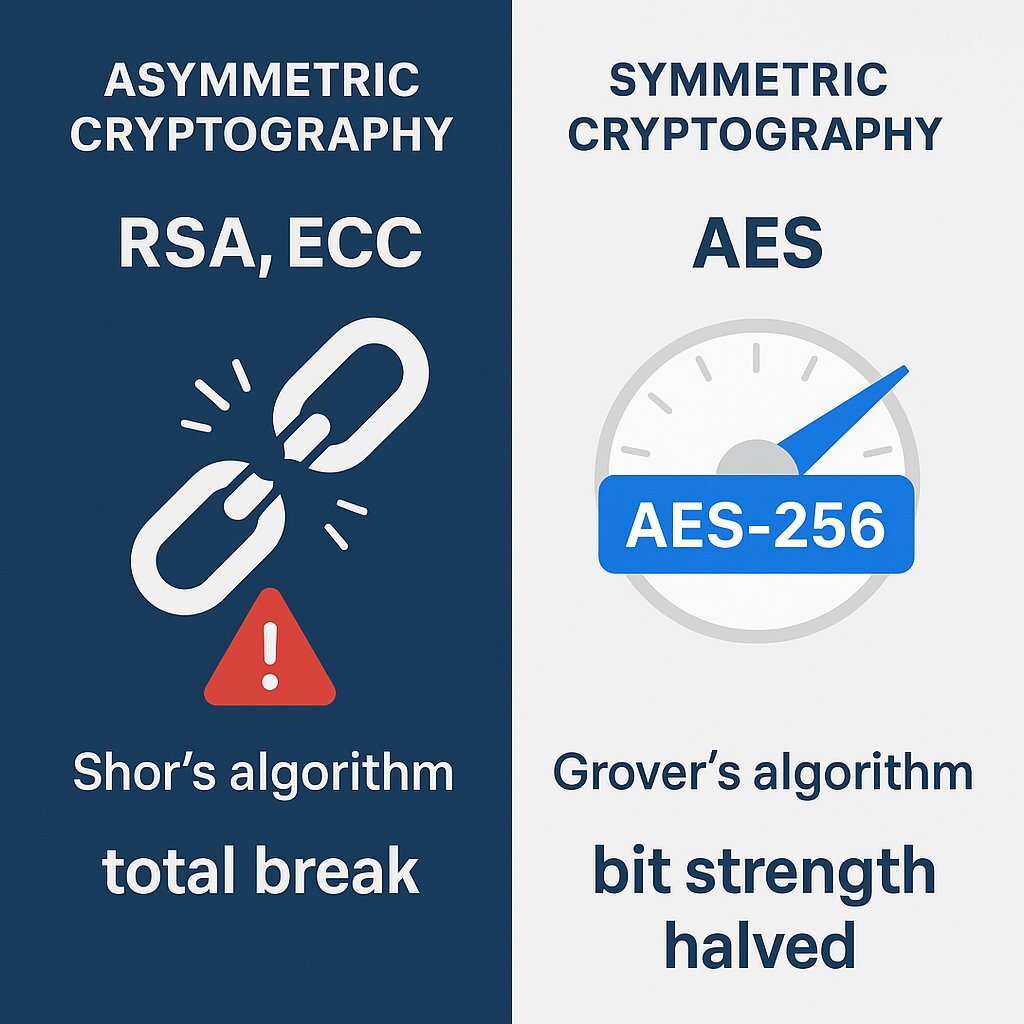

A Bifurcated Risk: Asymmetric vs. Symmetric Encryption

It is critical for technology leaders to understand that the quantum threat is not uniform across all types of encryption. This creates a strategic bifurcation in cryptographic risk that has profound implications for planning and resource allocation.

- Asymmetric Cryptography (RSA, ECC): The threat here is existential. Shor's Algorithm doesn't just weaken these systems; it breaks them completely, allowing an attacker to derive a private key from a public key. The only defense is to migrate to an entirely new family of algorithms. This is an architectural challenge.

- Symmetric Cryptography (AES): The threat is less severe. Grover's Algorithm, another quantum algorithm, provides a quadratic speed-up for brute-force searches against symmetric keys. In the idealized case this halves the effective bit-strength of the encryption; for example, AES-128 would offer roughly 64 bits of security, a margin generally considered too thin for long-lived data. However, this threat is tactical and can be easily mitigated by adopting stronger key lengths. AES-256, which would be reduced to 128-bit security, is widely considered resistant to quantum attacks.

This distinction is crucial. While a policy change to mandate AES-256 is a relatively straightforward task, the migration away from RSA and ECC is a multi-year, enterprise-wide undertaking. Conflating these two distinct problems is a strategic error. Leadership focus and long-term planning must be centered on the far more complex and dangerous threat to public-key infrastructure.
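The key-length arithmetic for the symmetric case is simple enough to sketch. The helper below is a hypothetical illustration of the idealized "halving" rule described above; it ignores the practical overheads of running Grover's Algorithm on real hardware.

```python
def grover_effective_bits(key_bits: int) -> int:
    """Idealized security level after Grover's quadratic speed-up."""
    return key_bits // 2

for key_bits in (128, 256):
    print(
        f"AES-{key_bits}: ~2^{key_bits} classical guesses, "
        f"~2^{grover_effective_bits(key_bits)} Grover iterations"
    )
```

Doubling the key length restores the original margin, which is why the symmetric problem is a policy change rather than an architectural one.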

The Silent Heist: Why "Harvest Now, Decrypt Later" Is Today's Quantum Problem

The urgency to address the quantum threat does not begin when a CRQC is publicly announced. It begins now, due to a silent and invisible attack strategy known as "Harvest Now, Decrypt Later" (HNDL). This strategy involves adversaries—particularly well-resourced nation-states—intercepting and storing vast quantities of encrypted data today. They cannot break the encryption with current technology, so they simply archive it, waiting patiently for the day when a capable quantum computer becomes available to decrypt it at will.

The HNDL attack is uniquely dangerous because it is passive and leaves no immediate trace. An organization may have no indication that its most sensitive data has been exfiltrated and is sitting in an adversary's data center, a ticking time bomb waiting for "Q-Day"—the day quantum computers can break today's encryption.

The Data Longevity Risk Equation

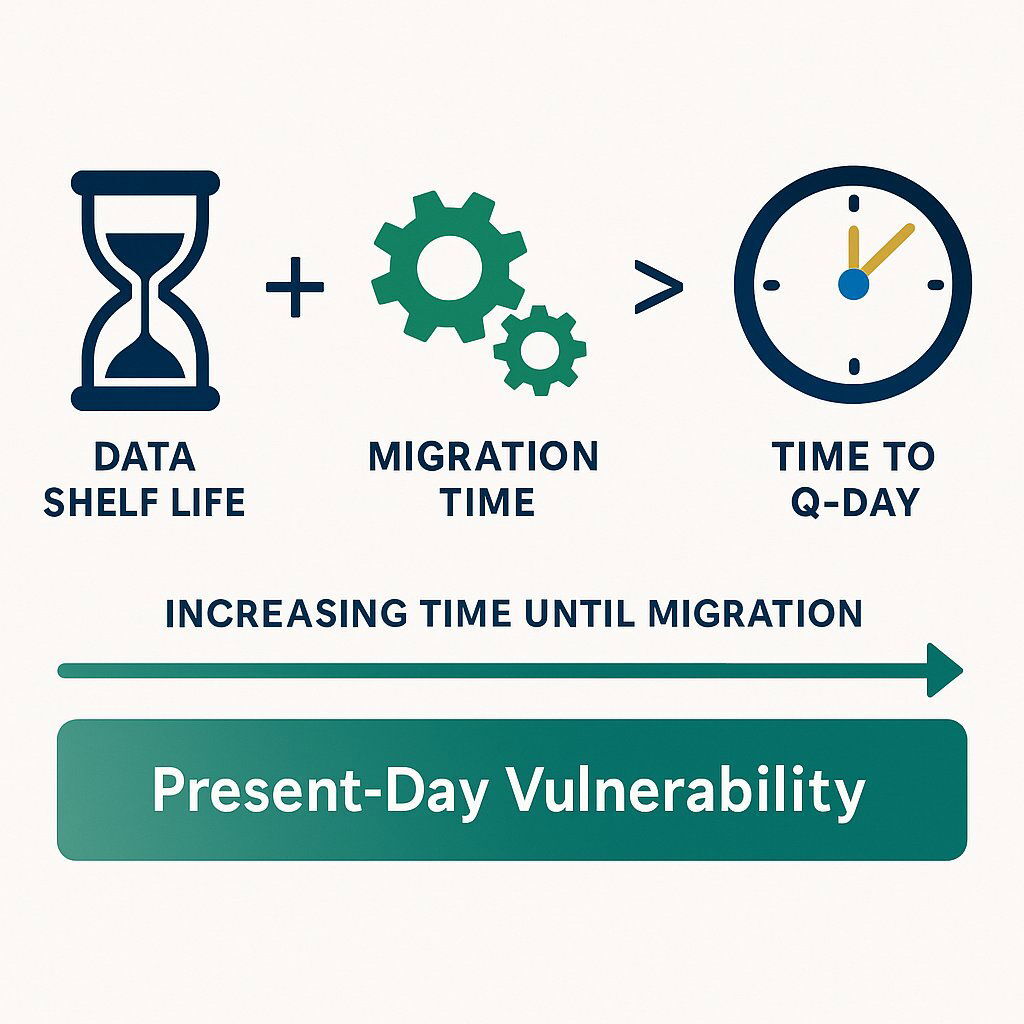

To quantify this immediate risk, executives can use a simple but powerful formula:

$$\text{Data Shelf Life} + \text{Migration Time} > \text{Time to Q-Day} \;\Longrightarrow\; \text{Present-Day Vulnerability}$$

Let's break down the components:

- Data Shelf Life: This is the period during which data must remain confidential. For many organizations, this extends for decades. Examples include intellectual property like pharmaceutical formulas or proprietary source code, classified government intelligence, corporate M&A strategies, and personal health records protected under regulations like HIPAA.

- Migration Time: This is the time required for an organization to inventory, plan, and execute a full transition of its critical systems to post-quantum cryptography. Given the complexity of embedded systems, legacy infrastructure, and supply chain dependencies, analysts and government agencies estimate this process will take a minimum of five to ten years, and likely longer for large, complex enterprises.

- Time to Q-Day: This is the time remaining until a CRQC arrives. As detailed later in this briefing, a strong consensus is forming that this will occur in the early 2030s, placing it firmly within the next decade.

If the sum of your data's required confidentiality period and your migration timeline exceeds the time until a CRQC arrives, that data is already vulnerable if it is exfiltrated today.
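The risk equation translates directly into a few lines of code. This is a minimal sketch; the function names and the sample figures (25-year shelf life, 7-year migration, 8 years to Q-Day) are assumptions chosen for illustration, not estimates from this briefing.

```python
def is_exposed_to_hndl(shelf_life_years: float,
                       migration_years: float,
                       years_to_q_day: float) -> bool:
    """True when shelf life plus migration time exceeds the time left until Q-Day."""
    return shelf_life_years + migration_years > years_to_q_day

def exposure_window_years(shelf_life_years: float,
                          migration_years: float,
                          years_to_q_day: float) -> float:
    """Years during which harvested data would still need to be confidential."""
    return max(0.0, shelf_life_years + migration_years - years_to_q_day)

# Hypothetical inputs: long-lived IP versus short-lived operational data.
print(is_exposed_to_hndl(25, 7, 8))       # long-lived intellectual property
print(is_exposed_to_hndl(1, 3, 8))        # data that expires within a year
print(exposure_window_years(25, 7, 8))
```

Running the check per data class, rather than once for the whole organization, shows which repositories are already inside the exposure window.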

The Compliance Paradox

Critically, regulatory compliance can inadvertently amplify an organization's exposure to HNDL attacks. Mandates such as the GDPR, HIPAA, and the Sarbanes-Oxley Act (SOX) often require the long-term retention of sensitive, encrypted data. While intended to protect consumers and ensure accountability, these regulations create legally mandated, centralized repositories of high-value information—perfect targets for adversaries to harvest and store for future decryption.

This reality fundamentally alters the calculus of data breach risk. The value of stolen encrypted data is no longer negligible; it is a speculative asset whose value is expected to appreciate dramatically upon the arrival of a CRQC. This forces a paradigm shift in security strategy: protecting data is no longer just about securing it against today's threats, but about ensuring its encryption is durable enough to last for its entire required lifetime.

The Quantum Countdown: Sizing Up the 10-Year Horizon

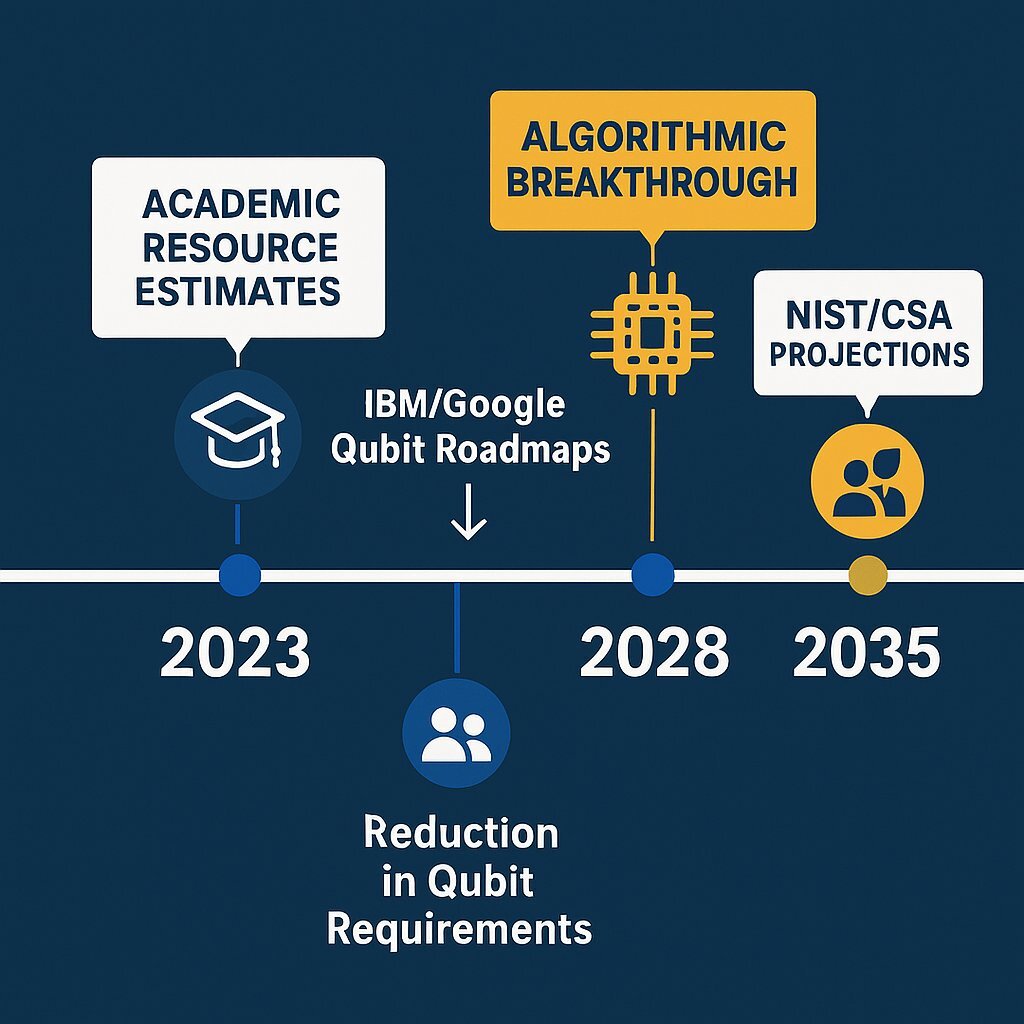

Predicting the exact arrival of a CRQC is challenging, but the once-hazy timelines are rapidly coming into focus. A convergence of expert projections, corporate hardware roadmaps, and significant algorithmic breakthroughs provides a data-driven case for a 10-year planning horizon.

Various authorities now place Q-Day in the early 2030s. Gartner predicts that current public-key cryptography will become unsafe by 2029 and could be broken by 2034. The Cloud Security Alliance has established a symbolic "countdown clock" targeting April 14, 2030, as a focal point to galvanize industry action.

The most significant development accelerating this timeline is not just the steady increase in the number of quantum bits (qubits) in experimental processors, but recent algorithmic breakthroughs that dramatically reduce the number of qubits required for an attack. In 2019, academic estimates suggested that breaking a standard RSA-2048 key would require a machine with approximately 20 million physical qubits, a monumental engineering challenge. In 2025, however, a Google researcher published work estimating that, thanks to more efficient methods, the same feat could be accomplished with fewer than 1 million noisy qubits in under a week. This reduction of more than 95% in required resources makes the prospect of building a CRQC far more feasible.

This new, lower resource requirement aligns alarmingly well with the public hardware roadmaps of the industry's leading players. Both IBM and Google are on record with plans to build million-qubit quantum computers by or around 2030. This convergence—where the hardware capabilities being built meet the newly understood requirements for an attack—is a powerful signal that the threat is maturing on a predictable schedule. This momentum is further evidenced by the massive capital flowing into the sector; the quantum computing market is projected to grow from around $1.2 billion in 2024 to over $20 billion by 2032, with some estimates reaching as high as $72 billion by 2035. This is no longer a niche academic pursuit; it is a global industrial race.

| Source / Expert Group | Projected Timeline for CRQC | Resource Estimate to Break RSA-2048 | Key Context |
|---|---|---|---|
| Gartner | Unsafe by 2029, potentially broken by 2034 | N/A | Analyst consensus points to the early 2030s as the critical window. |
| Cloud Security Alliance | Estimates Q-Day as April 14, 2030 | N/A | A symbolic date set by an industry body to create urgency and a focal point for migration. |
| Gidney & Ekerå (2019) | N/A | ~20 million physical qubits | The prior academic benchmark, considered a massive engineering challenge. |
| Gidney (Google, 2025) | Aligns with NIST's 2030-2035 guidance | < 1 million physical qubits | A >95% reduction in required resources due to algorithmic breakthroughs, dramatically increasing feasibility. |
| IBM / Google Roadmaps | Targeting ~1 million qubit systems by 2030 | N/A | Corporate hardware development timelines are now converging with updated resource requirements for an attack. |

Building a Quantum-Resistant Future: The NIST Standardization Process

In response to the looming quantum threat, the global cryptographic community, led by the U.S. National Institute of Standards and Technology (NIST), has been working to develop a new generation of public-key algorithms. This initiative, known as Post-Quantum Cryptography (PQC), aims to create new cryptographic standards that are secure against attacks from both classical and future quantum computers.

PQC algorithms are not designed to run on quantum computers. Instead, they are classical algorithms that can be implemented on the hardware and infrastructure in use today. Their security is derived from different families of mathematical problems—such as those found in lattices, error-correcting codes, and hash functions—that are believed to be difficult for both classical and quantum computers to solve.

To ensure a stable, trusted, and orderly global transition, NIST initiated an open, transparent standardization process in 2016. This multi-year effort invited cryptographers from around the world to submit and rigorously analyze candidate algorithms, building broad consensus on the most secure and efficient designs. This process reached a pivotal milestone in August 2024, when NIST published the first three finalized Federal Information Processing Standards (FIPS) for PQC. This publication effectively fired the starting gun for enterprise migration, moving PQC from a theoretical solution to a standardized, actionable reality.

| FIPS Standard | Algorithm Name | Purpose | Mathematical Basis | Key Characteristics |
|---|---|---|---|---|
| FIPS 203 | ML-KEM (CRYSTALS-Kyber) | Key Encapsulation (General Encryption) | Module-Lattice-based | Primary standard for establishing shared keys. Noted for efficiency and relatively small key sizes. |
| FIPS 204 | ML-DSA (CRYSTALS-Dilithium) | Digital Signatures | Module-Lattice-based | Primary standard for authentication and verifying data integrity. Excellent all-around performance. |
| FIPS 205 | SLH-DSA (SPHINCS+) | Digital Signatures | Stateless Hash-based | Backup signature standard. Slower and produces larger signatures, but is based on different mathematics for security diversity. |

Your Quantum Readiness Plan: Four Steps to Take in the Next 12 Months

The transition to PQC is a marathon, not a sprint. It will be one of the most significant and complex cryptographic migrations ever undertaken. However, waiting for Q-Day to arrive will be too late. The journey to quantum readiness must begin now with deliberate, foundational steps. The following four actions provide a pragmatic roadmap for technology leaders to initiate within the next 12 months.

Step 1: Initiate a Comprehensive Crypto-Inventory

An organization cannot protect what it cannot see. The foundational first step in any PQC migration is to conduct a comprehensive discovery and inventory of all public-key cryptography in use across the enterprise. This is a monumental task that cannot be accomplished manually and requires the use of automated scanning and discovery tools. A thorough inventory must be exhaustive, covering what experts call the "Five Pillars" of the cryptographic footprint:

- External Networks: Public-facing TLS/SSL certificates on websites, APIs, and servers.

- Internal Networks: Encryption securing service-to-service communication, internal APIs, and cloud services.

- IT Assets: Encryption on endpoints, servers, mobile devices, and IoT hardware.

- Databases: Encryption protecting data at rest in relational and non-relational databases.

- Code: Cryptographic libraries and functions embedded in source code, applications, and firmware.

The ultimate goal is to create a dynamic, continuously updated Cryptographic Bill of Materials (CBOM) that serves as the single source of truth for all cryptographic assets, their locations, owners, and configurations.
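As a rough illustration of what a CBOM entry might capture, the sketch below models an inventory record and flags the algorithms that Shor's Algorithm threatens. The field names, the sample assets, and the `QUANTUM_VULNERABLE` set are all assumptions for this example; they do not follow any particular CBOM schema or scanning tool.

```python
from dataclasses import dataclass

# Public-key algorithm families broken by Shor's Algorithm (illustrative list).
QUANTUM_VULNERABLE = {"RSA", "ECDSA", "ECDH", "DH", "DSA"}

@dataclass
class CryptoAsset:
    location: str     # where the cryptography lives (host, service, repository)
    pillar: str       # which of the five inventory pillars it belongs to
    algorithm: str    # algorithm family in use
    key_bits: int     # key or curve size
    owner: str        # team accountable for the asset

def needs_pqc_migration(asset: CryptoAsset) -> bool:
    """True when the asset depends on a quantum-vulnerable public-key algorithm."""
    return asset.algorithm.upper() in QUANTUM_VULNERABLE

# Hypothetical inventory entries.
inventory = [
    CryptoAsset("api.example.com:443", "External Networks", "RSA", 2048, "platform-team"),
    CryptoAsset("orders-db", "Databases", "AES", 256, "data-team"),
    CryptoAsset("firmware-signer", "Code", "ECDSA", 256, "device-team"),
]

flagged = [asset.location for asset in inventory if needs_pqc_migration(asset)]
print(flagged)
```

In practice the records would be populated by automated discovery tools rather than by hand, but even this minimal structure makes ownership and exposure queryable.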

Step 2: Prioritize Assets Based on Risk and Data Longevity

With a complete inventory in hand, the next step is to prioritize systems for migration. Not all assets carry the same level of risk. The prioritization process should be guided by a risk-based assessment that considers both business impact and data longevity. The first systems to target for migration should be those that protect the most sensitive data with the longest required shelf life—the "crown jewels" most at risk from HNDL attacks. These often include Public Key Infrastructure (PKI) root Certificate Authorities, code signing systems, and databases containing long-term intellectual property.

This assessment must also extend beyond the organization's own walls to its supply chain. It is critical to begin engaging with all key technology vendors, cloud providers, and SaaS partners to understand their PQC roadmaps and timelines, as their readiness will directly impact your own.
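One way to make the prioritization step repeatable is a simple scoring function. The sketch below is hypothetical: the 1-to-5 sensitivity scale, the 8-year Q-Day horizon, and the multiplication of the two factors are assumptions that each organization would replace with its own risk model.

```python
from dataclasses import dataclass

@dataclass
class System:
    name: str
    sensitivity: int          # 1 (low) to 5 (crown jewels); hypothetical scale
    shelf_life_years: float   # how long the protected data must stay confidential

def migration_priority(system: System, years_to_q_day: float = 8.0) -> float:
    """Higher scores migrate first: sensitivity weighted by data longevity."""
    longevity = system.shelf_life_years / years_to_q_day
    return system.sensitivity * longevity

# Hypothetical systems for illustration.
systems = [
    System("root CA", 5, 20),
    System("marketing site TLS", 1, 0.1),
    System("R&D IP database", 5, 30),
    System("session tokens", 3, 0.01),
]

ranked = sorted(systems, key=migration_priority, reverse=True)
for system in ranked:
    print(f"{system.name}: {migration_priority(system):.3f}")
```

The exact weights matter less than applying the same rule to every asset in the inventory, so that the migration order can be explained and defended.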

Step 3: Architect for Crypto-Agility

The PQC landscape will continue to evolve, and new vulnerabilities—both classical and quantum—may be discovered in the future. Therefore, the strategic goal is not a one-time "rip and replace" of old algorithms with new ones. The goal is to build crypto-agility: the architectural capability to rapidly adapt and switch cryptographic algorithms with minimal disruption to business operations.

Achieving crypto-agility is an architectural discipline that must be embedded into development, procurement, and IT governance policies now. Key principles include standardizing on a small set of vetted cryptographic libraries, using abstraction layers that separate applications from underlying cryptographic functions, and eliminating hard-coded dependencies on specific algorithms or protocols. This approach ensures that the next cryptographic migration—whenever and for whatever reason it occurs—will be far less painful and costly than the one currently facing every organization.
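The abstraction-layer principle can be sketched with the Python standard library alone. In the example below, application code asks an `Authenticator` for a tag and never names an algorithm directly; the algorithm comes from configuration and is recorded alongside the output. HMAC variants are used purely as stand-ins so the example is runnable. The class, the registry, and the algorithm labels are assumptions for illustration, and a real deployment would register vetted PQC implementations behind the same kind of interface.

```python
import hashlib
import hmac
from typing import Callable, Dict, Tuple

MacFn = Callable[[bytes, bytes], bytes]

# Central registry: the only place where concrete algorithms are named.
REGISTRY: Dict[str, MacFn] = {
    "hmac-sha256": lambda key, msg: hmac.new(key, msg, hashlib.sha256).digest(),
    "hmac-sha512": lambda key, msg: hmac.new(key, msg, hashlib.sha512).digest(),
}

class Authenticator:
    """Application-facing wrapper; the algorithm is chosen by configuration."""

    def __init__(self, algorithm: str):
        if algorithm not in REGISTRY:
            raise ValueError(f"unknown algorithm: {algorithm}")
        self.algorithm = algorithm

    def tag(self, key: bytes, msg: bytes) -> Tuple[str, bytes]:
        # Store the algorithm label with the tag so old data stays verifiable.
        return self.algorithm, REGISTRY[self.algorithm](key, msg)

    @staticmethod
    def verify(key: bytes, msg: bytes, algorithm: str, tag: bytes) -> bool:
        expected = REGISTRY[algorithm](key, msg)
        return hmac.compare_digest(expected, tag)

key, msg = b"demo-key", b"invoice #1001"
old_label, old_tag = Authenticator("hmac-sha256").tag(key, msg)

# "Migration" is a one-line configuration change; callers are untouched.
new_label, new_tag = Authenticator("hmac-sha512").tag(key, msg)

print(Authenticator.verify(key, msg, old_label, old_tag))
print(Authenticator.verify(key, msg, new_label, new_tag))
```

Because every output carries its algorithm label, data protected before a switch remains verifiable afterward, which is what allows a phased rather than big-bang migration.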

Step 4: Educate and Engage Your Ecosystem

The migration to PQC is not solely a task for the cybersecurity team; it is a cross-functional business transformation that will touch nearly every part of the organization. Success requires buy-in and collaboration across the enterprise. The process must begin now with a concerted effort to educate stakeholders at all levels.

This includes briefing the board of directors and executive leadership on the quantum threat as a material business risk. It involves training development teams on secure coding practices and the principles of crypto-agility. It also means actively participating in the broader ecosystem by joining industry working groups, such as those run by the Cloud Security Alliance, to share best practices and stay informed of emerging standards.

Importantly, the PQC migration project offers an opportunity to address long-standing issues. The comprehensive crypto-inventory required for quantum readiness will inevitably uncover existing cryptographic weaknesses that pose a risk today—such as the use of deprecated algorithms like SHA-1, expired certificates, hard-coded keys, or inconsistent key management practices. In this way, the PQC migration initiative can be framed as a catalyst for paying down decades of "cryptographic technical debt." The immediate return on investment is a stronger, more resilient classical security posture, providing tangible benefits long before the first PQC algorithm is deployed in production.

Conclusion: Partnering for a Quantum-Safe Future

The evidence is clear and compelling: the quantum threat to cryptography is no longer a distant theoretical problem. The convergence of algorithmic breakthroughs, accelerating hardware development, and the immediate risk of "Harvest Now, Decrypt Later" attacks places this challenge squarely within the strategic planning horizon of every modern enterprise. With NIST having now published the first official post-quantum standards, the era of passive observation is over, and the time for active preparation has begun.

The migration to post-quantum cryptography will be a complex, multi-year journey. It will demand deep technical expertise, meticulous planning, and flawless execution to navigate legacy systems, complex supply chains, and evolving standards without disrupting business operations. This is more than a simple technology upgrade; it is a fundamental transformation of an organization's security foundation.

Successfully navigating this transition requires a partner with proven expertise not only in modern cryptography but also in secure software architecture, legacy system modernization, and strategic IT consulting. At Baytech Consulting, we combine deep expertise in building resilient, secure software solutions with a track record of guiding enterprises through their most complex technological transformations. The journey to a quantum-safe future is one of the most significant security challenges of the next decade.

Further Reading & Supporting Articles

- NIST Post-Quantum Cryptography Project: The official source for standards, timelines, and technical documentation from the National Institute of Standards and Technology. https://csrc.nist.gov/projects/post-quantum-cryptography

- CISA Quantum Readiness: Actionable guidance, fact sheets, and roadmaps from the U.S. Cybersecurity and Infrastructure Security Agency for critical infrastructure and enterprise organizations. https://www.cisa.gov/resources-tools/resources/quantum-readiness-migration-post-quantum-cryptography

- Cloud Security Alliance (CSA) Quantum-Safe Security Working Group: A leading industry forum for developing best practices, research, and practical guidance on navigating the transition to a quantum-safe world. https://cloudsecurityalliance.org/research/topics/quantum-safe-security


About the Author

Bryan Reynolds is an accomplished technology executive with more than 25 years of experience leading innovation in the software industry. As the CEO and founder of Baytech Consulting, he has built a reputation for delivering custom software solutions that help businesses streamline operations, enhance customer experiences, and drive growth.

Bryan’s expertise spans custom software development, cloud infrastructure, artificial intelligence, and strategic business consulting, making him a trusted advisor and thought leader across a wide range of industries.