AI Bias in Healthcare: What Every C-Suite Needs to Know for 2025

September 21, 2025 / Bryan Reynolds

The Hidden Risk in Your HealthTech Stack: Is AI Bias a Threat to Your Patients and Your Bottom Line?

Artificial intelligence is no longer a futuristic concept in healthcare; it is a present-day reality and a cornerstone of strategic investment. The global AI in healthcare market is projected to surpass $187 billion by 2030, a testament to its perceived power to drive efficiency, improve diagnostics, and personalize patient care. For C-suite executives, AI represents a significant capital expenditure and a critical tool for competitive differentiation. Yet, as with any high-stakes technological deployment, the most pressing question for any prudent leader is: "What's the catch?"

The catch is algorithmic bias: a critical, systemic vulnerability that extends far beyond the IT department, carrying profound implications for risk management, legal liability, and financial performance. This is not a fringe ethical concern but a fundamental product flaw that can have devastating consequences for both patients and the organizations that serve them. This report moves beyond the headlines to provide a strategic, evidence-based analysis for business leaders. It examines what AI bias is, where it comes from, and the tangible business risks it creates, then sets out a concrete framework for mitigating it. Ultimately, the evidence suggests that true risk management in the age of AI requires direct control over the development lifecycle, a factor that fundamentally reshapes the "build versus buy" decision.

What is Algorithmic Bias in Healthcare, and Why Should My C-Suite Care?

In business terms, algorithmic bias is a predictable, systemic failure in an AI system that produces unfair, inaccurate, or discriminatory outcomes. It is not a random error but a repeatable flaw rooted in the data and design of the model. When this flaw manifests in a healthcare setting, it can lead to "catastrophic consequences," propagating deeply rooted societal biases and amplifying health inequalities at scale. For the C-suite, understanding this issue is not an academic exercise; it is a core component of corporate governance and strategic planning. The decision to procure and deploy an AI tool is no longer just a technology decision—it is a corporate governance decision with far-reaching implications.

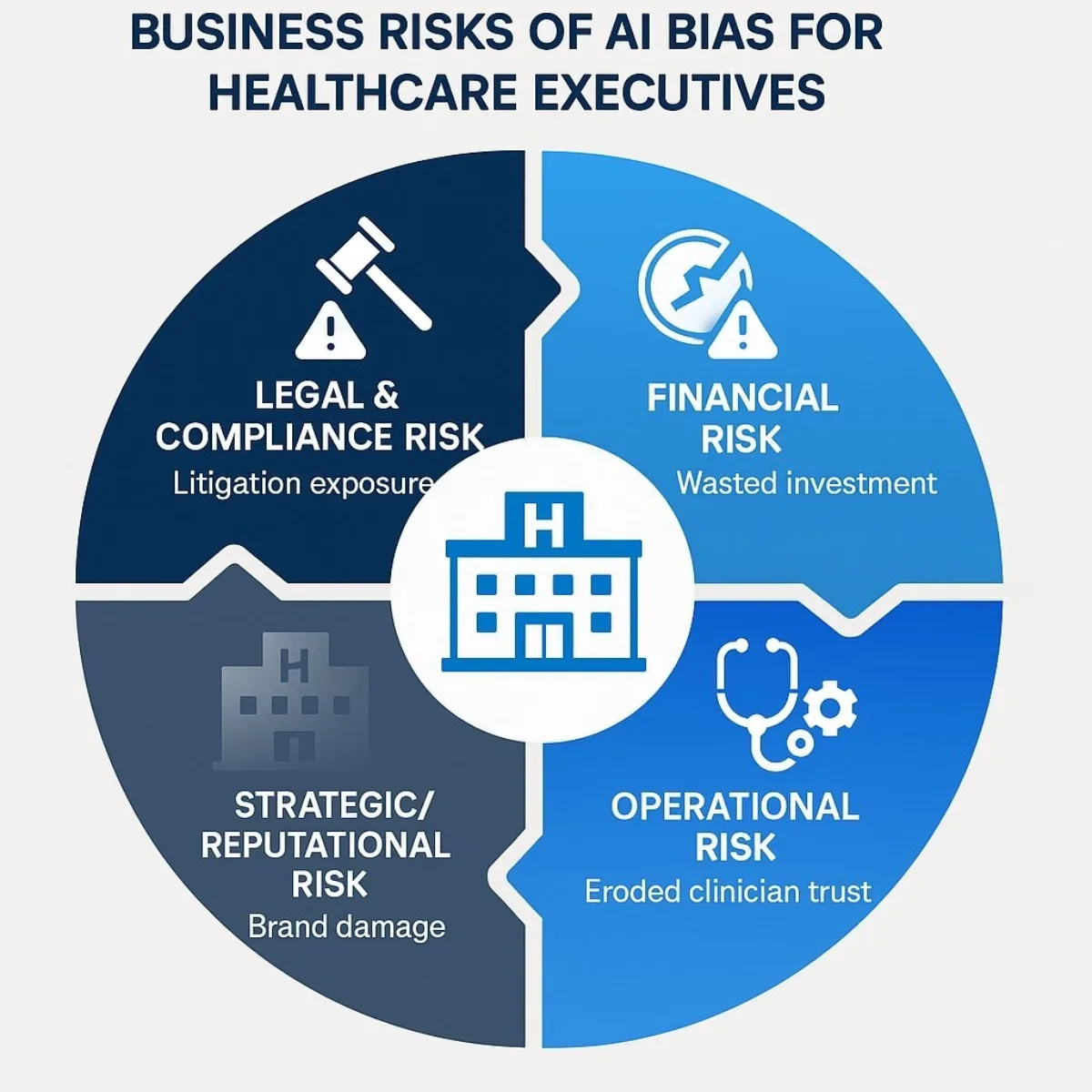

The risks associated with algorithmic bias map directly onto the primary concerns of any executive leadership team:

- Financial Risk: Biased AI systems that lead to misdiagnoses or inequitable care create significant legal exposure. A 2023 lawsuit against UnitedHealth, for instance, alleged that an AI system was used to wrongfully deny insurance claims, resulting in patients being refused care. Beyond litigation, organizations face the prospect of regulatory fines and the high cost of recalling, retraining, or replacing a flawed algorithm that has been deeply integrated into clinical workflows.

- Operational Risk: When AI tools provide inaccurate or untrustworthy recommendations, they disrupt clinical processes and erode provider trust. If clinicians cannot rely on an AI's output, they will develop workarounds or abandon the tool altogether, rendering the initial investment worthless and preventing the organization from realizing promised efficiency gains.

- Strategic and Reputational Risk: In the healthcare sector, trust is the most valuable asset. A public failure involving a biased AI system can cause irreparable damage to an organization's brand, leading to patient attrition and making it difficult to attract top clinical talent. As ethical AI becomes a competitive differentiator, deploying a biased system cedes a critical advantage to competitors who prioritize fairness and safety.

- Compliance Risk: The regulatory landscape is rapidly evolving. The Federal Trade Commission has explicitly advised companies to avoid implementing AI tools that could result in discrimination, and emerging frameworks like the EU AI Act are imposing strict requirements for fairness, transparency, and accountability. Inaction is not a viable strategy; it is a direct path toward non-compliance.

Ultimately, AI bias is not a social issue that has been imported into the business world; it is an inherent business risk that manifests as a social issue. A failure to address it represents a failure of due diligence, corporate responsibility, and strategic foresight.

How Does Bias Actually Manifest in AI Healthcare Tools? Real-World Evidence

The threat of algorithmic bias is not theoretical. A growing body of research from world-renowned institutions provides clear, measurable evidence of how these systems can fail, with disproportionate harm to women and ethnic minorities.

Case Study 1: The LSE Study on Language and Gender Bias

Research from the London School of Economics (LSE) uncovered startling gender bias in Google's Gemma large language model (LLM), a type of AI increasingly used to summarize patient case notes for overstretched social workers. The study's methodology was simple and powerful: researchers fed the AI identical case notes, changing only the patient's gender.

The results were dramatic. When processing information about an 84-year-old with mobility issues, the AI described the male version of the patient as having "a complex medical history, no care package and poor mobility." For the identical female patient, the summary was starkly different: "Despite her limitations, she is independent and able to maintain her personal care." Across thousands of trials, terms like "disabled," "unable," and "complex" appeared significantly more often in summaries for men. The direct business implication is alarming: because the amount of social care a person receives is determined by perceived need, these biased summaries could directly lead to women being allocated fewer resources and receiving less care than men with the exact same conditions.
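The counterfactual method described above can be sketched in a few lines of Python. This is a minimal illustration, not the LSE researchers' code: the swap table, the `audit` helper, and the deliberately biased `toy_summarizer` are all hypothetical stand-ins, and a real audit would call the actual model and use a much more careful gender-swapping procedure.

```python
import re
from collections import Counter

# Hypothetical male-to-female swap table; a real audit needs a far more careful mapping.
SWAPS = {"he": "she", "him": "her", "his": "her", "mr": "mrs", "man": "woman", "male": "female"}
PATTERN = re.compile(r"\b(" + "|".join(SWAPS) + r")\b", re.IGNORECASE)
NEED_TERMS = {"disabled", "unable", "complex"}  # terms highlighted in the study's findings


def swap_gender(note):
    """Return the note with male terms replaced by female ones, keeping capitalisation."""
    def replace(match):
        word = match.group(0)
        swapped = SWAPS[word.lower()]
        return swapped.capitalize() if word[0].isupper() else swapped
    return PATTERN.sub(replace, note)


def need_term_counts(summaries):
    """Count how often each need-related term appears across the summaries."""
    counts = Counter()
    for summary in summaries:
        counts.update(w for w in re.findall(r"[a-z]+", summary.lower()) if w in NEED_TERMS)
    return counts


def audit(notes, summarize):
    """Summarize each note twice (original and gender-swapped) and compare term counts."""
    original = need_term_counts(summarize(n) for n in notes)
    swapped = need_term_counts(summarize(swap_gender(n)) for n in notes)
    return original, swapped


def toy_summarizer(note):
    # Deliberately biased stand-in so the audit has something to detect; NOT a real model.
    if re.search(r"\bshe\b", note, re.IGNORECASE):
        return "She manages her personal care independently."
    return "He has a complex history and is unable to cope alone."


notes = ["Mr Smith is an 84-year-old man. He lives alone and his mobility is poor."]
male_counts, female_counts = audit(notes, toy_summarizer)
print(swap_gender(notes[0]))
print(dict(male_counts), dict(female_counts))
```

Because the two inputs differ only in gendered words, any systematic difference in the term counts can be attributed to the model rather than to the underlying case.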

Case Study 2: The MIT Studies on "Demographic Shortcuts" and LLM Inaccuracy

Researchers at the Massachusetts Institute of Technology (MIT) have exposed another insidious form of bias. One study found that AI models analyzing medical images like chest X-rays could predict a patient's self-reported race with "superhuman" accuracy—a feat even skilled human radiologists cannot perform. The critical finding was the connection between this capability and diagnostic error: the very models that were best at predicting a patient's demographics also exhibited the largest "fairness gaps," meaning they were less accurate in their clinical diagnoses for women and Black patients. This suggests the AI is not relying solely on clinical indicators. Instead, it appears to be using demographic information as an improper "demographic shortcut" to make a diagnosis, a flawed process that leads to inequitable outcomes.

A separate MIT study on LLMs used for medical treatment recommendations revealed a different vulnerability. The research found that non-clinical elements in patient messages—such as typographical errors or the use of expressive language—significantly reduced the accuracy of the AI's advice for female patients. This occurred even when researchers had meticulously removed all explicit gender identifiers like names and pronouns from the text, pointing to deeply embedded linguistic patterns that the AI associates with gender.

Case Study 3: The Broader Pattern of Racial and Ethnic Bias

The problem extends well beyond gender. A landmark study published in Science analyzed a widely used algorithm designed to identify patients who would benefit from high-risk care management programs. The algorithm used a patient's past healthcare costs as a proxy for their current health needs—a seemingly logical, data-driven choice. However, because historically less money has been spent on Black patients compared to white patients with the same level of illness, the AI falsely concluded that Black patients were healthier. As a result, Black patients were far less likely to be flagged for the additional care they needed, directly perpetuating and scaling a known healthcare disparity.

This pattern is repeated across numerous applications. A University of Florida study found that the accuracy of an AI tool for diagnosing bacterial vaginosis was highest for white women and lowest for Asian women, with Hispanic women receiving the most false positives. Similarly, numerous skin cancer detection algorithms have been trained predominantly on images of light-skinned individuals, resulting in significantly lower diagnostic accuracy for patients with darker skin, a critical failure given that Black patients already have markedly lower melanoma survival rates than white patients.

To provide a clear overview for executive review, the following table summarizes these and other key findings.

| Study/Source | AI Application | Bias Identified | Disadvantaged Group(s) |
|---|---|---|---|
| London School of Economics (LSE) | LLM for Case Note Summarization | Systematically downplays health needs; uses less severe language. | Women |
| Massachusetts Institute of Technology (MIT) | Medical Imaging Analysis (X-rays) | Uses "demographic shortcuts," leading to diagnostic inaccuracies. | Women, Black Patients |
| Massachusetts Institute of Technology (MIT) | LLM for Treatment Recommendations | Reduced accuracy based on non-clinical textual cues. | Women |
| University of Florida | Machine Learning for Diagnostics | Varied accuracy in diagnosing bacterial vaginosis. | Asian & Hispanic Women |
| Obermeyer et al. (Science) | Resource Allocation Algorithm | Used healthcare cost as a proxy for need, underestimating illness severity. | Black Patients |

This evidence demonstrates that bias is not an isolated incident but a pervasive problem across the HealthTech landscape, affecting a wide range of AI applications and patient populations.

What Are the Root Causes of AI Bias? A Look Under the Hood

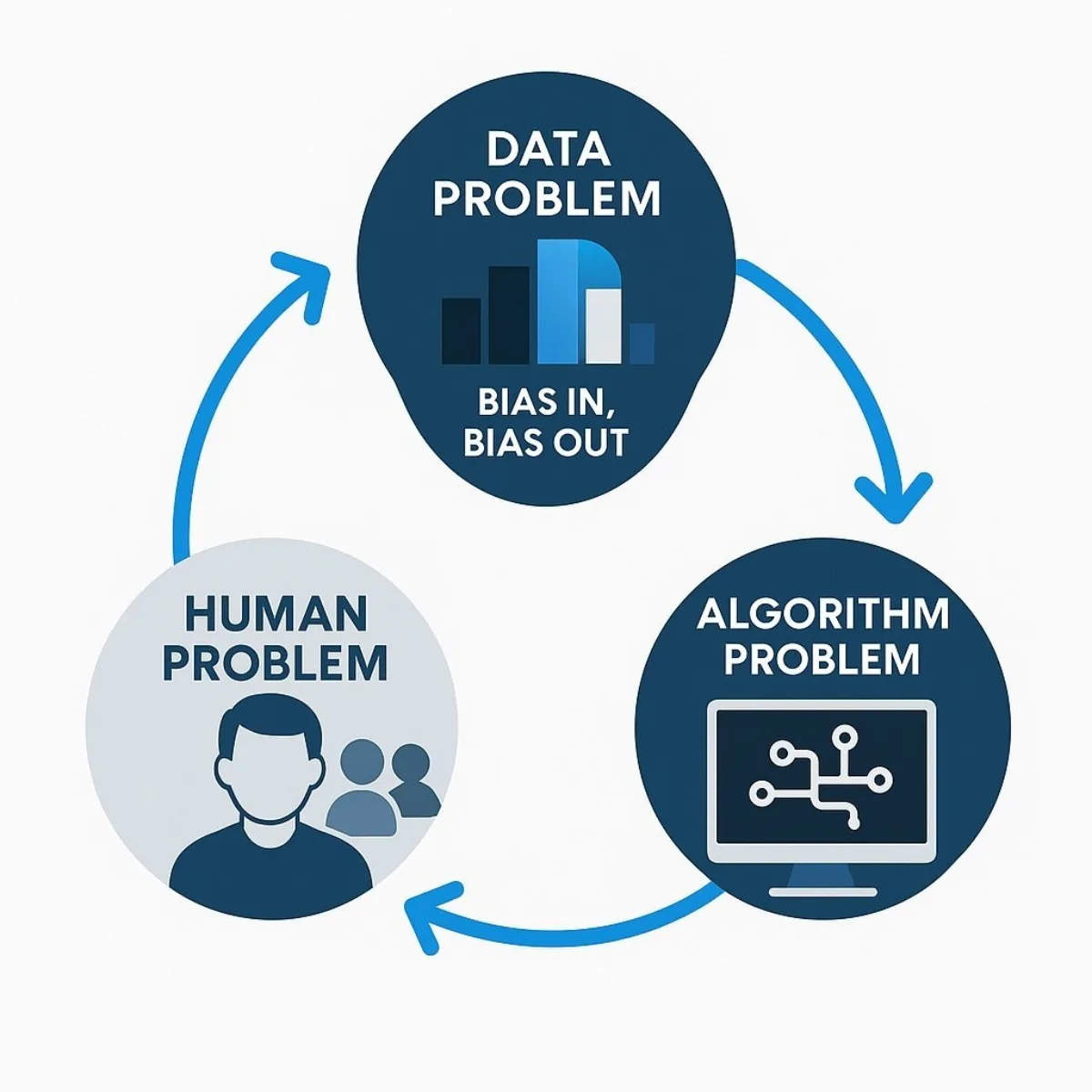

Understanding the "why" behind AI bias is crucial for developing effective mitigation strategies. The issue is not that AI is inherently malicious; rather, it is a mirror that reflects and amplifies the flaws in the data we feed it and the processes we use to build it. The root causes can be traced to a vicious cycle involving data, human factors, and the algorithms themselves.

Cause 1: The Data Problem ("Bias In, Bias Out")

The foundational issue is that AI models learn from data, and historical medical data is profoundly biased. For decades, clinical research systematically excluded or underrepresented women and ethnic minorities, focusing primarily on white males. An AI trained on this skewed dataset will inevitably learn a distorted view of medicine. For example, an algorithm trained on cardiovascular data from men may fail to recognize a heart attack in a woman, whose symptoms often present differently, leading to misdiagnosis and poorer outcomes. This problem of unrepresentative data is compounded by other factors:

- Geographic and Socioeconomic Skew: Most training data is sourced from a few large, urban academic medical centers. This fails to capture the health realities of rural, lower-income, or geographically diverse populations, limiting the model's applicability and fairness.

- Missing Metadata: Crucial information on race, ethnicity, and social determinants of health is often not collected or associated with patient records. This makes it impossible for developers to even test for demographic bias, let alone correct it.
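Both data problems listed above can be checked with very little code. The sketch below is illustrative only: the `representation_report` helper, the group labels, and the population shares are assumptions invented for the example, not figures from any real dataset.

```python
from collections import Counter


def representation_report(group_labels, population_shares):
    """Compare group shares in the training data with a reference population.

    group_labels: one label per training record, or None when the metadata is missing.
    population_shares: hypothetical reference shares for the population being served.
    Returns (share of records with no label, per-group over/under-representation).
    """
    labelled = [g for g in group_labels if g is not None]
    missing_share = 1 - len(labelled) / len(group_labels)
    counts = Counter(labelled)
    gaps = {
        group: round(counts[group] / len(labelled) - share, 3)
        for group, share in population_shares.items()
    }
    return missing_share, gaps


# Invented example: 100 records, 10 of them with no location metadata at all.
records = ["urban"] * 72 + ["rural"] * 18 + [None] * 10
missing_share, gaps = representation_report(records, {"urban": 0.6, "rural": 0.4})
print(f"missing metadata: {missing_share:.0%}")
print(gaps)  # positive = over-represented, negative = under-represented
```

A large negative gap for a group, or a high share of unlabelled records, is an early warning that the model may not generalize to that group and that bias testing will be difficult.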

Cause 2: The Human Problem (Developer and Institutional Blind Spots)

AI systems are built by people, and human biases—both conscious and unconscious—are inevitably encoded into their design.

- Lack of Diversity in Tech: The teams building healthcare AI often lack the racial, gender, and socioeconomic diversity of the patient populations the tools are meant to serve. This homogeneity can lead to blind spots, where developers fail to consider the unique needs and contexts of different groups.

- Subjective Data Labeling: For many AI models, humans must first label the training data (e.g., identifying a tumor in an image). This process is subjective and can introduce the annotators' own biases and stereotypes into the "ground truth" from which the AI learns.

- Problem Formulation: Bias can be introduced at the very genesis of a project. A developer's choice of which problem to solve, what data to use, and which performance metrics to prioritize is a value judgment that can have discriminatory downstream effects.

Cause 3: The Algorithm Problem (Flawed Proxies and Black Boxes)

Finally, the design of the algorithm itself can be a source of bias.

- The Proxy Trap: As seen in the resource allocation algorithm, using an easily measured variable (a proxy) like healthcare cost to stand in for a complex concept like health need can be a disastrously flawed assumption. This approach doesn't just reflect existing inequities; it automates and institutionalizes them at scale.

- The "Black Box" Dilemma: Many of the most powerful deep learning models are effectively "black boxes." It is extremely difficult to understand how they arrive at a particular recommendation, which makes auditing them for bias and explaining their decisions to clinicians or regulators a significant challenge. This opacity represents a major liability for any organization deploying such technology.
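The proxy trap can be made concrete with a small worked example. The numbers below are invented for illustration and are not taken from the Science study: two groups have identical levels of illness, but historical spending on group B is assumed to be half that of group A.

```python
from dataclasses import dataclass


@dataclass
class Patient:
    group: str
    chronic_conditions: int  # stand-in for true health need
    annual_cost: float       # historical spending, used as the proxy


# Invented data: same illness levels in both groups, but half the spending on group B.
patients = [
    Patient("A", 5, 10_000), Patient("A", 3, 6_000), Patient("A", 1, 2_000),
    Patient("B", 5, 5_000), Patient("B", 3, 3_000), Patient("B", 1, 1_000),
]


def flag_top(candidates, score, k):
    """Flag the k patients with the highest score for extra care management."""
    return sorted(candidates, key=score, reverse=True)[:k]


flagged_by_cost = flag_top(patients, lambda p: p.annual_cost, k=2)
flagged_by_need = flag_top(patients, lambda p: p.chronic_conditions, k=2)

print([p.group for p in flagged_by_cost])  # ['A', 'A']
print([p.group for p in flagged_by_need])  # ['A', 'B']
```

Ranking by cost flags only group A, even though the sickest patient in group B is exactly as ill as the sickest patient in group A. Ranking by the need measure itself flags one patient from each group.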

These three causes feed into one another. Historically biased healthcare practices (a human problem) create skewed and unrepresentative datasets (a data problem). These flawed datasets are then used to train opaque models (an algorithm problem) that automate and amplify the original biases. The biased outputs from these models then reinforce inequitable treatment patterns, generating more biased data that will be used to train the next generation of AI. Breaking this cycle requires a holistic strategy that intervenes at every stage of the AI lifecycle.

What Are the True Costs of Ignoring AI Bias? Assessing the Risk to Your Organization

For a Chief Financial Officer or Chief Risk Officer, the technical and ethical dimensions of AI bias must be translated into a quantifiable business risk portfolio. From this perspective, the evidence is clear: inaction is the most expensive and riskiest course of action.

Risk Category 1: Legal and Compliance Exposure

The legal and regulatory environment surrounding AI is tightening, and organizations deploying biased systems are increasingly exposed.

- Litigation Risk: The 2023 lawsuit against UnitedHealth shows that patients and their advocates are willing to take legal action against organizations they believe are using AI to improperly deny care. This type of litigation carries the dual threat of substantial financial penalties and severe reputational damage.

- Violation of Anti-Discrimination Laws: Deploying an AI tool that systematically provides inferior care to a protected group can be interpreted as a violation of long-standing anti-discrimination laws, such as the U.S. Civil Rights Act or EU equality directives. Critically, intent is often not a prerequisite for liability; the discriminatory impact is what matters.

- Regulatory Scrutiny and Fines: Federal agencies like the FTC and HHS are actively scrutinizing the use of algorithms in healthcare. Furthermore, sweeping regulations like the EU AI Act are set to impose strict requirements for fairness, transparency, and risk management on "high-risk" AI systems, a category that will undoubtedly include many healthcare applications. Non-compliance will lead to heavy fines and mandated operational changes.

Risk Category 2: Financial and Reputational Fallout

The financial consequences of deploying a biased algorithm extend far beyond legal fees and regulatory penalties.

- Direct Costs and Wasted Investment: Discovering significant bias in a deployed AI system can trigger a cascade of direct costs, including the expense of pulling the system from production, conducting a complete data and model audit, and the potential need to scrap the project and start over. The initial investment in development and integration is rendered worthless.

- Erosion of Trust and Brand Damage: For a healthcare organization, trust is paramount. A public report of a biased algorithm harming patients can shatter that trust overnight. This can lead to an exodus of patients, damage relationships with referring physicians and insurers, and make it significantly harder to recruit and retain top clinical and technical talent.

- Failed Adoption and Unrealized ROI: Even if a biased system is not publicly exposed, its flaws can undermine its effectiveness. If clinicians perceive that an AI tool is unreliable or unfair, they will cease to use it. This leads to failed adoption, ensuring that the organization never realizes the projected return on its technology investment.

Risk Category 3: Clinical and Ethical Failures

Beyond the balance sheet, the most significant cost of AI bias is the human cost.

- Direct Patient Harm: Biased AI leads to real, tangible harm to patients through misdiagnoses, delayed treatment, and the recommendation of suboptimal care plans.

- Exacerbation of Health Disparities: Healthcare systems already struggle with profound and persistent disparities in outcomes for women and minority populations. For example, Black women are 40% more likely to die from breast cancer than white women, and Black mothers face maternal mortality rates several times higher than those of their white counterparts. Deploying biased AI tools does not just fail to solve this problem; it actively makes it worse, using advanced technology to widen the health equity gap.

How Can We Build Fairer, More Equitable AI? A Strategic Framework for Leaders

Shifting from identifying the problem to architecting a solution requires a robust governance framework. This is not solely a technical task for data scientists; it is a strategic imperative that must be led from the top of the organization. The goal is to empower leaders to ask the right questions, demand accountability, and implement structures that promote fairness by design.

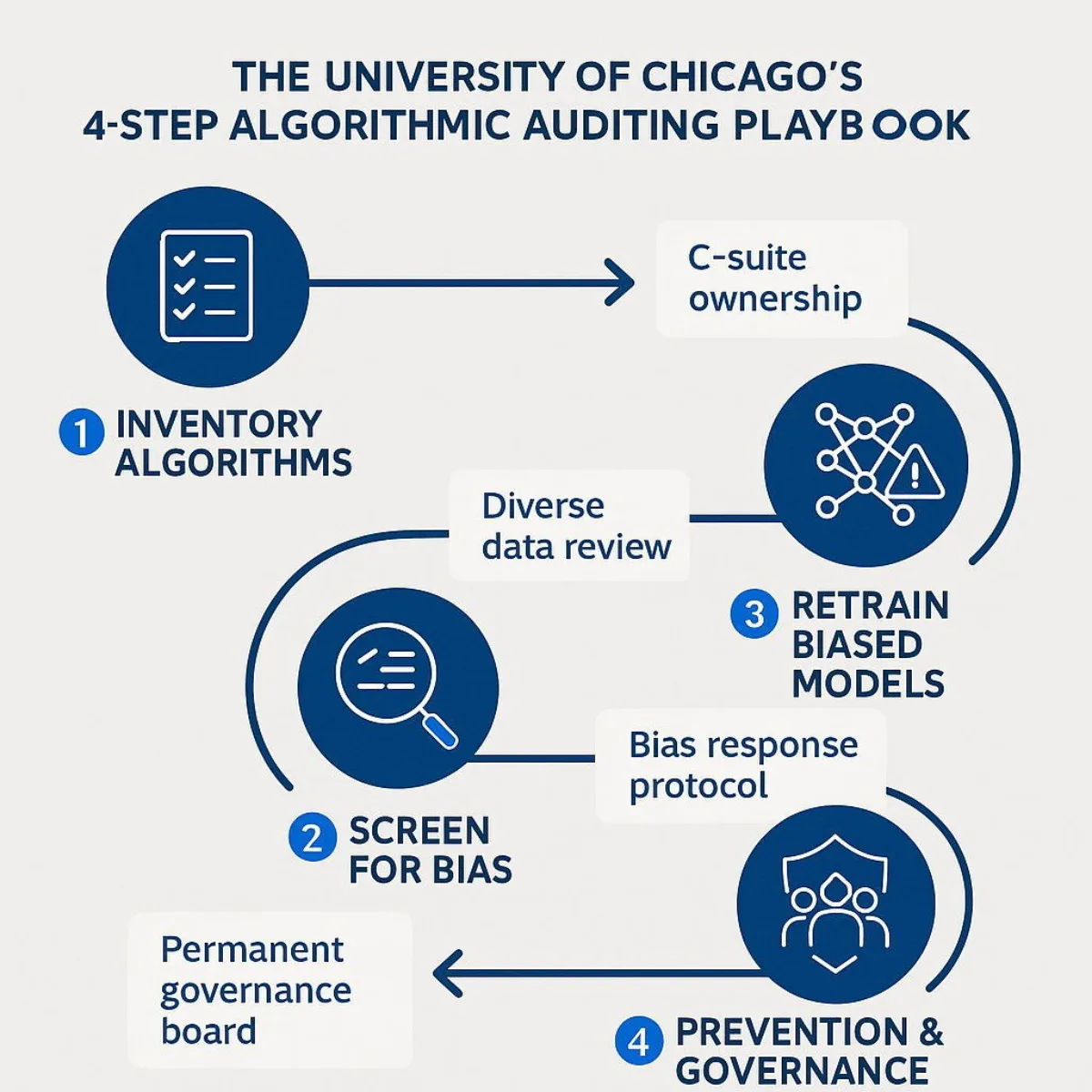

The University of Chicago's 4-Step Playbook for Algorithmic Auditing

Researchers at the University of Chicago Booth School's Center for Applied Artificial Intelligence have developed a practical, four-step playbook that provides a clear starting point for any organization seeking to manage its algorithmic risk.

- Step 1: Inventory Algorithms. An organization cannot manage what it does not measure. The first essential step is to create and maintain a comprehensive inventory of every algorithm and AI model being used or developed across the enterprise. This inventory should be overseen by a designated steward at the C-suite level, supported by a diverse committee of stakeholders.

- Step 2: Screen Each Algorithm for Bias. This is the "debugging" phase for fairness. Each algorithm in the inventory must be systematically assessed for potential bias. This involves scrutinizing its inputs, outputs, and performance across different demographic subgroups. Special attention must be paid to the proxies the algorithm uses (e.g., cost, zip code) and whether they correlate with protected characteristics like race or gender.

- Step 3: Retrain Biased Algorithms. If bias is detected, the organization must have a clear protocol for action. This may involve retraining the algorithm with more complete and representative data, adjusting its objective to predict a more equitable outcome, or, if the bias cannot be mitigated, suspending its use entirely.

- Step 4: Prevention. The final step is to move from a reactive to a proactive posture by establishing permanent governance structures. This includes creating a permanent team responsible for upholding fairness protocols, developing a clear pathway for employees and patients to report concerns about algorithmic bias, and mandating rigorous documentation for all AI models.
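The screening in Step 2 often starts with a simple comparison of error rates across subgroups. The sketch below is one possible illustration rather than part of the Chicago Booth playbook: the record format, the function names, and the example data are assumptions. It measures the false negative rate (patients who needed care but were not flagged) for each group and reports the spread between the best and worst groups.

```python
from collections import defaultdict


def false_negative_rates(records):
    """records: iterable of (group, actually_needs_care, model_flagged) tuples."""
    positives = defaultdict(int)
    missed = defaultdict(int)
    for group, actual, predicted in records:
        if actual:
            positives[group] += 1
            if not predicted:
                missed[group] += 1
    return {group: missed[group] / positives[group] for group in positives}


def fairness_gap(rates):
    """Difference between the worst and best subgroup rates."""
    return max(rates.values()) - min(rates.values())


# Invented audit data: (group, actually needs care, flagged by the model).
audit_records = (
    [("X", True, True)] * 3 + [("X", True, False)] * 1 + [("X", False, False)] * 4
    + [("Y", True, True)] * 1 + [("Y", True, False)] * 3 + [("Y", False, False)] * 4
)

rates = false_negative_rates(audit_records)
print(rates)                # {'X': 0.25, 'Y': 0.75}
print(fairness_gap(rates))  # 0.5
```

In this invented example the model misses three quarters of the group Y patients who need care but only one quarter of group X, a gap that would warrant the retraining or suspension described in Step 3.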

Core Principles for Building Equitable AI

Beyond this tactical playbook, leaders should champion a set of core principles to guide their organization's AI strategy:

- Demand Transparency and Explainability: Leaders must reject the "black box." Any AI system procured or developed must be transparent and explainable. Stakeholders should be able to understand, at an appropriate level, how the AI works and the reasoning behind its recommendations. This is essential for clinical trust, debugging, and regulatory compliance.

- Ensure Authentic Stakeholder Engagement: The development process must include the voices of those the AI will affect. Engaging diverse groups of patients, community advocates, and frontline clinicians throughout the AI lifecycle is critical for identifying potential blind spots and ensuring the final tool is both fair and clinically useful.

- Establish Clear Accountability: Fairness cannot be an orphan responsibility. The organization must explicitly define who is accountable for the equitable performance of its algorithms, from the development team to the clinical leadership and the C-suite.

Implementing this comprehensive framework, however, reveals a fundamental strategic challenge. The level of access, transparency, and control required to execute these steps is often incompatible with the nature of generic, off-the-shelf AI products. An organization that relies on a third-party, proprietary model may find it impossible to conduct a deep audit of the training data (Step 2) or retrain the algorithm to correct for bias (Step 3). Such an organization is entirely dependent on the vendor's competence and willingness to address these issues, ceding control over a critical area of business and clinical risk. This reality forces a strategic re-evaluation of how healthcare AI should be sourced and managed.

The Path Forward: Why a Custom Approach to AI Is a Safer Investment

Artificial intelligence is a transformative force with the potential to revolutionize healthcare delivery. However, this analysis demonstrates that deploying this technology carelessly creates a portfolio of clinical, legal, financial, and reputational risks that no responsible organization can afford to ignore. Off-the-shelf AI solutions, while offering the allure of speed and lower upfront costs, often obscure these risks within a "black box," stripping an organization of the ability to audit, control, and correct for dangerous biases.

The only way to truly implement a robust governance framework and mitigate the risks of algorithmic bias is to have full control over the AI development lifecycle. This is where a custom approach to AI transitions from a technical choice to a strategic imperative. In contrast to the limitations of off-the-shelf products—vendor lock-in, a lack of transparency, inability to retrain, and difficult integration—a custom-built solution empowers an organization to manage its risk proactively.

A custom AI strategy, developed with a partner like Baytech Consulting, is a direct investment in risk mitigation. It allows an organization to:

- Curate and Vet Training Data: Proactively build and use datasets that are representative of the specific patient populations being served, allowing for the deliberate correction of historical imbalances.

- Build Transparent and Auditable Models: Design algorithms for explainability from the ground up, ensuring that their decision-making processes can be understood, validated, and defended to clinicians and regulators.

- Integrate Seamlessly with Clinical Workflows: Develop tools that are tailored to existing systems and processes, which maximizes efficiency and drives higher rates of adoption among providers.

- Maintain Control and Ownership: Retain the full ability to monitor, update, and retrain the model as new data becomes available or as fairness issues are identified. This transforms the AI from a recurring subscription liability into a strategic corporate asset.

Ultimately, choosing a custom AI development path is not about a higher cost; it is about making a strategic investment in safety, equity, and long-term value. It is the most reliable way to build AI solutions that are not just powerful, but also fundamentally trustworthy.

Is your organization prepared to navigate the complexities of AI in healthcare? The risks are significant, but so are the rewards when managed correctly. Contact Baytech Consulting for a confidential assessment of how a custom, responsible AI strategy can protect your patients, your reputation, and your bottom line.

Supporting Links

- MIT News: https://news.mit.edu/2024/study-reveals-why-ai-analyzed-medical-images-can-be-biased-0628

- LSE News: AI tools risk downplaying women's health needs in social care

- Chicago Booth Review: https://www.chicagobooth.edu/research/center-for-applied-artificial-intelligence/research/algorithmic-bias

About Baytech

At Baytech Consulting, we specialize in guiding businesses through the software development process, helping you build scalable, efficient, and high-performing software that evolves with your needs. Our MVP-first approach helps our clients minimize upfront costs and maximize ROI. Ready to take the next step in your software development journey? Contact us today to learn how we can help you achieve your goals with a phased development approach.

About the Author

Bryan Reynolds is an accomplished technology executive with more than 25 years of experience leading innovation in the software industry. As the CEO and founder of Baytech Consulting, he has built a reputation for delivering custom software solutions that help businesses streamline operations, enhance customer experiences, and drive growth.

Bryan’s expertise spans custom software development, cloud infrastructure, artificial intelligence, and strategic business consulting, making him a trusted advisor and thought leader across a wide range of industries.