6 Expensive Software Development Myths Every CTO Needs to Know

November 13, 2025 / Bryan Reynolds

The 6 Software Development Myths That Are Costing Your Business Money

Answering the Questions That Keep CTOs Awake at Night

As a technology leader—a VP of Engineering, CTO, or Project Manager—you operate under a unique and constant set of pressures. The C-suite demands innovation and speed, while finance scrutinizes every line item of your budget. A 2024 Gartner report on the priorities of software engineering leaders confirms this reality: integrating AI and increasing developer productivity are top goals for 48% of leaders, yet 60% expect significant budget restrictions to be a primary obstacle. How do you deliver more with less? How do you accelerate growth while managing costs?

These are the tough questions. And in an industry often clouded by buzzwords and folklore, getting straight, data-backed answers is a challenge. This article is different. It is an exercise in radical transparency, built on the 'They Ask, You Answer' methodology. The core principle is simple: to become the most trusted voice in your field, you must be willing to answer the questions everyone is asking, especially the difficult ones about costs, potential problems, and honest comparisons.

Today’s technology leaders are sophisticated buyers who conduct extensive research to de-risk major decisions. Their primary fear is not just overspending, but making a strategic mistake that costs market share, talent, and time. By addressing these fears head-on with data, we can move past the myths. This report will provide a data-backed framework to challenge six of the most pervasive and financially damaging myths in software development, replacing industry folklore with financial and operational facts. For a deeper dive into mitigating budget risks, see our guide on how to budget for custom software in 2026.

Myth 1: To go faster, add more people

The Question You're Really Asking

"My project is behind schedule and the pressure is immense. How can I accelerate delivery without derailing the entire project and blowing the budget?"

When a project falls behind, the most common gut reaction is to throw more resources at it. This stems from a manufacturing-era mindset where work is measured in interchangeable units like the "man-month." However, as Fred Brooks argued in his seminal 1975 book, The Mythical Man-Month, software engineering is a craft of complex, interdependent tasks. His best-known analogy, often paraphrased as "nine women can't make a baby in one month," remains as true today as it was then.

The Data-Backed Reality of Brooks's Law

This observation is formalized in what is now known as Brooks's Law: "Adding manpower to a late software project makes it later." This is not merely a clever saying; it is a principle supported by decades of empirical evidence. The law is rooted in three inescapable realities of software development:

- Ramp-Up Time: New engineers are not immediately productive. They must learn the codebase, the architecture, the tools, and the team's unique processes. This requires significant time from your most experienced team members, pulling them away from critical path tasks and creating a net drain on the project's current velocity.

- Task Indivisibility: Many complex software tasks are inherently sequential and cannot be perfectly partitioned. Attempting to divide an indivisible task can introduce more management and integration overhead than it saves in parallel work.

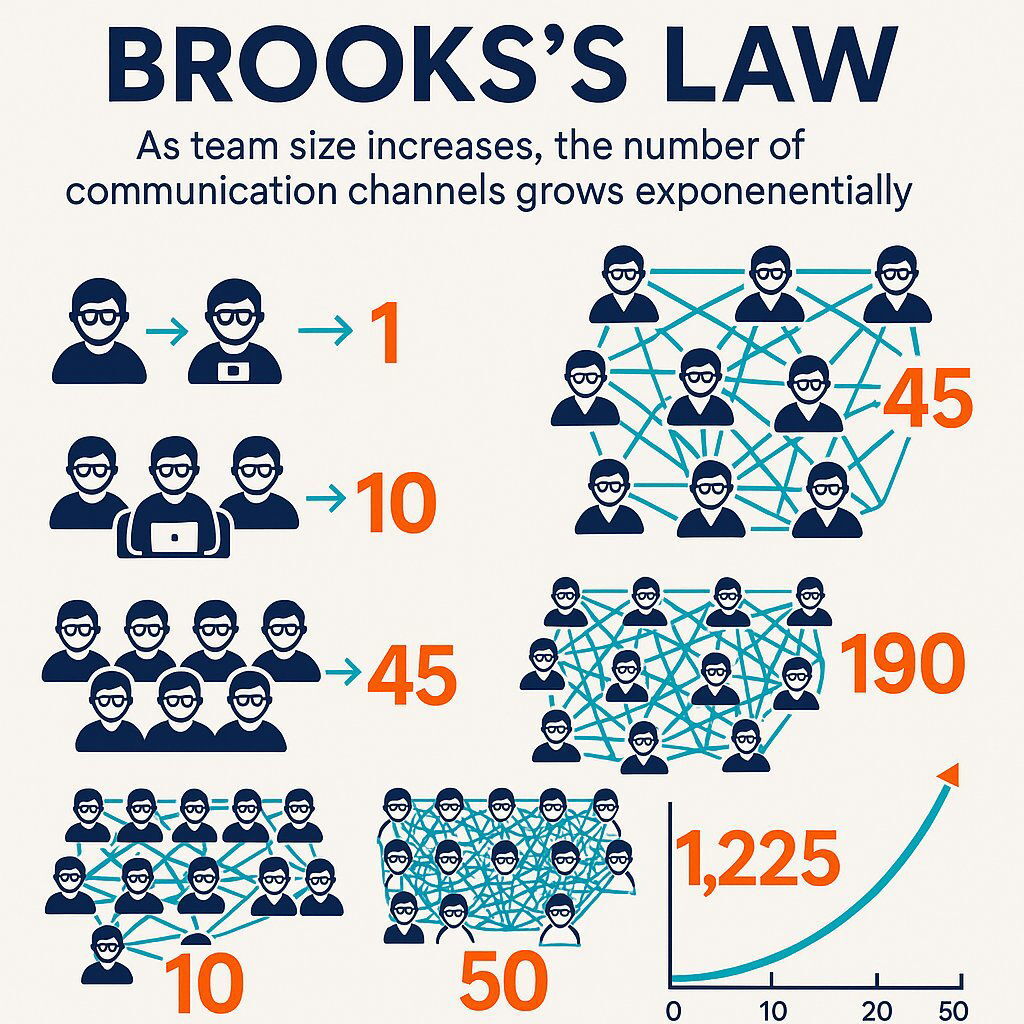

- Communication Overhead: This is the silent killer of productivity. As you add people to a team, the number of potential communication channels does not grow linearly; it grows exponentially. The time spent coordinating, clarifying, and resolving conflicts begins to consume the very hours you sought to add.

This explosion in complexity can be quantified using a standard project management formula for calculating communication channels, where n is the number of people on the team: Channels = n(n − 1) / 2. The results are staggering.

| Team Size (n) | Number of Communication Channels |
|---|---|
| 2 people | 1 channel |
| 5 people | 10 channels |
| 10 people | 45 channels |
| 20 people | 190 channels |
| 50 people | 1,225 channels |

A manager might intuitively believe that doubling a team from 10 to 20 people doubles the complexity. The mathematics show it more than quadruples it, from 45 channels to 190. This transforms the abstract concept of "overhead" into a hard, financial reality.
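As a quick check on these numbers, the channel formula can be computed directly. The following is a minimal Python sketch (the function name is illustrative, not from any library) that reproduces each row of the table and the 10-to-20 comparison:

```python
def communication_channels(n: int) -> int:
    """Pairwise communication channels in a team of n people: n(n - 1) / 2."""
    if n < 0:
        raise ValueError("team size must be non-negative")
    return n * (n - 1) // 2


for size in (2, 5, 10, 20, 50):
    print(f"{size:>3} people -> {communication_channels(size):>5,} channels")

# Doubling the team from 10 to 20 people multiplies the channel count by about 4.2x.
ratio = communication_channels(20) / communication_channels(10)
print(f"10 -> 20 people: {ratio:.2f}x the channels")
```

Because the formula is quadratic, each new hire adds one channel per existing member, which is why the overhead outpaces the added capacity.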

The decision to overstaff a project creates a dynamic similar to technical debt, but within the team's structure. This "organizational debt" accrues interest daily in the form of more meetings, status updates, and miscommunications. Advanced code analysis tools have even visualized this effect, showing a widening gap between the total number of developers on a project and their normalized output, which is evidence that each additional person contributes progressively less. The solution is not to add more resources, but to "refactor" the team's processes and remove impediments, much like one would refactor code to pay down technical debt. To maximize impact while minimizing risk, consider the strategies recommended in The True Business Value of the Agile Manifesto, which explores how agility, not just headcount, drives true productivity.

Myth 2: The budget is approved. The big spending is over

The Question You're Really Asking

"What is the true, all-in cost of this software over its entire lifecycle, and how can I budget for it accurately?"

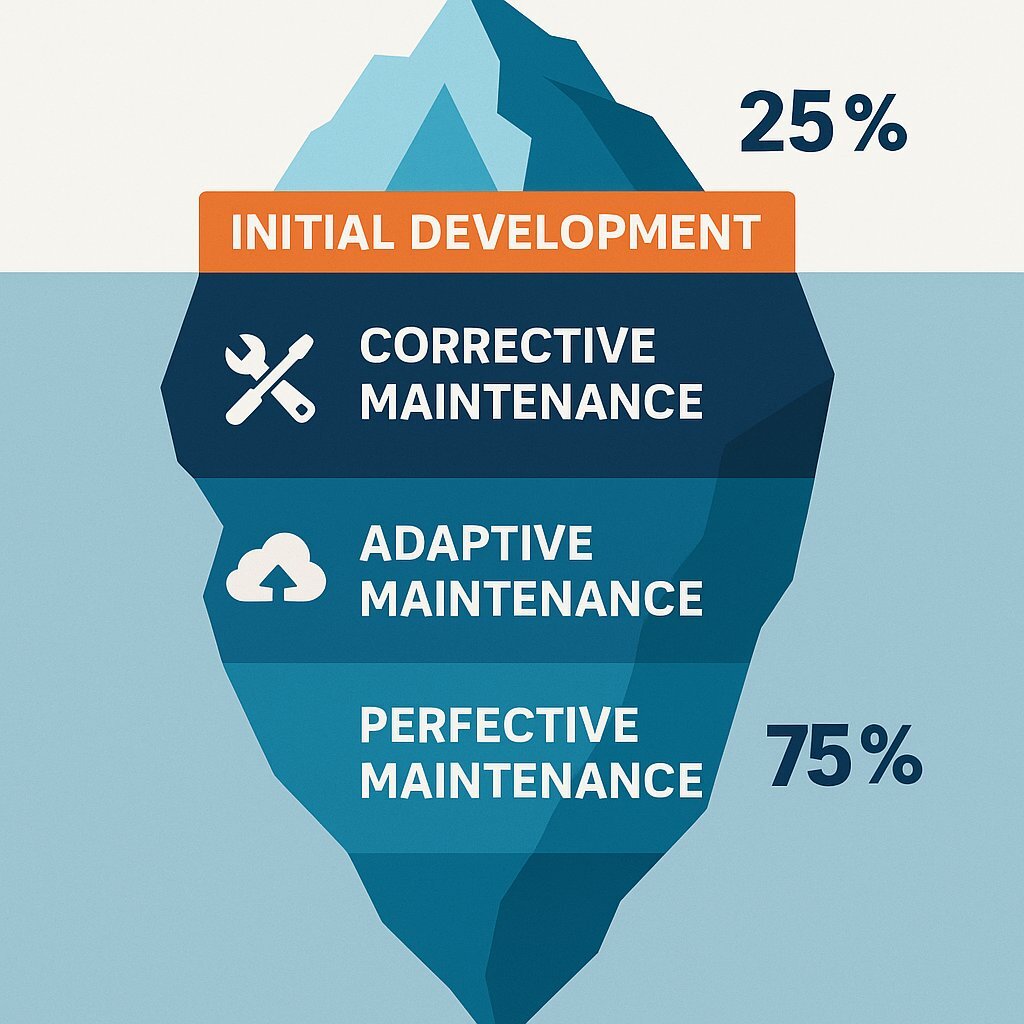

Securing the initial development budget often feels like the final hurdle. In reality, it is merely the entry fee. The initial build cost is often just the tip of the iceberg, representing a fraction of the software's true lifetime expense. To understand the full financial picture, leaders must adopt a Total Cost of Ownership (TCO) mindset, which accounts for all direct and indirect costs from "cradle to grave." Key components of TCO include initial development, infrastructure, support, training, and, most significantly, ongoing maintenance and upgrades.

The Data-Backed Reality of Maintenance Costs

The data on post-launch software costs is one of the most sobering realities in the technology industry. Multiple independent studies converge on a startling conclusion:

- Across the industry, maintenance typically accounts for 50-80% of a software's total lifetime expenditures.

- Gartner research estimates that organizations spend 55-80% of their total IT budgets on maintenance activities rather than on new, innovative projects.

- IBM research corroborates this, finding that maintenance consumes 50-75% of total software costs over the application's lifecycle.

To translate these percentages into real dollars, consider a software project with an initial development cost of $500,000. If maintenance makes up 50-80% of total lifetime cost, as these industry benchmarks suggest, the business should expect to spend an additional $500,000 to $2 million on maintenance over the software's lifetime.
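The dollar range follows from simple arithmetic. Under the simplifying assumption that lifetime cost consists only of the initial build plus maintenance, a maintenance share s of TCO implies maintenance spending of s / (1 − s) times the build cost. A minimal Python sketch of that calculation (names are illustrative):

```python
def maintenance_cost(initial_build: float, maintenance_share: float) -> float:
    """Maintenance spend implied when maintenance is `maintenance_share` of TCO.

    Simplifying assumption: TCO = initial build + maintenance, so
    maintenance = initial_build * share / (1 - share).
    """
    if not 0 <= maintenance_share < 1:
        raise ValueError("maintenance_share must be in [0, 1)")
    return initial_build * maintenance_share / (1 - maintenance_share)


build = 500_000
for share in (0.50, 0.80):
    maintenance = maintenance_cost(build, share)
    total = build + maintenance
    print(f"{share:.0%} maintenance share: ${maintenance:,.0f} in maintenance, "
          f"TCO {total / build:.1f}x the initial build")
```

At a 50% share, maintenance equals the $500,000 build (TCO of 2x); at 80%, it reaches $2 million (TCO of 5x). Real projects also carry infrastructure, support, and training costs, so treat this as a lower-bound view.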

The term "maintenance" itself can be misleadingly simple. It is not a single activity but a collection of distinct, ongoing efforts, each with its own cost structure. Understanding this breakdown is crucial for accurate budgeting.

| Cost Category | Example Cost (for a $500k project) | Approx. Share of TCO |
|---|---|---|
| Initial Development (Visible Cost: The Tip of the Iceberg) | $500,000 | 25% |
| Corrective Maintenance (Bug Fixes) | $300,000 - $600,000 | 15-30% |
| Adaptive Maintenance (New OS/APIs) | $300,000 - $500,000 | 15-25% |
| Perfective Maintenance (Enhancements) | $600,000 - $800,000 | 30-40% |
| Preventive Maintenance (Refactoring) | $200,000 - $400,000 | 10-20% |
| Total Lifetime Cost (TCO) | $1.9M - $2.8M | 100% |

The percentage shares are approximate and are calculated against a total of roughly $2 million.
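The table's totals can be checked by summing the low and high estimates for each category. A short Python sketch, with the figures copied from the table:

```python
# Low/high estimates in USD for the $500k example project, copied from the table.
COST_RANGES = {
    "Initial development": (500_000, 500_000),
    "Corrective maintenance": (300_000, 600_000),
    "Adaptive maintenance": (300_000, 500_000),
    "Perfective maintenance": (600_000, 800_000),
    "Preventive maintenance": (200_000, 400_000),
}

build_cost = COST_RANGES["Initial development"][0]
low_total = sum(low for low, _ in COST_RANGES.values())
high_total = sum(high for _, high in COST_RANGES.values())

print(f"TCO: ${low_total:,} to ${high_total:,}")
print(f"That is {low_total / build_cost:.1f}x to {high_total / build_cost:.1f}x the initial build")
```

The sums land at $1.9 million and $2.8 million, or roughly 4-6 times the initial build.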

This data reveals a profound truth: the single greatest lever for controlling long-term TCO is not negotiating developer rates or cutting features. It is the quality of the initial architectural decisions made before a single line of code is written. A well-designed system, built on principles of modularization, abstraction, and separation of concerns, is inherently more resilient to change and cheaper to maintain. Conversely, a poorly designed, monolithic system incurs a "maintenance tax" on every future bug fix, update, and enhancement. Investing in expert-level software design upfront is not a cost center; it is the most effective TCO reduction strategy available. For a comprehensive look at why maintenance is always a strategic priority, see Software Maintenance Mastery: Executive Guide to Post-Launch Success.
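To make "abstraction and separation of concerns" concrete, here is a minimal, hypothetical Python sketch: checkout logic depends only on a small `PaymentGateway` interface, so replacing a payment provider (a typical adaptive-maintenance task) touches one adapter class rather than every caller. All class and method names are invented for illustration and do not refer to any real payment SDK.

```python
from typing import Protocol


class PaymentGateway(Protocol):
    """The only contract checkout code depends on (hypothetical interface)."""

    def charge(self, customer_id: str, amount_cents: int) -> str: ...


class LegacyGateway:
    """Hypothetical adapter for the current provider."""

    def charge(self, customer_id: str, amount_cents: int) -> str:
        return f"legacy-{customer_id}-{amount_cents}"


class NewGateway:
    """Hypothetical adapter for a replacement provider."""

    def charge(self, customer_id: str, amount_cents: int) -> str:
        return f"new-{customer_id}-{amount_cents}"


def checkout(gateway: PaymentGateway, customer_id: str, amount_cents: int) -> str:
    # Business rules live here and never import a vendor SDK directly,
    # so swapping providers does not ripple through this function.
    if amount_cents <= 0:
        raise ValueError("amount must be positive")
    return gateway.charge(customer_id, amount_cents)


print(checkout(LegacyGateway(), "c42", 1999))
print(checkout(NewGateway(), "c42", 1999))
```

In a monolith where checkout code calls the vendor directly, the same provider change would require edits, and retesting, everywhere the vendor is referenced; that repeated cost is the "maintenance tax" described above.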

Myth 3: We'll save time and money by cutting the QA budget

The Question You're Really Asking

"In a high-pressure environment, how can we balance the need for speed with the imperative for quality without introducing unacceptable business risk?"

When project timelines are compressed and budgets are tight, the Quality Assurance (QA) phase is often seen as a final, expensive bottleneck—a prime candidate for cuts. This perspective treats quality as a feature that can be traded for speed, rather than as an integral part of risk management. This is a classic false economy, and the data proves it is one of the most expensive mistakes a business can make.

The Data-Backed Reality of Escaping Defects

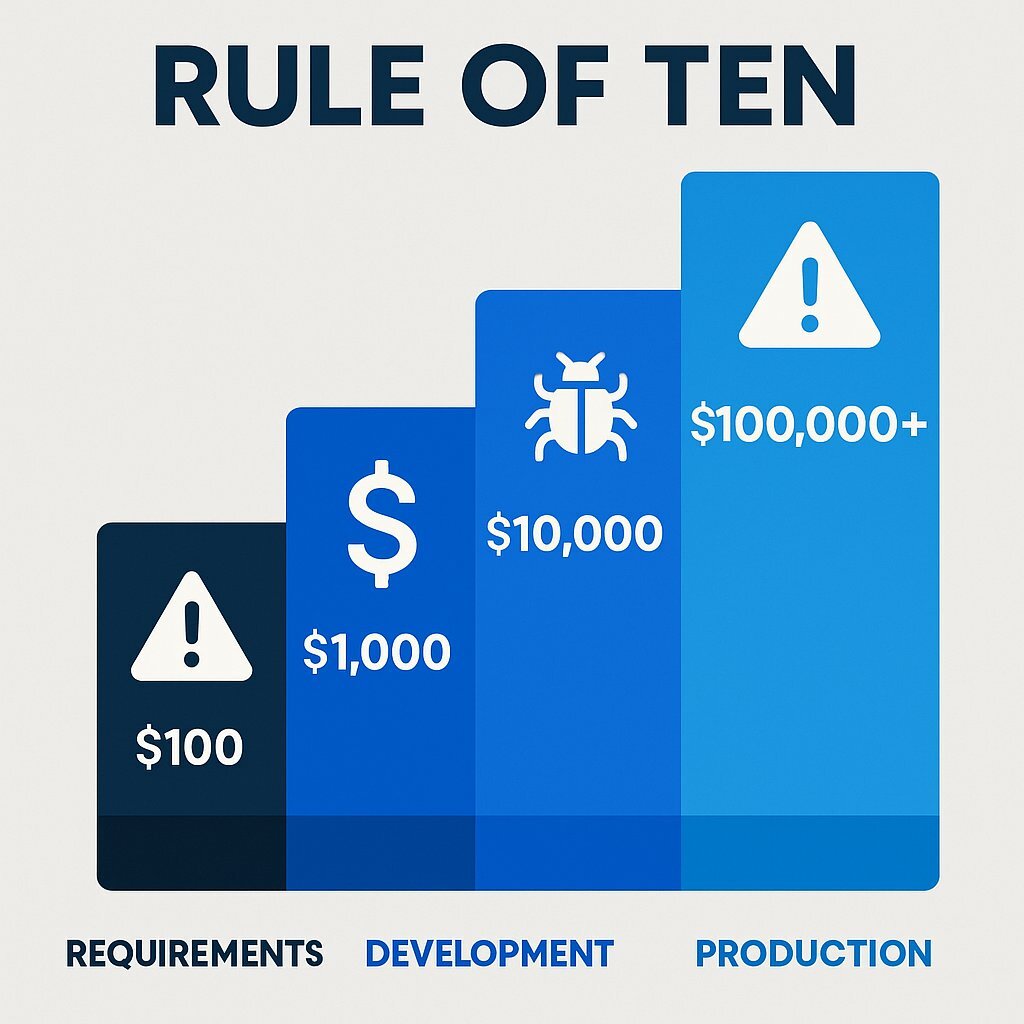

The economics of software quality are often summarized by a heuristic known as the "Rule of Ten." This widely cited rule holds that the cost to fix a bug increases by roughly a factor of 10 for each stage of the software development lifecycle (SDLC) it passes through undetected.

- A bug found during the requirements gathering phase might cost $100 to fix.

- If that same bug is found by a developer during unit testing, the cost rises to $1,000.

- If it escapes development and is found during the QA phase, the cost escalates to $10,000.

- And if the bug makes it into production and affects customers, the remediation cost explodes to $100,000 or more, not including the secondary business costs.

| Development Stage | Cost to Remediate |
|---|---|
| Requirements/Design | $100 |
| Development (Unit Test) | $1,000 |
| System/QA Testing | $10,000 |
| Post-Release (Production) | $100,000+ |
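The Rule of Ten is a geometric progression: cost = base × 10^k, where k is the number of stages a defect slips through undetected. A minimal Python sketch using the $100 starting figure from the table (the 10x factor is a rule of thumb, not a measured constant, so both values are parameters):

```python
STAGES = [
    "Requirements/Design",
    "Development (Unit Test)",
    "System/QA Testing",
    "Post-Release (Production)",
]


def remediation_cost(stages_escaped: int, base_cost: int = 100, factor: int = 10) -> int:
    """Rule-of-Ten heuristic: cost grows by `factor` for each stage a defect escapes."""
    if stages_escaped < 0:
        raise ValueError("stages_escaped must be non-negative")
    return base_cost * factor ** stages_escaped


for k, stage in enumerate(STAGES):
    print(f"{stage:<26} ${remediation_cost(k):>9,}")

# Requirements -> production spans three stages: a 10**3 = 1,000x increase.
print(remediation_cost(3) // remediation_cost(0))
```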

This table provides a powerful financial argument against shortchanging quality. Saving $10,000 by rushing or skipping QA can easily lead to a $100,000 emergency fix down the line. But even that figure dramatically understates the true business impact. A 2022 report from the Consortium for Information & Software Quality (CISQ) estimated the cost of poor software quality in the US alone at a staggering $2.41 trillion. These costs take several forms:

- Direct Financial Losses: A pricing algorithm error cost some small businesses selling on Amazon as much as £100,000 each, while a 2021 Facebook outage resulted in an estimated $100 million in lost revenue.

- Reputational Bankruptcy: Research shows that 81% of consumers lose trust in a brand after a major software failure, and 68% will abandon an application after encountering just two bugs.

- Innovation Stagnation: Development teams often spend 30-50% of their sprint cycles firefighting defects from previous releases, preventing them from building new, value-generating features.

A company's approach to quality is therefore a leading indicator of its overall engineering maturity. Immature organizations treat QA as a separate, final "testing phase." Mature organizations, in contrast, "shift left," integrating quality into every stage of the lifecycle. Practices like automated regression testing, continuous integration, and Test-Driven Development (TDD) are not just about catching bugs; they create tight feedback loops that improve code quality and make the entire development process more predictable and financially stable. For practical recommendations on modernizing your approach, see DevOps Efficiency, which details best practices for risk-managed, quality-driven development.
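Shifting left often starts with small automated regression tests that run in continuous integration on every commit. The sketch below uses Python's standard `unittest` module against a hypothetical `apply_discount` pricing function; the function and its rules are invented for illustration.

```python
import unittest


def apply_discount(price_cents: int, percent: int) -> int:
    """Hypothetical pricing rule: discounted price, rounded down to the cent."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return price_cents * (100 - percent) // 100


class ApplyDiscountTest(unittest.TestCase):
    def test_typical_discount(self):
        self.assertEqual(apply_discount(1000, 15), 850)

    def test_full_discount_is_free_not_negative(self):
        self.assertEqual(apply_discount(1000, 100), 0)

    def test_rejects_out_of_range_percent(self):
        # Guards against the kind of pricing error that reaches customers.
        with self.assertRaises(ValueError):
            apply_discount(1000, 150)


if __name__ == "__main__":
    # exit=False lets the script continue after the test run.
    unittest.main(argv=["ignored"], exit=False)
```

When a pipeline blocks merges on failing tests, a pricing regression like this is caught at the developer stage of the Rule of Ten rather than in production.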

Myth 4: We only hire developers with Computer Science degrees

The Question You're Really Asking

"In a competitive talent market, how do I find and retain the most effective engineers to build a high-performing team?"

For decades, the Computer Science degree has been the gold standard for developer hiring, often serving as a non-negotiable filter for recruiters. While a formal CS education provides a valuable theoretical foundation, clinging to it as the sole indicator of competence is a costly mistake that ignores the modern reality of how top engineering talent is forged.

The Data-Backed Reality of the Modern Developer

The latest industry data paints a far more diverse and nuanced picture of the developer landscape:

- The 2024 Stack Overflow Developer Survey found that while 66% of professional developers hold a Bachelor's or Master's degree, only 49% of respondents say they learned to code in school.

- The most common way developers acquire their skills is through online resources (82.1%), followed by books and e-courses. A significant 10.7% report learning through coding bootcamps.

- A 2024 salary survey from Arc.dev directly contradicts the degree-first myth. It found that in their first three years, coding bootcamp graduates earn a median of 37% more than developers with a bachelor's degree, indicating that the market places a high premium on their practical, job-ready skills.

- The same survey revealed that for senior roles (16+ years of experience), self-taught developers out-earn those with bachelor's degrees by 26% and even those with master's degrees by 10%.

A rigid, degree-focused hiring policy creates an artificial talent shortage. It shrinks the available candidate pool, which extends hiring cycles, increases recruitment agency fees, and drives up salary demands for a small, over-fished group of candidates. More importantly, it risks overlooking a vast pool of passionate, highly motivated, and practically skilled developers who may be a better fit for the team's immediate needs.

This restrictive approach creates a form of "human capital debt." A team's greatest strength is its diversity of thought and problem-solving approaches. Engineers from bootcamp, self-taught, or non-CS backgrounds often bring different perspectives that can lead to more creative and pragmatic solutions. By filtering out this diversity, a company incurs a debt in its team's adaptability and resilience. This debt comes due when the team faces a novel problem that their uniform training has not prepared them for, leading to project delays and the need for costly external consultants. A strategic hiring process focuses on assessing tangible skills, problem-solving ability, and cultural fit, thereby building a more innovative and cost-effective team. For a real-world look at custom development’s impact on team value, explore why 75% of IT leaders are choosing custom software for competitive edge.

Myth 5: We must use the newest technology to have a competitive edge

The Question You're Really Asking

"How do I choose a technology stack that empowers my team to deliver value quickly while ensuring long-term stability and a positive ROI?"

In the fast-paced world of software, there is a powerful temptation to adopt the latest, most talked-about technology. "Shiny object syndrome" can be driven by a genuine desire for innovation, a fear of being left behind, or pressure to use trendy technologies to attract talent. However, making technology decisions based on hype rather than a rigorous assessment of business value and TCO is a recipe for budget overruns and project failure.

The Data-Backed Reality of Mature Technologies

A look at what the world's professional developers are actually using to ship production software reveals a clear preference for mature, stable, and well-supported technologies. The 2024 Stack Overflow survey shows that the enterprise world runs on a foundation of proven tools:

- Languages: JavaScript (used by 64.6% of professional developers), SQL (54.1%), Python (46.9%), and Java (30%) remain dominant forces in enterprise development.

- Databases: Time-tested, open-source relational databases are the clear leaders, with PostgreSQL used by 51.9% of professionals and MySQL by 39.4%.

- Cloud Platforms: The market is controlled by a stable oligopoly of mature providers: Amazon Web Services (52.2% of professionals), Microsoft Azure (27.8%), and Google Cloud (25.1%).

Choosing to build a core business system on a bleeding-edge technology introduces significant and often unquantified financial risks. These include a smaller talent pool (making hiring slow and expensive), an immature ecosystem lacking critical libraries and documentation, a higher risk of undiscovered security vulnerabilities, and the existential threat of the technology being abandoned by its creators, leaving the project stranded.

A CTO's role in technology selection is analogous to that of a financial portfolio manager. A sound portfolio balances stable, lower-yield assets with a smaller allocation to high-risk, high-reward investments. In a technology stack, the "stable assets" are the mature, dominant technologies that form the core of mission-critical systems. They guarantee stability, talent availability, and long-term support. The "high-risk assets" are the emerging technologies, which can be used for smaller, non-critical projects or R&D initiatives to explore future advantages without betting the company's future on them. Chasing every new trend without this disciplined, portfolio-based approach is not innovative; it is financially reckless. For a blueprint on modern, business-aligned architecture, see Cloud-Native Architecture: The Executive Blueprint for Business Agility.

Myth 6: An MVP is just a cheap, stripped-down version of our product

The Question You're Really Asking

"We have a great product idea, but limited capital. How can we test the market and validate our vision without sinking millions into a product nobody wants?"

The Minimum Viable Product (MVP) is one of the most powerful concepts in modern product development, but it is also one of the most misunderstood. The myth is that the "M" in MVP stands for "minimal cost," leading teams to believe its purpose is simply to build a cheap, feature-light version of their final product. While cost-effectiveness is a benefit, it is a secondary outcome. The primary goal is far more strategic.

The Data-Backed Reality of MVP as a Strategic Tool

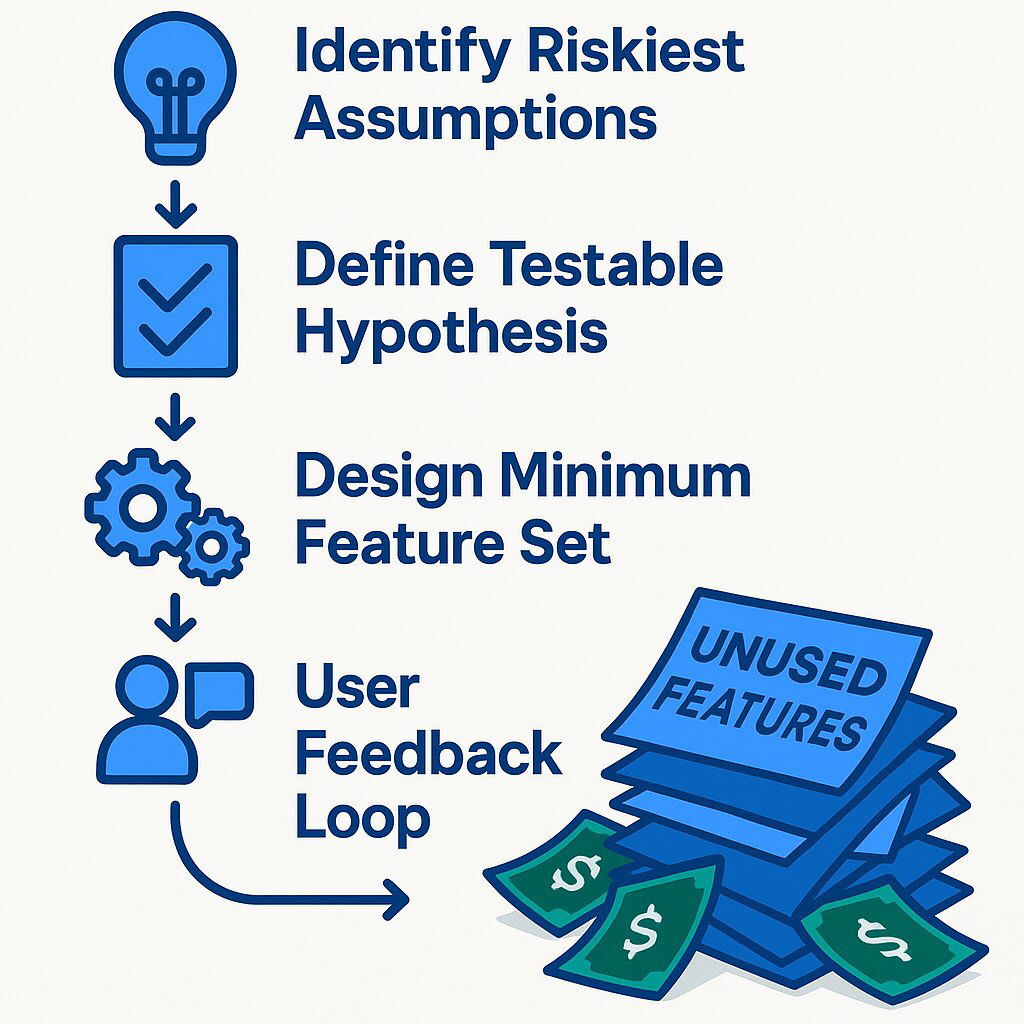

The true purpose of an MVP, as defined by the lean startup methodology, is to achieve the maximum amount of validated learning about customers with the minimum amount of effort. It is a scientific instrument for de-risking a business idea. To see how discovery up front saves cost and prevents misalignment, check out why the Discovery Phase saves your software project.

This strategic purpose means that MVPs, while leaner than full-scale products, are not necessarily cheap. Industry data shows that an average MVP can cost anywhere from $15,000 to $150,000 or more, depending on its complexity. This is a significant strategic investment, not a trivial expense. The return on this investment is not measured in lines of code, but in the quality of the data and user feedback it generates. The legendary success of companies like Dropbox, Airbnb, and Uber was built on simple MVPs that validated their core business hypotheses, allowing them to attract massive investment and build with confidence.

A poorly executed MVP is a 100% loss, no matter how "cheap" it was. The most common failures stem from misunderstanding its purpose:

- Feature Bloat: Adding "just one more feature" dilutes the core hypothesis being tested and needlessly inflates the cost and timeline.

- Ignoring Feedback: Building an MVP without a rigorous process for gathering and acting on user feedback defeats its entire purpose.

- Solving a Problem Nobody Has: The single biggest cause of startup failure is building a perfect solution to a problem that does not exist. The MVP is specifically designed to prevent this catastrophic and expensive mistake.

An MVP is fundamentally a business experiment, not a software project. The "product" is the data that the experiment yields. Therefore, the most critical work happens long before development begins. It involves rigorously defining the business's riskiest assumptions, formulating a clear, testable hypothesis, and then identifying the absolute minimum feature set required to get a definitive answer from the market. A team that jumps straight into coding without this strategic groundwork is not building an MVP; they are building a "Minimum Viable Cost" project that is likely destined to become expensive shelfware. To see frameworks for balancing configuration flexibility and cost, explore the Configuration Complexity Clock for software.
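The hypothesis-first discipline described above can be captured as a simple data structure: the riskiest assumption, the metric that will test it, and a success threshold agreed before any code is written. This is a hypothetical Python sketch; the example assumption, metric, and 5% threshold are made up, and a real experiment would also need an adequate sample size before trusting the result.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MvpHypothesis:
    """A falsifiable statement about the riskiest assumption (hypothetical structure)."""

    assumption: str
    metric: str
    success_threshold: float  # minimum conversion rate that counts as validation

    def evaluate(self, successes: int, trials: int) -> str:
        # Compare the observed rate against the threshold fixed in advance.
        if trials <= 0:
            raise ValueError("need at least one trial")
        rate = successes / trials
        verdict = "validated" if rate >= self.success_threshold else "invalidated"
        return f"{self.metric}: {rate:.1%} vs {self.success_threshold:.1%} target -> {verdict}"


hypothesis = MvpHypothesis(
    assumption="Small clinics will pay for automated appointment reminders",
    metric="landing-page visitors who join the paid waitlist",
    success_threshold=0.05,
)
print(hypothesis.evaluate(successes=38, trials=600))
```

Fixing the threshold before launch is the point of the design: it keeps the team from reinterpreting weak results as success after the fact.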

Conclusion: From Costly Myths to Profitable Realities

The six myths debunked in this report share a common thread: they encourage reactive, short-sighted decisions that prioritize perceived short-term gains over long-term financial health and strategic stability. The data tells a different story. It reveals the direct, quantifiable costs of these misconceptions:

- Adding People: Leads to quadratic growth in communication overhead and to project delays, increasing costs.

- Ignoring TCO: Can multiply the initial development budget several times over the software's lifetime (roughly 4-6x in the $500,000 example), creating massive, un-budgeted liabilities.

- Cutting QA: Increases bug-fixing costs by roughly 10x for each stage a defect escapes (up to 1,000x from requirements to production), erodes brand trust, and stifles innovation.

- Narrow Hiring: Artificially inflates recruitment costs and starves teams of the diverse perspectives needed to innovate.

- Chasing Trends: Introduces unnecessary technical risk, maintenance burdens, and higher talent acquisition costs.

- Misunderstanding MVPs: Leads to wasted investment on products that fail to answer critical business questions, increasing the risk of total project failure.

The unifying principle that emerges from the data is clear: proactive, strategic, and data-driven management is the key to transforming software development from an unpredictable cost center into a reliable, profitable engine for business growth. Navigating the complex trade-offs between speed, cost, and quality requires more than just technical skill; it requires business acumen and strategic foresight. Baytech Consulting partners with technology leaders to replace costly myths with proven, data-backed methodologies, helping you build engineering organizations that are not just efficient, but are a durable competitive advantage. For guidance on taking a strategic approach from day one, see our Agile methodology services, designed to help you move from myth to measurable results.

About Baytech

At Baytech Consulting, we specialize in guiding businesses through this process, helping you build scalable, efficient, and high-performing software that evolves with your needs. Our MVP-first approach helps our clients minimize upfront costs and maximize ROI. Ready to take the next step in your software development journey? Contact us today to learn how we can help you achieve your goals with a phased development approach.

About the Author

Bryan Reynolds is an accomplished technology executive with more than 25 years of experience leading innovation in the software industry. As the CEO and founder of Baytech Consulting, he has built a reputation for delivering custom software solutions that help businesses streamline operations, enhance customer experiences, and drive growth.

Bryan’s expertise spans custom software development, cloud infrastructure, artificial intelligence, and strategic business consulting, making him a trusted advisor and thought leader across a wide range of industries.