Revolutionize AI Integration with MCP: The Future of Open Standard Protocols 2025

October 31, 2025 / Bryan Reynolds

Architecting Intelligence: A Comprehensive Guide to the Model Context Protocol for .NET Core Developers

Section 1: Demystifying the Model Context Protocol (MCP)

The landscape of Artificial Intelligence is evolving at an unprecedented pace, shifting from monolithic models to sophisticated, agentic systems that interact with the world. For these AI agents to be truly useful, they must connect to a vast and heterogeneous array of external tools, data sources, and APIs. This presents a significant integration challenge. The Model Context Protocol (MCP) emerges as a strategic, open-source standard designed to solve this problem, providing a universal communication layer between AI applications and the external context they require. This guide provides a comprehensive exploration of MCP, its architecture, and its practical implementation within the .NET ecosystem, and draws upon broader hybrid microservices and SaaS architecture wisdom for context.

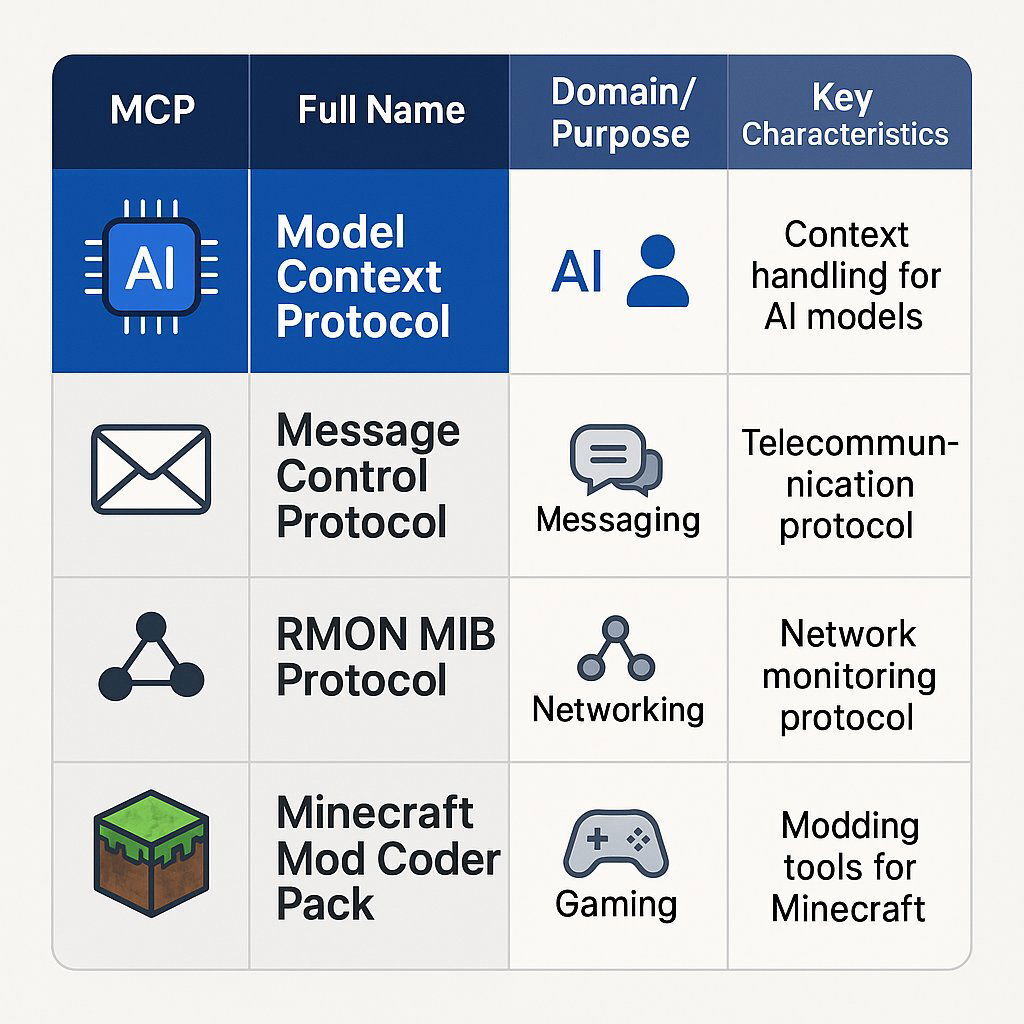

1.1. Acronym Disambiguation: A Crowded Field

A primary hurdle when approaching this topic is the ambiguity of the acronym "MCP." A search for the term yields results from disparate technological domains, creating significant confusion. Before proceeding, it is essential to disambiguate the Model Context Protocol from other technologies that share its initials. The focus of this report is exclusively on the protocol for AI integration.

Model Context Protocol: The subject of this report, introduced by Anthropic in 2024, is an open standard for connecting AI applications (like Large Language Models) to external systems such as databases, APIs, and local filesystems. It aims to solve the "M x N" integration problem by creating a standardized "USB-C port for AI," fostering a reusable and interoperable ecosystem.

Message Control Protocol: This term refers to a different class of protocols focused on standardizing message handling, integrity, and reliable delivery within communication systems. Examples include frameworks designed for platforms like Slack to ensure consistent message formatting and processing, as well as specifications in telecommunications, such as the Short Message Control Protocol (SM-CP). The name is also easily confused with the Internet Control Message Protocol (ICMP), a core internet protocol whose specification has been found to contain ambiguities.

Remote Network Monitoring (RMON) MIB Protocol: Defined in IETF documents like RFC 2896, this protocol is used for network management within the Simple Network Management Protocol (SNMP) framework. It is entirely unrelated to AI or application-level integration.

Minecraft Mod Coder Pack (MCP): A well-known but completely unrelated toolset in the video game modding community, used for decompiling and modifying the game Minecraft. Its prevalence often pollutes search results for the Model Context Protocol.

To provide immediate clarity, the following table summarizes these distinctions.

| Acronym | Full Name | Domain/Purpose | Key Characteristics |
|---|---|---|---|
| MCP | Model Context Protocol | AI Integration | An open standard using JSON-RPC 2.0 to connect AI agents to external tools and data sources. Defines Host, Client, and Server roles. |
| MCAP/MCP | Message Control Protocol | Messaging & Communications | Focuses on message formatting, integrity (checksums, signatures), and reliable delivery in systems like Slack or mobile networks (SM-CP). |
| MCP | RMON MIB Protocol Identifiers | Network Management | Used within the SNMP framework for identifying protocol encapsulations for remote network monitoring. Defined by IETF RFCs. |
| MCP | Mod Coder Pack | Gaming (Minecraft) | A toolset for decompiling and creating modifications ("mods") for the game Minecraft. Unrelated to network protocols or AI. |

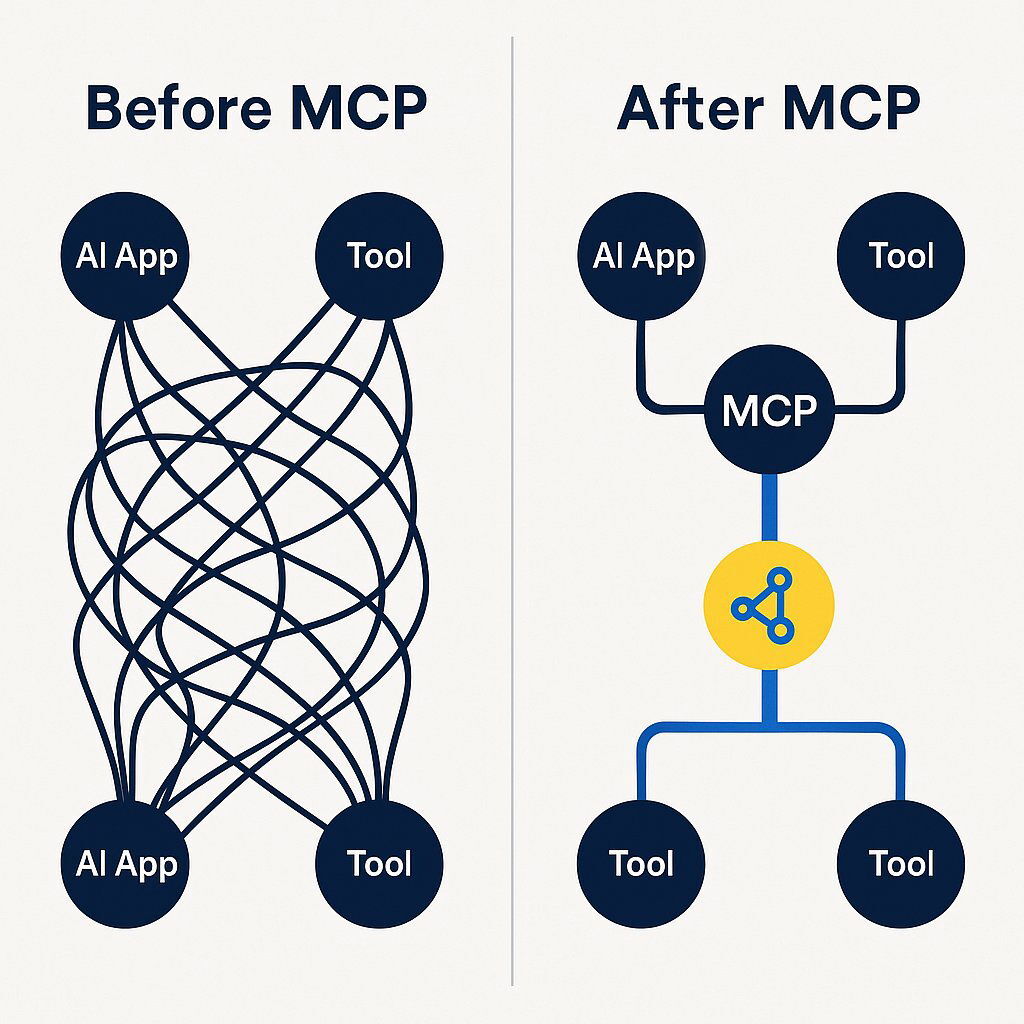

1.2. Foundational Concepts: The "Why" Behind MCP

The core mission of MCP is to standardize the chaotic landscape of custom AI integrations. Historically, connecting M different AI applications to N different tools required building M x N unique connectors. This approach is brittle, expensive to maintain, and scales poorly. MCP transforms this into an "M + N" problem by defining a common protocol: an application needs only one MCP client implementation to connect to any MCP-compliant server, and a tool needs only one MCP server to be accessible by any MCP-compliant client.

For developers, this paradigm shift offers significant benefits:

- Reduced Custom Work: A single server implementation can serve numerous AI clients, eliminating the need for bespoke connectors.

- Reusability: The standardized server design can be applied across different environments and applications.

- Lower Maintenance: Updates to a single server automatically benefit every connected client.

For end-users, this translates to more capable, secure, and cohesive AI assistants that can seamlessly access a growing ecosystem of tools and data sources.

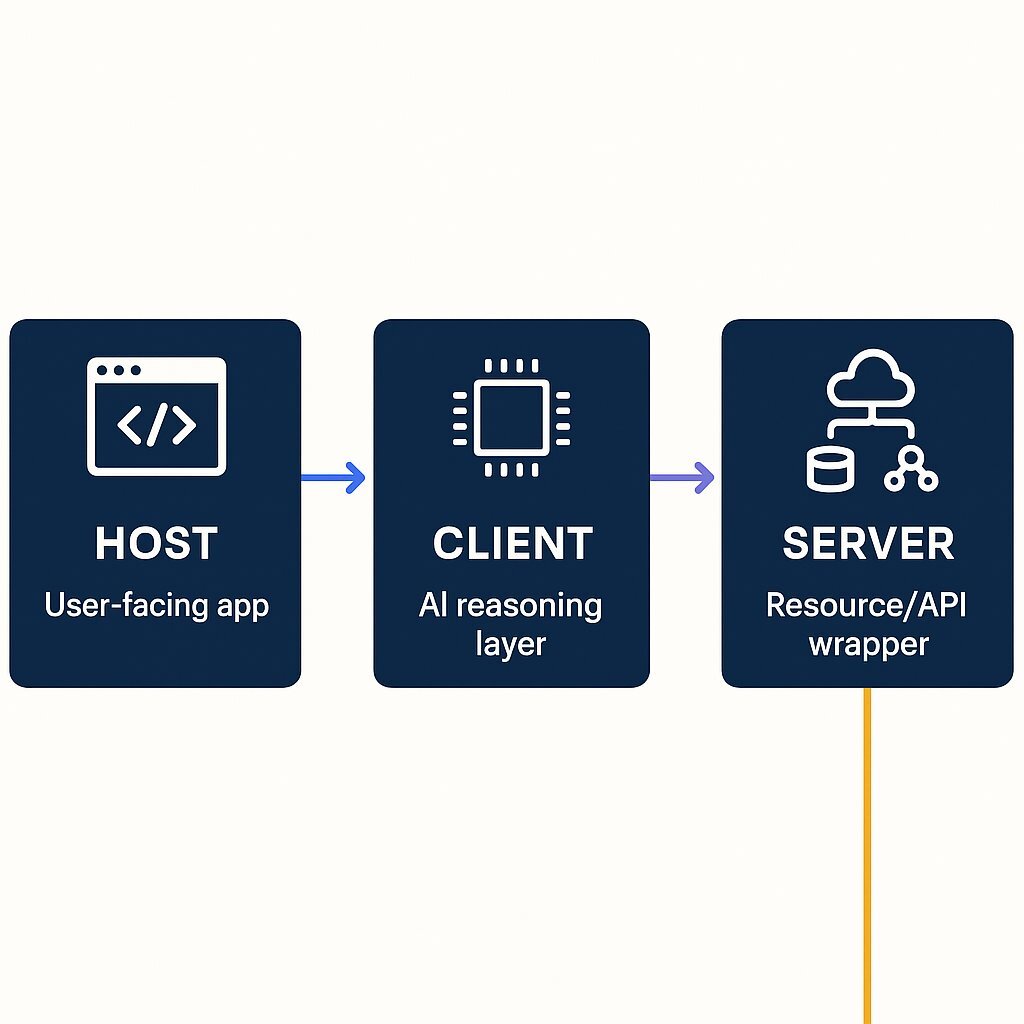

1.3. The Three-Part Architecture: Host, Client, and Server

The MCP specification defines a clear, three-part architecture with distinct roles and responsibilities. This separation of concerns is fundamental to its design and enables the protocol's flexibility and power.

MCP Host: The user-facing application where the AI interaction occurs. This could be an Integrated Development Environment (IDE) like Visual Studio Code, a chat application like Claude Desktop, or any other software that manages the user experience. The host is responsible for collecting user input, displaying results, and, crucially, instantiating and managing one or more MCP clients.

MCP Client: The connector component that resides within the host and handles protocol-level communication with a single MCP server. It is distinct from the model itself: the host's LLM decides which tools to call based on the user's request, and the client discovers the server's capabilities, relays those calls, and returns the responses. Each client maintains a strict one-to-one connection with a corresponding server.

MCP Server: A program that acts as a wrapper or adapter for an external resource, such as a web API, a database, or a local filesystem. The server's role is to expose the capabilities of the underlying resource in a standardized MCP format, enforce security and access control policies, and translate incoming MCP requests into concrete actions. Servers can be deployed locally on the same machine as the host or remotely in the cloud.

1.4. Protocol Mechanics and Primitives

MCP's communication layer is built on well-established standards, providing a robust foundation for interaction between clients and servers.

Underlying Protocol and Lifecycle

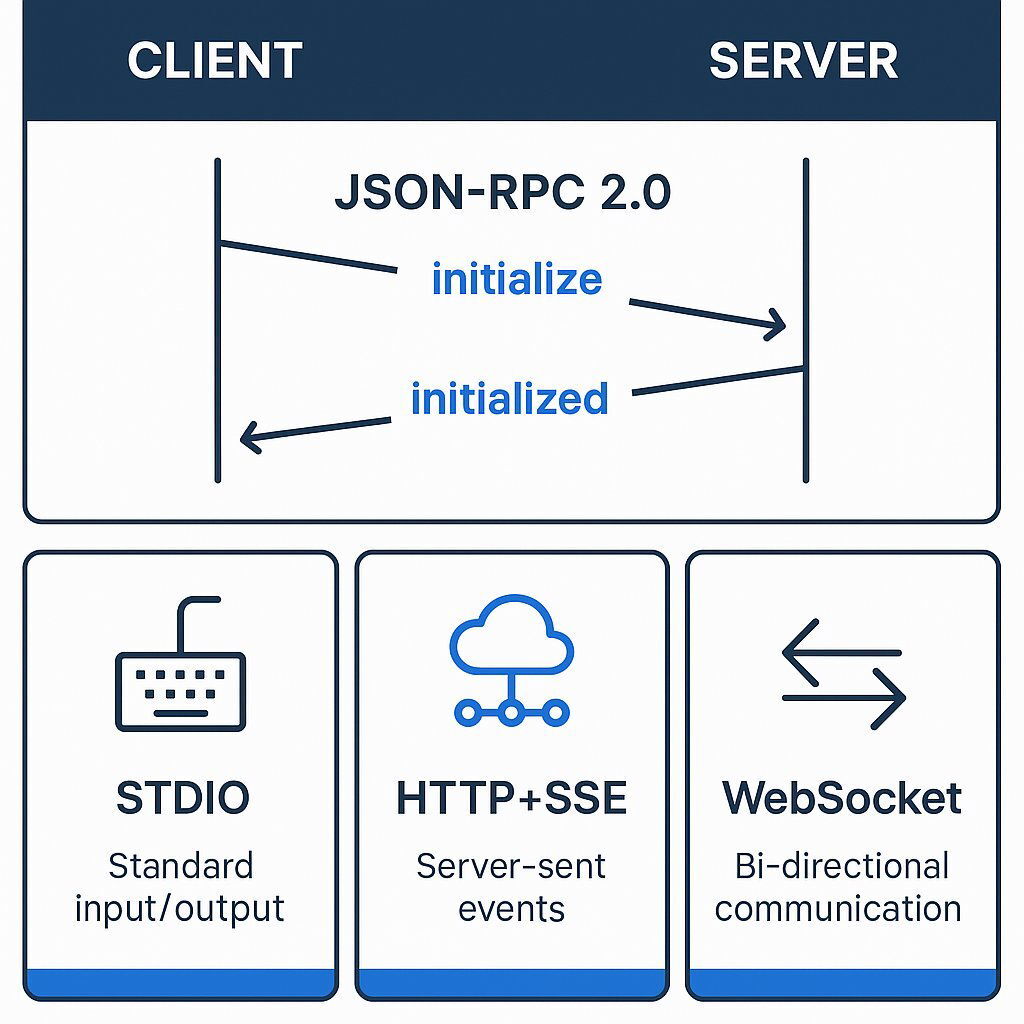

At its core, MCP uses JSON-RPC 2.0 as its underlying remote procedure call protocol. This defines a simple, text-based message structure for requests, responses, and notifications.

Communication begins with a lifecycle management handshake to establish a connection and negotiate capabilities:

- The client sends an `initialize` request, declaring its supported features.

- The server replies with an `initialize` response, detailing its own information and the capabilities it offers (e.g., support for "tools" and "resources").

- The client sends an `initialized` notification to confirm the handshake is complete, after which the connection is ready for use.
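The handshake can be sketched as the JSON-RPC messages themselves. The snippet below is a minimal illustration that uses only `System.Text.Json`; the protocol version string, the client name, and the declared capability are assumptions chosen for the example rather than values taken from this guide.

```csharp
using System;
using System.Text.Json;

var pretty = new JsonSerializerOptions { WriteIndented = true };

// Step 1: the client opens with an "initialize" request.
// protocolVersion and clientInfo values are illustrative assumptions.
var initializeRequest = new
{
    jsonrpc = "2.0",
    id = 1,
    method = "initialize",
    @params = new
    {
        protocolVersion = "2025-06-18",
        capabilities = new { sampling = new { } },
        clientInfo = new { name = "ExampleClient", version = "1.0.0" }
    }
};

// Step 3: after the server's response, the client confirms with a
// notification. Notifications carry no "id" because no reply is expected.
var initializedNotification = new
{
    jsonrpc = "2.0",
    method = "notifications/initialized"
};

string requestJson = JsonSerializer.Serialize(initializeRequest, pretty);
string notificationJson = JsonSerializer.Serialize(initializedNotification, pretty);

Console.WriteLine(requestJson);
Console.WriteLine(notificationJson);
```

In practice an SDK builds and sends these messages for you; seeing them written out mainly helps when reading protocol traces.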

Transport Layers

MCP is designed to be transport-agnostic. The specification itself standardizes two transports, STDIO and an HTTP-based transport; custom transports such as WebSockets are permitted but are not part of the standard. Each is suited to different use cases:

Standard Input/Output (STDIO): This transport uses a process's `stdin` and `stdout` streams for communication. It is ideal for local MCP servers running as a subprocess of the host application (e.g., an IDE plugin). It offers very low latency and requires no network stack, so access is governed by operating system process boundaries.

HTTP + Server-Sent Events (SSE): This model is designed for remote servers. It uses standard HTTP POST requests for client-to-server communication and a long-lived SSE connection for server-to-client streaming. This makes it compatible with existing web infrastructure like proxies and load balancers but introduces the complexity of managing two separate communication channels. Specification revisions from 2025-03-26 onward replace this two-endpoint design with Streamable HTTP, which serves both directions from a single endpoint and can still use SSE for streaming.

WebSockets: Not defined by the specification, but usable as a custom transport. WebSockets provide a persistent, full-duplex (bidirectional) connection, offering low-latency communication suitable for highly interactive or high-frequency message exchanges.
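For the STDIO transport, each JSON-RPC message travels as a single line of JSON terminated by a newline. The sketch below shows that framing, with `StringWriter` and `StringReader` standing in for the real process streams so the example is self-contained; the `ping` payload is only an illustration.

```csharp
using System;
using System.IO;
using System.Text.Json;

// Stand-in for the server's stdin; a real host would write to the
// child process's standard input stream instead.
var pipe = new StringWriter();

// Sender side: one message per line, so the JSON must not be indented
// (the default serializer output contains no embedded newlines).
string outgoing = JsonSerializer.Serialize(new { jsonrpc = "2.0", id = 1, method = "ping" });
pipe.Write(outgoing);
pipe.Write('\n');

// Receiver side: read one line, parse one message.
var reader = new StringReader(pipe.ToString());
string? line = reader.ReadLine();
using JsonDocument message = JsonDocument.Parse(line!);
string? method = message.RootElement.GetProperty("method").GetString();

Console.WriteLine($"Received method: {method}");
```

Because stdout carries protocol traffic in this transport, anything else a server prints there (such as log output) would corrupt the stream.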

Core and Advanced Primitives

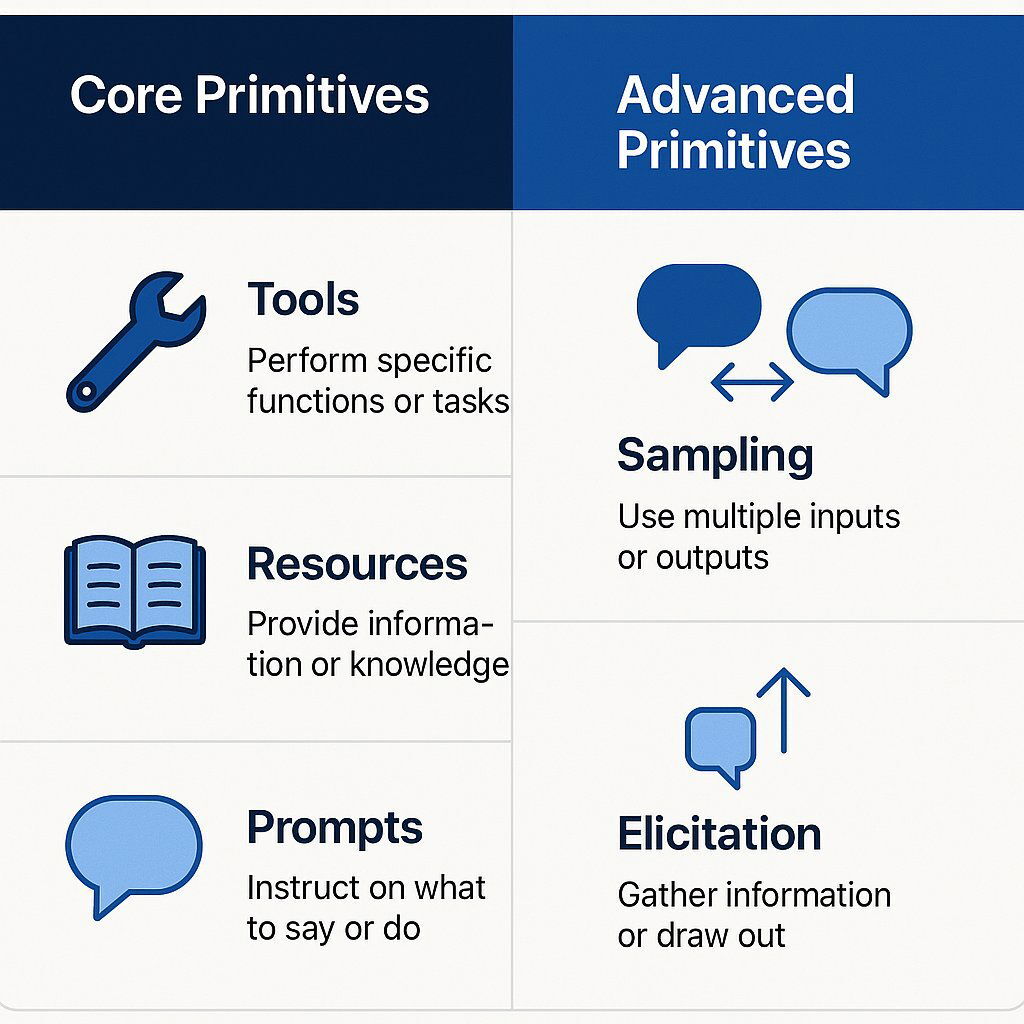

The "context" that a server provides is exposed through a set of standardized primitives. These are the fundamental capabilities that a client can discover and utilize.

Core Primitives:

- Tools: These are executable functions or actions that the server can perform. A client can discover them via a `tools/list` request and invoke them with a `tools/call` request, passing the required parameters.

- Resources: These represent read-only, file-like data that a server can expose. A client can discover available resources with `resources/list` and retrieve their content with `resources/read`.

- Prompts: These are pre-configured prompt templates that a server can offer to guide users or the LLM in accomplishing specific tasks. They are discoverable via `prompts/list` and retrievable via `prompts/get`.
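As a concrete illustration of the tool primitive, the sketch below builds a `tools/call` request and reads a response of the shape a server might return. The tool name `echo`, its `input` argument, and the response text are hypothetical; only `System.Text.Json` is used.

```csharp
using System;
using System.Text.Json;

// Request: invoke a tool by name with JSON arguments.
// The tool name "echo" and its "input" argument are hypothetical.
string callRequest = JsonSerializer.Serialize(new
{
    jsonrpc = "2.0",
    id = 2,
    method = "tools/call",
    @params = new { name = "echo", arguments = new { input = "hello" } }
});

// A response of the shape a server might return for that call:
// a list of content items plus an error flag.
string sampleResponse = """
{
  "jsonrpc": "2.0",
  "id": 2,
  "result": {
    "content": [ { "type": "text", "text": "Server received: hello" } ],
    "isError": false
  }
}
""";

using JsonDocument doc = JsonDocument.Parse(sampleResponse);
JsonElement result = doc.RootElement.GetProperty("result");
bool isError = result.GetProperty("isError").GetBoolean();
string? text = result.GetProperty("content")[0].GetProperty("text").GetString();

Console.WriteLine(callRequest);
Console.WriteLine(isError ? "Tool reported an error" : $"Tool output: {text}");
```

The `id` in the response matches the `id` of the request, which is how JSON-RPC pairs replies with calls on a shared connection.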

Advanced Primitives: Beyond simple request-response, MCP defines advanced primitives that enable more sophisticated, agentic workflows. These features elevate MCP from a simple RPC protocol to a comprehensive architectural pattern for building interactive AI systems.

- Sampling: This powerful feature allows a server to request an LLM completion from the client. A server can formulate a prompt and ask the client's LLM to process it, effectively outsourcing AI-dependent tasks without needing its own model access or credentials. This maintains a clean separation of concerns, keeping the AI model logic on the client side.

- Elicitation: This primitive enables a server to request additional information or confirmation directly from the end-user. The server sends an elicitation request, and the host application is responsible for presenting a UI to the user to gather the required input. This is essential for interactive tools that may need clarification or user approval before performing an action.
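Both advanced primitives reverse the usual direction: the server sends the request and the client answers. A minimal sketch of the two request envelopes follows, assuming the method names `sampling/createMessage` and `elicitation/create` from the MCP specification; the prompt text, token limit, and requested schema are invented for illustration.

```csharp
using System;
using System.Text.Json;

var pretty = new JsonSerializerOptions { WriteIndented = true };

// Sampling: the server asks the client's model for a completion.
// The prompt text and maxTokens value are illustrative assumptions.
var samplingRequest = new
{
    jsonrpc = "2.0",
    id = 7,
    method = "sampling/createMessage",
    @params = new
    {
        messages = new[]
        {
            new
            {
                role = "user",
                content = new { type = "text", text = "Summarize the attached log in one sentence." }
            }
        },
        maxTokens = 200
    }
};

// Elicitation: the server asks the end user (via the host UI) for input.
// The message and schema below are hypothetical.
var elicitationRequest = new
{
    jsonrpc = "2.0",
    id = 8,
    method = "elicitation/create",
    @params = new
    {
        message = "Delete 3 files from the workspace?",
        requestedSchema = new
        {
            type = "object",
            properties = new { confirm = new { type = "boolean" } },
            required = new[] { "confirm" }
        }
    }
};

string samplingJson = JsonSerializer.Serialize(samplingRequest, pretty);
string elicitationJson = JsonSerializer.Serialize(elicitationRequest, pretty);

Console.WriteLine(samplingJson);
Console.WriteLine(elicitationJson);
```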

The definition of these distinct architectural roles (Host, Client, Server) and the inclusion of advanced, bidirectional interaction patterns like Sampling and Elicitation demonstrate that MCP is more than a data exchange format. It is a complete architectural blueprint for designing and building complex, interactive AI agents. Developers adopting MCP should think not just in terms of API endpoints, but in terms of these roles and the rich interaction flows they enable.

Section 2: The MCP Ecosystem for .NET

For .NET developers, the MCP landscape is rapidly maturing, anchored by official support from Microsoft and a growing community of contributors. This section provides a detailed survey of the libraries, tools, and pre-built servers available, offering a clear view of the options for building MCP-enabled applications on the .NET platform.

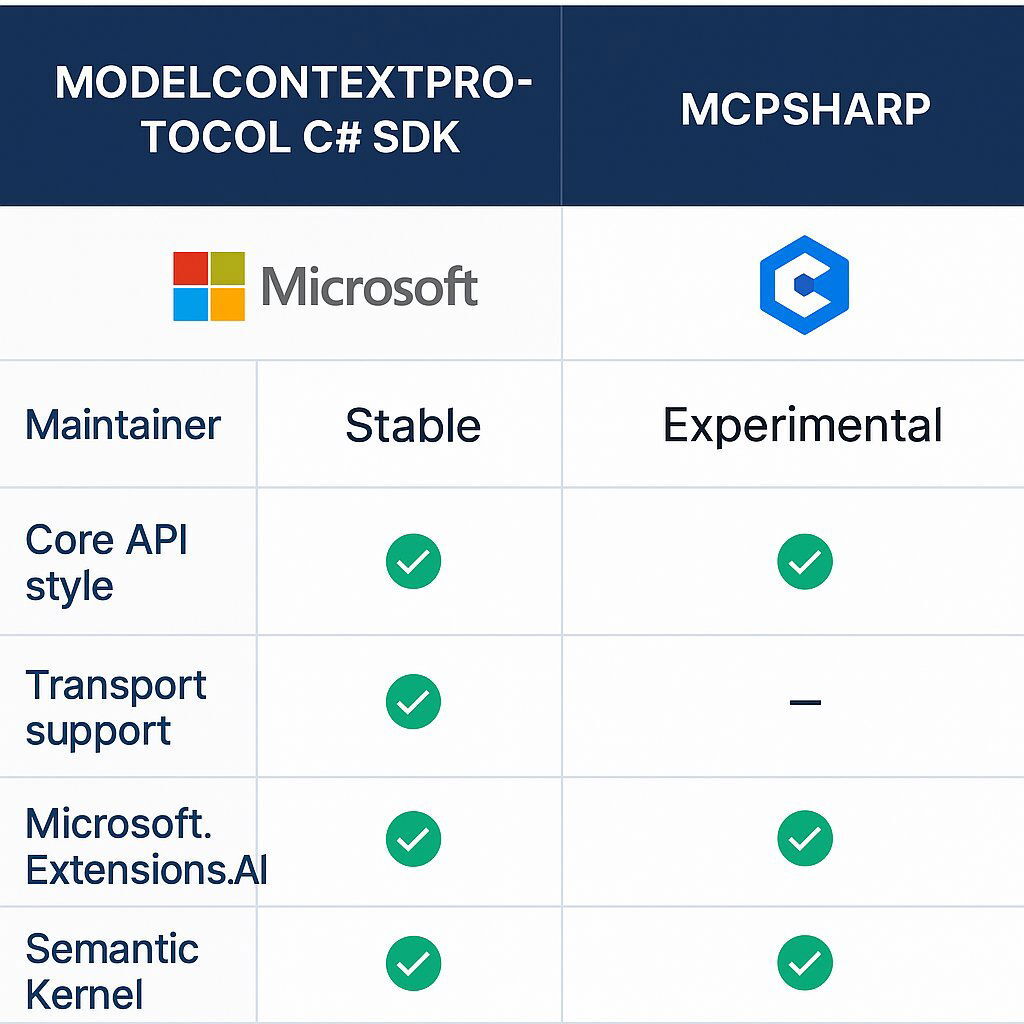

2.1. Microsoft's Official C# SDK: The Cornerstone

The central and most important component for .NET developers is the official MCP C# SDK, co-maintained by Microsoft and Anthropic. This library provides the foundational APIs for building both MCP clients and servers in C#.

- Maturity and Status: It is critical to note that the SDK is currently in a preview state. This implies that its APIs are subject to change, and developers should be prepared for potential breaking changes in future releases. This consideration is paramount when planning for production deployments.

- Package Structure: The SDK is distributed via NuGet as a set of modular packages, allowing developers to include only the dependencies they need:

  - `ModelContextProtocol`: This is the main package, suitable for most projects. It includes hosting extensions and integration with .NET's dependency injection system, making it ideal for console applications or services that do not require an HTTP server.

  - `ModelContextProtocol.AspNetCore`: This package is specifically for building HTTP-based MCP servers (using the SSE transport) within an ASP.NET Core application. It provides the necessary middleware and endpoint mapping capabilities.

  - `ModelContextProtocol.Core`: A lightweight package containing only the low-level client and server APIs. It is intended for developers who need minimal dependencies or want to build their own hosting and transport layers.

- Key Dependency and Strategic Alignment: The official SDK has a foundational dependency on the `Microsoft.Extensions.AI` libraries. This is not merely a technical detail but a significant strategic indicator. It signals that Microsoft's vision for MCP in .NET is one of tight integration with its broader, standardized abstractions for AI development. This dependency provides core types for interacting with AI models and allows MCP tools to be exposed as `AIFunction` objects, which can be consumed directly by any `IChatClient` implementation (such as those for OpenAI or Azure OpenAI).

2.2. Community-Driven Libraries: MCPSharp and Beyond

Alongside the official SDK, the .NET community has produced alternative libraries, with MCPSharp being the most prominent.

- Features of MCPSharp: This library offers a streamlined, attribute-based API for creating MCP servers. Developers can expose methods as tools simply by decorating them with a designated attribute. It also provides a client implementation capable of importing a server's tools directly into C# code or, importantly, converting them into the same `AIFunction` objects used by `Microsoft.Extensions.AI`, ensuring compatibility with the broader ecosystem. There is also evidence of integration with Semantic Kernel, another key Microsoft AI framework.

- Comparison of Approaches: The existence of both an official, DI-centric SDK and a community-driven, attribute-centric library provides developers with a choice of API style and philosophy.

This comparison reveals a significant trend: the .NET MCP ecosystem is not developing in a vacuum but is actively converging around Microsoft's strategic AI abstractions. The official SDK is built on Microsoft.Extensions.AI, and leading community libraries like MCPSharp are ensuring compatibility with it. Furthermore, Microsoft is integrating MCP support directly into its flagship AI orchestration framework, Semantic Kernel. For a .NET developer, this convergence implies that the most effective and future-proof path for MCP development involves embracing these foundational Microsoft AI libraries, as they provide the common language for interoperability across the ecosystem.

2.3. Survey of Open-Source .NET MCP Servers and Tools

The .NET community has been active in creating a diverse collection of pre-built MCP servers, templates, and tools, many of which are cataloged in resources like the Awesome-DotNET-MCP repository. These open-source projects provide both ready-to-use functionality and valuable examples for developers.

Servers can be broadly categorized by their function:

- Database Integration: Projects like `MCP_PostgreSQL` and `KnowledgeBaseServer` (for SQLite) provide direct MCP interfaces for querying and interacting with databases.

- Cloud Services: The `azure-mcp` server offers tools for interacting with key Azure services, while `acs-email-mcp-server` provides email automation via Azure Communication Services.

- Development and Tooling: `DotNetMetadataMcpServer` exposes .NET type information for AI coding agents, and `AvaloniaUI.MCP` provides tools for Avalonia UI development.

- Workflow and Business Logic: Libraries such as `hangfire-mcp` allow AI agents to enqueue background jobs using Hangfire, and `dotnet-mcp-ortools` exposes Google's OR-Tools for constraint solving.

- API Wrapping: `MCPP.NET` is a notable project that can dynamically convert Swagger/OpenAPI specifications into MCP tools, providing a rapid way to expose existing APIs.

Additionally, several templates and samples are available to accelerate development, including mcp-template-dotnet for bootstrapping a new project and remote-mcp-functions-dotnet for building and deploying remote MCP servers using Azure Functions.

Section 3: Implementation Blueprint: Exposing Your .NET Core API via MCP

This section provides a practical, step-by-step guide for wrapping an existing .NET Core API with an MCP server. The blueprint progresses from a simple local server for foundational understanding to a fully integrated remote server, demonstrating the architectural patterns recommended by the official C# SDK.

3.1. Part I: Building a Local (STDIO) MCP Server

Creating a local server that communicates via Standard I/O is the ideal starting point for understanding the core mechanics of the SDK in a simple, controlled environment.

Project Setup

First, create a new .NET console application and add the necessary NuGet packages for the MCP SDK and .NET's generic host.

```bash
dotnet new console -n MyLocalMcpServer
cd MyLocalMcpServer
dotnet add package ModelContextProtocol --prerelease
dotnet add package Microsoft.Extensions.Hosting
```

Server Scaffolding

Next, configure the Program.cs file to set up the host, register the MCP server, and specify the STDIO transport. The WithToolsFromAssembly() method instructs the server to automatically discover any classes and methods decorated with the appropriate MCP attributes within the current project.

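```csharp
using Microsoft.Extensions.DependencyInjection;
using Microsoft.Extensions.Hosting;
using Microsoft.Extensions.Logging;
using ModelContextProtocol.Server;
using System.ComponentModel;

var builder = Host.CreateApplicationBuilder(args);

// STDIO servers must keep stdout free for protocol messages, so send logs to stderr.
builder.Logging.AddConsole(options => options.LogToStandardErrorThreshold = LogLevel.Trace);

builder.Services
    .AddMcpServer()
    .WithStdioServerTransport() // Configure for local STDIO communication
    .WithToolsFromAssembly();   // Discover tools in this project

await builder.Build().RunAsync();

// Tool definition in a separate file or below
[McpServerToolType]
public static class SimpleEchoTool
{
    [McpServerTool, Description("Echoes the input back to the client.")]
    public static string Echo(string input) => $"Server received: {input}";
}
```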

Defining a Tool

In this example, a tool is defined as a static method within a static class.

- The class is marked with the `[McpServerToolType]` attribute.

- The method is marked with the `[McpServerTool]` attribute.

- Crucially, a `[Description]` attribute (from `System.ComponentModel`) is added. This description is not just documentation for humans; it is the primary prompt that the LLM uses to understand what the tool does and when to use it. A clear, descriptive text is essential for reliable tool invocation.

Local Configuration and Testing

To test this local server, it must be registered with an MCP host like Visual Studio Code. This is done by creating or editing the mcp.json file in the project's .vscode folder (or in the user's global settings).

```json
{
  "servers": {
    "MyLocalMcpServer": {
      "type": "stdio",
      "command": "dotnet",
      "args": ["run", "--project", "<path-to>/MyLocalMcpServer.csproj"]
    }
  }
}
```

With this configuration in place, the server can be started and tested from within VS Code's Copilot Chat view by enabling "Agent mode" and invoking the tool through natural language.

3.2. Part II: Building a Remote (HTTP+SSE) MCP Server in ASP.NET Core

While STDIO is excellent for local tools, exposing a web API requires a remote server. The most common pattern for this in .NET is to integrate the MCP server directly into an existing or new ASP.NET Core application.

Project Setup

Create a new ASP.NET Core web application and add the ModelContextProtocol.AspNetCore package, which is specifically designed for this scenario.

```bash
dotnet new web -n MyRemoteMcpApi
cd MyRemoteMcpApi
dotnet add package ModelContextProtocol.AspNetCore --prerelease
```

Server Scaffolding

In Program.cs, the configuration is slightly different. WithHttpTransport() is used instead of WithStdioServerTransport(), and the app.MapMcp() middleware is added to the request pipeline to expose the MCP endpoints.

```csharp
using ModelContextProtocol.Server;
using System.ComponentModel;

var builder = WebApplication.CreateBuilder(args);

// Register MCP server services and specify HTTP transport
builder.Services.AddMcpServer()
    .WithHttpTransport()
    .WithToolsFromAssembly();

var app = builder.Build();

// Map the MCP endpoints under the /mcp route prefix
app.MapMcp("/mcp");

app.Run();

// Tool definitions follow the same pattern as the local server
[McpServerToolType]
public static class RemoteInfoTool
{
    [McpServerTool, Description("Returns the current server time in UTC (ISO 8601).")]
    public static string GetServerTime() => $"Server time is: {DateTime.UtcNow:O}";
}
```

Configuration and Testing

The client configuration in mcp.json now uses the sse type and specifies the URL where the MCP server is listening.

```json
{
  "servers": {
    "MyRemoteMcpApi": {
      "type": "sse",
      "url": "http://localhost:5000/mcp" // Adjust port and path as needed
    }
  }
}
```

3.3. Part III: Wrapping Your Existing Services (The Architectural Bridge)

This is the most critical step: connecting the MCP tool definitions to your application's existing business logic. A naive implementation might involve placing HttpClient calls or database logic directly inside the tool methods. However, the official SDK's design promotes a much cleaner, more maintainable architectural pattern.

The SDK's deep integration with .NET's dependency injection (DI) system allows for a clean separation of concerns. This design naturally guides developers toward a robust architecture where the MCP server acts as a thin "Adapter" or "Controller" layer, responsible only for handling protocol communication and delegating to the core business logic, which remains untouched and independent. This promotes testability, reusability, and overall system health.

Dependency Injection is Key

First, register your existing services—such as an API client or a data repository—within the standard ASP.NET Core DI container.

```csharp
// In Program.cs
// Assume ProductService is your existing class that contains business logic.
// Registering it as a typed client lets IHttpClientFactory create and manage
// the HttpClient that its constructor requires.
builder.Services.AddHttpClient<ProductService>();

builder.Services.AddMcpServer()
    .WithHttpTransport()
    .WithToolsFromAssembly();
```

Injecting Services into Tools

The SDK allows these registered services to be injected directly as parameters into the static tool methods. The MCP server host will resolve the service from the DI container at runtime and pass it to the method. This decouples the tool definition from the implementation of the business logic.

The following complete example demonstrates this powerful pattern:

```csharp
using System.ComponentModel;
using System.Text.Json;
using ModelContextProtocol.Server;

// Your existing business logic service (remains unchanged)
public class ProductService
{
    private readonly HttpClient _httpClient;

    public ProductService(HttpClient httpClient) { _httpClient = httpClient; }

    public Task<Product?> GetProductByIdAsync(int id)
    {
        // Logic to fetch a product from a database or another API.
        // For demonstration, return a mock product without any I/O.
        Product? product = id == 123
            ? new Product { Id = 123, Name = "Flux Capacitor", Price = 1500.00m }
            : null;
        return Task.FromResult(product);
    }
}

public record Product
{
    public int Id { get; init; }
    public required string Name { get; init; }
    public decimal Price { get; init; }
}

// The MCP tool class, which acts as the adapter
[McpServerToolType]
public static class ProductTools
{
    [McpServerTool, Description("Looks up a product by its numeric ID and returns it as JSON.")]
    public static async Task<string> GetProduct(
        ProductService productService, // Service is injected here by the host
        [Description("The numeric ID of the product to fetch.")] int productId)
    {
        var product = await productService.GetProductByIdAsync(productId);
        if (product == null)
        {
            return $"Product with ID {productId} not found.";
        }

        return JsonSerializer.Serialize(product);
    }
}
```

This architectural pattern is the recommended way to expose an existing .NET API via MCP. It is clean, testable, and consistent with modern .NET development practices. The business logic within ProductService can be unit-tested independently of the MCP protocol, and the ProductTools class becomes a simple, declarative mapping layer.

Section 4: Advanced Architecture and Best Practices

Moving beyond basic implementation requires addressing the strategic and operational considerations for building production-grade MCP servers. This includes designing effective tools, securing the entire communication chain, and establishing robust deployment and configuration practices.

4.1. Design Patterns for API-to-Tool Mapping

Translating a REST API into a set of tools for an LLM is not a simple one-to-one mapping; it is an exercise in designing an interface for a non-human, probabilistic consumer. The design of the tools directly influences the LLM's ability to reason about and use them effectively.

A REST API is typically designed for a deterministic human developer who can read documentation, understand complex flows, and handle nuanced error states. An LLM, by contrast, begins each interaction with limited context, relying almost exclusively on the names and descriptions of the tools provided by the server. Poorly designed tools—those that are too granular, "chatty," or have unclear descriptions—lead to inefficient token usage, high latency, and frequent errors.

This reality means that designing an MCP server is a hybrid discipline, blending API design with prompt engineering. The tool's name, its parameters, and especially its description attribute are not just metadata; they are a functional system prompt that directly guides the AI's behavior.

- Adopt an Intent-Based Approach: Instead of exposing low-level, API-centric tools that mirror every single REST endpoint (e.g., `get_user`, `get_user_posts`, `get_user_comments`), create higher-level, intent-based tools that accomplish a complete task. For example, a single `get_user_activity_summary` tool that internally calls multiple APIs is far more efficient and reliable for an LLM to use than forcing the LLM to orchestrate the sequence of calls itself.

- Grouping and Naming Conventions: Organize tools into logical groups based on the resource or domain they operate on (e.g., `UserTools`, `ProjectTools`). Use consistent, verb-based naming conventions (`get_`, `list_`, `create_`, `update_`, `delete_`) to create a predictable interface.

- Schema Design and Rich Descriptions: Flatten complex REST parameters from the path, query, and body into a single, unified `inputSchema` for each tool. Use shared schemas for common objects (e.g., a `User` record) to ensure consistency across tools. Most importantly, write detailed, unambiguous descriptions that explain what the tool does, when to use it, and what the expected inputs and outputs are. The description is the LLM's primary source of truth.

- Handling Complex Operations: Design explicit patterns for complex API behaviors. For pagination and filtering, include parameters like `limit`, `offset`, and search fields directly in the tool's `inputSchema`. For file uploads and downloads, the standard pattern is for the tool to return a temporary, signed URL rather than attempting to transfer binary data through the protocol.

- Automated Conversion from OpenAPI: Tools like `MCPP.NET` can automatically generate MCP tools from an OpenAPI/Swagger specification. This can be a powerful accelerator for bootstrapping a server, but the generated tools are often API-centric. They should be reviewed and likely refactored into more intent-based tools for optimal performance with an LLM.
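The intent-based approach can be sketched in plain C#. In the example below the three lookup functions are hypothetical stand-ins for separate REST endpoints, and `GetUserActivitySummaryAsync` is the single body an intent-based tool would expose; no MCP types are involved, so this only illustrates the aggregation pattern.

```csharp
using System;
using System.Threading.Tasks;

// Hypothetical low-level lookups standing in for separate REST endpoints.
Task<string> GetUserAsync(int id) => Task.FromResult($"user-{id}");
Task<string[]> GetUserPostsAsync(int id) => Task.FromResult(new[] { "post A", "post B" });
Task<string[]> GetUserCommentsAsync(int id) => Task.FromResult(new[] { "comment 1" });

// Intent-based tool body: one call from the model, three calls internally.
async Task<string> GetUserActivitySummaryAsync(int userId)
{
    Task<string> userTask = GetUserAsync(userId);
    Task<string[]> postsTask = GetUserPostsAsync(userId);
    Task<string[]> commentsTask = GetUserCommentsAsync(userId);

    // Start all lookups first, then wait for them together.
    await Task.WhenAll(userTask, postsTask, commentsTask);

    string user = await userTask;
    string[] posts = await postsTask;
    string[] comments = await commentsTask;
    return $"{user}: {posts.Length} posts, {comments.Length} comments";
}

string summary = await GetUserActivitySummaryAsync(42);
Console.WriteLine(summary);
```

The model sees one tool with one description and receives one compact result, instead of spending three round trips and the tokens needed to stitch the pieces together.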

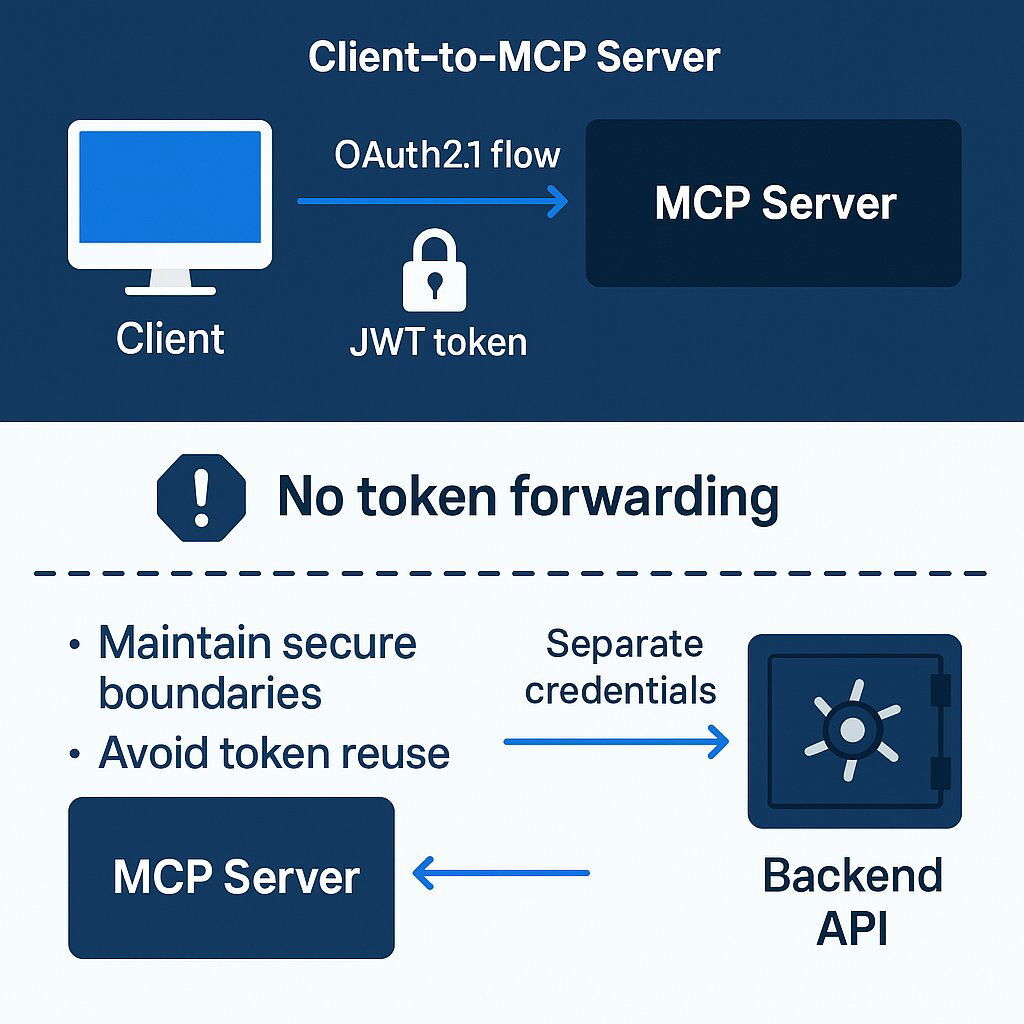

4.2. Securing Your MCP Server and Downstream API

Security in an MCP architecture must be addressed at two distinct layers: the connection between the AI client and your MCP server, and the connection between your MCP server and your backend API.

Layer 1: Securing the MCP Server (Client -> Server)

For remote servers using HTTP-based transports, the MCP specification defines an authorization framework based on OAuth 2.1. Authorization is optional in the specification, but it is the expected mechanism for protecting a remote server. The standard flow is as follows:

- An MCP client makes an initial, unauthenticated request to the server.

- The server responds with an `HTTP 401 Unauthorized` status code.

- This response triggers the client (or its host) to initiate a standard OAuth 2.1 authorization flow (e.g., redirecting the user to a login page).

- Upon successful authentication, the client receives an access token.

- The client retries the original request, this time including the access token in the `Authorization: Bearer <token>` header.

In an ASP.NET Core application, this can be implemented using standard middleware. By configuring JWT bearer authentication (e.g., with Microsoft Entra ID as the identity provider) and applying the appropriate authorization attributes to the MCP endpoint mapping, you can secure your server in a standard, robust manner.

Layer 2: Securing the Downstream API (Server -> API)

Your MCP server acts as a client to your existing backend API and must authenticate its own requests. It is critical to manage these credentials securely.

- Best Practice for Credential Management: Avoid insecure practices like hardcoding API keys or passing them via plain environment variables in production, an approach that is difficult to sustain. Instead, use .NET's configuration system (`IOptions`) and integrate with a secrets management solution such as Azure Key Vault, or .NET User Secrets for local development. These credentials should be injected into your API client service via DI.

- Preventing the "Confused Deputy" Problem: A significant security risk is the "confused deputy" vulnerability, where a server might mistakenly accept a token from a client and pass it through to a downstream service. The MCP server must not forward the client's access token to the backend API. The server should authenticate the client's identity for its own access control and then use its own credentials to authenticate with the downstream API. This maintains a clear security boundary between the two layers.
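The boundary between the two layers can be sketched with the BCL HTTP types alone. In this example the token values, the backend URL, and the `BuildDownstreamRequest` helper are all hypothetical; the point is only that the downstream request is built from the server's own credential and never from the token the client presented.

```csharp
using System;
using System.Net.Http;
using System.Net.Http.Headers;

// Token presented by the MCP client; used only to authorize the caller.
string clientToken = "client-access-token";        // hypothetical value
// Credential owned by the MCP server itself, e.g. loaded from a secret store.
string serverCredential = "server-own-credential"; // hypothetical value

// Build the downstream request with the credential passed in explicitly,
// so the client's token never needs to reach this code path.
HttpRequestMessage BuildDownstreamRequest(string url, string credential)
{
    var request = new HttpRequestMessage(HttpMethod.Get, url);
    request.Headers.Authorization = new AuthenticationHeaderValue("Bearer", credential);
    return request;
}

using HttpRequestMessage downstream =
    BuildDownstreamRequest("https://backend.example/api/products/123", serverCredential);

Console.WriteLine(downstream.Headers.Authorization!.Parameter == clientToken
    ? "Token was forwarded (confused deputy risk)"
    : "Downstream call uses the server's own credential");
```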

4.3. Configuration, Containerization, and Deployment

- Configuration: For local development and testing, configuration values like API keys can be passed to the server via the `env` block in the `mcp.json` file. For production environments, use standard ASP.NET Core configuration providers (e.g., `appsettings.json`, environment variables, Azure App Configuration) to supply settings to your application.

- Containerization: Packaging your MCP server in a container is a best practice for portability and consistent deployments. A multi-stage Dockerfile should be used to first build the .NET application in an SDK image and then copy the published artifacts to a lean ASP.NET runtime image, resulting in a smaller and more secure final image.

- Deployment: Remote MCP servers are well-suited for modern cloud platforms. A particularly effective pattern in the .NET ecosystem is deploying the server as a serverless function using Azure Functions. This approach offers scalability, cost-efficiency, and seamless integration with other Azure services. Several templates and samples exist to facilitate this deployment model.

4.4. Building a .NET MCP Client (A Brief Overview)

While the primary focus is on server implementation, a complete understanding includes how to consume MCP services. The official C# SDK provides a straightforward client API.

- Instantiation: A client connection is established using `McpClient.CreateAsync` (or `McpClientFactory.CreateAsync` in more complex scenarios), providing the necessary transport configuration, such as `SseClientTransport` for a remote server.

- Interaction: Once connected, the client can discover the server's capabilities with `await mcpClient.ListToolsAsync()` and execute a specific tool with `await mcpClient.CallToolAsync(...)`.

- Integration with AI Chat: The most powerful use case is integrating these tools into an AI chat loop. Because the tool objects returned by `ListToolsAsync` inherit from the `AIFunction` base class in `Microsoft.Extensions.AI`, they can be passed directly into the `Tools` property of the chat options when calling an `IChatClient` (e.g., one backed by Azure OpenAI). The AI model will then be able to reason about these tools and request their execution as part of its response generation, enabling seamless function calling.

You may also want to review our executive's guide to essential software development concepts for a refresher on Agile, DevOps, and CI/CD in modern AI-first workflows.
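The three client steps above (instantiation, interaction, and chat integration) can be sketched roughly as follows. This is an illustration rather than a definitive implementation: the SDK is in preview, and its type and namespace names have shifted between releases, so `SseClientTransportOptions`, its `Endpoint` property, and the `using` directives should be checked against the installed package version. The tool name `get_order_status`, its `orderId` argument, and the prompt are hypothetical, and the `IChatClient` is assumed to have been built elsewhere with function invocation enabled.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;
using Microsoft.Extensions.AI;
using ModelContextProtocol.Client; // assumption: some previews keep transports in another namespace

public static class McpClientSketch
{
    public static async Task RunAsync(IChatClient chatClient, Uri serverEndpoint)
    {
        // Instantiation: connect to a remote server over the SSE transport.
        var transport = new SseClientTransport(
            new SseClientTransportOptions { Endpoint = serverEndpoint });
        await using var mcpClient = await McpClientFactory.CreateAsync(transport);

        // Interaction: discover what the server offers.
        var tools = await mcpClient.ListToolsAsync();
        foreach (var tool in tools)
        {
            Console.WriteLine($"{tool.Name}: {tool.Description}");
        }

        // Interaction: call one tool directly (hypothetical name and argument).
        await mcpClient.CallToolAsync(
            "get_order_status",
            new Dictionary<string, object?> { ["orderId"] = "12345" });

        // Integration with AI chat: hand the discovered tools to the model.
        var response = await chatClient.GetResponseAsync(
            "Where is order 12345?",
            new ChatOptions { Tools = [.. tools] });
        Console.WriteLine(response.Text);
    }
}
```

Passing the tools through `ChatOptions` only advertises them to the model; whether tool calls are executed automatically depends on how the `IChatClient` pipeline was configured.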

Conclusions

The Model Context Protocol represents a pivotal standardization effort in the field of AI engineering, providing a robust and extensible framework for connecting intelligent agents to the world's data and tools. For developers in the .NET ecosystem, the path to adopting this powerful protocol is well-defined and strongly supported.

The analysis leads to several key conclusions and recommendations:

- MCP is a Strategic Architectural Choice: Adopting MCP is more than implementing a new protocol; it is embracing a specific architectural pattern that separates the user-facing host, the AI-driven client, and the resource-providing server. This structure, combined with advanced primitives like Sampling and Elicitation, provides a comprehensive blueprint for building sophisticated, interactive AI systems.

- The .NET Ecosystem is Aligned with Microsoft's AI Strategy: The official C# SDK's foundational dependency on `Microsoft.Extensions.AI`, coupled with community efforts to maintain compatibility, signals a clear direction. Developers building MCP solutions in .NET will achieve the greatest interoperability and long-term viability by also adopting Microsoft's core AI abstractions.

- The Official SDK Promotes Clean Architecture: The SDK's design, particularly its elegant use of dependency injection to provide services to tool methods, naturally guides developers toward a clean, maintainable, and testable application architecture. This should be considered the canonical pattern for exposing existing business logic via MCP.

- Tool Design is a New, Hybrid Discipline: Creating effective MCP tools requires a blend of API design and prompt engineering. The structure, naming, and descriptive text of the tools are not merely metadata; they are functional components that directly influence the AI's behavior. Developers must learn to design these interfaces for a probabilistic, non-human consumer to build reliable and efficient AI agents.

For a .NET Core developer seeking to expose an API via MCP, the recommended approach is clear: leverage the official, preview-status C# SDK, build upon an ASP.NET Core foundation, and embrace the dependency injection-based pattern to create a clean adapter layer over existing services. By focusing on intent-based tool design and implementing robust, two-layer security, developers can successfully transform their existing applications into powerful, context-aware resources for the next generation of artificial intelligence.

About Baytech

At Baytech Consulting, we specialize in guiding businesses through this process, helping you build scalable, efficient, and high-performing software that evolves with your needs. Our MVP-first approach helps our clients minimize upfront costs and maximize ROI. Ready to take the next step in your software development journey? Contact us today to learn how we can help you achieve your goals with a phased development approach.

About the Author

Bryan Reynolds is an accomplished technology executive with more than 25 years of experience leading innovation in the software industry. As the CEO and founder of Baytech Consulting, he has built a reputation for delivering custom software solutions that help businesses streamline operations, enhance customer experiences, and drive growth.

Bryan’s expertise spans custom software development, cloud infrastructure, artificial intelligence, and strategic business consulting, making him a trusted advisor and thought leader across a wide range of industries.