Akka vs. Alternatives: The Executive Guide to High-Performance Software Scalability

August 01, 2025 / Bryan Reynolds

The High-Stakes Scalability Challenge

In the digital economy, performance is not just a feature; it's the foundation of customer trust and revenue. Imagine a system failure during a peak demand event—a Black Friday sale, a viral marketing campaign, or a critical financial transaction window. For a business, this isn't a mere technical inconvenience; it's a direct and immediate loss of revenue, a blow to brand reputation, and a potential exodus of customers to competitors. In this high-stakes environment, the ability of a software application to handle immense, unpredictable loads without faltering is a primary competitive advantage.

This is the challenge that led global leaders like Walmart, Verizon, and Capital One to build their most critical systems on Akka, an industrial-strength technology designed specifically for applications that simply cannot fail. When systems must be resilient, responsive, and capable of scaling to millions of users, they turn to this specialized toolkit.

However, for business leaders, the decision to adopt a technology like Akka is fraught with questions. What exactly is it? What are the real-world business benefits versus the potential costs and risks? How does it fit into a modern technology stack that already includes tools like Kubernetes? This article, drawing upon the expertise of custom software development specialists at Baytech Consulting, aims to answer these critical questions. It provides a clear, business-focused guide for executives navigating the complex landscape of high-performance systems.

1. What is Akka, and Why Should an Executive Care?

At its core, Akka is a source-available platform, software development kit (SDK), and runtime for building applications that need to handle massive numbers of users and vast amounts of data simultaneously. Its fundamental purpose is to simplify the notoriously difficult task of creating concurrent, distributed, and fault-tolerant systems on the Java Virtual Machine (JVM). For an executive, this translates to software that is inherently faster, more reliable, and capable of scaling efficiently to meet business demand.

The Core Idea: The Actor Model Analogy

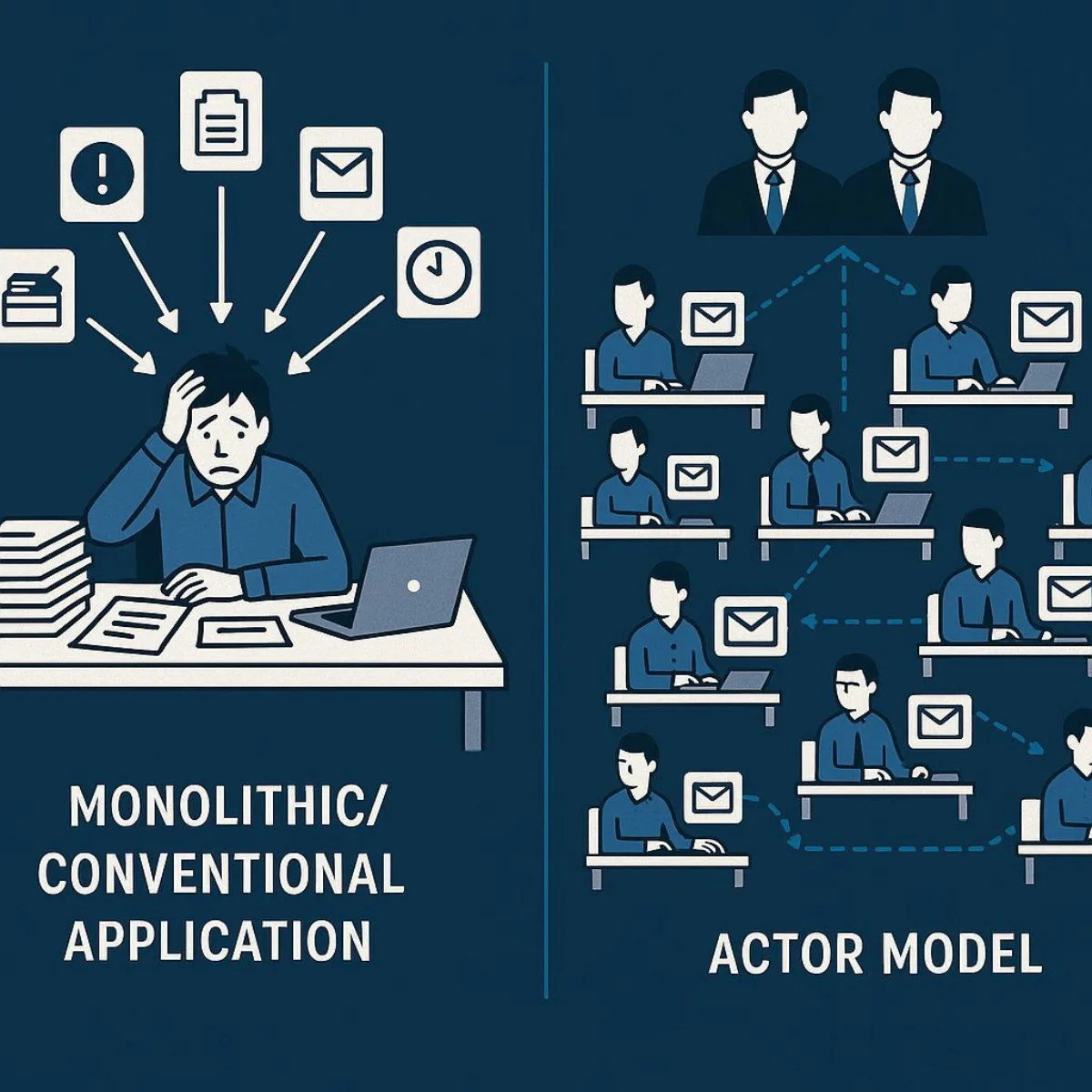

To understand Akka's power, it's helpful to use an analogy. A traditional software application can be thought of as a single, brilliant, but ultimately overwhelmed employee. This employee can only do one thing at a time. If they are busy with a complex task and a new request comes in, that new request must wait. If they get sick (i.e., the application crashes), everything grinds to a halt.

An application built with Akka, however, is like a large, highly efficient corporation. This "corporation" is built on a concept called the Actor Model, a paradigm for concurrent computation first defined in 1973.

- Autonomous Specialists (Actors): The organization is composed of thousands, or even millions, of lightweight, autonomous "actors." Each actor is like a specialist employee with a single, well-defined job. It encapsulates its own state (manages its own private information—no shared desks) and its own behavior. This isolation is key; actors do not share memory, which eliminates a whole class of complex and error-prone issues related to data corruption in concurrent systems.

- Asynchronous Communication (Mailboxes): Instead of directly calling each other and waiting for a response (like a synchronous phone call that ties up both parties), actors communicate by sending asynchronous messages to each other's dedicated "mailbox." An actor can send a message and immediately move on to its next task, without waiting for a reply. The receiving actor processes messages from its mailbox one at a time, in a controlled manner. This message-passing approach prevents bottlenecks and ensures the entire system remains responsive.

- Hierarchical Management (Supervision): This is the most powerful part of the analogy for business leaders. Every actor in the system has a "manager" (a parent actor). If an actor fails while performing its task—perhaps due to a bug or a temporary network issue—it doesn't bring down the entire company. Instead, the failure is contained and reported to its supervisor. The supervisor then decides how to handle the failure according to a predefined strategy: it might restart the failed actor, delegate the task to another actor, or escalate the problem further up the chain of command. This "let-it-crash" philosophy is the foundation of Akka's fault tolerance. It allows for the creation of "self-healing" software where failures are treated as normal, expected events that are handled gracefully without causing a system-wide outage.
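
For technical readers, the three ideas above (private state, a mailbox, and a supervisor that restarts a failed worker) can be sketched in a few dozen lines of plain Java. This is only an illustration of the concept, not Akka's API: `MiniActorSketch`, `MiniActor`, `Behavior`, and `tally` are hypothetical names invented for this example, and a real actor runtime adds scheduling, clustering, and richer supervision strategies on top.

```java
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Supplier;

// Conceptual sketch only. None of these names come from Akka.
final class MiniActorSketch {

    /** A behavior owns private state and handles one message at a time. */
    interface Behavior {
        void onMessage(String message);
    }

    /** One mailbox, one worker thread, and a "restart on failure" strategy. */
    static final class MiniActor {
        private static final Object STOP = new Object(); // shutdown marker
        private final BlockingQueue<Object> mailbox = new LinkedBlockingQueue<>();
        private final Supplier<Behavior> factory;
        private final AtomicInteger restarts = new AtomicInteger();
        private final Thread worker;

        MiniActor(Supplier<Behavior> factory) {
            this.factory = factory;
            this.worker = new Thread(this::runLoop);
            this.worker.start();
        }

        /** Fire-and-forget: the sender never waits for processing. */
        void tell(String message) {
            mailbox.add(message);
        }

        int restarts() {
            return restarts.get();
        }

        /** Processes everything already queued, then stops the worker. */
        void stopAndWait() {
            mailbox.add(STOP);
            try {
                worker.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }

        private void runLoop() {
            Behavior behavior = factory.get();
            try {
                while (true) {
                    Object next = mailbox.take(); // messages are handled sequentially
                    if (next == STOP) {
                        return;
                    }
                    try {
                        behavior.onMessage((String) next);
                    } catch (RuntimeException failure) {
                        // Supervision: drop the failed instance and start a fresh one.
                        restarts.incrementAndGet();
                        behavior = factory.get();
                    }
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
    }

    /** Keeps a running total; a non-numeric message simulates a bug. */
    static Behavior tally(List<Integer> totals) {
        return new Behavior() {
            private int total = 0; // private state, never shared with other actors

            @Override
            public void onMessage(String message) {
                total += Integer.parseInt(message); // throws on "boom"
                totals.add(total);
            }
        };
    }

    static List<Integer> demo() {
        List<Integer> totals = new CopyOnWriteArrayList<>();
        MiniActor actor = new MiniActor(() -> tally(totals));
        for (String message : List.of("1", "2", "boom", "3")) {
            actor.tell(message);
        }
        actor.stopAndWait();
        return totals;
    }

    public static void main(String[] args) {
        System.out.println(demo()); // prints [1, 3, 3]
    }
}
```

The bad message causes one restart rather than a crash of the whole program, and later messages are still processed. The running total starts again from zero after the restart, which is why a restart strategy is usually paired with some form of state recovery.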

Connecting to Business Value

This architectural model directly translates into tangible business outcomes:

- Performance: By breaking down work into small tasks that millions of actors can process in parallel, the system can handle immense workloads, leading to faster response times for customers and more efficient data processing.

- Resilience: The supervision model creates self-healing software that maximizes uptime. Failures are isolated and managed without user-facing disruption, protecting revenue and brand reputation.

- Efficiency: The actor model is extremely resource-efficient. Millions of actors can run on a single machine with a small memory footprint, allowing businesses to achieve greater performance from their existing hardware and potentially reduce infrastructure costs.

It's important for decision-makers to recognize that Akka has evolved beyond a simple set of libraries. Initially conceived as a "toolkit" that required expert assembly, it has matured into a comprehensive "Platform." This evolution signifies a strategic shift by its maintainers to provide more integrated and opinionated solutions, including a high-level SDK, serverless deployment options, and capabilities for edge computing. This change lowers the barrier to entry and can accelerate development, but it also means that adopting Akka is not just about choosing a library; it can be about committing to an entire ecosystem. This presents multiple adoption paths, and navigating this decision is where an experienced partner like Baytech Consulting can provide critical guidance.

2. Who Actually Uses Akka? A Look at Real-World Success Stories

The true measure of any technology is its adoption and success in solving real-world business problems. Akka is trusted by some of the world's largest companies to power their most demanding, mission-critical applications. These case studies demonstrate a clear pattern: Akka is chosen when the scale is massive and the consequences of failure are severe.

Case Study 1: Verizon - Doubling Performance on Half the Hardware

- Business Problem: Verizon's e-commerce operations were constrained by a monolithic legacy platform from Oracle. This system struggled to handle peak traffic during events like new iPhone launches, was slow and cumbersome to update (taking two months for new features), and required the company to build a costly, separate, parallel website each year just to survive the holiday season.

- Solution & Results: Verizon re-architected its platform using reactive microservices built on Akka. The results were transformative. Customer response times were slashed from 6 seconds to 2.4 seconds. The system's capacity for processing orders increased 7.5-fold, from 1,600 to 12,000 orders per minute. This performance boost translated directly to the bottom line: sales on the new platform increased by 235%, and conversion rates jumped by 197%. Critically, the Total Cost of Ownership (TCO) was cut in half, as the new system delivered double the performance on half the hardware.

Case Study 2: Walmart Canada - Conquering Black Friday

- Business Problem: Like many retailers, Walmart Canada's legacy infrastructure could not scale effectively for the extreme traffic of Black Friday, leading to slow performance, a poor customer experience, and lost sales. The development process was also slow and tied to expensive, specialized hardware.

- Solution & Results: By rebuilding its web and mobile stack with Scala and the Akka Platform, Walmart Canada achieved dramatic improvements. The company saw a 20% boost in conversions and a 98% increase in mobile orders shortly after launch. Page load times were reduced by 36% during peak traffic, contributing to its most responsive Black Friday ever. The new architecture also accelerated the development cycle by a factor of two to three, all while saving 20% to 50% on web-related infrastructure costs by moving workloads to commodity servers.

Case Study 3: Capital One - Real-Time Finance at Scale

- Business Problem: The auto loan process was a major point of friction for customers, filled with uncertainty and delays that led to a poor experience and lost business. The existing systems could not provide the real-time decision-making required to change this dynamic.

- Solution & Results: Capital One built "Auto Navigator," a cloud-based, real-time financing platform with Akka at its core. The new system increased loan application processing capacity by nearly 5x (from 100 to 486 applications per minute). A data processing task that previously took over two days was reduced to under one second. This enabled a game-changing feature: allowing customers to get pre-qualified for financing in real-time, with no impact on their credit score, before ever setting foot in a dealership.

The common thread across these success stories—and others like PayPal, which handles over a billion daily transactions on just eight virtual machines using Akka—is the need to manage extreme scale in mission-critical scenarios. These are not simple web applications; they are complex, distributed systems where latency or downtime translates directly and immediately into massive financial or reputational damage. The high cost of failure in these domains justifies the significant investment in the specialized skills and architectural approach that Akka represents. As these examples show, adopting Akka is a strategic decision driven by high-stakes business needs. At Baytech Consulting, the first step in any engagement is to analyze the business problem to determine if the scale and criticality of the application justify this level of architectural investment.

3. What Are the Strategic Benefits and Business Drawbacks?

While Akka is exceptionally powerful, it's a specialized tool, not a universal solution. For business leaders, understanding the strategic trade-offs is essential for making an informed decision. A balanced view acknowledges that while the benefits can be transformative, the associated costs and complexities are significant.

Table 1: Akka at a Glance: Pros and Cons for Business Leaders

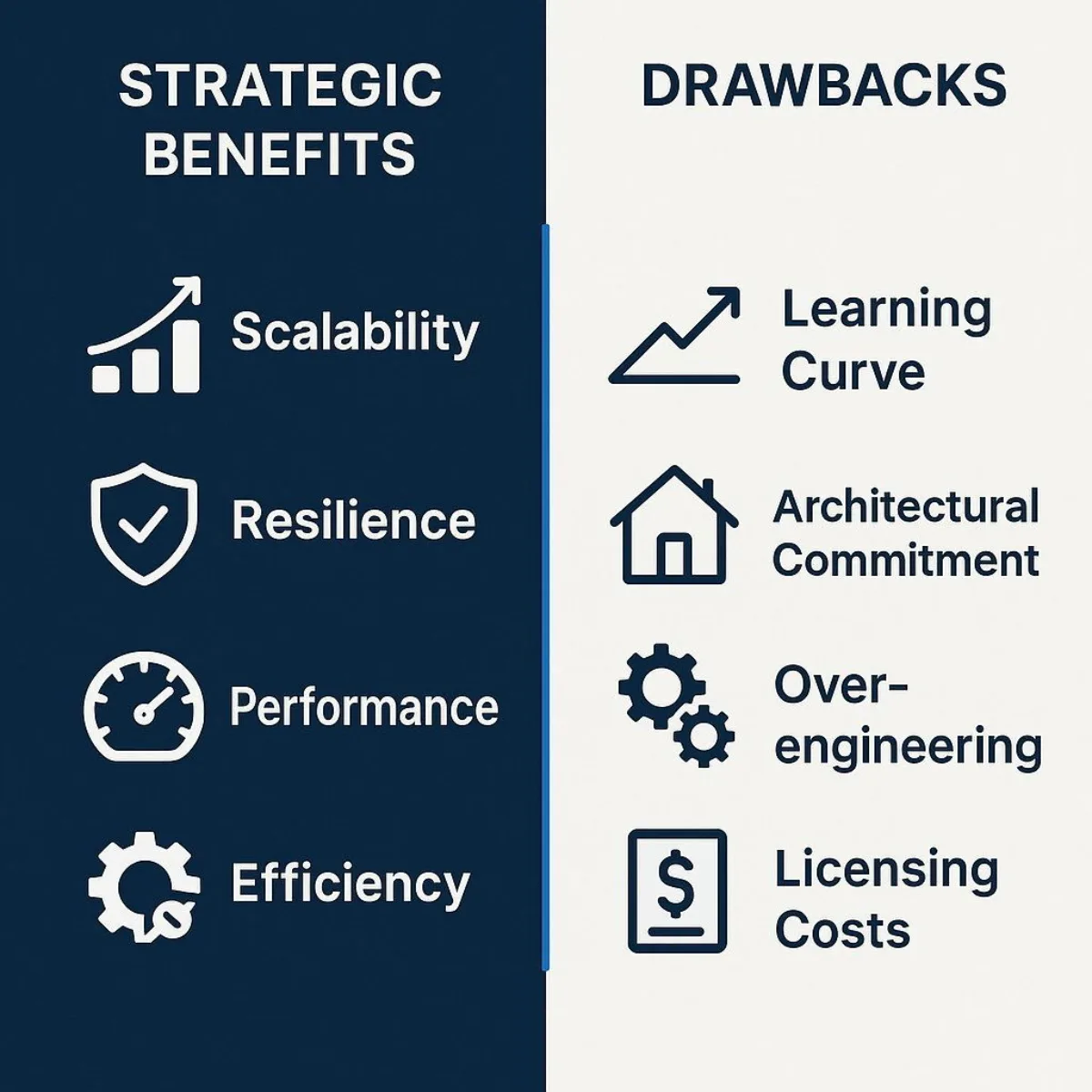

| Strategic Benefits (The 'Why') | Business Drawbacks (The 'Watch-Outs') |
|---|---|
| Extreme Scalability: Supports massive user growth without performance degradation, enabling business expansion. | High Learning Curve: Requires a significant investment in specialized team training and a paradigm shift in thinking. |
| High Resilience: Self-healing systems protect revenue by maximizing uptime and ensuring business continuity. | Architectural Commitment: Can be intrusive to the codebase; it is a foundational choice that is not easily swapped out later. |
| Enhanced Performance: Fast response times lead to better user experience, higher engagement, and improved conversion rates. | Risk of Over-engineering: Using such a powerful tool for simple problems adds unnecessary complexity and overhead. |
| Resource Efficiency: Achieves more with less hardware, potentially lowering infrastructure costs and improving TCO. | Commercial Licensing Costs: Production use for companies with over $25M in revenue requires a paid license, impacting TCO. |

A Deeper Look at the Drawbacks

While the benefits are compelling, the drawbacks require careful consideration from a business perspective.

- The Learning Curve & Talent Pool: Adopting Akka is not a simple matter of learning a new library. It requires developers to embrace a fundamentally different way of thinking about concurrency and state management, moving from traditional object-oriented approaches to the Actor Model. This represents a steep learning curve and necessitates a significant investment in team training. Alternatively, it means competing for a smaller and often more expensive pool of engineers who already possess this specialized expertise. For an in-depth exploration of what this talent landscape looks like, consider reading Beyond the Code: Strategic Integration Requires Strategic Talent.

- Architectural Intrusiveness: Akka is not a drop-in component that can be easily added or removed. It is a foundational architectural choice that deeply influences the entire structure of an application. Once a system is built on the Actor Model with Akka, migrating away from it is a major and costly re-engineering effort. This makes the initial decision a long-term commitment.

- The Business Source License (BSL): This is a critical factor for any business with significant revenue. In 2022, Akka's license changed from the permissive Apache 2.0 open-source license to the Business Source License (BSL) 1.1. In practical terms, this means that while Akka is free to use for development, testing, and pre-production environments, any company with over $25 million in annual revenue must purchase a commercial license for production use. The pricing is based on a "per-core" model (defined as a vCPU or thread), which can become a significant operational expense for large-scale deployments. This license cost must be factored into any calculation of the Total Cost of Ownership.

The apparent contradiction that Akka is both a "high-level abstraction" and a complex tool with a "steep learning curve" is important to unpack. While Akka abstracts away the dangerous, low-level complexity of manual thread management, locks, and synchronization—a common source of bugs that are notoriously difficult to find and fix—it also introduces the high-level conceptual complexity of the Actor Model itself. For an executive, this means the "simplicity" Akka offers is not in the ease of learning but in the operational stability, correctness, and resilience of the final system. It represents an upfront investment in complexity (training, hiring, architectural design) for a long-term reduction in operational complexity (fewer critical bugs, higher uptime, less firefighting). This is a classic strategic trade-off, and Baytech Consulting helps clients model the total cost of ownership (TCO), factoring in not just licensing, but also hiring, training, and the long-term operational savings from a more resilient system, to determine if the investment makes sense for a specific business case.

4. How is Akka Different From Other Technology We Use?

To make a sound strategic decision, it's crucial to understand where a tool fits within the broader technology landscape. Akka is often discussed alongside other technologies for building scalable and resilient applications, but it serves a unique and specific purpose.

Akka vs. Standard Concurrent Programming

Most concurrent applications are built without a framework like Akka. Developers often rely on the tools built directly into the Java platform, primarily the java.util.concurrent package. This approach can be thought of as the "hard way." It requires developers to manually manage threads, locks, and shared memory, a process that's fraught with peril. Common pitfalls like deadlocks (where threads get stuck waiting for each other) and race conditions (where the outcome of an operation depends on unpredictable timing) are incredibly difficult to diagnose and can lead to catastrophic, intermittent failures in production.
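
A race condition of the kind described above is easy to reproduce. The sketch below is plain Java with hypothetical class names (`SharedCounterSketch`, `RacyCounter`, `AtomicCounter`). It has several threads increment one shared counter, first with no coordination and then with an atomic variable from java.util.concurrent.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicLong;

// Illustrative names only; this is not taken from any framework.
final class SharedCounterSketch {

    interface Counter {
        void increment();
        long get();
    }

    /** Unsafe: value++ is a read, an add, and a write, so updates can be lost. */
    static final class RacyCounter implements Counter {
        private long value;
        public void increment() { value++; }
        public long get() { return value; }
    }

    /** Safe: the increment happens as a single atomic step. */
    static final class AtomicCounter implements Counter {
        private final AtomicLong value = new AtomicLong();
        public void increment() { value.incrementAndGet(); }
        public long get() { return value.get(); }
    }

    /** Runs the same increment loop on several threads and returns the final count. */
    static long hammer(Counter counter, int threads, int incrementsPerThread) {
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        for (int t = 0; t < threads; t++) {
            pool.submit(() -> {
                for (int i = 0; i < incrementsPerThread; i++) {
                    counter.increment();
                }
            });
        }
        pool.shutdown();
        try {
            if (!pool.awaitTermination(30, TimeUnit.SECONDS)) {
                throw new IllegalStateException("workers did not finish in time");
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return counter.get();
    }

    public static void main(String[] args) {
        // The atomic counter always reaches 800000.
        System.out.println("atomic: " + hammer(new AtomicCounter(), 8, 100_000));
        // The racy counter typically ends lower, and the result varies run to run.
        System.out.println("racy:   " + hammer(new RacyCounter(), 8, 100_000));
    }
}
```

The unsafe version compiles cleanly and may even pass a light test, which is what makes this class of bug so expensive to find in production. The Actor Model avoids the problem by a different route: each piece of state is owned by exactly one actor, so there is nothing shared to guard.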

More modern Java features like CompletableFuture offer a better way to handle asynchronous operations but still lack the comprehensive, high-level model that Akka provides for managing distributed state, supervision, and self-healing across a cluster of machines. Akka was created precisely to provide a higher level of abstraction that shields developers from these dangerous and error-prone complexities, allowing them to focus on business logic instead of low-level concurrency mechanics.
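
As a point of comparison, here is a minimal sketch of what CompletableFuture does provide: chaining two asynchronous steps and recovering from a failure in that one chain. The class name, the method names, and the pricing logic are all invented stand-ins for remote calls.

```java
import java.util.concurrent.CompletableFuture;

// Hypothetical example; the "price" and "tax" lookups stand in for remote calls.
final class AsyncQuoteSketch {

    static CompletableFuture<Integer> priceCents(String sku) {
        return CompletableFuture.supplyAsync(() -> {
            if (sku.isEmpty()) {
                throw new IllegalArgumentException("unknown sku");
            }
            return sku.length() * 100; // placeholder pricing rule
        });
    }

    static CompletableFuture<Integer> taxCents(int priceCents) {
        return CompletableFuture.supplyAsync(() -> priceCents / 10);
    }

    /** Chains both lookups without blocking; returns -1 if any step fails. */
    static CompletableFuture<Integer> totalCents(String sku) {
        return priceCents(sku)
                .thenCompose(price -> taxCents(price).thenApply(tax -> price + tax))
                .exceptionally(failure -> -1);
    }

    public static void main(String[] args) {
        System.out.println(totalCents("abc").join()); // prints 330
        System.out.println(totalCents("").join());    // prints -1
    }
}
```

The failure handling here is local to a single pipeline. There is no built-in notion of a supervisor, of long-lived state that survives the call, or of moving work to another machine, which are the gaps the paragraph above describes.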

Akka vs. Kubernetes: Application vs. Infrastructure Resilience

For many executives, the promise of resilience and scalability is synonymous with Kubernetes, the de facto standard for container orchestration. A common and critical question is, "Don't we already solve this with Kubernetes?" The answer is that Akka and Kubernetes are not competitors; they are powerful partners that operate at different layers of the technology stack.

The relationship between the two can be understood with a simple table:

Table 2: Fault Tolerance: Akka vs. Kubernetes

| Feature | Kubernetes | Akka |
|---|---|---|
| Key Role | Infrastructure Manager (The "Hospital") | Application Paramedic (The "Doctor") |
| Unit of Control | Container / Pod (The "Ambulance") | Actor (The "Patient's Organs") |
| Failure Response | Restarts the entire failed container, which can be slow and disruptive. | Restarts only the tiny, failed actor within milliseconds, while the rest of the application continues running. |
| State Management | Treats containers as stateless by default. Managing stateful applications is notoriously complex. | Natively designed to manage distributed, in-memory state within the application itself. |
| Analogy | If a delivery truck crashes, Kubernetes provides a new, empty truck. | Akka ensures the packages from the crashed truck are instantly recovered, rerouted, and the delivery schedule is updated without losing a single order. |

This reveals a powerful symbiotic relationship. Kubernetes provides coarse-grained, infrastructure-level fault tolerance. It ensures that a specified number of application containers are running. If a server fails, Kubernetes will restart the affected containers on a healthy machine. This is highly effective for stateless applications, where any container can handle any request.

However, for stateful applications, simply restarting a container is insufficient, as all the in-memory state is lost. This is where Akka provides fine-grained, application-level resilience. An Akka application running on Kubernetes can manage its own distributed state. If a container (an Akka node) fails, the Akka cluster detects this failure. It can then automatically restart the specific actors that were running on that node on other healthy nodes within the cluster. Using its persistence features, Akka can recover the exact state of those actors from a shared event log, allowing them to resume their work precisely where they left off, often within milliseconds.
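
The recovery step described above follows a pattern usually called event sourcing: record every change in a durable log, then rebuild in-memory state by replaying that log. The sketch below shows the idea in plain Java. `EventReplaySketch`, `EventLog`, and `AccountEntity` are hypothetical names, the log is an in-memory list standing in for a durable store, and none of this is Akka Persistence's actual API.

```java
import java.util.ArrayList;
import java.util.List;

// Conceptual sketch of "recover state from an event log"; not a real persistence API.
final class EventReplaySketch {

    /** Stand-in for a shared, durable journal that outlives any single node. */
    static final class EventLog {
        private final List<Integer> depositsCents = new ArrayList<>();

        void append(int amountCents) {
            depositsCents.add(amountCents);
        }

        List<Integer> readAll() {
            return List.copyOf(depositsCents);
        }
    }

    /** In-memory entity whose state is fully derived from the log. */
    static final class AccountEntity {
        private final EventLog log;
        private long balanceCents;

        private AccountEntity(EventLog log) {
            this.log = log;
        }

        /** Rebuilds the entity on any node by replaying every recorded event. */
        static AccountEntity recover(EventLog log) {
            AccountEntity entity = new AccountEntity(log);
            for (int amountCents : log.readAll()) {
                entity.apply(amountCents);
            }
            return entity;
        }

        void deposit(int amountCents) {
            log.append(amountCents); // record the event first
            apply(amountCents);      // then update in-memory state
        }

        private void apply(int amountCents) {
            balanceCents += amountCents;
        }

        long balanceCents() {
            return balanceCents;
        }
    }

    public static void main(String[] args) {
        EventLog log = new EventLog();
        AccountEntity onNodeA = AccountEntity.recover(log);
        onNodeA.deposit(500);
        onNodeA.deposit(250);

        // Simulate node A failing: its in-memory object is gone, the log is not.
        AccountEntity onNodeB = AccountEntity.recover(log);
        System.out.println(onNodeB.balanceCents()); // prints 750
    }
}
```

Because the log, not the container, is the source of truth, the replacement instance ends up with the same balance as the one that was lost. Production systems typically add snapshots so that recovery does not have to replay the full history.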

In essence, Akka allows developers to build and run complex stateful services on Kubernetes as if they were stateless, which dramatically simplifies development and operations. The most powerful modern systems do not choose between Akka and Kubernetes; they use them together. Kubernetes manages the infrastructure, and Akka manages the application's state and resilience. Architecting this powerful combination is a specialty of the experts at Baytech Consulting.

5. What Are the Main Alternatives to Akka?

The technology landscape offers several paths to building reactive, scalable systems. If the Actor Model is the right paradigm for a business problem, there is a key alternative to consider. If not, other powerful frameworks exist that may better align with a company's existing technology stack and development philosophy.

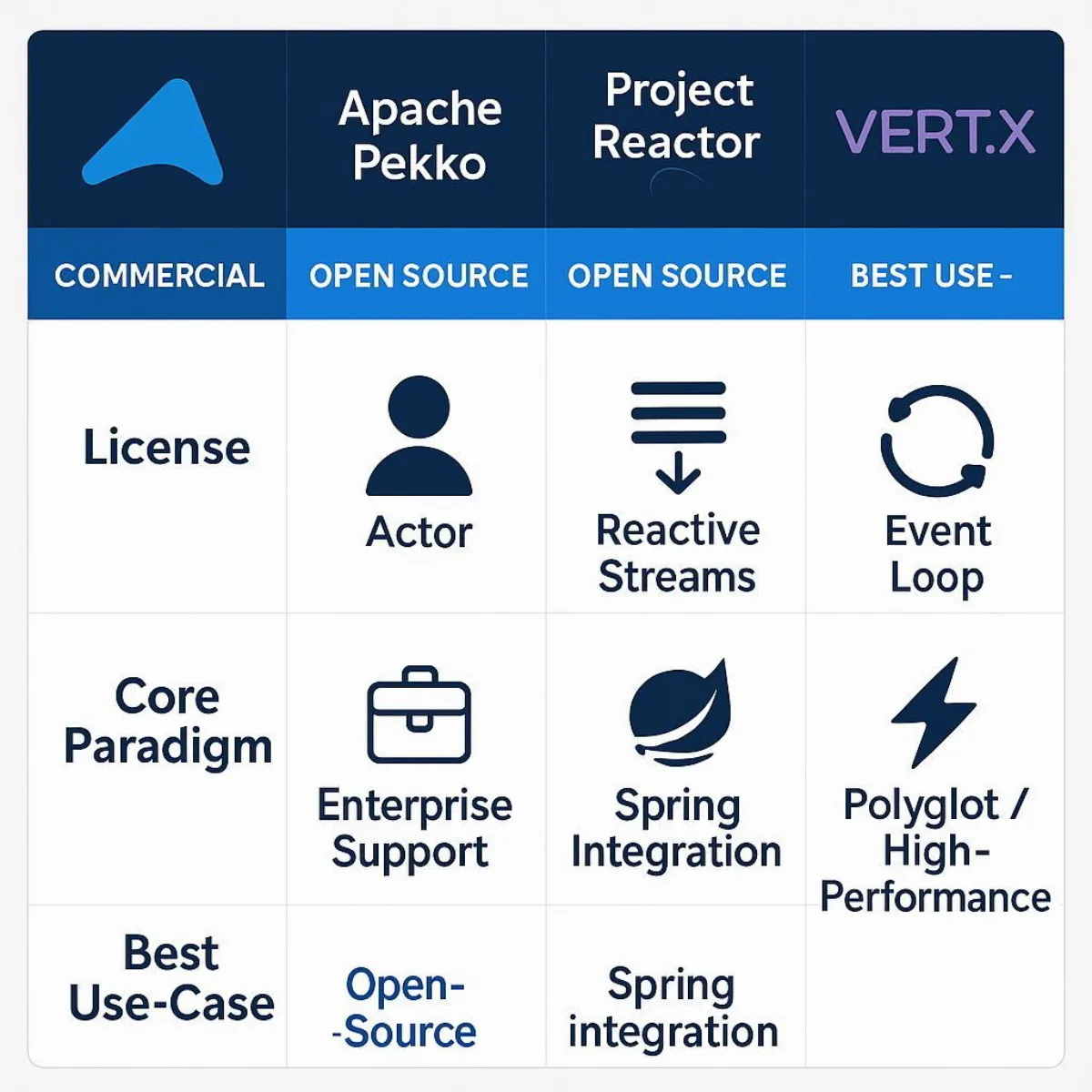

The Direct Open-Source Alternative: Apache Pekko

The most direct alternative to Akka is Apache Pekko. Pekko's origin is a direct consequence of Akka's license change. In response to the move to the BSL, the open-source community created Pekko as a fork of the last Apache 2.0 licensed version of Akka (2.6.x). In May 2024, Pekko graduated to become a Top-Level Project at the Apache Software Foundation, a significant milestone that signals its credibility, active community, and long-term viability.

For businesses, the choice between Akka and Pekko represents a fundamental strategic fork in the road. It is no longer just a technical decision but a choice between two business models:

- Akka: A commercially backed product with professional support, a clear roadmap driven by a single vendor (Akka, the company formerly known as Lightbend), but with associated licensing costs for larger companies.

- Apache Pekko: A community-driven, fully open-source project with no licensing fees, but which relies on community support and contributions for its maintenance and evolution.

Alternative Paradigms for Reactive Systems

Beyond the Actor Model, other frameworks provide different approaches to building reactive applications. The choice among these is often dictated by a company's existing ecosystem and developer skillset.

- Project Reactor: This is the standard choice for organizations heavily invested in the Spring ecosystem. It is the foundation of Spring WebFlux and provides a powerful way to build non-blocking applications using the Reactive Streams specification.

- Vert.x: A flexible, polyglot (multi-language) toolkit known for its high performance and a gentler learning curve than Akka. It is based on an event-loop model and is less opinionated, but its built-in fault tolerance mechanisms are not as sophisticated as Akka's supervision hierarchies.

- ZIO: A modern framework popular in the Scala community for teams committed to a pure functional programming style. It offers a powerful and type-safe approach to managing concurrency and side effects.
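
The Reactive Streams specification mentioned above is built around backpressure: the consumer tells the producer how many items it is ready to receive. The JDK ships the same interfaces as java.util.concurrent.Flow, so the idea can be sketched without any third-party framework. The class and method names below are hypothetical, and this example does not use Project Reactor itself.

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Flow;
import java.util.concurrent.SubmissionPublisher;
import java.util.concurrent.TimeUnit;

// Sketch of demand-driven (backpressured) delivery using only JDK classes.
final class BackpressureSketch {

    /** Asks for exactly one item at a time, so it is never flooded. */
    static final class OneAtATimeSubscriber implements Flow.Subscriber<Integer> {
        final List<Integer> received = new CopyOnWriteArrayList<>();
        final CountDownLatch done = new CountDownLatch(1);
        private Flow.Subscription subscription;

        @Override
        public void onSubscribe(Flow.Subscription subscription) {
            this.subscription = subscription;
            subscription.request(1); // initial demand
        }

        @Override
        public void onNext(Integer item) {
            received.add(item * 2);
            subscription.request(1); // signal readiness for the next item
        }

        @Override
        public void onError(Throwable failure) {
            done.countDown();
        }

        @Override
        public void onComplete() {
            done.countDown();
        }
    }

    static List<Integer> doubleAll(List<Integer> input) {
        OneAtATimeSubscriber subscriber = new OneAtATimeSubscriber();
        try (SubmissionPublisher<Integer> publisher = new SubmissionPublisher<>()) {
            publisher.subscribe(subscriber);
            // submit() buffers items and blocks if the subscriber's buffer fills up.
            input.forEach(publisher::submit);
        } // closing the publisher signals completion once buffered items are delivered
        try {
            if (!subscriber.done.await(10, TimeUnit.SECONDS)) {
                throw new IllegalStateException("stream did not complete in time");
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return List.copyOf(subscriber.received);
    }

    public static void main(String[] args) {
        System.out.println(doubleAll(List.of(1, 2, 3))); // prints [2, 4, 6]
    }
}
```

Frameworks in this category layer operators, scheduling, and error handling on top of this request-driven handshake. The handshake itself is what prevents a fast producer from overwhelming a slow consumer.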

Tools for Different Jobs: Differentiating from Message Queues

It's also crucial to clarify that tools like Apache Kafka and RabbitMQ are not direct competitors to Akka but are often complementary. A common point of confusion arises from the shared term "message." The roles can be distinguished as follows:

- Message Brokers (Kafka, RabbitMQ): These are the durable "postal service" for a microservices architecture. They are designed to reliably transport messages between independent services, decoupling them so they don't have to communicate directly. They excel at data pipelines and asynchronous inter-service communication.

- Akka: This is the "smart office worker" inside a service. Akka provides the computational model for processing messages, managing internal state (remembering past correspondence), handling failures, and performing complex business logic within the boundaries of a single service or a tightly coupled cluster.

In many high-performance architectures, these tools are used together. A service might use Kafka to receive a stream of events from other services, and then use Akka Streams internally to process that data in a highly concurrent and fault-tolerant manner.

Table 3: Strategic Alternatives to Akka

| Technology | License Model | Core Paradigm | When to Consider It |
|---|---|---|---|
| Akka | Commercial (BSL) | Actor Model | A business needs vendor support and a managed platform for a mission-critical, stateful, distributed system. |
| Apache Pekko | Open Source (Apache 2.0) | Actor Model | A business wants the power of the Actor Model without commercial licensing fees and is comfortable with community-driven support. |
| Project Reactor | Open Source (Apache 2.0) | Reactive Streams | An organization is heavily invested in the Spring ecosystem and needs to build non-blocking, reactive applications. |
| Vert.x | Open Source (Apache 2.0, EPL 2.0) | Event Loop | A business needs a flexible, high-performance, multi-language toolkit for building event-driven services, often with a focus on web APIs. |

6. When Should My Business Consider Akka (or Pekko)?

The decision to adopt a powerful, specialized technology like Akka or its open-source counterpart, Pekko, should be driven by a clear alignment between the technology's strengths and the business's most critical challenges. The following checklist can serve as a litmus test to help executives identify if their problems fall into the sweet spot for the Actor Model.

Consider Akka or Pekko if the answer is "yes" to several of these questions:

- Does the application need to manage the state of thousands, millions, or even billions of concurrent entities, such as IoT devices, online gaming players, user sessions, or financial instruments?

- Is low-latency, high-throughput data processing a critical business requirement where milliseconds matter, as in financial trading, real-time analytics, or ad-tech bidding platforms?

- Is "self-healing" and extreme fault tolerance non-negotiable because system downtime is unacceptably expensive or would cause a catastrophic loss of customer trust?

- Is the system inherently distributed, needing to scale across multiple servers, potentially in different data centers or cloud regions, while maintaining a consistent view of its state?

- Does the application involve complex, stateful streaming data pipelines that require more than simple data transformation, such as those involving feedback loops, complex event processing, or dynamic routing?

If your business is facing several of these challenges, you are likely grappling with the exact class of problems that Akka and Pekko were designed to solve. The next logical step is a deeper architectural analysis to weigh the immense benefits against the costs of complexity, training, and ownership. This is a process where an experienced partner like Baytech Consulting can provide invaluable guidance, ensuring the final decision is grounded in a comprehensive understanding of both the technology and the business objectives.

Conclusion: Making the Right Architectural Choice for Your Business

Akka and its open-source sibling, Apache Pekko, are exceptionally powerful, specialized frameworks for building the next generation of resilient, scalable software. They are not general-purpose tools for every problem. However, for the right class of high-stakes, high-scale, distributed systems, adopting the Actor Model can provide a significant and durable competitive advantage, enabling performance and reliability that are simply unattainable with traditional approaches.

The decision to adopt this technology is a major strategic one. It requires balancing immense technical capability against the realities of a steep learning curve, the need for specialized talent, and the total cost of ownership—which, in the case of Akka, includes potential licensing fees. It's a long-term architectural commitment that has profound implications for a company's ability to scale, innovate, and withstand failure.

Choosing a core application architecture is one of the most critical technology decisions a business can make. At Baytech Consulting, we specialize in helping businesses navigate these complex choices. Our expertise in custom software development and distributed systems allows us to partner with organizations to understand their unique business goals and design an architecture that is not only powerful but perfectly aligned with their strategy and budget.

Contact us today for a strategic consultation to discuss how to build a resilient, scalable future for your digital platforms.

About Baytech

At Baytech Consulting, we specialize in guiding businesses through architectural decisions like these, helping you build scalable, efficient, and high-performing software that evolves with your needs. Our MVP-first approach helps our clients minimize upfront costs and maximize ROI. Ready to take the next step in your software development journey? Contact us today to learn how we can help you achieve your goals with a phased development approach.

About the Author

Bryan Reynolds is an accomplished technology executive with more than 25 years of experience leading innovation in the software industry. As the CEO and founder of Baytech Consulting, he has built a reputation for delivering custom software solutions that help businesses streamline operations, enhance customer experiences, and drive growth.

Bryan’s expertise spans custom software development, cloud infrastructure, artificial intelligence, and strategic business consulting, making him a trusted advisor and thought leader across a wide range of industries.