AI Beyond Chatbots: The Executive Playbook for a Defensible Business Advantage

July 31, 2025 / Bryan Reynolds

Beyond the Hype: The Executive's Guide to the Next Wave of AI and Building a Defensible Business Advantage

Introduction: The AI Revolution is Not About Chatbots—It's About Rewiring Your Business

The ongoing discourse surrounding Artificial Intelligence often centers on generative tools that have captured the public imagination. However, to view this technological shift through the narrow lens of chatbots is to fundamentally misunderstand its magnitude. The AI revolution is a paradigm shift far greater than the internet revolution of the 1990s. While the internet connected people to information, AI is connecting intelligence to everything. Its impact is deeper, its reach is broader, and its potential to transform every facet of business and human life is exponentially larger.

The initial wave of AI adoption, characterized by widespread experimentation with tools like ChatGPT, was about exploring possibilities. The next, more profound wave is about strategic integration. It is about fundamentally rewiring the very fabric of how organizations operate, compete, and create value. The most significant returns on AI investment are realized not when it is treated as just another piece of technology—a static tool like an HR system—but when it is understood as a living, adaptive system. This system learns, grows, challenges organizational norms, and evolves alongside its environment, creating a dynamic feedback loop between the technology and its human users. According to Gartner, nearly 80% of AI implementations fall short of expectations precisely because of this flawed, tool-based perspective.

This transformation is not a speculative trend; it is a massive economic shift demanding executive attention. The global AI market is on a trajectory of explosive growth, with forecasts projecting it will exceed $1.8 trillion by 2030, expanding at a compound annual growth rate (CAGR) of over 35%. This report will guide executives through this evolving landscape, moving beyond the initial hype to provide a clear, strategic roadmap. It will begin by assessing the current state of AI adoption, then explore the next-generation model architectures that are poised to redefine what is possible. From there, it will delve into the rise of autonomous agents and their power to automate complex enterprise workflows. Crucially, it will analyze the central strategic decision facing every leader—leveraging generalist platforms versus investing in specialized, custom-built AI—and conclude with a practical playbook for building a durable, AI-powered competitive advantage through custom software solutions.

Section 1: The 2025 AI Landscape: From Widespread Adoption to True Integration

The AI landscape of 2025 is defined by a marked acceleration in both adoption and strategic maturity. What was once the domain of specialized tech teams has now become a central pillar of corporate strategy, driven from the top down. This shift has moved the conversation from "if" to "how," with a relentless focus on generating tangible business value.

The State of Play: AI is Now Table Stakes

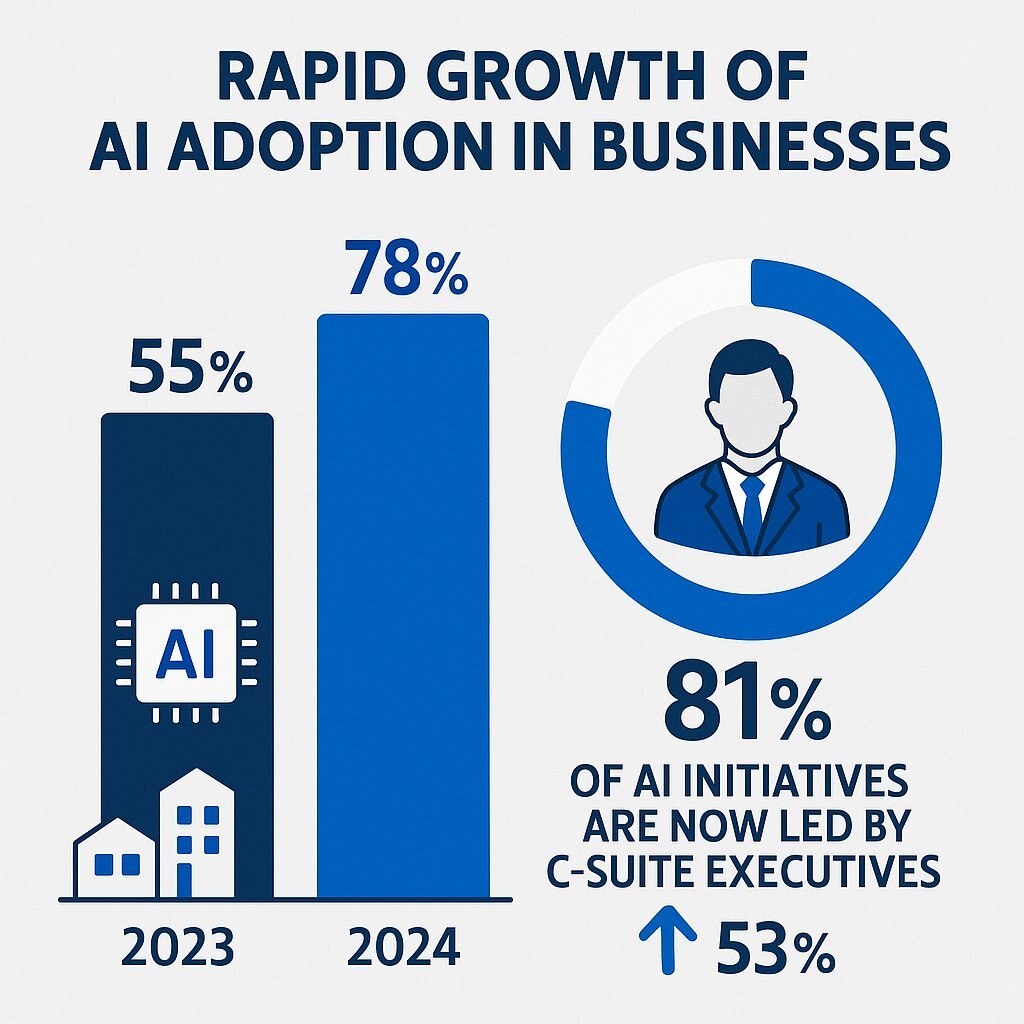

AI adoption is no longer a niche activity for early movers; it has become a standard component of modern business operations. More than three-quarters of organizations now report using AI in at least one business function, a significant leap propelled by the accessibility and rapid proliferation of generative AI tools. Data from 2024 shows that 78% of organizations were using AI, a dramatic increase from just 55% the previous year, highlighting the speed at which this technology is becoming ubiquitous.

This surge is not a grassroots movement bubbling up from IT departments. It is a C-suite-led mandate. According to a 2025 report on AI infrastructure, a staggering 81% of AI initiatives are now commanded by C-suite executives, a sharp increase from 53% in the prior year. This top-level ownership signifies a critical evolution in how AI is perceived: it has transitioned from a series of departmental experiments into a core element of corporate strategy, essential for growth and competitiveness. This executive sponsorship is backed by significant financial commitment, with 70% of organizations now devoting at least 10% of their total IT budgets to AI initiatives spanning software, hardware, and networking.

The New Mandate: Moving from "Using AI" to "Generating Value with AI"

With adoption now widespread, the focus for business leaders has matured beyond simple implementation to achieving measurable financial returns. The primary question is no longer "Are we using AI?" but "Is our AI generating value?" The data is unequivocal on what separates successful AI adopters from the rest: the single biggest factor affecting an organization's ability to see a positive impact on Earnings Before Interest and Taxes (EBIT) from generative AI is the redesign of business workflows. Organizations that fundamentally reshape their processes to leverage AI are the ones reaping the financial rewards.

This focus on value is accompanied by an intense pressure for speed. A majority of executives (51%) expect to see measurable financial benefits from their AI investments within the next year. While this urgency drives investment and accelerates innovation, it also introduces significant risk. A rush to deploy without proper planning can lead to failed projects, security vulnerabilities, and an inability to scale, ultimately undermining the very ROI that leaders are seeking.

The scale of the economic opportunity is immense, justifying the strategic focus and investment. The table below provides a concise overview of the AI market's trajectory, breaking down the overall market into its most dynamic sub-segments: Multimodal AI and Agentic AI, which represent the next frontier of value creation.

| Market Segment | 2025 Market Size (USD) | 2030-2034 Projected Size (USD) | CAGR (%) |
|---|---|---|---|
| Overall AI Market | ~$370 Billion | ~$1.8 Trillion (by 2030) | ~30-36% |
| Multimodal AI | ~$2.5 Billion | ~$42 Billion (by 2034) | ~37% |
| Agentic / Autonomous AI | ~$13.8 Billion | ~$103 Billion (by 2034) | ~39-42% |
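
For readers who want to sanity-check growth figures like those in the table, the sketch below computes the compound annual growth rate implied by two endpoint values. It assumes 2025 as the base year for every row and uses the rounded sizes from the table; because published forecasts often use different base years and sources, the implied rate will not necessarily match a quoted CAGR.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by a start value, an end value, and a span in years."""
    return (end_value / start_value) ** (1 / years) - 1


# (segment, 2025 size in $B, projected size in $B, target year), rounded from the table.
# Treating 2025 as the base year for every row is an assumption of this sketch.
segments = [
    ("Overall AI Market", 370.0, 1800.0, 2030),
    ("Multimodal AI", 2.5, 42.0, 2034),
    ("Agentic / Autonomous AI", 13.8, 103.0, 2034),
]

for name, start, end, target_year in segments:
    rate = implied_cagr(start, end, target_year - 2025)
    print(f"{name}: implied CAGR of about {rate:.1%}")
```

Where the implied rate differs noticeably from the quoted one, the two figures most likely come from different forecasts or base years, which is worth checking before either number goes into a business case.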

Beneath these headline numbers, a more nuanced and complex picture of the AI landscape emerges. The data reveals critical divergences and paradoxes that will define the competitive environment for years to come.

First, the competitive gap in AI is widening, but the nature of this gap has changed. The initial "AI chasm" was between companies that used AI and those that did not. Today, with adoption rates soaring, the chasm is between those who are merely using AI tools and those who are strategically rewiring their entire business around AI. Large enterprises are moving aggressively, with 21% already having fundamentally redesigned at least some workflows to integrate generative AI. They are centralizing governance for risk and compliance while using hybrid models to deploy tech talent, all in a concerted effort to drive EBIT impact. In stark contrast, small and medium-sized businesses (SMBs) are falling further behind. While over 90% of small business owners are optimistic about AI's potential, many admit they do not know how to start, leading to hesitation and fear. This creates a dangerous dynamic where the force-multiplying power of AI concentrates economic opportunity in the hands of a few large corporations, potentially stifling the broader ecosystem of innovation. This is not a gap of access to tools, which are more available than ever, but a gap of strategic and operational capacity to integrate them effectively. As large firms become more adept at AI integration, they generate more data and efficiencies, which in turn fuels better AI models, creating a virtuous cycle that SMBs will find increasingly difficult to penetrate.

Second, a dangerous "AI Maturity Paradox" has taken hold at the executive level, where confidence is dramatically outpacing foundational readiness. C-suite confidence in their organization's ability to execute on AI has surged from 53% to 71% in just one year. Similarly, 94% of organizations express confidence in their AI infrastructure roadmaps. Yet, a look at the underlying operational realities reveals critical weaknesses. Only 14% of leaders believe they have the right talent to meet their AI goals, a gap that is worsening. Nearly half of organizations (42%) report they have insufficient proprietary data to properly customize models. A similar number (48%) admit to having gaps in their policies for detecting AI bias, and 55% say AI adoption has increased their vulnerability to cyber threats. Furthermore, despite high confidence in roadmaps, only 17% are planning their data center and infrastructure needs more than three years out, a dangerously short-sighted approach given the long lead times for securing power and capacity. This high-confidence, low-readiness state is a leading indicator of future trouble. It suggests a coming wave of AI projects that will fail to deliver on their promised ROI, suffer from significant security or bias incidents, or stall because they cannot scale. The executive mandate for rapid returns will only amplify this risk, creating pressure to prioritize speed over the robust foundational work required for sustainable success.

Section 2: The Future of Models: What's Coming After Transformers?

The current era of generative AI has been overwhelmingly defined by a single, powerful architecture: the Transformer. This model design, which introduced a "self-attention" mechanism, gave systems like GPT-4o and BERT their remarkable ability to understand context and nuance in language. However, for all their power, Transformers have fundamental architectural limitations that create significant business challenges. Their computational and memory requirements scale quadratically with the length of the input sequence, meaning that doubling the length of a document quadruples the processing cost. This results in high operational costs, slow processing times for long inputs, and constrained capabilities, creating a bottleneck for innovation.
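
The quadratic relationship can be illustrated with a deliberately simplified cost model. The sketch below only counts the token-to-token scores that full self-attention computes; it ignores constant factors and any optimizations, and the token counts are hypothetical.

```python
def attention_pair_count(sequence_length: int) -> int:
    """Number of token-to-token scores in one full self-attention pass."""
    return sequence_length * sequence_length


base = attention_pair_count(4_000)      # hypothetical document of 4,000 tokens
doubled = attention_pair_count(8_000)   # the same document at twice the length

print(doubled / base)  # 4.0: twice the input, four times the pairwise work
```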

To overcome these hurdles, the research community is developing a new generation of AI architectures. This marks a pivotal shift in the AI landscape. The era of a single, dominant, general-purpose architecture is giving way to a future defined by a portfolio of specialized models. For business leaders, this means the strategic challenge is no longer about finding the next GPT, but about understanding this emerging "toolbox" of architectures and knowing which tool to deploy for which business problem.

The Emerging Architectures: A Specialized Toolbox for Business

The next wave of AI models is not about replacing Transformers wholesale, but about providing specialized solutions that excel where Transformers struggle. Three key architectures are leading this charge: Multimodal AI, Diffusion Models, and State Space Models.

A. Multimodal AI: The Convergence of Senses

What it is: Multimodal AI represents a significant leap toward more human-like comprehension by processing and integrating multiple types of data—such as text, images, audio, and video—within a single, unified system. Instead of analyzing a text description in isolation, a multimodal model can look at a picture, listen to a spoken command about that picture, and generate a relevant text response. This allows for a more holistic and contextually rich understanding of information. Leading models in this space include Claude 3, which demonstrates impressive accuracy in understanding complex visual data like charts and diagrams.

Business Impact: The ability to fuse different data streams is a game-changer for a wide range of industries, enabling richer context and entirely new user experiences.

- Intelligent Document Processing: In sectors like finance and healthcare, multimodal AI can automate the extraction of structured data from complex documents that mix text, tables, handwriting, and images, such as invoices, insurance forms, or medical records.

- Enhanced Customer Service: A service agent can gain a complete picture of a customer's sentiment by analyzing not just their written words in a chat, but also the tone of their voice and their facial expressions on a video call, leading to more empathetic and effective interactions.

- Smarter Retail and E-commerce: Multimodal systems power features like Amazon's StyleSnap, which allows a user to upload a photo of an outfit and use a text query to ask for similar, more affordable options, blending computer vision with natural language understanding to create a highly personalized shopping experience.

- Insurance Fraud Detection: Insurers can drastically reduce fraud by using multimodal models to cross-reference a diverse set of data sources, including text-based customer statements, transaction logs, and supplemental photos or videos of claimed damages.

B. Diffusion Models: Beyond Images to Parallel Generation

What it is: Diffusion models are best known as the engine behind the explosion in high-quality, AI-generated art, powering tools like Stable Diffusion and DALL-E 3. This architecture works through a dual-phase process: during training, random "noise" is systematically added to data until it becomes unrecognizable, and a neural network is trained to reverse this process, starting from pure noise and progressively refining it into a coherent, high-fidelity output. While pioneered for images, cutting-edge research is now successfully applying this methodology to text. Instead of generating text sequentially one word at a time, these models can generate and refine an entire sequence in parallel, akin to a writer drafting a rough paragraph and then editing it holistically.
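
The noising half of that process is simple enough to sketch. The example below follows the commonly used variance-preserving formulation, in which clean data is blended with Gaussian noise according to a "keep fraction". The toy data and the three keep fractions are hypothetical, and the learned reverse (denoising) network, which is the hard part, is omitted entirely.

```python
import math
import random


def add_noise(values, keep_fraction, rng):
    """Blend clean data with Gaussian noise.

    keep_fraction near 1.0 leaves the data almost intact;
    keep_fraction near 0.0 leaves almost pure noise.
    """
    signal_scale = math.sqrt(keep_fraction)
    noise_scale = math.sqrt(1.0 - keep_fraction)
    return [signal_scale * v + noise_scale * rng.gauss(0.0, 1.0) for v in values]


rng = random.Random(0)           # fixed seed so runs are repeatable
clean = [1.0, -1.0, 0.5, 0.0]    # hypothetical toy "data"

for keep_fraction in (0.99, 0.5, 0.01):
    noisy = add_noise(clean, keep_fraction, rng)
    print(keep_fraction, [round(v, 2) for v in noisy])
```

A trained diffusion model runs this in the other direction: it starts from the fully noised end and removes a little noise at each step.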

Business Impact: For business applications, the core advantages of text-based diffusion models are unprecedented speed and granular control.

- Extreme Speed: By generating text in parallel, these models can achieve speeds of over 1,000 tokens per second on a single high-end GPU, making them ideal for latency-sensitive, real-time applications like interactive coding assistants or dynamic chatbot responses.

- Fine-Grained Control: The diffusion process allows for "plug-and-play" adjustments to the output's characteristics—such as style, sentiment, or topic—without needing to retrain the entire model. This is incredibly valuable for applications like generating personalized marketing copy on the fly or ensuring brand voice consistency in automated content.

- Creative Industries: Beyond text, diffusion models continue to revolutionize creative workflows in graphic design, film production, and music generation by allowing artists and designers to rapidly generate a wide range of high-quality assets, concepts, and prototypes from simple descriptions.

C. State Space Models (SSMs): The "Long Memory" Architecture

What it is: State Space Models are a revolutionary architecture, exemplified by models like Mamba, that draws inspiration from control systems engineering, a field with roots in the Apollo space program. SSMs are designed to process sequences with linear-time complexity. This means that doubling the input length only doubles the computation, a massive improvement over the Transformer's quadratic scaling. This efficiency makes them exceptionally adept at handling extremely long sequences of data. They work by compressing the entire history of a sequence into a compact "state" and updating it recurrently, allowing them to effectively "remember" information over vast distances.
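
The "compact state, updated recurrently" idea can be shown with a scalar toy. This is a minimal sketch of a linear state space recurrence with fixed, hypothetical coefficients `a`, `b`, and `c`; production models such as Mamba use learned, input-dependent parameters and far larger states, so this illustrates the principle and not any real implementation.

```python
def run_state_space(inputs, a=0.9, b=0.1, c=1.0):
    """Scalar linear state space recurrence.

        state_t = a * state_(t-1) + b * x_t
        y_t     = c * state_t

    Each input triggers one constant-cost update, so total work grows
    linearly with sequence length.
    """
    state = 0.0
    outputs = []
    for x in inputs:
        state = a * state + b * x   # fold the new input into the running summary
        outputs.append(c * state)
    return outputs


# A single impulse followed by silence: the state keeps a fading memory of it.
signal = [1.0] + [0.0] * 4
print(run_state_space(signal))
```

The memory of the first input decays gradually instead of vanishing, and the cost per step never depends on how much history came before.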

Business Impact: SSMs directly solve the Transformer's critical "memory" and efficiency problems, unlocking new strategic capabilities.

- Massive Context Window: SSMs can process sequences of a million tokens or more, far surpassing the capabilities of most standard Transformers. This enables deep, comprehensive analysis of previously intractable documents, such as lengthy legal contracts, multi-year financial reports, entire software codebases, or extensive patient medical histories.

- Superior Cost and Speed Efficiency: Because they are attention-free, SSMs are significantly faster and cheaper to run for inference than Transformers of an equivalent size. This has a direct and positive impact on the total cost of ownership and ROI of large-scale AI deployments.

- Real-Time Data Stream Analysis: Their inherent efficiency and recurrent nature make them perfectly suited for analyzing continuous time-series data, a task that is challenging for Transformers. This opens up applications in monitoring financial market fluctuations, processing IoT sensor data for predictive maintenance, or analyzing DNA sequences.

The emergence of these diverse architectures presents a critical strategic choice for technology leaders. The path forward involves understanding the distinct strengths and weaknesses of each model type and mapping them to specific business needs, as summarized in the table below.

| Architecture | Core Strength | Ideal Business Use Case | Key Limitation | Relative Inference Cost |
|---|---|---|---|---|
| Transformer | Sophisticated reasoning, broad knowledge | Complex Q&A, general content creation | Quadratic scaling cost, limited context | High |
| Multimodal AI | Holistic understanding from diverse data | Insurance fraud detection (analyzing text, photos, logs) | High data integration complexity | High |
| Diffusion Model | Parallel generation, speed, creative control | Real-time coding assistant, personalized ad creative | Computationally intensive training | Medium-High |
| State Space Model (SSM) | Extreme context length, linear efficiency | Analyzing entire codebases, financial time-series data | Still emerging, less mature ecosystem | Low |

The development of these new architectures also reveals a deeper strategic trend. The central weakness of the Transformer model is its limited and computationally expensive memory, represented by its context window. The research community is pursuing two distinct paths to solve this problem. The first path is a fundamental architectural solution, embodied by State Space Models like Mamba, which redesigns the model from the ground up to build efficient memory into its core design. This approach aims to create a new class of models that are inherently better at handling long sequences.

The second path is a hybrid solution. This approach keeps the powerful and mature Transformer architecture as the "brain" but augments it with an external, queryable memory system. Techniques like Retrieval-Augmented Generation (RAG), which allows a model to fetch information from a database, and more advanced concepts like Compressive Transformers or Titans, which have an explicit long-term memory module, fall into this category. This is akin to giving a brilliant but forgetful expert a notebook and access to a library. This divergence presents a critical strategic choice for a CTO: Is it better to bet on a new, potentially superior core architecture that is still maturing? Or is it wiser to stick with the well-understood Transformer ecosystem and invest heavily in the sophisticated data infrastructure required to build a hybrid memory solution? The optimal path will depend on an organization's specific use cases, existing technology stack, and tolerance for risk.
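
To make the "notebook and library" analogy concrete, here is a minimal sketch of the retrieval step in a RAG pipeline. It ranks documents by simple word overlap as a stand-in for the vector search a real system would use, and it stops at building the prompt; the knowledge base, function names, and prompt wording are all hypothetical.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase a string and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: list[str], top_k: int = 1) -> list[str]:
    """Rank documents by word overlap with the question (stand-in for vector search)."""
    question_words = tokenize(question)
    ranked = sorted(
        documents,
        key=lambda doc: len(question_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(question: str, documents: list[str]) -> str:
    """Place the retrieved text in front of the question before it goes to the model."""
    context = "\n".join(retrieve(question, documents))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


knowledge_base = [
    "Refunds are issued within 14 days of a return request.",
    "Support is available on weekdays from 9am to 5pm.",
]
print(build_prompt("When are refunds issued?", knowledge_base))
```

The model itself is unchanged in this design. The investment goes into the data layer that decides which passages reach the prompt.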

Section 3: The Action Layer: How Autonomous Agents Will Automate Your Enterprise

While new model architectures are expanding what AI can understand, the most profound business impact will come from what AI can do. This is the domain of Agentic AI. The leap from generative AI to agentic AI is the leap from analysis to action. It is the difference between a brilliant consultant who can write a detailed report on how to fix a supply chain bottleneck and an autonomous operations manager who takes that report, devises a multi-step plan, coordinates with the ERP and logistics software, and executes the fix with minimal human oversight.

For business leaders, it is crucial to grasp this distinction. An autonomous AI agent is not merely a more conversational chatbot; it is an autonomous, goal-driven software entity. It is designed to perceive its digital environment, reason about a desired outcome, formulate a plan, and execute that plan by interacting with other software applications and tools. This ability to act independently to achieve complex goals is what sets agentic AI apart and positions it as the engine for the next wave of enterprise automation. The market forecasts reflect this potential, with the agentic AI market projected to soar from approximately $13.8 billion in 2025 to over $140 billion by 2032, demonstrating a powerful enterprise conviction in its capabilities.

Core Capabilities of an AI Agent

The power of an AI agent stems from a unique combination of capabilities that traditional AI systems lack.

- Autonomous Decision-Making: Unlike a simple script that follows a rigid path, an agent can evaluate a situation and decide on the next best action without waiting for a human prompt. It possesses the agency to determine how to achieve a goal it has been given.

- Multi-Tool Coordination: This is arguably the killer feature for enterprise automation. Agents are not confined to a single application. They are designed to orchestrate complex workflows across a multitude of enterprise systems—your Customer Relationship Management (CRM), Enterprise Resource Planning (ERP), internal databases, and external APIs—acting as a digital connective tissue.

- Goal-Oriented Intelligence: You don't give an agent a script; you give it an objective. For example, instead of a linear set of instructions, an agent can be tasked with a high-level goal like "increase customer satisfaction scores by 10%" or "reduce time-to-resolution for IT tickets." The agent then reasons backward from that outcome, dynamically creating and adjusting its plan to achieve it.

- Adaptive Learning and Error Handling: Traditional AI models often fail silently or require a new prompt if their first attempt at a task is unsuccessful. An autonomous agent, by contrast, can evaluate the success of its actions in real-time. If an initial attempt to resolve an issue fails, it can try an alternative approach without waiting for human instruction, learning from context and refining its behavior with each task.
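
The capabilities above can be sketched as a small control loop: the agent receives a goal, tries the tools available to it, checks whether each attempt worked, and escalates only when it runs out of options. This is a simplified illustration. The two tool functions are hypothetical stubs with hard-coded outcomes, and a real agent would use a model to choose and order actions where this sketch walks a fixed list.

```python
from typing import Callable


def restart_service() -> bool:
    """Hypothetical tool: pretend the restart did not fix the problem."""
    return False


def reallocate_resources() -> bool:
    """Hypothetical tool: pretend reallocating resources fixed the problem."""
    return True


def run_agent(goal: str, actions: list[tuple[str, Callable[[], bool]]]) -> dict:
    """Try each available action until one succeeds; escalate if none do."""
    attempted = []
    for name, action in actions:
        attempted.append(name)
        if action():   # evaluate the outcome before deciding what to do next
            return {"goal": goal, "status": "resolved", "attempted": attempted}
    return {"goal": goal, "status": "escalated_to_human", "attempted": attempted}


result = run_agent(
    "restore checkout service",
    [
        ("restart_service", restart_service),
        ("reallocate_resources", reallocate_resources),
    ],
)
print(result)
```

Each entry in the action list stands in for an API the agent is permitted to call, so the list is only as long as the organization's integrations allow.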

Agentic AI in Action: Transforming Business Functions

The theoretical power of agentic AI is already translating into tangible value across core business functions, automating entire value chains and creating new levels of operational efficiency.

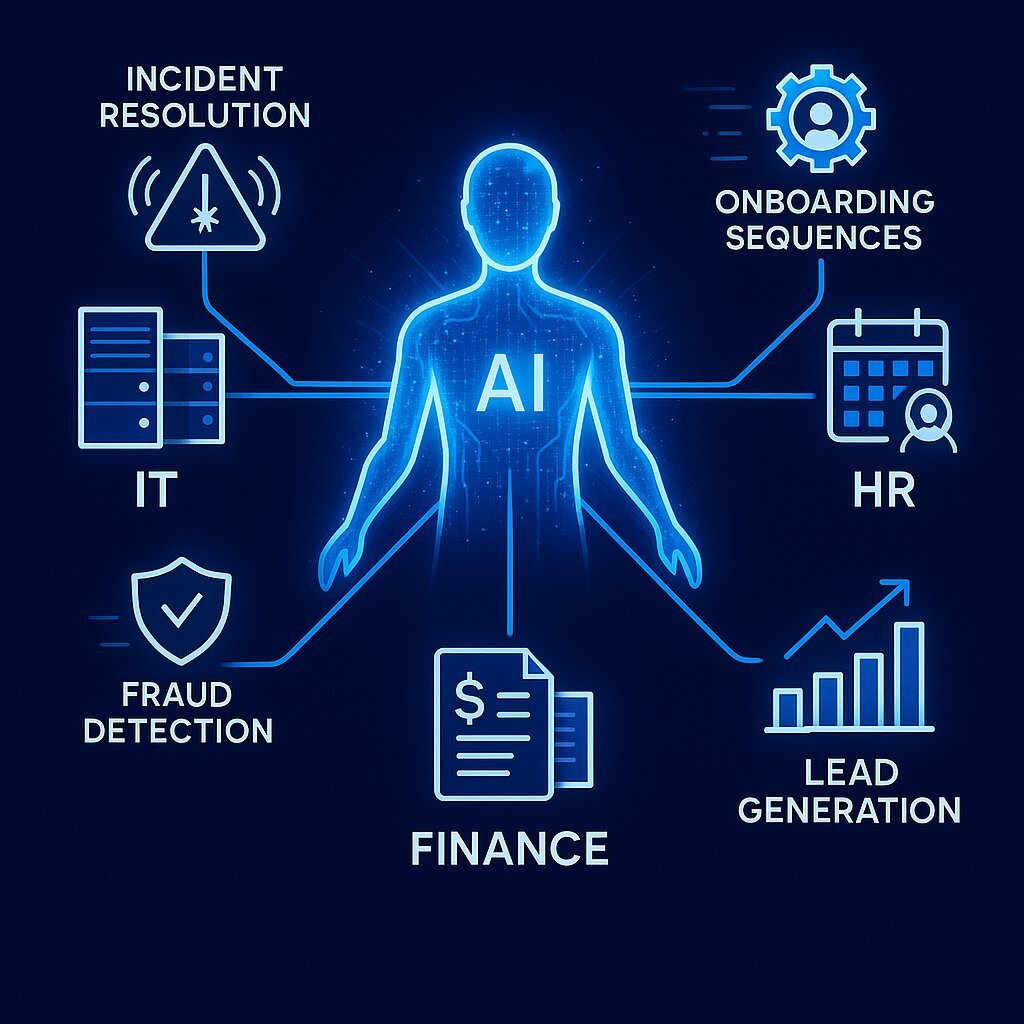

- IT Support & Service Management: An agent can autonomously handle a full incident lifecycle. Upon detecting a system outage, it can analyze diagnostic logs, attempt a series of escalating fixes (like restarting a service or reallocating resources), and only create a ticket and escalate to a human engineer if its own attempts fail. This dramatically reduces mean-time-to-resolution and frees up senior engineers to focus on more complex architectural problems.

- Finance & Accounting: In fraud detection, an agent can go far beyond simple flagging. It can identify a suspicious transaction, autonomously retrieve the customer's transaction history from the database, cross-reference it with other data, trigger a multi-factor authentication request to the user's device via an API, and then, based on the combined results, either approve or block the transaction and freeze the account.

- Human Resources: The complex, multi-day process of onboarding a new employee can be fully automated. An agent can be tasked with "onboard new hire Jane Doe." It would then interact with various systems to collect necessary documents, create user accounts in HRIS, Active Directory, and Slack, grant appropriate system permissions based on role, and schedule orientation meetings in the calendars of relevant team members.

- Sales & Marketing: An agent can function as a tireless AI Sales Development Representative (SDR). Tasked with a goal like "generate 10 qualified leads this week," it can scrape professional networking sites for prospects matching a certain profile, find their contact information, write highly personalized outreach emails by referencing their recent online activity, and manage the follow-up sequence until a meeting is booked. Playmaker, an AI sales rep tool, has demonstrated the ability to achieve a 9.5% reply rate on cold outreach by writing emails that feel 1-to-1.

The rise of agentic AI fundamentally changes the calculus of business investment. The business case for the first wave of generative AI primarily focused on "generative" ROI—productivity gains from automating discrete tasks, such as writing marketing copy or summarizing a meeting faster. The value was measured in saved hours. The business case for agentic AI is about "operative" ROI. It focuses on automating entire, complex, multi-step processes and even enabling new, fully autonomous business models. The unit of value shifts from a "task" to a "process" or an "outcome." Consequently, the ROI calculation evolves from "How much time did we save our marketing team?" to "How much did we increase revenue by creating a fully automated, 24/7 sales prospecting and qualification engine?" This represents a potential 10x or 100x larger value proposition. This requires a strategic shift in perspective from the C-suite, particularly the CFO, to view AI not as a cost center to be optimized, but as a revenue-generating asset to be invested in for long-term growth.

However, this immense potential comes with a critical prerequisite. An agent's power is directly proportional to the number and quality of the tools it can access. In a business context, these "tools" are the APIs that connect to enterprise software systems. An agent that cannot read from your CRM, write to your ERP, or query your product database is effectively useless for automating core business processes. This reality means that a company's internal API infrastructure is no longer a mere technical concern for the CTO; it is a direct and critical enabler—or blocker—of advanced AI automation. Organizations with modern, well-documented, and comprehensive API layers will be able to deploy powerful agents quickly and effectively, creating a significant competitive advantage. Conversely, companies saddled with siloed legacy systems and poor API hygiene will face a massive "integration tax"—a costly and time-consuming effort to build the digital connective tissue required for agents to function. This reframes API strategy from a technical best practice to a crucial prerequisite for future competitiveness.

Section 4: The Strategic Crossroads: Generalist Platforms vs. Specialized Custom Solutions

As AI becomes deeply embedded in corporate strategy, every executive faces a central dilemma that will define their organization's competitive posture for the next decade. The choice is between relying on powerful but generic, off-the-shelf AI platforms—such as the public API versions of models like GPT-4o or Claude 3—and making the strategic investment to build specialized AI models that are finely tuned to the unique data, workflows, and objectives of the business. This is not merely a technical decision; it is a fundamental strategic choice between convenience and competitive differentiation.

The Case for Generalist AI: The Path of Convenience

The appeal of generalist AI models is undeniable. They are the default choice for a reason: they are powerful, incredibly versatile, and easily accessible through APIs, requiring minimal upfront engineering effort. For a vast majority of users, the path of least resistance leads to these platforms; research shows that 91% of AI users reach for their favorite general tool for nearly every job.

This approach is exceptionally effective for a wide range of low-stakes, broad applications that do not rely on sensitive proprietary data. These include tasks like brainstorming marketing slogans, drafting generic internal communications, summarizing public news articles, or providing employees with a general-purpose productivity assistant. In this capacity, generalist platforms act as powerful force multipliers for individual productivity and are an excellent way to democratize AI within an organization, allowing for rapid experimentation and fostering a culture of AI literacy with low initial costs.

The Case for Specialized AI: The Path to a Defensible Moat

While generalist models offer breadth, specialized models offer depth. A specialized AI model is one that has been explicitly designed, trained, or fine-tuned to excel at a specific task or within a particular domain. This specialization is the key to unlocking a durable, defensible competitive advantage in the age of AI. While competitors can easily access the same public APIs, they cannot replicate a solution built on a company's unique data and processes. The key advantages of this approach create a powerful business moat:

- Superior Performance and Accuracy: A model's performance is a direct function of the data it is trained on. A specialized model fine-tuned on a company's historical financial transaction data will be orders of magnitude more accurate at detecting fraudulent patterns specific to that business than a generalist model trained on the open internet. This superior performance in core business functions is a direct competitive advantage.

- Cost-Efficiency at Scale: The "build vs. buy" calculation for AI is often counterintuitive. While the upfront investment for developing a specialized model can be higher, the long-term operational costs are often significantly lower. Specialized models are typically smaller and more streamlined, requiring less computational power for each inference. For high-volume tasks—like analyzing millions of customer support tickets or processing thousands of insurance claims per day—the cumulative cost of using an expensive, general-purpose API can quickly exceed the initial cost of building a more efficient, tailored solution.

- Data Privacy and Security: This is a non-negotiable for many industries. When using a third-party API, a company is sending its data—and potentially its customers' data—to an external provider. For sectors like healthcare, finance, and legal services, this presents an unacceptable risk. Building a specialized model that can be hosted on-premise or within a private cloud environment ensures that proprietary and sensitive data remains securely within the organization's control.

- Greater Longevity and Adaptability: Relying on a third-party generalist model means being subject to the provider's update schedule and model changes, which can deprecate features or break established workflows overnight with no warning. A custom-built, specialized AI system is a modular asset. It is easier to update, retrain, or replace a specific component of a proprietary system in response to changing business needs or new technological advancements, providing greater agility and long-term strategic control.

The decision between these two paths is one of the most critical an executive team will make. The following table provides a framework for weighing the trade-offs.

| Factor | Generalist AI (e.g., Public GPT-4 API) | Specialized AI (e.g., Custom Fine-Tuned Model) |
|---|---|---|
| Primary Advantage | Speed to market, broad capabilities, low upfront effort | High accuracy, defensible competitive moat, data privacy |
| Primary Disadvantage | No competitive moat, high operational costs at scale, data privacy concerns | Higher upfront investment, longer development cycle, narrow scope |
| Cost Profile | Low upfront cost, high and unpredictable operational (inference) costs | Higher upfront cost, low and predictable operational costs |
| Best For (Scenario) | Prototyping, non-core tasks, employee productivity tools | Core business processes, tasks using proprietary data, high-volume automation |

This strategic choice highlights a fundamental tension in the AI-driven economy: the "Commodity vs. Moat" dilemma. The capabilities of generalist AI are rapidly becoming a commodity. When every one of your competitors is using the same handful of public AI tools, which are often built on the same underlying foundation models, no one gains a lasting advantage. It creates a level playing field, but one where it is impossible to pull ahead. True, defensible moats in the AI era will therefore be built not on access to the AI model itself, but on the unique and proprietary combination of a specialized model, proprietary data, and tailored workflows. The strategic imperative for leadership is to identify the one or two core processes within their business where a custom-built AI can create a 10x improvement in efficiency, cost, or customer experience. This is where strategic investment should be focused to build a moat. For everything else, leveraging the commodity tools is the sensible approach. This necessitates a sophisticated portfolio strategy for AI investment, not a one-size-fits-all solution.

Furthermore, the economics of AI are inverting the traditional "build vs. buy" calculation for software. Historically, buying off-the-shelf software was seen as the cheaper, faster option compared to building a custom solution. For high-volume AI processes, this is no longer necessarily true. The long-term, recurring operational costs of making millions of API calls to a powerful but inefficient generalist model can quickly balloon, ultimately dwarfing the one-time upfront cost of developing a smaller, more efficient specialized model. A CFO analyzing the total cost of ownership (TCO) for a core, high-throughput process may now find that the business case for a "built" custom solution is financially superior to "buying" API access. This represents a fundamental shift in the logic of IT procurement and investment strategy that every business leader must understand.
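The TCO comparison described above comes down to simple break-even arithmetic. The following is a minimal sketch of that calculation, not a financial model: every figure in it (the build cost, the per-call API price, the monthly cost of running the custom model, and the call volumes) is a hypothetical input chosen only to illustrate the logic, and the function name `break_even_month` is likewise an assumption.

```python
import math
from typing import Optional


def break_even_month(
    build_cost: float,
    custom_monthly_cost: float,
    api_cost_per_call: float,
    calls_per_month: int,
) -> Optional[int]:
    """Return the first month in which cumulative API spend is at least the
    cumulative cost of the custom model, or None if that never happens."""
    api_monthly_cost = api_cost_per_call * calls_per_month
    # What the custom model saves each month once it is running.
    monthly_saving = api_monthly_cost - custom_monthly_cost
    if monthly_saving <= 0:
        # At this volume the API is cheaper to run, so building never pays back.
        return None
    return math.ceil(build_cost / monthly_saving)


# Hypothetical inputs, chosen only to illustrate the arithmetic.
high_volume = break_even_month(
    build_cost=250_000,
    custom_monthly_cost=6_000,
    api_cost_per_call=0.02,
    calls_per_month=2_000_000,
)
low_volume = break_even_month(250_000, 6_000, 0.02, 100_000)
print(high_volume, low_volume)  # prints "8 None"
```

Under these assumed numbers, the high-volume process recovers its build cost in month 8, while the low-volume process never does. That mirrors the argument in the text: the case for "build" depends on throughput, so the calculation should be run per process rather than once for the whole organization.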

Section 5: Building Your AI Advantage: The Custom Software Development Playbook

Understanding the strategic landscape and the nuances of emerging AI technologies is the first step. The second, more critical step is execution. For organizations aiming to build a genuine, defensible competitive advantage, the path inevitably leads to custom solutions. Custom software development serves as the essential bridge, translating the raw potential of AI models into a tangible, integrated, and profitable business engine. An off-the-shelf AI tool is a component you can rent; a custom AI solution is a strategic asset you own, built into the very chassis of your business.

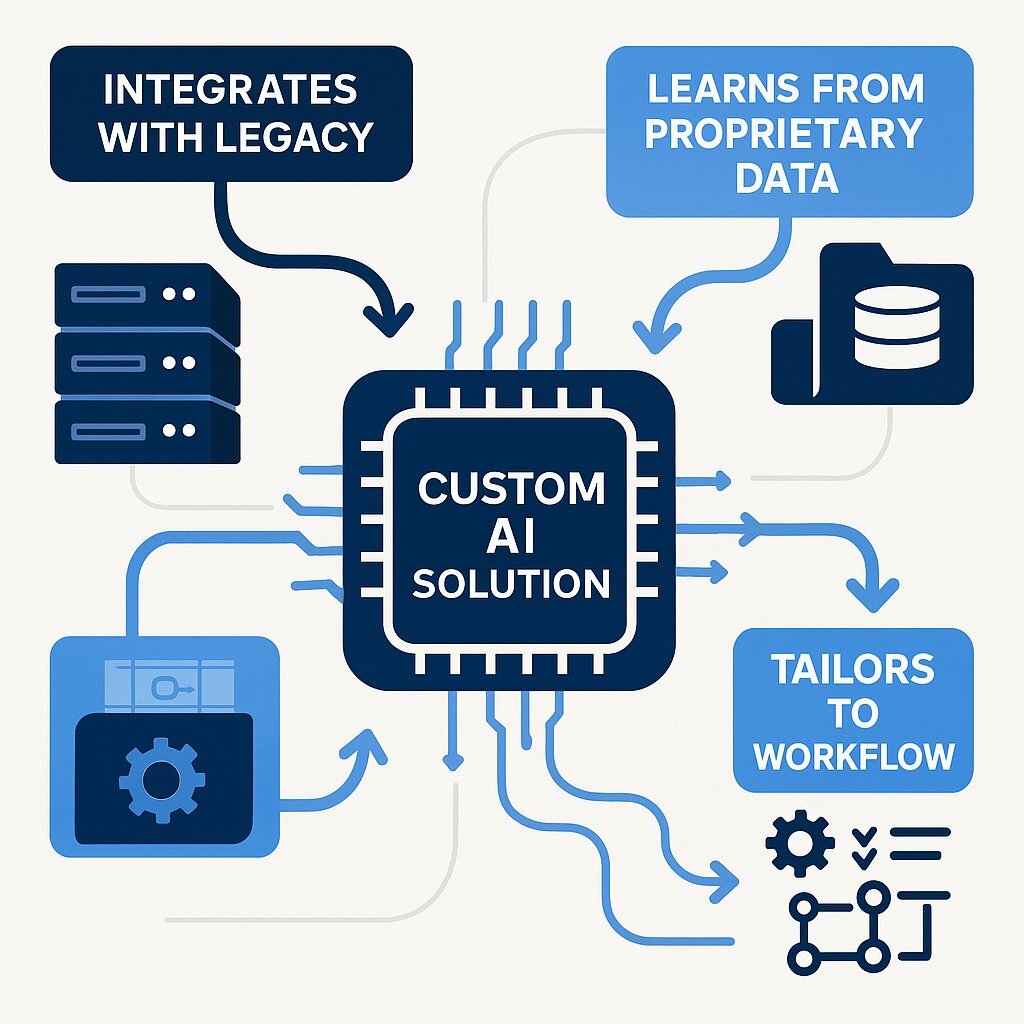

The primary role of custom development is to solve the "last mile" problem of AI integration. It allows a business to:

- Integrate with Complex and Legacy Systems: AI models are most powerful when they can interact with a company's core systems of record. Custom development enables the seamless connection of advanced AI capabilities with existing, often proprietary, ERPs, CRMs, and databases, something generic tools cannot do.

- Leverage Proprietary Data: An organization's most valuable and unique asset is its data. Custom solutions can be designed to train on and learn from this proprietary data, creating a virtuous cycle where the AI becomes progressively smarter and more attuned to the specific nuances of the business, building a moat that is impossible for competitors to replicate.

- Tailor to Unique Workflows: Every business has unique processes that are a source of its competitive edge. Rather than forcing these processes to conform to the rigid constraints of a generic tool, custom development allows for the creation of AI that perfectly matches, augments, and optimizes those specific workflows.

How AI is Revolutionizing the Development Process Itself

A primary concern for executives considering custom solutions is the perceived high cost and long development timelines. However, AI is not just the output of modern software development; it is also a powerful catalyst that is making the process of development itself dramatically faster, cheaper, and more efficient.

- AI-Powered Requirements and Design: The initial phase of a project, gathering requirements, can be accelerated and improved with AI. Tools can now analyze user behavior data, historical project documents, and industry trends to suggest key features and functionalities, leading to more accurate and user-focused initial plans.

- Automated Code Generation: The act of writing code is being transformed. AI-powered tools like GitHub Copilot, acting as a "pair programmer" for developers, can generate high-quality code snippets and even entire functions from simple natural language commands. This significantly speeds up the development process and reduces the likelihood of human error. In a proof-of-concept at Goldman Sachs, developers have written as much as 40% of their code automatically using generative AI, freeing them to focus on higher-level system architecture and complex logic.

- Intelligent Testing and DevOps: Quality assurance and deployment are also being augmented by AI. AI-based testing tools can automatically generate test cases, intelligently prioritize areas of the code to test, and even predict potential bugs or system failures before they happen. This leads to more resilient and reliable software, delivered through faster and more automated CI/CD pipelines.

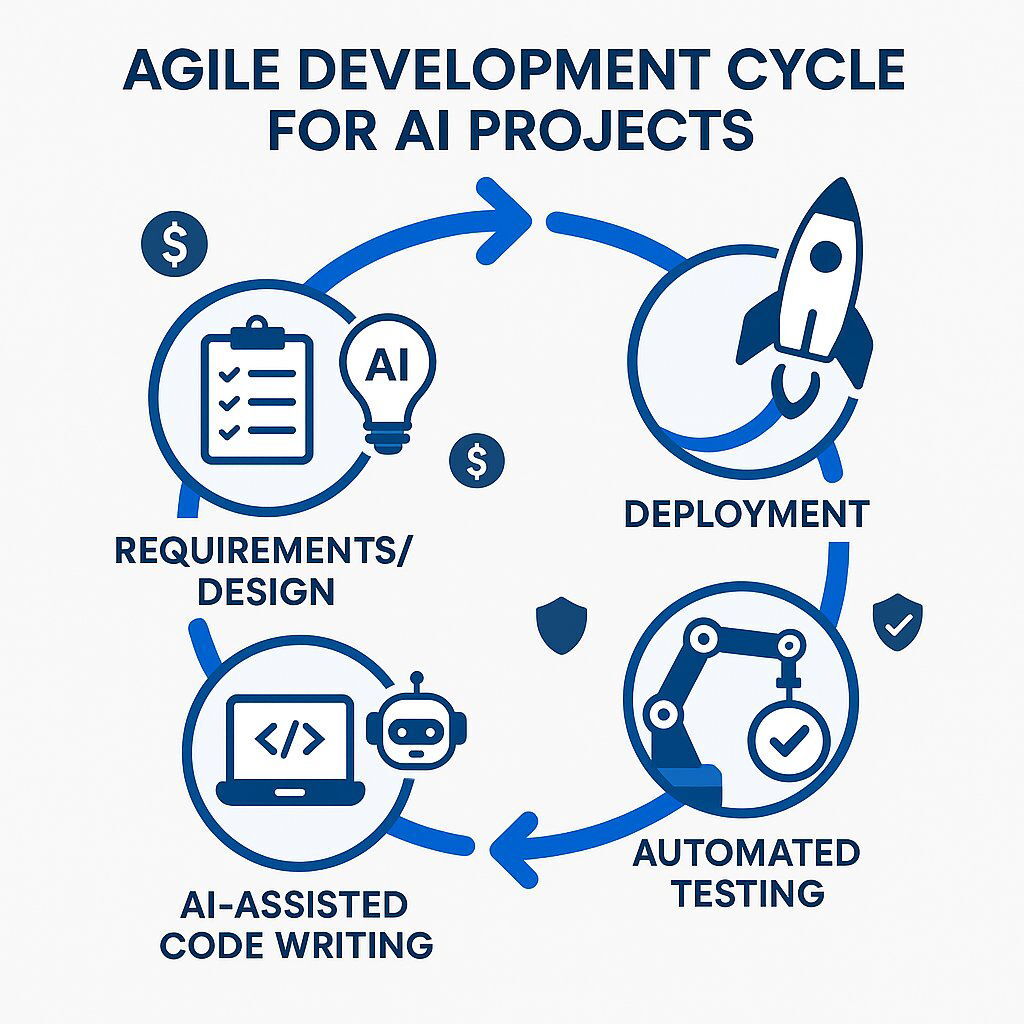

Why Agile is Non-Negotiable for AI Projects

The nature of AI development is fundamentally different from traditional software engineering. It is not a linear process with fixed requirements; it is inherently experimental, iterative, and data-driven. When embarking on an AI project, the requirements, the data's suitability, and even the ultimate viability of the model are often unknown at the outset. It is as much a research project as it is a development project.

This inherent uncertainty makes traditional "waterfall" project management, with its rigid upfront planning, a recipe for disaster. The only viable methodology is Agile. Agile frameworks like Scrum and Kanban are designed specifically for navigating uncertainty. They prioritize flexibility, rapid iteration in short cycles (sprints), and close, continuous collaboration between a cross-functional team of data scientists, developers, and business stakeholders. This approach allows a team to build a Minimum Viable Product (MVP) or a Proof-of-Concept (PoC), test it against real data and user feedback, learn from the results, and then pivot or persevere. For a CFO or CEO, this is not just a project management preference; it is a crucial financial risk management framework. By structuring an AI initiative in small, iterative Agile sprints, a business can invest a modest amount to test a core hypothesis. If the hypothesis fails, the project can be stopped with minimal financial loss. If it succeeds, the business gains the data-backed confidence to invest in the next, more ambitious iteration. This de-risks innovation and ensures that investment is tied directly to validated learning and tangible progress.
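The risk-management argument can be made concrete with a small expected-value calculation. The sketch below is illustrative only: the stage names, stage costs, and success probabilities are assumptions invented for the example, as are the `Stage` class and the `expected_staged_spend` function. It compares committing the full budget upfront with funding each stage only after the previous one has validated its hypothesis.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Stage:
    name: str
    cost: float
    p_success: float  # assumed chance that this stage validates its hypothesis


def expected_staged_spend(stages: List[Stage]) -> float:
    """Expected spend when each stage is funded only if the previous one succeeded."""
    expected = 0.0
    p_reached = 1.0  # the first stage is always funded
    for stage in stages:
        expected += p_reached * stage.cost
        p_reached *= stage.p_success
    return expected


# Hypothetical stages; costs and probabilities are illustrative assumptions.
stages = [
    Stage("PoC", 30_000, 0.5),
    Stage("MVP", 120_000, 0.7),
    Stage("Enterprise build", 400_000, 0.9),
]

upfront_commitment = sum(s.cost for s in stages)  # everything committed on day one
staged = expected_staged_spend(stages)            # later stages funded only on success
worst_case_if_poc_fails = stages[0].cost          # loss when the first hypothesis fails

print(f"Upfront commitment:      ${upfront_commitment:,.0f}")
print(f"Expected staged spend:   ${staged:,.0f}")
print(f"Loss if the PoC fails:   ${worst_case_if_poc_fails:,.0f}")
```

With these assumed inputs, the staged approach has an expected spend of roughly $230,000 against a $550,000 upfront commitment, and a failed first hypothesis costs only the PoC budget. The exact figures matter less than the structure: each later stage is paid for only in the scenarios where the earlier evidence justified it.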

Demystifying the Cost: A Realistic Look at Custom AI Investment

To make informed strategic decisions, executives need a tangible sense of the financial commitment required for custom AI development. While every project is unique, it is possible to provide representative cost brackets that help demystify the investment and frame it as a scalable endeavor that can start small and grow with demonstrated success.

- Proof-of-Concept (PoC): $10,000 - $50,000. This typically involves a small, time-boxed effort to test a core technical hypothesis, such as the feasibility of a particular model on a sample dataset.

- Minimum Viable Product (MVP): $50,000 - $150,000+. This involves building the first functional version of the AI solution with a core set of features, designed to be tested by early users and generate initial value and feedback.

- Advanced/Enterprise-Grade Solution: $150,000 - $500,000+, potentially exceeding $1 million. This represents a fully-featured, scalable, and deeply integrated AI system designed for enterprise-wide deployment, complete with robust security, data pipelines, and maintenance.

This financial landscape elevates the role of the custom software development partner. The value proposition is no longer simply about writing lines of code, a task increasingly automated by AI itself. Instead, the modern development firm acts as an AI System Orchestrator. Their role is to navigate the immense complexity of the AI ecosystem (the fragmented landscape of models, architectures, frameworks, and deployment options) on behalf of the client. They are strategic guides who help the business select, assemble, and integrate the right components into a coherent, secure, and value-generating system that delivers on specific business objectives.

Conclusion: The Executive Playbook for Navigating the AI Frontier

The AI revolution has moved decisively beyond the hype cycle of generative tools and into an era of profound strategic integration. The narrative is no longer about adopting generic tools for marginal productivity gains; it is about fundamentally re-architecting core business processes to build durable, AI-powered competitive advantages. The future will belong not to the companies that simply use AI, but to those that leverage it to become truly AI-native in their most critical operations. For C-suite leaders, navigating this frontier requires a shift in mindset and a clear, pragmatic action plan.

The analysis reveals that the path to a defensible moat lies in specialization. While generalist platforms offer convenience, they are a competitive commodity. Lasting value is created by combining specialized AI models with proprietary data and unique workflows—a task that requires the expertise of custom software development. This journey is not a monolithic, high-risk endeavor. It can be de-risked and managed through an Agile, iterative approach that ties investment to validated learning and tangible results.

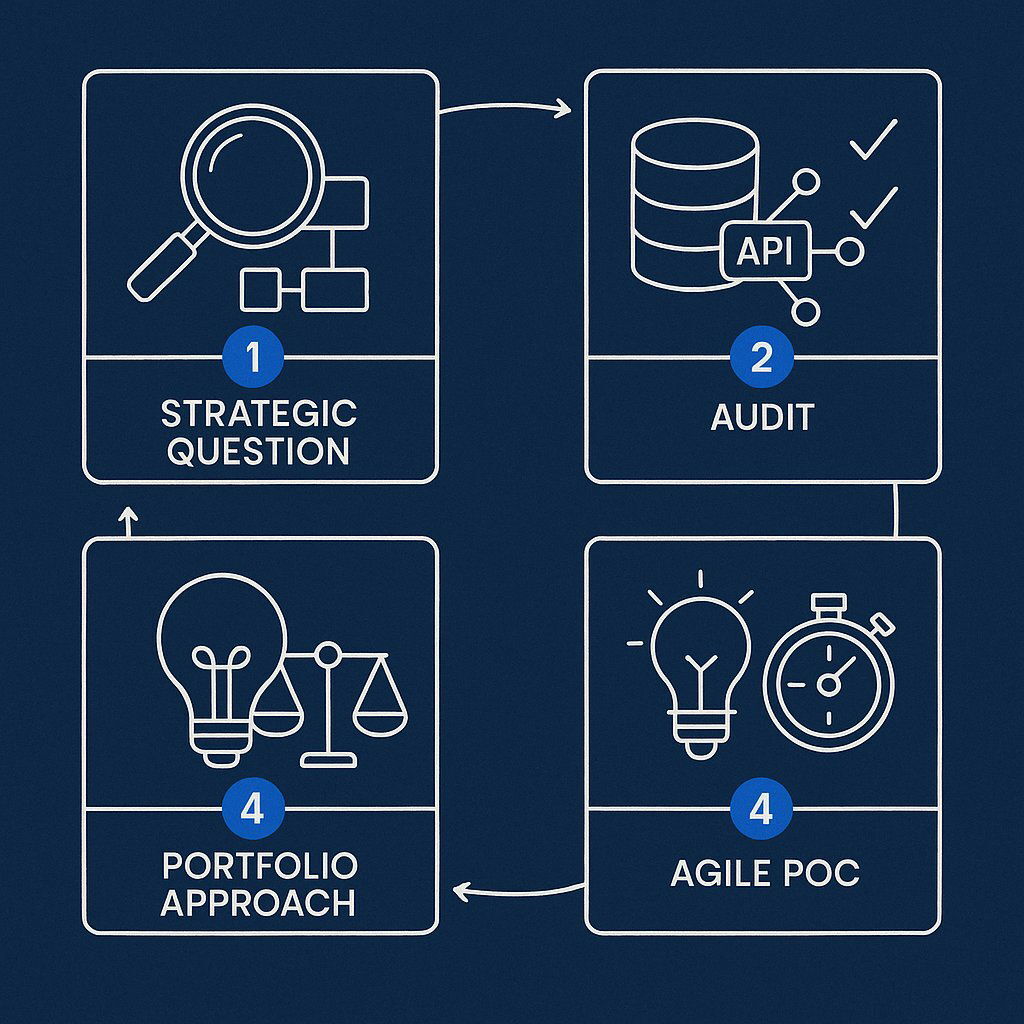

To translate these findings into action, executives should consider the following four-step playbook:

- Re-evaluate Your Strategy with a New Question. The strategic conversation must evolve. Stop asking, "How can we use AI?" and start asking, "Which single core business process, if automated and optimized by a specialized AI, would create an insurmountable competitive advantage?" This question focuses investment on areas of maximum impact, whether it's hyper-personalizing the customer experience, creating an ultra-efficient supply chain, or developing a predictive risk management capability that is years ahead of the competition.

- Audit Your Data and API Readiness. The power of your future AI is trapped within your current systems. Your most valuable asset is your proprietary data, and your ability to leverage it is determined by your API infrastructure. Initiate a formal, C-level sponsored audit of your data quality, governance, and API readiness. This foundation is a non-negotiable prerequisite for building any meaningful, custom AI capability. The results of this audit will inform your strategic roadmap and investment priorities.

- Embrace the Portfolio Approach to AI Investment. A one-size-fits-all AI strategy is destined to fail. A successful approach is a balanced portfolio. Empower your entire workforce with generalist, off-the-shelf AI tools to boost broad productivity and foster AI literacy. Simultaneously, concentrate your major strategic investments on one or two high-priority, specialized custom AI projects that target the core business challenges identified in your strategic review. This balances short-term gains with long-term moat-building.

- Start Small and Think Big with an Agile Partner. You do not need to "boil the ocean" with a massive, multi-year AI transformation project. The most effective path is to identify a high-value use case and launch a small, time-boxed, Agile-run Proof-of-Concept (PoC). Partner with a custom development firm that can act as your "AI System Orchestrator" to run this experiment. A successful PoC, delivered in weeks or a few months, de-risks the financial investment, provides tangible results to build organizational momentum, and creates the data-backed business case for further investment.

The AI revolution is a marathon, not a sprint. The winning organizations will be those that combine a bold, long-term vision with pragmatic, iterative, and disciplined execution. The technologies are ready, the business cases are clear, and the competitive landscape is being redrawn in real time. The time to begin building your unique advantage is now.

About Baytech

At Baytech Consulting, we specialize in guiding businesses through this process, helping you build scalable, efficient, and high-performing software that evolves with your needs. Our MVP-first approach helps our clients minimize upfront costs and maximize ROI. Ready to take the next step in your software development journey? Contact us today to learn how we can help you achieve your goals with a phased development approach.

About the Author

Bryan Reynolds is an accomplished technology executive with more than 25 years of experience leading innovation in the software industry. As the CEO and founder of Baytech Consulting, he has built a reputation for delivering custom software solutions that help businesses streamline operations, enhance customer experiences, and drive growth.

Bryan’s expertise spans custom software development, cloud infrastructure, artificial intelligence, and strategic business consulting, making him a trusted advisor and thought leader across a wide range of industries.