What is Dify.ai? A Strategic Overview, Competitive Analysis, Pricing Breakdown, and Tech Stack Fit for Mid-Market B2B Firms

July 16, 2025 / Bryan Reynolds

Introduction

Executives at mid-market B2B firms are increasingly hearing about AI development platforms and wondering which one is right for them. In particular, Dify.ai has emerged as a notable option in the low-code/no-code AI arena. This article follows a transparent, "They Ask, You Answer" approach to explain exactly what Dify.ai is and how it might benefit (or challenge) your business. We'll cover Dify's core concept in plain English, explore its features and capabilities, compare it to alternatives like LangChain, Flowise, OpenPipe, Azure ML, and AWS Bedrock, break down its pricing for mid-market use, and evaluate how it fits with common tech stacks. The goal is to give CTOs, CFOs, Heads of Sales, and Marketing Directors clear, actionable insights - with no fluff - so you can make informed decisions about Dify and similar AI platforms.

(This article is written in a professional yet approachable tone, aiming to inform and engage mid-market executives across industries such as advertising, gaming, real estate, finance, education, telecom, high-tech, healthcare, and fast-growing startups.)

What is Dify.ai? (Plain-English Explanation)

Dify.ai (also known as Dify AI) is an open-source, no-code/low-code platform for building and deploying generative AI and large language model (LLM) applications. Essentially, it is a toolkit that lets you create generative AI apps (chatbots, AI assistants, content generators, and so on) without writing much code. Think of Dify as a combination of a construction kit and an operations console for AI: it provides all the building blocks (and the “backend” infrastructure) you need to develop and run AI-powered applications, and it handles a lot of the heavy lifting behind the scenes. In industry terms, Dify falls into the category of “LLMOps” (Large Language Model Operations) platforms: tools that streamline the development and deployment of AI applications built on large language models.

In plain English, Dify.ai enables you to create custom AI applications quickly. Instead of coding an AI app from scratch (wiring together machine learning models, databases, APIs, and user interfaces), Dify offers an intuitive interface and pre-built components to do those things for you. For example, you can drag-and-drop components to define an AI workflow: taking user input, feeding it to a language model (like GPT-4 or Llama 2), possibly retrieving information from your company data, and then returning a useful result - all without traditional software coding. Dify combines the convenience of no-code/low-code development with the power of modern AI models, making it accessible to both developers and non-technical innovators.
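To make that flow concrete, here is a minimal, hypothetical Python sketch of the same idea: each block reads a shared state and adds fields to it, and a runner executes the blocks in order. It is not Dify's engine; the block names, the tiny `FAQ` dictionary standing in for company data, and the stubbed model call are all placeholders for what a visual builder would wire together for you.

```python
from typing import Callable, Dict, List

# Each "block" reads the shared state and returns new fields to merge into it.
Node = Callable[[Dict[str, str]], Dict[str, str]]

# Hypothetical company data; a real app would query a knowledge base.
FAQ = {"hours": "We are open 9am-5pm, Monday to Friday."}


def take_input(state: Dict[str, str]) -> Dict[str, str]:
    # Input block: clean up the raw user message.
    return {"question": state["raw_input"].strip()}


def retrieve(state: Dict[str, str]) -> Dict[str, str]:
    # Naive keyword lookup standing in for a knowledge-base search.
    hits = [text for key, text in FAQ.items() if key in state["question"].lower()]
    return {"context": " ".join(hits)}


def call_model(state: Dict[str, str]) -> Dict[str, str]:
    # Stub standing in for an LLM call: use the context, or admit it has no answer.
    if state["context"]:
        return {"draft": state["context"]}
    return {"draft": "I don't know yet; let me connect you with a colleague."}


def format_answer(state: Dict[str, str]) -> Dict[str, str]:
    # Output block: shape the final reply shown to the user.
    return {"answer": f"Assistant: {state['draft']}"}


def run_chain(nodes: List[Node], initial: Dict[str, str]) -> Dict[str, str]:
    """Run blocks in order, merging each block's output into the shared state."""
    state = dict(initial)
    for node in nodes:
        state.update(node(state))
    return state


PIPELINE = [take_input, retrieve, call_model, format_answer]
```

For example, `run_chain(PIPELINE, {"raw_input": "What are your hours?"})["answer"]` walks all four blocks. In Dify, the equivalent chain is drawn on the canvas rather than written as code.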

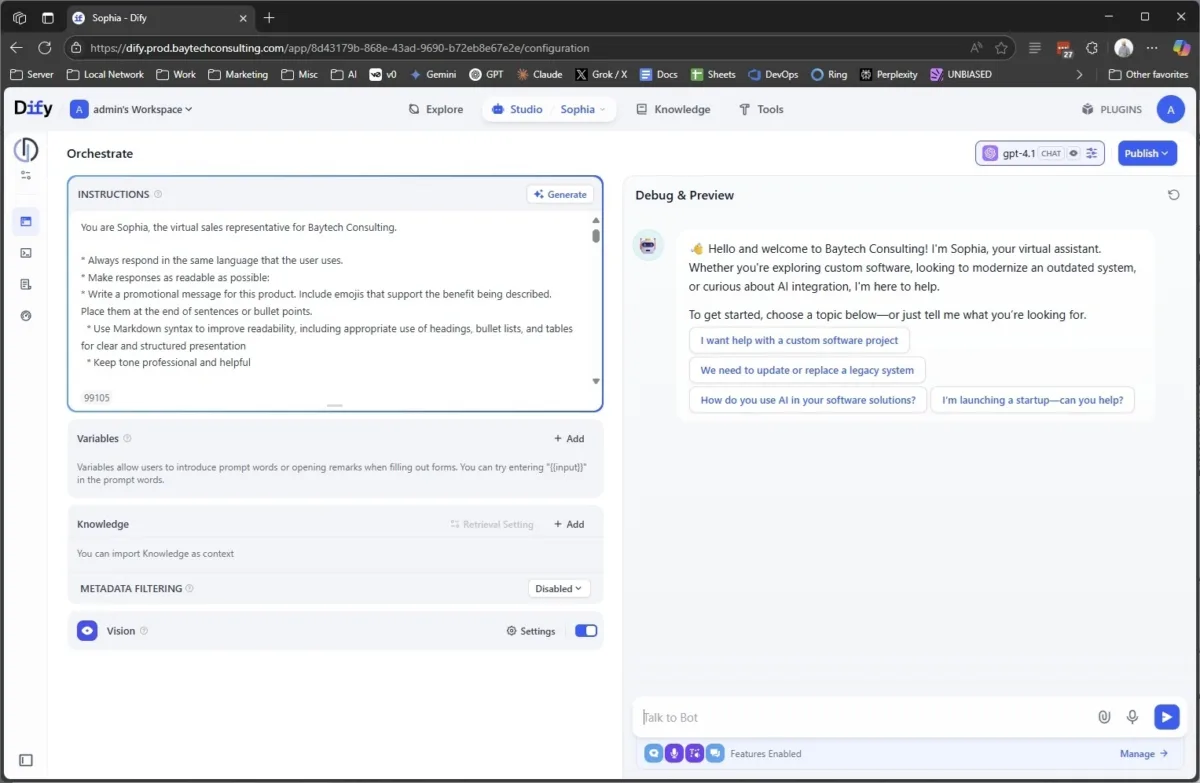

Dify serves as a comprehensive LLM app development platform that supports the full lifecycle of large language model applications: workflow building, model support, prompt engineering, retrieval-augmented generation, agent customization, operational monitoring, and API integration.

Importantly for business leaders, Dify isn’t just a toy or a prototype tool - it is designed to be production-ready from day one, emphasizing scalability, stability, and security for enterprise use. In other words, it’s built so that the AI solutions you create can be reliably rolled out to real users (whether that’s your customers or internal teams) without a massive engineering ordeal. Dify’s name comes from “Define + Modify,” reflecting its ethos that you can define an AI app and continuously improve it over time. The platform has gained significant traction: as of mid-2024 it had facilitated the creation of over 130,000 AI applications on its cloud service, and its open-source project had around 34.8k stars on GitHub - a testament to its popularity among the developer community. This strong community backing means lots of examples, rapid improvements, and a lower chance of the platform suddenly disappearing. In short, Dify.ai is a one-stop shop for AI application development that aims to democratize AI by letting even small teams build powerful AI-driven tools without an army of AI engineers.

Key Features, Model Management, and Capabilities of Dify.ai

What can Dify.ai actually do? At its core, Dify provides a suite of features that cover the end-to-end needs of developing and operating an AI application, along with additional features that enhance user experience and customization. Here’s a breakdown of its key capabilities in straightforward terms:

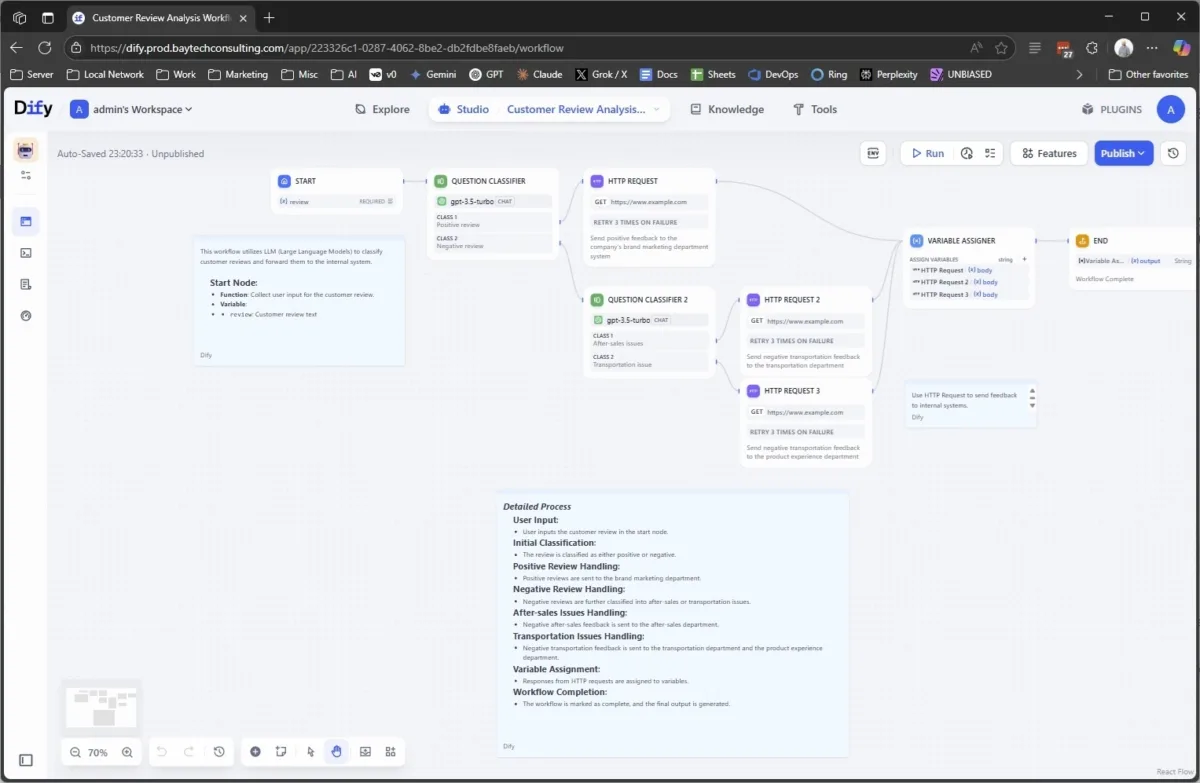

- Visual Workflow Builder (No-Code Studio): Dify offers a drag-and-drop interface for designing AI workflows. This means you can visually connect different functional blocks (like “take user input,” “call GPT-4 model,” “search the knowledge base,” “format the answer”) on a canvas. Non-technical team members can define how the AI should respond in various scenarios by tweaking these blocks and prompts, rather than writing if/then code. This lowers the barrier so that product managers or subject-matter experts in, say, real estate or healthcare can actively help shape an AI assistant’s logic. It also speeds up development - you can prototype an AI-powered chatbot or process in hours or days instead of weeks. The builder also lets you run and test workflows interactively, so you can check how they behave before deployment.

- Support for Multiple AI Models (Model Flexibility): One of Dify’s strengths is model neutrality - it’s built to work with a variety of large language models and AI providers. Right out of the box, Dify integrates with major AI model APIs, including OpenAI (GPT-3.5/GPT-4), Anthropic (Claude), Meta’s Llama 2, Azure OpenAI Service, Hugging Face models, and others; it can also connect to models served through an OpenAI-compatible API. You can switch between models or even use multiple models in one app (for instance, an image generation model in one step and a text model in another). This is valuable for mid-market firms because it avoids vendor lock-in to a single AI provider - you can choose the model that best fits your use case (whether for cost, speed, or accuracy) and even swap it out if your needs change or if a better model comes along. For example, an education company might start with OpenAI’s API for a tutoring bot, but later move to a cheaper open-source model on their own servers; Dify would support that transition with minimal friction.

- Prompt Orchestration and Templates: Writing good prompts (the instructions you give to an AI model) is part art, part science. Dify simplifies prompt management with an intuitive prompt orchestration interface. You can define system instructions, example conversations, or conditional logic in a clear form, and Dify will handle sending these to the model. There are also pre-built application templates (for example, a Q&A chatbot, a content generator, etc.) which you can use as starting points. This means faster setup and the comfort of knowing you’re using prompt patterns that are proven to work. It’s akin to having templates for sales emails - it ensures you’re not starting from a blank page. Dify also lets you compare and monitor model performance directly within the platform, which helps you refine prompts and pick the best-performing model for your application.

- Retrieval-Augmented Generation (Knowledge Integration): For many business applications, a standalone AI model is not enough; you need it to use your proprietary data (documents, databases, FAQs, etc.) to give useful answers. Dify has built-in support for knowledge bases and Retrieval-Augmented Generation (RAG) pipelines. In practice, this means you can upload documents or connect data sources, and Dify will index them in a vector database (Dify includes Weaviate by default for this) so that the AI can search your data when responding. Document ingestion covers common formats, including PDFs, PowerPoint files, and plain text. For example, a mid-market finance firm could load policy documents or financial reports into Dify, and their AI assistant app can pull relevant info from those in real time to answer a question accurately. This feature is crucial for grounding AI responses in accurate, company-specific information rather than the model’s general training data. (It’s worth noting that as of early 2025, Dify’s vector search component didn’t yet support fine-grained metadata filters - e.g., restricting search by date or category - but this is on their roadmap. There are workarounds using API extensions for now.)

- AI Agents and Tools Integration: Beyond simple Q&A or text generation, Dify also supports building more “agentic” AI applications. Agentic in this context means the AI can use tools or perform multi-step reasoning to achieve goals (think of an AI that can, say, browse a website, use a calculator, or call an external API as part of answering a question). Dify allows you to integrate plugins or tools into your AI app, including pre-built tools like Google Search. For instance, you could equip an AI agent with a web search plugin or connect it to your internal APIs, then develop, configure, and deploy agents with different tool sets from the same platform. Your marketing team might love an AI that can automatically pull the latest stats from Google Analytics via API when asked about “this week’s web traffic” - that’s the kind of extension possible with Dify’s tool integrations. While this is a more advanced use case, the platform provides a flexible agent framework and APIs for developers to extend functionality as needed. The key takeaway is that Dify isn’t limited to static Q&A; it can handle workflow automation and take actions within defined boundaries, which opens up a lot of possibilities (from automating parts of customer support, to assisting with mortgage application processing, to powering a “copilot” for sales teams that logs into CRM systems on their behalf). Dify also supports LLM function calling, in which a model that offers this capability requests a specific tool or function with structured arguments and the platform carries out the call, giving agents a reliable way to interact with external systems.

- Backend-as-a-Service & DevOps Built-In: Another selling point for Dify is that it bundles a lot of the “plumbing” needed for AI apps so you don’t have to reinvent it. This includes user management, APIs, logging, monitoring, and deployment support. Dify has a concept of workspaces and team collaboration, so multiple team members can work on AI app projects with role-based access control. It also provides APIs so that once you build an AI module, you can integrate it into your existing software via REST API calls. For example, if you have an existing CRM or a website and you build an AI FAQ bot in Dify, you can embed it into your site or call it from your software using Dify’s API. This separation is important: it means you can keep your existing business logic in your main software, and let Dify handle the AI logic (prompts, model calls, etc.) behind an API endpoint. Dify’s infrastructure (when self-hosted or on their cloud) also logs activity, which helps in monitoring usage, cost tracking, and auditing conversations - essential features for enterprise governance. In fact, one advantage noted by users is Dify’s strong debugging and experiment tracking capabilities during development: it keeps full logs of all test runs, so you can easily see how your AI app responded over time and even revert to earlier versions of your workflow. This is like having version control for your AI logic, which speeds up iterative improvement and ensures accountability. Dify also includes observability features for monitoring, analyzing, and optimizing AI workflows, models, and tools in production, supporting reliable and scalable deployment.

- Scalability and Enterprise Readiness: For mid-market firms, it’s not just about building an AI app quickly - it’s about running it reliably when you have hundreds or thousands of users (or when it becomes mission-critical for a business process). Dify was designed with scalability and security in mind. The platform can be deployed in a cloud-native way (containerized microservices behind an Nginx gateway, with horizontal scaling of workers, etc.) which means it can handle increasing load by adding more resources. There are case studies of enterprises using Dify as an internal AI hub across departments, which implies it’s been tested in environments with strict IT requirements (e.g. a bank deploying it internally for governed AI access). Security features include isolating the AI “sandbox” for running any code (Dify has a sandbox component that safely executes any Python code nodes with heavy restrictions) and offering options to self-host (so your data never leaves your own environment if you choose). We’ll discuss deployment in the tech stack section, but it’s worth noting here that you can retain full control of your data with Dify: unlike some SaaS AI products, it lets you decide where it’s hosted, and the open-source version can even run completely offline when paired with locally hosted models. This is a major consideration for industries like healthcare, finance, or telecom that have compliance requirements around data.
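The prompt orchestration feature above combines system instructions, example conversations, and reusable templates. As a rough, hypothetical illustration (not Dify's internal code), the sketch below fills `{{variable}}`-style placeholders, similar in spirit to the variable syntax in Dify's prompt editor, and assembles a chat message list in the common system/user/assistant role format. The function names are our own.

```python
import re
from typing import Dict, List, Tuple

# Matches {{name}} or {{ name }} placeholders in a prompt template.
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def render(template: str, variables: Dict[str, str]) -> str:
    """Fill placeholders, failing loudly if a variable was not supplied."""

    def substitute(match: "re.Match[str]") -> str:
        name = match.group(1)
        if name not in variables:
            raise KeyError(f"missing prompt variable: {name}")
        return variables[name]

    return PLACEHOLDER.sub(substitute, template)


def build_messages(system_template: str, examples: List[Tuple[str, str]],
                   user_input: str, variables: Dict[str, str]) -> List[Dict[str, str]]:
    """System instruction, then example conversation turns, then the live user message."""
    messages = [{"role": "system", "content": render(system_template, variables)}]
    for question, answer in examples:
        # Few-shot examples show the model the expected style of answer.
        messages.append({"role": "user", "content": question})
        messages.append({"role": "assistant", "content": answer})
    messages.append({"role": "user", "content": user_input})
    return messages
```

Failing on a missing variable, rather than silently leaving `{{company}}` in the prompt, is a deliberate choice: a half-filled template tends to produce confusing model output that is harder to debug.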
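To show the retrieval-augmented generation flow from the knowledge-integration feature in miniature, here is a self-contained sketch. It swaps in word-count vectors and cosine similarity for the embedding model and vector database (such as Weaviate) that a real deployment uses, and it applies a metadata filter in application code, which is one possible shape for the kind of workaround mentioned above. The sample documents and the `year` metadata field are hypothetical.

```python
import math
import re
from collections import Counter
from typing import Callable, Dict, List, Optional

# Hypothetical knowledge-base chunks with metadata attached at ingestion time.
CHUNKS = [
    {"text": "Expense reports are due within 30 days.", "meta": {"year": 2024}},
    {"text": "Expense reports were due within 60 days.", "meta": {"year": 2021}},
    {"text": "The office closes at 6 pm on Fridays.", "meta": {"year": 2024}},
]


def vectorize(text: str) -> Counter:
    # Toy stand-in for an embedding model: lowercase word counts.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: List[Dict], top_k: int = 2,
             keep: Optional[Callable[[Dict], bool]] = None) -> List[Dict]:
    """Return the top_k most similar chunks, skipping any whose metadata fails `keep`."""
    q = vectorize(query)
    candidates = [c for c in chunks if keep is None or keep(c["meta"])]
    candidates.sort(key=lambda c: cosine(q, vectorize(c["text"])), reverse=True)
    return candidates[:top_k]


def build_prompt(query: str, context: List[Dict]) -> str:
    # Ground the model in retrieved text instead of its general training data.
    sources = "\n".join(f"- {c['text']}" for c in context)
    return f"Answer using only these sources:\n{sources}\n\nQuestion: {query}"
```

Without the `keep` filter, the outdated 2021 policy ranks second for "When are expense reports due?"; passing `keep=lambda m: m["year"] >= 2024` removes it before ranking, which is the behavior native metadata filters would provide.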
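The agent feature above mentions function calling. The sketch below shows the application side of that loop under simple assumptions: the model returns a tool call shaped like `{"name": ..., "arguments": "<JSON string>"}` (the shape OpenAI-style function calling uses), and the app looks the name up in a registry, runs it, and returns a JSON result to feed back to the model. The tools are hypothetical stubs, and this is not Dify's plugin API.

```python
import json
from typing import Any, Callable, Dict

# Registry of functions the agent is allowed to call, keyed by name.
TOOLS: Dict[str, Callable[..., Any]] = {}


def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Register a function so the agent loop can call it by name."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def get_weekly_traffic(site: str) -> Dict[str, Any]:
    # Hypothetical stub: a real tool would query an analytics API here.
    return {"site": site, "sessions": 1250}


@tool
def add(a: float, b: float) -> float:
    return a + b


def dispatch(tool_call: Dict[str, str]) -> str:
    """Execute a model-requested call of the form {"name": ..., "arguments": "<JSON>"}."""
    name = tool_call["name"]
    if name not in TOOLS:
        # Stay within defined boundaries: refuse tools that were never registered.
        return json.dumps({"error": f"unknown tool: {name}"})
    args = json.loads(tool_call["arguments"])
    result = TOOLS[name](**args)
    # This JSON string would be sent back to the model as the tool's output.
    return json.dumps({"result": result})
```

The registry is what keeps an agent "within defined boundaries": the model can only request tools you have explicitly registered.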
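The backend-as-a-service feature above notes that a Dify app can be called from existing software over REST. The sketch below prepares such a call using only the Python standard library. It assumes the `/chat-messages` endpoint and the `inputs`, `query`, `response_mode`, `conversation_id`, and `user` fields described in Dify's service API documentation for chat apps; confirm them against the API reference shown inside your own Dify app, since details can change between versions. The API key and user ID are placeholders.

```python
import json
import urllib.request
from typing import Any, Dict, Optional

DIFY_BASE_URL = "https://api.dify.ai/v1"  # or your self-hosted server's /v1 URL


def build_dify_chat_request(api_key: str, query: str, user_id: str,
                            conversation_id: Optional[str] = None,
                            inputs: Optional[Dict[str, Any]] = None) -> urllib.request.Request:
    """Prepare a blocking call to a Dify chat app's /chat-messages endpoint."""
    body = {
        "inputs": inputs or {},                     # values for the app's declared variables
        "query": query,                             # the end user's message
        "response_mode": "blocking",                # "streaming" returns server-sent events
        "conversation_id": conversation_id or "",   # empty string starts a new conversation
        "user": user_id,                            # your own identifier for the end user
    }
    return urllib.request.Request(
        DIFY_BASE_URL + "/chat-messages",
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )


def ask(api_key: str, query: str, user_id: str) -> Dict[str, Any]:
    """Send the request; return the answer plus the conversation ID for follow-ups."""
    request = build_dify_chat_request(api_key, query, user_id)
    with urllib.request.urlopen(request, timeout=60) as resp:
        payload = json.load(resp)
    return {"answer": payload["answer"], "conversation_id": payload["conversation_id"]}
```

Passing the returned `conversation_id` into the next request keeps a multi-turn conversation together, while your CRM or website stays responsible for its own business logic.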

In summary, Dify.ai brings together an array of features - multi-LLM support, visual workflow building, data integration, and built-in DevOps - to streamline AI app development. It enables cross-functional teams (from developers to domain experts) to collaborate on AI solutions. For a mid-sized business, this means you could empower different departments (customer service, marketing, operations, etc.) to spin up AI assistants or automate tasks with minimal IT bottlenecks, all while maintaining oversight through a unified platform. With Dify, you can build a wide range of applications, from chat-based assistants to multi-step LLM workflows, giving your organization a fast-track AI innovation lab with guardrails included.

Competitive Comparison: Dify vs. LangChain, Flowise, OpenPipe, Azure ML, AWS Bedrock, etc.

No technology decision is made in a vacuum. As a mid-market executive, you’re probably also hearing about other AI development frameworks and services. How does Dify compare to some of the popular alternatives? Below, we provide a candid comparison of Dify.ai with several notable solutions:

- LangChain (open-source library) - a developer-centric approach to building LLM applications.

- Flowise and LangFlow (open-source visual builders) - similar no-code tools for LLM workflows.

- OpenPipe (LLMOps tool) - focused on fine-tuning and optimizing model usage, with an emphasis on leveraging production data for continuous improvement.

- Azure Machine Learning & Azure AI Studio (Microsoft) - enterprise ML platform and new AI orchestration tools.

- AWS Bedrock (Amazon Web Services) - a fully managed service to access AI models.

A key differentiator for Dify is its flexible deployment: alongside the hosted Dify Cloud, you can set up your own Dify server using Docker Compose for streamlined installation and configuration.

Each of these options has its own philosophy, strengths, and drawbacks. Let’s briefly explore each comparison, followed by a summary table.

Dify vs. LangChain (Code Library vs. No-Code Platform)

LangChain is an open-source Python (and JavaScript) library that has become very popular among developers building LLM-driven applications. If Dify is like a ready-to-use machine with safety covers, LangChain is like a box of tools and parts. With LangChain, developers write code to assemble prompts, chain together model calls, manage memory, etc. The key advantage of LangChain is flexibility - skilled developers can fine-tune every aspect of their AI app and integrate it deeply with custom logic, since they're working at code level. LangChain has a thriving community and lots of pre-built modules (for connecting to data sources, evaluating outputs, etc.), but it requires significant coding expertise and effort. As one comparison put it, "Dify is more suitable for developing LLM applications quickly and easily, while with LangChain you have to code and debug your own application".

In practice, this means a mid-market company with a small dev team might build a prototype chatbot in Dify in a week, whereas using LangChain directly might take several weeks by the time you handle all the infrastructure and edge cases - LangChain often introduces extra abstractions and layers that can be overwhelming for newcomers. LangChain is powerful in the hands of a developer who wants granular control (for example, a gaming company's AI engineer could use LangChain to craft a very custom in-game narrative system integrated with game state), but that power comes with complexity. Dify, on the other hand, tries to cover the common needs in a simpler interface, adding complexity only when absolutely necessary. One metaphor from the Dify team: LangChain is like a toolbox (hammers, nails, etc.), whereas Dify is a "scaffolding system" - a more complete structure with refined design and built-in support, on which you can build faster.

For an executive, the decision here boils down to time-to-market and resources. If you have a strong software engineering team and highly specific requirements, coding with LangChain (or other frameworks) might give ultimate flexibility. But if speed and cross-team collaboration are priorities, Dify's no-code approach can drastically cut development time - "you could have dozens of applications up and running in the time it takes to learn how LangChain works," as one analysis noted. Many companies might actually use both: Dify to rapidly prototype or handle standard use-cases, and LangChain or custom code for the parts that need heavy customization. The good news is that they're not mutually exclusive - Dify even allows inserting custom code via its sandbox nodes or calling external APIs which could be built with LangChain.
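As a sketch of the kind of custom logic a sandboxed code node can hold: Dify's code node, as documented at the time of writing, runs a `main` function whose parameters are the node's input variables and whose returned dictionary supplies its output variables. The field names, thresholds, and tiers below are hypothetical, and the function sticks to pure computation (no network or file access), which is the safest fit for a restricted sandbox.

```python
def main(company_size: str, budget: str) -> dict:
    """Example code node: turn two form fields into a lead tier for later branches.

    Parameter names are hypothetical; in Dify they would match the node's
    declared input variables, and the returned keys its output variables.
    """
    # Form data often arrives as text, so parse defensively.
    try:
        employees = int(company_size)
    except ValueError:
        employees = 0
    try:
        budget_usd = float(budget.replace(",", "").replace("$", ""))
    except ValueError:
        budget_usd = 0.0

    # Simple rule-based tiering; thresholds are illustrative only.
    if employees >= 200 and budget_usd >= 50000:
        tier = "enterprise"
    elif employees >= 20 or budget_usd >= 10000:
        tier = "mid-market"
    else:
        tier = "smb"
    return {"tier": tier, "employees": employees}
```

A downstream if/else node could then branch on `tier`, keeping deterministic business rules in code while the LLM handles language tasks.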

Dify vs. Flowise/LangFlow (Open-Source No-Code Alternatives)

If Dify is one player in the no-code LLM platform space, there are others like Flowise and LangFlow (both are open-source visual workflow builders for LLMs). These tools share a similar goal with Dify: allow building AI workflows without coding, typically by exposing the components of LangChain in a UI. Flowise is a Node.js-based tool that lets you create chatbots visually, and LangFlow is a Python-based visual interface on top of LangChain.

The similarities are that all provide a canvas to connect LLM components. The differences come in usability and depth of features. Dify has taken a somewhat opinionated approach: it decoupled from LangChain and trimmed the number of building blocks to a concise set (around 15 core node types as of v0.15) to cover a wide range of functionality without overwhelming the user. Flowise and LangFlow, on the other hand, initially exposed dozens of LangChain's components as nodes (embeddings, vector stores, various model types, etc.), which could be confusing - users reported having many similar options and needing to reference documentation frequently while building flows. LangFlow has made strides in improving UX (like filtering compatible nodes as you connect wires), but the learning curve for complex tasks remains non-trivial.

Dify's edge: usability in building complex workflows and debugging. In a review by AI engineers, Dify was noted to have "the best debugging experience" among these platforms. It keeps comprehensive logs of each run, allows you to examine inputs/outputs of each node in a tracing panel, and crucially, it tracks all your test executions historically (acting as a rudimentary version control for your workflow logic). This is immensely helpful when iterating on an AI app - you can compare how a change affected the outcome, or debug why a certain input failed by looking at the exact state of a previous run. Flowise currently lags in debugging tools (no built-in trace view as of this writing). LangFlow has some visual feedback on nodes, but it lacks Dify's ability to easily inspect prior workflow versions or handle nested sub-flows transparently.
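Dify's run history is built into the product, so there is nothing to code there; the sketch below only illustrates the underlying idea of per-node tracing plus a comparison between two recorded runs. It is a hypothetical, simplified model, not Dify's implementation: each run stores every node's input and output, and `diff` reports which nodes produced different outputs between two runs.

```python
import copy
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Tuple

# A named node: (node name, function from state to new fields).
Node = Tuple[str, Callable[[Dict[str, Any]], Dict[str, Any]]]


@dataclass
class RunRecord:
    version: str
    trace: List[Dict[str, Any]] = field(default_factory=list)


class Tracer:
    """Keeps every test run so runs can be inspected and compared later."""

    def __init__(self) -> None:
        self.history: List[RunRecord] = []

    def run(self, version: str, nodes: List[Node], inputs: Dict[str, Any]) -> Dict[str, Any]:
        record = RunRecord(version)
        state = dict(inputs)
        for name, fn in nodes:
            before = copy.deepcopy(state)
            output = fn(state)
            state.update(output)
            # Record each node's input and output, like a per-node tracing panel.
            record.trace.append({"node": name, "input": before, "output": output})
        self.history.append(record)
        return state

    def diff(self, i: int, j: int) -> List[str]:
        """Names of nodes whose output changed between run i and run j."""
        a, b = self.history[i].trace, self.history[j].trace
        return [x["node"] for x, y in zip(a, b) if x["output"] != y["output"]]
```

Comparing runs node by node is what makes it quick to see whether a prompt change, rather than an upstream data step, caused a different answer.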

Another aspect is control flow and advanced logic. Dify supports conditional branches (if/else), looping over data, and even nesting workflows (calling one workflow from another) with proper logging of each sub-flow. For example, if you're processing a list of form submissions with an AI, Dify can loop through each entry and summarize it, all within the visual editor, and you can drill down into each loop iteration's details during debugging. Until recently, Flowise had only basic if/else and no loop support, while LangFlow just introduced a loop component in beta (and can run sub-flows but without great debug visibility). The bottom line: for complex automations, Dify currently offers more robust logic control (short of writing code) than its open-source no-code peers.
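The form-submission example can be expressed in plain Python to show what an iteration node with an if/else branch inside it does. This is a hypothetical sketch: `summarize_stub` stands in for the per-item LLM call, and the routing rule (escalate anything mentioning "urgent") is illustrative only.

```python
from typing import Callable, Dict, List


def summarize_stub(text: str) -> str:
    # Hypothetical stand-in for an LLM call: keep the first sentence.
    return text.split(".")[0].strip() + "."


def process_submissions(submissions: List[Dict[str, str]],
                        summarize: Callable[[str], str] = summarize_stub) -> List[Dict[str, object]]:
    """Loop over entries (like an iteration node), branching per entry (like an if/else node)."""
    results: List[Dict[str, object]] = []
    for index, entry in enumerate(submissions):
        message = entry.get("message", "").strip()
        if not message:
            # Branch 1: nothing to summarize, so flag it instead of calling the model.
            results.append({"index": index, "status": "skipped"})
            continue
        # Branch 2: summarize, then route urgent items differently.
        summary = summarize(message)
        route = "escalate" if "urgent" in message.lower() else "queue"
        results.append({"index": index, "status": route, "summary": summary})
    return results
```

Keeping a per-item result (including skipped entries) mirrors the per-iteration detail you would drill into while debugging a loop.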

All three (Dify, LangFlow, Flowise) being open-source means you can self-host them and avoid license costs. The choice may come down to which one your team finds easier and which has the features you need. Dify's philosophy is a bit more constrained but user-friendly - which for a business often translates to faster deployment and fewer "gotchas." Flowise might appeal if you prefer a lightweight Node.js tool to quickly spin up a chatbot, especially if your use case is simple Q&A and you don't need the advanced workflow features. LangFlow appeals to those who want the power of LangChain in a GUI with the option to edit underlying code if necessary (it even allows editing the code of components for ultimate flexibility, though doing so can risk system stability if one isn't careful).

In summary, Dify vs. Flowise/LangFlow is akin to comparing different low-code app builders - all can achieve similar basic outcomes, but Dify might get you further before you have to "drop to code" due to its more comprehensive built-in features (especially for larger-scale workflows). It's also backed by an active core team and community which has been rapidly adding improvements and extensions (plugins) as the field evolves. If your company has minimal developer resources and needs a solution that "just works" with clarity, Dify is likely the safer bet. If you have specific reasons to align with LangChain's ecosystem or need to tweak low-level behaviors, LangFlow could be useful. For something quick and self-contained with fewer bells and whistles, Flowise might suffice (though you should be prepared to do more manual monitoring and possibly hit limitations if your needs grow).

(A quick note: Microsoft is also entering this space with Copilot Studio and similar offerings in Azure OpenAI Studio, which aim to let you orchestrate prompts and build copilots with low-code tools. These are proprietary and still evolving; they might integrate well if you're a Microsoft-centric shop, but they will lock you into Azure's ecosystem. In contrast, Dify and the others above are open platforms.)

Dify vs. OpenPipe (AI Monitoring/Fine-Tuning Tools)

Moving to a slightly different kind of competitor: OpenPipe. OpenPipe isn't a direct app-building platform like Dify; rather, it's an LLMOps tool focused on optimizing model usage. Specifically, OpenPipe provides SDKs and a platform for collecting AI model logs, evaluating outputs, and fine-tuning models on your own data. Companies use OpenPipe to, for example, capture every prompt & response that their application generates, then analyze that data to find ways to improve quality or reduce costs (maybe by fine-tuning a smaller model to replicate what a big model was doing, thereby saving on API costs).

If we compare, Dify is about building and deploying the AI application, whereas OpenPipe is about improving and managing the AI model's performance and cost. OpenPipe can log requests from any source (you could use it alongside a LangChain app, or even with Dify's API calls if integrated) and then help you create fine-tuned versions of models or A/B test prompts and models easily. It offers features like caching (so identical requests don't always hit the model API) and model comparison tools.
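Caching identical requests and logging every call are generic techniques, so they can be sketched without OpenPipe's SDK; the class below is a hypothetical illustration, not OpenPipe's API. It wraps any model-calling function, hashes a canonical JSON form of each request, and forwards only cache misses to the model.

```python
import hashlib
import json
from typing import Any, Callable, Dict, List


class CachedClient:
    """Wrap any model-calling function with a request log and an exact-match cache."""

    def __init__(self, call_model: Callable[[Dict[str, Any]], str]) -> None:
        self.call_model = call_model
        self.cache: Dict[str, str] = {}
        self.log: List[Dict[str, Any]] = []  # every request/response, for later analysis

    @staticmethod
    def key(request: Dict[str, Any]) -> str:
        # Canonical JSON (sorted keys) so logically identical requests hash the same.
        canonical = json.dumps(request, sort_keys=True, separators=(",", ":"))
        return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

    def complete(self, request: Dict[str, Any]) -> str:
        k = self.key(request)
        hit = k in self.cache
        if not hit:
            # Only cache misses reach the (paid) model API.
            self.cache[k] = self.call_model(request)
        response = self.cache[k]
        self.log.append({"request": request, "response": response, "cache_hit": hit})
        return response
```

Exact-match caching is only safe when identical requests should get identical answers (for example, deterministic settings such as temperature 0, or FAQ-style lookups); for creative generation you would normally bypass the cache.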

For a mid-market firm, the question is: do you need this level of optimization sophistication? If you plan to deploy AI features at scale (millions of requests, or critical business tasks), then using something like OpenPipe could drastically cut ongoing costs and ensure quality by training custom models from your usage data. But OpenPipe does not provide a user-facing app or workflow design - it assumes you already have an app or at least a process in place that generates prompts. So OpenPipe might be seen as a complement to a platform like Dify in some cases. You could build your AI app in Dify, and as it matures, start piping the data into OpenPipe to train your own model that you then plug back into Dify (since Dify supports custom model providers via API). This would be an advanced route that a tech-savvy mid-market company (with maybe a data science team) might pursue to reduce dependency on expensive external APIs.
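The "train your own model from usage data" step usually starts with turning logged prompts and responses into a training file. The sketch below assumes the chat-style JSONL layout used by OpenAI's fine-tuning API (one `{"messages": [...]}` object per line); other providers and tools, OpenPipe included, may expect different fields. The `feedback` field used for filtering is a hypothetical quality signal your logging would need to record.

```python
import json
from typing import Any, Dict, Iterable, List


def to_finetune_jsonl(logs: Iterable[Dict[str, Any]], system_prompt: str) -> str:
    """Convert logged prompt/response pairs into chat-format JSONL training lines.

    Only entries with positive feedback are kept; `feedback` is a hypothetical
    quality signal captured alongside each logged call.
    """
    lines: List[str] = []
    for entry in logs:
        if entry.get("feedback") != "positive":
            continue
        example = {
            "messages": [
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": entry["prompt"]},
                # The large model's good answer becomes the target for the smaller model.
                {"role": "assistant", "content": entry["response"]},
            ]
        }
        lines.append(json.dumps(example, ensure_ascii=False))
    return "\n".join(lines) + ("\n" if lines else "")
```

Filtering on a quality signal matters: fine-tuning on every logged answer would teach the smaller model the large model's mistakes as well as its successes.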

If we position them as alternatives: choosing OpenPipe vs. Dify is a bit apples-to-oranges. However, some companies without a need for a full app builder might use OpenPipe + their existing software as an approach to add AI. For example, if you have an existing SaaS product and strong developers, you could call AI APIs directly in your code, log those calls with OpenPipe, and retrain models to eventually run on your own infrastructure. That path is more engineering-intensive upfront than using Dify, but potentially more cost-optimized long-term (and gives ultimate control over the model). On the flip side, if you want to stand up new AI-driven processes quickly and deliver value to users now, Dify's higher-level approach will get you there faster, albeit potentially with higher per-call costs if you stick to third-party models and without the hyper-optimization that OpenPipe offers.

To sum up: OpenPipe is a specialist tool for LLM optimization (fine-tuning, monitoring, logging), whereas Dify is a generalist tool for AI application creation and operation. Mid-market firms that are in growth mode usually benefit from generalist tools to cover a lot of ground quickly. Specialist optimization can come into play once the AI usage reaches a scale where model inefficiencies cost significant money or impact user experience. One might start with Dify (fast deployment), and later introduce OpenPipe or similar techniques to refine and cut costs.

Dify vs. Azure Machine Learning (Azure ML) and AWS Bedrock

Finally, let's compare Dify with the offerings of the big cloud providers, since many mid-market companies are heavily invested in Microsoft Azure or AWS. Microsoft and Amazon (and Google, for that matter) each have their own approaches to helping customers build AI solutions:

- Azure Machine Learning (and Azure AI Studio): Azure ML is a comprehensive cloud platform for developing, training, and deploying machine learning models. It's not limited to LLMs - it's used for all kinds of ML and deep learning. Azure ML provides things like Jupyter notebooks, automated ML, a drag-and-drop pipeline designer, and MLOps capabilities for lifecycle management. More recently, Microsoft has introduced Azure AI Studio and its Prompt Flow tool, which are more directly analogous to Dify: they let you orchestrate and evaluate prompts and build chatbots using Azure's models (including OpenAI models hosted on Azure). The major difference is that Azure's tools are proprietary and cloud-only - you'll be operating within the Azure ecosystem, and your integrations will naturally favor other Azure services (e.g. Cosmos DB, Azure Data Lake) for data. Azure ML/AI Studio is powerful and suited for enterprise IT teams, but typically requires more technical expertise and can be overkill for narrow applications. For example, to build a simple Q&A bot on Azure, one might set up an Azure OpenAI resource, use Prompt Flow to craft prompts, use Cognitive Search (now Azure AI Search) for the knowledge base, etc. - it's doable, but you're tying together multiple Azure services, each with its own configuration and pricing. Dify, by contrast, bundles the equivalent pieces together for you (the prompt interface, the vector store, the UI, etc.) and can run outside Azure. So, if you already have Azure-centric developers or you need tight integration with, say, Microsoft 365 data, you might lean into Azure's ecosystem. But if you want a more agnostic and potentially simpler solution, Dify could be up and running with less cloud architecture work. It's also worth noting cost: Azure ML and related services charge based on usage (for example, you'd pay separately for each OpenAI call, storage for the vector index, etc.). Dify's cost structure (discussed in the next section) might be more predictable for initial experiments, especially if self-hosted.

- AWS Bedrock: AWS Bedrock is Amazon's managed service for consuming generative AI models. Essentially, Bedrock offers access to various foundation models (like Amazon's own Titan models, Anthropic's Claude, Stable Diffusion for images, etc.) via API, without you needing to manage model infrastructure. It also provides some tooling for building applications (Amazon has concepts like "AI agents" and uses Lambda for custom code, etc.), but at the time of writing, Bedrock is primarily about providing scalable, secure access to models on AWS. If you are an AWS-heavy organization, Bedrock can be attractive because it keeps all data and calls within AWS (meeting compliance needs) and simplifies billing (everything shows up on your AWS bill). However, Bedrock by itself is not a full application development platform. It's more comparable to just the "model provider" part of Dify. You would still need to implement the logic of your application - possibly using AWS Lambda, Step Functions, or other AWS services around it. For instance, a telecom company might use Bedrock to get access to a GPT-4-like model for analyzing customer chats, but they would orchestrate the workflow with custom AWS Lambda code and integrate with their databases through AWS tools. This is a solid approach if you have AWS architects on hand and you want maximum control under the AWS umbrella. But again, it's code-centric and service-integration heavy compared to Dify's out-of-the-box solution.
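For a sense of what the code-centric Bedrock route involves, here is a minimal sketch of the model call itself using boto3's Bedrock Runtime `converse` method, with request building and response parsing split into plain functions. The model ID is an example (availability depends on your region and account access), the summarization prompt is hypothetical, and everything around the call (routing, database lookups, retries) would still be yours to build, for example in Lambda.

```python
from typing import Any, Dict, List

# Example model ID; which models you can use depends on your AWS region and account access.
MODEL_ID = "anthropic.claude-3-haiku-20240307-v1:0"


def build_converse_kwargs(transcript: str, max_tokens: int = 300) -> Dict[str, Any]:
    """Arguments for the Bedrock Runtime Converse API to summarize a customer chat."""
    return {
        "modelId": MODEL_ID,
        "system": [{"text": "Summarize the customer's issue in two sentences."}],
        "messages": [{"role": "user", "content": [{"text": transcript}]}],
        "inferenceConfig": {"maxTokens": max_tokens, "temperature": 0.2},
    }


def extract_text(response: Dict[str, Any]) -> str:
    """Join the text blocks of the assistant message in a Converse response."""
    blocks: List[Dict[str, Any]] = response["output"]["message"]["content"]
    return "".join(block.get("text", "") for block in blocks)


def summarize_chat(transcript: str, region: str = "us-east-1") -> str:
    # Imported lazily so the helpers above work without boto3 installed.
    import boto3

    client = boto3.client("bedrock-runtime", region_name=region)
    return extract_text(client.converse(**build_converse_kwargs(transcript)))
```

This covers only the "model provider" slice; in Dify the same step is a model node, with the surrounding workflow built visually.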

One risk to note is vendor lock-in: using Azure's or AWS's native AI services can deepen your dependence on those platforms. This might be fine if you're already all-in and get enterprise discounts, etc., but it can also mean less flexibility and potentially higher cost if you're not carefully managing usage (cloud costs can scale in non-linear ways). Dify, being open-source and model-neutral, gives an escape hatch - you could host it on OVHcloud or on-prem hardware, and you could swap the backend model from OpenAI to an open-source model to cut costs, for example.
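Swapping the backend model is easiest when application code depends only on a small configuration object. The sketch below assumes both backends speak the OpenAI-style `/chat/completions` protocol, which many self-hosted inference servers (vLLM and Ollama, for example) also expose; the local URL, model names, and API key are hypothetical placeholders.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class ModelConfig:
    """Which backend to call; swapping providers means swapping this, not the app logic."""
    base_url: str
    model: str
    api_key: str = ""


def build_chat_request(cfg: ModelConfig, messages: List[Dict[str, str]]) -> Dict[str, object]:
    """Build an OpenAI-style /chat/completions request for any compatible backend."""
    headers = {"Content-Type": "application/json"}
    if cfg.api_key:
        # Hosted APIs need a key; a private self-hosted server may not.
        headers["Authorization"] = f"Bearer {cfg.api_key}"
    return {
        "url": cfg.base_url.rstrip("/") + "/chat/completions",
        "headers": headers,
        "json": {"model": cfg.model, "messages": messages},
    }


# Hypothetical configurations: the same app code can target either one.
HOSTED = ModelConfig(base_url="https://api.openai.com/v1", model="gpt-4", api_key="YOUR_API_KEY")
SELF_HOSTED = ModelConfig(base_url="http://localhost:8000/v1", model="llama-2-13b-chat")
```

The returned dictionary can be passed straight to an HTTP client, for example `requests.post(**request)`, so moving from a hosted model to a self-hosted one changes only which `ModelConfig` you pass in. Dify applies the same principle at the platform level through its model provider settings.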

Comparison Table

To summarize the competitive landscape, here's a high-level comparison of Dify and the above alternatives, highlighting their nature, strengths, and considerations:

| Platform | Type & Deployment | Key Strengths | Notable Limitations | Pricing Model |
|---|---|---|---|---|
| Dify.ai | Open-source low-code platform; can self-host (Docker/K8s) or use Dify Cloud SaaS. | No-code rapid development: visual workflow builder accessible to non-developers. All-in-one features: integrates LLM support, data indexing (RAG), prompt management, and team collaboration in one platform. Model-neutral and extensible: works with many LLMs (OpenAI, Anthropic, local models, etc.); offers API and plugin hooks to extend functionality. | Flexibility trade-off: limited to the provided components and a sandbox for code (complex custom logic may require external services). Young ecosystem: though growing fast, it has fewer third-party plugins than longer-standing toolkits, and some advanced features (e.g., fine-grained vector search filters) are still on the roadmap. Hosting overhead (if self-hosting): requires DevOps effort to maintain containers and ensure scalability. | Open-source (free) for self-hosting. Cloud plans: Free tier, then Professional ($59/mo) and Team ($159/mo) for higher usage/collaboration, and Enterprise custom pricing. (Using third-party models may incur additional API costs.) |
| LangChain | Open-source developer library (Python, JS), deployed within your custom application code. | Highly flexible: you can craft any pipeline or behavior in code; large community with many integrations. Granular control: tailor every prompt, memory, and tool use to your needs; no hidden layers. Ecosystem: many off-the-shelf modules and examples; integrates readily with other libraries (e.g., vector DBs, tools). | Requires coding expertise: not accessible to non-programmers; steep learning curve to master its various concepts. Development time: building and debugging an app from scratch is slower than with no-code tools. Maintenance: you own the entire stack; upgrading LangChain versions or fixing bugs is on your team. | Free (open-source). Cost comes from developer time and runtime resources (plus any model API usage). No license fee. |
| Flowise / LangFlow | Open-source no-code workflow tools (Flowise in Node.js; LangFlow in Python), self-hosted on your server. | Easy visualization: create chatbots and flows with nodes representing LLM calls, similar to Dify's concept. Leverages LangChain components: especially LangFlow, which exposes many LangChain tools and lets you modify component code for flexibility. Free and deployable: no cost to use; can run on your own hardware or cloud instance. | Less polished UX: the interface can be confusing with too many component options, and it lacks some quality-of-life features (e.g., Flowise has minimal debugging support). Limited logic control: until recently, no looping support and only basic branching in Flowise. Smaller community: the user base and community contributions are smaller than Dify's, which may slow issue resolution and new feature development. | Free (open-source). As with LangChain, your costs are hosting the app and any API usage. |
| OpenPipe | LLMOps optimization platform (hosted service with SDK; some open-source components). | Cost optimization: helps reduce LLM API costs via caching and fine-tuning your own smaller models. Logging and analytics: captures all prompts and responses for analysis; provides evaluation tools to improve prompts/models. Fine-tuning pipeline: makes training custom models on captured data easier, potentially boosting performance and cutting latency. | Not an app builder: no user interface or end-user app component; must be integrated into your own code or platform. Reliance on the OpenPipe service: while you can export data, using OpenPipe's full capabilities ties you to its platform for hosting models or running feedback loops. Supported models only: fine-tuning and hosting are limited to the models it supports, so it is not entirely "bring your own model" (though it covers popular ones). | Free tier likely available; paid plans or usage-based pricing for hosting and advanced features (OpenPipe's pricing is typically custom or usage-based, since it often involves compute for fine-tuning). |
| Azure Machine Learning / AI Studio | Enterprise cloud ML platform (Microsoft Azure), fully managed by Azure in its cloud. | End-to-end ML ops: great for organizations doing custom model development; provides data pipelines, training, deployment, and monitoring in one ecosystem. Azure OpenAI integration: easy access to OpenAI models (and others) with enterprise security, plus tools like Azure Cognitive Search for knowledge base integration. Enterprise support: comes with Azure's reliability, compliance certifications, and support options, which matter for regulated industries. | Complexity: high learning curve if you just want a simple AI app; many moving parts and Azure-specific concepts (might require an Azure ML engineer to use fully). Proprietary: tied to the Azure cloud, with limited portability; if you ever switch providers, you'd need to rebuild elsewhere. Cost: pay-as-you-go pricing for each component (GPU compute, storage, API calls, etc.) can add up; without careful management, experiments can become expensive. | Proprietary pay-per-use (no upfront license, but costs accrue for cloud resources). Azure OpenAI Service charges per 1K tokens for model usage; Azure ML charges for compute instances, etc. Budget control is needed to avoid surprises. |
| AWS Bedrock | Managed AI model service (Amazon AWS cloud), available as an API/SDK in the AWS environment. | Multiple models on tap: offers a menu of foundation models (Amazon, Anthropic, Stability AI, etc.) behind a unified API. No infrastructure to manage: you don't need to set up servers or GPU instances for these models; AWS handles scaling and performance. Secure and integrated: works within your AWS Virtual Private Cloud, which appeals to IT security, and connects easily with other AWS services for data storage, authentication, etc. | Limited to inference: Bedrock handles model inference, but not the overall application logic; you still need to build the app (prompt orchestration, UI, etc.) using other tools or code. AWS lock-in: it's an AWS-only solution, so if you use it heavily, moving to another environment would be non-trivial; model selection is also limited to what AWS provides (if a new model comes out elsewhere, you must wait for AWS to offer it). Cost: pricing is usage-based per model invocation (and some models are expensive per call); it's convenient, but costs must be monitored at scale. | Proprietary usage-based. Each model (e.g., Claude, Jurassic) has its own pricing per million tokens. No upfront costs, but high usage can incur significant monthly fees. (There's no "free Bedrock," though AWS may offer trial credits.) |

(Table: A comparison of Dify.ai and other AI development options.)

As the table and discussion indicate, Dify.ai's sweet spot is enabling rapid, collaborative AI app development with minimal code, whereas alternatives like LangChain target developers who need fine control, and cloud services like Azure/AWS provide heavy-duty infrastructure but often require more investment in development effort and risk provider lock-in. For a mid-market B2B firm, using Dify could mean the difference between launching an AI-enhanced feature in a month versus in a quarter, but it's also important to acknowledge the trade-offs (less custom code freedom, reliance on the Dify platform's roadmap for some features). In the next sections, we'll delve into Dify's pricing (to see if it truly offers a cost advantage) and how it would fit into your company's tech stack and processes.

Pricing Breakdown of Dify.ai (Transparent Analysis)

One of the refreshing aspects of Dify.ai is that it offers an open-source option alongside a hosted cloud service - giving businesses flexibility in how they manage costs. Let's break down the pricing in a transparent way:

- Open-Source Self-Hosting (Free License): Dify's core platform is open-source, published on GitHub under the Dify Open Source License, which is Apache 2.0 with additional conditions (notably, you may not use it to operate a multi-tenant service, or remove Dify's logo and copyright information from its frontend, without written permission). Within those terms, you can download it from GitHub and run it on your own servers or cloud instances without paying a license fee. For budget-conscious teams or those with strict data control needs, this is a big plus. Essentially, the software is free; you incur only the infrastructure costs (servers/VMs, storage) to host it, and the costs of any API usage (e.g., if your Dify workflows call the OpenAI API, you still pay OpenAI for those calls). The open-source route does require IT effort: you'll need DevOps to deploy and maintain it (Dify provides Docker Compose and other deployment guides, and it can be containerized for Kubernetes as well). Many mid-market firms with existing DevOps teams (perhaps already using Kubernetes/Rancher) can integrate this relatively easily. The benefit is no recurring subscription fee to Dify and full control of data and environment. The downside is the ongoing work of updates and scaling: you'd handle upgrades and ensure it keeps running smoothly. However, since Dify is open-source, community support is available, and you could always reach out to the company for enterprise support if needed (likely for a fee or via its premium offerings).

- Dify Cloud (Hosted SaaS): For those who don't want to self-manage, Dify.ai offers a cloud-hosted service with a tiered subscription model. As of the latest information, the pricing tiers are:

- Sandbox - Free: This is essentially a trial/starter tier, including up to 200 messages (API calls) free. It's limited to 1 user and a handful of apps (5 apps, 50MB knowledge storage, etc.). The Sandbox is great for evaluating the platform or doing a proof-of-concept. No credit card is required to start, which lowers the barrier to try it out. Mid-market firms might use this to let a small team experiment before deciding to scale up.

- Professional - $59 per workspace/month: This tier is aimed at "independent developers or small teams". It allows 3 team members in one workspace, up to 50 apps, and includes 5,000 message credits per month. It also expands knowledge base limits (500 documents, 5GB data) and provides "priority" processing and an unlimited API call rate (meaning your apps can be used more intensively). For a mid-market business, the Professional plan could suffice for a pilot project or a small department's use. At $59/month, it's relatively affordable, likely far less than the salary cost of even one developer, which is a point to consider (i.e., if Dify saves your developer even a few hours of work a month, it pays for itself).

- Team - $159 per workspace/month: Geared towards "medium-sized teams", this allows up to 50 team members to collaborate in one workspace (suitable if you want many people - perhaps from different departments - building or using AI apps). It increases usage to 10,000 message credits/month and raises limits further (200 apps, 1,000 knowledge documents, 20GB storage). The Team plan also likely has the highest priority for any processing and more annotation quotas (useful if you are using Dify's feedback/annotation features). At $159/month, this is still modest for a mid-sized company's software subscription - you might compare it to other SaaS tools your teams use. If multiple departments (say marketing and customer service) each spin up AI apps in Dify, consolidating under one Team workspace could be cost-effective.

- Enterprise - Custom pricing (Contact Sales): There is an Enterprise tier not listed with a fixed price, which presumably offers unlimited or higher limits, dedicated support, single sign-on integration, deployment on a private cloud/VPC, etc. The cost would depend on the scope (likely in the form of an annual contract). Large mid-market or enterprise customers would consider this if the standard plans don't meet their volume or compliance requirements. For example, an enterprise plan might allow multiple workspaces (for different business units) and more granular security controls.

A transparent point to note is the concept of "message credits." In Dify's pricing, a message credit typically corresponds to one AI model call (like one prompt/response) of a certain size. The free and paid plans include some credits, but what happens if you use more than that? Dify's documentation indicates that after using the included credits, you would need to attach your own model API keys (e.g., your OpenAI key) or purchase additional credits. Essentially, the included credits are a bundled value (Dify likely absorbs the model cost up to that amount as part of your subscription). Beyond that, you're either paying the model provider directly or buying add-on packs. This setup is similar to how some cloud services bundle a quota and then switch to pay-as-you-go. It's transparent in that you can monitor usage in Dify's dashboard (it provides logs of all calls and costs), and you won't be surprised by Dify's bill: the limits are fixed per tier unless you intentionally scale up.
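A simple way to reason about included credits is to forecast how many of a month's calls they cover and how many would spill over to your own API key or add-on packs. The sketch below assumes one credit per model call (the simplification used above; actual consumption may differ, so check Dify's billing documentation) and hard-codes the Professional and Team allowances quoted in this article, which should be confirmed against Dify's current pricing page.

```python
from dataclasses import dataclass

# Monthly message credits as quoted in this article; confirm on Dify's pricing page.
INCLUDED_CREDITS = {"professional": 5_000, "team": 10_000}


@dataclass
class CreditForecast:
    plan: str
    expected_calls: int
    covered_by_credits: int
    overflow_calls: int  # must run on your own model API key or purchased add-on credits


def forecast_credits(plan: str, expected_calls: int) -> CreditForecast:
    """Assume one credit per model call and split a month's calls accordingly."""
    included = INCLUDED_CREDITS[plan]
    covered = min(expected_calls, included)
    return CreditForecast(plan, expected_calls, covered, expected_calls - covered)


print(forecast_credits("professional", 8_000))
# CreditForecast(plan='professional', expected_calls=8000, covered_by_credits=5000, overflow_calls=3000)
```

At 8,000 calls a month, the Professional plan would leave 3,000 calls to be billed elsewhere, while the Team plan's allowance would cover all of them under the same one-credit-per-call assumption.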

For mid-market B2B use cases, let's consider a couple of examples:

- A regional bank's IT team wants to deploy an internal AI assistant to help employees retrieve HR policy information. They could start with the Professional plan at $59 and build the app. As usage grows (let's say it ends up answering 8,000 queries a month), they might upgrade to Team $159 to comfortably cover ~10k queries and have more users on board. Their primary cost beyond this would be the OpenAI (or other model) fees for those queries - which, for 10k queries, might be, say, $20-$50 depending on the model and prompt length if using a GPT-3.5 model. So in total, maybe ~$200/month all-in, which is trivial compared to the value of saving employees' time searching manuals.

- A mid-sized e-commerce company wants to add a customer-facing chatbot for product questions. They plan for high volume (say 50k messages/month). They might directly engage Dify on an Enterprise plan to ensure high throughput and support. Let's assume (hypothetically) an enterprise deal of $500/month with higher limits; even then, the major cost might be the model usage (maybe a few hundred dollars more). In comparison, commissioning a custom software agency to build a similar chatbot from scratch could cost tens of thousands upfront. So, Dify's pricing can be seen as very transparent and cost-effective for the functionality it provides.
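The bank example's arithmetic can be written down so you can plug in your own numbers. In this sketch the per-query token counts and the per-million-token prices are hypothetical placeholders, not any provider's actual rates, and all model calls are treated as billed to your own API key (ignoring Dify's included credits), which makes the estimate slightly conservative. With 10,000 queries a month under those assumptions, model fees come to $28 and the all-in total to $187, in line with the rough "~$200/month" figure above.

```python
def monthly_all_in_cost(plan_fee_usd: float, queries: int,
                        input_tokens_per_query: int, output_tokens_per_query: int,
                        usd_per_million_input: float, usd_per_million_output: float) -> float:
    """Subscription fee plus pay-per-token model fees for one month, in USD."""
    input_tokens = queries * input_tokens_per_query
    output_tokens = queries * output_tokens_per_query
    model_fees = (input_tokens * usd_per_million_input
                  + output_tokens * usd_per_million_output) / 1_000_000
    return round(plan_fee_usd + model_fees, 2)


# Hypothetical inputs: Team plan, 10k queries, ~2,000 prompt tokens (question plus
# retrieved policy text) and ~400 answer tokens each, placeholder prices per 1M tokens.
print(monthly_all_in_cost(159, 10_000, 2_000, 400, 1.00, 2.00))  # 187.0
```

Retrieval-heavy apps tend to be dominated by input tokens, so the prompt-size assumption usually matters more than the answer length.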

One should also compare with competitor costs:

- LangChain or self-built code: no platform fee, but you pay more in developer hours. If a developer at $100k/yr spends 10% of their time maintaining AI integrations, that's a $10k/yr "cost," which dwarfs something like $1-2k/yr for a Dify Team subscription.

- Flowise/LangFlow: free platform, but again you'll spend time working around their limitations; and if you self-host them rather than using a hosted offering, you'd be running them on your own hardware, a minor cost but some effort.

- OpenPipe: likely charges per usage or a platform fee for its hosted components. If you fine-tune models, you pay for that compute too. It could be worthwhile if you save significantly on model calls, but it's a different value proposition.

- Azure/AWS: no "subscription" but entirely pay-as-you-go. For instance, Azure's equivalent might be: you pay for an Azure OpenAI instance (which might require a minimum fee in some cases), each request, plus the storage for your data, etc. It's feasible to approximate: e.g., an Azure Cognitive Search service (now Azure AI Search) might be $200/month for the knowledge base, plus each AI call. In AWS, using Bedrock plus a Lambda-based orchestration might incur a mix of small charges (fractions of a cent per request), which can be cheaper per call at large scale, but you trade off development cost and flexibility.

Important: Dify's value isn't just the dollar cost; it's also the transparency and predictability. The plans clearly cap what you get, which is good for CFOs who hate surprise overruns. And if you self-host, you have full control; cost then is whatever you allocate (you could run Dify on an OVHCloud server you already pay for, for instance, adding effectively no new cost).

In conclusion, Dify's pricing is straightforward and relatively low for mid-market budgets, especially considering it can replace a lot of custom development. The free tier lets you try before committing. The monthly plans in the tens or low hundreds of dollars are small investments for potentially significant gains in productivity or new capabilities (e.g., faster customer support responses, automated content generation, etc.). Moreover, the option to self-host for free acts as a safety net: if you ever felt the SaaS fees or limits weren't acceptable, you could bring it in-house (which mitigates vendor lock-in concerns from a financial perspective).

(As an actionable tip: CTOs and CFOs might collaborate to start with a low-tier subscription to experiment, and simultaneously budget for the infrastructure in case they decide to self-host long term. That way you can compare the convenience of SaaS vs. the control of self-host in real dollars.)
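Comparing "the convenience of SaaS vs. the control of self-host in real dollars" mostly comes down to two formulas. The sketch below leaves out model API fees, on the assumption that you would use the same model either way, and every input in the example (an $80/month VM, 4 ops hours a month, a $50 loaded hourly rate, roughly a $100k salary over ~2,000 working hours) is a hypothetical placeholder.

```python
def annual_saas_cost(monthly_plan_fee: float) -> float:
    """Twelve months of a Dify Cloud subscription (model API fees excluded)."""
    return 12 * monthly_plan_fee


def annual_self_host_cost(monthly_infra: float, ops_hours_per_month: float,
                          loaded_hourly_rate: float) -> float:
    """Twelve months of hosting plus the staff time spent on upgrades and upkeep."""
    return 12 * (monthly_infra + ops_hours_per_month * loaded_hourly_rate)


# Hypothetical inputs: Team plan vs. an $80/month VM and 4 ops hours a month at $50/hour.
saas = annual_saas_cost(159)
self_host = annual_self_host_cost(80, 4, 50)
print(saas, self_host)  # 1908 3360
```

Under these particular assumptions the subscription is cheaper; on a server you already pay for, or with fewer ops hours, the balance can flip, which is exactly what a side-by-side trial should measure.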

How Dify Fits into Mid-Market Tech Stacks (Integration & Deployment)

Introducing a new platform like Dify.ai into your organization raises practical questions: Will it play nicely with our existing systems and dev processes? Does it align with the technology stack we've invested in? Let's address those concerns by examining how Dify can integrate with common mid-market B2B tech stacks - including considerations for Kubernetes, Docker, CI/CD pipelines, databases, and cloud/on-prem environments.

Deployment in Containerized Environments (Kubernetes, Docker, Rancher): Dify was built with modern cloud-native practices in mind. The open-source version is distributed as a set of Docker containers (API server, frontend, workers, database, vector store, etc.), which means you can deploy it on any Docker-compatible infrastructure. For the many mid-market firms using Docker and Kubernetes (often managed via Rancher or similar), Dify can be just another application in your cluster. One-click Kubernetes installs already exist in some environments (for example, Alibaba Cloud's "ack-dify" package for its Kubernetes service), and community-maintained Helm charts are available elsewhere. This indicates that running Dify on K8s is straightforward; those recommendations call for around 8 CPU cores and 16 GB RAM for a comfortable setup, which is modest for an organization-level tool.
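As an illustration of "just another application in your cluster," the sketch below generates a Kubernetes Deployment for Dify's API container alone, emitted as JSON (which `kubectl apply -f -` accepts). It is not a full install: the web, worker, database, Redis and vector-store services are omitted, and a Helm chart would normally handle all of them. The image name `langgenius/dify-api` and port 5001 match Dify's Docker Compose setup as we understand it; the tag, replica count, resource figures and the Secret name `dify-env` are hypothetical.

```python
import json

# Image name as used in Dify's Docker Compose files; pin a tag you have tested.
DIFY_API_IMAGE = "langgenius/dify-api"


def dify_api_deployment(image_tag: str, replicas: int = 2) -> dict:
    """Build a Kubernetes Deployment manifest for Dify's API container only."""
    labels = {"app": "dify-api"}
    container = {
        "name": "api",
        "image": f"{DIFY_API_IMAGE}:{image_tag}",
        "ports": [{"containerPort": 5001}],  # API port in Dify's Compose setup
        # Database, Redis, vector-store and secret settings come from a Secret you
        # create (hypothetical name "dify-env") mirroring Dify's .env variables.
        "envFrom": [{"secretRef": {"name": "dify-env"}}],
        # Hypothetical sizing; tune to your load and cluster quotas.
        "resources": {
            "requests": {"cpu": "1", "memory": "2Gi"},
            "limits": {"cpu": "2", "memory": "4Gi"},
        },
    }
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": "dify-api", "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {"metadata": {"labels": labels}, "spec": {"containers": [container]}},
        },
    }


if __name__ == "__main__":
    # Usage: python dify_api.py | kubectl apply -f -
    print(json.dumps(dify_api_deployment("REPLACE_WITH_TESTED_TAG"), indent=2))
```

Generating manifests in code like this is one option; teams on Rancher may prefer to keep equivalent YAML in Git and let their existing pipeline apply it.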

If your infrastructure includes Harvester HCI and Rancher (for private cloud virtualization and K8s management), you could deploy Dify on your on-prem cluster to keep everything internal. Rancher would treat Dify's services like any other microservices. Baytech Consulting, as a firm proficient in Rancher/Kubernetes, often deploys custom solutions on such stacks; a platform like Dify would be well within scope to integrate, ensuring it adheres to your network policies and resource quotas. Because Dify uses containers, it's also compatible with Docker Swarm or even plain Docker Compose on a single VM if you prefer simplicity (e.g., you could run it on a dedicated VM or an OVHCloud Public Cloud instance using Compose).

Integration with CI/CD and DevOps (Azure DevOps, Git, etc.): If your dev teams use Azure DevOps (on-prem Server or cloud) or GitLab/Jenkins pipelines, you might wonder how Dify fits in. Since Dify is an application platform rather than a library, you typically don't "build Dify into your codebase"; instead, you deploy it as a service and then use its APIs. Your CI/CD therefore comes into play for deploying Dify updates (pulling new Docker images and applying them) or managing configuration as code. You can store Dify's configuration (environment variables, etc.) in your configuration management system; for example, if you manage infrastructure with Terraform or Ansible, you could include Dify's deployment there. Dify doesn't require special DevOps tools beyond what you have: Azure DevOps can orchestrate deployments, and you can host the Dify containers in your Azure environment if desired. If you're a Microsoft-centric shop, you might run Dify on Azure Kubernetes Service and use Azure DevOps pipelines to auto-deploy updates.

The key integration point with development workflows is Dify's API. Your development teams (perhaps using VS Code or Visual Studio 2022, which Baytech's engineers often use) can write code in existing apps that calls Dify's REST endpoints for AI functionality. For instance, your existing web app could send a request to Dify's API whenever a user asks a question, and display the answer Dify returns. This decoupling is useful: your developers don't have to embed prompt engineering logic in the main app code, because that logic lives in Dify, where it is easier to update in isolation.
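The API-first integration described above needs nothing beyond Python's standard library. The request shape follows Dify's chat-app API as we understand it (`POST /v1/chat-messages` with a Bearer app key and `query`, `user` and `response_mode` fields); verify the field names against the API reference shown inside your own Dify app. The self-hosted base URL, API key and user ID are placeholders (Dify Cloud uses `https://api.dify.ai/v1` instead).

```python
import json
import urllib.request

# Hypothetical address of a self-hosted Dify instance.
DIFY_BASE_URL = "https://dify.example.internal/v1"


def build_chat_request(api_key: str, question: str, user_id: str,
                       conversation_id: str = "") -> urllib.request.Request:
    """Prepare a POST to a chat app's /chat-messages endpoint in blocking mode."""
    payload = {
        "inputs": {},                        # values for any input variables the app defines
        "query": question,                   # the end user's message
        "response_mode": "blocking",         # wait for the full answer instead of streaming
        "conversation_id": conversation_id,  # empty string starts a new conversation
        "user": user_id,                     # your own identifier for the end user
    }
    return urllib.request.Request(
        f"{DIFY_BASE_URL}/chat-messages",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",  # the app's API key from Dify
            "Content-Type": "application/json",
        },
        method="POST",
    )


def ask_dify(api_key: str, question: str, user_id: str) -> str:
    """Send the question and return the answer text from the JSON response."""
    request = build_chat_request(api_key, question, user_id)
    with urllib.request.urlopen(request, timeout=60) as response:
        body = json.loads(response.read().decode("utf-8"))
    return body["answer"]
```

Because the prompt, model choice and knowledge base live in the Dify app, changing any of them does not require redeploying the calling application.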

Databases and Data Sources (PostgreSQL, SQL Server, etc.): Mid-market firms commonly use relational databases like Microsoft SQL Server or PostgreSQL to store business data. How would Dify interact with that data? Out of the box, Dify doesn't connect directly to SQL databases (there isn't a built-in "SQL query" node in the workflow as of this writing). Instead, the pattern is to use knowledge documents or custom tools. For example, if you want Dify to leverage data from a database, you could export that data to a document (CSV, PDF, etc.) or expose it via an API endpoint, then let Dify ingest or call it. Dify's "Knowledge" feature can take text or files and index them, so one approach is generating a periodic report from your database and feeding it in. This is obviously not real-time, so for dynamic data another approach is to have a Dify workflow query the database through an API. Since Dify's code node is sandboxed (no direct DB drivers by default), the recommended way is to create a simple API microservice on your end that Dify can call with an HTTP request to fetch data. Dify can call external APIs through the workflow's HTTP Request node, or you can register the API as a custom tool (Dify can import an OpenAPI schema for this). While this might sound like a limitation, it's actually a safer architecture: the AI never executes arbitrary SQL (which could be a security risk). Instead, your IT controls exactly what data is exposed via the API, and the AI just uses that. For example, a real estate firm might have a property listings API; Dify's agent could call GET /api/listings?location=LA when a user asks "What properties do we have in Los Angeles under $500k?". You can implement and secure that API however you like (and Baytech's custom development expertise could help build such APIs if needed, ensuring enterprise-grade security around it).
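The listings example can be sketched as a tiny standard-library microservice. Everything here is hypothetical: the `listings` table, the `max_price` parameter (added so the "$500k" question can be answered), the `X-API-Key` shared-secret header, and the in-memory SQLite database standing in for your real one. The point it illustrates is the one above: the only data Dify can reach is what this read-only, parameterized query returns.

```python
import json
import os
import sqlite3
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Optional
from urllib.parse import parse_qs, urlparse

# Hypothetical shared secret that Dify's HTTP Request node would send as a header.
API_KEY = os.environ.get("LISTINGS_API_KEY", "change-me")


def query_listings(conn: sqlite3.Connection, location: str,
                   max_price: Optional[int] = None) -> list:
    """Read-only, parameterized query: the only data this endpoint can ever return."""
    sql = "SELECT id, address, price FROM listings WHERE location = ?"
    params: list = [location]
    if max_price is not None:
        sql += " AND price <= ?"
        params.append(max_price)
    rows = conn.execute(sql + " ORDER BY price", params).fetchall()
    return [{"id": r[0], "address": r[1], "price": r[2]} for r in rows]


def make_handler(conn: sqlite3.Connection):
    class ListingsHandler(BaseHTTPRequestHandler):
        def do_GET(self):
            url = urlparse(self.path)
            if url.path != "/api/listings":
                self.send_error(404)
                return
            if self.headers.get("X-API-Key") != API_KEY:
                self.send_error(401)
                return
            qs = parse_qs(url.query)
            location = qs.get("location", [""])[0]
            raw_max = qs.get("max_price", [""])[0]
            try:
                max_price = int(raw_max) if raw_max else None
            except ValueError:
                self.send_error(400, "max_price must be a whole number")
                return
            body = json.dumps(query_listings(conn, location, max_price)).encode("utf-8")
            self.send_response(200)
            self.send_header("Content-Type", "application/json")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)

    return ListingsHandler


def demo_db() -> sqlite3.Connection:
    """In-memory stand-in for your real listings database (sample rows are made up)."""
    conn = sqlite3.connect(":memory:", check_same_thread=False)
    conn.execute("CREATE TABLE listings (id INTEGER PRIMARY KEY, location TEXT, "
                 "address TEXT, price INTEGER)")
    conn.executemany(
        "INSERT INTO listings (location, address, price) VALUES (?, ?, ?)",
        [("LA", "12 Palm Ave", 450000), ("LA", "99 Sunset Blvd", 1200000),
         ("SF", "5 Bay St", 800000)],
    )
    return conn


def serve(port: int = 8080) -> None:
    """Run the demo service on localhost until interrupted."""
    HTTPServer(("127.0.0.1", port), make_handler(demo_db())).serve_forever()
```

After `serve()`, a Dify HTTP Request node could call `GET http://<host>:8080/api/listings?location=LA&max_price=500000` with the `X-API-Key` header. In production the query would run under a read-only database account, which keeps the guarantee even if the service code changes.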

It's worth noting Dify's internal architecture uses PostgreSQL (for application data) and a vector DB (Weaviate by default, or can be configured). This is mostly under the hood, but if your IT requires using a specific DB (say, your corporate PostgreSQL or Azure SQL rather than the containerized one), that could be possible with configuration, though typically the easiest path is to allow Dify to run its own instance. The storage sizes (5GB to 20GB in cloud plans, or whatever you allocate self-hosted) are generally small unless you load huge datasets. So from a DBA perspective, Dify won't put load on your existing transactional databases; instead, it's a separate system that might occasionally interface via API.

Cloud Providers and Hybrid Deployments (OVHCloud, AWS, Azure, On-Prem): Dify is cloud-agnostic. You can deploy it on OVHCloud servers (which some mid-market European firms prefer for data sovereignty), on AWS EC2 or Azure VMs, or on-premise hardware. Baytech's infrastructure, for instance, includes OVHCloud and even self-hosted data centers with pfSense firewalls - Dify can be run in such environments since you just need to provide the computing environment for the containers. If deploying on-prem, you'd typically install Docker on a VM (maybe within your VMware or Harvester virtualization) and follow Dify's self-host guide. There may be considerations like allowing egress internet access if you want Dify to call external model APIs (your security team might need to whitelist OpenAI's API, etc. through pfSense). Alternatively, if using local models only (say, running an open-source model on the same server), you might not need any external internet - a fully offline setup is possible, which is a big plus for strict compliance.

Microsoft 365 and Google Drive Integration: Many companies keep much of their knowledge in documents on SharePoint, OneDrive, or Google Drive. While Dify doesn't natively offer a one-click integration for those (as of this writing), you can get their content into Dify's knowledge base fairly easily by exporting files. Connectors to such services via their APIs may appear on Dify's roadmap. Until then, a script that regularly pulls documents from a SharePoint folder and feeds them into Dify would do the trick. This is an area where a custom solution can bridge the gap, something Baytech could assist with by writing a connector that uses the Microsoft Graph API to fetch documents and then uses Dify's API to upload them as knowledge documents. So integration is achievable; it may just require a bit of custom glue code at the moment.
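A minimal sketch of that glue code follows, standard library only. It assumes you already have a Microsoft Graph access token (for example via MSAL; not shown), lists a folder with Graph's `root:/path:/children` addressing, downloads plain-text files through their pre-authenticated `@microsoft.graph.downloadUrl`, and posts each one to a Dify knowledge base with the dataset "create document by text" endpoint. The endpoint path and body fields follow Dify's Knowledge API docs as we understand them and should be verified against your version; the base URL, IDs and keys are placeholders. PDFs and Office files would need Dify's file-upload endpoint instead.

```python
import json
import urllib.parse
import urllib.request

GRAPH_BASE = "https://graph.microsoft.com/v1.0"
DIFY_BASE = "https://dify.example.internal/v1"  # hypothetical self-hosted address
TEXT_SUFFIXES = (".txt", ".md")  # plain text only in this sketch


def graph_children_url(drive_id: str, folder_path: str) -> str:
    """URL that lists the items in a SharePoint/OneDrive folder, addressed by path."""
    return f"{GRAPH_BASE}/drives/{drive_id}/root:/{urllib.parse.quote(folder_path)}:/children"


def select_text_files(children: dict) -> list[tuple[str, str]]:
    """Pick (name, download URL) pairs for plain-text files in a Graph listing."""
    picked = []
    for item in children.get("value", []):
        url = item.get("@microsoft.graph.downloadUrl")
        if "file" in item and url and item["name"].lower().endswith(TEXT_SUFFIXES):
            picked.append((item["name"], url))
    return picked


def dify_text_document_request(dataset_id: str, dataset_api_key: str,
                               name: str, text: str) -> urllib.request.Request:
    """Build a request that adds one text document to a Dify knowledge base."""
    payload = {
        "name": name,
        "text": text,
        "indexing_technique": "high_quality",
        "process_rule": {"mode": "automatic"},
    }
    return urllib.request.Request(
        f"{DIFY_BASE}/datasets/{dataset_id}/document/create-by-text",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": f"Bearer {dataset_api_key}",
                 "Content-Type": "application/json"},
        method="POST",
    )


def sync_folder(drive_id: str, folder_path: str, graph_token: str,
                dataset_id: str, dataset_api_key: str) -> int:
    """Copy every plain-text file in the folder into the Dify knowledge base."""
    listing = urllib.request.Request(graph_children_url(drive_id, folder_path),
                                     headers={"Authorization": f"Bearer {graph_token}"})
    with urllib.request.urlopen(listing, timeout=30) as resp:
        children = json.loads(resp.read())
    uploaded = 0
    for name, download_url in select_text_files(children):
        # The download URL is pre-authenticated, so no bearer token is attached here.
        with urllib.request.urlopen(download_url, timeout=60) as resp:
            text = resp.read().decode("utf-8")
        request = dify_text_document_request(dataset_id, dataset_api_key, name, text)
        with urllib.request.urlopen(request, timeout=60):
            uploaded += 1
    return uploaded
```

Run on a schedule as written, this would upload duplicates; a production connector would need to remember which files it has already sent and update changed ones instead.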

Enterprise Security and Access Control: Dify supports basic team/user management and presumably can tie into SSO in enterprise plans. If you self-host, you could put Dify behind your SSO (for example, exposing it only on the internal network so that only authenticated VPN users can reach it, or placing it behind an SSO proxy). The pfSense firewall in your network can restrict Dify's traffic in and out (for instance, you could allow it to call only certain external endpoints if needed). Since Dify will be handling possibly sensitive prompts or data, treat it as you would any new application: do a security review, ensure encryption in transit (the bundled Nginx container can serve HTTPS once you enable it and supply certificates, or you can terminate TLS at your own load balancer), and encryption at rest if needed (you might set up disk encryption on the host running it). Dify being open-source means your security team can even inspect the code for known vulnerabilities or backdoors, a level of transparency not possible with closed SaaS.

Monitoring and Maintenance: If you integrate Dify into your stack, you'll monitor it like any other service, e.g., using your standard monitoring tools (Datadog, Zabbix, Azure Monitor, etc.) to check that the containers are running and responsive. Dify has its own internal monitoring tab for AI usage, but you'll likely integrate logs or metrics (perhaps from the Nginx access logs or API logs) into your central log system for auditing. Because Dify will be a critical piece if widely adopted, ensure your IT has a backup/HA strategy: you could run it in a redundant setup (e.g., multiple replicas in K8s for the stateless parts, a managed Postgres for state, etc.). These are all feasible given that it follows standard software patterns.
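If your monitoring tool can run custom checks, a probe like the sketch below turns "running and responsive" into an explicit ok/degraded/down signal. The target URLs are hypothetical: point them at Dify's web UI and at an API health route (recent versions of the API container appear to serve `/health`, but confirm on your version). The 2-second slowness threshold is likewise an assumption to tune.

```python
import time
import urllib.error
import urllib.request
from typing import Optional

# Hypothetical endpoints for a self-hosted instance; replace with your own.
TARGETS = {
    "dify-web": "https://dify.example.internal/",
    "dify-api": "http://dify-api.internal:5001/health",
}


def classify_probe(status: Optional[int], latency_s: float,
                   slow_threshold_s: float = 2.0) -> str:
    """Map one probe result to an alert level your monitoring tool can act on."""
    if status is None or status >= 500:
        return "down"       # no response at all, or a server-side error
    if status >= 400 or latency_s > slow_threshold_s:
        return "degraded"   # reachable but misrouted or unauthorized, or too slow
    return "ok"


def probe(url: str, timeout_s: float = 5.0) -> str:
    """Issue one GET request and classify the outcome."""
    start = time.monotonic()
    status: Optional[int]
    try:
        with urllib.request.urlopen(url, timeout=timeout_s) as response:
            status = response.status
    except urllib.error.HTTPError as err:  # 4xx/5xx: the server still answered
        status = err.code
    except OSError:                        # DNS failure, refused connection, timeout
        status = None
    return classify_probe(status, time.monotonic() - start)


def check_all() -> dict:
    """Probe every target once, e.g. from a cron job or a monitoring agent."""
    return {name: probe(url) for name, url in TARGETS.items()}
```

Keeping the classification separate from the network call makes the alert rules easy to unit-test and to adjust without touching the probing code.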

In essence, Dify can fit quite naturally into mid-market tech ecosystems. It doesn't demand exotic technology: if you have modern DevOps with containers, it slides right in. If you have a more traditional Windows Server environment, you might run it on a Linux VM as a standalone appliance. It acts as a complementary layer: your existing systems remain in place, and Dify augments them with AI capabilities via its UIs and APIs. And if there's a gap (like connecting to a legacy system), that's where a bit of custom development can fill in, either by your internal team or with the help of a software partner.

From Baytech Consulting's perspective, we find that platforms like Dify are a great match for our philosophy of using the right tools for the job and tailoring solutions. We often help clients integrate off-the-shelf platforms (like Dify) into their stack when it provides a jump-start, and then customize around it for the perfect fit. With our expertise in technologies like Azure DevOps (for pipeline automation), Kubernetes/Docker (for deployment), and robust databases (PostgreSQL, SQL Server), we ensure that introducing a tool like Dify is smooth and aligns with enterprise-grade standards. The goal is to leverage Dify's speed while avoiding any lock-in risks by keeping the surrounding architecture flexible. If down the road you outgrow Dify's capabilities, having a well-structured integration means you could replace or augment it with a custom solution without starting from scratch.

Benefits, Limitations, and Risks of Using Dify.ai

No technology decision is complete without weighing the pros and cons honestly. Let's summarize the benefits, limitations, and risks of adopting Dify.ai for a mid-market B2B firm:

Benefits and Advantages

- Faster Time-to-Value: Dify enables rapid prototyping and development of AI features. This means you can experiment with AI in various departments (from an AI sales assistant to a support chatbot to an internal report summarizer) much faster than building custom AI solutions from scratch. Speed is not just about convenience; it's a competitive advantage. If your marketing team can deploy an AI content generator in a week, they can capitalize on trends more quickly. If operations can automate a routine process with AI, they free up staff sooner.

- Accessibility for Non-Developers: The no-code aspect allows "citizen developers" or subject matter experts to participate. For example, an HR manager could design the flow of an AI tool that answers employee questions about benefits, because they know the context best, while an IT team member oversees it. This democratization can spur bottom-up innovation, as the people closest to business problems can create AI solutions without waiting in the IT queue.

- Comprehensive Feature Set (One-Stop Shop): With Dify, you get a lot in one package: multi-model connectivity, data integration, prompt management, UI hosting, logging, etc. This reduces the need to stitch together multiple tools (which could introduce integration headaches or compatibility issues). Everything is integrated and tested to work together, which is a boon for reliability. It's also easier on the learning curve: your team masters one platform rather than five different libraries and systems.

- Lower Initial and Ongoing Cost: As discussed in pricing, the cost of using Dify is relatively low compared to hiring additional developers or subscribing to expensive enterprise AI SaaS. Even the engineering time saved on debugging or maintaining custom pipelines is a saving. Furthermore, because you can self-host without fee, you have the option to scale without escalating licensing costs (you'll just add infrastructure as needed, which often is cheaper per unit than user-based SaaS pricing).

- Open-Source and Community-Driven: The open-source nature is a big plus for reducing risk. It means transparency (you can audit the code), flexibility (you can fork or tweak if absolutely needed), and community support. There are over 180k developers in Dify's community, many of whom share best practices and plugins and answer questions. This community momentum suggests Dify is not a fly-by-night product; it's evolving with real-world feedback. For a mid-market firm, that means you're investing in something that's likely to stay relevant and improve, without being entirely at the mercy of a vendor's roadmap.

- Maintaining Control Over Data: Using Dify (especially self-hosted or even the enterprise cloud option) allows you to keep your data within your own environment or at least segregated. All prompts and responses can be logged for auditing. You can connect your proprietary databases without exposing them to third-party services directly. This is crucial in industries like finance or healthcare - you can adhere to internal compliance by running Dify on-premises, ensuring that sensitive info (like patient data, financial records) used in AI processing doesn't leave your secure network.

- Enhanced Capabilities for Mid-Market Firms: Large enterprises often have resources to build custom AI platforms (or pay for the highest-end solutions). Mid-market firms historically might struggle to implement cutting-edge tech due to resource constraints. Dify levels that playing field - it gives mid-sized companies an "out-of-the-box AI infrastructure" that's enterprise-grade (scalable, secure), without having to invest enterprise-level money. This opens opportunities to innovate and differentiate against both bigger competitors (by being nimble) and smaller ones (by offering more sophisticated tech).

Limitations and Trade-offs

- Customization Ceilings: With any low-code platform, there's a ceiling to how much you can customize within its framework. Dify provides many building blocks, but if you have a highly unique requirement, you might hit a wall. For instance, if you want the AI to do something very specialized that isn't supported (say, a complex multi-turn negotiation strategy in a chatbot), you may find the visual interface limiting. While Dify allows custom code via sandbox nodes or calls to external APIs, those are workarounds and not as straightforward as writing your own logic in a programming language. Essentially, Dify trades off some flexibility for simplicity. Most common use cases will fit, but truly novel or edge-case AI behaviors might not. In such cases, you might need to engage developers to extend Dify (e.g., write a custom plugin in Python) or bypass it for that portion of the problem.

- Dependency on Platform Updates: When you adopt Dify, you are somewhat tied to its update cycle for new features and fixes. The good news is that the project is active and open-source. But consider this scenario: if you urgently need a feature (like vector search with metadata filters or a direct SQL node) that isn't available yet, your options are to wait for the maintainers to build it, implement a hacky workaround, or contribute it to the open-source project yourself (which might not be feasible for your team). In a purely custom-built solution, by contrast, your developers would implement what you need when you need it. This is a mild form of vendor lock-in: not in the traditional sense (you can leave with your data anytime), but in the sense of depending on the tool's capabilities. Migrating away from Dify later would mean reimplementing its functionality some other way, which is not trivial if you use it heavily.

- Performance Overhead: A generalized platform may add some overhead compared with a highly optimized custom code path. Dify's own processing time is usually small next to the time the model takes to respond, but for extremely latency-sensitive applications (like a trading system making split-second decisions), an extra hop through Dify might be too slow. Most typical use cases (user-facing chatbots, internal tools) can tolerate the slight added latency of Dify's layers. It matters more if you need to squeeze out every millisecond or handle very high throughput; in that case you'd need to scale the Dify deployment accordingly (which is doable by adding more worker nodes/containers).

- Training and Cultural Adoption: While Dify is easier than pure coding, it's still a new tool that staff must learn. Business users will need training to design workflows effectively. There could be an initial productivity dip as teams experiment and work out best practices (e.g., how to write effective prompts, how to structure knowledge bases). There's also the matter of buy-in: some developers might resist low-code and prefer to code things themselves, while some business users might be skeptical of AI's outputs. Addressing this requires internal evangelism and a clear demonstration of value. In the "They Ask, You Answer" spirit, communicate internally why you're using Dify and set realistic expectations (AI output may need oversight; it augments people rather than working magic).

- Concurrent User Limits and Scaling: Depending on your use case, validate how Dify scales in realistic scenarios. The Team plan supports 50 team members building apps, but if you deploy an AI feature to thousands of customers, will Dify's backend handle the load? The open-source edition can scale with more containers, but you'll need to monitor and possibly tune it (for instance, adding workers if CPU usage is high, or ensuring the vector database has enough memory). These problems are solvable with standard techniques (load balancing, horizontal scaling), but they are your responsibility; a managed service such as Azure's might abstract that away at a higher cost. In short, when self-hosting, scaling Dify is in your hands: allocate sufficient resources for production loads and have a plan for peak times.
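To make the scaling question concrete, a back-of-envelope capacity estimate helps. The sketch below applies Little's law (average requests in flight = arrival rate × average time per request) and divides the result by how many concurrent requests one worker container can carry. The helper names, the per-worker concurrency figure, and the 30% headroom default are illustrative assumptions, not Dify sizing guidance; measure your own latency and per-container limits under load.

```python
import math


def in_flight_requests(peak_rps: float, avg_latency_s: float) -> float:
    """Little's law: average concurrent requests = arrival rate x time in system."""
    return peak_rps * avg_latency_s


def workers_needed(
    peak_rps: float,
    avg_latency_s: float,
    concurrency_per_worker: int,
    headroom: float = 0.3,
) -> int:
    """Hypothetical sizing helper: worker replicas needed at peak, with a safety margin.

    concurrency_per_worker is an assumed figure you would measure by load-testing
    one container; headroom=0.3 adds 30% spare capacity for bursts.
    """
    if concurrency_per_worker <= 0:
        raise ValueError("concurrency_per_worker must be positive")
    load = in_flight_requests(peak_rps, avg_latency_s) * (1 + headroom)
    return max(1, math.ceil(load / concurrency_per_worker))


# Example: 20 req/s at 4 s average latency, 16 concurrent requests per worker.
print(workers_needed(20, 4, 16))  # 7
```

Here 20 requests per second at an average of 4 seconds each means about 80 requests in flight; with 30% headroom and an assumed 16 concurrent requests per worker, that rounds up to 7 workers. Because long model responses raise average latency, and therefore the worker count, it's worth measuring end-to-end latency rather than Dify's overhead alone.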

Risks and Mitigation

- Vendor/Community Risk: Dify is relatively new (its blog and version history point to active development since 2023). There's a risk that the project could slow down or that the company behind it could pivot. However, because it's open-source, the codebase would remain available. The risk is more about support and evolution: if key maintainers stopped, would the community carry it forward? Right now momentum is strong, but as an executive you should keep an eye on the project's health (GitHub activity, funding). Mitigation: have an exit strategy. Design your usage so that, if needed, you could either maintain a fork internally or transition to another platform (perhaps LangChain or an emerging competitor) without catastrophic disruption. This means avoiding over-customization and reliance on Dify-specific quirks, and keeping your AI logic as model-agnostic as possible so it can be ported.

- Data Privacy and Compliance: Using AI on company data always raises privacy questions. If you use external models (like OpenAI via Dify), you are sending data to those third parties. A misconfigured Dify instance (e.g., one using a non-approved model or logging sensitive data insecurely) could violate policies. Mitigation: enforce that Dify uses only approved model APIs (for instance, OpenAI's enterprise offerings or Azure OpenAI, under terms that limit how your data is retained and used). Dify lets you choose model providers, so pick ones that sign a data processing agreement your legal team accepts. Alternatively, use open-source models hosted internally for very sensitive data. Also make use of Dify's logs, and then protect those logs. If an AI app will handle personally identifiable information, involve your compliance officer to conduct a proper DPIA (data protection impact assessment) of how Dify processes and stores information. Also consider users entering something they shouldn't (like a customer typing a credit card number into a chatbot); you might need measures to detect and mask such data (some AI platforms support regex-based redaction of certain patterns before processing). Dify does not currently advertise built-in PII scrubbing, so that may be a process you need to add.

- Quality and Accuracy Risks: AI models sometimes give wrong or biased answers. Dify doesn't inherently solve that; it provides tools (like retrieval augmentation, prompt control, and annotations for feedback) to help manage it, but an AI app can still produce incorrect output. In mid-market firms, where one error can sour customer trust (imagine a healthcare bot giving the wrong advice), this risk must be mitigated with human oversight, thorough testing, and gradual rollout. Dify's monitoring features (logs of all answers) help because you can review what it's telling people. You might deploy internally first, build confidence, then go external. You can also configure "guardrails" in prompts (explicit instructions telling the AI not to do certain things), but recognize that they are not foolproof. Essentially, treat AI outputs as you would a new employee's work: review them until they prove reliable. Dify makes that review easier by centralizing the interactions.

- Vendor Lock-in (Soft Lock-in): We touched on this earlier, but to be explicit: if your entire workflow and prompt logic lives in Dify and one day you decide to move away (say a new platform XYZ becomes the rage, or you want to fully custom-build), you will have to replicate those workflows elsewhere. There is no cross-platform export standard for "AI workflows" yet (though some projects are exploring open formats); Dify can export an app as a YAML DSL file, but that format is specific to Dify. This is similar to how moving from one CRM to another requires data and process migration. Mitigation: document your critical AI processes outside Dify as well (which prompts you use, which knowledge sources, etc.), so that you have the specs to rebuild if needed. Also keep your training data and knowledge base content in your own backups (Dify stores knowledge documents, but you should still hold the originals). Because Dify is open-source, you'll never lose access to the software, but you may choose to switch for other reasons, so being prepared is wise.

- Over-Reliance Risk: There's a subtle organizational risk: if teams grow used to Dify "doing magic," they might lose some internal capability to solve problems directly or to code solutions. It's the classic risk of any high-level tool: people start treating it as a black box without understanding how it works. That becomes a problem when something goes wrong and no one knows how to fix or adjust it, because the relevant skills (prompt engineering as well as algorithmic thinking) have atrophied or were never built. Mitigation is cross-training: ensure that some core team members deeply understand what Dify is doing under the hood (how LLMs work, how prompts function). Encourage a culture where Dify is a tool, not an oracle; employees should feel comfortable questioning its output and making improvements. Keeping critical thinking sharp reduces the chance of a "garbage in, gospel out" mentality.
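Because Dify does not advertise built-in PII scrubbing, one option raised in the data-privacy point is a small redaction step in your own code before user input reaches Dify or any log. The sketch below is a minimal illustration, not a compliance control: it uses regular expressions to mask likely payment-card numbers (13 to 19 digits that pass the Luhn checksum) and email addresses. The patterns and the `redact` helper are hypothetical; real deployments typically need broader coverage (names, phone numbers, national IDs) and review by your compliance team.

```python
import re

# Candidate card numbers: 13-19 digits, optionally separated by single spaces or hyphens.
CARD_CANDIDATE = re.compile(r"\b\d(?:[ -]?\d){12,18}\b")
# Simple email pattern; deliberately loose, for illustration only.
EMAIL = re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b")


def luhn_valid(digits: str) -> bool:
    """Return True if the digit string passes the Luhn checksum used by payment cards."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def redact(text: str) -> str:
    """Mask likely card numbers and email addresses before text reaches a model or log."""

    def mask_card(m: re.Match) -> str:
        digits = re.sub(r"[ -]", "", m.group(0))
        # Only mask digit runs that pass Luhn, so order numbers and similar IDs survive.
        return "[REDACTED CARD]" if luhn_valid(digits) else m.group(0)

    text = CARD_CANDIDATE.sub(mask_card, text)
    return EMAIL.sub("[REDACTED EMAIL]", text)


print(redact("My card is 4111 1111 1111 1111, email jane.doe@example.com"))
# My card is [REDACTED CARD], email [REDACTED EMAIL]
```

A digit string that fails the Luhn check, such as "Order 1234 5678 9012 3456", is left untouched. Checking the checksum rather than masking every long number is a trade-off: fewer false positives, at the cost of missing mistyped card numbers.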

After assessing these, one might conclude that the benefits of Dify for mid-market firms outweigh the drawbacks, provided you implement it thoughtfully and with proper governance. The risks are manageable with strategies in place, and they are similar to the risks of adopting any new tech, requiring due diligence and clear policies. The limitations are mostly in edge cases or extreme customization scenarios; for a large class of common business AI uses, Dify's capabilities are more than sufficient. The advantages (speed, cost savings, empowerment, and maintaining control) align well with the needs of resource-conscious, agile mid-market organizations.

Actionable Insights and Recommendations for Mid-Market Executives

Adopting a platform like Dify.ai is not just a technical decision, but a strategic one. Here are actionable insights tailored to key leadership roles in a mid-market B2B firm, to help you move forward effectively:

- For CTOs / Technology Leaders: Start with a pilot to validate Dify's fit. Identify one or two high-impact, contained use cases (e.g., an internal knowledge assistant or a customer support chatbot for a specific product line) and implement them using Dify. This will help your team gain expertise and uncover integration challenges on a small scale. Evaluate the pilot on metrics like development time saved, user satisfaction, and how well it integrated with your stack (did it deploy smoothly on your Kubernetes cluster? Did it work with your DevOps pipeline?). Because Dify is open-source, involve your security and compliance team early: let them review the architecture (source code, data flow diagrams) to address concerns about data handling. If the pilot succeeds, create a roadmap for broader adoption: where else can similar AI assistants or automations provide value? As CTO, also plan for scaling by making sure the infrastructure team is ready to manage the Dify deployment (monitoring, scaling, backup). In parallel, consider a skills development plan: you might send some of your engineers or citizen developers to prompt engineering training or Dify community webinars to build in-house proficiency.

- For CFOs / Finance Executives: Scrutinize the cost-benefit analysis, but account for both tangible and intangible benefits. Dify's subscription or self-hosting cost will likely be small relative to your overall budget; the more significant question is what ROI the AI applications deliver. Work with functional heads to estimate time saved or revenue opportunities. For instance, if an AI sales tool can handle lead-qualification chats, does that free your sales reps to close more deals (leading to an increase of X in conversion)? Or if an AI system handles 50% of Level-1 IT support queries, how much support staff time is saved, and what is that worth in dollars? Those efficiencies and improvements are the real ROI. Also budget for model usage costs: get a forecast from IT of expected API call volumes, and thus monthly costs from OpenAI or other providers, so that you're not caught off guard. A tip: leverage any cloud credits or vendor agreements you have. If you have an Azure enterprise agreement, see whether using Azure OpenAI via Dify makes sense (costs might roll into your existing contract). If you have committed spend on AWS, perhaps run Dify on AWS and call models there. In short, shape the deployment to fit your financial strategy. Finally, consider the cost of inaction: if competitors implement AI and you don't, what's the potential loss? That strategic downside often dwarfs the small investment in platforms like Dify.

- For Heads of Sales and Marketing: Think of Dify as an enabler that boosts customer engagement and personalizes experiences at scale. Brainstorm with your teams about repetitive or data-intensive tasks that slow them down. In sales, for example, crafting initial outreach emails, generating custom pitch decks, and answering RFP questions could all be accelerated with AI. Using Dify, you could build a "sales assistant" app that generates a first draft of a proposal or suggests responses to common objections by pulling from your knowledge base of past proposals. Try it in one segment (perhaps an AI that helps inside sales write follow-up emails for finance-industry clients, using approved messaging) and measure whether it improves response rates or reduces time spent per lead. For marketing, consider an AI content generator that produces blog outlines and social media copy, or even tailors website content to different visitor personas in real time. Dify can incorporate your brand's tone and factual information (because you can feed it your marketing collateral as a knowledge base), so the output stays on-brand. Important: keep a human in the loop for quality control. Marketing directors should set guidelines for where AI content can be used without review and where it must be reviewed; as trust in the AI's output grows, you might increase its autonomy. Also use Dify's analytics to see what customers are asking the AI (via chatbots, etc.), which can inform your content strategy or sales training (e.g., if many prospects ask about a feature, make sure sales collateral addresses it clearly). Sales and Marketing should collaborate with IT on these tools: content from marketing plus customer knowledge from sales can be combined in a Dify app that benefits both.
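The CFO recommendation to forecast model usage costs comes down to simple arithmetic: monthly requests × tokens per request × price per token, summed over input and output tokens. The sketch below encodes that calculation. The `UsageForecast` fields, request volumes, token counts, and per-million-token prices are illustrative placeholders, not any provider's actual rates; substitute figures from IT's forecast and your vendor's current price sheet.

```python
from dataclasses import dataclass


@dataclass
class UsageForecast:
    requests_per_day: int
    avg_input_tokens: int      # prompt + retrieved context per request
    avg_output_tokens: int     # model reply per request
    input_price_per_m: float   # placeholder: USD per 1M input tokens
    output_price_per_m: float  # placeholder: USD per 1M output tokens


def monthly_cost(f: UsageForecast, days: int = 30) -> float:
    """Estimated model-API spend in USD over `days` of traffic, rounded to cents."""
    requests = f.requests_per_day * days
    input_cost = requests * f.avg_input_tokens * f.input_price_per_m / 1_000_000
    output_cost = requests * f.avg_output_tokens * f.output_price_per_m / 1_000_000
    return round(input_cost + output_cost, 2)


forecast = UsageForecast(
    requests_per_day=2_000,
    avg_input_tokens=1_500,
    avg_output_tokens=300,
    input_price_per_m=2.0,   # placeholder price
    output_price_per_m=8.0,  # placeholder price
)
print(monthly_cost(forecast))  # 324.0
```

With these placeholder numbers, 60,000 requests a month consume 90 million input tokens ($180) and 18 million output tokens ($144), for about $324. Re-running the function with doubled volume or longer retrieved contexts gives a quick sensitivity range for budgeting, and shows why input-heavy knowledge-base apps can cost more than their short answers suggest.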