Upskill Your Software Engineers for AI Success in 2025

October 28, 2025 / Bryan Reynolds

Is Your Team AI-Ready? A Plan for Upskilling Your Developers for the Age of AI

As a technology leader, you're at the epicenter of a seismic shift. The board, your customers, and the market at large are all asking the same question: "What's our AI strategy?" The pressure to integrate artificial intelligence isn't just a trend; it's a competitive imperative. The most immediate, seemingly logical answer is to go out and hire a team of AI experts. But this path is fraught with peril. You're entering a hyper-competitive, astronomically expensive talent market where demand wildly outstrips supply.

There is a better way. The most sustainable, cost-effective, and strategically sound path to becoming an AI-powered organization is to invest in the brilliant minds you already have. Your software engineers possess 80% of the skills required; they understand your systems, your culture, and your customers. Transforming them into capable AI and machine learning practitioners isn't just possible—it's the smartest strategic decision you can make. This report provides the business case, the data, and the curriculum to turn that strategy into a reality.

The Hard Numbers: Why "Grow" Beats "Buy" in the AI Talent War

Before we dive into a curriculum, the financial case for upskilling must be undeniable. The decision to build versus buy talent isn't just a philosophical one; it's a critical budgetary choice that impacts your bottom line, project velocity, and long-term stability. A comprehensive analysis of the Total Cost of Ownership (TCO) reveals that cultivating internal talent is not only cheaper but strategically superior.

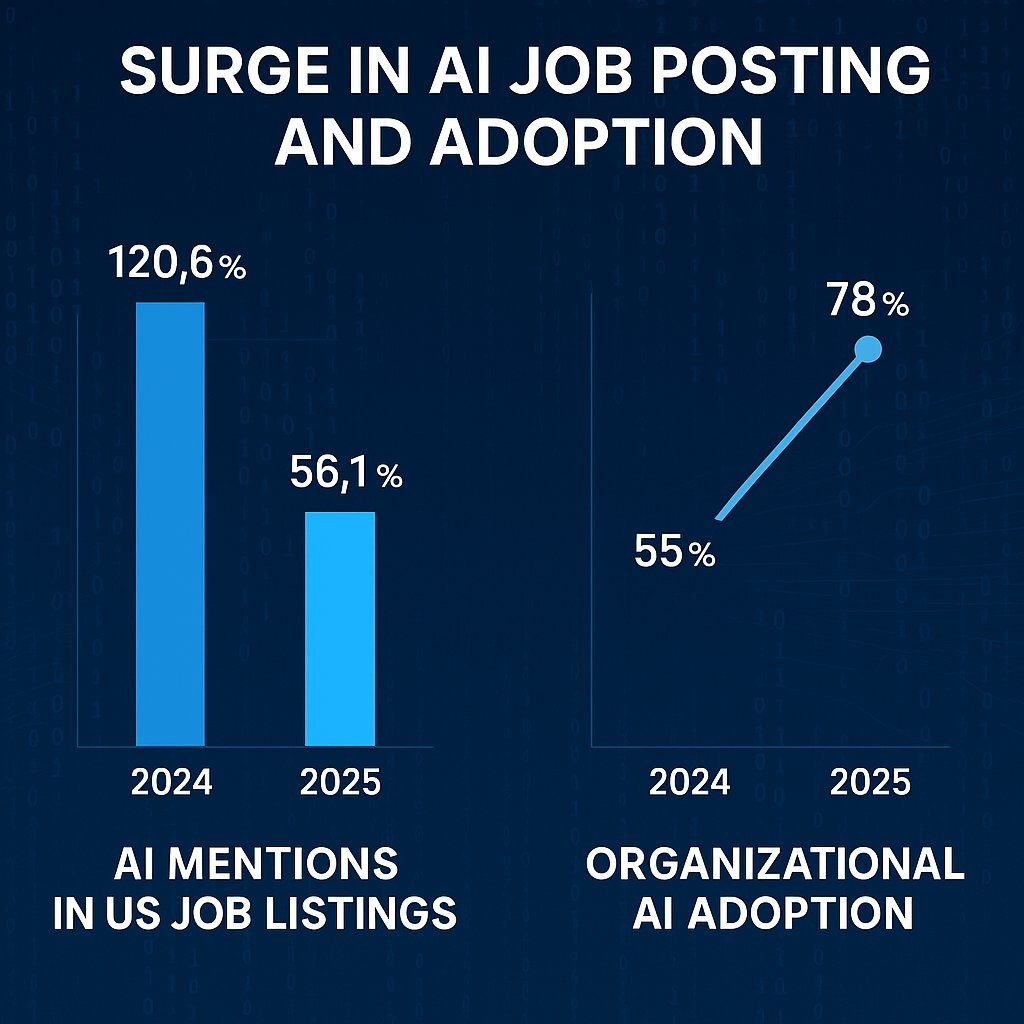

The AI demand shockwave is here. Mentions of AI in US job listings have surged, growing by 120.6% in 2024 and another 56.1% in the first part of 2025 alone. This isn't a niche tech-sector phenomenon. Across all industries, 78% of organizations reported using AI in 2024, a massive leap from 55% the previous year. This market-wide hunger for AI skills has created a formidable talent gap and a predictable, staggering price tag for those who can fill it.

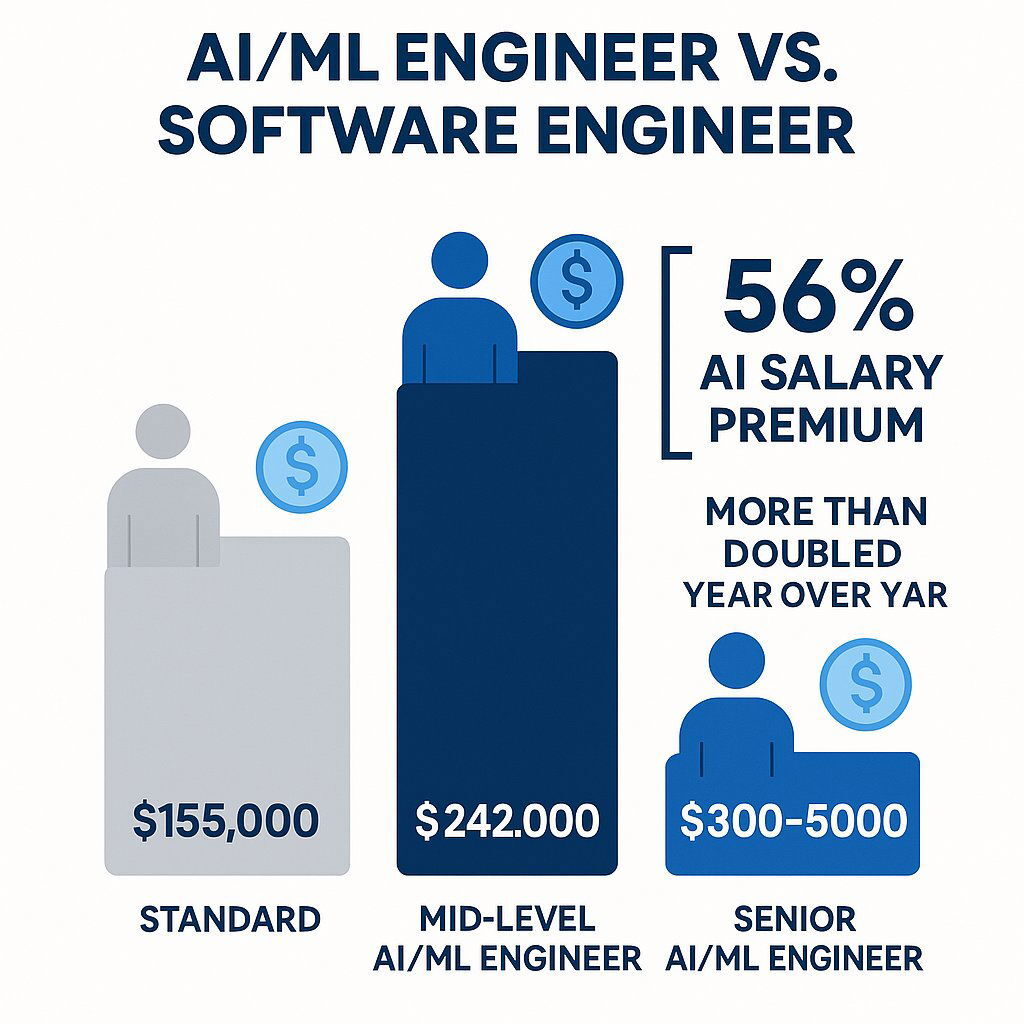

The Direct Cost: The AI Wage Premium

The intense demand directly fuels a massive wage premium for specialized AI talent. On average, workers with AI skills command a 56% higher salary than their non-AI counterparts in the exact same role—a premium that more than doubled from 25% in the prior year.

The median total compensation for a mid-level ML/AI Software Engineer now stands at $242,000. Senior roles easily command base salaries approaching or exceeding $200,000, with total compensation packages at major tech firms pushing into the $300,000 to $500,000 range. This is a stark contrast to the average salary for a senior software engineer, which hovers around $155,000.

The Hidden Costs of Hiring

The sticker shock of AI salaries is only the beginning. The true cost of hiring externally includes a cascade of indirect expenses and risks that are often overlooked in initial budget planning.

- Recruitment and Downtime: The average cost to hire a single new tech employee is $23,450. This figure accounts for recruiter fees, advertising, and, most significantly, the lost productivity from an empty seat, which takes an average of 10 weeks to fill.

- The "Bad Hire" Multiplier: The true cost of a bad hire can be three to four times their annual salary. In a field as complex as AI, a mismatched hire can do more than just fail to deliver; they can derail critical projects, disrupt team dynamics, and damage the company's technical reputation.

- The Retention Risk: Even successful hires are a gamble. External hires are 21% more likely to leave within their first year compared to internally promoted employees. For a role with a quarter-million-dollar salary, this represents a catastrophic and immediate loss on investment.

The Upskilling Alternative: A More Efficient Investment

In stark contrast, the cost of upskilling your existing team is significantly lower and far more predictable. The average cost to upskill an existing employee for a new IT role is just $15,231. In fact, more than half of organizations (57%) report spending less than $5,000 per employee on such training initiatives.

The financial argument becomes crystal clear when laid out side-by-side.

| Cost Factor | External AI Engineer Hire (Median) | Internal Developer Upskilling |
|---|---|---|
| Annual Salary | $242,000 | Base Salary + Stipend (e.g., $140,000 + $5,000) |
| Recruitment Fees (avg.) | $23,450 | $0 |
| Training & Onboarding | Included in ramp-up | $15,231 (avg.) |
| Productivity Loss (10-week vacancy) | ~$23,450 | Minimal (learning alongside current duties) |
| Time to Full Productivity | 6-9 months | 1-3 months (already knows systems/culture) |
| Attrition Risk (1st year) | High (21% more likely to leave) | Low (investment boosts retention) |
| Total Estimated 12-Month Cost | ~$288,900+ | ~$160,231 |

This analysis shifts the entire framing of the decision. Hiring is a high-cost, high-risk, transactional expense to fill a gap. Upskilling is a strategic investment in a proven asset—your employee. That investment pays compounding dividends. It leverages deep institutional knowledge, dramatically improves employee retention, and builds a more flexible, resilient workforce capable of adapting to future technological shifts. You are not just filling a role; you are building a capability that becomes a durable competitive advantage.
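The 12-month totals in the cost table are straightforward sums of its line items. The short Python sketch below reproduces them so each assumption is easy to swap out. Every dollar figure comes from the table (including its illustrative $140,000 base salary and $5,000 stipend); the `total_cost` helper and the dictionary keys are hypothetical names chosen for readability.

```python
# Reproduce the 12-month cost totals from the comparison table.
# Figures are taken from the table; the $140,000 base salary and
# $5,000 stipend are the table's illustrative values, not benchmarks.

def total_cost(line_items: dict[str, int]) -> int:
    """Sum the dollar line items for one talent strategy."""
    return sum(line_items.values())

external_hire = {
    "median_total_compensation": 242_000,
    "recruitment_fees": 23_450,
    "vacancy_productivity_loss": 23_450,  # ~10-week empty seat
}

internal_upskill = {
    "base_salary": 140_000,  # illustrative existing salary
    "stipend": 5_000,        # illustrative AI-skills stipend
    "training": 15_231,      # average cost to upskill for a new IT role
}

external_total = total_cost(external_hire)
internal_total = total_cost(internal_upskill)

print(f"External hire:    ${external_total:,}")
print(f"Internal upskill: ${internal_total:,}")
print(f"Difference:       ${external_total - internal_total:,}")
```

Replacing the illustrative values with your own salary bands, stipend, and vacancy estimates shows quickly how sensitive the gap is to each assumption.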

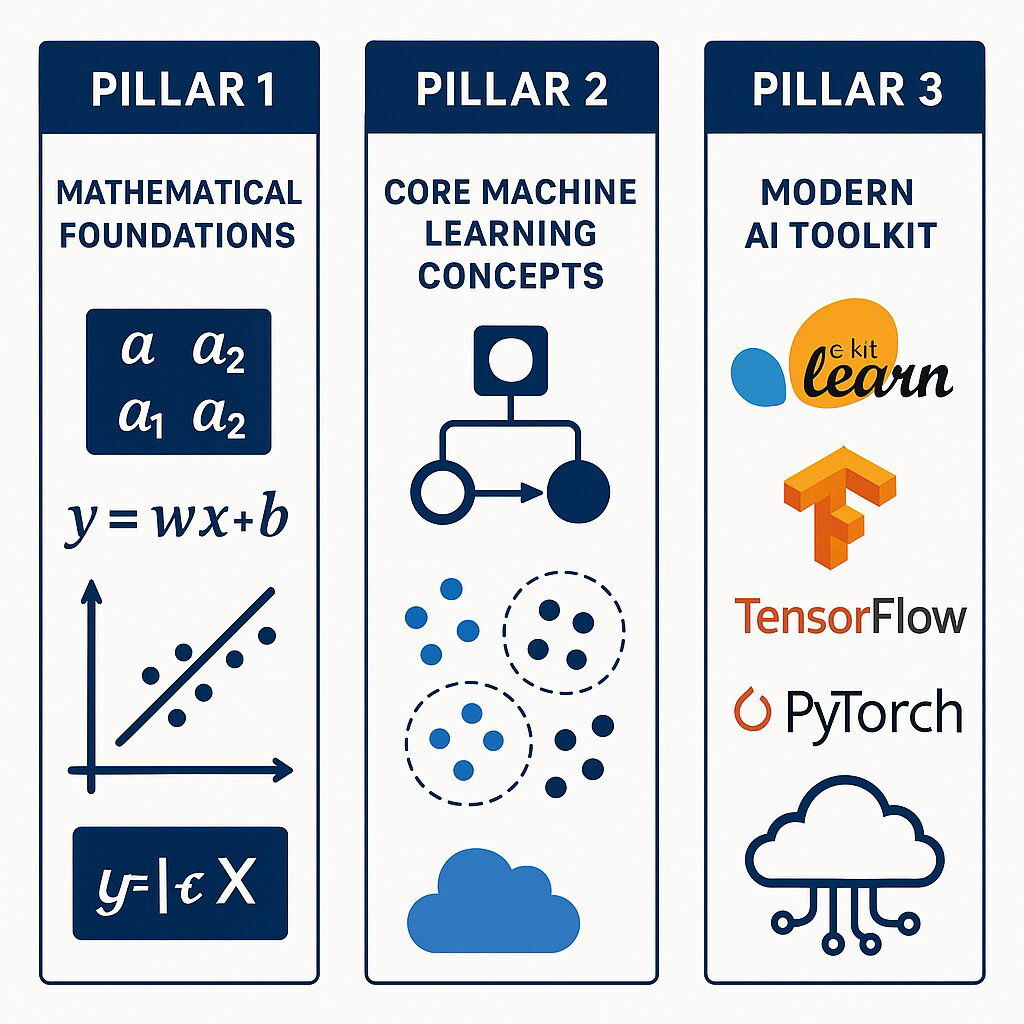

The AI Upskilling Curriculum: From Software Engineer to AI Practitioner

Transitioning a talented software engineer into a capable AI practitioner does not require a PhD in theoretical physics. It requires a pragmatic, structured curriculum focused on applied knowledge. This three-pillar approach is designed to build on the strong foundation your developers already possess, equipping them with the specific mathematical intuition, algorithmic understanding, and practical tools to build and deploy real-world AI solutions.

Pillar 1: Rebuilding the Mathematical Foundation (The "Why" Behind the Code)

The goal here is not to turn developers into pure mathematicians but to provide the essential intuition for understanding why AI algorithms work. This foundational knowledge is what separates a developer who can merely call an API from one who can effectively debug, tune, and innovate with machine learning models.

- Linear Algebra: The Language of Data. At its core, machine learning is about manipulating large arrays of numbers. Linear algebra provides the vocabulary and operations for this. A developer must be comfortable with:

- Scalars, Vectors, and Matrices: Understanding these as the fundamental data structures for representing everything from a single data point to an entire dataset or the weights of a neural network.

- Core Operations: Concepts like the dot product, matrix multiplication, and transpositions are not just abstract math; they are the high-performance operations that libraries like NumPy and TensorFlow use to process data in parallel, making modern AI computationally feasible.

- Calculus: The Engine of Optimization. The central task in "training" a model is to minimize its error. Calculus provides the mechanism to do this efficiently. The key concept is:

- Derivatives and Gradients: A developer needs to grasp that the derivative (or gradient, in multiple dimensions) of a function points in the direction of steepest ascent. In machine learning, we use this to perform Gradient Descent: the iterative process of taking small steps in the opposite direction of the gradient to reach a point of minimum error. Each step updates the parameters as w ← w − η∇L(w), where L is the error (loss) function and η is the learning rate that sets the step size. This is the fundamental algorithm that "teaches" most models, and understanding it is crucial for tuning learning rates and debugging convergence issues.

- Probability & Statistics: The Framework for Uncertainty. AI models rarely give "certain" answers; they make probabilistic predictions. A solid statistical foundation is essential for interpreting and building reliable models. Key concepts include:

- Core Principles: Understanding probability distributions (like Gaussian and Binomial), conditional probability, and Bayes' Theorem is fundamental to algorithms like Naive Bayes and for reasoning about model confidence.

- Estimation Techniques: Maximum Likelihood Estimation (MLE) provides a formal framework for choosing the model parameters under which the observed training data is most probable. It is the statistical basis on which many models are fitted.
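The three building blocks above can be seen working together in a few lines of NumPy. This is a minimal sketch on a synthetic dataset invented for illustration (y = 3x + 2 plus noise): the data lives in a matrix and the weights in a vector (linear algebra), a linear model is fitted by gradient descent on the mean squared error (calculus), and the closed-form maximum likelihood estimates of a Gaussian's mean and variance are computed from samples (statistics). The helper name `mse_gradient`, the learning rate, and the iteration count are illustrative choices, not recommendations.

```python
import numpy as np

# --- Linear algebra: data as a matrix, parameters as a vector ---
# Synthetic data (illustrative only): y = 3*x + 2 plus a little noise.
rng = np.random.default_rng(seed=0)
x = rng.uniform(0, 10, size=100)
y = 3.0 * x + 2.0 + rng.normal(0, 0.5, size=100)

# Design matrix: a column of ones (bias term) next to the feature column.
X = np.column_stack([np.ones_like(x), x])  # shape (100, 2)
w = np.zeros(2)                            # parameters: [bias, slope]

# --- Calculus: gradient descent on the mean squared error ---
def mse_gradient(X, y, w):
    """Gradient of L(w) = mean((X @ w - y)**2) with respect to w."""
    residuals = X @ w - y                  # matrix-vector product
    return 2.0 / len(y) * X.T @ residuals  # transpose, then multiply

learning_rate = 0.01
for _ in range(5_000):
    w -= learning_rate * mse_gradient(X, y, w)  # step against the gradient

print("fitted bias, slope:", w.round(2))

# --- Statistics: maximum likelihood estimates for a Gaussian ---
# For i.i.d. Gaussian samples, the MLE of the mean is the sample mean and
# the MLE of the variance is the average squared deviation (divide by n).
samples = rng.normal(loc=5.0, scale=2.0, size=10_000)
mu_hat = samples.mean()
var_hat = ((samples - mu_hat) ** 2).mean()
print(f"MLE mean ~ {mu_hat:.2f}, MLE variance ~ {var_hat:.2f}")
```

If the learning rate is set too high for this data, the loop diverges instead of converging, which is exactly the kind of behavior the calculus intuition helps developers diagnose.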

Pillar 2: Mastering Core Machine Learning Concepts (The "What" of the Problem)

With the mathematical foundation in place, the next step is to understand the primary families of machine learning algorithms, organized by the type of problem they are designed to solve. This practical, problem-first approach aligns directly with how an engineer evaluates a business requirement.

- Regression (Predicting Continuous Values): This is for any problem where the goal is to predict a number.

- Use Cases: Forecasting quarterly sales, predicting the price of a house based on its features, or estimating the energy consumption of a building.

- Key Algorithms: Start with Linear Regression as the foundational model to understand the core concepts of fitting a line to data. Then, move to more powerful ensemble methods like Gradient Boosted Trees that are workhorses in many real-world prediction tasks.

- Classification (Assigning a Category): This is for any problem where the goal is to assign a discrete label.

- Use Cases: Identifying whether an email is spam or not spam (binary classification), determining customer sentiment as positive, negative, or neutral (multiclass classification), or diagnosing a medical image.

- Key Algorithms: Logistic Regression is the classification counterpart to linear regression. From there, explore Support Vector Machines (SVMs) and tree-based methods like Decision Trees and their more robust evolution, Random Forests.

- Clustering (Finding Hidden Groups): This is the primary tool for unsupervised learning, used when you don't have pre-labeled data and want the algorithm to discover the natural structure within it.

- Use Cases: Segmenting your customer base into distinct personas based on purchasing behavior, detecting anomalous network activity, or grouping related news articles.

- Key Algorithm: K-Means is one of the most intuitive and widely used clustering algorithms. It works by partitioning data into a pre-specified number (k) of clusters, making it excellent for tasks like customer segmentation where you want to define a specific number of groups.
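K-Means is short enough to sketch directly. The NumPy version below is a minimal illustration of the standard Lloyd's iteration: assign every point to its nearest centroid, move each centroid to the mean of its assigned points, and repeat until the centroids stop moving. It uses k-means++ seeding to spread the starting centroids out and keeps the best of several restarts (lowest within-cluster sum of squares), similar in spirit to scikit-learn's `n_init` option. The function names and the three-blob dataset are hypothetical; a real project would more likely use a library implementation such as scikit-learn's `KMeans`.

```python
import numpy as np

def nearest_centroid(points, centroids):
    """Index of the closest centroid for every point (Euclidean distance)."""
    distances = np.linalg.norm(points[:, None, :] - centroids[None, :, :], axis=2)
    return distances.argmin(axis=1)

def init_plus_plus(points, k, rng):
    """k-means++ seeding: favor points far from the centroids chosen so far."""
    centroids = [points[rng.integers(len(points))]]
    for _ in range(1, k):
        sq_dist = np.min([((points - c) ** 2).sum(axis=1) for c in centroids], axis=0)
        centroids.append(points[rng.choice(len(points), p=sq_dist / sq_dist.sum())])
    return np.array(centroids)

def kmeans(points, k, n_init=5, n_iters=100, seed=0):
    """Minimal K-Means (Lloyd's algorithm); returns (centroids, labels)."""
    rng = np.random.default_rng(seed)
    best = None
    for _run in range(n_init):
        centroids = init_plus_plus(points, k, rng)
        for _step in range(n_iters):
            labels = nearest_centroid(points, centroids)       # assignment step
            new_centroids = np.array([                          # update step
                points[labels == j].mean(axis=0) if np.any(labels == j) else centroids[j]
                for j in range(k)
            ])
            if np.allclose(new_centroids, centroids):
                break                                           # centroids stopped moving
            centroids = new_centroids
        labels = nearest_centroid(points, centroids)
        inertia = ((points - centroids[labels]) ** 2).sum()    # within-cluster sum of squares
        if best is None or inertia < best[0]:
            best = (inertia, centroids, labels)
    return best[1], best[2]

# Illustrative data: three tight, well-separated blobs around known centers.
data_rng = np.random.default_rng(42)
true_centers = np.array([[0.0, 0.0], [10.0, 10.0], [0.0, 10.0]])
points = np.vstack([c + data_rng.normal(0, 0.5, size=(50, 2)) for c in true_centers])

centroids, labels = kmeans(points, k=3)
print(np.round(centroids, 1))
```

Because k is an input rather than something the algorithm discovers, teams usually try several values and compare the resulting segments against business judgment.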

Pillar 3: Wielding the Modern AI Toolkit (The "How" of Implementation)

This pillar is about translating theory into practice. It focuses on the libraries, platforms, and methodologies that developers will use to build, train, and deploy models in a production environment.

- The Foundational Library: Scikit-learn. This is the indispensable starting point for any aspiring ML practitioner. It's the "Swiss Army knife" of machine learning, providing clean, robust, and well-documented Python implementations of nearly all the algorithms covered in Pillar 2. A standard workflow in Scikit-learn provides the perfect learning path:

- Load and preprocess data.

- Split the data into training and testing sets.

- Initialize and `fit()` a model on the training data.

- Use the trained model to `predict()` on the test data.

- Evaluate the model's performance using built-in metrics.

- The Deep Learning Powerhouses: TensorFlow & PyTorch. For more complex problems, particularly in computer vision and natural language processing (NLP), developers will need to graduate to a deep learning framework.

- TensorFlow (Google) and PyTorch (originally developed at Meta) are the two industry standards. While their APIs differ, they share the core concept of building and training neural networks through operations on multi-dimensional arrays called tensors. Providing developers with beginner-friendly tutorials for both will allow them to tackle advanced challenges.

- The Production Environment: Cloud AI & MLOps. This is where the upskilling strategy truly shines. Deploying, scaling, and maintaining AI models in production is not an esoteric art; it is a direct extension of modern DevOps principles—a domain where your engineers are already experts.

- Leveraging Cloud Platforms: Instead of building complex infrastructure from scratch, teams can use managed AI services from Azure, AWS, or GCP. These platforms handle the heavy lifting of provisioning compute (including GPUs), model versioning, and creating scalable API endpoints for inference, dramatically reducing the operational burden.

- MLOps is DevOps for AI: A typical MLOps workflow is remarkably familiar to a modern software engineer. It involves packaging a trained model into a Docker container, managing and orchestrating that container with Kubernetes, and automating the entire build-test-deploy lifecycle with a CI/CD platform like Azure DevOps. Your team already has deep expertise in this stack. They aren't starting from scratch; they are simply learning to deploy a new type of software artifact, the ML model, using the robust, scalable processes they've already mastered. This connection demystifies AI deployment and positions it as a natural evolution of their existing skill set.
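The five-step scikit-learn workflow from Pillar 3 maps almost line for line onto code. The sketch below is illustrative only: a synthetic dataset from `make_classification` stands in for real business data, and a scaled `LogisticRegression` pipeline stands in for whatever model a project actually needs. The last lines serialize the fitted model to bytes with Python's standard `pickle` module, the kind of artifact an MLOps pipeline would then package into a container; real pipelines often use joblib instead, and pickled models should only ever be loaded from trusted sources.

```python
import pickle

from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# 1. Load data (a synthetic stand-in for a real dataset).
X, y = make_classification(n_samples=1_000, n_features=10, n_informative=5,
                           n_clusters_per_class=1, random_state=0)

# 2. Split into training and test sets (25% held out for evaluation).
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0, stratify=y)

# 3. Initialize a model and fit() it on the training data.
#    Preprocessing (feature scaling) lives inside the pipeline, so the
#    scaler's statistics are learned from the training split only.
model = make_pipeline(StandardScaler(), LogisticRegression())
model.fit(X_train, y_train)

# 4. predict() on the held-out test data.
y_pred = model.predict(X_test)

# 5. Evaluate with a built-in metric.
accuracy = accuracy_score(y_test, y_pred)
print(f"Test accuracy: {accuracy:.3f}")

# Serialize the fitted model: this byte string is the deployable artifact
# that a CI/CD pipeline could bake into a container image.
artifact = pickle.dumps(model)
restored = pickle.loads(artifact)
assert (restored.predict(X_test) == y_pred).all()  # round-trip sanity check
```

Swapping `LogisticRegression()` for `RandomForestClassifier()` (from `sklearn.ensemble`) leaves every other line unchanged, which is a large part of why scikit-learn works well as a teaching library.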

Putting the Plan into Action: Fostering a Culture of Continuous Learning

A world-class curriculum is only a document. To bring it to life, you must cultivate an engineering culture where learning is not an event, but a continuous, integrated part of the daily workflow. This requires deliberate action and visible commitment from leadership.

- Executive Buy-In and Strategic Alignment: First and foremost, upskilling must be framed as a core business strategy, not a discretionary training perk. It needs a dedicated budget and vocal, visible support from the C-suite. Learning initiatives must be explicitly tied to business goals to ensure they are delivering tangible value and to justify the investment. This isn't about learning for learning's sake; it's about building the capabilities required to win in the market.

- Time as a Resource: The single biggest barrier to any upskilling program is the lack of time. If learning is something engineers are expected to do "on the side," it will fail. Leaders must formally allocate and protect time for it. Practical strategies include:

- Dedicated Learning Time: Institute "Innovation Days," "20% Time," or dedicated learning hours each week where engineers are explicitly encouraged to experiment with new tools and work through the curriculum.

- Project-Based Learning: The most effective learning is applied learning. Integrate small-scale AI features or proof-of-concept projects into the existing product roadmap. Tasking a developer with building a simple recommendation engine or a sentiment analysis tool for customer feedback makes the learning process tangible, immediately relevant, and directly contributory to business value.

- Creating Psychological Safety: Innovation requires experimentation, and experimentation requires failure. Engineers must feel safe to try new things, ask "dumb" questions, and fail without fear of reprisal. This psychological safety is the absolute "bedrock of learning". Leaders can foster this by celebrating learning efforts, not just successful outcomes, and by structuring processes like code reviews as collaborative, educational sessions rather than critical evaluations.

- Knowledge Sharing Mechanisms: To scale learning across the team, you must create systems for knowledge to flow freely. Isolated learning is inefficient. A community of practice accelerates everyone's growth.

- Internal Demos and "Lunch and Learns": Create regular, informal forums where team members can share what they've learned, demo a new tool, or walk through a project they've completed.

- Mentorship and Peer Support: Pair engineers who are further along in their learning journey with those who are just starting. Encourage peer-to-peer code reviews and problem-solving sessions.

- Centralized Knowledge Hubs: Establish an internal wiki or a shared code repository for AI best practices, reusable code snippets, project templates, and links to valuable resources. This prevents knowledge from being siloed and accelerates onboarding for new learners.

Ultimately, building a culture of continuous learning yields a benefit far greater than just AI proficiency. It creates an organization that is inherently more adaptive, resilient, and innovative. An engineer who feels empowered to master a new AI library today is the same engineer who will be ready to tackle the next major technological disruption tomorrow. The investment in upskilling, therefore, is an investment in your organization's long-term ability to evolve and thrive.

Your Next Move: Building Your AI-Ready Team

The AI talent gap is not a problem to be solved, but a new market reality to be navigated. While your competitors are locked in an expensive, high-risk bidding war for a scarce pool of external talent, you have a strategic advantage: your existing team. They are proven, loyal, and possess invaluable context about your business, your code, and your customers.

Upskilling is not the easy path, but it is the smart one. It is a deliberate, strategic investment that transforms a cost center into a long-term competitive advantage. It builds not just skills, but loyalty. It fosters not just capability, but a culture of innovation. While others are paying a premium for mercenaries, you can build an army.

Here are your immediate, actionable next steps:

- Assess Your Team: Identify the engineers within your organization who show a strong aptitude for problem-solving and a genuine interest in AI. These will be your champions and the seeds of your new capability.

- Start Small: Do not try to boil the ocean. Select a single, well-defined business problem with a high potential for impact and scope it as a pilot AI project. Success here will build momentum and secure broader buy-in.

- Commit to the Curriculum: Adapt the three-pillar framework to your team's specific needs. Dedicate the time and resources necessary for genuine learning to occur.

Building an AI-ready team is a significant undertaking. At Baytech Consulting, we specialize in crafting custom software solutions and empowering teams with the cutting-edge tech skills needed to succeed. Our expertise in agile deployment and tailored technology can accelerate your journey. If you're ready to turn your engineering talent into an AI powerhouse, let's have a conversation about your roadmap.

Further Reading

- https://evizi.com/insights/artificial-intelligence-ai/the-ai-renaissance-strategic-insights-for-ctos-in-2025-and-beyond/

- https://www.mckinsey.com/capabilities/people-and-organizational-performance/our-insights/the-critical-role-of-strategic-workforce-planning-in-the-age-of-ai

- https://www.mckinsey.com/industries/retail/our-insights/eight-tech-forward-imperatives-for-consumer-ctos-in-2025

About Baytech

At Baytech Consulting, we specialize in guiding businesses through this process, helping you build scalable, efficient, and high-performing software that evolves with your needs. Our MVP-first approach helps our clients minimize upfront costs and maximize ROI. Ready to take the next step in your software development journey? Contact us today to learn how we can help you achieve your goals with a phased development approach.

About the Author

Bryan Reynolds is an accomplished technology executive with more than 25 years of experience leading innovation in the software industry. As the CEO and founder of Baytech Consulting, he has built a reputation for delivering custom software solutions that help businesses streamline operations, enhance customer experiences, and drive growth.

Bryan’s expertise spans custom software development, cloud infrastructure, artificial intelligence, and strategic business consulting, making him a trusted advisor and thought leader across a wide range of industries.